Introduction

This time I cover ReplicaSets, Deployments, and Services.

Some of these features are node-aware, so instead of the minikube environment I build a multi-node Kubernetes cluster on VirtualBox and try them there.

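Related articles

Container Virtualization Technology Study01 / Docker Basics

Container Virtualization Technology Study02 / Docker Registry

Container Virtualization Technology Study03 / Docker Compose

Container Virtualization Technology Study04 / Minikube & Basic kubectl Operations

Container Virtualization Technology Study05 / Pod Operations

Container Virtualization Technology Study06 / ReplicaSet, Deployment, Service

Container Virtualization Technology Study06' / Kubernetes Network Problem Determination

Container Virtualization Technology Study07 / Storage

Container Virtualization Technology Study08 / Statefulset, Ingress

Container Virtualization Technology Study09 / Helm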

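References

Kubernetes setup

Introduction to Building a Kubernetes Cluster, 2019 Edition

Verification Notes on Behavior When a K8s Node Fails

How Kubernetes Behaves When a Failure Occurs in the Cluster

Kubernetes Behavior on Node Failure and Rescheduling After the Node Recovers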

Building a multi-node Kubernetes cluster environment

I build a multi-node Kubernetes environment on Windows10 + VirtualBox.

Various setup tools seem to be available depending on the environment, but for building from scratch on-premises, kubeadm appears to be the usual choice.

https://kubernetes.io/ja/docs/setup/independent/install-kubeadm/

Since I only want a quick environment for study, I use the following project as-is.

https://github.com/takara9/vagrant-kubernetes

It uses Vagrant and Ansible to build a Kubernetes cluster (MasterNode x1, WorkerNode x2). Wonderful.

Setup notes

【H/W】

ThinkPad T480

CPU Intel Core i5-8350U 1.70GHz

Memory 32GB

【OS,S/W】

Windows10

VirtualBox 6.0.8

Vagrant 2.2.4

Git 2.21.0

Clone the project to get the Vagrantfile and related files locally.

c:\y\Vagrant>git clone https://github.com/takara9/vagrant-kubernetes

Cloning into 'vagrant-kubernetes'...

remote: Enumerating objects: 21, done.

remote: Counting objects: 100% (21/21), done.

remote: Compressing objects: 100% (14/14), done.

Receiving objects: 87% (201/230)

Receiving objects: 100% (230/230), 37.66 KiB | 332.00 KiB/s, done.

Resolving deltas: 100% (122/122), done.

Then start it up. That's all there is to it. Easy.

c:\y\Vagrant>cd vagrant-kubernetes

c:\y\Vagrant\vagrant-kubernetes>vagrant up

==> vagrant: A new version of Vagrant is available: 2.2.6 (installed version: 2.2.4)!

==> vagrant: To upgrade visit: https://www.vagrantup.com/downloads.html

Bringing machine 'node1' up with 'virtualbox' provider...

Bringing machine 'node2' up with 'virtualbox' provider...

Bringing machine 'master' up with 'virtualbox' provider...

==> node1: Importing base box 'ubuntu/xenial64'...

==> node1: Matching MAC address for NAT networking...

==> node1: Checking if box 'ubuntu/xenial64' version '20190613.1.0' is up to date...

==> node1: A newer version of the box 'ubuntu/xenial64' for provider 'virtualbox' is

==> node1: available! You currently have version '20190613.1.0'. The latest is version

==> node1: '20191113.0.0'. Run `vagrant box update` to update.

==> node1: Setting the name of the VM: vagrant-kubernetes_node1_1573712027100_58519

==> node1: Clearing any previously set network interfaces...

==> node1: Preparing network interfaces based on configuration...

node1: Adapter 1: nat

node1: Adapter 2: hostonly

==> node1: Forwarding ports...

node1: 22 (guest) => 2222 (host) (adapter 1)

==> node1: Running 'pre-boot' VM customizations...

==> node1: Booting VM...

==> node1: Waiting for machine to boot. This may take a few minutes...

node1: SSH address: 127.0.0.1:2222

node1: SSH username: vagrant

node1: SSH auth method: private key

node1:

node1: Vagrant insecure key detected. Vagrant will automatically replace

node1: this with a newly generated keypair for better security.

node1:

node1: Inserting generated public key within guest...

node1: Removing insecure key from the guest if it's present...

node1: Key inserted! Disconnecting and reconnecting using new SSH key...

==> node1: Machine booted and ready!

==> node1: Checking for guest additions in VM...

node1: The guest additions on this VM do not match the installed version of

node1: VirtualBox! In most cases this is fine, but in rare cases it can

node1: prevent things such as shared folders from working properly. If you see

node1: shared folder errors, please make sure the guest additions within the

node1: virtual machine match the version of VirtualBox you have installed on

node1: your host and reload your VM.

node1:

node1: Guest Additions Version: 5.1.38

node1: VirtualBox Version: 6.0

==> node1: Setting hostname...

==> node1: Configuring and enabling network interfaces...

==> node1: Mounting shared folders...

node1: /vagrant => C:/y/Vagrant/vagrant-kubernetes

==> node1: Running provisioner: ansible_local...

node1: Installing Ansible...

Vagrant has automatically selected the compatibility mode '2.0'

according to the Ansible version installed (2.8.6).

Alternatively, the compatibility mode can be specified in your Vagrantfile:

https://www.vagrantup.com/docs/provisioning/ansible_common.html#compatibility_mode

node1: Running ansible-playbook...

PLAY [all] *********************************************************************

TASK [Gathering Facts] *********************************************************

ok: [node1]

TASK [include vars] ************************************************************

ok: [node1]

TASK [debug] *******************************************************************

ok: [node1] => {

"k8s_minor_ver": "14"

}

TASK [Add Docker GPG key] ******************************************************

changed: [node1]

TASK [Add Docker APT repository] ***********************************************

changed: [node1]

TASK [Install a list of packages] **********************************************

[WARNING]: Could not find aptitude. Using apt-get instead

changed: [node1]

TASK [usermod -aG docker vagrant] **********************************************

changed: [node1]

TASK [Set sysctl] **************************************************************

changed: [node1]

TASK [Add GlusterFS Repository] ************************************************

changed: [node1]

TASK [Install GlusterFS client] ************************************************

changed: [node1]

TASK [add Kubernetes apt-key] **************************************************

changed: [node1]

TASK [add Kubernetes' APT repository] ******************************************

changed: [node1]

TASK [Install kubectl] *********************************************************

changed: [node1]

TASK [Install kubelet] *********************************************************

changed: [node1]

TASK [Install kubeadm] *********************************************************

changed: [node1]

TASK [include vars] ************************************************************

ok: [node1]

TASK [getting hostonly ip address] *********************************************

changed: [node1]

TASK [debug] *******************************************************************

ok: [node1] => {

"ip.stdout_lines[1].split(':')[1].split(' ')[0]": "172.16.20.12"

}

TASK [change 10-kubeadm.conf for v1.11 or later] *******************************

changed: [node1]

TASK [change 10-kubeadm.conf for v1.9 or v1.10] ********************************

skipping: [node1]

TASK [daemon-reload and restart kubelet] ***************************************

changed: [node1]

PLAY RECAP *********************************************************************

node1 : ok=20 changed=15 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

==> node2: Importing base box 'ubuntu/xenial64'...

==> node2: Matching MAC address for NAT networking...

==> node2: Checking if box 'ubuntu/xenial64' version '20190613.1.0' is up to date...

==> node2: Setting the name of the VM: vagrant-kubernetes_node2_1573712312261_68038

==> node2: Fixed port collision for 22 => 2222. Now on port 2200.

==> node2: Clearing any previously set network interfaces...

==> node2: Preparing network interfaces based on configuration...

node2: Adapter 1: nat

node2: Adapter 2: hostonly

==> node2: Forwarding ports...

node2: 22 (guest) => 2200 (host) (adapter 1)

==> node2: Running 'pre-boot' VM customizations...

==> node2: Booting VM...

==> node2: Waiting for machine to boot. This may take a few minutes...

node2: SSH address: 127.0.0.1:2200

node2: SSH username: vagrant

node2: SSH auth method: private key

node2:

node2: Vagrant insecure key detected. Vagrant will automatically replace

node2: this with a newly generated keypair for better security.

node2:

node2: Inserting generated public key within guest...

node2: Removing insecure key from the guest if it's present...

node2: Key inserted! Disconnecting and reconnecting using new SSH key...

==> node2: Machine booted and ready!

==> node2: Checking for guest additions in VM...

node2: The guest additions on this VM do not match the installed version of

node2: VirtualBox! In most cases this is fine, but in rare cases it can

node2: prevent things such as shared folders from working properly. If you see

node2: shared folder errors, please make sure the guest additions within the

node2: virtual machine match the version of VirtualBox you have installed on

node2: your host and reload your VM.

node2:

node2: Guest Additions Version: 5.1.38

node2: VirtualBox Version: 6.0

==> node2: Setting hostname...

==> node2: Configuring and enabling network interfaces...

==> node2: Mounting shared folders...

node2: /vagrant => C:/y/Vagrant/vagrant-kubernetes

==> node2: Running provisioner: ansible_local...

node2: Installing Ansible...

Vagrant has automatically selected the compatibility mode '2.0'

according to the Ansible version installed (2.8.6).

Alternatively, the compatibility mode can be specified in your Vagrantfile:

https://www.vagrantup.com/docs/provisioning/ansible_common.html#compatibility_mode

node2: Running ansible-playbook...

PLAY [all] *********************************************************************

TASK [Gathering Facts] *********************************************************

ok: [node2]

TASK [include vars] ************************************************************

ok: [node2]

TASK [debug] *******************************************************************

ok: [node2] => {

"k8s_minor_ver": "14"

}

TASK [Add Docker GPG key] ******************************************************

changed: [node2]

TASK [Add Docker APT repository] ***********************************************

changed: [node2]

TASK [Install a list of packages] **********************************************

[WARNING]: Could not find aptitude. Using apt-get instead

changed: [node2]

TASK [usermod -aG docker vagrant] **********************************************

changed: [node2]

TASK [Set sysctl] **************************************************************

changed: [node2]

TASK [Add GlusterFS Repository] ************************************************

changed: [node2]

TASK [Install GlusterFS client] ************************************************

changed: [node2]

TASK [add Kubernetes apt-key] **************************************************

changed: [node2]

TASK [add Kubernetes' APT repository] ******************************************

changed: [node2]

TASK [Install kubectl] *********************************************************

changed: [node2]

TASK [Install kubelet] *********************************************************

changed: [node2]

TASK [Install kubeadm] *********************************************************

changed: [node2]

TASK [include vars] ************************************************************

ok: [node2]

TASK [getting hostonly ip address] *********************************************

changed: [node2]

TASK [debug] *******************************************************************

ok: [node2] => {

"ip.stdout_lines[1].split(':')[1].split(' ')[0]": "172.16.20.13"

}

TASK [change 10-kubeadm.conf for v1.11 or later] *******************************

changed: [node2]

TASK [change 10-kubeadm.conf for v1.9 or v1.10] ********************************

skipping: [node2]

TASK [daemon-reload and restart kubelet] ***************************************

changed: [node2]

PLAY RECAP *********************************************************************

node2 : ok=20 changed=15 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

==> master: Importing base box 'ubuntu/xenial64'...

==> master: Matching MAC address for NAT networking...

==> master: Checking if box 'ubuntu/xenial64' version '20190613.1.0' is up to date...

==> master: Setting the name of the VM: vagrant-kubernetes_master_1573712682152_54641

==> master: Fixed port collision for 22 => 2222. Now on port 2201.

==> master: Clearing any previously set network interfaces...

==> master: Preparing network interfaces based on configuration...

master: Adapter 1: nat

master: Adapter 2: hostonly

==> master: Forwarding ports...

master: 22 (guest) => 2201 (host) (adapter 1)

==> master: Running 'pre-boot' VM customizations...

==> master: Booting VM...

==> master: Waiting for machine to boot. This may take a few minutes...

master: SSH address: 127.0.0.1:2201

master: SSH username: vagrant

master: SSH auth method: private key

master:

master: Vagrant insecure key detected. Vagrant will automatically replace

master: this with a newly generated keypair for better security.

master:

master: Inserting generated public key within guest...

master: Removing insecure key from the guest if it's present...

master: Key inserted! Disconnecting and reconnecting using new SSH key...

==> master: Machine booted and ready!

==> master: Checking for guest additions in VM...

master: The guest additions on this VM do not match the installed version of

master: VirtualBox! In most cases this is fine, but in rare cases it can

master: prevent things such as shared folders from working properly. If you see

master: shared folder errors, please make sure the guest additions within the

master: virtual machine match the version of VirtualBox you have installed on

master: your host and reload your VM.

master:

master: Guest Additions Version: 5.1.38

master: VirtualBox Version: 6.0

==> master: Setting hostname...

==> master: Configuring and enabling network interfaces...

==> master: Mounting shared folders...

master: /vagrant => C:/y/Vagrant/vagrant-kubernetes

==> master: Running provisioner: ansible_local...

master: Installing Ansible...

Vagrant has automatically selected the compatibility mode '2.0'

according to the Ansible version installed (2.8.6).

Alternatively, the compatibility mode can be specified in your Vagrantfile:

https://www.vagrantup.com/docs/provisioning/ansible_common.html#compatibility_mode

master: Running ansible-playbook...

PLAY [all] *********************************************************************

TASK [Gathering Facts] *********************************************************

ok: [master]

TASK [include vars] ************************************************************

ok: [master]

TASK [debug] *******************************************************************

ok: [master] => {

"k8s_minor_ver": "14"

}

TASK [Add Docker GPG key] ******************************************************

changed: [master]

TASK [Add Docker APT repository] ***********************************************

changed: [master]

TASK [Install a list of packages] **********************************************

[WARNING]: Could not find aptitude. Using apt-get instead

changed: [master]

TASK [usermod -aG docker vagrant] **********************************************

changed: [master]

TASK [Set sysctl] **************************************************************

changed: [master]

TASK [Add GlusterFS Repository] ************************************************

changed: [master]

TASK [Install GlusterFS client] ************************************************

changed: [master]

TASK [add Kubernetes apt-key] **************************************************

changed: [master]

TASK [add Kubernetes' APT repository] ******************************************

changed: [master]

TASK [Install kubectl] *********************************************************

changed: [master]

TASK [Install kubelet] *********************************************************

changed: [master]

TASK [Install kubeadm] *********************************************************

changed: [master]

TASK [include vars] ************************************************************

ok: [master]

TASK [getting hostonly ip address] *********************************************

changed: [master]

TASK [debug] *******************************************************************

ok: [master] => {

"ip.stdout_lines[1].split(':')[1].split(' ')[0]": "172.16.20.11"

}

TASK [change 10-kubeadm.conf for v1.11 or later] *******************************

changed: [master]

TASK [change 10-kubeadm.conf for v1.9 or v1.10] ********************************

skipping: [master]

TASK [daemon-reload and restart kubelet] ***************************************

changed: [master]

PLAY RECAP *********************************************************************

master : ok=20 changed=15 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

==> master: Running provisioner: ansible_local...

Vagrant has automatically selected the compatibility mode '2.0'

according to the Ansible version installed (2.8.6).

Alternatively, the compatibility mode can be specified in your Vagrantfile:

https://www.vagrantup.com/docs/provisioning/ansible_common.html#compatibility_mode

master: Running ansible-playbook...

PLAY [master] ******************************************************************

TASK [Gathering Facts] *********************************************************

ok: [master]

TASK [include k8s vartsion] ****************************************************

ok: [master]

TASK [kubeadm reset v1.11 or later] ********************************************

changed: [master]

TASK [kubeadm reset v1.10 or eary] *********************************************

skipping: [master]

TASK [getting hostonly ip address] *********************************************

changed: [master]

TASK [kubeadm init] ************************************************************

changed: [master]

TASK [file] ********************************************************************

ok: [master]

TASK [kubeadm < 1.13] **********************************************************

skipping: [master]

TASK [kubeadm >= 1.14] *********************************************************

changed: [master]

TASK [change config.yaml] ******************************************************

ok: [master]

TASK [daemon-reload and restart kubelet] ***************************************

changed: [master]

TASK [mkdir kubeconfig] ********************************************************

changed: [master]

TASK [mkdir /vagrant/kubeconfig] ***********************************************

changed: [master]

TASK [chmod admin.conf] ********************************************************

changed: [master]

TASK [copy config to home dir] *************************************************

changed: [master]

TASK [copy config to host dir] *************************************************

changed: [master]

TASK [download Manifest of Flannel for k8s ver <=11] ***************************

skipping: [master]

TASK [download Manifest of Flannel for k8s ver > 11] ***************************

changed: [master]

TASK [add a new line] **********************************************************

changed: [master]

TASK [Deploy Flannel] **********************************************************

changed: [master]

PLAY RECAP *********************************************************************

master : ok=17 changed=13 unreachable=0 failed=0 skipped=3 rescued=0 ignored=0

==> master: Running provisioner: ansible_local...

Vagrant has automatically selected the compatibility mode '2.0'

according to the Ansible version installed (2.8.6).

Alternatively, the compatibility mode can be specified in your Vagrantfile:

https://www.vagrantup.com/docs/provisioning/ansible_common.html#compatibility_mode

master: Running ansible-playbook...

PLAY [nodes] *******************************************************************

TASK [Gathering Facts] *********************************************************

ok: [node2]

ok: [node1]

TASK [include k8s version] *****************************************************

ok: [node1]

ok: [node2]

TASK [include kubeadm join token etc] ******************************************

ok: [node1]

ok: [node2]

TASK [kubeadm reset v1.11 or later] ********************************************

changed: [node2]

changed: [node1]

TASK [kubeadm reset v1.10 or eary] *********************************************

skipping: [node1]

skipping: [node2]

TASK [kubeadm join] ************************************************************

changed: [node2]

changed: [node1]

TASK [change config.yaml] ******************************************************

ok: [node2]

ok: [node1]

TASK [daemon-reload and restart kubelet] ***************************************

changed: [node1]

changed: [node2]

PLAY RECAP *********************************************************************

node1 : ok=7 changed=3 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

node2 : ok=7 changed=3 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

c:\y\Vagrant\vagrant-kubernetes>vagrant status

Current machine states:

node1 running (virtualbox)

node2 running (virtualbox)

master running (virtualbox)

This environment represents multiple VMs. The VMs are all listed

above with their current state. For more information about a specific

VM, run `vagrant status NAME`.

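It completed in a little under 20 minutes.

Judging from the log, it does appear to use kubeadm to configure the cluster.

Verification

Connect to the master node and check the state of the cluster.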

c:\y\Vagrant\vagrant-kubernetes>vagrant ssh master

Welcome to Ubuntu 16.04.6 LTS (GNU/Linux 4.4.0-150-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

102 packages can be updated.

43 updates are security updates.

New release '18.04.3 LTS' available.

Run 'do-release-upgrade' to upgrade to it.

Version

vagrant@master:~$ kubectl version

Client Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.3", GitCommit:"5e53fd6bc17c0dec8434817e69b04a25d8ae0ff0", GitTreeState:"clean", BuildDate:"2019-06-06T01:44:30Z", GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.9", GitCommit:"500f5aba80d71253cc01ac6a8622b8377f4a7ef9", GitTreeState:"clean", BuildDate:"2019-11-13T11:13:04Z", GoVersion:"go1.12.12", Compiler:"gc", Platform:"linux/amd64"}

cluster-info

vagrant@master:~$ kubectl cluster-info

Kubernetes master is running at https://172.16.20.11:6443

KubeDNS is running at https://172.16.20.11:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

node

vagrant@master:~$ kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master Ready master 66m v1.14.3 172.16.20.11 <none> Ubuntu 16.04.6 LTS 4.4.0-150-generic docker://18.6.1

node1 Ready <none> 65m v1.14.3 172.16.20.12 <none> Ubuntu 16.04.6 LTS 4.4.0-150-generic docker://18.6.1

node2 Ready <none> 66m v1.14.3 172.16.20.13 <none> Ubuntu 16.04.6 LTS 4.4.0-150-generic docker://18.6.1
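As a small aid for this check, the sketch below scans a saved copy of the `kubectl get node` output and lists nodes whose STATUS is not exactly `Ready`. The constant `NODE_TABLE` and the helper `not_ready_nodes` are hypothetical names introduced for illustration; only the first two columns are used, and any combined status (anything other than plain `Ready`) is reported as not ready.

```python
# Output of `kubectl get node` saved as text (trimmed to a few columns;
# only NAME and STATUS are read by the helper below).
NODE_TABLE = """\
NAME     STATUS   ROLES    AGE   VERSION
master   Ready    master   66m   v1.14.3
node1    Ready    <none>   65m   v1.14.3
node2    Ready    <none>   66m   v1.14.3
"""


def not_ready_nodes(table):
    """Return the names of nodes whose STATUS column is not exactly 'Ready'."""
    # Skip the header row, then split each row on whitespace.
    rows = [line.split() for line in table.strip().splitlines()[1:]]
    return [name for name, status, *_ in rows if status != "Ready"]


print(not_ready_nodes(NODE_TABLE))
```

An empty list means every node in the saved table reported `Ready`.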

namespace

vagrant@master:~$ kubectl get namespace

NAME STATUS AGE

default Active 4d18h

kube-node-lease Active 4d18h

kube-public Active 4d18h

kube-system Active 4d18h

操作例

レプリカセットの操作

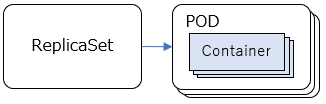

レプリカセットはPodの複製を管理するためのリソースです。

レプリカセットとPodの関連は以下のイメージです。

Podの数を動的に制御することが可能になります(スケール機能)。

Creation

Create a manifest file by slightly customizing the following.

https://github.com/takara9/codes_for_lessons/blob/master/step08/deployment1.yml

I only changed kind: Deployment in the definition above to ReplicaSet.

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: web-deploy
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: nginx
        image: nginx:1.16

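Everything under template can use the same content as a Pod definition.

Note: About labels

Reference: Labels and Selectors

ReplicaSets and Pods appear to be tied together by labels. In the template that defines the Pod, labels are assigned under the labels field. In the spec of the ReplicaSet (or the Deployment covered later), selector specifies which Pods are managed; in the example above, matchLabels states that Pods carrying that label are the ones to manage.

As the link above describes, a label is a key/value pair. Both key and value can be chosen freely within the allowed syntax, but by convention keys such as "app" for the kind of application and "tier" for the layer seem to be common.

The example above has only the single label app: web, but multiple labels can be specified as well.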
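The matching rule behind matchLabels can be pictured with a minimal sketch: a Pod is selected when every key/value pair in the selector also appears in the Pod's labels. The Pod names and the `matches` helper below are hypothetical and only illustrate the idea; this is not how Kubernetes itself is implemented.

```python
def matches(selector, labels):
    """Return True when every key/value pair in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Hypothetical Pods and their labels (names are illustrative only).
pods = {
    "web-a": {"app": "web"},
    "web-b": {"app": "web", "tier": "frontend"},
    "db-a": {"app": "db", "tier": "backend"},
}

selector = {"app": "web"}  # same shape as matchLabels in the manifest above
selected = sorted(name for name, labels in pods.items() if matches(selector, labels))
print(selected)  # ['web-a', 'web-b']
```

Extra labels on a Pod do not prevent a match, while adding more pairs to the selector narrows the set of selected Pods.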

Create

vagrant@master:~/mytest01_replicaset$ kubectl apply -f replicaset.yml

replicaset.apps/web-deploy created

Verify

vagrant@master:~/mytest01_replicaset$ kubectl get all -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/web-deploy-bpqnq 1/1 Running 0 65s 10.244.2.2 node1 <none> <none>

pod/web-deploy-s2z2q 1/1 Running 0 65s 10.244.2.3 node1 <none> <none>

pod/web-deploy-vdqcp 1/1 Running 0 65s 10.244.1.2 node2 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.32.0.1 <none> 443/TCP 4d19h <none>

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/web-deploy 3 3 3 65s nginx nginx:1.16 app=web

You can see that a ReplicaSet resource was created and that three Pods were created from it. The ReplicaSet and the Pods form a hierarchy, which is also visible in their names.

ReplicaSet

replicaset.apps/web-deploy

Pod

pod/web-deploy-bpqnq

pod/web-deploy-s2z2q

pod/web-deploy-vdqcp

The three Pods are spread across the cluster as expected: two on node1 and one on node2.

Note: The scale feature works the same way with Deployments, covered next, so I test it there and delete the definition created here.

vagrant@master:~/mytest01_replicaset$ kubectl delete -f replicaset.yml

replicaset.apps "web-deploy" deleted

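Working with Deployments

Above the ReplicaSet there is a higher-level resource called a Deployment.

A Deployment provides a mechanism for managing the release of new container versions (the rollout feature).

In production, ReplicaSets are apparently rarely used directly; operations are mostly done at the Deployment level.

Creation

I create a Deployment using the following file as-is.

https://github.com/takara9/codes_for_lessons/blob/master/step08/deployment1.yml

It differs from the ReplicaSet definition tried above only in the kind: Deployment part.

Create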

vagrant@master:~/codes_for_lessons/step08$ kubectl apply -f deployment1.yml

deployment.apps/web-deploy created

Verify

vagrant@master:~/codes_for_lessons/step08$ kubectl get all -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/web-deploy-7c74bc9bb8-49lv9 1/1 Running 0 23s 10.244.1.3 node2 <none> <none>

pod/web-deploy-7c74bc9bb8-5czxf 1/1 Running 0 23s 10.244.2.4 node1 <none> <none>

pod/web-deploy-7c74bc9bb8-sh22g 1/1 Running 0 23s 10.244.1.4 node2 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.32.0.1 <none> 443/TCP 4d19h <none>

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/web-deploy 3/3 3 3 23s nginx nginx:1.16 app=web

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/web-deploy-7c74bc9bb8 3 3 3 23s nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

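A Deployment is used together with ReplicaSet and Pod resources. You can see that creating the Deployment also created a ReplicaSet to control the number of replicas, along with the Pods it manages.

The names given to each resource show the hierarchy.

Deployment

deployment.apps/web-deploy

ReplicaSet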

replicaset.apps/web-deploy-7c74bc9bb8

Pod

pod/web-deploy-7c74bc9bb8-49lv9

pod/web-deploy-7c74bc9bb8-5czxf

pod/web-deploy-7c74bc9bb8-sh22g
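The naming pattern seen here can be taken apart with a short sketch. It assumes only the `<deployment>-<pod-template-hash>-<suffix>` pattern visible in the output above; the helper `owners_from_pod_name` is a hypothetical name. The authoritative owner of an object is recorded in its metadata.ownerReferences, so this is purely a reading aid for the names.

```python
def owners_from_pod_name(pod_name):
    """Split '<deployment>-<pod-template-hash>-<suffix>' into its owner names."""
    # Drop the random Pod suffix to get the ReplicaSet name.
    replicaset_name, _suffix = pod_name.rsplit("-", 1)
    # Drop the pod-template-hash to get the Deployment name.
    deployment_name, _hash = replicaset_name.rsplit("-", 1)
    return deployment_name, replicaset_name


print(owners_from_pod_name("web-deploy-7c74bc9bb8-49lv9"))
# ('web-deploy', 'web-deploy-7c74bc9bb8')
```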

Scale feature

Change replicas in the manifest file from 3 to 10.

The modified yml is below.

https://github.com/takara9/codes_for_lessons/blob/master/step08/deployment2.yml

vagrant@master:~/codes_for_lessons/step08$ kubectl apply -f deployment2.yml

deployment.apps/web-deploy configured

vagrant@master:~/codes_for_lessons/step08$ kubectl get all -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/web-deploy-7c74bc9bb8-49lv9 1/1 Running 0 7m10s 10.244.1.3 node2 <none> <none>

pod/web-deploy-7c74bc9bb8-5czxf 1/1 Running 0 7m10s 10.244.2.4 node1 <none> <none>

pod/web-deploy-7c74bc9bb8-b2kmg 1/1 Running 0 22s 10.244.2.6 node1 <none> <none>

pod/web-deploy-7c74bc9bb8-c7r4f 1/1 Running 0 22s 10.244.2.8 node1 <none> <none>

pod/web-deploy-7c74bc9bb8-l2hfq 1/1 Running 0 22s 10.244.2.7 node1 <none> <none>

pod/web-deploy-7c74bc9bb8-nfbh8 1/1 Running 0 22s 10.244.1.6 node2 <none> <none>

pod/web-deploy-7c74bc9bb8-sh22g 1/1 Running 0 7m10s 10.244.1.4 node2 <none> <none>

pod/web-deploy-7c74bc9bb8-sjflb 1/1 Running 0 22s 10.244.1.7 node2 <none> <none>

pod/web-deploy-7c74bc9bb8-v74q2 1/1 Running 0 22s 10.244.1.5 node2 <none> <none>

pod/web-deploy-7c74bc9bb8-w64wq 1/1 Running 0 22s 10.244.2.5 node1 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.32.0.1 <none> 443/TCP 4d20h <none>

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/web-deploy 10/10 10 10 7m10s nginx nginx:1.16 app=web

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/web-deploy-7c74bc9bb8 10 10 10 7m10s nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

The number of Pods went from 3 to 10!

Next, I change the replica count to 5 with a command.

vagrant@master:~/codes_for_lessons/step08$ kubectl scale --replicas=5 deployment.apps/web-deploy

deployment.apps/web-deploy scaled

vagrant@master:~/codes_for_lessons/step08$ kubectl get all -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/web-deploy-7c74bc9bb8-49lv9 1/1 Running 0 9m19s 10.244.1.3 node2 <none> <none>

pod/web-deploy-7c74bc9bb8-5czxf 1/1 Running 0 9m19s 10.244.2.4 node1 <none> <none>

pod/web-deploy-7c74bc9bb8-sh22g 1/1 Running 0 9m19s 10.244.1.4 node2 <none> <none>

pod/web-deploy-7c74bc9bb8-v74q2 1/1 Running 0 2m31s 10.244.1.5 node2 <none> <none>

pod/web-deploy-7c74bc9bb8-w64wq 1/1 Running 0 2m31s 10.244.2.5 node1 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.32.0.1 <none> 443/TCP 4d20h <none>

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/web-deploy 5/5 5 5 9m19s nginx nginx:1.16 app=web

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/web-deploy-7c74bc9bb8 5 5 5 9m19s nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

The number of Pods went from 10 to 5.
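Conceptually, scaling compares the desired replica count with the current one and creates or deletes the difference. The sketch below shows only that counting step for the changes tried above (3 to 10, then 10 to 5); `reconcile` is a hypothetical helper, and which Pods the real controller chooses to delete is not modeled.

```python
def reconcile(desired, current):
    """Describe the action needed to move from the current Pod count to the desired one."""
    diff = desired - current
    if diff > 0:
        return f"create {diff} pod(s)"
    if diff < 0:
        return f"delete {-diff} pod(s)"
    return "no change"


for current, desired in [(3, 10), (10, 5), (5, 5)]:
    print(f"{current} -> {desired}: {reconcile(desired, current)}")
```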

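Rollout feature

This feature switches over to new Pods in sequence while the service keeps running, for example when changing the version of the image that runs in the Pods.

I set the Pod count back to 10 and look at the Deployment details.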

vagrant@master:~/codes_for_lessons/step08$ kubectl describe deployment web-deploy

Name: web-deploy

Namespace: default

CreationTimestamp: Tue, 19 Nov 2019 02:28:26 +0000

Labels: <none>

Annotations: deployment.kubernetes.io/revision: 1

kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"name":"web-deploy","namespace":"default"},"spec":{"replicas":10,...

Selector: app=web

Replicas: 10 desired | 10 updated | 10 total | 10 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=web

Containers:

nginx:

Image: nginx:1.16

Port: <none>

Host Port: <none>

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

Type Status Reason

---- ------ ------

Progressing True NewReplicaSetAvailable

Available True MinimumReplicasAvailable

OldReplicaSets: <none>

NewReplicaSet: web-deploy-7c74bc9bb8 (10/10 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 10m deployment-controller Scaled up replica set web-deploy-7c74bc9bb8 to 3

Normal ScalingReplicaSet 83s deployment-controller Scaled down replica set web-deploy-7c74bc9bb8 to 5

Normal ScalingReplicaSet 16s (x2 over 3m36s) deployment-controller Scaled up replica set web-deploy-7c74bc9bb8 to 10

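`RollingUpdateStrategy: 25% max unavailable, 25% max surge`: This setting means that, while Pods are being replaced in sequence, up to 25% of the Pods may be unavailable and the running count may exceed the desired count by up to 25%.

With 10 replicas:

Max unavailable: 10 x 0.25 = 2.5 => rounded down, so up to 2 Pods may be stopped

Max surge: 10 x 0.25 = 2.5 => rounded up, so up to 3 extra Pods may run

In other words, it automatically replaces Pods with new ones in sequence, keeping at least 10 - 2 = 8 Pods available so the service continues, while running up to 10 + 3 = 13 Pods in parallel.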
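The arithmetic above can be reproduced with a minimal sketch. It assumes percentage-based settings only, rounds max unavailable down and max surge up as described, and ignores any edge cases; `rolling_update_bounds` is a hypothetical helper name.

```python
def rolling_update_bounds(replicas, max_unavailable_pct, max_surge_pct):
    """Return (min_available, max_total) for percentage-based rolling update settings."""
    # Integer arithmetic avoids floating-point rounding surprises.
    max_unavailable = replicas * max_unavailable_pct // 100   # rounded down
    max_surge = (replicas * max_surge_pct + 99) // 100        # rounded up
    return replicas - max_unavailable, replicas + max_surge


print(rolling_update_bounds(10, 25, 25))  # (8, 13)
```

With 10 replicas and 25% for both settings, this gives at least 8 available Pods and at most 13 Pods in total, matching the figures above.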

Reference: Deployment V1 apps

Prepare a manifest file in which the nginx image is changed from 1.16 to 1.17. (The following file is used as-is.)

https://github.com/takara9/codes_for_lessons/blob/master/step08/deployment3.yml

Applying this manifest file changes the configuration. To follow the progress, run kubectl get replicaset -o wide -w in another shell beforehand. The -w option watches for changes and prints each one as it happens.

Run the rollout

vagrant@master:~/codes_for_lessons/step08$ kubectl apply -f deployment3.yml

deployment.apps/web-deploy configured

The replacement progresses as follows.

vagrant@master:~$ kubectl get replicaset -o wide -w

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

web-deploy-7c74bc9bb8 10 10 10 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 3 0 0 0s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 8 10 10 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 5 0 0 0s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 8 10 10 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 5 0 0 0s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 8 8 8 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 5 3 0 0s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 5 5 0 0s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 5 5 1 9s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 7 8 8 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 7 8 8 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 6 5 1 9s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 7 7 7 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 6 5 1 9s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 6 6 1 9s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 6 6 2 9s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 6 7 7 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 7 6 2 9s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 6 7 7 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 7 6 2 9s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 7 7 2 9s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 6 6 6 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 7 7 3 10s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 5 6 6 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 8 7 3 10s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 5 6 6 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 8 7 3 10s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 8 8 3 10s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 5 5 5 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 8 8 4 10s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 4 5 5 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 4 5 5 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 9 8 4 10s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 4 4 4 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 9 8 4 10s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 9 9 4 10s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 9 9 5 11s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 3 4 4 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 3 4 4 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 10 9 5 11s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 3 3 3 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 10 9 5 11s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 10 10 5 11s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 10 10 6 12s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 2 3 3 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 2 3 3 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 2 2 2 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 10 10 7 12s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 1 2 2 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 1 2 2 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 1 1 1 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 10 10 8 13s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 0 1 1 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 0 1 1 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 0 0 0 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 10 10 9 14s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 10 10 10 15s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

For example, right after the command is run, the new replica set (web-deploy-6d6465dbbf) is created with DESIRED: 3, and the existing replica set (web-deploy-7c74bc9bb8) is changed to DESIRED: 8.

web-deploy-6d6465dbbf 3 0 0 0s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 8 10 10 12m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

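In this way, the Pods are replaced step by step with the new version while at least 8 Pods stay available and at most 13 Pods run in parallel.

In the end, the old replica set went down to 0 Pods and the new replica set reached 10 Pods, which completed the rollout.

The rollout history can also be checked.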

vagrant@master:~$ kubectl rollout history deployment.apps/web-deploy

deployment.apps/web-deploy

REVISION CHANGE-CAUSE

1 <none>

2 <none>

The CHANGE-CAUSE text can apparently be set in the manifest file or with the --record flag of kubectl, but nothing was specified this time, so it shows <none>.

Specifying a REVISION number shows its details.

vagrant@master:~$ kubectl rollout history deployment.apps/web-deploy --revision=1

deployment.apps/web-deploy with revision #1

Pod Template:

Labels: app=web

pod-template-hash=7c74bc9bb8

Containers:

nginx:

Image: nginx:1.16

Port: <none>

Host Port: <none>

Environment: <none>

Mounts: <none>

Volumes: <none>

vagrant@master:~$ kubectl rollout history deployment.apps/web-deploy --revision=2

deployment.apps/web-deploy with revision #2

Pod Template:

Labels: app=web

pod-template-hash=6d6465dbbf

Containers:

nginx:

Image: nginx:1.17

Port: <none>

Host Port: <none>

Environment: <none>

Mounts: <none>

Volumes: <none>

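Rollback

A rollback reverts a change that was rolled out.

Revert to the state before the rollout (the previous revision). (Apparently --to-revision= can also be used to go back to a specific revision.)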

vagrant@master:~/codes_for_lessons/step08$ kubectl rollout undo deployment.apps/web-deploy

deployment.apps/web-deploy rolled back

Watching the changes in the same way as before:

vagrant@master:~$ kubectl get replicaset -o wide -w

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

web-deploy-6d6465dbbf 10 10 10 4m29s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 0 0 0 16m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 0 0 0 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 3 0 0 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 8 10 10 5m11s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 3 0 0 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 3 3 0 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 8 10 10 5m11s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 5 3 0 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 8 8 8 5m11s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 5 3 0 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 5 5 0 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 5 5 1 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 7 8 8 5m12s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 6 5 1 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 7 8 8 5m12s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 7 7 7 5m12s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 6 5 1 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 6 6 1 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 6 6 2 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 6 7 7 5m13s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 7 6 2 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 6 7 7 5m13s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 7 6 3 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 6 6 6 5m13s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 7 6 3 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 7 7 3 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 5 6 6 5m13s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 8 7 3 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 5 6 6 5m13s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 8 7 3 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 5 5 5 5m13s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 8 8 3 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 8 8 4 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 4 5 5 5m13s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 9 8 4 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 4 5 5 5m13s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 4 4 4 5m13s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 9 8 4 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 9 9 4 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 9 9 5 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 3 4 4 5m13s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 10 9 5 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 3 4 4 5m13s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 10 9 5 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-7c74bc9bb8 10 10 5 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 3 3 3 5m13s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 10 10 6 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 2 3 3 5m14s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 2 3 3 5m14s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 2 2 2 5m14s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 10 10 7 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 1 2 2 5m16s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 1 2 2 5m16s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 1 1 1 5m16s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 10 10 8 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 0 1 1 5m17s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 10 10 9 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

web-deploy-6d6465dbbf 0 1 1 5m17s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-6d6465dbbf 0 0 0 5m17s nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

web-deploy-7c74bc9bb8 10 10 10 17m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

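Ten Pods using nginx:1.16 are now running.

Looking at the rollout history, revision 1 is gone and revision 3 has been added. The Pod template of revision 3 is the same as that of the former revision 1 (nginx:1.16, pod-template-hash=7c74bc9bb8).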

vagrant@master:~/codes_for_lessons/step08$ kubectl rollout history deployment.apps/web-deploy

deployment.apps/web-deploy

REVISION CHANGE-CAUSE

2 <none>

3 <none>

vagrant@master:~/codes_for_lessons/step08$ kubectl rollout history deployment.apps/web-deploy --revision=3

deployment.apps/web-deploy with revision #3

Pod Template:

Labels: app=web

pod-template-hash=7c74bc9bb8

Containers:

nginx:

Image: nginx:1.16

Port: <none>

Host Port: <none>

Environment: <none>

Mounts: <none>

Volumes: <none>
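The renumbering seen above (revision 1 disappears, revision 3 appears with the same template) can be mimicked with a toy model. This Python sketch only mirrors the bookkeeping observed in the output; the function name `undo` and the dict-based history are assumptions for illustration and do not reflect how the deployment controller is actually implemented.

```python
def undo(history, to_revision=None):
    """Toy model of the revision bookkeeping seen with `kubectl rollout undo`.

    `history` maps revision number -> image. The target template is removed
    from its old revision and re-registered under a new, highest revision.
    """
    revisions = sorted(history)
    if to_revision is None:
        to_revision = revisions[-2]  # the revision before the current one
    new_revision = revisions[-1] + 1
    updated = dict(history)  # leave the caller's dict untouched
    updated[new_revision] = updated.pop(to_revision)
    return updated


history = {1: "nginx:1.16", 2: "nginx:1.17"}
print(undo(history))  # {2: 'nginx:1.17', 3: 'nginx:1.16'}
```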

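Self-healing

Container-level failures are handled by the Pod itself, which restarts containers automatically according to its RestartPolicy. For Pod-level failures (such as a node failure), the replica set starts Pods on another node so that the configured number of replicas is maintained.

To compare the behavior, add one standalone Pod definition in addition to the deployment above (using the following manifest file).

https://github.com/takara9/codes_for_lessons/blob/master/step08/pod.yml

Also, change the replica count of the deployment created earlier to 5.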

vagrant@master:~/codes_for_lessons/step08$ kubectl apply -f pod.yml

pod/test1 created

vagrant@master:~/codes_for_lessons/step08$ kubectl scale --replicas=5 deployment.apps/web-deploy

deployment.apps/web-deploy scaled

The current state looks like this.

vagrant@master:~/codes_for_lessons/step08$ kubectl get all -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/test1 1/1 Running 0 66s 10.244.2.22 node1 <none> <none>

pod/web-deploy-7c74bc9bb8-ff75w 1/1 Running 0 62m 10.244.2.19 node1 <none> <none>

pod/web-deploy-7c74bc9bb8-r2lpz 1/1 Running 0 62m 10.244.2.18 node1 <none> <none>

pod/web-deploy-7c74bc9bb8-s864t 1/1 Running 0 62m 10.244.1.16 node2 <none> <none>

pod/web-deploy-7c74bc9bb8-vgbr6 1/1 Running 0 62m 10.244.2.17 node1 <none> <none>

pod/web-deploy-7c74bc9bb8-xlh2v 1/1 Running 0 62m 10.244.1.15 node2 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.32.0.1 <none> 443/TCP 4d21h <none>

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/web-deploy 5/5 5 5 79m nginx nginx:1.16 app=web

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/web-deploy-6d6465dbbf 0 0 0 67m nginx nginx:1.17 app=web,pod-template-hash=6d6465dbbf

replicaset.apps/web-deploy-7c74bc9bb8 5 5 5 79m nginx nginx:1.16 app=web,pod-template-hash=7c74bc9bb8

The standalone Pod pod/test1 runs on node1. The Pods created from the deployment (pod/web-deploy-xxx) run as 5 replicas, 3 on node1 and 2 on node2.

While watching the Pods in the same way as before, stop node1, where test1 is running.

c:\y\Vagrant\vagrant-kubernetes>vagrant halt node1

==> node1: Attempting graceful shutdown of VM...

Below is the state at the time node1 was stopped.

vagrant@master:~$ kubectl get pod -o wide -w

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test1 1/1 Running 0 6m48s 10.244.2.22 node1 <none> <none>

web-deploy-7c74bc9bb8-ff75w 1/1 Running 0 67m 10.244.2.19 node1 <none> <none>

web-deploy-7c74bc9bb8-r2lpz 1/1 Running 0 67m 10.244.2.18 node1 <none> <none>

web-deploy-7c74bc9bb8-s864t 1/1 Running 0 67m 10.244.1.16 node2 <none> <none>

web-deploy-7c74bc9bb8-vgbr6 1/1 Running 0 67m 10.244.2.17 node1 <none> <none>

web-deploy-7c74bc9bb8-xlh2v 1/1 Running 0 67m 10.244.1.15 node2 <none> <none>

After 5 minutes, the node failure is detected...

web-deploy-7c74bc9bb8-vgbr6 1/1 Terminating 0 71m 10.244.2.17 node1 <none> <none>

web-deploy-7c74bc9bb8-ff75w 1/1 Terminating 0 71m 10.244.2.19 node1 <none> <none>

test1 1/1 Terminating 0 10m 10.244.2.22 node1 <none> <none>

web-deploy-7c74bc9bb8-r2lpz 1/1 Terminating 0 71m 10.244.2.18 node1 <none> <none>

web-deploy-7c74bc9bb8-fsjg4 0/1 Pending 0 0s <none> <none> <none> <none>

web-deploy-7c74bc9bb8-fsjg4 0/1 Pending 0 0s <none> node2 <none> <none>

web-deploy-7c74bc9bb8-chkft 0/1 Pending 0 0s <none> <none> <none> <none>

web-deploy-7c74bc9bb8-chkft 0/1 Pending 0 0s <none> node2 <none> <none>

web-deploy-7c74bc9bb8-c87d9 0/1 Pending 0 0s <none> <none> <none> <none>

web-deploy-7c74bc9bb8-fsjg4 0/1 ContainerCreating 0 0s <none> node2 <none> <none>

web-deploy-7c74bc9bb8-c87d9 0/1 Pending 0 0s <none> node2 <none> <none>

web-deploy-7c74bc9bb8-chkft 0/1 ContainerCreating 0 1s <none> node2 <none> <none>

web-deploy-7c74bc9bb8-c87d9 0/1 ContainerCreating 0 1s <none> node2 <none> <none>

web-deploy-7c74bc9bb8-fsjg4 1/1 Running 0 2s 10.244.1.20 node2 <none> <none>

web-deploy-7c74bc9bb8-c87d9 1/1 Running 0 3s 10.244.1.21 node2 <none> <none>

web-deploy-7c74bc9bb8-chkft 1/1 Running 0 3s 10.244.1.22 node2 <none> <none>

As a result, the state is as follows.

vagrant@master:~$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test1 1/1 Terminating 0 14m 10.244.2.22 node1 <none> <none>

web-deploy-7c74bc9bb8-c87d9 1/1 Running 0 3m46s 10.244.1.21 node2 <none> <none>

web-deploy-7c74bc9bb8-chkft 1/1 Running 0 3m46s 10.244.1.22 node2 <none> <none>

web-deploy-7c74bc9bb8-ff75w 1/1 Terminating 0 75m 10.244.2.19 node1 <none> <none>

web-deploy-7c74bc9bb8-fsjg4 1/1 Running 0 3m46s 10.244.1.20 node2 <none> <none>

web-deploy-7c74bc9bb8-r2lpz 1/1 Terminating 0 75m 10.244.2.18 node1 <none> <none>

web-deploy-7c74bc9bb8-s864t 1/1 Running 0 75m 10.244.1.16 node2 <none> <none>

web-deploy-7c74bc9bb8-vgbr6 1/1 Terminating 0 75m 10.244.2.17 node1 <none> <none>

web-deploy-7c74bc9bb8-xlh2v 1/1 Running 0 75m 10.244.1.15 node2 <none> <none>

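test1 and the three web-deploy-xxx Pods that had been running on node1 went into the Terminating state.

Meanwhile, three new web-deploy-xxx Pods are Running on node2.

In other words, five web-deploy-xxx Pods are now running on node2. (test1 is not recovered.)

Start node1 again.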

c:\y\Vagrant\vagrant-kubernetes>vagrant up node1

Bringing machine 'node1' up with 'virtualbox' provider...

==> node1: Checking if box 'ubuntu/xenial64' version '20190613.1.0' is up to date...

==> node1: A newer version of the box 'ubuntu/xenial64' for provider 'virtualbox' is

==> node1: available! You currently have version '20190613.1.0'. The latest is version

==> node1: '20191114.0.0'. Run `vagrant box update` to update.

==> node1: Clearing any previously set forwarded ports...

==> node1: Clearing any previously set network interfaces...

==> node1: Preparing network interfaces based on configuration...

node1: Adapter 1: nat

node1: Adapter 2: hostonly

==> node1: Forwarding ports...

node1: 22 (guest) => 2222 (host) (adapter 1)

==> node1: Running 'pre-boot' VM customizations...

==> node1: Booting VM...

==> node1: Waiting for machine to boot. This may take a few minutes...

node1: SSH address: 127.0.0.1:2222

node1: SSH username: vagrant

node1: SSH auth method: private key

==> node1: Machine booted and ready!

==> node1: Checking for guest additions in VM...

node1: The guest additions on this VM do not match the installed version of

node1: VirtualBox! In most cases this is fine, but in rare cases it can

node1: prevent things such as shared folders from working properly. If you see

node1: shared folder errors, please make sure the guest additions within the

node1: virtual machine match the version of VirtualBox you have installed on

node1: your host and reload your VM.

node1:

node1: Guest Additions Version: 5.1.38

node1: VirtualBox Version: 6.0

==> node1: Setting hostname...

==> node1: Configuring and enabling network interfaces...

==> node1: Mounting shared folders...

node1: /vagrant => C:/y/Vagrant/vagrant-kubernetes

==> node1: Machine already provisioned. Run `vagrant provision` or use the `--provision`

==> node1: flag to force provisioning. Provisioners marked to run always will still run.

Looking at the Pods after node1 has started...

vagrant@master:~/codes_for_lessons/step08$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-deploy-7c74bc9bb8-c87d9 1/1 Running 0 10m 10.244.1.21 node2 <none> <none>

web-deploy-7c74bc9bb8-chkft 1/1 Running 0 10m 10.244.1.22 node2 <none> <none>

web-deploy-7c74bc9bb8-fsjg4 1/1 Running 0 10m 10.244.1.20 node2 <none> <none>

web-deploy-7c74bc9bb8-s864t 1/1 Running 0 82m 10.244.1.16 node2 <none> <none>

web-deploy-7c74bc9bb8-xlh2v 1/1 Running 0 82m 10.244.1.15 node2 <none> <none>

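The Pods that had been in the Terminating status were cleared.

The test1 Pod has also been deleted (the Pod itself was deleted because of the node1 failure, and since nothing manages it, it is not recovered).

Note: time to detect a failure and move Pods to another node

Five minutes seemed like a long time to detect a node failure, so I looked into what this depends on and found the explanation below. It apparently depends on the behavior of a feature called Taint Based Eviction.

Reference: Kubernetesのノード障害時の挙動とノード復帰後の再スケジューリング

The relevant official documentation is probably around here.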

Taints and Tolerations

Note that Kubernetes automatically adds a toleration for node.kubernetes.io/not-ready with tolerationSeconds=300 unless the pod configuration provided by the user already has a toleration for node.kubernetes.io/not-ready. Likewise it adds a toleration for node.kubernetes.io/unreachable with tolerationSeconds=300 unless the pod configuration provided by the user already has a toleration for node.kubernetes.io/unreachable.

These automatically-added tolerations ensure that the default pod behavior of remaining bound for 5 minutes after one of these problems is detected is maintained. The two default tolerations are added by the DefaultTolerationSeconds admission controller.
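As the quoted note says, the default tolerations are only added when the Pod does not already define its own, so a Pod spec can carry tolerations for these two taints with a shorter tolerationSeconds. The Python sketch below only builds such a manifest fragment and prints it as JSON (kubectl also accepts JSON manifests). The helper name `eviction_tolerations` and the 30-second value are assumptions chosen for illustration; they were not tested in this article.

```python
import json


def eviction_tolerations(seconds):
    """Build tolerations that keep a Pod bound to a failed node for `seconds`."""
    keys = ["node.kubernetes.io/not-ready", "node.kubernetes.io/unreachable"]
    return [
        {
            "key": key,
            "operator": "Exists",
            "effect": "NoExecute",
            "tolerationSeconds": seconds,
        }
        for key in keys
    ]


# Fragment intended for spec.template.spec of a Deployment (30 is an example value).
fragment = {"tolerations": eviction_tolerations(30)}
print(json.dumps(fragment, indent=2))
```

A shorter value moves Pods off a failed node sooner, at the cost of more rescheduling when a node is only briefly unreachable.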

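Also, after node1 recovers, the Pods that were moved to node2 stay there (they keep running on node2), so if node2 fails next, the service would be completely down for about 5 minutes.

I would like the Pods to be redistributed across node1 and node2 after node1 recovers, but that apparently does not happen automatically.

I found the article below, and it looks like rebalancing requires a fairly manual procedure.

Reference: K8s Node障害時の振る舞いについての検証記録 - 復旧後にポッドの配置をリバランスするには?

Hmm, isn't there a smarter way?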

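Working with services

A service is a resource that handles routing to a set of Pods and name resolution for external endpoints.

It is used to assign a representative address to multiple Pods, to expose that address outside the k8s cluster, or to integrate with a load balancer.

Apparently the following four types of service can be defined.

- ClusterIP: The default type. Assigns a representative IP address to a set of Pods inside the cluster (for example, Pods managed by a replica set) and load-balances across them. (Not reachable from outside the cluster.)

- NodePort: In addition to ClusterIP, exposes a port to the outside (reachable via each node's IP address plus the port).

- LoadBalancer: Integrates with a load balancer to make the service reachable from outside. (Not available in a local Kubernetes environment; used in cloud service environments such as IKS or GKE.)

- ExternalName: Provides name resolution when a service running in a Pod uses an external service.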
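To make the difference between the first two types concrete, here is a small Python sketch that builds a Service manifest as a dict and prints it as JSON. The name web-service, the selector app=web, and port 80 match the output shown later in this article, but the actual svc.yml is not reproduced here, so treat the structure as an assumption. The helper name `service_manifest` and the nodePort value 30080 are hypothetical.

```python
import json


def service_manifest(name, selector, port, service_type="ClusterIP", node_port=None):
    """Build a minimal v1 Service manifest as a plain dict."""
    port_spec = {"protocol": "TCP", "port": port, "targetPort": port}
    # nodePort is only meaningful for NodePort (and LoadBalancer) services.
    if service_type == "NodePort" and node_port is not None:
        port_spec["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": service_type, "selector": selector, "ports": [port_spec]},
    }


cluster_ip_svc = service_manifest("web-service", {"app": "web"}, 80)
node_port_svc = service_manifest("web-service", {"app": "web"}, 80, "NodePort", 30080)
print(json.dumps(cluster_ip_svc, indent=2))
print(json.dumps(node_port_svc, indent=2))
```

The two manifests differ only in spec.type and the extra nodePort field, which reflects that NodePort builds on ClusterIP.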

type: ClusterIP (multi-node environment)

Delete the deployment created above, then apply the following manifest files to create a deployment and a service for testing services.

https://github.com/takara9/codes_for_lessons/blob/master/step09/deploy.yml

https://github.com/takara9/codes_for_lessons/blob/master/step09/svc.yml

Create

vagrant@master:~/codes_for_lessons/step09$ kubectl apply -f deploy.yml

deployment.apps/web-deploy created

vagrant@master:~/codes_for_lessons/step09$ kubectl apply -f svc.yml

service/web-service created

Verify

vagrant@master:~$ kubectl get all -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/web-deploy-6b5f67b747-5qr49 1/1 Running 0 40m 10.244.2.3 node2 <none> <none>

pod/web-deploy-6b5f67b747-8cjw4 1/1 Running 0 40m 10.244.1.4 node1 <none> <none>

pod/web-deploy-6b5f67b747-w8qbm 1/1 Running 0 40m 10.244.2.2 node2 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.32.0.1 <none> 443/TCP 61m <none>

service/web-service ClusterIP 10.32.0.79 <none> 80/TCP 13s app=web

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/web-deploy 3/3 3 3 40m nginx nginx:latest app=web

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/web-deploy-6b5f67b747 3 3 3 40m nginx nginx:latest app=web,pod-template-hash=6b5f67b747

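This representative address and port can only be reached from inside the cluster, so create a Pod to use as a jump host for testing and access the service from there.

Start a busybox Pod and run sh.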

vagrant@master:~$ kubectl run -it busybox --restart=Never --rm --image=busybox:latest sh

If you don't see a command prompt, try pressing enter.

/ #

Checking the Pods from another shell at this point shows that this busybox Pod came up on node1.

vagrant@master:~$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

busybox 1/1 Running 0 2m7s 10.244.1.6 node1 <none> <none>

web-deploy-6b5f67b747-5qr49 1/1 Running 0 43m 10.244.2.3 node2 <none> <none>

web-deploy-6b5f67b747-8cjw4 1/1 Running 0 43m 10.244.1.4 node1 <none> <none>

web-deploy-6b5f67b747-w8qbm 1/1 Running 0 43m 10.244.2.2 node2 <none> <none>

Accessing the service from the busybox shell...

Hmm??? Sometimes it works and sometimes it fails.

/ # wget -q -O - http://web-service

wget: can't connect to remote host (10.32.0.79): No route to host

/ # wget -q -O - http://web-service

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Apparently ping gets through to the address of a Pod running on the same node as the busybox Pod (node1), but not to the Pods running on node2...

/ # ping -c 3 10.244.2.2

PING 10.244.2.2 (10.244.2.2): 56 data bytes

--- 10.244.2.2 ping statistics ---

3 packets transmitted, 0 packets received, 100% packet loss

/ # ping -c 3 10.244.2.3

PING 10.244.2.3 (10.244.2.3): 56 data bytes

--- 10.244.2.3 ping statistics ---

3 packets transmitted, 0 packets received, 100% packet loss

/ # ping -c 3 10.244.1.4

PING 10.244.1.4 (10.244.1.4): 56 data bytes

64 bytes from 10.244.1.4: seq=0 ttl=64 time=0.057 ms

64 bytes from 10.244.1.4: seq=1 ttl=64 time=0.048 ms

64 bytes from 10.244.1.4: seq=2 ttl=64 time=0.052 ms

--- 10.244.1.4 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.048/0.052/0.057 ms

Hmm...

For the Pod network that spans nodes, Kubernetes apparently defines the specification, but the implementation is not part of Kubernetes itself. Various implementations are available, and one has to be selected, installed, and configured as needed.

References:

Cluster Networking

DockerとKubernetesのPodのネットワーキングについてまとめました

オンプレミスKubernetesのネットワーク

Looking at the Ansible playbook called from Vagrant, the environment used here sets up a network implementation called flannel.

Reference: https://github.com/takara9/vagrant-kubernetes/blob/1.14/ansible-playbook/k8s_master.yml

Looking at the resources in namespace kube-system, flannel-related resources have indeed been added.

vagrant@master:~/codes_for_lessons/step09$ kubectl -n kube-system get all -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/coredns-584795fc57-2q98h 1/1 Running 0 93m 10.244.1.2 node1 <none> <none>

pod/coredns-584795fc57-5854n 1/1 Running 0 93m 10.244.1.3 node1 <none> <none>

pod/etcd-master 1/1 Running 0 93m 172.16.20.11 master <none> <none>

pod/kube-apiserver-master 1/1 Running 0 93m 172.16.20.11 master <none> <none>

pod/kube-controller-manager-master 1/1 Running 0 93m 172.16.20.11 master <none> <none>

pod/kube-flannel-ds-amd64-9hfp6 1/1 Running 0 93m 172.16.20.13 node2 <none> <none>

pod/kube-flannel-ds-amd64-blbpx 1/1 Running 0 93m 172.16.20.12 node1 <none> <none>

pod/kube-flannel-ds-amd64-s8r6n 1/1 Running 0 93m 172.16.20.11 master <none> <none>

pod/kube-proxy-7fgjh 1/1 Running 0 93m 172.16.20.12 node1 <none> <none>

pod/kube-proxy-bvj2r 1/1 Running 0 93m 172.16.20.11 master <none> <none>

pod/kube-proxy-l44k9 1/1 Running 0 93m 172.16.20.13 node2 <none> <none>

pod/kube-scheduler-master 1/1 Running 0 93m 172.16.20.11 master <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kube-dns ClusterIP 10.32.0.10 <none> 53/UDP,53/TCP,9153/TCP 93m k8s-app=kube-dns

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE CONTAINERS IMAGES SELECTOR

daemonset.apps/kube-flannel-ds-amd64 3 3 3 3 3 beta.kubernetes.io/arch=amd64 93m kube-flannel quay.io/coreos/flannel:v0.10.0-amd64 app=flannel,tier=node

daemonset.apps/kube-flannel-ds-arm 0 0 0 0 0 beta.kubernetes.io/arch=arm 93m kube-flannel quay.io/coreos/flannel:v0.10.0-arm app=flannel,tier=node

daemonset.apps/kube-flannel-ds-arm64 0 0 0 0 0 beta.kubernetes.io/arch=arm64 93m kube-flannel quay.io/coreos/flannel:v0.10.0-arm64 app=flannel,tier=node

daemonset.apps/kube-flannel-ds-ppc64le 0 0 0 0 0 beta.kubernetes.io/arch=ppc64le 93m kube-flannel quay.io/coreos/flannel:v0.10.0-ppc64le app=flannel,tier=node

daemonset.apps/kube-flannel-ds-s390x 0 0 0 0 0 beta.kubernetes.io/arch=s390x 93m kube-flannel quay.io/coreos/flannel:v0.10.0-s390x app=flannel,tier=node

daemonset.apps/kube-proxy 3 3 3 3 3 <none> 93m kube-proxy k8s.gcr.io/kube-proxy:v1.14.9 k8s-app=kube-proxy

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/coredns 2/2 2 2 93m coredns k8s.gcr.io/coredns:1.3.1 k8s-app=kube-dns

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/coredns-584795fc57 2 2 2 93m coredns k8s.gcr.io/coredns:1.3.1 k8s-app=kube-dns,pod-template-hash=584795fc57

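Hmm, with these in place, Pods in the cluster should be able to reach each other across nodes...

So I did some network troubleshooting. See the following for details.

コンテナ型仮想化技術 Study06' / Kubernetesネットワーク問題判別

After applying the fix described there, I deleted the resources and started over.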

vagrant@master:~/codes_for_lessons/step09$ kubectl apply -f deploy.yml

deployment.apps/web-deploy created

vagrant@master:~/codes_for_lessons/step09$ kubectl apply -f svc.yml

service/web-service created

Verify

vagrant@master:~$ kubectl get all -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/web-deploy-6b5f67b747-4bl7p 1/1 Running 0 50s 10.244.2.22 node2 <none> <none>

pod/web-deploy-6b5f67b747-wfslh 1/1 Running 0 50s 10.244.2.23 node2 <none> <none>

pod/web-deploy-6b5f67b747-x7g2k 1/1 Running 0 50s 10.244.1.34 node1 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.32.0.1 <none> 443/TCP 39d <none>

service/web-service ClusterIP 10.32.0.218 <none> 80/TCP 36s app=web

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/web-deploy 3/3 3 3 50s nginx nginx:latest app=web

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/web-deploy-6b5f67b747 3 3 3 50s nginx nginx:latest app=web,pod-template-hash=6b5f67b747

Create a Pod to use as a jump host for testing and access the service from there.

Start a busybox Pod and run sh.

vagrant@master:~$ kubectl run -it busybox --restart=Never --rm --image=busybox:latest sh

If you don't see a command prompt, try pressing enter.

/ #

Checking the Pods from another shell at this point shows that this busybox Pod came up on node1.

vagrant@master:~$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

busybox 1/1 Running 0 13s 10.244.1.35 node1 <none> <none>

web-deploy-6b5f67b747-4bl7p 1/1 Running 0 98s 10.244.2.22 node2 <none> <none>

web-deploy-6b5f67b747-wfslh 1/1 Running 0 98s 10.244.2.23 node2 <none> <none>

web-deploy-6b5f67b747-x7g2k 1/1 Running 0 98s 10.244.1.34 node1 <none> <none>

Accessing the service from the busybox shell...

/ # wget -q -O - http://web-service

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Checking the environment variables, using the upper-cased service name as the key...

/ # env | grep WEB_SERVICE

WEB_SERVICE_SERVICE_PORT=80

WEB_SERVICE_PORT=tcp://10.32.0.218:80

WEB_SERVICE_PORT_80_TCP_ADDR=10.32.0.218

WEB_SERVICE_PORT_80_TCP_PORT=80

WEB_SERVICE_PORT_80_TCP_PROTO=tcp

WEB_SERVICE_PORT_80_TCP=tcp://10.32.0.218:80

WEB_SERVICE_SERVICE_HOST=10.32.0.218
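The variable names in this output follow a fixed pattern: the service name is upper-cased and dashes become underscores. The Python sketch below reproduces the names and values shown above from the service name, cluster IP, and port. The function name `service_env_vars` is hypothetical and the code is only a model of the naming pattern, not code taken from Kubernetes.

```python
def service_env_vars(name, cluster_ip, port, proto="tcp"):
    """Model the environment variables a Pod receives for a service."""
    prefix = name.upper().replace("-", "_")
    url = f"{proto}://{cluster_ip}:{port}"
    port_prefix = f"{prefix}_PORT_{port}_{proto.upper()}"
    return {
        f"{prefix}_SERVICE_HOST": cluster_ip,
        f"{prefix}_SERVICE_PORT": str(port),
        f"{prefix}_PORT": url,
        port_prefix: url,
        f"{port_prefix}_PROTO": proto,
        f"{port_prefix}_PORT": str(port),
        f"{port_prefix}_ADDR": cluster_ip,
    }


for key, value in service_env_vars("web-service", "10.32.0.218", 80).items():
    print(f"{key}={value}")
```

Note that these variables are set when a container starts, so a Pod only sees services that already existed at that time.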

Ping does not get through, though...

/ # ping -c 3 web-service

PING web-service (10.32.0.218): 56 data bytes

--- web-service ping statistics ---

3 packets transmitted, 0 packets received, 100% packet loss
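My understanding is that this packet loss is expected rather than a failure. A ClusterIP is a virtual address, and kube-proxy (assuming it runs in the common iptables mode on this cluster) only forwards the protocol and port declared in the Service, so nothing answers ICMP echo. Checking at the HTTP level is therefore a better health test than ping. Below is a small sketch of such a check using only options that BusyBox wget provides; `check_service` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: test a Service over HTTP instead of ICMP.
# check_service is a made-up helper; -q, -T and -O are BusyBox wget options.

# check_service URL [TIMEOUT_SECONDS]
# Prints "OK <url>" and returns 0 when wget fetches the URL successfully,
# prints "NG <url>" and returns 1 otherwise.
check_service() {
    url=$1
    timeout=${2:-3}
    if wget -q -T "$timeout" -O /dev/null "$url"; then
        echo "OK $url"
    else
        echo "NG $url"
        return 1
    fi
}

# Example, to be run inside the busybox Pod:
#   check_service http://web-service
```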

type: ClusterIP (minikube environment)

Create the deployment and the service

vagrant@minikube:~/codes_for_lessons/step09$ kubectl apply -f deploy.yml

deployment.apps/web-deploy created

vagrant@minikube:~/codes_for_lessons/step09$ kubectl apply -f svc.yml

service/web-service created
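The contents of deploy.yml and svc.yml are not reproduced in these notes, so here is a rough sketch of equivalent manifests, reconstructed only from what `kubectl get all -o wide` reports (names, 3 replicas, the nginx:latest image, the app=web selector, port 80). The actual files in the repository may differ. The helper names `print_deploy` and `print_service` are made up for this note.

```shell
#!/bin/sh
# Sketch: manifests reconstructed from the kubectl output, not copied
# from the repository.

# Deployment with 3 nginx replicas labelled app=web.
print_deploy() {
cat <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-deploy
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: nginx
        image: nginx:latest
EOF
}

# Service selecting app=web; the type defaults to ClusterIP when omitted.
print_service() {
cat <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: web-service
spec:
  selector:
    app: web
  ports:
  - protocol: TCP
    port: 80
EOF
}

# Example (needs a cluster):
#   print_deploy  | kubectl apply -f -
#   print_service | kubectl apply -f -
```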

Check

vagrant@minikube:~$ kubectl get all -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/web-deploy-654cd6d4d8-gsn8r 1/1 Running 0 24s 172.17.0.4 minikube <none> <none>

pod/web-deploy-654cd6d4d8-qz9fm 1/1 Running 0 24s 172.17.0.3 minikube <none> <none>

pod/web-deploy-654cd6d4d8-xt78f 1/1 Running 0 24s 172.17.0.2 minikube <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 20d <none>

service/web-service ClusterIP 10.96.176.255 <none> 80/TCP 17s app=web

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/web-deploy 3/3 3 3 24s nginx nginx:latest app=web

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/web-deploy-654cd6d4d8 3 3 3 24s nginx nginx:latest app=web,pod-template-hash=654cd6d4d8

Check from busybox

vagrant@minikube:~$ kubectl run -it busybox --restart=Never --rm --image=busybox sh

If you don't see a command prompt, try pressing enter.

/ # wget -q -O - http://web-service

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

/ # nslookup web-service

Server: 10.96.0.10

Address: 10.96.0.10:53

Name: web-service.default.svc.cluster.local

Address: 10.96.176.255

*** Can't find web-service.svc.cluster.local: No answer

*** Can't find web-service.cluster.local: No answer

*** Can't find web-service.dhcp.makuhari.japan.ibm.com: No answer

*** Can't find web-service.default.svc.cluster.local: No answer

*** Can't find web-service.svc.cluster.local: No answer

*** Can't find web-service.cluster.local: No answer

*** Can't find web-service.dhcp.makuhari.japan.ibm.com: No answer

/ # ping -c 3 web-service

PING web-service (10.96.176.255): 56 data bytes

--- web-service ping statistics ---

3 packets transmitted, 0 packets received, 100% packet loss
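About the nslookup output above: the short name `web-service` is expanded with the search domains from the Pod's /etc/resolv.conf, and only the first candidate, `web-service.default.svc.cluster.local`, exists. So the "Can't find" lines for the other suffixes look harmless to me (the second group is probably the IPv6 lookup, but that is a guess). The sketch below lists the cluster-side candidates, assuming the namespace `default` and the cluster domain `cluster.local` seen in the output. `dns_candidates` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: how a short Service name expands through the cluster search list.
# Defaults (namespace "default", domain "cluster.local") are taken from the
# nslookup output; the node's own search domains are not included.

# dns_candidates NAME [NAMESPACE] [CLUSTER_DOMAIN]
dns_candidates() {
    name=$1
    ns=${2:-default}
    domain=${3:-cluster.local}
    for suffix in "$ns.svc.$domain" "svc.$domain" "$domain"; do
        echo "$name.$suffix"
    done
}

dns_candidates web-service
```

The first line printed is the name that actually resolves. From a Pod in another namespace, the Service would have to be addressed as `web-service.default` or by the full name.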

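In the minikube environment, perhaps because there is only one node, access to http://web-service succeeds no matter how many times I try (it works as intended!).

Name resolution with nslookup works too.

Ping to "web-service" does not go through, though. I wonder whether this behavior is correct.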

type: NodePort (multi-node environment)

Add the following service

https://github.com/takara9/codes_for_lessons/blob/master/step09/svc-np.yml

vagrant@master:~/codes_for_lessons/step09$ kubectl apply -f svc-np.yml

service/web-service-np created

Check

vagrant@master:~$ kubectl get service -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.32.0.1 <none> 443/TCP 39d <none>

web-service ClusterIP 10.32.0.192 <none> 80/TCP 107s app=web

web-service-np NodePort 10.32.0.127 <none> 80:32399/TCP 65s app=web

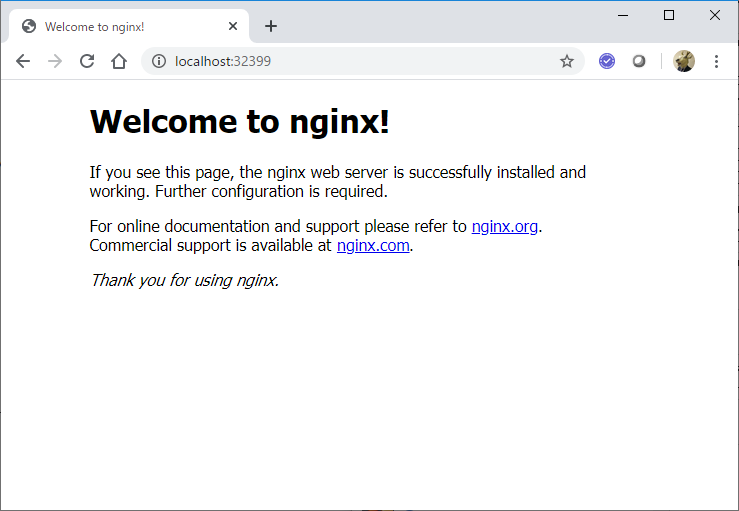

It was published on port 32399.

Check the listening port on node1

vagrant@node1:~$ netstat -an |grep 32399

tcp6 0 0 :::32399 :::* LISTEN

It is listening.

This node runs as a guest OS in VirtualBox on Windows, so I made this port reachable from the host OS under the same number and then accessed it from a browser on the host OS (Windows)...

I could reach the nginx server!
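The `80:32399/TCP` in the PORT(S) column reads as service port, then node port, then protocol. When nodePort is not fixed in the manifest, the port is picked from a range that defaults to 30000-32767. The sketch below pulls the node port out of that column in plain shell; `node_port` and `in_default_range` are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: read the node port out of a PORT(S) value such as 80:32399/TCP.

# node_port SPEC
# Prints the node port, or returns 1 if SPEC has no "port:nodePort/proto" form.
node_port() {
    case $1 in
        *:*/*) ;;
        *) return 1 ;;
    esac
    rest=${1#*:}          # drop "80:"   -> 32399/TCP
    echo "${rest%%/*}"    # drop "/TCP"  -> 32399
}

# in_default_range PORT
# True when PORT lies in the default NodePort range 30000-32767.
in_default_range() {
    [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

port=$(node_port "80:32399/TCP")
if in_default_range "$port"; then
    echo "nodePort $port is in the default range"
fi
```

On a live cluster, `kubectl get service web-service-np -o jsonpath='{.spec.ports[0].nodePort}'` returns the same number without any string handling.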

type: NodePort (minikube environment)

Add the following service

https://github.com/takara9/codes_for_lessons/blob/master/step09/svc-np.yml

vagrant@minikube:~/codes_for_lessons/step09$ kubectl apply -f svc-np.yml

service/web-service-np created

Check

vagrant@minikube:~$ kubectl get service -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 20d <none>

web-service ClusterIP 10.96.176.255 <none> 80/TCP 10m app=web

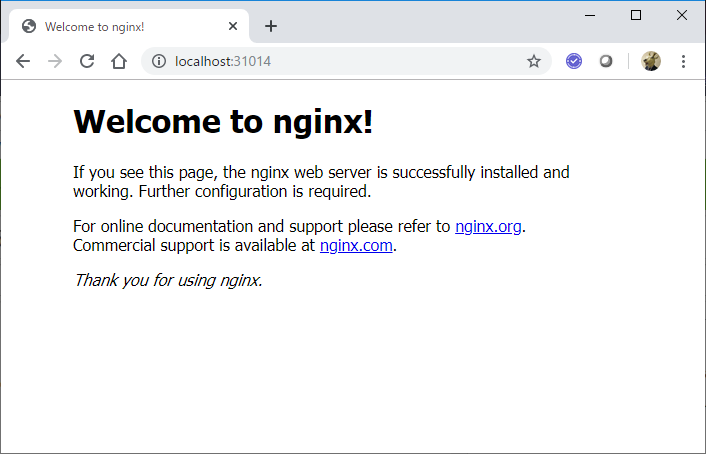

web-service-np NodePort 10.111.60.245 <none> 80:31014/TCP 19s app=web

It was published on port 31014. Looking at the port on the node...

vagrant@minikube:~$ netstat -an | grep 31014

tcp6 0 0 :::31014 :::* LISTEN

It is listening.

This node runs as a guest OS in VirtualBox on Windows, so I made this port reachable from the host OS under the same number and then accessed it from a browser on the host OS (Windows)...

I could reach the nginx server!
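For reference, one possible way to expose the port to the host under the same number is a VirtualBox NAT port-forwarding rule. This is only a sketch under assumptions: it presumes the VM's first adapter is NAT (the usual Vagrant setup) and uses a placeholder VM name, and it may not be how the forwarding was actually configured here. `natpf_rule` is a hypothetical helper that only builds the rule string.

```shell
#!/bin/sh
# Sketch: build a VirtualBox NAT port-forwarding rule for a NodePort.
# Rule format: name,protocol,host-ip,host-port,guest-ip,guest-port
# (empty host-ip / guest-ip means "any").

# natpf_rule PORT
natpf_rule() {
    port=$1
    echo "nodeport-$port,tcp,,$port,,$port"
}

natpf_rule 31014

# Example for a running VM (replace <vm-name> with the real VM name):
#   VBoxManage controlvm "<vm-name>" natpf1 "$(natpf_rule 31014)"
```

After such a rule is in place, http://localhost:31014 on the host should reach the NodePort on the guest.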

type: ExternalName

Add the following service so that www.yahoo.co.jp can be reached from inside a Pod under the name yahoo.

https://github.com/takara9/codes_for_lessons/blob/master/step09/svc-ext-dns.yml
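As I understand it, an ExternalName Service has no selector and no ClusterIP; the cluster DNS simply answers the Service name with a CNAME to the external host. A minimal manifest along those lines is sketched below. It is written from the Service name and external name used in this section, not copied from svc-ext-dns.yml, so the real file may differ. `print_ext_service` is a made-up helper name.

```shell
#!/bin/sh
# Sketch: minimal ExternalName Service mapping "yahoo" to www.yahoo.co.jp.
# Written from the names used in this section; not the repository file.

print_ext_service() {
cat <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: yahoo
spec:
  type: ExternalName
  externalName: www.yahoo.co.jp
EOF
}

# Example (needs a cluster):
#   print_ext_service | kubectl apply -f -
```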

Access from the Pod (busybox) before adding the service

/ # ping -c 3 yahoo

ping: bad address 'yahoo'

/ # nslookup yahoo

Server: 10.32.0.10

Address: 10.32.0.10:53

** server can't find yahoo.default.svc.cluster.local: NXDOMAIN

*** Can't find yahoo.svc.cluster.local: No answer

*** Can't find yahoo.cluster.local: No answer

*** Can't find yahoo.dhcp.makuhari.japan.ibm.com: No answer

*** Can't find yahoo.default.svc.cluster.local: No answer

*** Can't find yahoo.svc.cluster.local: No answer

*** Can't find yahoo.cluster.local: No answer

*** Can't find yahoo.dhcp.makuhari.japan.ibm.com: No answer

Add the service

vagrant@master:~/codes_for_lessons/step09$ kubectl apply -f svc-ext-dns.yml

service/yahoo created

vagrant@master:~/codes_for_lessons/step09$ kubectl get service -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.32.0.1 <none> 443/TCP 165m <none>

web-service ClusterIP 10.32.0.79 <none> 80/TCP 100m app=web

yahoo ExternalName <none> www.yahoo.co.jp <none> 17s <none>

Access from the Pod (busybox) after adding the service

/ # ping -c 3 yahoo

PING yahoo (183.79.217.124): 56 data bytes

64 bytes from 183.79.217.124: seq=0 ttl=44 time=25.644 ms

64 bytes from 183.79.217.124: seq=1 ttl=44 time=24.332 ms

64 bytes from 183.79.217.124: seq=2 ttl=44 time=24.400 ms

--- yahoo ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 24.332/24.792/25.644 ms

/ # nslookup yahoo

Server: 10.32.0.10

Address: 10.32.0.10:53

** server can't find yahoo.default.svc.cluster.local: NXDOMAIN

*** Can't find yahoo.svc.cluster.local: No answer

*** Can't find yahoo.cluster.local: No answer

*** Can't find yahoo.dhcp.makuhari.japan.ibm.com: No answer

*** Can't find yahoo.default.svc.cluster.local: No answer

*** Can't find yahoo.svc.cluster.local: No answer

*** Can't find yahoo.cluster.local: No answer

*** Can't find yahoo.dhcp.makuhari.japan.ibm.com: No answer

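I confirmed that, from the container in the Pod, ping resolves the name yahoo and gets replies (although the busybox nslookup output above still reports NXDOMAIN).

Note: come to think of it, a Pod can reach servers outside the cluster after all. Networking is hard.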