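Introduction

This is a log of what I learned about Kubernetes networking while troubleshooting a network problem in the Kubernetes cluster environment, built with Vagrant + Ansible, that is provided in the repository below.

https://github.com/takara9/vagrant-kubernetes

Symptom: Pods on different nodes cannot communicate with each other! (Ping works between Pods running on the same node.)

Note: this environment uses flannel as its network implementation.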

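Related articles

Container Virtualization Technology Study01 / Docker Basics

Container Virtualization Technology Study02 / Docker Registry

Container Virtualization Technology Study03 / Docker Compose

Container Virtualization Technology Study04 / Minikube & Basic kubectl Operations

Container Virtualization Technology Study05 / Pod Operations

Container Virtualization Technology Study06 / ReplicaSet, Deployment, Service

Container Virtualization Technology Study06' / Kubernetes Network Troubleshooting

Container Virtualization Technology Study07 / Storage

Container Virtualization Technology Study08 / StatefulSet, Ingress

Container Virtualization Technology Study09 / Helm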

References

A Summary of Docker and Kubernetes Pod Networking

Kubernetes Network Configuration

Multi-Host Docker Container Communication, Part 1: Docker Networking Basics

Multi-Host Docker Container Communication, Part 2: Port Forwarding and Overlay Network

Multi-Host Docker Container Communication, Part 3: Kubernetes Networking (CNI, kube-proxy, kube-dns)

Using NAT and Host-Only Adapters with VirtualBox CentOS 7.2 64-bit

Kubernetes Network Deep Dive (NodePort, ClusterIP, Flannel)

Installing Kubernetes v1.8 + Flannel with kubeadm

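Checking the current configuration

Pods for checking the situation

To check the network information, I created and ran simple Pods with the following manifests.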

apiVersion: v1
kind: Pod
metadata:
  name: testpod01
spec:
  restartPolicy: Never
  containers:
  - name: alpine01
    image: alpine:latest
    command: ["sleep", "36000"]
  - name: alpine02
    image: alpine:latest
    command: ["sleep", "36000"]
---
apiVersion: v1
kind: Pod
metadata:
  name: testpod02
spec:
  restartPolicy: Never
  containers:
  - name: alpine01
    image: alpine:latest
    command: ["sleep", "36000"]
  - name: alpine02
    image: alpine:latest
    command: ["sleep", "36000"]

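Note: by default the scheduler decides which node a Pod runs on, so I repeated delete/apply until testpod01 happened to land on node1 and testpod02 on node2. (It should also be possible to pin a Pod to a node explicitly, for example with nodeName or nodeSelector in the Pod spec.)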
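On the question of pinning a Pod to a node: the Pod spec has a `nodeName` field (and `nodeSelector` for label-based placement), so the delete/apply retries should not be strictly necessary. The following is a minimal sketch, not what I actually ran. It builds the same two test Pods with `nodeName` set and prints them as JSON, which kubectl accepts as well as YAML. The helper name `build_test_pod` is made up for illustration, and the node names `node1` and `node2` are the ones used in this environment.

```python
import json


def build_test_pod(pod_name, node_name):
    """Return a Pod manifest (as a dict) pinned to node_name via spec.nodeName."""
    containers = [
        {"name": name, "image": "alpine:latest", "command": ["sleep", "36000"]}
        for name in ("alpine01", "alpine02")
    ]
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            # nodeName bypasses the scheduler and places the Pod on this node.
            "nodeName": node_name,
            "restartPolicy": "Never",
            "containers": containers,
        },
    }


if __name__ == "__main__":
    pods = [
        build_test_pod("testpod01", "node1"),
        build_test_pod("testpod02", "node2"),
    ]
    # A v1 List lets both Pods be applied from a single JSON document.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": pods}, indent=2))
```

If this were saved as, say, gen_pods.py (a hypothetical file name), the output could be piped into `kubectl apply -f -`.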

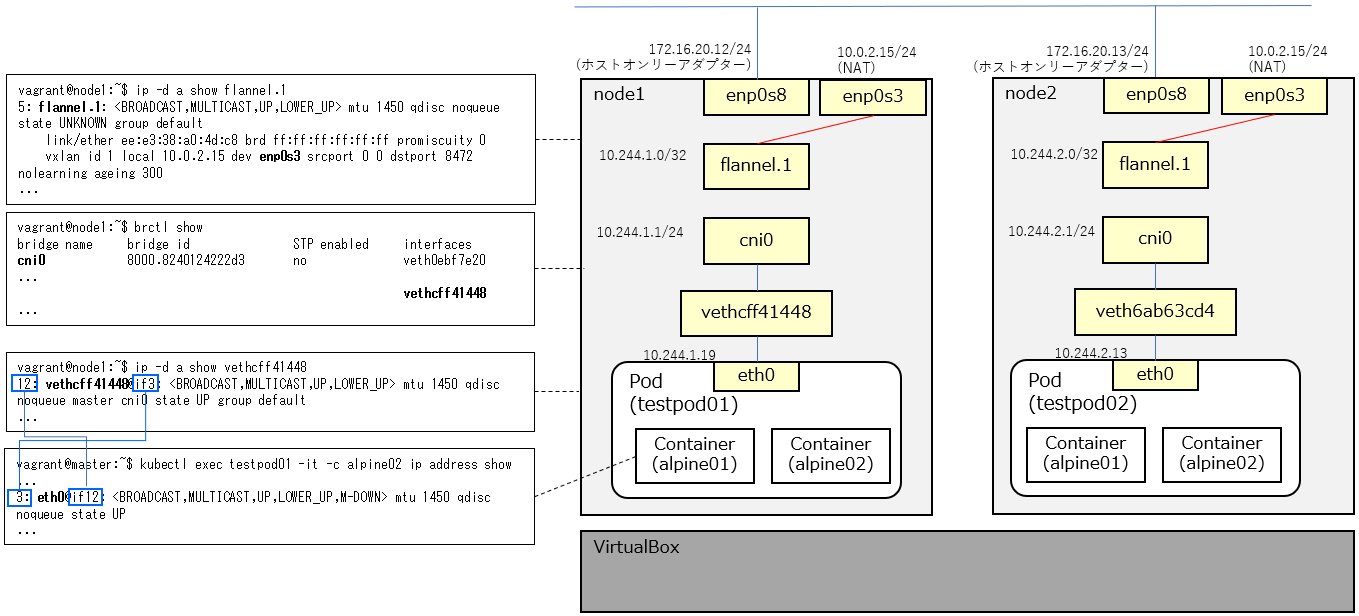

構成図

作成された環境のネットワーク関連の情報を整理して図にするとこんな感じ。

(結論としては、図の赤線のところが想定と違っていた!)

Pod information

vagrant@master:~$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

testpod01 2/2 Running 0 11s 10.244.1.19 node1 <none> <none>

testpod02 2/2 Running 0 26s 10.244.2.13 node2 <none> <none>

web-deploy-6b5f67b747-5qr49 1/1 Running 3 22d 10.244.2.9 node2 <none> <none>

web-deploy-6b5f67b747-8cjw4 1/1 Running 3 22d 10.244.1.14 node1 <none> <none>

web-deploy-6b5f67b747-w8qbm 1/1 Running 3 22d 10.244.2.8 node2 <none> <none>

node1 information

ip address

vagrant@node1:~$ ip address show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 02:2e:c1:de:99:b7 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global enp0s3

valid_lft forever preferred_lft forever

inet6 fe80::2e:c1ff:fede:99b7/64 scope link

valid_lft forever preferred_lft forever

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:6e:db:23 brd ff:ff:ff:ff:ff:ff

inet 172.16.20.12/24 brd 172.16.20.255 scope global enp0s8

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fe6e:db23/64 scope link

valid_lft forever preferred_lft forever

4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:9a:89:56:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

5: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether ee:e3:38:a0:4d:c8 brd ff:ff:ff:ff:ff:ff

inet 10.244.1.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::ece3:38ff:fea0:4dc8/64 scope link

valid_lft forever preferred_lft forever

6: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether 82:40:12:42:22:d3 brd ff:ff:ff:ff:ff:ff

inet 10.244.1.1/24 scope global cni0

valid_lft forever preferred_lft forever

inet6 fe80::8040:12ff:fe42:22d3/64 scope link

valid_lft forever preferred_lft forever

7: vetha1166fa7@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 82:fe:98:c0:22:b7 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::80fe:98ff:fec0:22b7/64 scope link

valid_lft forever preferred_lft forever

8: veth71c51d57@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 46:f5:63:60:dc:a4 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::44f5:63ff:fe60:dca4/64 scope link

valid_lft forever preferred_lft forever

9: veth0ebf7e20@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 5e:7d:6f:20:2e:b3 brd ff:ff:ff:ff:ff:ff link-netnsid 2

inet6 fe80::5c7d:6fff:fe20:2eb3/64 scope link

valid_lft forever preferred_lft forever

12: vethcff41448@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 62:e9:61:bc:69:38 brd ff:ff:ff:ff:ff:ff link-netnsid 3

inet6 fe80::60e9:61ff:febc:6938/64 scope link

valid_lft forever preferred_lft forever

flannel.1

vagrant@node1:~$ ip -d a show flannel.1

5: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether ee:e3:38:a0:4d:c8 brd ff:ff:ff:ff:ff:ff promiscuity 0

vxlan id 1 local 10.0.2.15 dev enp0s3 srcport 0 0 dstport 8472 nolearning ageing 300

inet 10.244.1.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::ece3:38ff:fea0:4dc8/64 scope link

valid_lft forever preferred_lft forever

cni0

vagrant@node1:~$ brctl show

bridge name bridge id STP enabled interfaces

cni0 8000.8240124222d3 no veth0ebf7e20

veth71c51d57

vetha1166fa7

vethcff41448

docker0 8000.02429a895603 no

vethxxx

vagrant@node1:~$ ip -d a show veth0ebf7e20

9: veth0ebf7e20@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 5e:7d:6f:20:2e:b3 brd ff:ff:ff:ff:ff:ff link-netnsid 2 promiscuity 1

veth

bridge_slave state forwarding priority 32 cost 2 hairpin on guard off root_block off fastleave off learning on flood on

inet6 fe80::5c7d:6fff:fe20:2eb3/64 scope link

valid_lft forever preferred_lft forever

vagrant@node1:~$ ip -d a show veth71c51d57

8: veth71c51d57@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 46:f5:63:60:dc:a4 brd ff:ff:ff:ff:ff:ff link-netnsid 1 promiscuity 1

veth

bridge_slave state forwarding priority 32 cost 2 hairpin on guard off root_block off fastleave off learning on flood on

inet6 fe80::44f5:63ff:fe60:dca4/64 scope link

valid_lft forever preferred_lft forever

vagrant@node1:~$ ip -d a show vetha1166fa7

7: vetha1166fa7@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 82:fe:98:c0:22:b7 brd ff:ff:ff:ff:ff:ff link-netnsid 0 promiscuity 1

veth

bridge_slave state forwarding priority 32 cost 2 hairpin on guard off root_block off fastleave off learning on flood on

inet6 fe80::80fe:98ff:fec0:22b7/64 scope link

valid_lft forever preferred_lft forever

vagrant@node1:~$ ip -d a show vethcff41448

12: vethcff41448@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 62:e9:61:bc:69:38 brd ff:ff:ff:ff:ff:ff link-netnsid 3 promiscuity 1

veth

bridge_slave state forwarding priority 32 cost 2 hairpin on guard off root_block off fastleave off learning on flood on

inet6 fe80::60e9:61ff:febc:6938/64 scope link

valid_lft forever preferred_lft forever

route

vagrant@node1:~$ route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default 10.0.2.2 0.0.0.0 UG 0 0 0 enp0s3

10.0.2.0 * 255.255.255.0 U 0 0 0 enp0s3

10.244.0.0 10.244.0.0 255.255.255.0 UG 0 0 0 flannel.1

10.244.1.0 * 255.255.255.0 U 0 0 0 cni0

10.244.2.0 10.244.2.0 255.255.255.0 UG 0 0 0 flannel.1

172.16.20.0 * 255.255.255.0 U 0 0 0 enp0s8

172.17.0.0 * 255.255.0.0 U 0 0 0 docker0
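As a reading aid for the routing table above, here is a small sketch of a longest-prefix-match lookup over node1's routes, which is how the kernel picks among overlapping entries. The names `NODE1_ROUTES` and `lookup` are my own; the entries are transcribed from the `route` output, with each Genmask converted to a prefix length.

```python
import ipaddress

# (destination network, outgoing interface) pairs from node1's `route` output.
NODE1_ROUTES = [
    ("0.0.0.0/0", "enp0s3"),        # default
    ("10.0.2.0/24", "enp0s3"),
    ("10.244.0.0/24", "flannel.1"),
    ("10.244.1.0/24", "cni0"),
    ("10.244.2.0/24", "flannel.1"),
    ("172.16.20.0/24", "enp0s8"),
    ("172.17.0.0/16", "docker0"),
]


def lookup(routes, dest):
    """Return the outgoing interface for dest using longest-prefix match."""
    addr = ipaddress.ip_address(dest)
    matches = []
    for net_text, iface in routes:
        net = ipaddress.ip_network(net_text)
        if addr in net:
            matches.append((net.prefixlen, iface))
    if not matches:
        raise LookupError(f"no route to {dest}")
    # The most specific (longest) prefix wins.
    return max(matches)[1]


if __name__ == "__main__":
    for dest in ("10.244.1.14", "10.244.2.13"):
        print(dest, "->", lookup(NODE1_ROUTES, dest))
```

With these routes, a Pod on the same node (10.244.1.14) is reached through cni0, while testpod02 (10.244.2.13) is sent out through flannel.1, so only cross-node traffic depends on the VXLAN device.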

iptables

vagrant@node1:~$ sudo iptables-save

# Generated by iptables-save v1.6.0 on Thu Dec 12 07:33:20 2019

*nat

:PREROUTING ACCEPT [0:0]

:INPUT ACCEPT [0:0]

:OUTPUT ACCEPT [3:180]

:POSTROUTING ACCEPT [3:180]

:DOCKER - [0:0]

:KUBE-MARK-DROP - [0:0]

:KUBE-MARK-MASQ - [0:0]

:KUBE-NODEPORTS - [0:0]

:KUBE-POSTROUTING - [0:0]

:KUBE-SEP-7GFCTXWQKX25H6R4 - [0:0]

:KUBE-SEP-DHQ66TZSUU4UMWNL - [0:0]

:KUBE-SEP-E4UY4UBD4GOYNURB - [0:0]

:KUBE-SEP-L6GLNUXQY5PA3CTM - [0:0]

:KUBE-SEP-QUQ56RLGZWOVRUKT - [0:0]

:KUBE-SEP-TQ45FLVQ3Q7BSK2N - [0:0]

:KUBE-SEP-U27GJ7HRAVMAXEJO - [0:0]

:KUBE-SEP-UL6KPOJKIOHGI6N5 - [0:0]

:KUBE-SEP-X24BMK6YRKXG7IN3 - [0:0]

:KUBE-SEP-XMYN27PEYRASWXN5 - [0:0]

:KUBE-SERVICES - [0:0]

:KUBE-SVC-ERIFXISQEP7F7OF4 - [0:0]

:KUBE-SVC-F5FFDDFGTCKTJ7GQ - [0:0]

:KUBE-SVC-JD5MR3NA4I4DYORP - [0:0]

:KUBE-SVC-NPX46M4PTMTKRN6Y - [0:0]

:KUBE-SVC-TCOU7JCQXEZGVUNU - [0:0]

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A PREROUTING -m addrtype --dst-type LOCAL -j DOCKER

-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT ! -d 127.0.0.0/8 -m addrtype --dst-type LOCAL -j DOCKER

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -s 172.17.0.0/16 ! -o docker0 -j MASQUERADE

-A POSTROUTING -s 10.244.0.0/16 -d 10.244.0.0/16 -j RETURN

-A POSTROUTING -s 10.244.0.0/16 ! -d 224.0.0.0/4 -j MASQUERADE

-A POSTROUTING ! -s 10.244.0.0/16 -d 10.244.1.0/24 -j RETURN

-A POSTROUTING ! -s 10.244.0.0/16 -d 10.244.0.0/16 -j MASQUERADE

-A DOCKER -i docker0 -j RETURN

-A KUBE-MARK-DROP -j MARK --set-xmark 0x8000/0x8000

-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE

-A KUBE-SEP-7GFCTXWQKX25H6R4 -s 10.244.1.16/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-7GFCTXWQKX25H6R4 -p udp -m udp -j DNAT --to-destination 10.244.1.16:53

-A KUBE-SEP-DHQ66TZSUU4UMWNL -s 10.244.1.15/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-DHQ66TZSUU4UMWNL -p udp -m udp -j DNAT --to-destination 10.244.1.15:53

-A KUBE-SEP-E4UY4UBD4GOYNURB -s 10.244.2.9/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-E4UY4UBD4GOYNURB -p tcp -m tcp -j DNAT --to-destination 10.244.2.9:80

-A KUBE-SEP-L6GLNUXQY5PA3CTM -s 172.16.20.11/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-L6GLNUXQY5PA3CTM -p tcp -m tcp -j DNAT --to-destination 172.16.20.11:6443

-A KUBE-SEP-QUQ56RLGZWOVRUKT -s 10.244.1.15/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-QUQ56RLGZWOVRUKT -p tcp -m tcp -j DNAT --to-destination 10.244.1.15:9153

-A KUBE-SEP-TQ45FLVQ3Q7BSK2N -s 10.244.1.16/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-TQ45FLVQ3Q7BSK2N -p tcp -m tcp -j DNAT --to-destination 10.244.1.16:9153

-A KUBE-SEP-U27GJ7HRAVMAXEJO -s 10.244.1.15/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-U27GJ7HRAVMAXEJO -p tcp -m tcp -j DNAT --to-destination 10.244.1.15:53

-A KUBE-SEP-UL6KPOJKIOHGI6N5 -s 10.244.1.14/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-UL6KPOJKIOHGI6N5 -p tcp -m tcp -j DNAT --to-destination 10.244.1.14:80

-A KUBE-SEP-X24BMK6YRKXG7IN3 -s 10.244.2.8/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-X24BMK6YRKXG7IN3 -p tcp -m tcp -j DNAT --to-destination 10.244.2.8:80

-A KUBE-SEP-XMYN27PEYRASWXN5 -s 10.244.1.16/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-XMYN27PEYRASWXN5 -p tcp -m tcp -j DNAT --to-destination 10.244.1.16:53

-A KUBE-SERVICES ! -s 10.244.0.0/16 -d 10.32.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SERVICES -d 10.32.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y

-A KUBE-SERVICES ! -s 10.244.0.0/16 -d 10.32.0.79/32 -p tcp -m comment --comment "default/web-service: cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ

-A KUBE-SERVICES -d 10.32.0.79/32 -p tcp -m comment --comment "default/web-service: cluster IP" -m tcp --dport 80 -j KUBE-SVC-F5FFDDFGTCKTJ7GQ

-A KUBE-SERVICES ! -s 10.244.0.0/16 -d 10.32.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SERVICES -d 10.32.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-TCOU7JCQXEZGVUNU

-A KUBE-SERVICES ! -s 10.244.0.0/16 -d 10.32.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SERVICES -d 10.32.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4

-A KUBE-SERVICES ! -s 10.244.0.0/16 -d 10.32.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ

-A KUBE-SERVICES -d 10.32.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP

-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-U27GJ7HRAVMAXEJO

-A KUBE-SVC-ERIFXISQEP7F7OF4 -j KUBE-SEP-XMYN27PEYRASWXN5

-A KUBE-SVC-F5FFDDFGTCKTJ7GQ -m statistic --mode random --probability 0.33332999982 -j KUBE-SEP-UL6KPOJKIOHGI6N5

-A KUBE-SVC-F5FFDDFGTCKTJ7GQ -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-X24BMK6YRKXG7IN3

-A KUBE-SVC-F5FFDDFGTCKTJ7GQ -j KUBE-SEP-E4UY4UBD4GOYNURB

-A KUBE-SVC-JD5MR3NA4I4DYORP -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-QUQ56RLGZWOVRUKT

-A KUBE-SVC-JD5MR3NA4I4DYORP -j KUBE-SEP-TQ45FLVQ3Q7BSK2N

-A KUBE-SVC-NPX46M4PTMTKRN6Y -j KUBE-SEP-L6GLNUXQY5PA3CTM

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-DHQ66TZSUU4UMWNL

-A KUBE-SVC-TCOU7JCQXEZGVUNU -j KUBE-SEP-7GFCTXWQKX25H6R4

COMMIT

# Completed on Thu Dec 12 07:33:20 2019

# Generated by iptables-save v1.6.0 on Thu Dec 12 07:33:20 2019

*filter

:INPUT ACCEPT [196:22804]

:FORWARD DROP [0:0]

:OUTPUT ACCEPT [154:23157]

:DOCKER - [0:0]

:DOCKER-ISOLATION-STAGE-1 - [0:0]

:DOCKER-ISOLATION-STAGE-2 - [0:0]

:DOCKER-USER - [0:0]

:KUBE-EXTERNAL-SERVICES - [0:0]

:KUBE-FIREWALL - [0:0]

:KUBE-FORWARD - [0:0]

:KUBE-SERVICES - [0:0]

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A INPUT -j KUBE-FIREWALL

-A FORWARD -m comment --comment "kubernetes forwarding rules" -j KUBE-FORWARD

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A FORWARD -j DOCKER-USER

-A FORWARD -j DOCKER-ISOLATION-STAGE-1

-A FORWARD -o docker0 -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A FORWARD -o docker0 -j DOCKER

-A FORWARD -i docker0 ! -o docker0 -j ACCEPT

-A FORWARD -i docker0 -o docker0 -j ACCEPT

-A FORWARD -s 10.244.0.0/16 -j ACCEPT

-A FORWARD -d 10.244.0.0/16 -j ACCEPT

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -j KUBE-FIREWALL

-A DOCKER-ISOLATION-STAGE-1 -i docker0 ! -o docker0 -j DOCKER-ISOLATION-STAGE-2

-A DOCKER-ISOLATION-STAGE-1 -j RETURN

-A DOCKER-ISOLATION-STAGE-2 -o docker0 -j DROP

-A DOCKER-ISOLATION-STAGE-2 -j RETURN

-A DOCKER-USER -j RETURN

-A KUBE-FIREWALL -m comment --comment "kubernetes firewall for dropping marked packets" -m mark --mark 0x8000/0x8000 -j DROP

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding rules" -m mark --mark 0x4000/0x4000 -j ACCEPT

-A KUBE-FORWARD -s 10.244.0.0/16 -m comment --comment "kubernetes forwarding conntrack pod source rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A KUBE-FORWARD -d 10.244.0.0/16 -m comment --comment "kubernetes forwarding conntrack pod destination rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

COMMIT

# Completed on Thu Dec 12 07:33:20 2019

node2 information

ip address

vagrant@node2:~$ ip address show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 02:2e:c1:de:99:b7 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global enp0s3

valid_lft forever preferred_lft forever

inet6 fe80::2e:c1ff:fede:99b7/64 scope link

valid_lft forever preferred_lft forever

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:10:b3:8c brd ff:ff:ff:ff:ff:ff

inet 172.16.20.13/24 brd 172.16.20.255 scope global enp0s8

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fe10:b38c/64 scope link

valid_lft forever preferred_lft forever

4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:83:af:29:fa brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

5: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether fe:4e:ee:24:6f:2e brd ff:ff:ff:ff:ff:ff

inet 10.244.2.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::fc4e:eeff:fe24:6f2e/64 scope link

valid_lft forever preferred_lft forever

6: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether 72:75:5d:48:82:35 brd ff:ff:ff:ff:ff:ff

inet 10.244.2.1/24 scope global cni0

valid_lft forever preferred_lft forever

inet6 fe80::7075:5dff:fe48:8235/64 scope link

valid_lft forever preferred_lft forever

7: vethad150db2@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 16:2a:ae:0d:fd:88 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::142a:aeff:fe0d:fd88/64 scope link

valid_lft forever preferred_lft forever

8: vethc91bd68e@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 66:45:47:97:2f:b1 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::6445:47ff:fe97:2fb1/64 scope link

valid_lft forever preferred_lft forever

12: veth6ab63cd4@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 42:1f:91:2b:25:15 brd ff:ff:ff:ff:ff:ff link-netnsid 2

inet6 fe80::401f:91ff:fe2b:2515/64 scope link

valid_lft forever preferred_lft forever

flannel.1

vagrant@node2:~$ ip -d a show flannel.1

5: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether fe:4e:ee:24:6f:2e brd ff:ff:ff:ff:ff:ff promiscuity 0

vxlan id 1 local 10.0.2.15 dev enp0s3 srcport 0 0 dstport 8472 nolearning ageing 300

inet 10.244.2.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::fc4e:eeff:fe24:6f2e/64 scope link

valid_lft forever preferred_lft forever

cni0

vagrant@node2:~$ brctl show

bridge name bridge id STP enabled interfaces

cni0 8000.72755d488235 no veth6ab63cd4

vethad150db2

vethc91bd68e

docker0 8000.024283af29fa no

vethxxx

vagrant@node2:~$ ip -d a show veth6ab63cd4

12: veth6ab63cd4@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 42:1f:91:2b:25:15 brd ff:ff:ff:ff:ff:ff link-netnsid 2 promiscuity 1

veth

bridge_slave state forwarding priority 32 cost 2 hairpin on guard off root_block off fastleave off learning on flood on

inet6 fe80::401f:91ff:fe2b:2515/64 scope link

valid_lft forever preferred_lft forever

vagrant@node2:~$ ip -d a show vethad150db2

7: vethad150db2@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 16:2a:ae:0d:fd:88 brd ff:ff:ff:ff:ff:ff link-netnsid 0 promiscuity 1

veth

bridge_slave state forwarding priority 32 cost 2 hairpin on guard off root_block off fastleave off learning on flood on

inet6 fe80::142a:aeff:fe0d:fd88/64 scope link

valid_lft forever preferred_lft forever

vagrant@node2:~$ ip -d a show vethc91bd68e

8: vethc91bd68e@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 66:45:47:97:2f:b1 brd ff:ff:ff:ff:ff:ff link-netnsid 1 promiscuity 1

veth

bridge_slave state forwarding priority 32 cost 2 hairpin on guard off root_block off fastleave off learning on flood on

inet6 fe80::6445:47ff:fe97:2fb1/64 scope link

valid_lft forever preferred_lft forever

route

vagrant@node2:~$ route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default 10.0.2.2 0.0.0.0 UG 0 0 0 enp0s3

10.0.2.0 * 255.255.255.0 U 0 0 0 enp0s3

10.244.0.0 10.244.0.0 255.255.255.0 UG 0 0 0 flannel.1

10.244.1.0 10.244.1.0 255.255.255.0 UG 0 0 0 flannel.1

10.244.2.0 * 255.255.255.0 U 0 0 0 cni0

172.16.20.0 * 255.255.255.0 U 0 0 0 enp0s8

172.17.0.0 * 255.255.0.0 U 0 0 0 docker0

iptables

vagrant@node2:~$ sudo iptables-save

# Generated by iptables-save v1.6.0 on Thu Dec 12 08:06:51 2019

*nat

:PREROUTING ACCEPT [0:0]

:INPUT ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

:POSTROUTING ACCEPT [0:0]

:DOCKER - [0:0]

:KUBE-MARK-DROP - [0:0]

:KUBE-MARK-MASQ - [0:0]

:KUBE-NODEPORTS - [0:0]

:KUBE-POSTROUTING - [0:0]

:KUBE-SEP-7GFCTXWQKX25H6R4 - [0:0]

:KUBE-SEP-DHQ66TZSUU4UMWNL - [0:0]

:KUBE-SEP-E4UY4UBD4GOYNURB - [0:0]

:KUBE-SEP-L6GLNUXQY5PA3CTM - [0:0]

:KUBE-SEP-QUQ56RLGZWOVRUKT - [0:0]

:KUBE-SEP-TQ45FLVQ3Q7BSK2N - [0:0]

:KUBE-SEP-U27GJ7HRAVMAXEJO - [0:0]

:KUBE-SEP-UL6KPOJKIOHGI6N5 - [0:0]

:KUBE-SEP-X24BMK6YRKXG7IN3 - [0:0]

:KUBE-SEP-XMYN27PEYRASWXN5 - [0:0]

:KUBE-SERVICES - [0:0]

:KUBE-SVC-ERIFXISQEP7F7OF4 - [0:0]

:KUBE-SVC-F5FFDDFGTCKTJ7GQ - [0:0]

:KUBE-SVC-JD5MR3NA4I4DYORP - [0:0]

:KUBE-SVC-NPX46M4PTMTKRN6Y - [0:0]

:KUBE-SVC-TCOU7JCQXEZGVUNU - [0:0]

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A PREROUTING -m addrtype --dst-type LOCAL -j DOCKER

-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT ! -d 127.0.0.0/8 -m addrtype --dst-type LOCAL -j DOCKER

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -s 172.17.0.0/16 ! -o docker0 -j MASQUERADE

-A POSTROUTING -s 10.244.0.0/16 -d 10.244.0.0/16 -j RETURN

-A POSTROUTING -s 10.244.0.0/16 ! -d 224.0.0.0/4 -j MASQUERADE

-A POSTROUTING ! -s 10.244.0.0/16 -d 10.244.2.0/24 -j RETURN

-A POSTROUTING ! -s 10.244.0.0/16 -d 10.244.0.0/16 -j MASQUERADE

-A DOCKER -i docker0 -j RETURN

-A KUBE-MARK-DROP -j MARK --set-xmark 0x8000/0x8000

-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE

-A KUBE-SEP-7GFCTXWQKX25H6R4 -s 10.244.1.16/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-7GFCTXWQKX25H6R4 -p udp -m udp -j DNAT --to-destination 10.244.1.16:53

-A KUBE-SEP-DHQ66TZSUU4UMWNL -s 10.244.1.15/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-DHQ66TZSUU4UMWNL -p udp -m udp -j DNAT --to-destination 10.244.1.15:53

-A KUBE-SEP-E4UY4UBD4GOYNURB -s 10.244.2.9/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-E4UY4UBD4GOYNURB -p tcp -m tcp -j DNAT --to-destination 10.244.2.9:80

-A KUBE-SEP-L6GLNUXQY5PA3CTM -s 172.16.20.11/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-L6GLNUXQY5PA3CTM -p tcp -m tcp -j DNAT --to-destination 172.16.20.11:6443

-A KUBE-SEP-QUQ56RLGZWOVRUKT -s 10.244.1.15/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-QUQ56RLGZWOVRUKT -p tcp -m tcp -j DNAT --to-destination 10.244.1.15:9153

-A KUBE-SEP-TQ45FLVQ3Q7BSK2N -s 10.244.1.16/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-TQ45FLVQ3Q7BSK2N -p tcp -m tcp -j DNAT --to-destination 10.244.1.16:9153

-A KUBE-SEP-U27GJ7HRAVMAXEJO -s 10.244.1.15/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-U27GJ7HRAVMAXEJO -p tcp -m tcp -j DNAT --to-destination 10.244.1.15:53

-A KUBE-SEP-UL6KPOJKIOHGI6N5 -s 10.244.1.14/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-UL6KPOJKIOHGI6N5 -p tcp -m tcp -j DNAT --to-destination 10.244.1.14:80

-A KUBE-SEP-X24BMK6YRKXG7IN3 -s 10.244.2.8/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-X24BMK6YRKXG7IN3 -p tcp -m tcp -j DNAT --to-destination 10.244.2.8:80

-A KUBE-SEP-XMYN27PEYRASWXN5 -s 10.244.1.16/32 -j KUBE-MARK-MASQ

-A KUBE-SEP-XMYN27PEYRASWXN5 -p tcp -m tcp -j DNAT --to-destination 10.244.1.16:53

-A KUBE-SERVICES ! -s 10.244.0.0/16 -d 10.32.0.79/32 -p tcp -m comment --comment "default/web-service: cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ

-A KUBE-SERVICES -d 10.32.0.79/32 -p tcp -m comment --comment "default/web-service: cluster IP" -m tcp --dport 80 -j KUBE-SVC-F5FFDDFGTCKTJ7GQ

-A KUBE-SERVICES ! -s 10.244.0.0/16 -d 10.32.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SERVICES -d 10.32.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-TCOU7JCQXEZGVUNU

-A KUBE-SERVICES ! -s 10.244.0.0/16 -d 10.32.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SERVICES -d 10.32.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4

-A KUBE-SERVICES ! -s 10.244.0.0/16 -d 10.32.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ

-A KUBE-SERVICES -d 10.32.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP

-A KUBE-SERVICES ! -s 10.244.0.0/16 -d 10.32.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SERVICES -d 10.32.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y

-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-U27GJ7HRAVMAXEJO

-A KUBE-SVC-ERIFXISQEP7F7OF4 -j KUBE-SEP-XMYN27PEYRASWXN5

-A KUBE-SVC-F5FFDDFGTCKTJ7GQ -m statistic --mode random --probability 0.33332999982 -j KUBE-SEP-UL6KPOJKIOHGI6N5

-A KUBE-SVC-F5FFDDFGTCKTJ7GQ -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-X24BMK6YRKXG7IN3

-A KUBE-SVC-F5FFDDFGTCKTJ7GQ -j KUBE-SEP-E4UY4UBD4GOYNURB

-A KUBE-SVC-JD5MR3NA4I4DYORP -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-QUQ56RLGZWOVRUKT

-A KUBE-SVC-JD5MR3NA4I4DYORP -j KUBE-SEP-TQ45FLVQ3Q7BSK2N

-A KUBE-SVC-NPX46M4PTMTKRN6Y -j KUBE-SEP-L6GLNUXQY5PA3CTM

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-DHQ66TZSUU4UMWNL

-A KUBE-SVC-TCOU7JCQXEZGVUNU -j KUBE-SEP-7GFCTXWQKX25H6R4

COMMIT

# Completed on Thu Dec 12 08:06:51 2019

# Generated by iptables-save v1.6.0 on Thu Dec 12 08:06:51 2019

*filter

:INPUT ACCEPT [168:21194]

:FORWARD DROP [0:0]

:OUTPUT ACCEPT [121:20581]

:DOCKER - [0:0]

:DOCKER-ISOLATION-STAGE-1 - [0:0]

:DOCKER-ISOLATION-STAGE-2 - [0:0]

:DOCKER-USER - [0:0]

:KUBE-EXTERNAL-SERVICES - [0:0]

:KUBE-FIREWALL - [0:0]

:KUBE-FORWARD - [0:0]

:KUBE-SERVICES - [0:0]

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A INPUT -j KUBE-FIREWALL

-A FORWARD -m comment --comment "kubernetes forwarding rules" -j KUBE-FORWARD

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A FORWARD -j DOCKER-USER

-A FORWARD -j DOCKER-ISOLATION-STAGE-1

-A FORWARD -o docker0 -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A FORWARD -o docker0 -j DOCKER

-A FORWARD -i docker0 ! -o docker0 -j ACCEPT

-A FORWARD -i docker0 -o docker0 -j ACCEPT

-A FORWARD -s 10.244.0.0/16 -j ACCEPT

-A FORWARD -d 10.244.0.0/16 -j ACCEPT

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -j KUBE-FIREWALL

-A DOCKER-ISOLATION-STAGE-1 -i docker0 ! -o docker0 -j DOCKER-ISOLATION-STAGE-2

-A DOCKER-ISOLATION-STAGE-1 -j RETURN

-A DOCKER-ISOLATION-STAGE-2 -o docker0 -j DROP

-A DOCKER-ISOLATION-STAGE-2 -j RETURN

-A DOCKER-USER -j RETURN

-A KUBE-FIREWALL -m comment --comment "kubernetes firewall for dropping marked packets" -m mark --mark 0x8000/0x8000 -j DROP

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding rules" -m mark --mark 0x4000/0x4000 -j ACCEPT

-A KUBE-FORWARD -s 10.244.0.0/16 -m comment --comment "kubernetes forwarding conntrack pod source rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A KUBE-FORWARD -d 10.244.0.0/16 -m comment --comment "kubernetes forwarding conntrack pod destination rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

COMMIT

# Completed on Thu Dec 12 08:06:51 2019

Container information

ip address

vagrant@master:~$ kubectl exec testpod01 -it -c alpine01 ip address show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

3: eth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue state UP

link/ether fa:0b:ed:99:7e:e1 brd ff:ff:ff:ff:ff:ff

inet 10.244.1.19/24 scope global eth0

valid_lft forever preferred_lft forever

vagrant@master:~$ kubectl exec testpod01 -it -c alpine02 ip address show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

3: eth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue state UP

link/ether fa:0b:ed:99:7e:e1 brd ff:ff:ff:ff:ff:ff

inet 10.244.1.19/24 scope global eth0

valid_lft forever preferred_lft forever

vagrant@master:~$ kubectl exec testpod02 -it -c alpine01 ip address show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

3: eth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue state UP

link/ether 96:87:a1:ab:16:a8 brd ff:ff:ff:ff:ff:ff

inet 10.244.2.13/24 scope global eth0

valid_lft forever preferred_lft forever

vagrant@master:~$ kubectl exec testpod02 -it -c alpine02 ip address show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

3: eth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue state UP

link/ether 96:87:a1:ab:16:a8 brd ff:ff:ff:ff:ff:ff

inet 10.244.2.13/24 scope global eth0

valid_lft forever preferred_lft forever

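Root cause investigation

Organizing the network configuration shows that the problem appears to be that flannel.1 is not sending through the intended interface: on both nodes its VXLAN device is bound to enp0s3 (10.0.2.15, the same address on node1 and node2) rather than to enp0s8 (172.16.20.x).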
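To make that observation concrete, here is a minimal sketch that parses the `vxlan` line from each node's `ip -d a show flannel.1` output and reports any local endpoint address shared by more than one node. The two input strings are copied from the output captured earlier in this article; the names `parse_vxlan` and `duplicated_endpoints` are made up for illustration, and the regular expression only covers the field layout seen in this output.

```python
import re

# The vxlan detail line from `ip -d a show flannel.1` on each node.
VXLAN_LINES = {
    "node1": "vxlan id 1 local 10.0.2.15 dev enp0s3 srcport 0 0 dstport 8472 nolearning ageing 300",
    "node2": "vxlan id 1 local 10.0.2.15 dev enp0s3 srcport 0 0 dstport 8472 nolearning ageing 300",
}

VXLAN_RE = re.compile(r"vxlan id \d+ local (?P<local>\S+) dev (?P<dev>\S+)")


def parse_vxlan(line):
    """Return (local endpoint IP, underlying device) from a vxlan detail line."""
    match = VXLAN_RE.search(line)
    if match is None:
        raise ValueError(f"not a vxlan detail line: {line!r}")
    return match.group("local"), match.group("dev")


def duplicated_endpoints(lines_by_node):
    """Map each local endpoint IP used by 2+ nodes to its [(node, dev), ...]."""
    by_ip = {}
    for node, line in lines_by_node.items():
        local, dev = parse_vxlan(line)
        by_ip.setdefault(local, []).append((node, dev))
    return {ip: users for ip, users in by_ip.items() if len(users) > 1}


if __name__ == "__main__":
    for ip, users in duplicated_endpoints(VXLAN_LINES).items():
        print(f"VXLAN endpoint {ip} is shared by: {users}")
```

Both nodes advertise 10.0.2.15 on enp0s3 as their VXLAN endpoint, so encapsulated packets have no distinct address to reach the other node, which is consistent with cross-node Pod traffic failing.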

But then, why did it end up like this?

The environment is built with Vagrant + Ansible, so I looked through its configuration files...

The Ansible playbook for the master node downloads the flannel manifest, adds an interface option to it, and then deploys it.

https://github.com/takara9/vagrant-kubernetes/blob/1.14/ansible-playbook/k8s_master.yml

In that interface-adding step, the playbook explicitly adds --iface=enp0s8.

Looking at /home/vagrant/kube-flannel.yml, which is left on the master node...

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: s390x
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
# BEGIN ANSIBLE MANAGED BLOCK
        - --iface=enp0s8
# END ANSIBLE MANAGED BLOCK
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
...

Hmm???

This manifest file apparently contains flannel definitions for every platform. However, the option that explicitly specifies the interface was added only to the args of the s390x definition!
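One possible explanation, offered as a hypothesis that has not been verified against the playbook: `# BEGIN ANSIBLE MANAGED BLOCK` is the default marker written by Ansible's `blockinfile` module, and when that module is given an `insertafter` pattern it inserts its block once, after the last matching line. The s390x DaemonSet is listed last in the `kubectl apply` output, which suggests it is also last in the manifest, so it would be the only one to receive the flag. The sketch below imitates that behaviour in plain Python. The function `insert_after_last_match` and the shortened manifest lines are illustrative assumptions, not taken from the playbook.

```python
import re


def insert_after_last_match(lines, pattern, block):
    """Insert `block` once, after the LAST line that matches `pattern`.

    This mimics (as an assumption) how an "insert after regex" step
    behaves when the same line occurs several times in one file.
    """
    regex = re.compile(pattern)
    last = None
    for i, line in enumerate(lines):
        if regex.search(line):
            last = i
    if last is None:
        # No match: fall back to appending at the end (assumed behaviour).
        return list(lines) + list(block)
    return list(lines[: last + 1]) + list(block) + list(lines[last + 1:])


# Shortened stand-in for a multi-DaemonSet manifest (hypothetical content).
manifest = [
    "name: kube-flannel-ds-amd64",
    "- --kube-subnet-mgr",
    "name: kube-flannel-ds-s390x",
    "- --kube-subnet-mgr",
]

patched = insert_after_last_match(
    manifest, r"--kube-subnet-mgr", ["- --iface=enp0s8"]
)
for line in patched:
    print(line)
```

Only the final DaemonSet receives the extra argument, which would match what the manifest on the master node looks like.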

Looking at the DaemonSets in the current environment, resources are defined for every platform, but only the amd64 one is READY.

vagrant@master:~$ kubectl get daemonset -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-flannel-ds-amd64 3 3 3 3 3 beta.kubernetes.io/arch=amd64 22d

kube-flannel-ds-arm 0 0 0 0 0 beta.kubernetes.io/arch=arm 22d

kube-flannel-ds-arm64 0 0 0 0 0 beta.kubernetes.io/arch=arm64 22d

kube-flannel-ds-ppc64le 0 0 0 0 0 beta.kubernetes.io/arch=ppc64le 22d

kube-flannel-ds-s390x 0 0 0 0 0 beta.kubernetes.io/arch=s390x 22d

kube-proxy 3 3 3 3 3 <none> 22d

Looking at the details...

vagrant@master:~$ kubectl describe daemonset -n kube-system kube-flannel-ds-amd64

Name: kube-flannel-ds-amd64

Selector: app=flannel,tier=node

Node-Selector: beta.kubernetes.io/arch=amd64

Labels: app=flannel

tier=node

...

Containers:

kube-flannel:

Image: quay.io/coreos/flannel:v0.10.0-amd64

Port: <none>

Host Port: <none>

Command:

/opt/bin/flanneld

Args:

--ip-masq

--kube-subnet-mgr

Limits:

cpu: 100m

memory: 50Mi

...

vagrant@master:~$ kubectl describe daemonset -n kube-system kube-flannel-ds-s390x

Name: kube-flannel-ds-s390x

Selector: app=flannel,tier=node

Node-Selector: beta.kubernetes.io/arch=s390x

Labels: app=flannel

tier=node

...

Containers:

kube-flannel:

Image: quay.io/coreos/flannel:v0.10.0-s390x

Port: <none>

Host Port: <none>

Command:

/opt/bin/flanneld

Args:

--ip-masq

--kube-subnet-mgr

--iface=enp0s8

Limits:

cpu: 100m

memory: 50Mi

...

As expected, --iface=enp0s8 was added only to the s390x definition; the amd64 definition does not have it.
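The same check can be done against the manifest file itself before deploying. The following is a minimal sketch using only the standard library: it splits a multi-document manifest on `---` lines and reports every DaemonSet whose text does not contain the flag. The function name `daemonsets_missing_flag` and the shortened sample manifest are assumptions for illustration; a real check would more likely load the file with a YAML parser.

```python
import re


def daemonsets_missing_flag(manifest_text, flag="--iface="):
    """Return the names of DaemonSet documents that do not contain `flag`."""
    missing = []
    # Documents in one YAML file are separated by lines containing only '---'.
    for doc in re.split(r"(?m)^---\s*$", manifest_text):
        if not re.search(r"(?m)^kind:\s*DaemonSet\s*$", doc):
            continue
        # The first plain 'name:' key in the document is metadata.name;
        # container names are written as '- name:' and are not matched.
        name = re.search(r"(?m)^\s*name:\s*(\S+)", doc)
        if flag not in doc:
            missing.append(name.group(1) if name else "<unnamed>")
    return missing


# Shortened, hypothetical manifest with two DaemonSets.
sample = """\
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
spec:
  args:
  - --ip-masq
  - --kube-subnet-mgr
---
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
spec:
  args:
  - --ip-masq
  - --kube-subnet-mgr
  - --iface=enp0s8
"""

print(daemonsets_missing_flag(sample))
```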

Fix

Correcting the definition

In kube-flannel.yml, the file left on the master node, add the --iface=enp0s8 option to the amd64 definition as well.

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: amd64
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        - --iface=enp0s8
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
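The manual edit above could also be scripted so that every DaemonSet in the file gets the flag, not just one. This is a rough sketch under the assumption that each flanneld args list contains the line `- --kube-subnet-mgr`, as in the manifest shown here. The helper `add_iface_flag` is hypothetical. It copies the indentation of the anchor line and skips any args list that already has an `--iface=` entry.

```python
def add_iface_flag(manifest_text, iface="enp0s8", anchor="- --kube-subnet-mgr"):
    """Add '- --iface=<iface>' after each anchor line that lacks it."""
    lines = manifest_text.splitlines()
    out = []
    for i, line in enumerate(lines):
        out.append(line)
        if line.strip() != anchor:
            continue
        # Look ahead through the rest of this args list (items and comments)
        # to see whether an --iface entry is already there.
        already = False
        for following in lines[i + 1:]:
            s = following.strip()
            if s.startswith("- --iface="):
                already = True
                break
            if not (s.startswith("- --") or s.startswith("#")):
                break
        if not already:
            indent = line[: len(line) - len(line.lstrip())]
            out.append(f"{indent}- --iface={iface}")
    return "\n".join(out) + "\n"


# Two shortened args lists: one without the flag, one already patched.
before = (
    "        args:\n"
    "        - --ip-masq\n"
    "        - --kube-subnet-mgr\n"
    "        resources:\n"
    "---\n"
    "        args:\n"
    "        - --ip-masq\n"
    "        - --kube-subnet-mgr\n"
    "# BEGIN ANSIBLE MANAGED BLOCK\n"
    "        - --iface=enp0s8\n"
    "# END ANSIBLE MANAGED BLOCK\n"
    "        resources:\n"
)
after = add_iface_flag(before)
print(after)
```

Running the function a second time on its own output leaves the text unchanged, so it is safe to re-run.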

Apply the corrected manifest file!

vagrant@master:~$ kubectl apply -f kube-flannel.yml

clusterrole.rbac.authorization.k8s.io/flannel unchanged

clusterrolebinding.rbac.authorization.k8s.io/flannel unchanged

serviceaccount/flannel unchanged

configmap/kube-flannel-cfg unchanged

daemonset.extensions/kube-flannel-ds-amd64 configured

daemonset.extensions/kube-flannel-ds-arm64 unchanged

daemonset.extensions/kube-flannel-ds-arm unchanged

daemonset.extensions/kube-flannel-ds-ppc64le unchanged

daemonset.extensions/kube-flannel-ds-s390x unchanged

Hmm, the change does not seem to have taken effect dynamically, so I'll try restarting node1.

Restarting node1 => no good

c:\y\Vagrant\vagrant-kubernetes>vagrant halt node1

==> node1: Attempting graceful shutdown of VM...

c:\y\Vagrant\vagrant-kubernetes>vagrant up node1

Bringing machine 'node1' up with 'virtualbox' provider...

==> node1: Checking if box 'ubuntu/xenial64' version '20190613.1.0' is up to date...

==> node1: A newer version of the box 'ubuntu/xenial64' for provider 'virtualbox' is

==> node1: available! You currently have version '20190613.1.0'. The latest is version

==> node1: '20191211.0.0'. Run `vagrant box update` to update.

==> node1: Clearing any previously set forwarded ports...

==> node1: Clearing any previously set network interfaces...

==> node1: Preparing network interfaces based on configuration...

node1: Adapter 1: nat

node1: Adapter 2: hostonly

==> node1: Forwarding ports...

node1: 22 (guest) => 2222 (host) (adapter 1)

==> node1: Running 'pre-boot' VM customizations...

==> node1: Booting VM...

==> node1: Waiting for machine to boot. This may take a few minutes...

node1: SSH address: 127.0.0.1:2222

node1: SSH username: vagrant

node1: SSH auth method: private key

==> node1: Machine booted and ready!

==> node1: Checking for guest additions in VM...

node1: The guest additions on this VM do not match the installed version of

node1: VirtualBox! In most cases this is fine, but in rare cases it can

node1: prevent things such as shared folders from working properly. If you see

node1: shared folder errors, please make sure the guest additions within the

node1: virtual machine match the version of VirtualBox you have installed on

node1: your host and reload your VM.

node1:

node1: Guest Additions Version: 5.1.38

node1: VirtualBox Version: 6.0

==> node1: Setting hostname...

==> node1: Configuring and enabling network interfaces...

==> node1: Mounting shared folders...

node1: /vagrant => C:/y/Vagrant/vagrant-kubernetes

==> node1: Machine already provisioned. Run `vagrant provision` or use the `--provision`

==> node1: flag to force provisioning. Provisioners marked to run always will still run.

Checking flannel.1 on node1

vagrant@node1:~$ ip -d a show flannel.1

5: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 6e:2f:1a:43:03:bf brd ff:ff:ff:ff:ff:ff promiscuity 0

vxlan id 1 local 10.0.2.15 dev enp0s3 srcport 0 0 dstport 8472 nolearning ageing 300

inet 10.244.1.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::6c2f:1aff:fe43:3bf/64 scope link

valid_lft forever preferred_lft forever

Huh? It hasn't changed.

Restarting all nodes including the master => no good

c:\y\Vagrant\vagrant-kubernetes>vagrant halt

==> master: Attempting graceful shutdown of VM...

==> node2: Attempting graceful shutdown of VM...

==> node1: Attempting graceful shutdown of VM...

c:\y\Vagrant\vagrant-kubernetes>vagrant up

(omitted)

Checking flannel.1 on node1 => still unchanged!

Investigation

don't know directly running "kubectl apply -f kube-flannel.yml" does not work at my side, it still show using interface with name eth0.

after running kubectl delete -f kube-flannel.yml then kubectl apply -f kube-flannel.yml, it shows using the interface with eth1:

I found the description quoted above, so I'll try an explicit delete first and then apply again.

delete / apply

delete

vagrant@master:~$ kubectl delete -f kube-flannel.yml

clusterrole.rbac.authorization.k8s.io "flannel" deleted

clusterrolebinding.rbac.authorization.k8s.io "flannel" deleted

serviceaccount "flannel" deleted

configmap "kube-flannel-cfg" deleted

daemonset.extensions "kube-flannel-ds-amd64" deleted

daemonset.extensions "kube-flannel-ds-arm64" deleted

daemonset.extensions "kube-flannel-ds-arm" deleted

daemonset.extensions "kube-flannel-ds-ppc64le" deleted

daemonset.extensions "kube-flannel-ds-s390x" deleted

vagrant@master:~$ kubectl get daemonset -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-proxy 3 3 3 3 3 <none> 22d

At this point, the flannel.1 interface on each node has not been removed.

vagrant@node1:~$ ip -d a show flannel.1

5: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 7e:bc:93:d4:a2:db brd ff:ff:ff:ff:ff:ff promiscuity 0

vxlan id 1 local 10.0.2.15 dev enp0s3 srcport 0 0 dstport 8472 nolearning ageing 300

inet 10.244.1.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::7cbc:93ff:fed4:a2db/64 scope link

valid_lft forever preferred_lft forever

apply

vagrant@master:~$ kubectl apply -f kube-flannel.yml

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created

Checking flannel.1 on node1

vagrant@node1:~$ ip -d a show flannel.1

10: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 4a:c2:e2:cb:9f:89 brd ff:ff:ff:ff:ff:ff promiscuity 0

vxlan id 1 local 172.16.20.12 dev enp0s8 srcport 0 0 dstport 8472 nolearning ageing 300

inet 10.244.1.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::48c2:e2ff:fecb:9f89/64 scope link

valid_lft forever preferred_lft forever

It changed!!!! flannel.1 now references enp0s8!

node2 and the master node were changed in the same way!
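When checking several nodes, the relevant part of the `ip -d a show flannel.1` output is the `vxlan id ...` line, which names the local address and the underlying device. Below is a small sketch that pulls those two values out with a regular expression. The function name `vxlan_underlay` is made up for this example, and the two input strings are copied from the outputs captured before and after the delete/apply.

```python
import re


def vxlan_underlay(ip_output):
    """Return (local_ip, device) from the 'vxlan id ...' line, or None."""
    m = re.search(r"vxlan id \d+ local (\S+) dev (\S+)", ip_output)
    return (m.group(1), m.group(2)) if m else None


# 'vxlan' lines taken from the node1 output before and after the fix.
before = ("vxlan id 1 local 10.0.2.15 dev enp0s3 "
          "srcport 0 0 dstport 8472 nolearning ageing 300")
after = ("vxlan id 1 local 172.16.20.12 dev enp0s8 "
         "srcport 0 0 dstport 8472 nolearning ageing 300")

print(vxlan_underlay(before))  # ('10.0.2.15', 'enp0s3')
print(vxlan_underlay(after))   # ('172.16.20.12', 'enp0s8')
```

A node whose device is still `enp0s3` is sending VXLAN traffic over the NAT adapter (Adapter 1 in the Vagrant log), which is the state seen in this environment while cross-node Pod traffic was failing.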

Verification

Now, with testpod01 and testpod02 each running on a different node...

vagrant@master:~$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

testpod01 2/2 Running 0 10m 10.244.1.26 node1 <none> <none>

testpod02 2/2 Running 0 10m 10.244.2.17 node2 <none> <none>

web-deploy-6b5f67b747-5qr49 1/1 Running 4 22d 10.244.2.14 node2 <none> <none>

web-deploy-6b5f67b747-8cjw4 1/1 Running 5 22d 10.244.1.25 node1 <none> <none>

web-deploy-6b5f67b747-w8qbm 1/1 Running 4 22d 10.244.2.15 node2 <none> <none>

From a container in testpod01, I'll ping testpod02, which is running on a different node.

vagrant@master:~$ kubectl exec testpod01 -it -c alpine01 sh

/ # hostname

testpod01

/ # ping -c 3 10.244.2.17

PING 10.244.2.17 (10.244.2.17): 56 data bytes

64 bytes from 10.244.2.17: seq=0 ttl=62 time=0.428 ms

64 bytes from 10.244.2.17: seq=1 ttl=62 time=0.463 ms

64 bytes from 10.244.2.17: seq=2 ttl=62 time=0.518 ms

--- 10.244.2.17 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.428/0.469/0.518 ms

Pod-to-Pod communication across nodes now works!!!

memo

The brctl command was not available, so I installed the bridge-utils package on node1/node2.

vagrant@node1:~$ sudo apt install bridge-utils

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages were automatically installed and are no longer required:

linux-headers-4.4.0-150 linux-headers-4.4.0-150-generic linux-image-4.4.0-150-generic linux-modules-4.4.0-150-generic

Use 'sudo apt autoremove' to remove them.

The following NEW packages will be installed:

bridge-utils

0 upgraded, 1 newly installed, 0 to remove and 62 not upgraded.

Need to get 28.6 kB of archives.

After this operation, 102 kB of additional disk space will be used.

Get:1 http://archive.ubuntu.com/ubuntu xenial/main amd64 bridge-utils amd64 1.5-9ubuntu1 [28.6 kB]

Fetched 28.6 kB in 1s (28.2 kB/s)

Selecting previously unselected package bridge-utils.

(Reading database ... 119045 files and directories currently installed.)

Preparing to unpack .../bridge-utils_1.5-9ubuntu1_amd64.deb ...

Unpacking bridge-utils (1.5-9ubuntu1) ...

Processing triggers for man-db (2.7.5-1) ...

Setting up bridge-utils (1.5-9ubuntu1) ...