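This is another SinGAN post, following on from last time. The references below reimplement it in TensorFlow, and since that code is easy to understand, I played around with SRGAN (not that I have written about it...) and with the "giant Mayuyu challenge" that failed with SinGAN last time.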

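[References]

① "SinGANを一部実装してみた" (I tried implementing part of SinGAN)

② ppooiiuuyh/SinGAN-tensorflow2.0

This time I tried out the implementation in reference ① and experimented with it.

※ Reference ② is fairly complex, so I will read it at a slower pace.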

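The SR output examples were as follows. (I think they are probably the same as in the reference above.)

※ The enlarged, super-resolved images came out well enough.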

| 1 | 2 |
|---|---|
|  |  |

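This article starts from the figure below, which compares the output example above with the output of the original PyTorch implementation.

That is, although the two perform similar processing, the quality they achieve looks different.

My starting hypothesis is that this is probably caused by differences in the detailed hyperparameters.

In any case, I cannot read through the PyTorch code completely, whereas the reference code used here is easy to read and easy to modify, so I experimented with it, although the investigation is still far from complete.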

| PyTorch version | TensorFlow version |
|---|---|
|  |  |

What I did

・Environment

・Checking the hyperparameters

・Outputting intermediate-layer images

・Taking on the giant Mayuyu

・Environment

Download the Zip file from the GitHub repository of reference ① above and extract it.

In an ordinary conda environment, install the dependencies as follows.

python -m pip install -r requirements.txt

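It should then run with the command below.

Note, however, that the location of the input image and the results directory differ from the defaults, so change them to match your own environment.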

(base) C:\Users\user\SinGAN_tf_impl-master>python main.py "SR" "Input/images/33039_LR.png"

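・Checking the hyperparameters

Just working out which values the hyperparameters take in the two codebases, and how each one is used, is hard going for me (Uwan).

This time, however, both codebases gather them in one place, as shown below, which makes them relatively easy to read.

Admittedly, this is not the complete picture; in particular, both also redefine

    parser.add_argument('--sr_factor', type=int, default=4)

in SR.py and similar files.

With that said, the TensorFlow version defines its hyperparameters in main.py as follows.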

    import argparse
    from train import *
    # from SinGAN.train import *

    if __name__ == "__main__":
        parser = argparse.ArgumentParser()
        parser.add_argument('mode')
        parser.add_argument('input_image')
        parser.add_argument('--save_dir', default='models')

        #Network hyper parameter
        parser.add_argument('--hidden_channels', type=int, default=32)
        parser.add_argument('--k_size', type=int, default=3)
        parser.add_argument('--n_layers', type=int, default=5)

        #train settings
        parser.add_argument('--n_iter', type=int, default=2000)
        parser.add_argument('--lr_g', type=float, default=0.0005)
        parser.add_argument('--lr_d', type=float, default=0.0005)
        parser.add_argument('--beta1', type=float, default=0.5)
        parser.add_argument('--g_times', type=int, default=3)
        parser.add_argument('--d_times', type=int, default=3)
        parser.add_argument('--gp_weight', type=float, default=0.1)
        parser.add_argument('--alpha', type=float, default=10)

        #data manipulation
        parser.add_argument('--scale_factor', type=float, default=0.75)
        parser.add_argument('--noise_weight', type=float, default=0.1)
        parser.add_argument('--min_size', type=int, default=18)

        #SR params
        parser.add_argument('--sr_factor', type=int, default=4)

        args = parser.parse_args()

        if args.mode == 'SR':
            train_SR(args)

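The PyTorch version, on the other hand, defines its hyperparameters in config.py (listed after the notes below).

Comparing the two listings, the defaults of the parameters they share agree, with one visible exception: min_size is 18 in the TensorFlow version and 25 in the PyTorch version. The PyTorch version also has parameters with no counterpart in the TensorFlow main.py, such as max_size and gamma.

One caveat concerns

    parser.add_argument('--scale_factor', type=float, default=0.75)

I do not fully understand this one yet, but judging from the name of the results directory, the PyTorch version may actually be running with values like the following.

Example: scale_factor=0.793701, alpha=100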

    import argparse

    def get_arguments():
        parser = argparse.ArgumentParser()
        #parser.add_argument('--mode', help='task to be done', default='train')
        #workspace:
        parser.add_argument('--not_cuda', action='store_true', help='disables cuda', default=0)

        #load, input, save configurations:
        parser.add_argument('--netG', default='', help="path to netG (to continue training)")
        parser.add_argument('--netD', default='', help="path to netD (to continue training)")
        parser.add_argument('--manualSeed', type=int, help='manual seed')
        parser.add_argument('--nc_z',type=int,help='noise # channels',default=3)
        parser.add_argument('--nc_im',type=int,help='image # channels',default=3)
        parser.add_argument('--out',help='output folder',default='Output')

        #networks hyper parameters:
        parser.add_argument('--nfc', type=int, default=32)
        parser.add_argument('--min_nfc', type=int, default=32)
        parser.add_argument('--ker_size',type=int,help='kernel size',default=3)
        parser.add_argument('--num_layer',type=int,help='number of layers',default=5)
        parser.add_argument('--stride',help='stride',default=1)
        parser.add_argument('--padd_size',type=int,help='net pad size',default=0)#math.floor(opt.ker_size/2)

        #pyramid parameters:
        parser.add_argument('--scale_factor',type=float,help='pyramid scale factor',default=0.75)#pow(0.5,1/6))
        parser.add_argument('--noise_amp',type=float,help='addative noise cont weight',default=0.1)
        parser.add_argument('--min_size',type=int,help='image minimal size at the coarser scale',default=25)
        parser.add_argument('--max_size', type=int,help='image minimal size at the coarser scale', default=250)

        #optimization hyper parameters:
        parser.add_argument('--niter', type=int, default=2000, help='number of epochs to train per scale')
        parser.add_argument('--gamma',type=float,help='scheduler gamma',default=0.1)
        parser.add_argument('--lr_g', type=float, default=0.0005, help='learning rate, default=0.0005')
        parser.add_argument('--lr_d', type=float, default=0.0005, help='learning rate, default=0.0005')
        parser.add_argument('--beta1', type=float, default=0.5, help='beta1 for adam. default=0.5')
        parser.add_argument('--Gsteps',type=int, help='Generator inner steps',default=3)
        parser.add_argument('--Dsteps',type=int, help='Discriminator inner steps',default=3)
        parser.add_argument('--lambda_grad',type=float, help='gradient penelty weight',default=0.1)
        parser.add_argument('--alpha',type=float, help='reconstruction loss weight',default=10)

        return parser
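As a rough cross-check, the defaults in the two listings can be compared mechanically. The sketch below copies the default values by hand from main.py and config.py as quoted above; the pairing of names (for example `k_size` with `ker_size`, or `gp_weight` with `lambda_grad`) is my own reading of the two files, not something either repository states, and the helper name `differing_defaults` is made up for this illustration.

```python
# Defaults copied by hand from the TensorFlow main.py listing above.
tf_defaults = {
    'hidden_channels': 32, 'k_size': 3, 'n_layers': 5,
    'n_iter': 2000, 'lr_g': 0.0005, 'lr_d': 0.0005, 'beta1': 0.5,
    'g_times': 3, 'd_times': 3, 'gp_weight': 0.1, 'alpha': 10,
    'scale_factor': 0.75, 'noise_weight': 0.1, 'min_size': 18,
}

# Defaults copied by hand from the PyTorch config.py listing above.
pt_defaults = {
    'nfc': 32, 'ker_size': 3, 'num_layer': 5,
    'niter': 2000, 'lr_g': 0.0005, 'lr_d': 0.0005, 'beta1': 0.5,
    'Gsteps': 3, 'Dsteps': 3, 'lambda_grad': 0.1, 'alpha': 10,
    'scale_factor': 0.75, 'noise_amp': 0.1, 'min_size': 25,
}

# ASSUMPTION: which TensorFlow name corresponds to which PyTorch name.
name_map = {
    'hidden_channels': 'nfc', 'k_size': 'ker_size', 'n_layers': 'num_layer',
    'n_iter': 'niter', 'lr_g': 'lr_g', 'lr_d': 'lr_d', 'beta1': 'beta1',
    'g_times': 'Gsteps', 'd_times': 'Dsteps', 'gp_weight': 'lambda_grad',
    'alpha': 'alpha', 'scale_factor': 'scale_factor',
    'noise_weight': 'noise_amp', 'min_size': 'min_size',
}


def differing_defaults(tf_cfg, pt_cfg, mapping):
    """Return {tf_name: (tf_value, pt_value)} for every mapped pair that differs."""
    return {
        tf_name: (tf_cfg[tf_name], pt_cfg[pt_name])
        for tf_name, pt_name in mapping.items()
        if tf_cfg[tf_name] != pt_cfg[pt_name]
    }


diffs = differing_defaults(tf_defaults, pt_defaults, name_map)
print(diffs)  # {'min_size': (18, 25)}
```

This only compares argparse defaults. Values that either codebase overrides at run time (as the results directory name suggests for scale_factor and alpha) would not show up here.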

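・Outputting intermediate-layer images

Next, I turned my suspicion to the model structure.

This is not resolved either, but since the TensorFlow version is easy to follow, I made the change below and printed the model summaries to standard output. The output before and after the change is included in the Bonus section.

※ The dimensions that had been specified as None are now concrete numbers.

In the code, I changed the relevant part as follows.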

    for i in range(n_blocks):
        scale = math.pow(scale_factor, n_blocks-i-1)
        cur_h, cur_w = int(h*scale), int(w*scale)
        img = tf.image.resize(real_image, (cur_h, cur_w))
        resolutions.append((cur_h, cur_w))

        # Original: spatial size left as None
        #inp = tf.keras.Input(shape=(None, None, 3))
        #noise = tf.keras.Input(shape=(None, None, 3))
        # Changed: fix the spatial size so that summary() reports concrete shapes
        inp = tf.keras.Input(shape=(cur_h, cur_w, 3))
        noise = tf.keras.Input(shape=(cur_h, cur_w, 3))
        G = tf.keras.Model(inputs=[inp, noise], outputs=model.G_block(inp, noise, name='G_block_%d'%i, hidden_maps=args.hidden_channels, num_layers=args.n_layers))
        D = tf.keras.Model(inputs=inp, outputs=model.D_block(inp, name='D_block_%d'%i, hidden_maps=args.hidden_channels, num_layers=args.n_layers))
        lr_g = tf.Variable(args.lr_g, trainable=False)
        lr_d = tf.Variable(args.lr_d, trainable=False)
        opt_G = tf.keras.optimizers.Adam(lr_g, args.beta1)
        opt_D = tf.keras.optimizers.Adam(lr_d, args.beta1)
        G.summary()
        D.summary()

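This should make it possible to tell whether the networks are the same as in the PyTorch version.

In the PyTorch version the structure keeps one fixed form for a while and then changes after some scales, so the logic seems to differ slightly.

※ This too is based only on comparing the outputs; I have not confirmed it in the source.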
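To make the per-block resolutions in the loop above concrete, here is a small stand-alone sketch of the same arithmetic. The function name `pyramid_resolutions` is made up for this illustration. The inputs are assumptions read off the log in the second bonus section: a 128x128 input, 9 blocks, and a scale factor of (1/2)^(1/3), which is the value that reproduces the logged sizes 20, 25, 32, 40 (the argparse default of 0.75 would not).

```python
import math


def pyramid_resolutions(h, w, n_blocks, scale_factor):
    """Resolution of each block, coarsest first, using the same formula as the loop."""
    resolutions = []
    for i in range(n_blocks):
        # Block i is shrunk by scale_factor ** (n_blocks - i - 1); the last block is full size.
        scale = math.pow(scale_factor, n_blocks - i - 1)
        resolutions.append((int(h * scale), int(w * scale)))
    return resolutions


# ASSUMPTION: values inferred from the log, not read from the repository.
res = pyramid_resolutions(128, 128, n_blocks=9, scale_factor=math.pow(0.5, 1 / 3))
for block, (rh, rw) in enumerate(res, start=1):
    print('Block %d: %dx%d' % (block, rh, rw))
```

The first two blocks come out as 20x20 and 25x25 and the last as 128x128. Because `int()` truncates, blocks whose exact size is an integer (such as 32) can land one pixel lower depending on floating-point rounding.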

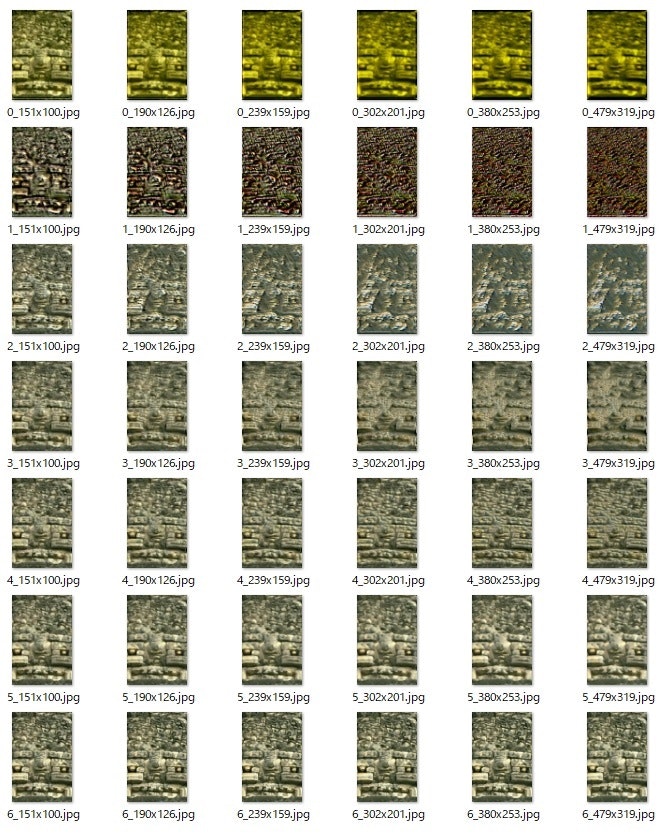

So this time I output images showing the intermediate state of training.

I changed the continuation of the code above as follows.

        if i > 0:
            # Warm-start this block from the previous (coarser) block's weights
            for (prev, cur) in zip(Gs[-1].layers, G.layers):
                cur.set_weights(prev.get_weights())
            for (prev, cur) in zip(Ds[-1].layers, D.layers):
                cur.set_weights(prev.get_weights())
        init_opt(opt_G, G)
        init_opt(opt_D, D)

        with tqdm(range(args.n_iter)) as bar:
            bar.set_description('Block %d / %d'%(i+1, n_blocks))
            for iteration in bar:
                if i == 0:
                    prev_img = tf.zeros_like(img)
                else:
                    prev_img = proc_image(tf.zeros([1, resolutions[0][0], resolutions[0][1], 3]), Gs, args.noise_weight, resolutions)
                g_loss, d_loss = train_step(img, prev_img, args.noise_weight, G, D, opt_G, opt_D, args.g_times, args.d_times, args.alpha)
                bar.set_postfix(ordered_dict=OrderedDict(
                    g_loss=g_loss.numpy(), d_loss=d_loss.numpy()
                ))
                # Drop both learning rates to 1/10 after 80% of the iterations
                if iteration == int(args.n_iter*0.8):
                    lr_d.assign(args.lr_d*0.1)
                    lr_g.assign(args.lr_g*0.1)

        Gs.append(G)
        Ds.append(D)
        G.save(os.path.join(save_dir, 'SR_G_%d_res_%dx%d.h5'%(i+1, cur_h, cur_w)))
        D.save(os.path.join(save_dir, 'SR_D_%d_res_%dx%d.h5'%(i+1, cur_h, cur_w)))

        # Moved inside the block loop: upscale with the current G and save one image per block
        scale_factor = math.pow(1/2, 1/3)
        target_res = 4
        scale = 1.0 / scale_factor
        n, h, w, c = real_image.shape
        t_h, t_w = h*target_res, w*target_res
        iter_times = int(math.log(target_res, scale))
        img = real_image
        os.makedirs(os.path.join(save_dir, 'result'), exist_ok=True)
        for j in range(1, iter_times+1, 1):
            res = (int(h*math.pow(scale, j)), int(w*math.pow(scale, j)))
            img = tf.image.resize(img, size=res)
            img = G([img, tf.zeros_like(img)])
        # Map the output from [-1, 1] to [0, 255] and save it
        image = np.squeeze(img)
        image = (np.clip(image, -1.0, 1.0) + 1.0) * 127.5
        image = Image.fromarray(image.astype(np.uint8))
        image.save(save_dir+'/result/'+str(i)+'_%dx%d.jpg'%res)

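In other words, I moved the final image-output part one level inward, so that an image of the intermediate state is written out after each block.

This yields figures like the following.

Looking at these, the progressive training behaves as described in reference ③ below:

"→ Hierarchical Patch-GANs

Captures features at various scales while moving step by step from coarse images to higher resolution

Uses a small receptive field so as not to memorize the whole image"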
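As a small worked example of what "small receptive field" means here, the sketch below computes the receptive field of a stack of identical convolutions. It assumes stride 1 throughout and uses the defaults from main.py above (`n_layers=5`, `k_size=3`); the function name `receptive_field` is made up for this illustration.

```python
def receptive_field(num_layers, kernel_size, stride=1):
    """Receptive field along one axis of `num_layers` stacked identical conv layers."""
    rf = 1    # a single output pixel sees one input pixel before any conv
    jump = 1  # spacing, in input pixels, between neighbouring outputs
    for _ in range(num_layers):
        rf += (kernel_size - 1) * jump
        jump *= stride
    return rf


rf = receptive_field(num_layers=5, kernel_size=3)
print(rf)  # 11

# Fraction of the image side covered by one patch at the coarsest (20 px)
# and finest (128 px) scales seen in the Mayuyu log.
for side in (20, 128):
    print('%d px image: patch covers %.0f%% of the side' % (side, 100.0 * rf / side))
```

With these settings each block sees an 11x11 patch. At the 20-pixel scale that is about half the image side, while at 128 pixels it is under a tenth, which is consistent with coarse blocks capturing global layout and fine blocks capturing texture.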

[References]

③ "SinGANの論文解説" (Explanation of the SinGAN paper)

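・Taking on the giant Mayuyu

So, I took on the giant Mayuyu.

The results are below.

⇒ Last time, lines appeared when the image was enlarged too far. This time that artifact looks mild, so I think it can be counted as a success.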

| Size (size × size, px) | Mayuyu |
|---|---|
| 128 (original) |  |
| 161 |  |
| 203 |  |
| 255 |  |
| 322 |  |
| 406 |  |
| 511 |  |
| 645 |  |
| 812 |  |
| 1023 |  |
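The sizes in the table follow from the upscaling arithmetic in the SR code: each step multiplies the side by 1 / (1/2)^(1/3), roughly 1.26, and truncates with `int()`. The sketch below reproduces that arithmetic on its own. The function name `sr_sizes` is made up, and the number of steps (9) is an assumption read off the table, since the quoted code uses `target_res = 4`.

```python
import math

scale_factor = math.pow(1 / 2, 1 / 3)  # same expression as in the SR code
scale = 1.0 / scale_factor             # roughly 1.26 per step


def sr_sizes(side, steps):
    """Side length after each upscaling step, truncated with int() as in the SR loop."""
    return [int(side * math.pow(scale, j)) for j in range(1, steps + 1)]


# ASSUMPTION: 9 steps, matching the number of enlarged rows in the table.
sizes = sr_sizes(128, steps=9)
print(sizes)
```

Every third step is exactly a doubling, so the exact sizes there are 256, 512 and 1024. One plausible explanation for the 255, 511 and 1023 in the table is that the floating-point product lands just below the integer and `int()` truncates it; using `round()` instead of `int()` would avoid that.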

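Summary

・Tried the TensorFlow version of SinGAN

・Output images at intermediate stages of training

・Took on the "giant Mayuyu challenge" and got fairly good results

・I now want to dig deeper into SinGAN

Bonus

The output of the modified TensorFlow version is as follows.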

Model: "model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 30, 20, 3)] 0

__________________________________________________________________________________________________

input_2 (InputLayer) [(None, 30, 20, 3)] 0

__________________________________________________________________________________________________

tf_op_layer_add (TensorFlowOpLa [(None, 30, 20, 3)] 0 input_1[0][0]

input_2[0][0]

__________________________________________________________________________________________________

conv_block_0_conv_0 (Conv2D) (None, 30, 20, 32) 896 tf_op_layer_add[0][0]

__________________________________________________________________________________________________

conv_block_0_BN_0 (BatchNormali (None, 30, 20, 32) 128 conv_block_0_conv_0[0][0]

__________________________________________________________________________________________________

leaky_re_lu (LeakyReLU) (None, 30, 20, 32) 0 conv_block_0_BN_0[0][0]

conv_block_1_BN_1[0][0]

conv_block_2_BN_2[0][0]

conv_block_3_BN_3[0][0]

__________________________________________________________________________________________________

conv_block_1_conv_1 (Conv2D) (None, 30, 20, 32) 9248 leaky_re_lu[0][0]

__________________________________________________________________________________________________

conv_block_1_BN_1 (BatchNormali (None, 30, 20, 32) 128 conv_block_1_conv_1[0][0]

__________________________________________________________________________________________________

conv_block_2_conv_2 (Conv2D) (None, 30, 20, 32) 9248 leaky_re_lu[1][0]

__________________________________________________________________________________________________

conv_block_2_BN_2 (BatchNormali (None, 30, 20, 32) 128 conv_block_2_conv_2[0][0]

__________________________________________________________________________________________________

conv_block_3_conv_3 (Conv2D) (None, 30, 20, 32) 9248 leaky_re_lu[2][0]

__________________________________________________________________________________________________

conv_block_3_BN_3 (BatchNormali (None, 30, 20, 32) 128 conv_block_3_conv_3[0][0]

__________________________________________________________________________________________________

conv_block_4_conv_4 (Conv2D) (None, 30, 20, 3) 867 leaky_re_lu[3][0]

__________________________________________________________________________________________________

tf_op_layer_Tanh (TensorFlowOpL [(None, 30, 20, 3)] 0 conv_block_4_conv_4[0][0]

__________________________________________________________________________________________________

tf_op_layer_add_1 (TensorFlowOp [(None, 30, 20, 3)] 0 tf_op_layer_Tanh[0][0]

input_1[0][0]

==================================================================================================

Total params: 30,019

Trainable params: 29,763

Non-trainable params: 256

__________________________________________________________________________________________________

Model: "model_1"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 30, 20, 3)] 0

__________________________________________________________________________________________________

conv_block_0_conv_0 (Conv2D) (None, 30, 20, 32) 896 input_1[0][0]

__________________________________________________________________________________________________

conv_block_0_BN_0 (BatchNormali (None, 30, 20, 32) 128 conv_block_0_conv_0[0][0]

__________________________________________________________________________________________________

leaky_re_lu (LeakyReLU) (None, 30, 20, 32) 0 conv_block_0_BN_0[0][0]

conv_block_1_BN_1[0][0]

conv_block_2_BN_2[0][0]

conv_block_3_BN_3[0][0]

__________________________________________________________________________________________________

conv_block_1_conv_1 (Conv2D) (None, 30, 20, 32) 9248 leaky_re_lu[4][0]

__________________________________________________________________________________________________

conv_block_1_BN_1 (BatchNormali (None, 30, 20, 32) 128 conv_block_1_conv_1[0][0]

__________________________________________________________________________________________________

conv_block_2_conv_2 (Conv2D) (None, 30, 20, 32) 9248 leaky_re_lu[5][0]

__________________________________________________________________________________________________

conv_block_2_BN_2 (BatchNormali (None, 30, 20, 32) 128 conv_block_2_conv_2[0][0]

__________________________________________________________________________________________________

conv_block_3_conv_3 (Conv2D) (None, 30, 20, 32) 9248 leaky_re_lu[6][0]

__________________________________________________________________________________________________

conv_block_3_BN_3 (BatchNormali (None, 30, 20, 32) 128 conv_block_3_conv_3[0][0]

__________________________________________________________________________________________________

conv_block_4_conv_4 (Conv2D) (None, 30, 20, 1) 289 leaky_re_lu[7][0]

==================================================================================================

Total params: 29,441

Trainable params: 29,185

Non-trainable params: 256

__________________________________________________________________________________________________

The output of the original TensorFlow version is as follows.

Model: "model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, None, None, 0

__________________________________________________________________________________________________

input_2 (InputLayer) [(None, None, None, 0

__________________________________________________________________________________________________

tf_op_layer_add (TensorFlowOpLa [(None, None, None, 0 input_1[0][0]

input_2[0][0]

__________________________________________________________________________________________________

conv_block_0_conv_0 (Conv2D) (None, None, None, 3 896 tf_op_layer_add[0][0]

__________________________________________________________________________________________________

conv_block_0_BN_0 (BatchNormali (None, None, None, 3 128 conv_block_0_conv_0[0][0]

__________________________________________________________________________________________________

leaky_re_lu (LeakyReLU) (None, None, None, 3 0 conv_block_0_BN_0[0][0]

conv_block_1_BN_1[0][0]

conv_block_2_BN_2[0][0]

conv_block_3_BN_3[0][0]

__________________________________________________________________________________________________

conv_block_1_conv_1 (Conv2D) (None, None, None, 3 9248 leaky_re_lu[0][0]

__________________________________________________________________________________________________

conv_block_1_BN_1 (BatchNormali (None, None, None, 3 128 conv_block_1_conv_1[0][0]

__________________________________________________________________________________________________

conv_block_2_conv_2 (Conv2D) (None, None, None, 3 9248 leaky_re_lu[1][0]

__________________________________________________________________________________________________

conv_block_2_BN_2 (BatchNormali (None, None, None, 3 128 conv_block_2_conv_2[0][0]

__________________________________________________________________________________________________

conv_block_3_conv_3 (Conv2D) (None, None, None, 3 9248 leaky_re_lu[2][0]

__________________________________________________________________________________________________

conv_block_3_BN_3 (BatchNormali (None, None, None, 3 128 conv_block_3_conv_3[0][0]

__________________________________________________________________________________________________

conv_block_4_conv_4 (Conv2D) (None, None, None, 3 867 leaky_re_lu[3][0]

__________________________________________________________________________________________________

tf_op_layer_Tanh (TensorFlowOpL [(None, None, None, 0 conv_block_4_conv_4[0][0]

__________________________________________________________________________________________________

tf_op_layer_add_1 (TensorFlowOp [(None, None, None, 0 tf_op_layer_Tanh[0][0]

input_1[0][0]

==================================================================================================

Total params: 30,019

Trainable params: 29,763

Non-trainable params: 256

__________________________________________________________________________________________________

Model: "model_1"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, None, None, 0

__________________________________________________________________________________________________

conv_block_0_conv_0 (Conv2D) (None, None, None, 3 896 input_1[0][0]

__________________________________________________________________________________________________

conv_block_0_BN_0 (BatchNormali (None, None, None, 3 128 conv_block_0_conv_0[0][0]

__________________________________________________________________________________________________

leaky_re_lu (LeakyReLU) (None, None, None, 3 0 conv_block_0_BN_0[0][0]

conv_block_1_BN_1[0][0]

conv_block_2_BN_2[0][0]

conv_block_3_BN_3[0][0]

__________________________________________________________________________________________________

conv_block_1_conv_1 (Conv2D) (None, None, None, 3 9248 leaky_re_lu[4][0]

__________________________________________________________________________________________________

conv_block_1_BN_1 (BatchNormali (None, None, None, 3 128 conv_block_1_conv_1[0][0]

__________________________________________________________________________________________________

conv_block_2_conv_2 (Conv2D) (None, None, None, 3 9248 leaky_re_lu[5][0]

__________________________________________________________________________________________________

conv_block_2_BN_2 (BatchNormali (None, None, None, 3 128 conv_block_2_conv_2[0][0]

__________________________________________________________________________________________________

conv_block_3_conv_3 (Conv2D) (None, None, None, 3 9248 leaky_re_lu[6][0]

__________________________________________________________________________________________________

conv_block_3_BN_3 (BatchNormali (None, None, None, 3 128 conv_block_3_conv_3[0][0]

__________________________________________________________________________________________________

conv_block_4_conv_4 (Conv2D) (None, None, None, 1 289 leaky_re_lu[7][0]

==================================================================================================

Total params: 29,441

Trainable params: 29,185

Non-trainable params: 256

__________________________________________________________________________________________________

Block 1 / 7: 100%|████████████████████████████████████████████████████████| 2000/2000 [01:32<00:00, 21.58it/s, g_loss=[0.9006956], d_loss=[-0.02634283]]

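Bonus 2

In fact, in the TensorFlow version the tensor sizes of the models grow as the input image gets larger, as shown below.

The number of parameters of each model, however, does not change as the size grows.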
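The reason the counts stay fixed is that a convolution's parameter count depends only on the kernel size and the channel counts, not on the spatial size of its input. The sketch below recomputes the totals reported by `summary()` from the layer structure visible in the logs (four Conv2D + BatchNormalization pairs followed by a final Conv2D). The function names are made up for this illustration.

```python
def conv2d_params(k, c_in, c_out):
    """k x k kernel weights for every (input, output) channel pair, plus one bias per output."""
    return k * k * c_in * c_out + c_out


def batchnorm_params(c):
    """gamma and beta (trainable) plus moving mean and variance (non-trainable)."""
    return 4 * c


def block_params(out_channels, hidden=32, k=3, n_layers=5, in_channels=3):
    """Return (total, trainable, non_trainable) for one G or D block."""
    total = 0
    non_trainable = 0
    c_in = in_channels
    for _ in range(n_layers - 1):
        total += conv2d_params(k, c_in, hidden) + batchnorm_params(hidden)
        non_trainable += 2 * hidden  # moving mean and moving variance
        c_in = hidden
    total += conv2d_params(k, c_in, out_channels)  # final conv has no BatchNormalization
    return total, total - non_trainable, non_trainable


g_counts = block_params(out_channels=3)  # generator outputs an RGB image
d_counts = block_params(out_channels=1)  # discriminator outputs a 1-channel map
print(g_counts)  # (30019, 29763, 256)
print(d_counts)  # (29441, 29185, 256)
```

These match the "Total params", "Trainable params" and "Non-trainable params" lines in the logs for every block, whether the input is 20x20 or 40x40.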

(base) C:\Users\user\SinGAN_tf_impl-master>python main.py "SR" "Input/images/mayuyu128.jpg"

2019-12-30 23:23:33.694956: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2

Model: "model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 20, 20, 3)] 0

__________________________________________________________________________________________________

input_2 (InputLayer) [(None, 20, 20, 3)] 0

__________________________________________________________________________________________________

tf_op_layer_add (TensorFlowOpLa [(None, 20, 20, 3)] 0 input_1[0][0]

input_2[0][0]

__________________________________________________________________________________________________

conv_block_0_conv_0 (Conv2D) (None, 20, 20, 32) 896 tf_op_layer_add[0][0]

__________________________________________________________________________________________________

conv_block_0_BN_0 (BatchNormali (None, 20, 20, 32) 128 conv_block_0_conv_0[0][0]

__________________________________________________________________________________________________

leaky_re_lu (LeakyReLU) (None, 20, 20, 32) 0 conv_block_0_BN_0[0][0]

conv_block_1_BN_1[0][0]

conv_block_2_BN_2[0][0]

conv_block_3_BN_3[0][0]

__________________________________________________________________________________________________

conv_block_1_conv_1 (Conv2D) (None, 20, 20, 32) 9248 leaky_re_lu[0][0]

__________________________________________________________________________________________________

conv_block_1_BN_1 (BatchNormali (None, 20, 20, 32) 128 conv_block_1_conv_1[0][0]

__________________________________________________________________________________________________

conv_block_2_conv_2 (Conv2D) (None, 20, 20, 32) 9248 leaky_re_lu[1][0]

__________________________________________________________________________________________________

conv_block_2_BN_2 (BatchNormali (None, 20, 20, 32) 128 conv_block_2_conv_2[0][0]

__________________________________________________________________________________________________

conv_block_3_conv_3 (Conv2D) (None, 20, 20, 32) 9248 leaky_re_lu[2][0]

__________________________________________________________________________________________________

conv_block_3_BN_3 (BatchNormali (None, 20, 20, 32) 128 conv_block_3_conv_3[0][0]

__________________________________________________________________________________________________

conv_block_4_conv_4 (Conv2D) (None, 20, 20, 3) 867 leaky_re_lu[3][0]

__________________________________________________________________________________________________

tf_op_layer_Tanh (TensorFlowOpL [(None, 20, 20, 3)] 0 conv_block_4_conv_4[0][0]

__________________________________________________________________________________________________

tf_op_layer_add_1 (TensorFlowOp [(None, 20, 20, 3)] 0 tf_op_layer_Tanh[0][0]

input_1[0][0]

==================================================================================================

Total params: 30,019

Trainable params: 29,763

Non-trainable params: 256

__________________________________________________________________________________________________

Model: "model_1"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 20, 20, 3)] 0

__________________________________________________________________________________________________

conv_block_0_conv_0 (Conv2D) (None, 20, 20, 32) 896 input_1[0][0]

__________________________________________________________________________________________________

conv_block_0_BN_0 (BatchNormali (None, 20, 20, 32) 128 conv_block_0_conv_0[0][0]

__________________________________________________________________________________________________

leaky_re_lu (LeakyReLU) (None, 20, 20, 32) 0 conv_block_0_BN_0[0][0]

conv_block_1_BN_1[0][0]

conv_block_2_BN_2[0][0]

conv_block_3_BN_3[0][0]

__________________________________________________________________________________________________

conv_block_1_conv_1 (Conv2D) (None, 20, 20, 32) 9248 leaky_re_lu[4][0]

__________________________________________________________________________________________________

conv_block_1_BN_1 (BatchNormali (None, 20, 20, 32) 128 conv_block_1_conv_1[0][0]

__________________________________________________________________________________________________

conv_block_2_conv_2 (Conv2D) (None, 20, 20, 32) 9248 leaky_re_lu[5][0]

__________________________________________________________________________________________________

conv_block_2_BN_2 (BatchNormali (None, 20, 20, 32) 128 conv_block_2_conv_2[0][0]

__________________________________________________________________________________________________

conv_block_3_conv_3 (Conv2D) (None, 20, 20, 32) 9248 leaky_re_lu[6][0]

__________________________________________________________________________________________________

conv_block_3_BN_3 (BatchNormali (None, 20, 20, 32) 128 conv_block_3_conv_3[0][0]

__________________________________________________________________________________________________

conv_block_4_conv_4 (Conv2D) (None, 20, 20, 1) 289 leaky_re_lu[7][0]

==================================================================================================

Total params: 29,441

Trainable params: 29,185

Non-trainable params: 256

__________________________________________________________________________________________________

Block 1 / 9: 100%|███████████████████████████████████████████████████████████████████████████████████████████████| 2000/2000 [01:13<00:00, 27.10it/s, g_loss=[7.9145103], d_loss=[-0.0302126]]

Model: "model_2"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_3 (InputLayer) [(None, 25, 25, 3)] 0

__________________________________________________________________________________________________

input_4 (InputLayer) [(None, 25, 25, 3)] 0

__________________________________________________________________________________________________

tf_op_layer_add_2 (TensorFlowOp [(None, 25, 25, 3)] 0 input_3[0][0]

input_4[0][0]

__________________________________________________________________________________________________

conv_block_0_conv_0 (Conv2D) (None, 25, 25, 32) 896 tf_op_layer_add_2[0][0]

__________________________________________________________________________________________________

conv_block_0_BN_0 (BatchNormali (None, 25, 25, 32) 128 conv_block_0_conv_0[0][0]

__________________________________________________________________________________________________

leaky_re_lu (LeakyReLU) multiple 0 conv_block_0_BN_0[0][0]

conv_block_1_BN_1[0][0]

conv_block_2_BN_2[0][0]

conv_block_3_BN_3[0][0]

__________________________________________________________________________________________________

conv_block_1_conv_1 (Conv2D) (None, 25, 25, 32) 9248 leaky_re_lu[8][0]

__________________________________________________________________________________________________

conv_block_1_BN_1 (BatchNormali (None, 25, 25, 32) 128 conv_block_1_conv_1[0][0]

__________________________________________________________________________________________________

conv_block_2_conv_2 (Conv2D) (None, 25, 25, 32) 9248 leaky_re_lu[9][0]

__________________________________________________________________________________________________

conv_block_2_BN_2 (BatchNormali (None, 25, 25, 32) 128 conv_block_2_conv_2[0][0]

__________________________________________________________________________________________________

conv_block_3_conv_3 (Conv2D) (None, 25, 25, 32) 9248 leaky_re_lu[10][0]

__________________________________________________________________________________________________

conv_block_3_BN_3 (BatchNormali (None, 25, 25, 32) 128 conv_block_3_conv_3[0][0]

__________________________________________________________________________________________________

conv_block_4_conv_4 (Conv2D) (None, 25, 25, 3) 867 leaky_re_lu[11][0]

__________________________________________________________________________________________________

tf_op_layer_Tanh_1 (TensorFlowO [(None, 25, 25, 3)] 0 conv_block_4_conv_4[0][0]

__________________________________________________________________________________________________

tf_op_layer_add_3 (TensorFlowOp [(None, 25, 25, 3)] 0 tf_op_layer_Tanh_1[0][0]

input_3[0][0]

==================================================================================================

Total params: 30,019

Trainable params: 29,763

Non-trainable params: 256

__________________________________________________________________________________________________

Model: "model_3"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_3 (InputLayer) [(None, 25, 25, 3)] 0

__________________________________________________________________________________________________

conv_block_0_conv_0 (Conv2D) (None, 25, 25, 32) 896 input_3[0][0]

__________________________________________________________________________________________________

conv_block_0_BN_0 (BatchNormali (None, 25, 25, 32) 128 conv_block_0_conv_0[0][0]

__________________________________________________________________________________________________

leaky_re_lu (LeakyReLU) multiple 0 conv_block_0_BN_0[0][0]

conv_block_1_BN_1[0][0]

conv_block_2_BN_2[0][0]

conv_block_3_BN_3[0][0]

__________________________________________________________________________________________________

conv_block_1_conv_1 (Conv2D) (None, 25, 25, 32) 9248 leaky_re_lu[12][0]

__________________________________________________________________________________________________

conv_block_1_BN_1 (BatchNormali (None, 25, 25, 32) 128 conv_block_1_conv_1[0][0]

__________________________________________________________________________________________________

conv_block_2_conv_2 (Conv2D) (None, 25, 25, 32) 9248 leaky_re_lu[13][0]

__________________________________________________________________________________________________

conv_block_2_BN_2 (BatchNormali (None, 25, 25, 32) 128 conv_block_2_conv_2[0][0]

__________________________________________________________________________________________________

conv_block_3_conv_3 (Conv2D) (None, 25, 25, 32) 9248 leaky_re_lu[14][0]

__________________________________________________________________________________________________

conv_block_3_BN_3 (BatchNormali (None, 25, 25, 32) 128 conv_block_3_conv_3[0][0]

__________________________________________________________________________________________________

conv_block_4_conv_4 (Conv2D) (None, 25, 25, 1) 289 leaky_re_lu[15][0]

==================================================================================================

Total params: 29,441

Trainable params: 29,185

Non-trainable params: 256

__________________________________________________________________________________________________

Block 2 / 9: 100%|██████████████████████████████████████████████████████████████████████████████████████████████| 2000/2000 [01:42<00:00, 19.48it/s, g_loss=[0.7259917], d_loss=[-0.00471149]]

Model: "model_4"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_5 (InputLayer) [(None, 32, 32, 3)] 0

__________________________________________________________________________________________________

...

Model: "model_5"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_5 (InputLayer) [(None, 32, 32, 3)] 0

__________________________________________________________________________________________________

...

Block 3 / 9: 100%|█████████████████████████████████████████████████████████████████████████████████████████████| 2000/2000 [03:49<00:00, 8.71it/s, g_loss=[0.05491346], d_loss=[-0.00068739]]

Model: "model_6"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_7 (InputLayer) [(None, 40, 40, 3)] 0

__________________________________________________________________________________________________

...

Model: "model_7"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_7 (InputLayer) [(None, 40, 40, 3)] 0

__________________________________________________________________________________________________

...

Block 4 / 9: 100%|█████████████████████████████████████████████████████████████████████████████████████████████| 2000/2000 [05:04<00:00, 6.56it/s, g_loss=[0.13994163], d_loss=[-0.00033907]]

Model: "model_8"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_9 (InputLayer) [(None, 50, 50, 3)] 0

__________________________________________________________________________________________________

...

Model: "model_9"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_9 (InputLayer) [(None, 50, 50, 3)] 0

__________________________________________________________________________________________________

...

Block 5 / 9: 100%|██████████████████████████████████████████████████████████████████████████████████████████████| 2000/2000 [07:46<00:00, 4.29it/s, g_loss=[0.1438144], d_loss=[-0.00011725]]

Model: "model_10"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_11 (InputLayer) [(None, 64, 64, 3)] 0

__________________________________________________________________________________________________

...

Model: "model_11"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_11 (InputLayer) [(None, 64, 64, 3)] 0

__________________________________________________________________________________________________

...

Block 6 / 9: 100%|███████████████████████████████████████████████████████████████████████████████████████████| 2000/2000 [12:43<00:00, 2.62it/s, g_loss=[0.09378527], d_loss=[-7.251864e-05]]

Model: "model_12"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_13 (InputLayer) [(None, 80, 80, 3)] 0

__________________________________________________________________________________________________

...

Model: "model_13"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_13 (InputLayer) [(None, 80, 80, 3)] 0

__________________________________________________________________________________________________

...

Block 7 / 9: 100%|██████████████████████████████████████████████████████████████████████████████████████████████| 2000/2000 [19:51<00:00, 1.68it/s, g_loss=[0.1352475], d_loss=[-0.00010792]]

Model: "model_14"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_15 (InputLayer) [(None, 101, 101, 3) 0

__________________________________________________________________________________________________

...

Block 8 / 9: 100%|██████████████████████████████████████████████████████████████████████████████████████████| 2000/2000 [32:49<00:00, 1.02it/s, g_loss=[0.12389164], d_loss=[-2.1162363e-05]]

Model: "model_16"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_17 (InputLayer) [(None, 128, 128, 3) 0

__________________________________________________________________________________________________

...

Model: "model_17"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_17 (InputLayer) [(None, 128, 128, 3) 0

__________________________________________________________________________________________________

...

Block 9 / 9: 100%|███████████████████████████████████████████████████████████████████████████████████████████| 2000/2000 [50:12<00:00, 1.51s/it, g_loss=[0.13538168], d_loss=[2.7423768e-05]]
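The progress bars above show each block taking longer as the input resolution grows from 25x25 to 128x128. As a rough check of where the time goes, the following minimal sketch sums the elapsed times shown for Blocks 2 to 9. The values are copied by hand from the log. Block 1 is left out because its progress bar is not in this excerpt. The variable names are only for illustration and are not part of the referenced implementation.

```python
# Elapsed times (mm:ss) copied by hand from the tqdm progress bars above.
# Block 1 is omitted because its progress bar is not part of this excerpt.
elapsed = {
    2: "01:42", 3: "03:49", 4: "05:04", 5: "07:46",
    6: "12:43", 7: "19:51", 8: "32:49", 9: "50:12",
}
n_iter = 2000  # iterations per block, as shown by "2000/2000" in the log


def to_seconds(mmss):
    """Convert a tqdm-style 'mm:ss' string to seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


seconds_per_block = {block: to_seconds(t) for block, t in elapsed.items()}
total_seconds = sum(seconds_per_block.values())

for block, secs in seconds_per_block.items():
    share = 100 * secs / total_seconds
    print(f"Block {block}: {secs:5d} s, {n_iter / secs:5.2f} it/s, {share:4.1f}% of total")

hours, rem = divmod(total_seconds, 3600)
minutes, seconds = divmod(rem, 60)
print(f"Total for blocks 2-9: {hours}h {minutes:02d}m {seconds:02d}s")
```

For these eight blocks the total comes to 2 h 13 min 56 s, and the last two blocks (101x101 and 128x128) account for a bit over 60% of it. The it/s values computed here differ slightly from the ones tqdm printed, since tqdm rounds the elapsed time to whole seconds.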