I tried running SRGAN-Keras, a super-resolution algorithm.

Super-resolution here means a deep learning technique that converts a low-resolution image into a high-resolution one. In this post, (64,64,3) images are converted to (256,256,3).

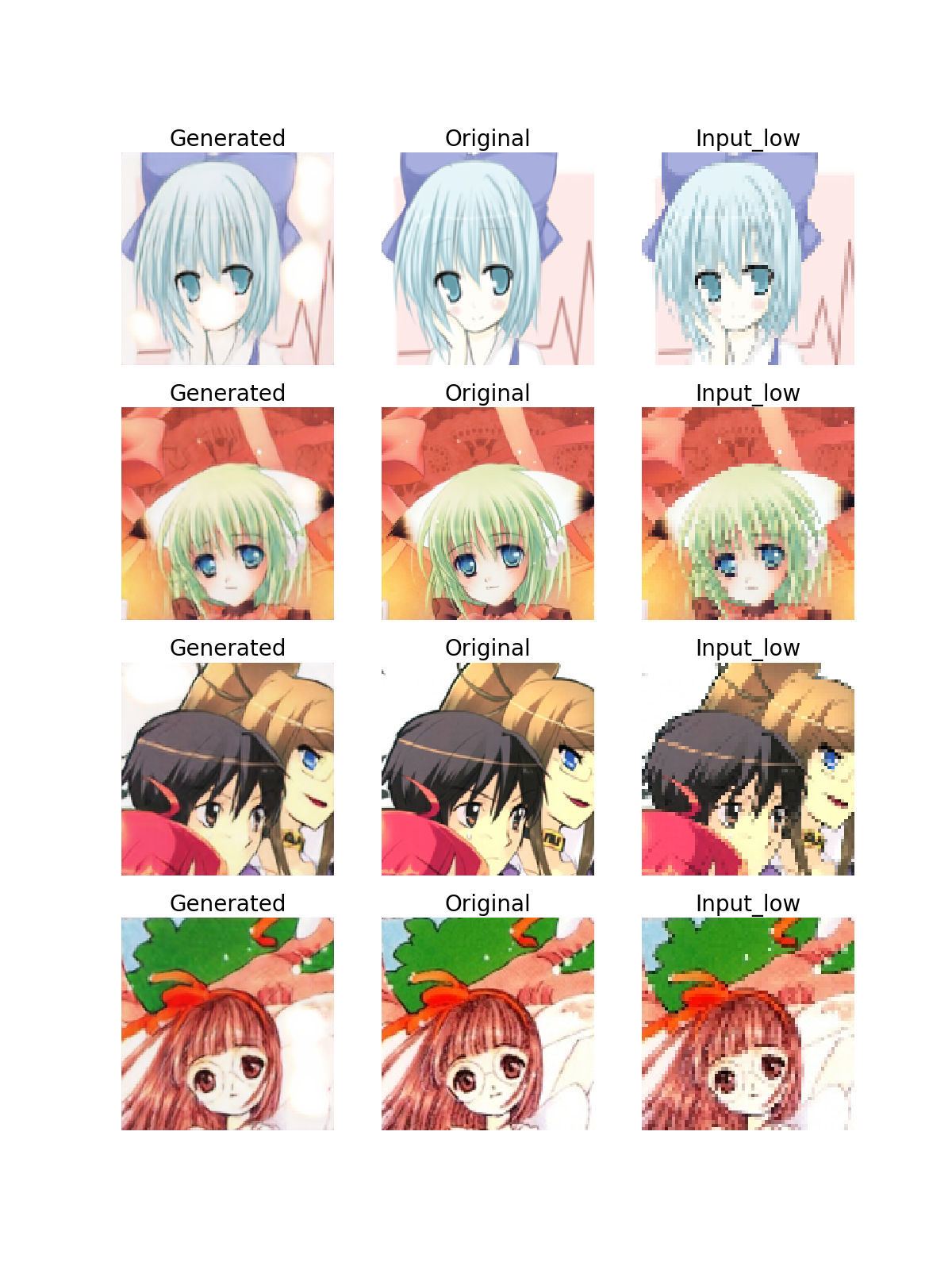

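Here are the results. The network was trained to bring the low-resolution Input_low image as close as possible to the high-resolution Original image, which produced the Generated image.

I ran code based on reference ①, but the architecture is easier to follow in reference ②.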

[References]

①eriklindernoren/Keras-GAN/srgan/

②deepak112/Keras-SRGAN

What I did

・Run the Keras version

・Try upscaling unseen images

・Run the Keras version

Data loading

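The code is split into a data loader and the main script, and at first I struggled because the data loader would not run.

The code that finally worked is here:

・DCGAN-Keras/SRGAN-Keras/data_loader.py

Below I explain the parts I modified.

The imports are as follows. glob would have worked unchanged, but I matched it to my previous DCGAN code.

The reason is that scipy.misc does not work in my environment, so images are loaded with PIL instead, and the file-listing code was changed to follow the previous code.

For the same reason, np.random.choice was replaced with random.sample.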

# import scipy

# from glob import glob

import glob

import numpy as np

import matplotlib.pyplot as plt

import random

import cv2

from PIL import Image

The second line of the constructor, #self.dataset_name = dataset_name, is commented out because it is not used.

class DataLoader():

    def __init__(self, dataset_name, img_res=(128, 128)):

        #self.dataset_name = dataset_name

        self.img_res = img_res

I replaced

#path = glob('./data/%s/*' % (self.dataset_name))

#batch_images = np.random.choice(path, size=batch_size)

with

files = glob.glob("./in_images1/**/*.png", recursive=True)

batch_images = random.sample(files, batch_size)
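One behavioural difference is worth noting: np.random.choice draws with replacement by default, while random.sample draws without replacement and raises ValueError when batch_size exceeds the number of files. The following is a minimal sketch of a guarded version, not the code used in this post; `pick_batch` and `seed` are hypothetical names introduced only for illustration.

```python
import glob
import random


def pick_batch(pattern, batch_size, seed=None):
    """Return up to `batch_size` distinct file paths matching `pattern`.

    `pick_batch` and `seed` are hypothetical names used only in this sketch.
    """
    # Sort so that a fixed seed gives a reproducible selection.
    files = sorted(glob.glob(pattern, recursive=True))
    if not files:
        raise FileNotFoundError("no files match %r" % pattern)
    rng = random.Random(seed)
    # random.sample draws without replacement and needs k <= len(files),
    # so clamp the request instead of letting it raise ValueError.
    k = min(batch_size, len(files))
    return rng.sample(files, k)
```

For example, `pick_batch("./in_images1/**/*.png", 25)` would return at most 25 distinct paths, or fewer when the directory holds fewer images.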

    def load_data(self, batch_size=1, is_testing=False):

        data_type = "train" if not is_testing else "test"

        #path = glob('./data/%s/*' % (self.dataset_name))

        #batch_images = np.random.choice(path, size=batch_size)

        files = glob.glob("./in_images1/**/*.png", recursive=True)

        batch_images = random.sample(files, batch_size)

#img = self.imread(img_path) did not work, so I changed it to img = Image.open(img_path).

As a result, def imread() is no longer needed.

        imgs_hr = []

        imgs_lr = []

        for img_path in batch_images:

            img = Image.open(img_path)

            #img = self.imread(img_path)

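img_hr = scipy.misc.imresize(img, self.img_res)

did not work either, so I changed it to img_hr = img.resize((h, w)).

Also, the normalization

#img_hr = (img_hr - 127.5) / 127.5

is more efficient when applied once to the whole batch, as in this code, rather than to each image.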

            h, w = self.img_res

            low_h, low_w = int(h / 4), int(w / 4)

            img_hr = img.resize((h, w)) # high resolution, e.g. (256, 256)

            img_lr = img.resize((low_h, low_w)) # low resolution, 1/4 of each side

            img_hr = np.array(img_hr)

            #img_hr = (img_hr - 127.5) / 127.5

            img_lr = np.array(img_lr)

            #img_lr = (img_lr - 127.5) / 127.5

            #img_hr = scipy.misc.imresize(img, self.img_res)

            #img_lr = scipy.misc.imresize(img, (low_h, low_w))

            # If training => do random flip

            if not is_testing and np.random.random() < 0.5:

                img_hr = np.fliplr(img_hr)

                img_lr = np.fliplr(img_lr)

            imgs_hr.append(img_hr)

            imgs_lr.append(img_lr)

        # Normalize the whole batch to [-1, 1] in one step

        imgs_hr = np.array(imgs_hr) / 127.5 - 1.

        imgs_lr = np.array(imgs_lr) / 127.5 - 1.

        return imgs_hr, imgs_lr

    """

    def imread(self, path):

        return scipy.misc.imread(path, mode='RGB').astype(np.float)

    """
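Two details of the PIL replacement are easy to miss. PIL's resize takes (width, height), the reverse of NumPy's (height, width), which makes no difference for the square sizes used here but would for rectangular ones. Also, Image.open does not force three channels the way mode='RGB' did. The following is a minimal sketch under those observations, not the loader used in this post; `load_hr_lr`, `hr_size` and `scale` are hypothetical names.

```python
import numpy as np
from PIL import Image


def load_hr_lr(img, hr_size=(256, 256), scale=4):
    """Resize a PIL image into a high-resolution / low-resolution array pair.

    `load_hr_lr`, `hr_size` and `scale` are hypothetical names for this sketch.
    `hr_size` is (height, width), like img_res in the loader.
    """
    h, w = hr_size
    # Force 3 channels: drops an alpha channel and expands grayscale.
    img = img.convert("RGB")
    # PIL's resize expects (width, height); the resulting array is (height, width, 3).
    img_hr = np.asarray(img.resize((w, h)), dtype=np.float32)
    img_lr = np.asarray(img.resize((w // scale, h // scale)), dtype=np.float32)
    return img_hr, img_lr
```

Calling it on a 300x200 RGBA image with `hr_size=(256, 128)` gives arrays of shape (256, 128, 3) and (64, 32, 3).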

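Running SRGAN

・DCGAN-Keras/SRGAN-Keras/srgan.py

The original code basically ran as-is, so I only added the following features that I needed.

1. Added saving of the weights (omitted here)

2. Changed the training-result figure to show the low-resolution input, the high-resolution training image, and the generated image

This is done with the following code.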

    def sample_images(self, epoch):

        os.makedirs('images/%s' % self.dataset_name, exist_ok=True)

        r, c = 4, 3 # expanded from 2, 2

        imgs_hr, imgs_lr = self.data_loader.load_data(batch_size=4, is_testing=True)

        fake_hr = self.generator.predict(imgs_lr)

        # Rescale images 0 - 1

        imgs_lr = 0.5 * imgs_lr + 0.5

        fake_hr = 0.5 * fake_hr + 0.5

        imgs_hr = 0.5 * imgs_hr + 0.5

        # Save generated images and the high resolution originals

        titles = ['Generated', 'Original', 'Input_low'] # added 'Input_low'

        fig, axs = plt.subplots(r, c,figsize=(12, 16)) # enlarged with figsize

        cnt = 0

        for row in range(r):

            for col, image in enumerate([fake_hr, imgs_hr, imgs_lr]):

                axs[row, col].imshow(image[row])

                axs[row, col].set_title(titles[col],size=20) # larger title with size

                axs[row, col].axis('off')

            cnt += 1

        fig.savefig("images/%s/%d.png" % (self.dataset_name, epoch))

        plt.close()

This code produces training images like the one shown earlier.

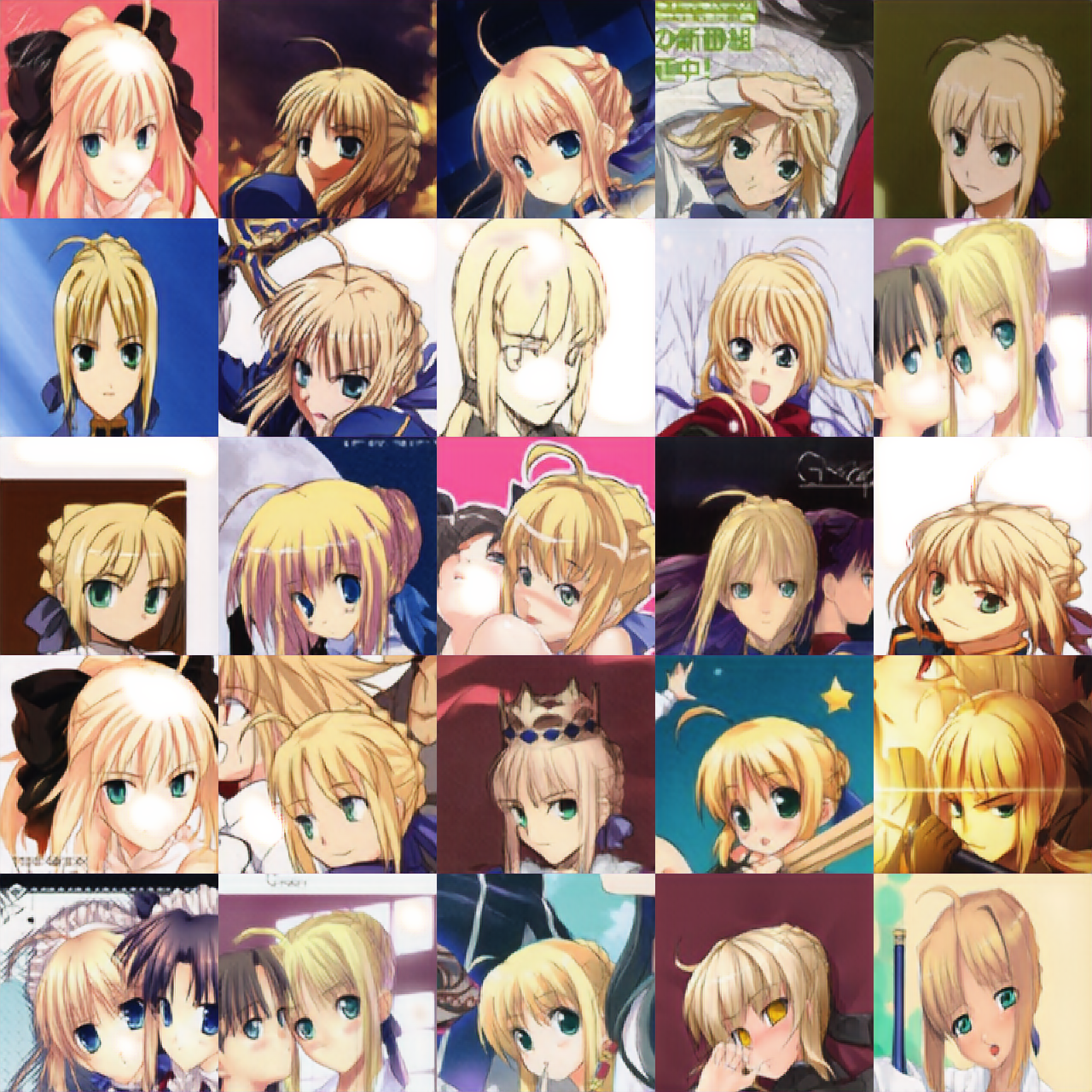
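The loader scales pixel values to [-1, 1], and sample_images maps them back to [0, 1] before calling imshow. The two mappings can be sketched as a pair of helpers; the function names are hypothetical and this is an illustration of the arithmetic, not code from the repository.

```python
import numpy as np


def to_model_range(batch_uint8):
    """Map pixel values from [0, 255] to [-1, 1] for the whole batch at once."""
    return np.asarray(batch_uint8, dtype=np.float32) / 127.5 - 1.0


def to_display_range(batch):
    """Map values from [-1, 1] to [0, 1], the range imshow expects for floats."""
    return 0.5 * batch + 0.5
```

Because `x / 127.5 - 1` equals `(x - 127.5) / 127.5`, normalizing the stacked batch gives the same values as the commented-out per-image version.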

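3. Verifying on completely unseen data with generate

I felt I really had to check whether unseen data could also be converted to high resolution, so I did it with the following code.

The code is almost identical to the DCGAN version.

The changed parts are explained below.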

    def generate(self, batch_size=25, sample_interval=50):

        BATCH_SIZE=batch_size

        ite=10000

        os.makedirs(os.path.join(".", "images"), exist_ok=True) # create the output directory before any save

        self.generator = self.build_generator() # build the generator model

        g = self.generator

        g.compile(loss='binary_crossentropy', optimizer=Adam(lr=0.0002, beta_1=0.5))

        g.load_weights('./weights/generator_10000.h5') # load the trained weights

        for i in range(10):

            noise = np.random.uniform(size=[BATCH_SIZE, 64*64*3], low=-1.0, high=1.0) # sized to match the loaded data (overwritten below)

            imgs_hr, imgs_lr = self.data_loader.load_data(batch_size=BATCH_SIZE, is_testing=True) # load the data

            print('noise[0]',imgs_lr[0])

            plt.imshow(imgs_lr[0].reshape(64,64,3))

            plt.pause(1)

            noise=imgs_lr.reshape(BATCH_SIZE,64,64,3) # match the generator's input shape

            generated_images = g.predict(noise)

            plt.imshow(generated_images[0])

            plt.pause(1)

            image_noise = combine_images2(noise) # enlarge the low-resolution output to the high-resolution size

            image_noise.save("./images/noise_%s%d.png" % (ite,i))

            image = combine_images(generated_images)

            image.save("./images/%s%d.png" % (ite,i))

            print(i)

The function that tiles the 25 images into a single image is shown below.

Note that BATCH_SIZE is hard-coded; please excuse that.

def combine_images2(generated_images, cols=5, rows=5):

    BATCH_SIZE=25

    imgs=[]

    for i in range(BATCH_SIZE):

        img=cv2.resize(generated_images[i],(256,256))

        imgs.append(img)

    imgs=np.array(imgs)

    shape = imgs.shape

    h = shape[1]

    w = shape[2]

    image = np.zeros((rows * h, cols * w, 3))

    for index, img in enumerate(imgs):

        if index >= cols * rows:

            break

        i = index // cols

        j = index % cols

        image[i*h:(i+1)*h, j*w:(j+1)*w, :] = img[:, :, :]

    image = image * 127.5 + 127.5

    image = Image.fromarray(image.astype(np.uint8))

    return image

This function produces the following.

"Low-resolution images"

"Generated images": the output came out clean, as hoped.
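The hard-coded batch size in the tiling function could be avoided by reading it from the array itself. The following is a minimal sketch of that idea using only NumPy; it swaps cv2.resize for nearest-neighbour pixel repetition, so the enlarged tiles will look blockier than the original's. `tile_images` and `upscale` are hypothetical names.

```python
import numpy as np


def tile_images(images, cols=5, upscale=1):
    """Tile a batch of [-1, 1] images into one uint8 grid array.

    `tile_images` and `upscale` are hypothetical names for this sketch.
    """
    images = np.asarray(images, dtype=np.float32)
    if upscale > 1:
        # Nearest-neighbour enlargement: repeat each pixel along height and width.
        images = images.repeat(upscale, axis=1).repeat(upscale, axis=2)
    n, h, w, ch = images.shape
    rows = -(-n // cols)  # ceiling division, so a partial last row still fits
    grid = np.zeros((rows * h, cols * w, ch), dtype=np.float32)
    for index, img in enumerate(images):
        i, j = divmod(index, cols)
        grid[i * h:(i + 1) * h, j * w:(j + 1) * w, :] = img
    # Cells that were never filled stay at 0.0, which maps to mid-grey.
    return np.clip(grid * 127.5 + 127.5, 0, 255).astype(np.uint8)
```

The result can be wrapped with `Image.fromarray(...)` and saved the same way as in generate; with `upscale=4` a 64x64 input batch is tiled at 256x256 per cell.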

Finally, here is the main function.

def get_args():

    parser = argparse.ArgumentParser()

    parser.add_argument("--mode", type=str)

    args = parser.parse_args()

    return args

if __name__ == '__main__':

    gan = SRGAN()

    args = get_args()

    if args.mode == "train":

        gan.train(epochs=30000, batch_size=1, sample_interval=1000)

    elif args.mode == "generate":

        gan.generate(batch_size=25, sample_interval=1000)

Run it as follows.

"Training"

>python srgan.py --mode train

"Validation on unseen data"

>python srgan.py --mode generate
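With the parser as written, a missing or misspelled --mode makes the script exit without doing anything, because neither branch matches. One possible tightening, shown as a sketch rather than as the script's actual code, is to let argparse validate the value; the `argv` parameter is an addition for testability.

```python
import argparse


def get_args(argv=None):
    """Parse --mode, rejecting anything other than the two supported values."""
    parser = argparse.ArgumentParser()
    # choices makes argparse print an error and exit on an unknown mode;
    # required=True does the same when --mode is omitted.
    parser.add_argument("--mode", type=str,
                        choices=["train", "generate"], required=True)
    return parser.parse_args(argv)
```

Passing `argv=None` keeps the original behaviour of reading the command line, so `python srgan.py --mode train` works unchanged.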

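Summary

・Ran and played with SRGAN-Keras, a deep learning super-resolution algorithm

・Low-resolution images were converted to high-resolution images

・Unseen data was also converted to high-resolution images

・Next, I want to clarify the relationship with DCGAN and work toward generating high-resolution images from random noise

Appendix

It is a bit long, but here are the Keras model summaries (discriminator, generator, and combined model, in that order).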

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_3 (InputLayer) (None, 256, 256, 3) 0

_________________________________________________________________

conv2d_1 (Conv2D) (None, 256, 256, 64) 1792

_________________________________________________________________

leaky_re_lu_1 (LeakyReLU) (None, 256, 256, 64) 0

_________________________________________________________________

conv2d_2 (Conv2D) (None, 128, 128, 64) 36928

_________________________________________________________________

leaky_re_lu_2 (LeakyReLU) (None, 128, 128, 64) 0

_________________________________________________________________

batch_normalization_1 (Batch (None, 128, 128, 64) 256

_________________________________________________________________

conv2d_3 (Conv2D) (None, 128, 128, 128) 73856

_________________________________________________________________

leaky_re_lu_3 (LeakyReLU) (None, 128, 128, 128) 0

_________________________________________________________________

batch_normalization_2 (Batch (None, 128, 128, 128) 512

_________________________________________________________________

conv2d_4 (Conv2D) (None, 64, 64, 128) 147584

_________________________________________________________________

leaky_re_lu_4 (LeakyReLU) (None, 64, 64, 128) 0

_________________________________________________________________

batch_normalization_3 (Batch (None, 64, 64, 128) 512

_________________________________________________________________

conv2d_5 (Conv2D) (None, 64, 64, 256) 295168

_________________________________________________________________

leaky_re_lu_5 (LeakyReLU) (None, 64, 64, 256) 0

_________________________________________________________________

batch_normalization_4 (Batch (None, 64, 64, 256) 1024

_________________________________________________________________

conv2d_6 (Conv2D) (None, 32, 32, 256) 590080

_________________________________________________________________

leaky_re_lu_6 (LeakyReLU) (None, 32, 32, 256) 0

_________________________________________________________________

batch_normalization_5 (Batch (None, 32, 32, 256) 1024

_________________________________________________________________

conv2d_7 (Conv2D) (None, 32, 32, 512) 1180160

_________________________________________________________________

leaky_re_lu_7 (LeakyReLU) (None, 32, 32, 512) 0

_________________________________________________________________

batch_normalization_6 (Batch (None, 32, 32, 512) 2048

_________________________________________________________________

conv2d_8 (Conv2D) (None, 16, 16, 512) 2359808

_________________________________________________________________

leaky_re_lu_8 (LeakyReLU) (None, 16, 16, 512) 0

_________________________________________________________________

batch_normalization_7 (Batch (None, 16, 16, 512) 2048

_________________________________________________________________

dense_1 (Dense) (None, 16, 16, 1024) 525312

_________________________________________________________________

leaky_re_lu_9 (LeakyReLU) (None, 16, 16, 1024) 0

_________________________________________________________________

dense_2 (Dense) (None, 16, 16, 1) 1025

=================================================================

Total params: 5,219,137

Trainable params: 5,215,425

Non-trainable params: 3,712

_________________________________________________________________

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_4 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

conv2d_9 (Conv2D) (None, 64, 64, 64) 15616 input_4[0][0]

__________________________________________________________________________________________________

activation_1 (Activation) (None, 64, 64, 64) 0 conv2d_9[0][0]

__________________________________________________________________________________________________

conv2d_10 (Conv2D) (None, 64, 64, 64) 36928 activation_1[0][0]

__________________________________________________________________________________________________

activation_2 (Activation) (None, 64, 64, 64) 0 conv2d_10[0][0]

__________________________________________________________________________________________________

batch_normalization_8 (BatchNor (None, 64, 64, 64) 256 activation_2[0][0]

__________________________________________________________________________________________________

conv2d_11 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_8[0][0]

__________________________________________________________________________________________________

batch_normalization_9 (BatchNor (None, 64, 64, 64) 256 conv2d_11[0][0]

__________________________________________________________________________________________________

add_1 (Add) (None, 64, 64, 64) 0 batch_normalization_9[0][0]

activation_1[0][0]

__________________________________________________________________________________________________

conv2d_12 (Conv2D) (None, 64, 64, 64) 36928 add_1[0][0]

__________________________________________________________________________________________________

activation_3 (Activation) (None, 64, 64, 64) 0 conv2d_12[0][0]

__________________________________________________________________________________________________

batch_normalization_10 (BatchNo (None, 64, 64, 64) 256 activation_3[0][0]

__________________________________________________________________________________________________

conv2d_13 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_10[0][0]

__________________________________________________________________________________________________

batch_normalization_11 (BatchNo (None, 64, 64, 64) 256 conv2d_13[0][0]

__________________________________________________________________________________________________

add_2 (Add) (None, 64, 64, 64) 0 batch_normalization_11[0][0]

add_1[0][0]

__________________________________________________________________________________________________

conv2d_14 (Conv2D) (None, 64, 64, 64) 36928 add_2[0][0]

__________________________________________________________________________________________________

activation_4 (Activation) (None, 64, 64, 64) 0 conv2d_14[0][0]

__________________________________________________________________________________________________

batch_normalization_12 (BatchNo (None, 64, 64, 64) 256 activation_4[0][0]

__________________________________________________________________________________________________

conv2d_15 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_12[0][0]

__________________________________________________________________________________________________

batch_normalization_13 (BatchNo (None, 64, 64, 64) 256 conv2d_15[0][0]

__________________________________________________________________________________________________

add_3 (Add) (None, 64, 64, 64) 0 batch_normalization_13[0][0]

add_2[0][0]

__________________________________________________________________________________________________

conv2d_16 (Conv2D) (None, 64, 64, 64) 36928 add_3[0][0]

__________________________________________________________________________________________________

activation_5 (Activation) (None, 64, 64, 64) 0 conv2d_16[0][0]

__________________________________________________________________________________________________

batch_normalization_14 (BatchNo (None, 64, 64, 64) 256 activation_5[0][0]

__________________________________________________________________________________________________

conv2d_17 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_14[0][0]

__________________________________________________________________________________________________

batch_normalization_15 (BatchNo (None, 64, 64, 64) 256 conv2d_17[0][0]

__________________________________________________________________________________________________

add_4 (Add) (None, 64, 64, 64) 0 batch_normalization_15[0][0]

add_3[0][0]

__________________________________________________________________________________________________

conv2d_18 (Conv2D) (None, 64, 64, 64) 36928 add_4[0][0]

__________________________________________________________________________________________________

activation_6 (Activation) (None, 64, 64, 64) 0 conv2d_18[0][0]

__________________________________________________________________________________________________

batch_normalization_16 (BatchNo (None, 64, 64, 64) 256 activation_6[0][0]

__________________________________________________________________________________________________

conv2d_19 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_16[0][0]

__________________________________________________________________________________________________

batch_normalization_17 (BatchNo (None, 64, 64, 64) 256 conv2d_19[0][0]

__________________________________________________________________________________________________

add_5 (Add) (None, 64, 64, 64) 0 batch_normalization_17[0][0]

add_4[0][0]

__________________________________________________________________________________________________

conv2d_20 (Conv2D) (None, 64, 64, 64) 36928 add_5[0][0]

__________________________________________________________________________________________________

activation_7 (Activation) (None, 64, 64, 64) 0 conv2d_20[0][0]

__________________________________________________________________________________________________

batch_normalization_18 (BatchNo (None, 64, 64, 64) 256 activation_7[0][0]

__________________________________________________________________________________________________

conv2d_21 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_18[0][0]

__________________________________________________________________________________________________

batch_normalization_19 (BatchNo (None, 64, 64, 64) 256 conv2d_21[0][0]

__________________________________________________________________________________________________

add_6 (Add) (None, 64, 64, 64) 0 batch_normalization_19[0][0]

add_5[0][0]

__________________________________________________________________________________________________

conv2d_22 (Conv2D) (None, 64, 64, 64) 36928 add_6[0][0]

__________________________________________________________________________________________________

activation_8 (Activation) (None, 64, 64, 64) 0 conv2d_22[0][0]

__________________________________________________________________________________________________

batch_normalization_20 (BatchNo (None, 64, 64, 64) 256 activation_8[0][0]

__________________________________________________________________________________________________

conv2d_23 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_20[0][0]

__________________________________________________________________________________________________

batch_normalization_21 (BatchNo (None, 64, 64, 64) 256 conv2d_23[0][0]

__________________________________________________________________________________________________

add_7 (Add) (None, 64, 64, 64) 0 batch_normalization_21[0][0]

add_6[0][0]

__________________________________________________________________________________________________

conv2d_24 (Conv2D) (None, 64, 64, 64) 36928 add_7[0][0]

__________________________________________________________________________________________________

activation_9 (Activation) (None, 64, 64, 64) 0 conv2d_24[0][0]

__________________________________________________________________________________________________

batch_normalization_22 (BatchNo (None, 64, 64, 64) 256 activation_9[0][0]

__________________________________________________________________________________________________

conv2d_25 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_22[0][0]

__________________________________________________________________________________________________

batch_normalization_23 (BatchNo (None, 64, 64, 64) 256 conv2d_25[0][0]

__________________________________________________________________________________________________

add_8 (Add) (None, 64, 64, 64) 0 batch_normalization_23[0][0]

add_7[0][0]

__________________________________________________________________________________________________

conv2d_26 (Conv2D) (None, 64, 64, 64) 36928 add_8[0][0]

__________________________________________________________________________________________________

activation_10 (Activation) (None, 64, 64, 64) 0 conv2d_26[0][0]

__________________________________________________________________________________________________

batch_normalization_24 (BatchNo (None, 64, 64, 64) 256 activation_10[0][0]

__________________________________________________________________________________________________

conv2d_27 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_24[0][0]

__________________________________________________________________________________________________

batch_normalization_25 (BatchNo (None, 64, 64, 64) 256 conv2d_27[0][0]

__________________________________________________________________________________________________

add_9 (Add) (None, 64, 64, 64) 0 batch_normalization_25[0][0]

add_8[0][0]

__________________________________________________________________________________________________

conv2d_28 (Conv2D) (None, 64, 64, 64) 36928 add_9[0][0]

__________________________________________________________________________________________________

activation_11 (Activation) (None, 64, 64, 64) 0 conv2d_28[0][0]

__________________________________________________________________________________________________

batch_normalization_26 (BatchNo (None, 64, 64, 64) 256 activation_11[0][0]

__________________________________________________________________________________________________

conv2d_29 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_26[0][0]

__________________________________________________________________________________________________

batch_normalization_27 (BatchNo (None, 64, 64, 64) 256 conv2d_29[0][0]

__________________________________________________________________________________________________

add_10 (Add) (None, 64, 64, 64) 0 batch_normalization_27[0][0]

add_9[0][0]

__________________________________________________________________________________________________

conv2d_30 (Conv2D) (None, 64, 64, 64) 36928 add_10[0][0]

__________________________________________________________________________________________________

activation_12 (Activation) (None, 64, 64, 64) 0 conv2d_30[0][0]

__________________________________________________________________________________________________

batch_normalization_28 (BatchNo (None, 64, 64, 64) 256 activation_12[0][0]

__________________________________________________________________________________________________

conv2d_31 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_28[0][0]

__________________________________________________________________________________________________

batch_normalization_29 (BatchNo (None, 64, 64, 64) 256 conv2d_31[0][0]

__________________________________________________________________________________________________

add_11 (Add) (None, 64, 64, 64) 0 batch_normalization_29[0][0]

add_10[0][0]

__________________________________________________________________________________________________

conv2d_32 (Conv2D) (None, 64, 64, 64) 36928 add_11[0][0]

__________________________________________________________________________________________________

activation_13 (Activation) (None, 64, 64, 64) 0 conv2d_32[0][0]

__________________________________________________________________________________________________

batch_normalization_30 (BatchNo (None, 64, 64, 64) 256 activation_13[0][0]

__________________________________________________________________________________________________

conv2d_33 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_30[0][0]

__________________________________________________________________________________________________

batch_normalization_31 (BatchNo (None, 64, 64, 64) 256 conv2d_33[0][0]

__________________________________________________________________________________________________

add_12 (Add) (None, 64, 64, 64) 0 batch_normalization_31[0][0]

add_11[0][0]

__________________________________________________________________________________________________

conv2d_34 (Conv2D) (None, 64, 64, 64) 36928 add_12[0][0]

__________________________________________________________________________________________________

activation_14 (Activation) (None, 64, 64, 64) 0 conv2d_34[0][0]

__________________________________________________________________________________________________

batch_normalization_32 (BatchNo (None, 64, 64, 64) 256 activation_14[0][0]

__________________________________________________________________________________________________

conv2d_35 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_32[0][0]

__________________________________________________________________________________________________

batch_normalization_33 (BatchNo (None, 64, 64, 64) 256 conv2d_35[0][0]

__________________________________________________________________________________________________

add_13 (Add) (None, 64, 64, 64) 0 batch_normalization_33[0][0]

add_12[0][0]

__________________________________________________________________________________________________

conv2d_36 (Conv2D) (None, 64, 64, 64) 36928 add_13[0][0]

__________________________________________________________________________________________________

activation_15 (Activation) (None, 64, 64, 64) 0 conv2d_36[0][0]

__________________________________________________________________________________________________

batch_normalization_34 (BatchNo (None, 64, 64, 64) 256 activation_15[0][0]

__________________________________________________________________________________________________

conv2d_37 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_34[0][0]

__________________________________________________________________________________________________

batch_normalization_35 (BatchNo (None, 64, 64, 64) 256 conv2d_37[0][0]

__________________________________________________________________________________________________

add_14 (Add) (None, 64, 64, 64) 0 batch_normalization_35[0][0]

add_13[0][0]

__________________________________________________________________________________________________

conv2d_38 (Conv2D) (None, 64, 64, 64) 36928 add_14[0][0]

__________________________________________________________________________________________________

activation_16 (Activation) (None, 64, 64, 64) 0 conv2d_38[0][0]

__________________________________________________________________________________________________

batch_normalization_36 (BatchNo (None, 64, 64, 64) 256 activation_16[0][0]

__________________________________________________________________________________________________

conv2d_39 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_36[0][0]

__________________________________________________________________________________________________

batch_normalization_37 (BatchNo (None, 64, 64, 64) 256 conv2d_39[0][0]

__________________________________________________________________________________________________

add_15 (Add) (None, 64, 64, 64) 0 batch_normalization_37[0][0]

add_14[0][0]

__________________________________________________________________________________________________

conv2d_40 (Conv2D) (None, 64, 64, 64) 36928 add_15[0][0]

__________________________________________________________________________________________________

activation_17 (Activation) (None, 64, 64, 64) 0 conv2d_40[0][0]

__________________________________________________________________________________________________

batch_normalization_38 (BatchNo (None, 64, 64, 64) 256 activation_17[0][0]

__________________________________________________________________________________________________

conv2d_41 (Conv2D) (None, 64, 64, 64) 36928 batch_normalization_38[0][0]

__________________________________________________________________________________________________

batch_normalization_39 (BatchNo (None, 64, 64, 64) 256 conv2d_41[0][0]

__________________________________________________________________________________________________

add_16 (Add) (None, 64, 64, 64) 0 batch_normalization_39[0][0]

add_15[0][0]

__________________________________________________________________________________________________

conv2d_42 (Conv2D) (None, 64, 64, 64) 36928 add_16[0][0]

__________________________________________________________________________________________________

batch_normalization_40 (BatchNo (None, 64, 64, 64) 256 conv2d_42[0][0]

__________________________________________________________________________________________________

add_17 (Add) (None, 64, 64, 64) 0 batch_normalization_40[0][0]

activation_1[0][0]

__________________________________________________________________________________________________

up_sampling2d_1 (UpSampling2D) (None, 128, 128, 64) 0 add_17[0][0]

__________________________________________________________________________________________________

conv2d_43 (Conv2D) (None, 128, 128, 256 147712 up_sampling2d_1[0][0]

__________________________________________________________________________________________________

activation_18 (Activation) (None, 128, 128, 256 0 conv2d_43[0][0]

__________________________________________________________________________________________________

up_sampling2d_2 (UpSampling2D) (None, 256, 256, 256 0 activation_18[0][0]

__________________________________________________________________________________________________

conv2d_44 (Conv2D) (None, 256, 256, 256 590080 up_sampling2d_2[0][0]

__________________________________________________________________________________________________

activation_19 (Activation) (None, 256, 256, 256 0 conv2d_44[0][0]

__________________________________________________________________________________________________

conv2d_45 (Conv2D) (None, 256, 256, 3) 62211 activation_19[0][0]

==================================================================================================

Total params: 2,042,691

Trainable params: 2,038,467

Non-trainable params: 4,224

__________________________________________________________________________________________________

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_6 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

model_3 (Model) (None, 256, 256, 3) 2042691 input_6[0][0]

__________________________________________________________________________________________________

model_2 (Model) (None, 16, 16, 1) 5219137 model_3[1][0]

__________________________________________________________________________________________________

model_1 (Model) (None, 64, 64, 256) 143667240 model_3[1][0]

==================================================================================================

Total params: 150,929,068

Trainable params: 2,038,467

Non-trainable params: 148,890,601

__________________________________________________________________________________________________
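The Param # column in these summaries can be checked by hand. The sketch below assumes square kernels whose sizes are inferred from the counts themselves (3x3 for most convolutions, 9x9 for the first and last generator convolutions); the kernel sizes and helper names are assumptions, not values read from the source code.

```python
def conv2d_params(kernel, in_channels, filters):
    """Weights plus one bias per filter for a square-kernel Conv2D layer."""
    return (kernel * kernel * in_channels + 1) * filters


def dense_params(in_features, units):
    """Weights plus one bias per unit for a Dense layer."""
    return (in_features + 1) * units


def batchnorm_params(channels):
    """gamma, beta, moving mean and moving variance: four values per channel."""
    return 4 * channels


# Layer name -> (computed count, count reported in the summaries above)
checks = {
    "conv2d_1": (conv2d_params(3, 3, 64), 1792),
    "conv2d_2": (conv2d_params(3, 64, 64), 36928),
    "conv2d_9": (conv2d_params(9, 3, 64), 15616),
    "conv2d_43": (conv2d_params(3, 64, 256), 147712),
    "conv2d_45": (conv2d_params(9, 256, 3), 62211),
    "dense_1": (dense_params(512, 1024), 525312),
    "batch_normalization_1": (batchnorm_params(64), 256),
}
for name, (computed, reported) in checks.items():
    print(name, computed, computed == reported)
```

Half of each BatchNormalization layer's parameters (the moving mean and variance) are not trained, which accounts for the non-trainable counts reported for the discriminator and generator.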