1. For those who want to get started right away (as soon as possible)

"Natural Language Processing with Python Cookbook" by Pratap Dangeti, Krishna Bhavsar, Naresh Kumar

http://shop.oreilly.com/product/9781787289321.do

docker

Install docker. On Windows and Mac, start docker before proceeding.

On Windows, docker may fail to start unless Intel Virtualization is enabled in the BIOS.

You may also see security warnings.

docker run

$ docker pull kaizenjapan/anaconda-resheff

$ docker run -it -p 8888:8888 kaizenjapan/anaconda-resheff /bin/bash

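In the shell sessions below,

(base) root@f19e2f06eabb:/# is the command prompt. The ID part (f19e2f06eabb here) may differ in your environment. On lines that begin with this prompt, type what appears to the right of the #.

All other lines are output. If you see errors or output that differs from what is shown here, I would appreciate a note in the comments.

Move into the folder for each chapter.

If the shell inside docker and the shell of the OS that launched docker look similar, it is easy to mistake which one you are operating in. Keep an eye on the docker command prompt.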

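File sharing or copying

Between docker and the OS that launched docker, either share files or copy them so that generated files can be displayed in a browser or similar tool. URLs describing how to do this are listed in the references section.

I am still exploring which approach is best for keeping the disk of the OS that launched docker tidy. Some of the approaches set up sharing from the start.

When copying, I ran the command on the OS that launched docker. Replace the docker ID with your own. I opened the copied file in a browser and checked its contents.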

Chapter01/recipe1.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter01# python recipe1.py

Traceback (most recent call last):

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 80, in __load

try: root = nltk.data.find('{}/{}'.format(self.subdir, zip_name))

File "/opt/conda/lib/python3.6/site-packages/nltk/data.py", line 675, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource reuters not found.

Please use the NLTK Downloader to obtain the resource:

>>> import nltk

>>> nltk.download('reuters')

Searched in:

- '/root/nltk_data'

- '/usr/share/nltk_data'

- '/usr/local/share/nltk_data'

- '/usr/lib/nltk_data'

- '/usr/local/lib/nltk_data'

- '/opt/conda/nltk_data'

- '/opt/conda/share/nltk_data'

- '/opt/conda/lib/nltk_data'

**********************************************************************

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "recipe1.py", line 3, in <module>

files = reuters.fileids()

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 116, in __getattr__

self.__load()

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 81, in __load

except LookupError: raise e

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 78, in __load

root = nltk.data.find('{}/{}'.format(self.subdir, self.__name))

File "/opt/conda/lib/python3.6/site-packages/nltk/data.py", line 675, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource reuters not found.

Please use the NLTK Downloader to obtain the resource:

>>> import nltk

>>> nltk.download('reuters')

Searched in:

- '/root/nltk_data'

- '/usr/share/nltk_data'

- '/usr/local/share/nltk_data'

- '/usr/lib/nltk_data'

- '/usr/local/lib/nltk_data'

- '/opt/conda/nltk_data'

- '/opt/conda/share/nltk_data'

- '/opt/conda/lib/nltk_data'

**********************************************************************

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter01# vi recipe1.py

Insert the following two lines:

import nltk

nltk.download('reuters')
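The two inserted lines trigger the downloader on every run. A minimal sketch of a guarded variant follows, assuming NLTK's nltk.data.find and nltk.download. The helper name ensure_nltk_resource and its find/download parameters are hypothetical; the parameters exist only so the logic can be tried without NLTK or a network connection.

```python
def ensure_nltk_resource(resource_path, package, find=None, download=None):
    """Download an NLTK package only when the resource cannot be found.

    find/download default to nltk.data.find and nltk.download.
    Returns True if a download was requested, False otherwise.
    """
    if find is None or download is None:
        import nltk  # imported lazily so the stand-ins below work without NLTK
        find = find or nltk.data.find
        download = download or nltk.download
    try:
        find(resource_path)   # raises LookupError when the resource is missing
        return False
    except LookupError:
        download(package)
        return True


# Stand-in callables that mimic a missing resource (illustration only).
requested = []

def always_missing(path):
    raise LookupError(path)

def record_request(package):
    requested.append(package)
    return True

print(ensure_nltk_resource("corpora/reuters", "reuters",
                           find=always_missing, download=record_request))
print(requested)
```

In a recipe, the call would be ensure_nltk_resource("corpora/reuters", "reuters") placed before the corpus is first used.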

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter01# python recipe1.py

[nltk_data] Downloading package reuters to /root/nltk_data...

['test/14826', 'test/14828', 'test/14829', 'test/14832', 'test/14833', 'test/14839', 'test/14840', 'test/14841', 'test/14842', 'test/14843', 'test/14844', 'test/14849', 'test/14852', 'test/14854', 'test/14858', 'test/14859', 'test/14860', 'test/14861',

(output omitted)

s Ghanaian and Brazilian , they said .

Other technical points need to be sorted out , including limits on how much cocoa the buffer stock manager can buy in nearby , intermediate and forward positions and the consequent effect on prices in the various deliveries , delegates said .

Chapter01/recipe2.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter01# python recipe2.py

Traceback (most recent call last):

File "recipe2.py", line 4, in <module>

reader = CategorizedPlaintextCorpusReader(r'/Volumes/Data/NLP-CookBook/Reviews/txt_sentoken', r'.*\.txt', cat_pattern=r'(\w+)/*')

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/reader/plaintext.py", line 154, in __init__

PlaintextCorpusReader.__init__(self, *args, **kwargs)

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/reader/plaintext.py", line 62, in __init__

CorpusReader.__init__(self, root, fileids, encoding)

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/reader/api.py", line 84, in __init__

root = FileSystemPathPointer(root)

File "/opt/conda/lib/python3.6/site-packages/nltk/compat.py", line 221, in _decorator

return init_func(*args, **kwargs)

File "/opt/conda/lib/python3.6/site-packages/nltk/data.py", line 318, in __init__

raise IOError('No such file or directory: %r' % _path)

OSError: No such file or directory: '/Volumes/Data/NLP-CookBook/Reviews/txt_sentoken'
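The OSError above is raised because recipe2.py points at /Volumes/Data/NLP-CookBook/Reviews/txt_sentoken, which does not exist inside the container. One possible way to make the location configurable is sketched below; the helper first_existing_dir and the environment variable REVIEWS_CORPUS_ROOT are hypothetical names, not part of the recipe.

```python
import os


def first_existing_dir(candidates):
    """Return the first candidate that is an existing directory, else None."""
    for path in candidates:
        if path and os.path.isdir(path):
            return path
    return None


candidates = [
    os.environ.get("REVIEWS_CORPUS_ROOT"),               # hypothetical variable name
    "/Volumes/Data/NLP-CookBook/Reviews/txt_sentoken",   # path hard-coded in recipe2.py
]

root = first_existing_dir(candidates)
if root is None:
    print("Corpus directory not found; set REVIEWS_CORPUS_ROOT to its location.")
else:
    print("Using corpus root:", root)
```

The resulting root could then be passed to CategorizedPlaintextCorpusReader in place of the hard-coded string.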

Chapter02/recipe3.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter02# python recipe3.py

PDF 1:

This is a sample PDF document I am using to demonstrate in the tutorial.

PDF 2:

This is a sample PDF document

password protected.

Chapter02/recipe4.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter02# python recipe4.py

Traceback (most recent call last):

File "recipe4.py", line 1, in <module>

import docx

File "/opt/conda/lib/python3.6/site-packages/docx.py", line 30, in <module>

from exceptions import PendingDeprecationWarning

ModuleNotFoundError: No module named 'exceptions'

Chapter03/recipe3.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter03# python recipe3.py

['My', 'name', 'is', 'maximu', 'decimu', 'meridiu', ',', 'command', 'of', 'the', 'armi', 'of', 'the', 'north', ',', 'gener', 'of', 'the', 'felix', 'legion', 'and', 'loyal', 'servant', 'to', 'the', 'true', 'emperor', ',', 'marcu', 'aureliu', '.', 'father', 'to', 'a', 'murder', 'son', ',', 'husband', 'to', 'a', 'murder', 'wife', '.', 'and', 'I', 'will', 'have', 'my', 'vengeanc', ',', 'in', 'thi', 'life', 'or', 'the', 'next', '.']

Traceback (most recent call last):

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 80, in __load

try: root = nltk.data.find('{}/{}'.format(self.subdir, zip_name))

File "/opt/conda/lib/python3.6/site-packages/nltk/data.py", line 675, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource wordnet not found.

Please use the NLTK Downloader to obtain the resource:

>>> import nltk

>>> nltk.download('wordnet')

Searched in:

- '/root/nltk_data'

- '/usr/share/nltk_data'

- '/usr/local/share/nltk_data'

- '/usr/lib/nltk_data'

- '/usr/local/lib/nltk_data'

- '/opt/conda/nltk_data'

- '/opt/conda/share/nltk_data'

- '/opt/conda/lib/nltk_data'

**********************************************************************

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "recipe3.py", line 11, in <module>

lemmas = [lemmatizer.lemmatize(t) for t in tokens]

File "recipe3.py", line 11, in <listcomp>

lemmas = [lemmatizer.lemmatize(t) for t in tokens]

File "/opt/conda/lib/python3.6/site-packages/nltk/stem/wordnet.py", line 40, in lemmatize

lemmas = wordnet._morphy(word, pos)

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 116, in __getattr__

self.__load()

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 81, in __load

except LookupError: raise e

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 78, in __load

root = nltk.data.find('{}/{}'.format(self.subdir, self.__name))

File "/opt/conda/lib/python3.6/site-packages/nltk/data.py", line 675, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource wordnet not found.

Please use the NLTK Downloader to obtain the resource:

>>> import nltk

>>> nltk.download('wordnet')

Searched in:

- '/root/nltk_data'

- '/usr/share/nltk_data'

- '/usr/local/share/nltk_data'

- '/usr/lib/nltk_data'

- '/usr/local/lib/nltk_data'

- '/opt/conda/nltk_data'

- '/opt/conda/share/nltk_data'

- '/opt/conda/lib/nltk_data'

**********************************************************************

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter03# vi recipe3.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter03# python recipe3.py

[nltk_data] Downloading package wordnet to /root/nltk_data...

[nltk_data] Unzipping corpora/wordnet.zip.

['My', 'name', 'is', 'maximu', 'decimu', 'meridiu', ',', 'command', 'of', 'the', 'armi', 'of', 'the', 'north', ',', 'gener', 'of', 'the', 'felix', 'legion', 'and', 'loyal', 'servant', 'to', 'the', 'true', 'emperor', ',', 'marcu', 'aureliu', '.', 'father', 'to', 'a', 'murder', 'son', ',', 'husband', 'to', 'a', 'murder', 'wife', '.', 'and', 'I', 'will', 'have', 'my', 'vengeanc', ',', 'in', 'thi', 'life', 'or', 'the', 'next', '.']

['My', 'name', 'is', 'Maximus', 'Decimus', 'Meridius', ',', 'commander', 'of', 'the', 'army', 'of', 'the', 'north', ',', 'General', 'of', 'the', 'Felix', 'legion', 'and', 'loyal', 'servant', 'to', 'the', 'true', 'emperor', ',', 'Marcus', 'Aurelius', '.', 'Father', 'to', 'a', 'murdered', 'son', ',', 'husband', 'to', 'a', 'murdered', 'wife', '.', 'And', 'I', 'will', 'have', 'my', 'vengeance', ',', 'in', 'this', 'life', 'or', 'the', 'next', '.']

Chapter03/recipe4.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter03# python recipe4.py

Traceback (most recent call last):

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 80, in __load

try: root = nltk.data.find('{}/{}'.format(self.subdir, zip_name))

File "/opt/conda/lib/python3.6/site-packages/nltk/data.py", line 675, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource gutenberg not found.

Please use the NLTK Downloader to obtain the resource:

>>> import nltk

>>> nltk.download('gutenberg')

Searched in:

- '/root/nltk_data'

- '/usr/share/nltk_data'

- '/usr/local/share/nltk_data'

- '/usr/lib/nltk_data'

- '/usr/local/lib/nltk_data'

- '/opt/conda/nltk_data'

- '/opt/conda/share/nltk_data'

- '/opt/conda/lib/nltk_data'

**********************************************************************

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "recipe4.py", line 3, in <module>

print(gutenberg.fileids())

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 116, in __getattr__

self.__load()

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 81, in __load

except LookupError: raise e

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 78, in __load

root = nltk.data.find('{}/{}'.format(self.subdir, self.__name))

File "/opt/conda/lib/python3.6/site-packages/nltk/data.py", line 675, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource gutenberg not found.

Please use the NLTK Downloader to obtain the resource:

>>> import nltk

>>> nltk.download('gutenberg')

Searched in:

- '/root/nltk_data'

- '/usr/share/nltk_data'

- '/usr/local/share/nltk_data'

- '/usr/lib/nltk_data'

- '/usr/local/lib/nltk_data'

- '/opt/conda/nltk_data'

- '/opt/conda/share/nltk_data'

- '/opt/conda/lib/nltk_data'

**********************************************************************

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter03# vi recipe4.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter03# python recipe4.py

[nltk_data] Downloading package gutenberg to /root/nltk_data...

[nltk_data] Unzipping corpora/gutenberg.zip.

['austen-emma.txt', 'austen-persuasion.txt', 'austen-sense.txt', 'bible-kjv.txt', 'blake-poems.txt', 'bryant-stories.txt', 'burgess-busterbrown.txt', 'carroll-alice.txt', 'chesterton-ball.txt', 'chesterton-brown.txt', 'chesterton-thursday.txt', 'edgeworth-parents.txt', 'melville-moby_dick.txt', 'milton-paradise.txt', 'shakespeare-caesar.txt', 'shakespeare-hamlet.txt', 'shakespeare-macbeth.txt', 'whitman-leaves.txt']

Following are the most common 10 words in the bag

[(',', 70509), ('the', 62103), (':', 43766), ('and', 38847), ('of', 34480), ('.', 26160), ('to', 13396), ('And', 12846), ('that', 12576), ('in', 12331)]

Following are the most common 10 words in the bag minus the stopwords

[('shall', 9838), ('unto', 8997), ('lord', 7964), ('thou', 5474), ('thy', 4600), ('god', 4472), ('said', 3999), ('thee', 3827), ('upon', 2748), ('man', 2735)]

/opt/conda/lib/python3.6/site-packages/matplotlib/figure.py:448: UserWarning: Matplotlib is currently using agg, which is a non-GUI backend, so cannot show the figure.

% get_backend())

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter03# vi recipe4.py
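The two "most common 10 words" lists above differ in that the second one is lowercased and has punctuation and stopwords removed. A small sketch of that idea with collections.Counter follows. The stopword set is a tiny illustrative list rather than NLTK's, and the function name filtered_counts is hypothetical.

```python
from collections import Counter

# Tiny illustrative stopword list; NLTK's English list is much longer.
STOPWORDS = {"the", "and", "of", "to", "in", "that", "a"}


def filtered_counts(tokens, stopwords):
    """Count lowercased alphabetic tokens that are not stopwords."""
    kept = [t.lower() for t in tokens
            if t.isalpha() and t.lower() not in stopwords]
    return Counter(kept)


tokens = "And the swans said that the river belongs to the swans .".split()

# Raw counts keep case and punctuation, as in the first list above.
print(Counter(tokens).most_common(3))

# Filtered counts correspond to the "minus the stopwords" list.
print(filtered_counts(tokens, STOPWORDS).most_common(3))
```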

Chapter03/recipe5.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter03# python recipe5.py

Our Algorithm : 1

NLTK Algorithm : 1
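The output compares a hand-written edit distance with NLTK's. The recipe's own implementation is not shown here, so the following is only a generic sketch of the standard dynamic-programming (Levenshtein) formulation; the example words are hypothetical.

```python
def edit_distance(a, b):
    """Levenshtein distance: minimum insertions, deletions and substitutions."""
    # prev[j] holds the distance between the processed prefix of a and b[:j].
    prev = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to the empty string
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            curr.append(min(prev[j] + 1,          # delete ch_a
                            curr[j - 1] + 1,      # insert ch_b
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


print(edit_distance("hand", "and"))  # prints 1 (delete the leading "h")
```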

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter03# python recipe6.py

First Story words : ['in', 'a', 'far', 'away', 'kingdom', 'there', 'was', 'a', 'river', 'this', 'river', 'was', 'home', 'to', 'many', 'golden', 'swans', 'the', 'swans', 'spent', 'most', 'of', 'their', 'time', 'on', 'the', 'banks', 'of', 'the', 'river', 'every', 'six', 'months', 'the', 'swans', 'would', 'leave', 'a', 'golden', 'feather', 'as', 'a', 'fee', 'for', 'using', 'the', 'lake', 'the', 'soldiers', 'of', 'the', 'kingdom', 'would', 'collect', 'the', 'feathers', 'and', 'deposit', 'them', 'in', 'the', 'royal', 'treasury', 'one', 'day', 'a', 'homeless', 'bird', 'saw', 'the', 'river', 'the', 'water', 'in', 'this', 'river', 'seems', 'so', 'cool', 'and', 'soothing', 'i', 'will', 'make', 'my', 'home', 'here', 'thought', 'the', 'bird', 'as', 'soon', 'as', 'the', 'bird', 'settled', 'down', 'near', 'the', 'river', 'the', 'golden', 'swans', 'noticed', 'her', 'they', 'came', 'shouting', 'this', 'river', 'belongs', 'to', 'us', 'we', 'pay', 'a', 'golden', 'feather', 'to', 'the', 'king', 'to', 'use', 'this', 'river', 'you', 'can', 'not', 'live', 'here', 'i', 'am', 'homeless', 'brothers', 'i', 'too', 'will', 'pay', 'the', 'rent', 'please', 'give', 'me', 'shelter', 'the', 'bird', 'pleaded', 'how', 'will', 'you', 'pay', 'the', 'rent', 'you', 'do', 'not', 'have', 'golden', 'feathers', 'said', 'the', 'swans', 'laughing', 'they', 'further', 'added', 'stop', 'dreaming', 'and', 'leave', 'once', 'the', 'humble', 'bird', 'pleaded', 'many', 'times', 'but', 'the', 'arrogant', 'swans', 'drove', 'the', 'bird', 'away', 'i', 'will', 'teach', 'them', 'a', 'lesson', 'decided', 'the', 'humiliated', 'bird', 'she', 'went', 'to', 'the', 'king', 'and', 'said', 'o', 'king', 'the', 'swans', 'in', 'your', 'river', 'are', 'impolite', 'and', 'unkind', 'i', 'begged', 'for', 'shelter', 'but', 'they', 'said', 'that', 'they', 'had', 'purchased', 'the', 'river', 'with', 'golden', 'feathers', 'the', 'king', 'was', 'angry', 'with', 'the', 'arrogant', 'swans', 'for', 'having', 'insulted', 'the', 'homeless', 
'bird', 'he', 'ordered', 'his', 'soldiers', 'to', 'bring', 'the', 'arrogant', 'swans', 'to', 'his', 'court', 'in', 'no', 'time', 'all', 'the', 'golden', 'swans', 'were', 'brought', 'to', 'the', 'king’s', 'court', 'do', 'you', 'think', 'the', 'royal', 'treasury', 'depends', 'upon', 'your', 'golden', 'feathers', 'you', 'can', 'not', 'decide', 'who', 'lives', 'by', 'the', 'river', 'leave', 'the', 'river', 'at', 'once', 'or', 'you', 'all', 'will', 'be', 'beheaded', 'shouted', 'the', 'king', 'the', 'swans', 'shivered', 'with', 'fear', 'on', 'hearing', 'the', 'king', 'they', 'flew', 'away', 'never', 'to', 'return', 'the', 'bird', 'built', 'her', 'home', 'near', 'the', 'river', 'and', 'lived', 'there', 'happily', 'forever', 'the', 'bird', 'gave', 'shelter', 'to', 'all', 'other', 'birds', 'in', 'the', 'river', '']

Second Story words : ['long', 'time', 'ago', 'there', 'lived', 'a', 'king', 'he', 'was', 'lazy', 'and', 'liked', 'all', 'the', 'comforts', 'of', 'life', 'he', 'never', 'carried', 'out', 'his', 'duties', 'as', 'a', 'king', '“our', 'king', 'does', 'not', 'take', 'care', 'of', 'our', 'needs', 'he', 'also', 'ignores', 'the', 'affairs', 'of', 'his', 'kingdom', 'the', 'people', 'complained', 'one', 'day', 'the', 'king', 'went', 'into', 'the', 'forest', 'to', 'hunt', 'after', 'having', 'wandered', 'for', 'quite', 'sometime', 'he', 'became', 'thirsty', 'to', 'his', 'relief', 'he', 'spotted', 'a', 'lake', 'as', 'he', 'was', 'drinking', 'water', 'he', 'suddenly', 'saw', 'a', 'golden', 'swan', 'come', 'out', 'of', 'the', 'lake', 'and', 'perch', 'on', 'a', 'stone', '“oh', 'a', 'golden', 'swan', 'i', 'must', 'capture', 'it', 'thought', 'the', 'king', 'but', 'as', 'soon', 'as', 'he', 'held', 'his', 'bow', 'up', 'the', 'swan', 'disappeared', 'and', 'the', 'king', 'heard', 'a', 'voice', '“i', 'am', 'the', 'golden', 'swan', 'if', 'you', 'want', 'to', 'capture', 'me', 'you', 'must', 'come', 'to', 'heaven', 'surprised', 'the', 'king', 'said', '“please', 'show', 'me', 'the', 'way', 'to', 'heaven', '“do', 'good', 'deeds', 'serve', 'your', 'people', 'and', 'the', 'messenger', 'from', 'heaven', 'would', 'come', 'to', 'fetch', 'you', 'to', 'heaven', 'replied', 'the', 'voice', 'the', 'selfish', 'king', 'eager', 'to', 'capture', 'the', 'swan', 'tried', 'doing', 'some', 'good', 'deeds', 'in', 'his', 'kingdom', '“now', 'i', 'suppose', 'a', 'messenger', 'will', 'come', 'to', 'take', 'me', 'to', 'heaven', 'he', 'thought', 'but', 'no', 'messenger', 'came', 'the', 'king', 'then', 'disguised', 'himself', 'and', 'went', 'out', 'into', 'the', 'street', 'there', 'he', 'tried', 'helping', 'an', 'old', 'man', 'but', 'the', 'old', 'man', 'became', 'angry', 'and', 'said', '“you', 'need', 'not', 'try', 'to', 'help', 'i', 'am', 'in', 'this', 'miserable', 'state', 'because', 'of', 'out', 'selfish', 'king', 
'he', 'has', 'done', 'nothing', 'for', 'his', 'people', 'suddenly', 'the', 'king', 'heard', 'the', 'golden', 'swan’s', 'voice', '“do', 'good', 'deeds', 'and', 'you', 'will', 'come', 'to', 'heaven', 'it', 'dawned', 'on', 'the', 'king', 'that', 'by', 'doing', 'selfish', 'acts', 'he', 'will', 'not', 'go', 'to', 'heaven', 'he', 'realized', 'that', 'his', 'people', 'needed', 'him', 'and', 'carrying', 'out', 'his', 'duties', 'was', 'the', 'only', 'way', 'to', 'heaven', 'after', 'that', 'day', 'he', 'became', 'a', 'responsible', 'king', '']

First Story vocabulary : {'', 'they', 'brought', 'soon', 'saw', 'you', 'bird', 'six', 'depends', 'purchased', 'that', 'a', 'day', 'give', 'fear', 'humble', 'there', 'shelter', 'by', 'do', 'times', 'who', 'arrogant', 'went', 'every', 'at', 'said', 'impolite', 'drove', 'be', 'most', 'leave', 'flew', 'fee', 'use', 'soldiers', 'are', 'their', 'had', 'can', 'feathers', 'soothing', 'not', 'he', 'brothers', 'happily', 'humiliated', 'bring', 'spent', 'lives', 'royal', 'king', 'river', 'so', 'too', 'kingdom', 'treasury', 'her', 'his', 'water', 'am', 'beheaded', 'golden', 'begged', 'court', 'this', 'think', 'no', 'stop', 'or', 'i', 'added', 'here', 'in', 'she', 'further', 'months', 'please', 'thought', 'on', 'time', 'seems', 'swans', 'your', 'one', 'home', 'banks', 'how', 'noticed', 'pleaded', 'far', 'shouting', 'my', 'the', 'as', 'never', 'decide', 'many', 'other', 'for', 'would', 'feather', 'using', 'live', 'make', 'will', 'laughing', 'upon', 'settled', 'them', 'shouted', 'ordered', 'and', 'cool', 'teach', 'hearing', 'unkind', 'deposit', 'lived', 'belongs', 'having', 'built', 'lake', 'to', 'pay', 'me', 'o', 'rent', 'insulted', 'but', 'return', 'dreaming', 'came', 'king’s', 'forever', 'us', 'were', 'birds', 'we', 'down', 'angry', 'all', 'away', 'was', 'decided', 'near', 'shivered', 'homeless', 'have', 'with', 'gave', 'of', 'collect', 'once', 'lesson'}

Second Story vocabulary {'', 'helping', 'soon', 'saw', 'heard', 'you', 'selfish', '“you', 'take', 'fetch', 'that', 'a', 'day', 'if', 'there', 'comforts', 'dawned', 'by', 'suppose', 'duties', '“please', 'went', 'man', 'said', 'carrying', 'state', 'go', 'surprised', 'drinking', 'up', 'has', 'complained', 'want', 'way', 'from', 'messenger', 'heaven', 'not', 'he', 'disappeared', 'ago', 'street', 'sometime', 'also', 'after', 'it', 'king', '“now', 'kingdom', 'became', 'affairs', 'his', 'our', 'doing', 'water', 'am', 'old', 'golden', 'this', 'no', 'i', 'realized', 'tried', 'good', 'in', 'come', 'acts', 'thought', 'on', 'time', 'care', 'because', 'wandered', 'swan', 'relief', 'your', 'life', 'one', 'hunt', 'suddenly', 'spotted', 'responsible', 'some', 'capture', 'an', 'the', 'held', 'forest', 'replied', 'never', 'as', 'for', 'would', 'perch', '“oh', 'try', 'must', 'serve', 'miserable', 'will', 'then', 'himself', 'needs', 'show', 'swan’s', 'bow', '“our', 'liked', 'and', 'carried', 'lived', 'into', 'only', 'having', 'lake', 'to', 'me', '“do', 'eager', 'but', 'lazy', 'disguised', 'thirsty', 'quite', 'came', 'out', 'deeds', 'does', 'ignores', '“i', 'stone', 'angry', 'all', 'help', 'done', 'needed', 'was', 'people', 'nothing', 'of', 'long', 'voice', 'need', 'him'}

Common Vocabulary : {'', 'and', 'soon', 'saw', 'thought', 'on', 'you', 'not', 'he', 'time', 'lived', 'your', 'having', 'one', 'that', 'lake', 'to', 'me', 'a', 'day', 'king', 'but', 'there', 'kingdom', 'by', 'his', 'the', 'went', 'water', 'never', 'am', 'as', 'came', 'said', 'golden', 'for', 'this', 'would', 'angry', 'no', 'all', 'was', 'i', 'will', 'in', 'of'}
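The vocabularies above are sets of distinct words, and the common vocabulary is their intersection. A minimal sketch with plain Python sets follows. The two sentences are hypothetical stand-ins for the stories, and unlike the output above this version drops the empty string left over from splitting.

```python
import re


def vocabulary(text):
    """Return the set of distinct lowercased words in text."""
    words = re.split(r"[\s.,!?\"']+", text.lower())
    return {w for w in words if w}  # discard empty strings from the split


first = vocabulary("The king saw a golden swan.")
second = vocabulary("A golden river, the king said!")

# Set intersection gives the words that occur in both texts.
print(sorted(first & second))
```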

##Chapter04

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter04# python recipe1.py

Found a match!

Found a match!

Found a match!

Found a match!

Found a match!

Found a match!

Not matched!

Found a match!

Found a match!

Found a match!

Found a match!

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter04# python recipe2.py

Pattern to test start and end with

Found a match!

Begin with a word

Found a match!

End with a word and optional punctuation

Found a match!

Finding a word which contains character, not start or end of the word

Found a match!

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter04# python recipe3.py

Searching for "Tuffy" in "Tuffy eats pie, Loki eats peas!" ->

Found!

Searching for "Pie" in "Tuffy eats pie, Loki eats peas!" ->

Not Found!

Searching for "Loki" in "Tuffy eats pie, Loki eats peas!" ->

Found!

Found "festival" at 12:20

Found "festival" at 42:50
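The output above is consistent with re.search for a case-sensitive lookup and re.finditer for reporting match positions. A hedged sketch follows: the first sentence is taken from the output, while the second is an assumed sample chosen so that the spans match those printed above; it is not confirmed to be the recipe's text.

```python
import re

text = "Tuffy eats pie, Loki eats peas!"
for pattern in ("Tuffy", "Pie", "Loki"):
    # re.search is case-sensitive by default, so "Pie" does not match "pie".
    result = "Found!" if re.search(pattern, text) else "Not Found!"
    print('Searching for "%s" in "%s" -> %s' % (pattern, text, result))

# Assumed sample sentence (not confirmed from the recipe source).
sample = "Diwali is a festival of lights, Holi is a festival of colors!"
spans = [m.span() for m in re.finditer("festival", sample)]
for start, end in spans:
    print('Found "festival" at %d:%d' % (start, end))
```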

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter04# python recipe4.py

Date found in the URL : [('2017', '10', '28'), ('2017', '05', '12')]

True

False
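The first line of this output is a list of (year, month, day) tuples, which is what re.findall returns when the pattern has several capture groups. A small sketch follows; the URL and the function name extract_dates are hypothetical, and the pattern is one plausible choice rather than the recipe's.

```python
import re


def extract_dates(url):
    """Return (year, month, day) string tuples for YYYY/MM/DD or YYYY-MM-DD."""
    return re.findall(r"(\d{4})[/-](\d{2})[/-](\d{2})", url)


# Hypothetical URL containing two dates with different separators.
url = "http://www.example.com/news/2017/10/28/archive-2017-05-12.html"
print("Date found in the URL :", extract_dates(url))
```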

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter04# python recipe5.py

21 Ramkrishna Rd

['light', 'color']

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter04# python recipe6.py

['I', 'am', 'big!', "It's", 'the', 'pictures', 'that', 'got', 'small.']

['I', 'am', 'big', 'It', 's', 'the', 'pictures', 'that', 'got', 'small', '']

['I', 'am', 'big', '!', 'It', "'s", 'the', 'pictures', 'that', 'got', 'small', '.']
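The first two lists above are consistent with str.split() and re.split(r"\W+", ...) applied to the sentence below. The sentence is reconstructed from the output, and the third list, which resembles the result of NLTK's word_tokenize, is not reproduced in this sketch.

```python
import re

# Sentence reconstructed from the output above (an assumption).
sentence = "I am big! It's the pictures that got small."

# Whitespace split keeps punctuation attached to the words.
by_whitespace = sentence.split()
print(by_whitespace)

# Splitting on runs of non-word characters removes punctuation,
# but leaves an empty string after the final period.
by_nonword = re.split(r"\W+", sentence)
print(by_nonword)
```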

##Chapter05

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter05# ls

Exploring.py Grammar.py OwnTagger.py PCFG.py RecursiveCFG.py Train3.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter05# python Exploring.py

['Bangalore', 'is', 'the', 'capital', 'of', 'Karnataka', '.']

[('Bangalore', 'NNP'), ('is', 'VBZ'), ('the', 'DT'), ('capital', 'NN'), ('of', 'IN'), ('Karnataka', 'NNP'), ('.', '.')]

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter05# python Grammar.py

Grammar with 12 productions (start state = ROOT)

ROOT -> WORD

WORD -> ' '

WORD -> NUMBER LETTER

WORD -> LETTER NUMBER

NUMBER -> '0'

NUMBER -> '1'

NUMBER -> '2'

NUMBER -> '3'

LETTER -> 'a'

LETTER -> 'b'

LETTER -> 'c'

LETTER -> 'd'

Generated Word: , Size : 0

Generated Word: 0a, Size : 2

Generated Word: 0b, Size : 2

Generated Word: 0c, Size : 2

Generated Word: 0d, Size : 2

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter05# python OwnTagger.py

[('Mysore', 'NN'), ('is', 'NN'), ('an', 'NN'), ('amazing', 'NN'), ('place', 'NN'), ('on', 'NN'), ('earth', 'NN'), ('.', 'NN'), ('I', 'NN'), ('have', 'NN'), ('visited', 'NN'), ('Mysore', 'NN'), ('10', 'NN'), ('times', 'NN'), ('.', 'NN')]

[('Mysore', None), ('is', 'VERB'), ('an', 'INDEFINITE-ARTICLE'), ('amazing', 'ADJECTIVE'), ('place', None), ('on', 'PREPOSITION'), ('earth', None), ('.', None), ('I', None), ('have', None), ('visited', None), ('Mysore', None), ('10', None), ('times', None), ('.', None)]

[('Mysore', 'NNP'), ('is', 'VBZ'), ('an', 'DT'), ('amazing', 'JJ'), ('place', 'NN'), ('on', 'IN'), ('earth', 'NN'), ('.', '.'), ('I', None), ('have', None), ('visited', None), ('Mysore', 'NNP'), ('10', None), ('times', None), ('.', '.')]

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter05# python PCFG.py

Grammar with 13 productions (start state = ROOT)

ROOT -> WORD [1.0]

WORD -> P1 [0.25]

WORD -> P1 P2 [0.25]

WORD -> P1 P2 P3 [0.25]

WORD -> P1 P2 P3 P4 [0.25]

P1 -> 'A' [1.0]

P2 -> 'B' [0.5]

P2 -> 'C' [0.5]

P3 -> 'D' [0.3]

P3 -> 'E' [0.3]

P3 -> 'F' [0.4]

P4 -> 'G' [0.9]

P4 -> 'H' [0.1]

String : A, Size : 1

String : AB, Size : 2

String : AC, Size : 2

String : ABD, Size : 3

String : ABE, Size : 3

String : ABF, Size : 3

String : ACD, Size : 3

String : ACE, Size : 3

String : ACF, Size : 3

String : ABDG, Size : 4
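In a probabilistic grammar, the probabilities of all productions that share a left-hand side should sum to 1. The sketch below checks this for the 13 productions printed above using plain Python; it does not use NLTK, and the function name totals_by_lhs is hypothetical.

```python
import math
from collections import defaultdict

# (left-hand side, right-hand side, probability), copied from the output above.
PRODUCTIONS = [
    ("ROOT", "WORD", 1.0),
    ("WORD", "P1", 0.25),
    ("WORD", "P1 P2", 0.25),
    ("WORD", "P1 P2 P3", 0.25),
    ("WORD", "P1 P2 P3 P4", 0.25),
    ("P1", "'A'", 1.0),
    ("P2", "'B'", 0.5),
    ("P2", "'C'", 0.5),
    ("P3", "'D'", 0.3),
    ("P3", "'E'", 0.3),
    ("P3", "'F'", 0.4),
    ("P4", "'G'", 0.9),
    ("P4", "'H'", 0.1),
]


def totals_by_lhs(productions):
    """Sum the production probabilities for each left-hand side."""
    totals = defaultdict(float)
    for lhs, _rhs, prob in productions:
        totals[lhs] += prob
    return dict(totals)


for lhs, total in totals_by_lhs(PRODUCTIONS).items():
    status = "ok" if math.isclose(total, 1.0) else "does not sum to 1"
    print(lhs, round(total, 6), status)
```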

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter05# python RecursiveCFG.py

Grammar with 12 productions (start state = ROOT)

ROOT -> WORD

WORD -> ' '

WORD -> '0' WORD '0'

WORD -> '1' WORD '1'

WORD -> '2' WORD '2'

WORD -> '3' WORD '3'

WORD -> '4' WORD '4'

WORD -> '5' WORD '5'

WORD -> '6' WORD '6'

WORD -> '7' WORD '7'

WORD -> '8' WORD '8'

WORD -> '9' WORD '9'

Palindrome : , Size : 0

Palindrome : 00, Size : 2

Palindrome : 0000, Size : 4

Palindrome : 0110, Size : 4

Palindrome : 0220, Size : 4

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter05# python Train3.py

[('Iphone', None), ('is', 'VBZ'), ('purchased', 'VBN'), ('by', 'IN'), ('Steve', 'NNP'), ('Jobs', 'NNP'), ('in', 'IN'), ('Bangalore', 'NNP'), ('Market', 'NNP')]

##Chapter06/Chunker.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter06# ls

Chunker.py ParsingChart.py ParsingDG.py ParsingRD.py ParsingSR.py SimpleChunker.py Training.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter06# python Chunker.py

Traceback (most recent call last):

File "Chunker.py", line 8, in <module>

chunks = nltk.ne_chunk(tags)

File "/opt/conda/lib/python3.6/site-packages/nltk/chunk/__init__.py", line 176, in ne_chunk

chunker = load(chunker_pickle)

File "/opt/conda/lib/python3.6/site-packages/nltk/data.py", line 836, in load

opened_resource = _open(resource_url)

File "/opt/conda/lib/python3.6/site-packages/nltk/data.py", line 954, in _open

return find(path_, path + ['']).open()

File "/opt/conda/lib/python3.6/site-packages/nltk/data.py", line 675, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource maxent_ne_chunker not found.

Please use the NLTK Downloader to obtain the resource:

>>> import nltk

>>> nltk.download('maxent_ne_chunker')

Searched in:

- '/root/nltk_data'

- '/usr/share/nltk_data'

- '/usr/local/share/nltk_data'

- '/usr/lib/nltk_data'

- '/usr/local/lib/nltk_data'

- '/opt/conda/nltk_data'

- '/opt/conda/share/nltk_data'

- '/opt/conda/lib/nltk_data'

- ''

**********************************************************************

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter06# vi Chunker.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter06# python Chunker.py

[nltk_data] Downloading package maxent_ne_chunker to

[nltk_data] /root/nltk_data...

[nltk_data] Unzipping chunkers/maxent_ne_chunker.zip.

Traceback (most recent call last):

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 80, in __load

try: root = nltk.data.find('{}/{}'.format(self.subdir, zip_name))

File "/opt/conda/lib/python3.6/site-packages/nltk/data.py", line 675, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource words not found.

Please use the NLTK Downloader to obtain the resource:

>>> import nltk

>>> nltk.download('words')

Searched in:

- '/root/nltk_data'

- '/usr/share/nltk_data'

- '/usr/local/share/nltk_data'

- '/usr/lib/nltk_data'

- '/usr/local/lib/nltk_data'

- '/opt/conda/nltk_data'

- '/opt/conda/share/nltk_data'

- '/opt/conda/lib/nltk_data'

**********************************************************************

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "Chunker.py", line 8, in <module>

chunks = nltk.ne_chunk(tags)

File "/opt/conda/lib/python3.6/site-packages/nltk/chunk/__init__.py", line 177, in ne_chunk

return chunker.parse(tagged_tokens)

File "/opt/conda/lib/python3.6/site-packages/nltk/chunk/named_entity.py", line 123, in parse

tagged = self._tagger.tag(tokens)

File "/opt/conda/lib/python3.6/site-packages/nltk/tag/sequential.py", line 63, in tag

tags.append(self.tag_one(tokens, i, tags))

File "/opt/conda/lib/python3.6/site-packages/nltk/tag/sequential.py", line 83, in tag_one

tag = tagger.choose_tag(tokens, index, history)

File "/opt/conda/lib/python3.6/site-packages/nltk/tag/sequential.py", line 632, in choose_tag

featureset = self.feature_detector(tokens, index, history)

File "/opt/conda/lib/python3.6/site-packages/nltk/tag/sequential.py", line 680, in feature_detector

return self._feature_detector(tokens, index, history)

File "/opt/conda/lib/python3.6/site-packages/nltk/chunk/named_entity.py", line 99, in _feature_detector

'en-wordlist': (word in self._english_wordlist()),

File "/opt/conda/lib/python3.6/site-packages/nltk/chunk/named_entity.py", line 50, in _english_wordlist

self._en_wordlist = set(words.words('en-basic'))

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 116, in __getattr__

self.__load()

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 81, in __load

except LookupError: raise e

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 78, in __load

root = nltk.data.find('{}/{}'.format(self.subdir, self.__name))

File "/opt/conda/lib/python3.6/site-packages/nltk/data.py", line 675, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource words not found.

Please use the NLTK Downloader to obtain the resource:

>>> import nltk

>>> nltk.download('words')

Searched in:

- '/root/nltk_data'

- '/usr/share/nltk_data'

- '/usr/local/share/nltk_data'

- '/usr/lib/nltk_data'

- '/usr/local/lib/nltk_data'

- '/opt/conda/nltk_data'

- '/opt/conda/share/nltk_data'

- '/opt/conda/lib/nltk_data'

**********************************************************************

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter06# vi Chunker.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter06# python Chunker.py

[nltk_data] Downloading package maxent_ne_chunker to

[nltk_data] /root/nltk_data...

[nltk_data] Package maxent_ne_chunker is already up-to-date!

[nltk_data] Downloading package words to /root/nltk_data...

[nltk_data] Unzipping corpora/words.zip.

(S

(PERSON Lalbagh/NNP)

(PERSON Botanical/NNP Gardens/NNP)

is/VBZ

a/DT

well/RB

known/VBN

botanical/JJ

garden/NN

in/IN

(GPE Bengaluru/NNP)

,/,

(GPE India/NNP)

./.)
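
The log above shows Chunker.py failing with `Resource words not found` and then succeeding once the `maxent_ne_chunker` and `words` packages have been downloaded. The exact edit made in vi is not shown here, so the following is only a minimal sketch of one way to do it. The helper name `ensure_nltk_resources` and the `CHUNKER_RESOURCES` table are hypothetical (not part of NLTK or the book's code); the only NLTK calls assumed are `nltk.data.find` and `nltk.download`.

```python
def ensure_nltk_resources(resources, find=None, download=None):
    """Download each missing NLTK resource and return the names downloaded.

    `resources` maps a package name (e.g. 'words') to its path under
    nltk_data (e.g. 'corpora/words').
    """
    if find is None or download is None:
        import nltk  # imported lazily so the helper can be exercised without NLTK
        find = find or nltk.data.find
        download = download or nltk.download
    downloaded = []
    for name, path in resources.items():
        try:
            find(path)  # raises LookupError when the resource is absent
        except LookupError:
            download(name)
            downloaded.append(name)
    return downloaded


# Resources named in the log for Chunker.py (hypothetical table for this sketch).
CHUNKER_RESOURCES = {
    "maxent_ne_chunker": "chunkers/maxent_ne_chunker",
    "words": "corpora/words",
}

# Typical use, placed before the call to nltk.ne_chunk(...):
# ensure_nltk_resources(CHUNKER_RESOURCES)
```

Unlike an unconditional `nltk.download(...)`, which prints "already up-to-date" on every run as in the log, this variant only contacts the downloader when a resource is actually missing.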

##Chapter06/ParsingChart.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter06# python ParsingChart.py

|.Bangal. is . the .capita. of .Karnat.|

|[------] . . . . .| [0:1] 'Bangalore'

|. [------] . . . .| [1:2] 'is'

|. . [------] . . .| [2:3] 'the'

|. . . [------] . .| [3:4] 'capital'

|. . . . [------] .| [4:5] 'of'

|. . . . . [------]| [5:6] 'Karnataka'

|[------] . . . . .| [0:1] NNP -> 'Bangalore' *

|[------> . . . . .| [0:1] T1 -> NNP * VBZ

|. [------] . . . .| [1:2] VBZ -> 'is' *

|[-------------] . . . .| [0:2] T1 -> NNP VBZ *

|[-------------> . . . .| [0:2] S -> T1 * T4

|. . [------] . . .| [2:3] DT -> 'the' *

|. . [------> . . .| [2:3] T2 -> DT * NN

|. . . [------] . .| [3:4] NN -> 'capital' *

|. . [-------------] . .| [2:4] T2 -> DT NN *

|. . [-------------> . .| [2:4] T4 -> T2 * T3

|. . . . [------] .| [4:5] IN -> 'of' *

|. . . . [------> .| [4:5] T3 -> IN * NNP

|. . . . . [------]| [5:6] NNP -> 'Karnataka' *

|. . . . . [------>| [5:6] T1 -> NNP * VBZ

|. . . . [-------------]| [4:6] T3 -> IN NNP *

|. . . . [-------------]| [4:6] T4 -> T3 *

|. . [---------------------------]| [2:6] T4 -> T2 T3 *

|[=========================================]| [0:6] S -> T1 T4 *

Total Edges : 24

(S

(T1 (NNP Bangalore) (VBZ is))

(T4 (T2 (DT the) (NN capital)) (T3 (IN of) (NNP Karnataka))))

Traceback (most recent call last):

File "ParsingChart.py", line 25, in <module>

tree.draw()

File "/opt/conda/lib/python3.6/site-packages/nltk/tree.py", line 690, in draw

draw_trees(self)

File "/opt/conda/lib/python3.6/site-packages/nltk/draw/tree.py", line 863, in draw_trees

TreeView(*trees).mainloop()

File "/opt/conda/lib/python3.6/site-packages/nltk/draw/tree.py", line 756, in __init__

self._top = Tk()

File "/opt/conda/lib/python3.6/tkinter/__init__.py", line 2020, in __init__

self.tk = _tkinter.create(screenName, baseName, className, interactive, wantobjects, useTk, sync, use)

_tkinter.TclError: no display name and no $DISPLAY environment variable
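
`tree.draw()` opens a Tk window, so inside a Linux container started without an X display it fails with the `TclError` shown above, even though the parse itself succeeded. A minimal sketch of a guard follows. The helpers `has_display` and `show_tree` are hypothetical names for this example, and `tree` is assumed to be an `nltk.Tree`, which provides both `draw()` and `pretty_print()`.

```python
import os


def has_display(environ=None):
    """Return True when a non-empty DISPLAY variable is set (X11 available)."""
    env = os.environ if environ is None else environ
    return bool(env.get("DISPLAY"))


def show_tree(tree, environ=None):
    """Draw the tree in a window if possible, otherwise print it as text."""
    if has_display(environ):
        tree.draw()
        return "drawn"
    tree.pretty_print()  # ASCII rendering, works without a display
    return "printed"
```

In ParsingChart.py this would replace the bare `tree.draw()` call with `show_tree(tree)`. The check is specific to X11 environments such as this container; it is not a general test for GUI availability on Windows or macOS.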

##Chapter06/SimpleChunker.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter06# python SimpleChunker.py

(S

(NP Ravi/NNP)

is/VBZ

(NP the/DT CEO/NNP)

of/IN

(NP a/DT Company/NNP)

./.)

(S

He/PRP

is/VBZ

very/RB

(NP powerful/JJ public/JJ speaker/NN)

also/RB

./.)

##Chapter06/Training.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter06# python Training.py

Traceback (most recent call last):

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 80, in __load

try: root = nltk.data.find('{}/{}'.format(self.subdir, zip_name))

File "/opt/conda/lib/python3.6/site-packages/nltk/data.py", line 675, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource conll2000 not found.

Please use the NLTK Downloader to obtain the resource:

>>> import nltk

>>> nltk.download('conll2000')

Searched in:

- '/root/nltk_data'

- '/usr/share/nltk_data'

- '/usr/local/share/nltk_data'

- '/usr/lib/nltk_data'

- '/usr/local/lib/nltk_data'

- '/opt/conda/nltk_data'

- '/opt/conda/share/nltk_data'

- '/opt/conda/lib/nltk_data'

**********************************************************************

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "Training.py", line 78, in <module>

test_regexp()

File "Training.py", line 48, in test_regexp

test_sents = conll2000.chunked_sents('test.txt', chunk_types=['NP'])

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 116, in __getattr__

self.__load()

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 81, in __load

except LookupError: raise e

File "/opt/conda/lib/python3.6/site-packages/nltk/corpus/util.py", line 78, in __load

root = nltk.data.find('{}/{}'.format(self.subdir, self.__name))

File "/opt/conda/lib/python3.6/site-packages/nltk/data.py", line 675, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource conll2000 not found.

Please use the NLTK Downloader to obtain the resource:

>>> import nltk

>>> nltk.download('conll2000')

Searched in:

- '/root/nltk_data'

- '/usr/share/nltk_data'

- '/usr/local/share/nltk_data'

- '/usr/lib/nltk_data'

- '/usr/local/lib/nltk_data'

- '/opt/conda/nltk_data'

- '/opt/conda/share/nltk_data'

- '/opt/conda/lib/nltk_data'

**********************************************************************

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter06# vi Training.py

(base) root@355403acf21a:/Natural-Language-Processing-with-Python-Cookbook/Chapter06# python Training.py

[nltk_data] Downloading package conll2000 to /root/nltk_data...

[nltk_data] Unzipping corpora/conll2000.zip.

ChunkParse score:

IOB Accuracy: 87.7%%

Precision: 70.6%%

Recall: 67.8%%

F-Measure: 69.2%%

ChunkParse score:

IOB Accuracy: 93.3%%

Precision: 82.3%%

Recall: 86.8%%

F-Measure: 84.5%%
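
The F-Measure lines in both score blocks are consistent with the harmonic mean of precision and recall, F = 2PR / (P + R). The following small check assumes this balanced definition (equal weight on precision and recall) and uses the rounded percentages copied from the output above; `f_measure` is a name chosen for this example.

```python
def f_measure(precision, recall):
    """Balanced F-measure: harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs in percent, copied from the two score blocks above.
for p, r in [(70.6, 67.8), (82.3, 86.8)]:
    print(f"P={p} R={r} F={f_measure(p, r):.1f}")
```

The two printed F values are 69.2 and 84.5, matching the reported scores.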

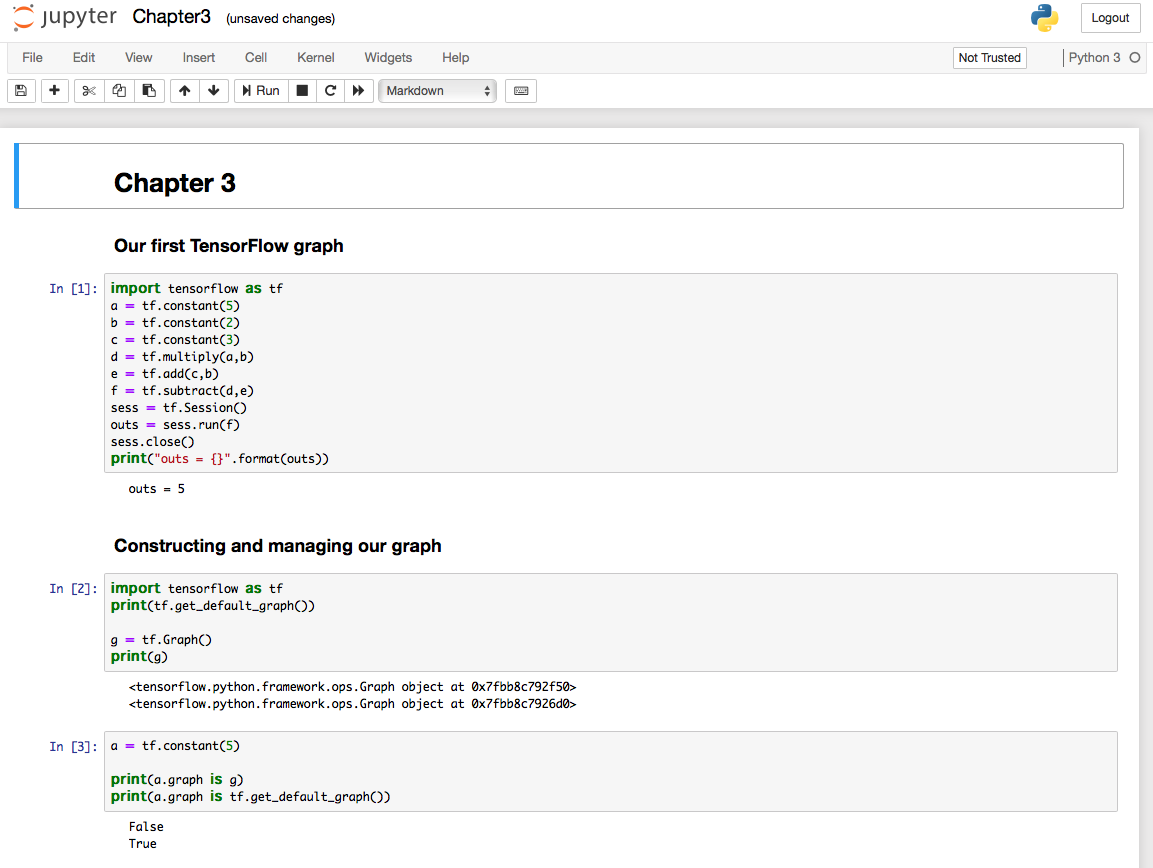

#Jupyter Notebook

# jupyter notebook --ip=0.0.0.0 --allow-root

[I 00:53:42.766 NotebookApp] JupyterLab extension loaded from /opt/conda/lib/python3.6/site-packages/jupyterlab

[I 00:53:42.768 NotebookApp] JupyterLab application directory is /opt/conda/share/jupyter/lab

[I 00:53:42.869 NotebookApp] Serving notebooks from local directory: /Oreilly-Learning-TensorFlow

[I 00:53:42.870 NotebookApp] The Jupyter Notebook is running at:

[I 00:53:42.870 NotebookApp] http://(3bf1f723168d or 127.0.0.1):8888/?token=59f9aea81386c391383ce20469943f0c5dcbd6e858b9c379

[I 00:53:42.870 NotebookApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).

[W 00:53:42.871 NotebookApp] No web browser found: could not locate runnable browser.

[C 00:53:42.871 NotebookApp]

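In your browser, open

localhost:8888

and, when the page asks for a token, copy and paste the string to the right of the = in

token=59f9aea81386c391383ce20469943f0c5dcbd6e858b9c379

(your token will differ).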
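
If you prefer not to copy the token by hand, it can be pulled out of the log line with a regular expression. This is a minimal sketch: `extract_token` is a hypothetical helper, the sample line is the one from the log above, and appending `?token=...` to the localhost URL is assumed to be accepted by the notebook server as it is in the logged URL.

```python
import re


def extract_token(log_line):
    """Return the value after 'token=' in a notebook log line, or None."""
    match = re.search(r"[?&]token=([0-9a-f]+)", log_line)
    return match.group(1) if match else None


line = ("[I 00:53:42.870 NotebookApp] http://(3bf1f723168d or 127.0.0.1):8888/"
        "?token=59f9aea81386c391383ce20469943f0c5dcbd6e858b9c379")
token = extract_token(line)
print(token)
print(f"http://localhost:8888/?token={token}")
```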

2. For those who want to build the docker image themselves

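From here on, I record the policy and the steps I followed to build the docker image you pulled above.

This is background material for using that image. You do not need it to continue running the examples in the book.

These are the steps to follow if you build the docker/anaconda environment yourself.

This is not a Dockerfile-based method. Sorry about that.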

docker

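A mechanism that lets you use Linux distributions such as ubuntu and debian in the same way from linux, windows, and mac os.

A nice point is that you can use it without changing the settings of the host OS.

Many people can use an environment with the same specification.

Both images officially provided by software developers and images tailored by users for convenience are available. Here I take an officially distributed image, tailor it myself, and make it available to others.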

python

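I have been doing my Deep Learning exercises in Python.

The reasons for using python are that many machine learning frameworks are available in python, and that statistical analysis tools such as R can also be used easily from python.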

anaconda

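python differs by version (2 and 3) and by distribution method.

I have been using python3 with Anaconda for the past year and a half.

I chose Anaconda because the statistical analysis libraries and Jupyter Notebook are included from the start.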

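Official docker distributions

There are official distributions of OSes such as ubuntu and debian, and of languages and toolchains such as gcc and anaconda.

By using these and registering the result on docker-hub, you can verify the quality of the official distribution and share a wide range of information, including the right to modify it. Here "official" means distributed officially by each software provider, not by docker itself.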

###docker pull

You use an official docker distribution by pulling it from its URL.

docker Anaconda

I use the image officially distributed by anaconda.

$ docker pull kaizenjapan/anaconda-keras

Using default tag: latest

latest: Pulling from continuumio/anaconda3

Digest: sha256:e07b9ca98ac1eeb1179dbf0e0bbcebd87701f8654878d6d8ce164d71746964d1

Status: Image is up to date for continuumio/anaconda3:latest

$ docker run -it -p 8888:8888 continuumio/anaconda3 /bin/bash

In practice, I pulled another image I had pushed earlier, which already used keras and tensorflow.

apt

(base) root@d8857ae56e69:/# apt update

(base) root@d8857ae56e69:/# apt install -y procps

(base) root@d8857ae56e69:/# apt install -y vim

(base) root@d8857ae56e69:/# apt install -y apt-utils

(base) root@d8857ae56e69:/# apt install sudo

Source (git)

(base) root@f19e2f06eabb:/# git clone https://github.com/PacktPublishing/Natural-Language-Processing-with-Python-Cookbook

conda

(base) root@f19e2f06eabb:/d# conda update --prefix /opt/conda anaconda

pip

(base) root@f19e2f06eabb:/d# pip install --upgrade pip

pip install PyPDF2

pip install docx

Registering on docker hub

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

caef766a99ff continuumio/anaconda3 "/usr/bin/tini -- /b…" 10 hours ago Up 10 hours 0.0.0.0:8888->8888/tcp sleepy_bassi

$ docker commit caef766a99ff kaizenjapan/anaconda-resheff

$ docker push kaizenjapan/anaconda-resheff
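
The steps above (find the running container with `docker ps`, then `docker commit` and `docker push`) can be sketched in Python as follows. This is only an illustration: `find_container_id` and `commit_and_push_commands` are hypothetical helpers, the sample text is the `docker ps` output shown above, and the commands are merely built as argument lists rather than executed.

```python
def find_container_id(ps_output, image):
    """Return the ID of the first container in `docker ps` output running `image`."""
    for line in ps_output.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) >= 2 and fields[1] == image:
            return fields[0]
    return None


def commit_and_push_commands(container_id, repository):
    """Build the argument lists for `docker commit` and `docker push`."""
    return [
        ["docker", "commit", container_id, repository],
        ["docker", "push", repository],
    ]


PS_OUTPUT = (
    "CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES\n"
    'caef766a99ff continuumio/anaconda3 "/usr/bin/tini -- /b…" 10 hours ago '
    "Up 10 hours 0.0.0.0:8888->8888/tcp sleepy_bassi\n"
)

container = find_container_id(PS_OUTPUT, "continuumio/anaconda3")
for cmd in commit_and_push_commands(container, "kaizenjapan/anaconda-resheff"):
    print(" ".join(cmd))
    # To actually run it: subprocess.run(cmd, check=True)
```

Replace the container ID and repository name with your own; pushing requires that you are logged in to docker hub under that account.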

References

Why do machine learning with docker: list of books and sources, in progress (target: 100)

https://qiita.com/kaizen_nagoya/items/ddd12477544bf5ba85e2

Machine learning with docker (1) with anaconda (1): 「ゼロから作るDeep Learning」 (Deep Learning from Scratch: theory and implementation of deep learning with Python) by Koki Saitoh

https://qiita.com/kaizen_nagoya/items/a7e94ef6dca128d035ab

Machine learning with docker (2) with anaconda (2): 「ゼロから作るDeep Learning2自然言語処理編」 (Deep Learning from Scratch 2: natural language processing) by Koki Saitoh

https://qiita.com/kaizen_nagoya/items/3b80dfc76933cea522c6

Machine learning with docker (3) with anaconda (3): 「直感Deep Learning」 by Antonio Gulli and Sujit Pal, chapters 1 and 2

https://qiita.com/kaizen_nagoya/items/483ae708c71c88419c32

Machine learning with docker (71), environment setup (1): docker, where do I start? Nothing but errors whatever I try.

https://qiita.com/kaizen_nagoya/items/690d806a4760d9b9e040

Machine learning with docker (72), environment setup (2): Docker for Windows

https://qiita.com/kaizen_nagoya/items/c4daa5cf52e9f0c2c002

Machine learning with docker (73), environment setup (3): docker/linux/macos bash scripts and ms-dos batch files

https://qiita.com/kaizen_nagoya/items/3f7b39110b7f303a5558

Machine learning with docker (74), environment setup (4): R, how many hurdles?

https://qiita.com/kaizen_nagoya/items/5fb44773bc38574bcf1c

Machine learning with docker (75), environment setup (5): managing docker-related files

https://qiita.com/kaizen_nagoya/items/4f03df9a42c923087b5d

Tried to run OpenCV from Python, was told libGL.so is missing, and solved it.

https://qiita.com/toshitanian/items/5da24c0c0bd473d514c8

Tips for plotting with matplotlib on the server side

https://qiita.com/TomokIshii/items/3a26ee4453f535a69e9e

Copying files between host and container in Docker

https://qiita.com/gologo13/items/7e4e404af80377b48fd5

Using file sharing with Docker for Mac

https://qiita.com/seijimomoto/items/1992d68de8baa7e29bb5

"Nagoya's IoT on Nagoya's OS": where and how should I start using Docker? TOPPERS/FMP on RaspberryPi with Macintosh edition, 5 hurdles

https://qiita.com/kaizen_nagoya/items/9c46c6da8ceb64d2d7af

The road to 64-bit CPUs and/or a resolution at age 64

https://qiita.com/kaizen_nagoya/items/cfb5ffa24ded23ab3f60

「ゼロから作るDeepLearning2自然言語処理編」: how to run a reading group (example)

https://qiita.com/kaizen_nagoya/items/025eb3f701b36209302e

Trying NVIDIA Docker on Ubuntu 16.04 LTS

https://blog.amedama.jp/entry/2017/04/03/235901

Ethernet article list, Ethernet(0)

https://qiita.com/kaizen_nagoya/items/88d35e99f74aefc98794

Wireshark list, wireshark(0), Ethernet(48)

https://qiita.com/kaizen_nagoya/items/fbed841f61875c4731d0

Wireless network (Wi-Fi), antenna (0): article list (118 of a target of 300)

https://qiita.com/kaizen_nagoya/items/5e5464ac2b24bd4cd001

C++ Support(0)

https://qiita.com/kaizen_nagoya/items/8720d26f762369a80514

Coding Rules(0) C Secure , MISRA and so on

https://qiita.com/kaizen_nagoya/items/400725644a8a0e90fbb0

Autosar Guidelines C++14 example code compile list(1-169)

https://qiita.com/kaizen_nagoya/items/8ccbf6675c3494d57a76

Error list (C/C++, python, bash...), Error(0)

https://qiita.com/kaizen_nagoya/items/48b6cbc8d68eae2c42b8

The 100 NLP knocks (言語処理100本ノック) with docker. Ideal for learning python.: 10+12

https://qiita.com/kaizen_nagoya/items/7e7eb7c543e0c18438c4

Small program tweaks (0), list: 4 items

https://qiita.com/kaizen_nagoya/items/296d87ef4bfd516bc394

List of lists (The directory of directories of mine.) Qiita(100)

https://qiita.com/kaizen_nagoya/items/7eb0e006543886138f39

Issues in the systems of government offices, schools, and public bodies (including NPOs), Government(0)

https://qiita.com/kaizen_nagoya/items/04ee6eaf7ec13d3af4c3

"Public order and morals" that programmers would do well to know

https://qiita.com/kaizen_nagoya/items/9fe7c0dfac2fbd77a945

LaTeX(0) list

https://qiita.com/kaizen_nagoya/items/e3f7dafacab58c499792

Automatic control and control engineering list (0)

https://qiita.com/kaizen_nagoya/items/7767a4e19a6ae1479e6b

Rust(0) list

https://qiita.com/kaizen_nagoya/items/5e8bb080ba6ca0281927

Kiyoshi Ogawa's final lecture, plan for a repeat of the final lecture, Ethernet(100) English(100) Safety(100)

https://qiita.com/kaizen_nagoya/items/e2df642e3951e35e6a53

Document history

ver. 0.10 first draft 20181024

Thank you very much for reading to the last sentence.

Please press the like icon 💚 and follow me for your happy life.