I set up an environment for running TensorFlow on a GPU. It took quite a bit of trial and error, so I have written the steps down as a procedure.

Environment

The environment I used is as follows.

- GPU
  - GT710
- OS
  - Ubuntu 14.04
- Software
  - Python 2.7
  - TensorFlow 1.1.0
  - CUDA 8.0
  - cuDNN 6.0 (for CUDA 8.0)

Procedure

Installing the NVIDIA GPU driver

Add the repository and list the drivers available for installation.

$ add-apt-repository ppa:xorg-edgers/ppa

$ apt-get update

$ apt-cache search 'nvidia-[0-9]+$'

nvidia-173 - NVIDIA legacy binary driver - version 173.14.39

nvidia-310 - Transitional package for nvidia-310

nvidia-319 - Transitional package for nvidia-319

nvidia-304 - NVIDIA legacy binary driver - version 304.135

nvidia-331 - Transitional package for nvidia-331

nvidia-340 - NVIDIA binary driver - version 340.102

nvidia-346 - Transitional package for nvidia-346

nvidia-367 - Transitional package for nvidia-375

nvidia-375 - NVIDIA binary driver - version 375.66

nvidia-352 - Transitional package for nvidia-375

nvidia-361 - NVIDIA binary driver - version 361.93.02

nvidia-343 - NVIDIA binary driver - version 343.19

Go to NVIDIA's driver download page, select your GPU, and check which driver version it offers.

In my case the version was 375.66, so I installed nvidia-375 and rebooted the OS.

$ apt-get install nvidia-375

$ reboot
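The version check above is done by eye. As a sketch of the same idea, the Python below scans `apt-cache search` output for the non-transitional package whose description carries the version shown on NVIDIA's download page. The function name `pick_driver_package` and the trimmed sample text are illustrative assumptions, not part of any tool.

```python
import re

# A few lines in the format printed by `apt-cache search 'nvidia-[0-9]+$'` above.
APT_OUTPUT = """\
nvidia-340 - NVIDIA binary driver - version 340.102
nvidia-367 - Transitional package for nvidia-375
nvidia-375 - NVIDIA binary driver - version 375.66
nvidia-352 - Transitional package for nvidia-375
"""

def pick_driver_package(apt_output, wanted_version):
    """Return the package whose description lists exactly `wanted_version`.

    Transitional packages are skipped automatically because their
    descriptions contain no "version X.Y" text.
    """
    pattern = re.compile(r"^(nvidia-\d+) - .*version (\S+)$")
    for line in apt_output.splitlines():
        match = pattern.match(line.strip())
        if match and match.group(2) == wanted_version:
            return match.group(1)
    return None

print(pick_driver_package(APT_OUTPUT, "375.66"))  # nvidia-375
```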

Once the OS is back up, check that the GPU is recognized.

$ nvidia-smi

Sun Jun 11 19:10:42 2017

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 375.66 Driver Version: 375.66 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

|===============================+======================+======================|

| 0 GeForce GT 710 Off | 0000:01:00.0 N/A | N/A |

| 50% 39C P8 N/A / N/A | 45MiB / 973MiB | N/A Default |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: GPU Memory |

| GPU PID Type Process name Usage |

|=============================================================================|

| 0 Not Supported |

+-----------------------------------------------------------------------------+

The GPU is listed correctly.

Unfortunately, on the GT710 the list of running processes seems to show only "Not Supported".
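If you want to script this check, here is a small sketch. It assumes the nvidia-smi header format shown above, extracts the driver version, and confirms that it belongs to the installed `nvidia-375` package. The helper names are hypothetical.

```python
import re

# Header line from the nvidia-smi output above (whitespace collapsed as shown).
SMI_HEADER = "| NVIDIA-SMI 375.66 Driver Version: 375.66 |"

def driver_version(smi_text):
    """Extract the 'Driver Version' field from nvidia-smi output, or None."""
    match = re.search(r"Driver Version:\s*([\d.]+)", smi_text)
    return match.group(1) if match else None

def matches_package(version, package):
    """True if, e.g., version '375.66' belongs to package 'nvidia-375'."""
    return version is not None and package == "nvidia-" + version.split(".")[0]

version = driver_version(SMI_HEADER)
print(version)                                 # 375.66
print(matches_package(version, "nvidia-375"))  # True
```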

Installing CUDA 8.0

Download the deb (local) installer from the CUDA download site and upload it to the server.

$ conda update conda

$ sudo dpkg -i cuda-repo-ubuntu1404-8-0-local-ga2_8.0.61-1_amd64.deb

$ sudo apt-get update

$ sudo apt-get install cuda -y

$ echo 'export CUDA_HOME=/usr/local/cuda-8.0' >> ~/.bashrc

$ echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:${CUDA_HOME}/lib64' >> ~/.bashrc

$ echo 'export PATH=$PATH:${CUDA_HOME}/bin' >> ~/.bashrc
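The `.bashrc` lines only take effect in a new shell or after `source ~/.bashrc`. The Python sketch below checks that the CUDA directories actually ended up on the search paths. It assumes the `/usr/local/cuda-8.0` location used above, and `missing_cuda_paths` is a hypothetical helper.

```python
import os

CUDA_HOME = "/usr/local/cuda-8.0"  # same location as in the .bashrc lines above

def missing_cuda_paths(env, cuda_home=CUDA_HOME):
    """Return the names of variables in `env` that lack the CUDA entry."""
    missing = []
    # Linux search paths are ':'-separated; an empty leading entry is harmless.
    if cuda_home + "/bin" not in env.get("PATH", "").split(":"):
        missing.append("PATH")
    if cuda_home + "/lib64" not in env.get("LD_LIBRARY_PATH", "").split(":"):
        missing.append("LD_LIBRARY_PATH")
    return missing

print(missing_cuda_paths(os.environ))  # [] once ~/.bashrc has been sourced
```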

Installing cuDNN 6.0 (for CUDA 8.0)

Next, download "cuDNN v6.0 Library for Linux" from the cuDNN download site and upload it to the server.

$ mkdir .cudnn

$ tar xvzf cudnn-8.0-linux-x64-v6.0.tgz

$ cp cuda/include/cudnn.h /usr/local/cuda-8.0/include

$ cp cuda/lib64/libcudnn* /usr/local/cuda-8.0/lib64

$ chmod a+r /usr/local/cuda-8.0/lib64/libcudnn*
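To confirm which cuDNN release was copied into the CUDA tree, you can read the version macros from `cudnn.h`. Headers of this generation define `CUDNN_MAJOR`, `CUDNN_MINOR` and `CUDNN_PATCHLEVEL`. The sketch below uses a hypothetical helper name and a made-up sample header.

```python
import re

def cudnn_version(header_text):
    """Return (major, minor, patch) from cudnn.h text, or None if a macro is missing."""
    parts = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        # Require whitespace after the name so CUDNN_VERSION etc. are not matched.
        match = re.search(r"#define\s+" + name + r"\s+(\d+)", header_text)
        if match is None:
            return None
        parts.append(int(match.group(1)))
    return tuple(parts)

# Hypothetical sample values; for the real header use
# open("/usr/local/cuda-8.0/include/cudnn.h").read()
SAMPLE = "#define CUDNN_MAJOR 6\n#define CUDNN_MINOR 0\n#define CUDNN_PATCHLEVEL 21\n"
print(cudnn_version(SAMPLE))  # (6, 0, 21)
```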

Installing Chainer

$ pip install chainer

Installing the GPU version of TensorFlow

This time I install TensorFlow (GPU version) 1.1.0.

$ pip install --upgrade https://storage.googleapis.com/tensorflow/linux/gpu/tensorflow_gpu-1.1.0-cp27-none-linux_x86_64.whl

tensorflow-gpu was installed successfully.

$ pip show tensorflow-gpu

Name: tensorflow-gpu

Version: 1.1.0

Summary: TensorFlow helps the tensors flow

Home-page: http://tensorflow.org/

Author: Google Inc.

Author-email: opensource@google.com

License: Apache 2.0

Location: /usr/local/lib/python2.7/dist-packages

Requires: six, protobuf, werkzeug, mock, numpy, wheel
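The same check can be scripted by turning the `Key: value` lines of `pip show` into a dictionary. The sketch below uses part of the output above as sample input, and `parse_pip_show` is a hypothetical name.

```python
# Part of the `pip show tensorflow-gpu` output above, used as sample input.
PIP_SHOW_OUTPUT = """\
Name: tensorflow-gpu
Version: 1.1.0
Home-page: http://tensorflow.org/
Location: /usr/local/lib/python2.7/dist-packages
"""

def parse_pip_show(text):
    """Map each 'Key: value' line to a dict entry (split on the first colon only)."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            info[key.strip()] = value.strip()
    return info

info = parse_pip_show(PIP_SHOW_OUTPUT)
print("{} {}".format(info["Name"], info["Version"]))  # tensorflow-gpu 1.1.0
```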

Testing

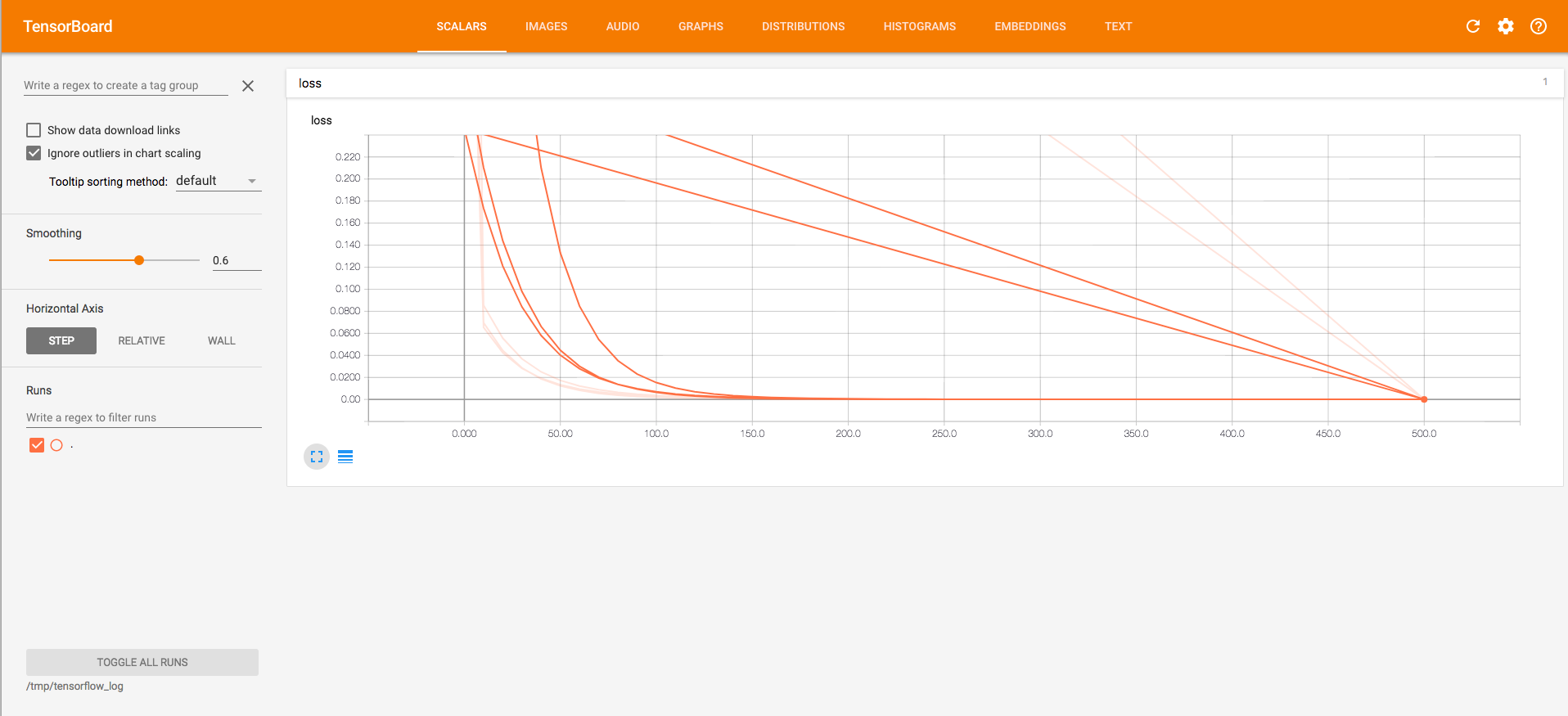

TensorBoard test run

I followed this article.

First, run a TensorFlow script. The log shows that the GPU is being used.

$ cd workspace/

$ vi test_tensorboard.py

$ python test_tensorboard.py

...

name: GeForce GT 710

major: 3 minor: 5 memoryClockRate (GHz) 0.954

pciBusID 0000:01:00.0

Total memory: 973.06MiB

Free memory: 917.94MiB

2017-06-11 18:39:00.647707: I tensorflow/core/common_runtime/gpu/gpu_device.cc:908] DMA: 0

2017-06-11 18:39:00.647716: I tensorflow/core/common_runtime/gpu/gpu_device.cc:918] 0: Y

2017-06-11 18:39:00.647726: I tensorflow/core/common_runtime/gpu/gpu_device.cc:977] Creating TensorFlow device (/gpu:0) -> (device: 0, name: GeForce GT 710, pci bus id: 0000:01:00.0)

step = 0 acc = 0.760875 W = [[ 0.65886593 0.49195457 -0.63697767]

[-0.63696146 -0.22959542 0.19458175]

[ 0.79449177 -0.0710125 -0.29113555]

[-0.76938224 0.56564403 -0.4688437 ]

[ 0.07713437 0.33765936 -0.62760568]] b = [ 0. 0. 0.]

...
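The log line that shows GPU use is `Creating TensorFlow device (/gpu:0) -> ...`. The sketch below pulls the device and card name out of such log text. The regular expression assumes the TensorFlow 1.1 log format shown above and may not match other versions. `gpu_devices` is a hypothetical helper.

```python
import re

LOG_LINE = ("2017-06-11 18:39:00.647726: I tensorflow/core/common_runtime/gpu/"
            "gpu_device.cc:977] Creating TensorFlow device (/gpu:0) -> "
            "(device: 0, name: GeForce GT 710, pci bus id: 0000:01:00.0)")

DEVICE_RE = re.compile(
    r"Creating TensorFlow device \((/gpu:\d+)\) -> \(device: (\d+), name: ([^,]+),")

def gpu_devices(log_text):
    """Return (tensorflow_device, card_name) pairs found in the log text."""
    return [(m.group(1), m.group(3)) for m in DEVICE_RE.finditer(log_text)]

print(gpu_devices(LOG_LINE))  # [('/gpu:0', 'GeForce GT 710')]
```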

Once the TensorFlow run has finished, start the server.

Opening "http://:6006" in a browser shows the dashboard as expected.

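Neural Style with TensorFlow

Neural Style transforms a photograph by applying the style of a painting to it.

Source: https://github.com/jcjohnson/neural-style

I chose this as the test purely out of personal interest.

If you want to know about the underlying algorithm, this article should be a good reference.

Many people have published their own variants on GitHub and elsewhere. This time I cloned the source code from here (anishathalye/neural-style) with git clone and used that.

On the CPU, the run took about 3 hours and used up to about 1 GB of memory.

Let's see how much faster it is on the GPU.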

Total memory: 973.06MiB

Free memory: 917.94MiB

2017-06-12 01:05:52.124247: I tensorflow/core/common_runtime/gpu/gpu_device.cc:908] DMA: 0

2017-06-12 01:05:52.124255: I tensorflow/core/common_runtime/gpu/gpu_device.cc:918] 0: Y

2017-06-12 01:05:52.124269: I tensorflow/core/common_runtime/gpu/gpu_device.cc:977] Creating TensorFlow device (/gpu:0) -> (device: 0, name: GeForce GT 710, pci bus id: 0000:01:00.0)

2017-06-12 01:05:59.972866: I tensorflow/core/common_runtime/gpu/gpu_device.cc:977] Creating TensorFlow device (/gpu:0) -> (device: 0, name: GeForce GT 710, pci bus id: 0000:01:00.0)

2017-06-12 01:06:23.256214: I tensorflow/core/common_runtime/gpu/gpu_device.cc:977] Creating TensorFlow device (/gpu:0) -> (device: 0, name: GeForce GT 710, pci bus id: 0000:01:00.0)

Optimization started...

Iteration 1/1000

2017-06-12 01:06:26.068541: W tensorflow/core/common_runtime/bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 869.06MiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

2017-06-12 01:06:30.749864: W tensorflow/core/common_runtime/bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 435.38MiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

2017-06-12 01:06:33.123894: W tensorflow/core/common_runtime/bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 140.36MiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

2017-06-12 01:06:33.989175: W tensorflow/core/common_runtime/bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 217.69MiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

2017-06-12 01:06:33.989209: W tensorflow/core/common_runtime/bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 278.55MiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

...

Iteration 1/1000

2017-06-12 00:02:09.760816: W tensorflow/core/common_runtime/bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 869.06MiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

...

2017-06-12 00:02:28.112595: I tensorflow/core/common_runtime/bfc_allocator.cc:643] Bin (268435456): Total Chunks: 0, Chunks in use: 0 0B allocated for chunks. 0B client-requested for chunks. 0B in use in bin. 0B client-requested in use in bin.

2017-06-12 00:02:28.112610: I tensorflow/core/common_runtime/bfc_allocator.cc:660] Bin for 24.19MiB was 16.00MiB, Chunk State:

2017-06-12 00:02:28.112624: I tensorflow/core/common_runtime/bfc_allocator.cc:678] Chunk at 0x401060000 of size 1280

2017-06-12 00:02:28.112636: I tensorflow/core/common_runtime/bfc_allocator.cc:678] Chunk at 0x401060500 of size 1280

2017-06-12 00:02:28.112645: I tensorflow/core/common_runtime/bfc_allocator.cc:678] Chunk at 0x401060a00 of size 1280

2017-06-12 00:02:28.112655: I tensorflow/core/common_runtime/bfc_allocator.cc:678] Chunk at 0x401060f00 of size 256

...

2017-06-12 00:02:28.114124: I tensorflow/core/common_runtime/bfc_allocator.cc:696] 3 Chunks of size 50724864 totalling 145.12MiB

2017-06-12 00:02:28.114138: I tensorflow/core/common_runtime/bfc_allocator.cc:696] 3 Chunks of size 101253120 totalling 289.69MiB

2017-06-12 00:02:28.114181: I tensorflow/core/common_runtime/bfc_allocator.cc:700] Sum Total of in-use chunks: 713.83MiB

2017-06-12 00:02:28.114200: I tensorflow/core/common_runtime/bfc_allocator.cc:702] Stats:

Limit: 752812032

InUse: 748501248

MaxInUse: 748501248

NumAllocs: 156

MaxAllocSize: 280330240

2017-06-12 00:02:28.114230: W tensorflow/core/common_runtime/bfc_allocator.cc:277] ***************************************************************************************************x

2017-06-12 00:02:28.114257: W tensorflow/core/framework/op_kernel.cc:1152] Resource exhausted: OOM when allocating tensor with shape[1,256,129,192]

Traceback (most recent call last):

File "neural_style.py", line 206, in <module>

main()

File "neural_style.py", line 177, in main

checkpoint_iterations=options.checkpoint_iterations

File "/root/neural-style/stylize.py", line 148, in stylize

train_step.run()

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/framework/ops.py", line 1552, in run

_run_using_default_session(self, feed_dict, self.graph, session)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/framework/ops.py", line 3776, in _run_using_default_session

session.run(operation, feed_dict)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/client/session.py", line 778, in run

run_metadata_ptr)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/client/session.py", line 982, in _run

feed_dict_string, options, run_metadata)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/client/session.py", line 1032, in _do_run

target_list, options, run_metadata)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/client/session.py", line 1052, in _do_call

raise type(e)(node_def, op, message)

tensorflow.python.framework.errors_impl.ResourceExhaustedError: OOM when allocating tensor with shape[1,256,129,192]

[[Node: Conv2D_7 = Conv2D[T=DT_FLOAT, data_format="NHWC", padding="SAME", strides=[1, 1, 1, 1], use_cudnn_on_gpu=true, _device="/job:localhost/replica:0/task:0/gpu:0"](Relu_6, Const_7)]]

Caused by op u'Conv2D_7', defined at:

File "neural_style.py", line 206, in <module>

main()

File "neural_style.py", line 177, in main

checkpoint_iterations=options.checkpoint_iterations

File "/root/neural-style/stylize.py", line 89, in stylize

net = vgg.net_preloaded(vgg_weights, image, pooling)

File "/root/neural-style/vgg.py", line 42, in net_preloaded

current = _conv_layer(current, kernels, bias)

File "/root/neural-style/vgg.py", line 54, in _conv_layer

padding='SAME')

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/ops/gen_nn_ops.py", line 403, in conv2d

data_format=data_format, name=name)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/framework/op_def_library.py", line 768, in apply_op

op_def=op_def)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/framework/ops.py", line 2336, in create_op

original_op=self._default_original_op, op_def=op_def)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/framework/ops.py", line 1228, in __init__

self._traceback = _extract_stack()

ResourceExhaustedError (see above for traceback): OOM when allocating tensor with shape[1,256,129,192]

[[Node: Conv2D_7 = Conv2D[T=DT_FLOAT, data_format="NHWC", padding="SAME", strides=[1, 1, 1, 1], use_cudnn_on_gpu=true, _device="/job:localhost/replica:0/task:0/gpu:0"](Relu_6, Const_7)]]
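The numbers in this log are consistent with each other. A float32 (`DT_FLOAT`, 4 bytes per element) tensor of shape `[1,256,129,192]` needs about 24.19 MiB, which matches the "Bin for 24.19MiB" line. Adding that to the 748501248 bytes already in use exceeds the 752812032-byte limit. The arithmetic as a short Python sketch (`tensor_bytes` is a hypothetical helper):

```python
from functools import reduce
import operator

MIB = 1024 * 1024

def tensor_bytes(shape, bytes_per_element=4):
    """Bytes needed for a dense tensor; DT_FLOAT (float32) uses 4 bytes per element."""
    return reduce(operator.mul, shape, 1) * bytes_per_element

request = tensor_bytes([1, 256, 129, 192])  # shape from the OOM message
limit = 752812032                          # "Limit:" from the allocator stats
in_use = 748501248                         # "InUse:" from the allocator stats

print(round(float(request) / MIB, 2))      # 24.19
print(in_use + request > limit)            # True: the allocation cannot fit
```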

Watching GPU memory usage with the nvidia-smi command every 5 seconds, it looks like the card really is hitting its physical limit.

45MiB / 973MiB

45MiB / 973MiB

45MiB / 973MiB

774MiB / 973MiB

774MiB / 973MiB

774MiB / 973MiB

774MiB / 973MiB

774MiB / 973MiB

774MiB / 973MiB

774MiB / 973MiB

828MiB / 973MiB

842MiB / 973MiB

842MiB / 973MiB

842MiB / 973MiB

45MiB / 973MiB
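The sketch below summarizes readings like these, using the values above. The text does not show how they were collected; `nvidia-smi -l 5`, which repeats the output every 5 seconds, is one way to produce similar readings. The parser assumes the `usedMiB / totalMiB` format, and the helper names are hypothetical.

```python
import re

# Readings copied from the list above (used / total).
SAMPLES = ["45MiB / 973MiB", "774MiB / 973MiB", "828MiB / 973MiB",
           "842MiB / 973MiB", "842MiB / 973MiB", "45MiB / 973MiB"]

def parse_usage(sample):
    """Split a 'usedMiB / totalMiB' reading into two integers."""
    match = re.match(r"\s*(\d+)MiB\s*/\s*(\d+)MiB", sample)
    return int(match.group(1)), int(match.group(2))

def peak_usage(samples):
    """Return (used, total, percent) for the highest reading."""
    used, total = max(parse_usage(s) for s in samples)
    return used, total, 100.0 * used / total

used, total, percent = peak_usage(SAMPLES)
print("peak {} / {} MiB ({:.1f}%)".format(used, total, percent))  # peak 842 / 973 MiB (86.5%)
```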

Capping TensorFlow's GPU memory at 80%, as shown below, got rid of the "ran out of memory" error.

import tensorflow as tf

config = tf.ConfigProto(
    gpu_options=tf.GPUOptions(
        per_process_gpu_memory_fraction=0.8  # use at most 80% of the GPU's memory
    )
)
sess = tf.Session(config=config)
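For scale, 80% of the 973.06 MiB total reported in the log is about 778.4 MiB. The allocator limit in the failing run was 752812032 bytes, about 717.94 MiB. The sketch below only does this arithmetic. It assumes the fraction is applied to the total memory figure, which is an assumption about TensorFlow's internals rather than something shown in the log.

```python
MIB = 1024 * 1024

total_mib = 973.06           # "Total memory" in the TensorFlow log
old_limit_bytes = 752812032  # "Limit" in the allocator stats of the failing run
fraction = 0.8               # per_process_gpu_memory_fraction from the config above

# Assumption: the fraction is applied to the total memory figure.
capped_mib = fraction * total_mib
print(round(capped_mib, 1))                    # 778.4
print(round(old_limit_bytes / float(MIB), 2))  # 717.94
```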

Running the same job as on the CPU took about 4 hours!

That's slower than on the CPU...
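Here is the same comparison per iteration. It assumes both runs used the 1000 iterations shown in the log, and `seconds_per_iteration` is a hypothetical helper.

```python
ITERATIONS = 1000  # "Iteration 1/1000" in the log

def seconds_per_iteration(hours, iterations=ITERATIONS):
    """Average wall-clock seconds per optimization step."""
    return hours * 3600.0 / iterations

cpu = seconds_per_iteration(3)  # about 3 hours on the CPU
gpu = seconds_per_iteration(4)  # about 4 hours on the GT710
print("CPU {:.1f} s/iter, GPU {:.1f} s/iter, GPU/CPU {:.2f}x".format(cpu, gpu, gpu / cpu))
# CPU 10.8 s/iter, GPU 14.4 s/iter, GPU/CPU 1.33x
```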

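Hardware required for TensorFlow

While searching around, I found an article titled "Deep learningに必須なハード:GPU" (GPU: essential hardware for deep learning).

According to that article, TensorFlow requires a GPU with a CC (Compute Capability) of 3.0 or higher.

You can look up a GPU's CC value on NVIDIA's website.

The GT710 is... "3.5"!

So for practical use, maybe something like "5.0" or higher is preferable?

Maybe I'll buy something like a GTX 1060 at some point...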
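The compute capability also appears in the TensorFlow log (`major: 3 minor: 5`). The sketch below reads it and compares it against two thresholds taken from this text: the 3.0 minimum from the article and the 5.0 figure guessed at above. `compute_capability` is a hypothetical helper.

```python
import re

def compute_capability(log_text):
    """Return (major, minor) from a 'major: X minor: Y' log line, or None."""
    match = re.search(r"major:\s*(\d+)\s+minor:\s*(\d+)", log_text)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))

cc = compute_capability("major: 3 minor: 5 memoryClockRate (GHz) 0.954")
print(cc)            # (3, 5)
print(cc >= (3, 0))  # True: meets the 3.0 minimum cited above
print(cc >= (5, 0))  # False: below the 5.0 guessed at above
```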

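References

- UbuntuでNVIDIAのドライバをインストールする (Installing the NVIDIA driver on Ubuntu)

- p2インスタンス上にCUDA, cuDNNを用いたChainer環境を構築する (Building a Chainer environment with CUDA and cuDNN on a p2 instance)

- [TF]Tensorboardを使って学習結果をVisualizationしてみた (Visualizing training results with TensorBoard)

- anishathalye/neural-style

- TensorflowでGPUを制限・無効化する (Limiting and disabling the GPU in TensorFlow)

- Deep learningに必須なハード:GPU (GPU: essential hardware for deep learning)

- Neural Style Transfer: Prismaの背景技術を解説する (Neural Style Transfer: explaining the technology behind Prisma)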