Introduction

This is the day-19 article of the HoloLens Advent Calendar 2020.

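Last time, saying "文字を読んで" ("read the text") made the app extract text from an image and read it aloud. This time, I used Custom Vision to detect coins so the app can tell you how much money it sees: "ヨンシル、これいくら?" ("Yonshiru, how much is this?")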

Development Environment

- Azure

- Custom Vision

- Speech SDK 1.14.0

- Unity 2019.4.1f1

- MRTK 2.5.1

- Windows 10 PC

- HoloLens2

Setup

1. First, complete everything in the previous article.

2. Start by training Custom Vision to recognize coins. I only trained on the coins I had on hand: 1-yen, 10-yen, and 100-yen.

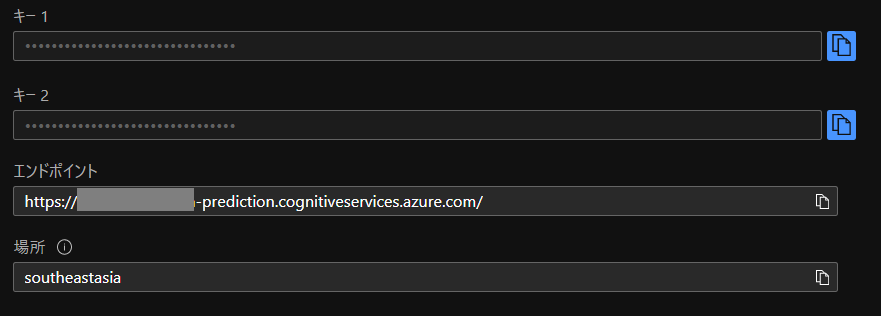

3. Create a "Custom Vision" resource from the Azure portal and note down its key.


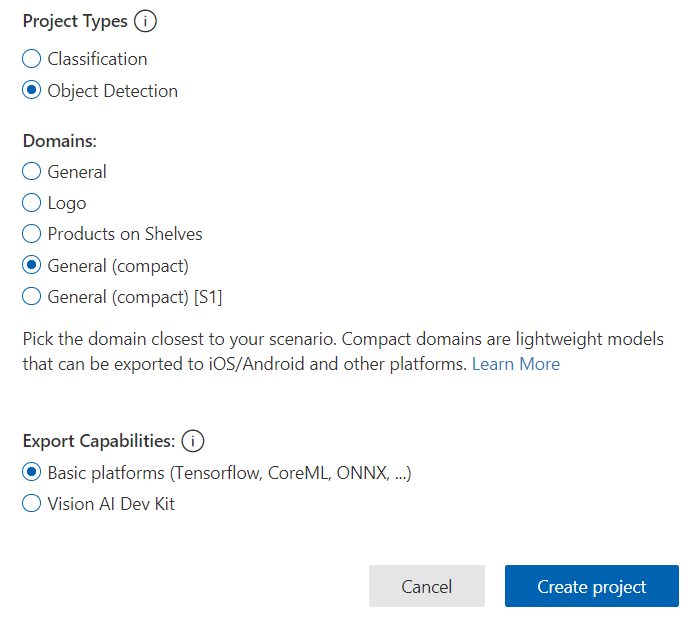

4. Sign in to Custom Vision and create a new project. Set the project type to Object Detection, choose the General (compact) domain so the trained model can also be exported for edge inference, and set Export Capabilities to Basic platforms.


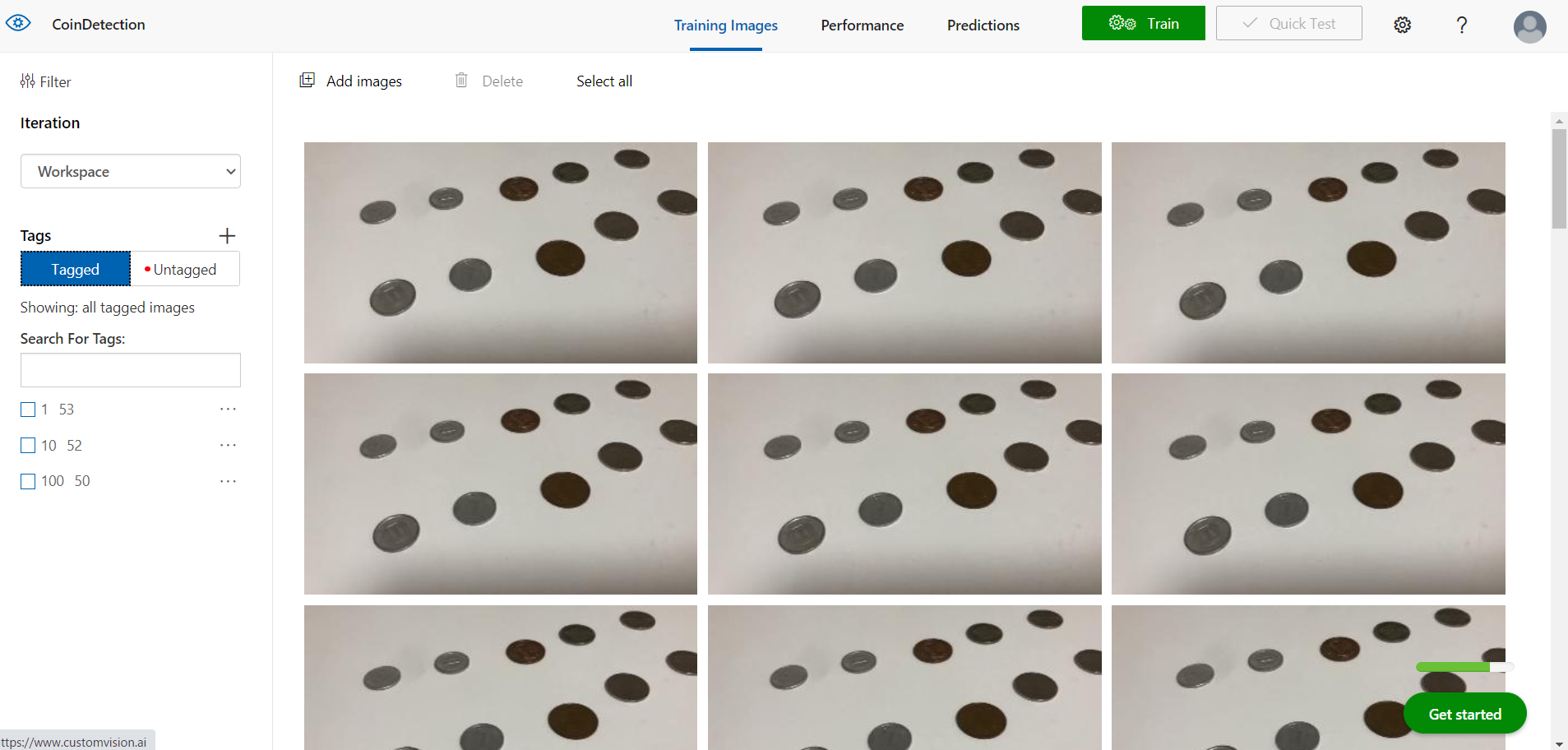

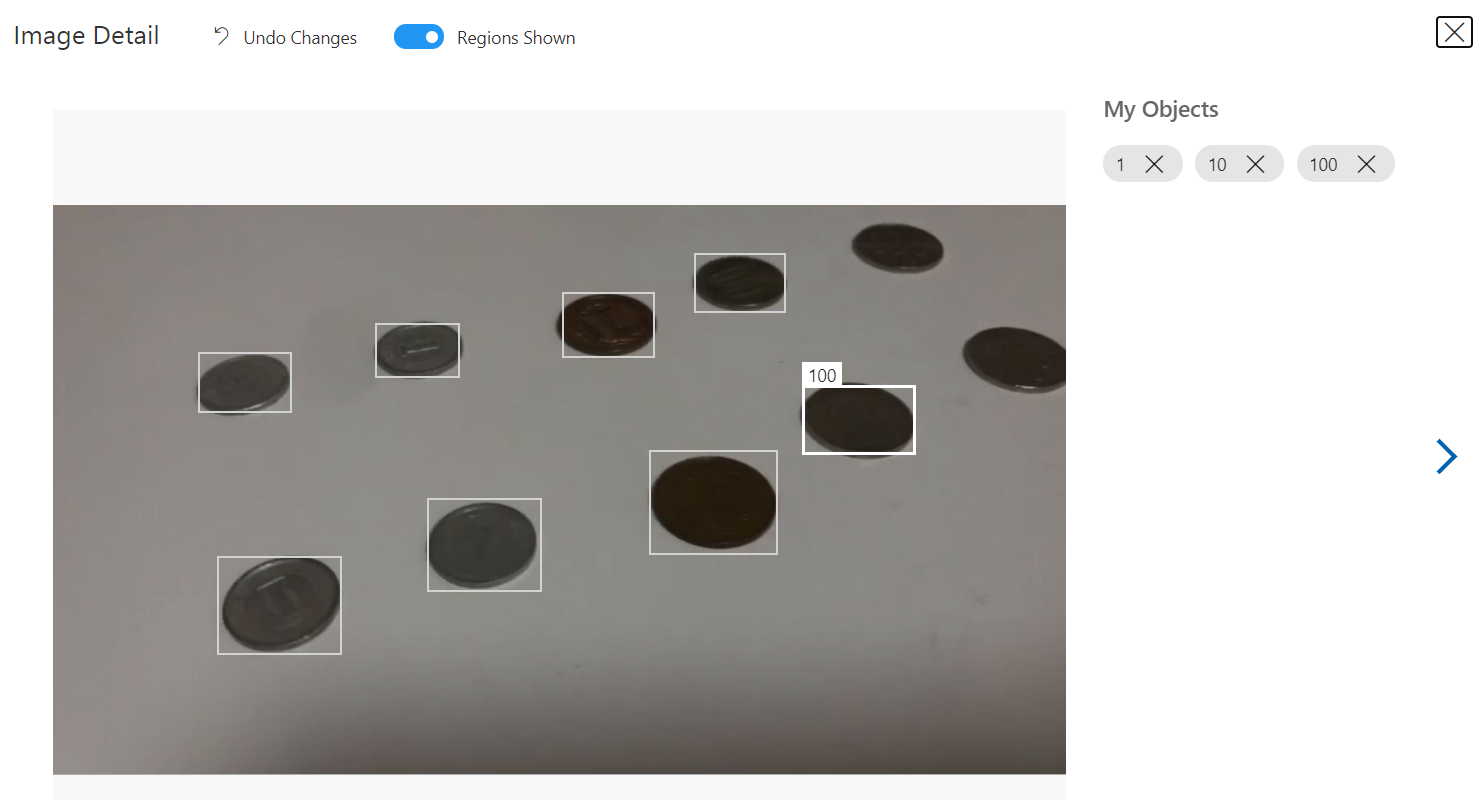

5. Photograph the coins, upload the photos as training data, and tag them.


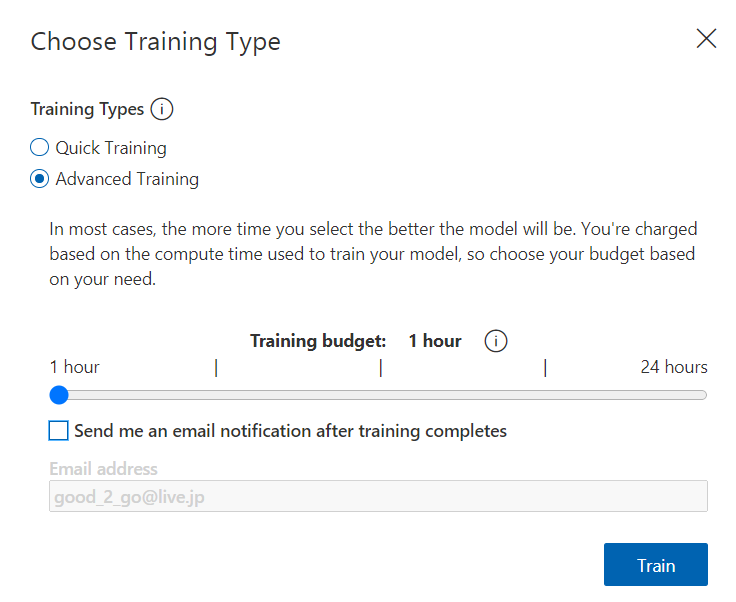

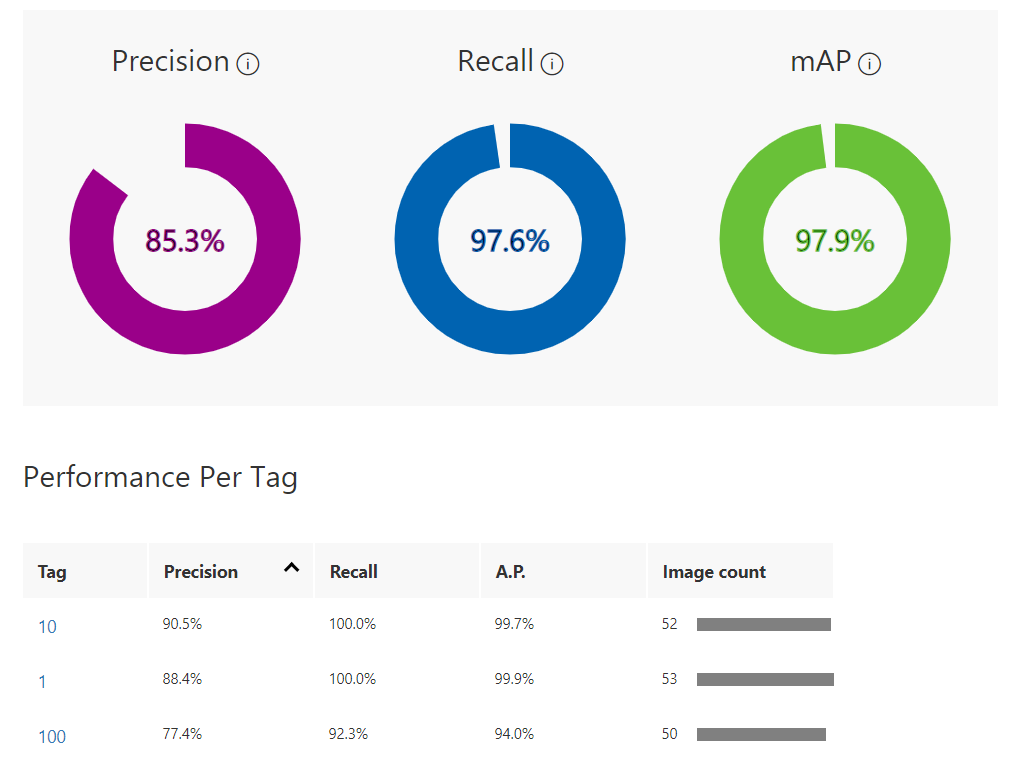

6. I trained the model for one hour using Advanced Training.

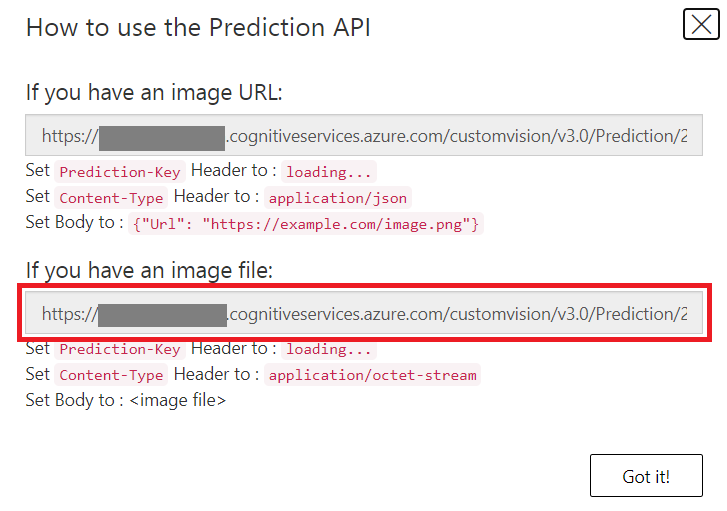

7. Here are the training results. Publish the model and note down the prediction endpoint URL for image files.


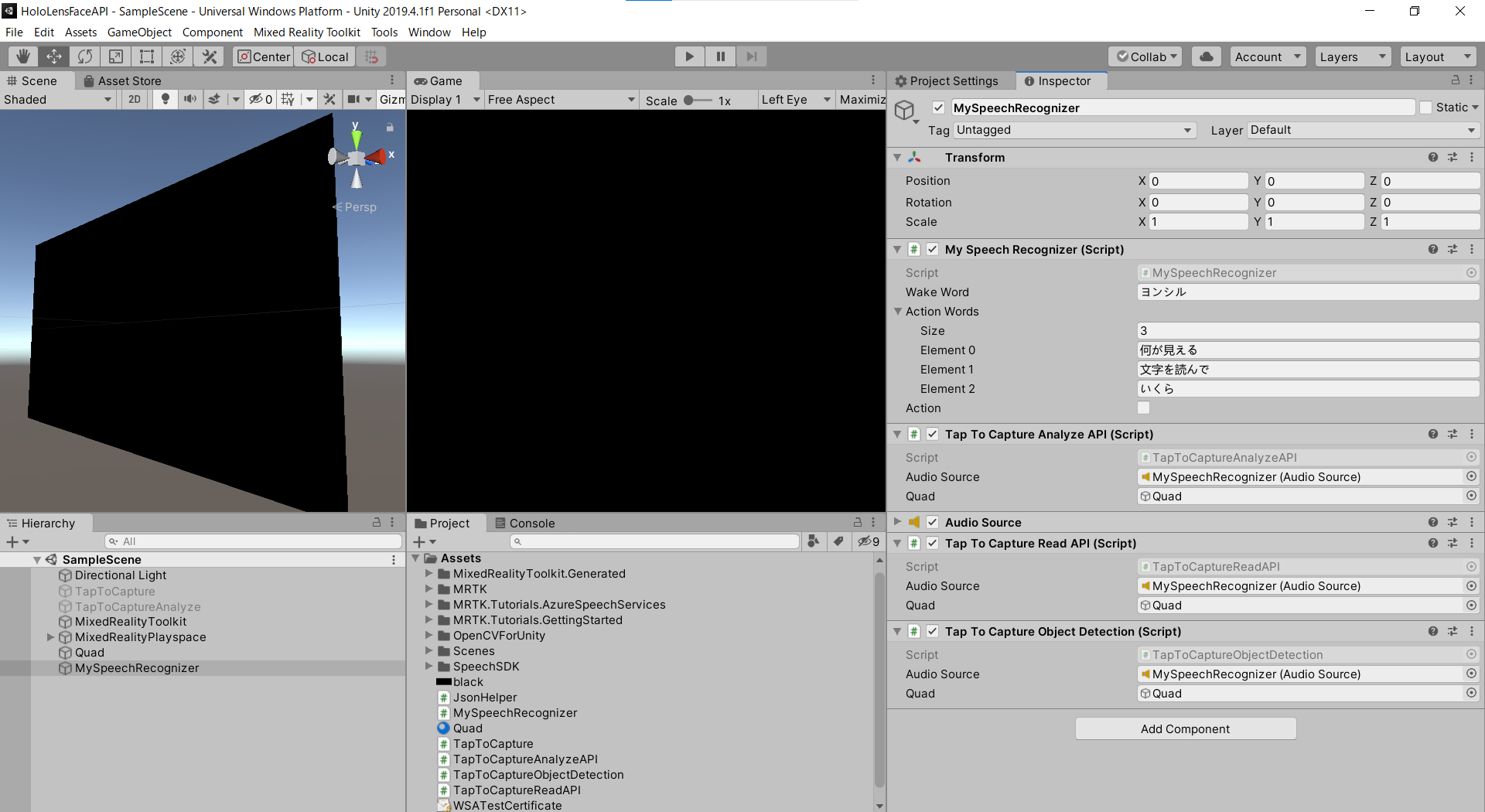

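8. Next, set up the Unity project. Add "いくら" ("how much") to the Action Words of MySpeechRecognizer from the previous article, then use Add Component to attach a new script, TapToCaptureObjectDetection.cs. When "いくら" is recognized, the app captures an image, runs object detection, and reads the result aloud.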

9. Edit the Update function in MySpeechRecognizer.cs as follows, so that recognizing "いくら" calls the AirTap function in TapToCaptureObjectDetection.cs.

```csharp
async void Update()
{
    if (recognizedString != "")
    {
        // Debug.Log(recognizedString);
        if (action)
        {
            // The wake word was heard earlier; look for an action word in the recognized text.
            foreach (string ActionWord in ActionWords)
            {
                if (recognizedString.ToLower().Contains(ActionWord.ToLower()))
                {
                    Debug.Log("Action");
                    if (ActionWord == "何が見える") // "What do you see?"
                    {
                        Debug.Log("Analyze Image");
                        this.GetComponent<TapToCaptureAnalyzeAPI>().AirTap();
                    }
                    else if (ActionWord == "文字を読んで") // "Read the text"
                    {
                        Debug.Log("Read");
                        this.GetComponent<TapToCaptureReadAPI>().AirTap();
                    }
                    else if (ActionWord == "いくら") // "How much?" (added in this article)
                    {
                        Debug.Log("Custom Vision");
                        this.GetComponent<TapToCaptureObjectDetection>().AirTap();
                    }
                    action = false;
                    break; // handle only the first matching action word
                }
            }
        }
        else if (recognizedString.ToLower().Contains(WakeWord.ToLower()))
        {
            Debug.Log("Wake");
            // Answer "はい" ("Yes?") and start waiting for an action word.
            await this.GetComponent<TapToCaptureAnalyzeAPI>().SynthesizeAudioAsync("はい");
            action = true;
        }
    }
}
```
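The if/else chain above gains one branch for every new command. As an alternative design (a sketch, not the author's code), the action words can be kept in a lookup table that maps each word to a handler. `ActionDispatcher`, `Register`, and `TryDispatch` are hypothetical names, and the sketch is plain C# with no Unity dependency:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: maps spoken action words to the handlers they trigger.
public class ActionDispatcher
{
    // A list (not a dictionary) keeps registration order, so the first
    // registered word wins when several words appear in the same utterance.
    private readonly List<KeyValuePair<string, Action>> handlers =
        new List<KeyValuePair<string, Action>>();

    public void Register(string actionWord, Action handler)
    {
        handlers.Add(new KeyValuePair<string, Action>(actionWord, handler));
    }

    // Runs the first handler whose action word occurs in the recognized text
    // (case-insensitive). Returns false when no action word matched.
    public bool TryDispatch(string recognizedText)
    {
        foreach (var pair in handlers)
        {
            if (recognizedText.IndexOf(pair.Key, StringComparison.OrdinalIgnoreCase) >= 0)
            {
                pair.Value();
                return true;
            }
        }
        return false;
    }
}
```

In MySpeechRecognizer, the registrations would be made once, for example in Start, with calls such as `dispatcher.Register("いくら", () => GetComponent<TapToCaptureObjectDetection>().AirTap());`, and Update would call `TryDispatch(recognizedString)` in place of the if/else chain.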

The full TapToCaptureObjectDetection.cs script is shown below.

```csharp
using System.Collections;
using System.Collections.Generic;
using System.Linq;
using System;
using UnityEngine;
using Microsoft.MixedReality.Toolkit.Utilities;
using System.Threading.Tasks;
using OpenCVForUnity.CoreModule;
using OpenCVForUnity.UnityUtils;
using OpenCVForUnity.ImgprocModule;
// SpeechSDK: begin
using System.IO;
using System.Text;
using Microsoft.CognitiveServices.Speech;
using Microsoft.CognitiveServices.Speech.Audio;
// SpeechSDK: end

public class TapToCaptureObjectDetection : MonoBehaviour
{
    // Custom Vision: begin
    private string cv_endpoint = "<Insert Your Prediction URL>";
    private string cv_subscription_key = "<Insert Your Key>";

    // Classes mirroring the JSON returned by the prediction endpoint (parsed with JsonUtility).
    [System.Serializable]
    public class CustomVisionResult
    {
        public string id;
        public string project;
        public string iteration;
        public string created;
        public Predictions[] predictions;

        // https://baba-s.hatenablog.com/entry/2016/01/20/100000
        public override string ToString()
        {
            return JsonUtility.ToJson(this, true);
        }
    }

    [System.Serializable]
    public class Predictions
    {
        public float probability;
        public string tagId;
        public string tagName;
        public BoundingBox boundingBox;
    }

    [System.Serializable]
    public class BoundingBox
    {
        // Normalized coordinates (0 to 1), measured from the top-left of the image.
        public float left;
        public float top;
        public float width;
        public float height;
    }

    // Intersection over union (IoU) of two boxes
    // https://mathwords.net/iou
    public float CalculateIOU(BoundingBox box0, BoundingBox box1)
    {
        var x1 = Math.Max(box0.left, box1.left);
        var y1 = Math.Max(box0.top, box1.top);
        var x2 = Math.Min(box0.left + box0.width, box1.left + box1.width);
        var y2 = Math.Min(box0.top + box0.height, box1.top + box1.height);
        var w = Math.Max(0, x2 - x1);
        var h = Math.Max(0, y2 - y1);
        return w * h / ((box0.width * box0.height) + (box1.width * box1.height) - (w * h));
    }
    // Custom Vision: end

    // SpeechSDK: begin
    public AudioSource audioSource;

    public async Task SynthesizeAudioAsync(string text)
    {
        var config = SpeechConfig.FromSubscription("YourSubscriptionKey", "YourServiceRegion");
        // If the null AudioConfig is omitted, audio plays from the PC speakers but not on HoloLens,
        // so the audio is returned as data and played through Unity below.
        using (var synthesizer = new SpeechSynthesizer(config, null))
        {
            string ssml = "<speak version=\"1.0\" xmlns=\"http://www.w3.org/2001/10/synthesis\" xml:lang=\"ja-JP\"> <voice name=\"ja-JP-Ichiro\">" + text + "</voice> </speak>";
            // Starts speech synthesis, and returns after a single utterance is synthesized.
            // Awaiting (instead of .Result) avoids blocking Unity's main thread.
            // using (var result = await synthesizer.SpeakTextAsync(text))
            using (var result = await synthesizer.SpeakSsmlAsync(ssml))
            {
                // Checks result.
                if (result.Reason == ResultReason.SynthesizingAudioCompleted)
                {
                    // Native playback is not supported on Unity yet (currently only supported on Windows/Linux Desktop).
                    // Use the Unity API to play audio here as a short term solution.
                    // Native playback support will be added in the future release.
                    var sampleCount = result.AudioData.Length / 2;
                    var audioData = new float[sampleCount];
                    for (var i = 0; i < sampleCount; ++i)
                    {
                        audioData[i] = (short)(result.AudioData[i * 2 + 1] << 8 | result.AudioData[i * 2]) / 32768.0F;
                    }
                    // The output audio format is 16K 16bit mono
                    var audioClip = AudioClip.Create("SynthesizedAudio", sampleCount, 1, 16000, false);
                    audioClip.SetData(audioData, 0);
                    audioSource.clip = audioClip;
                    audioSource.Play();
                }
                else if (result.Reason == ResultReason.Canceled)
                {
                    var cancellation = SpeechSynthesisCancellationDetails.FromResult(result);
                    Debug.LogWarning($"CANCELED: Reason={cancellation.Reason} ErrorDetails={cancellation.ErrorDetails}");
                }
            }
        }
    }
    // SpeechSDK: end

    public GameObject quad; // shows the captured image with detections drawn on it
    UnityEngine.Windows.WebCam.PhotoCapture photoCaptureObject = null;
    Texture2D targetTexture = null;
    private bool waitingForCapture;

    void Start()
    {
        waitingForCapture = false;
    }

    public void AirTap()
    {
        // Ignore new requests while a capture is still in progress.
        if (waitingForCapture) return;
        waitingForCapture = true;

        Resolution cameraResolution = UnityEngine.Windows.WebCam.PhotoCapture.SupportedResolutions.OrderByDescending((res) => res.width * res.height).First();
        targetTexture = new Texture2D(cameraResolution.width, cameraResolution.height);

        // Create a PhotoCapture object
        UnityEngine.Windows.WebCam.PhotoCapture.CreateAsync(false, delegate (UnityEngine.Windows.WebCam.PhotoCapture captureObject) {
            photoCaptureObject = captureObject;
            UnityEngine.Windows.WebCam.CameraParameters cameraParameters = new UnityEngine.Windows.WebCam.CameraParameters();
            cameraParameters.hologramOpacity = 0.0f;
            cameraParameters.cameraResolutionWidth = cameraResolution.width;
            cameraParameters.cameraResolutionHeight = cameraResolution.height;
            cameraParameters.pixelFormat = UnityEngine.Windows.WebCam.CapturePixelFormat.BGRA32;

            // Activate the camera
            photoCaptureObject.StartPhotoModeAsync(cameraParameters, delegate (UnityEngine.Windows.WebCam.PhotoCapture.PhotoCaptureResult result) {
                // Take a photo
                photoCaptureObject.TakePhotoAsync(OnCapturedPhotoToMemoryAsync);
            });
        });
    }

    async void OnCapturedPhotoToMemoryAsync(UnityEngine.Windows.WebCam.PhotoCapture.PhotoCaptureResult result, UnityEngine.Windows.WebCam.PhotoCaptureFrame photoCaptureFrame)
    {
        // Copy the raw image data into the target texture
        photoCaptureFrame.UploadImageDataToTexture(targetTexture);
        byte[] bodyData = targetTexture.EncodeToJPG();

        Response response = new Response();
        Dictionary<string, string> headers = new Dictionary<string, string>();
        headers.Add("Prediction-key", cv_subscription_key);
        try
        {
            string query = cv_endpoint;
            // headers.Add("Content-Type", "application/octet-stream");
            response = await Rest.PostAsync(query, bodyData, headers, -1, true);
        }
        catch (Exception e)
        {
            Debug.LogException(e);
            photoCaptureObject.StopPhotoModeAsync(OnStoppedPhotoMode);
            return;
        }
        if (!response.Successful)
        {
            Debug.LogWarning($"Custom Vision request failed: {response.ResponseCode}");
            photoCaptureObject.StopPhotoModeAsync(OnStoppedPhotoMode);
            return;
        }

        Debug.Log(response.ResponseCode);
        // Debug.Log(response.ResponseBody);
        CustomVisionResult results = JsonUtility.FromJson<CustomVisionResult>(response.ResponseBody);
        Debug.Log(results);

        int coin = 0; // running total in yen
        Mat imgMat = new Mat(targetTexture.height, targetTexture.width, CvType.CV_8UC4);
        Utils.texture2DToMat(targetTexture, imgMat);

        for (int i = 0; i < results.predictions.Length; i++) // predictions are sorted by probability, highest first
        {
            if (results.predictions[i].probability > 0.8f)
            {
                // Discard lower-probability boxes that overlap this one heavily
                for (int j = i + 1; j < results.predictions.Length; j++)
                {
                    if (CalculateIOU(results.predictions[i].boundingBox, results.predictions[j].boundingBox) > 0.2f)
                    {
                        results.predictions[j].probability = 0.0f;
                    }
                }
                // Debug.Log(results.predictions[i].tagName);
                // Tag names are the coin values ("1", "10", "100")
                coin += Int32.Parse(results.predictions[i].tagName);

                // Convert the normalized box to pixels, then draw the label and rectangle
                BoundingBox box = results.predictions[i].boundingBox;
                double x = box.left * targetTexture.width;
                double y = box.top * targetTexture.height;
                double w = box.width * targetTexture.width;
                double h = box.height * targetTexture.height;
                Imgproc.putText(imgMat, results.predictions[i].tagName, new Point(x, y - 10), Imgproc.FONT_HERSHEY_SIMPLEX, 2, new Scalar(255, 255, 0, 255), 4, Imgproc.LINE_AA, false);
                Imgproc.rectangle(imgMat, new Point(x, y), new Point(x + w, y + h), new Scalar(255, 255, 0, 255), 4);
            }
        }

        // Show the annotated image on the quad
        Texture2D texture = new Texture2D(imgMat.cols(), imgMat.rows(), TextureFormat.RGBA32, false);
        Utils.matToTexture2D(imgMat, texture);
        imgMat.Dispose();
        Renderer quadRenderer = quad.GetComponent<Renderer>();
        quadRenderer.material.SetTexture("_MainTex", texture);

        // SpeechSDK addition: begin
        if (coin == 0)
        {
            await SynthesizeAudioAsync("すみません、わかりませんでした。"); // "Sorry, I couldn't tell."
        }
        else
        {
            Debug.Log(coin.ToString() + "円です。");
            await SynthesizeAudioAsync(coin.ToString() + "円です。"); // "<total> yen."
        }
        // SpeechSDK addition: end

        // Deactivate the camera
        photoCaptureObject.StopPhotoModeAsync(OnStoppedPhotoMode);
    }

    void OnStoppedPhotoMode(UnityEngine.Windows.WebCam.PhotoCapture.PhotoCaptureResult result)
    {
        // Shut down the photo capture resources
        photoCaptureObject.Dispose();
        photoCaptureObject = null;
        waitingForCapture = false;
    }
}
```
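The loop inside SynthesizeAudioAsync turns the synthesizer's raw audio bytes into the float samples that AudioClip.SetData expects. Assuming, as the script's comment states, 16-bit mono PCM in little-endian byte order, the conversion can be pulled out into a small testable function (`PcmConverter` and `Pcm16ToFloats` are hypothetical names):

```csharp
public static class PcmConverter
{
    // Converts 16-bit signed little-endian PCM bytes to floats in [-1, 1).
    // Each sample is two bytes: the low byte first, then the high byte.
    public static float[] Pcm16ToFloats(byte[] pcm)
    {
        int sampleCount = pcm.Length / 2; // a trailing odd byte, if any, is ignored
        var samples = new float[sampleCount];
        for (int i = 0; i < sampleCount; i++)
        {
            // Reassemble the two bytes; casting to short restores the sign.
            short value = (short)(pcm[i * 2 + 1] << 8 | pcm[i * 2]);
            samples[i] = value / 32768.0f;
        }
        return samples;
    }
}
```

Dividing by 32768 maps the full `short` range [-32768, 32767] onto [-1, 1), the sample range Unity uses for audio data.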

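10. Paste the prediction endpoint URL and key you noted earlier into cv_endpoint and cv_subscription_key. The captured image is sent as a POST request using MRTK's Rest library.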
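For reference outside Unity, the same request can be sketched with .NET's HttpClient. This is not the article's code, and it rests on assumptions: the URL shape follows the Custom Vision Prediction API v3.0 `detect/iterations/{publishedName}/image` route, and `endpoint`, `projectId`, `publishedName`, and `predictionKey` are placeholders for values from the Custom Vision portal (in practice you can paste the full Prediction URL instead of calling the hypothetical `BuildUrl`). Building the request separately from sending it lets its shape be inspected without network access:

```csharp
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading.Tasks;

public static class CustomVisionRequest
{
    // Assumed v3.0 route for object detection on an uploaded image file.
    public static string BuildUrl(string endpoint, string projectId, string publishedName)
    {
        return $"{endpoint.TrimEnd('/')}/customvision/v3.0/Prediction/{projectId}/detect/iterations/{publishedName}/image";
    }

    // POST with the key in a Prediction-Key header and the raw JPEG bytes as the body.
    public static HttpRequestMessage Build(string url, string predictionKey, byte[] jpegBytes)
    {
        var request = new HttpRequestMessage(HttpMethod.Post, url);
        request.Headers.Add("Prediction-Key", predictionKey);
        request.Content = new ByteArrayContent(jpegBytes);
        request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
        return request;
    }

    // Sends the request and returns the JSON response body (requires network access).
    public static async Task<string> SendAsync(HttpClient client, HttpRequestMessage request)
    {
        using (HttpResponseMessage response = await client.SendAsync(request))
        {
            response.EnsureSuccessStatusCode();
            return await response.Content.ReadAsStringAsync();
        }
    }
}
```

The script itself relies on MRTK's Rest.PostAsync; this version only makes the method, the key header, and the octet-stream body explicit.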

11. The response comes back in the format below (truncated here), so I created the CustomVisionResult, Predictions, and BoundingBox classes to match it.

```json
{
  "id": "8498c190-caae-4dc0-b98f-55d95239ac8c",
  "project": "2b7ff8c6-64d3-42d8-a9cf-df60a99eec38",
  "iteration": "ea198606-c388-4ec7-99bf-b7badbfda81d",
  "created": "2020-12-20T16:15:59.129Z",
  "predictions": [
    {"probability": 0.9034805, "tagId": "8faabbcc-452a-4bf7-8f1b-fdacad8c923e", "tagName": "100", "boundingBox": {"left": 0.46884796, "top": 0.39544287, "width": 0.09181544, "height": 0.13678041}},
    {"probability": 0.8434237, "tagId": "8faabbcc-452a-4bf7-8f1b-fdacad8c923e", "tagName": "100", "boundingBox": {"left": 0.27559033, "top": 0.2615706, "width": 0.067119986, "height": 0.093027055}},
    {"probability": 0.8418253, "tagId": "8faabbcc-452a-4bf7-8f1b-fdacad8c923e", "tagName": "100", "boundingBox": {"left": 0.34035426, "top": 0.2708075, "width": 0.06956527, "height": 0.0960823}},
    ...
```
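In Unity, JsonUtility needs [Serializable] classes whose public field names match the JSON keys exactly, which is why the script's fields are lower-case. Outside Unity, a rough equivalent can be sketched with System.Text.Json (assuming .NET Core 3.0 or later; `DetectionResult`, `Detection`, `Box`, and `DetectionParser` are hypothetical names). Case-insensitive matching lets idiomatic PascalCase properties bind to the camelCase keys, and keys without a matching property, such as `tagId`, are simply ignored:

```csharp
using System.Collections.Generic;
using System.Text.Json;

public class Box
{
    public float Left { get; set; }
    public float Top { get; set; }
    public float Width { get; set; }
    public float Height { get; set; }
}

public class Detection
{
    public float Probability { get; set; }
    public string TagName { get; set; }
    public Box BoundingBox { get; set; }
}

public class DetectionResult
{
    public string Id { get; set; }
    public List<Detection> Predictions { get; set; }
}

public static class DetectionParser
{
    // Maps "tagName" to TagName, "boundingBox" to BoundingBox, and so on.
    private static readonly JsonSerializerOptions Options =
        new JsonSerializerOptions { PropertyNameCaseInsensitive = true };

    public static DetectionResult Parse(string json)
    {
        return JsonSerializer.Deserialize<DetectionResult>(json, Options);
    }
}
```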

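12. Of the detections, keep only those with a probability above 0.8. When several boxes are detected, compute the IoU (intersection over union) between each kept box and every lower-ranked box; if two boxes overlap heavily (IoU above 0.2), the one with the lower probability is discarded.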

13. Add up the values of the remaining coins and read the total aloud.
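Steps 12 and 13 together amount to a simple greedy non-maximum suppression followed by a sum. The sketch below restates the logic of the script's loop in plain C# so it can be tested without Unity or OpenCV. Like the script, it assumes the predictions arrive sorted by probability (highest first) and that each tag name is the coin's face value; the 0.8 and 0.2 thresholds are the article's values, while `Pred` and `CoinCounter` are hypothetical names:

```csharp
using System;
using System.Collections.Generic;

public class Pred
{
    public float Probability;
    public string TagName;                 // face value, e.g. "1", "10", "100"
    public float Left, Top, Width, Height; // normalized to 0..1
}

public static class CoinCounter
{
    // IoU = overlap area / (area A + area B - overlap area).
    public static float Iou(Pred a, Pred b)
    {
        float x1 = Math.Max(a.Left, b.Left);
        float y1 = Math.Max(a.Top, b.Top);
        float x2 = Math.Min(a.Left + a.Width, b.Left + b.Width);
        float y2 = Math.Min(a.Top + a.Height, b.Top + b.Height);
        float inter = Math.Max(0f, x2 - x1) * Math.Max(0f, y2 - y1);
        float union = a.Width * a.Height + b.Width * b.Height - inter;
        return union > 0f ? inter / union : 0f;
    }

    // Keeps confident boxes, suppresses lower-ranked boxes that overlap a kept
    // box too much, and sums the face values of what remains.
    // The input must be sorted by probability, highest first.
    public static int Total(IList<Pred> sorted, float minProbability = 0.8f, float maxIou = 0.2f)
    {
        var suppressed = new bool[sorted.Count];
        int total = 0;
        for (int i = 0; i < sorted.Count; i++)
        {
            if (suppressed[i] || sorted[i].Probability <= minProbability) continue;
            for (int j = i + 1; j < sorted.Count; j++)
            {
                if (Iou(sorted[i], sorted[j]) > maxIou) suppressed[j] = true;
            }
            total += int.Parse(sorted[i].TagName);
        }
        return total;
    }

    // The phrase passed to SynthesizeAudioAsync: "Sorry, I couldn't tell." or "<total> yen."
    public static string Message(int total)
    {
        return total == 0 ? "すみません、わかりませんでした。" : total + "円です。";
    }
}
```

Marking boxes as suppressed, rather than zeroing their probability as the script does, gives the same result while leaving the input untouched.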

Results

As the video shows, the app can now count coins! It still makes quite a few mistakes, though, so it needs more training data.

It can count coins now #HoloLens2 #MRTK #Azure #CognitiveServices #CustomVision #Unity pic.twitter.com/2MI94x9zvF

— がちもとさん@熊本 (@sotongshi) December 19, 2020

Thanks for following along!