はじめに

HoloLensアドベントカレンダー2020の13日目の記事です。

前回は「何が見える?」と質問したら、画像をキャプチャして説明文を生成し、読み上げを行いました。今回は、「文字を読んで」と言うと、画像からテキストを抽出し、読み上げてみます。「ヨンシル、文字を読んで」

開発環境

- Azure

- Computer Vision API (Read API)

- Speech SDK 1.14.0

- Unity 2019.4.1f1

- MRTK 2.5.1

- Windows 10 PC

- HoloLens2

導入

1.前回の記事まで終わらせてください。

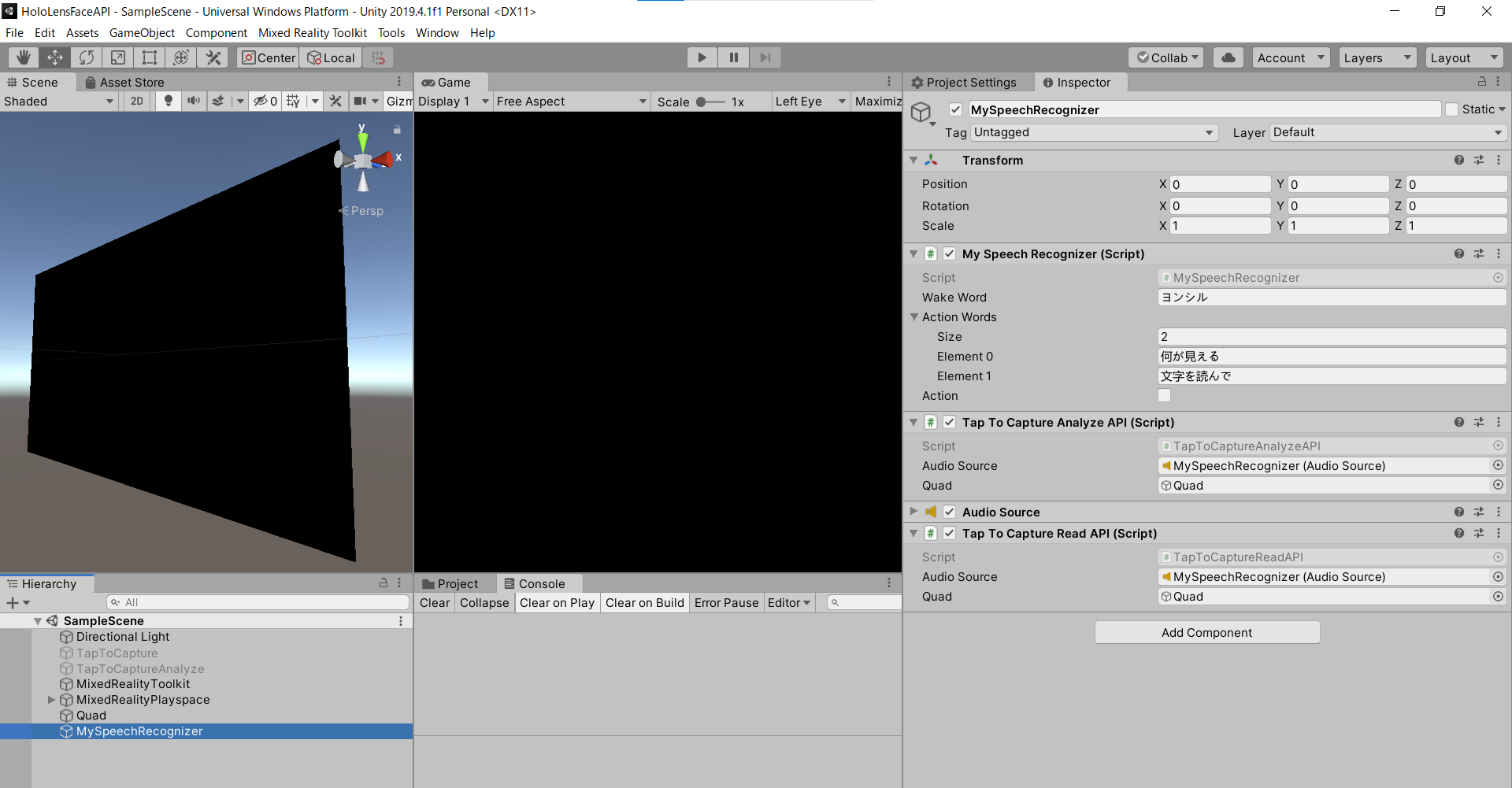

2.Unityプロジェクトはこんな感じ。前回のMySpeechRecognizerのActionワードをリストにして、「文字を読んで」を追加します。新しく「TapToCaptureReadAPI.cs」スクリプトをAdd Componentし、「文字を読んで」と言うと画像キャプチャし、テキスト抽出、読み上げという流れになります。

3.前回の記事のMySpeechRecognizer.csは、Actionワードが一つしか認識できませんでしたが、リストにして複数のActionワードを認識できるようにします。あとはUpdate関数を下記のように編集して、Actionワードが「文字を読んで」のときにTapToCaptureReadAPI.csのAirTap関数を実行します。

// public string ActionWord = "";

public List<string> ActionWords;

async void Update()

{

if (recognizedString != "")

{

// Debug.Log(recognizedString);

if (action){

foreach(string ActionWord in ActionWords){

if (recognizedString.ToLower().Contains(ActionWord.ToLower()))

{

Debug.Log("Action");

if(ActionWord == "何が見える"){

Debug.Log("Analyze Image");

this.GetComponent<TapToCaptureAnalyzeAPI>().AirTap();

}else if(ActionWord == "文字を読んで"){

Debug.Log("Read");

this.GetComponent<TapToCaptureReadAPI>().AirTap();

}

action = false;

}

}

}else if (recognizedString.ToLower().Contains(WakeWord.ToLower()))

{

Debug.Log("Wake");

await this.GetComponent<TapToCaptureAnalyzeAPI>().SynthesizeAudioAsync("はい");

action = true;

}

}

}

4.「TapToCaptureReadAPI.cs」スクリプトは以下のようになります。

using System.Collections;

using System.Collections.Generic;

using System.Linq;

using System;

using UnityEngine;

using Microsoft.MixedReality.Toolkit.Utilities;

using System.Threading.Tasks;

// SpeechSDK ここから

using System.IO;

using System.Text;

using Microsoft.CognitiveServices.Speech;

using Microsoft.CognitiveServices.Speech.Audio;

// SpeechSDK ここまで

public class TapToCaptureReadAPI : MonoBehaviour

{

// Read ここから

private string read_endpoint = "https://<Insert Your Endpoint>/vision/v3.1/read/analyze";

private string read_subscription_key = "<Insert Your API Key>";

// {"status":"succeeded","createdDateTime":"2020-12-13T03:05:57Z","lastUpdatedDateTime":"2020-12-13T03:05:58Z","analyzeResult":{"version":"3.0.0","readResults":[{"page":1,"angle":-1.4203,"width":1280,"height":720,"unit":"pixel","lines":[{"boundingBox":[417,351,481,348,482,365,417,367],"text":"BANANA","words":[{"boundingBox":[420,352,481,348,482,365,421,367],"text":"BANANA","confidence":0.595}]},{"boundingBox":[541,348,604,347,605,360,542,362],"text":"ALMOND","words":[{"boundingBox":[542,349,605,347,605,360,543,363],"text":"ALMOND","confidence":0.916}]},{"boundingBox":[644,345,724,344,724,358,645,359],"text":"STRAWBERRY","words":[{"boundingBox":[647,346,724,344,724,358,647,359],"text":"STRAWBERRY","confidence":0.638}]},{"boundingBox":[757,334,843,337,843,356,757,353],"text":"PINEAPPLE","words":[{"boundingBox":[763,335,842,338,843,355,763,353],"text":"PINEAPPLE","confidence":0.623}]},{"boundingBox":[385,382,748,374,750,465,387,475],"text":"LOOK","words":[{"boundingBox":[385,384,746,374,746,467,387,475],"text":"LOOK","confidence":0.983}]},{"boundingBox":[479,474,668,468,670,501,480,508],"text":"A La Mode","words":[{"boundingBox":[482,475,512,474,513,508,483,509],"text":"A","confidence":0.987},{"boundingBox":[519,474,567,472,567,506,520,508],"text":"La","confidence":0.987},{"boundingBox":[573,472,669,468,670,501,574,505],"text":"Mode","confidence":0.984}]},{"boundingBox":[489,508,666,501,666,518,490,526],"text":"CHOCOLATE","words":[{"boundingBox":[489,509,666,501,666,519,490,527],"text":"CHOCOLATE","confidence":0.981}]}]}]}}

[System.Serializable]

public class Read

{

public string status;

public string createdDateTime;

public string lastUpdatedDateTime;

public AnalyzeResult analyzeResult;

}

[System.Serializable]

public class AnalyzeResult

{

public ReadResults[] readResults;

}

[System.Serializable]

public class ReadResults

{

public Lines[] lines;

}

[System.Serializable]

public class Lines

{

public string text;

}

// Read ここまで

// SpeechSDK ここから

public AudioSource audioSource;

public async Task SynthesizeAudioAsync(string text)

{

var config = SpeechConfig.FromSubscription("YourSubscriptionKey", "YourServiceRegion");

var synthesizer = new SpeechSynthesizer(config, null); // nullを省略するとPCのスピーカーから出力されるが、HoloLensでは出力されない。

string ssml = "<speak version=\"1.0\" xmlns=\"https://www.w3.org/2001/10/synthesis\" xml:lang=\"ja-JP\"> <voice name=\"ja-JP-Ichiro\">" + text + "</voice> </speak>";

// Starts speech synthesis, and returns after a single utterance is synthesized.

// using (var result = synthesizer.SpeakTextAsync(text).Result)

using (var result = synthesizer.SpeakSsmlAsync(ssml).Result)

{

// Checks result.

if (result.Reason == ResultReason.SynthesizingAudioCompleted)

{

// Native playback is not supported on Unity yet (currently only supported on Windows/Linux Desktop).

// Use the Unity API to play audio here as a short term solution.

// Native playback support will be added in the future release.

var sampleCount = result.AudioData.Length / 2;

var audioData = new float[sampleCount];

for (var i = 0; i < sampleCount; ++i)

{

audioData[i] = (short)(result.AudioData[i * 2 + 1] << 8 | result.AudioData[i * 2]) / 32768.0F;

}

// The output audio format is 16K 16bit mono

var audioClip = AudioClip.Create("SynthesizedAudio", sampleCount, 1, 16000, false);

audioClip.SetData(audioData, 0);

audioSource.clip = audioClip;

audioSource.Play();

// newMessage = "Speech synthesis succeeded!";

}

else if (result.Reason == ResultReason.Canceled)

{

var cancellation = SpeechSynthesisCancellationDetails.FromResult(result);

// newMessage = $"CANCELED:\nReason=[{cancellation.Reason}]\nErrorDetails=[{cancellation.ErrorDetails}]\nDid you update the subscription info?";

}

}

}

// SpeechSDK ここまで

public GameObject quad;

UnityEngine.Windows.WebCam.PhotoCapture photoCaptureObject = null;

Texture2D targetTexture = null;

private bool waitingForCapture;

void Start(){

waitingForCapture = false;

}

public void AirTap()

{

if (waitingForCapture) return;

waitingForCapture = true;

Resolution cameraResolution = UnityEngine.Windows.WebCam.PhotoCapture.SupportedResolutions.OrderByDescending((res) => res.width * res.height).First();

targetTexture = new Texture2D(cameraResolution.width, cameraResolution.height);

// PhotoCapture オブジェクトを作成します

UnityEngine.Windows.WebCam.PhotoCapture.CreateAsync(false, delegate (UnityEngine.Windows.WebCam.PhotoCapture captureObject) {

photoCaptureObject = captureObject;

UnityEngine.Windows.WebCam.CameraParameters cameraParameters = new UnityEngine.Windows.WebCam.CameraParameters();

cameraParameters.hologramOpacity = 0.0f;

cameraParameters.cameraResolutionWidth = cameraResolution.width;

cameraParameters.cameraResolutionHeight = cameraResolution.height;

cameraParameters.pixelFormat = UnityEngine.Windows.WebCam.CapturePixelFormat.BGRA32;

// カメラをアクティベートします

photoCaptureObject.StartPhotoModeAsync(cameraParameters, delegate (UnityEngine.Windows.WebCam.PhotoCapture.PhotoCaptureResult result) {

// 写真を撮ります

photoCaptureObject.TakePhotoAsync(OnCapturedPhotoToMemoryAsync);

});

});

}

async void OnCapturedPhotoToMemoryAsync(UnityEngine.Windows.WebCam.PhotoCapture.PhotoCaptureResult result, UnityEngine.Windows.WebCam.PhotoCaptureFrame photoCaptureFrame)

{

// ターゲットテクスチャに RAW 画像データをコピーします

photoCaptureFrame.UploadImageDataToTexture(targetTexture);

byte[] bodyData = targetTexture.EncodeToJPG();

Response response = new Response();

Dictionary<string, string> headers = new Dictionary<string, string>();

headers.Add("Ocp-Apim-Subscription-Key", read_subscription_key);

try

{

string query = read_endpoint;

// headers.Add("Content-Type": "application/octet-stream");

response = await Rest.PostAsync(query, bodyData, headers, -1, true);

}

catch (Exception e)

{

photoCaptureObject.StopPhotoModeAsync(OnStoppedPhotoMode);

return;

}

if (!response.Successful)

{

photoCaptureObject.StopPhotoModeAsync(OnStoppedPhotoMode);

return;

}

Debug.Log(response.ResponseCode);

Debug.Log(response.ResponseBody);

string operation_url = response.ResponseBody;

bool poll = true;

int i = 0;

Read read;

do {

System.Threading.Thread.Sleep(1000);

// https://docs.microsoft.com/ja-jp/azure/cognitive-services/computer-vision/concept-recognizing-text

// https://github.com/Azure-Samples/cognitive-services-quickstart-code/blob/master/dotnet/ComputerVision/REST/CSharp-hand-text.md

// {"status":"running","createdDateTime":"2020-12-12T19:39:44Z","lastUpdatedDateTime":"2020-12-12T19:39:44Z"}

response = await Rest.GetAsync(operation_url, headers, -1, null, true);

Debug.Log(response.ResponseCode);

Debug.Log(response.ResponseBody);

read = JsonUtility.FromJson<Read>(response.ResponseBody);

if (read.status == "succeeded"){

poll = false;

}

++i;

} while (i < 60 && poll);

if (i == 60 && poll == true)

{

photoCaptureObject.StopPhotoModeAsync(OnStoppedPhotoMode);

return;

}

string read_text = "";

foreach (ReadResults readResult in read.analyzeResult.readResults){

foreach (Lines line in readResult.lines){

read_text = read_text + line.text + "\n";

// Debug.Log(line.text);

}

}

read_text += "以上です。";

// SpeechSDK 追加分ここから

Debug.Log(read_text);

await SynthesizeAudioAsync(read_text); // jp

// SpeechSDK 追加分ここまで

// カメラを非アクティブにします

photoCaptureObject.StopPhotoModeAsync(OnStoppedPhotoMode);

}

void OnStoppedPhotoMode(UnityEngine.Windows.WebCam.PhotoCapture.PhotoCaptureResult result)

{

// photo capture のリソースをシャットダウンします

photoCaptureObject.Dispose();

photoCaptureObject = null;

waitingForCapture = false;

}

}

5.Computer Vision API (Read API) のエンドポイントとキーは、画像説明文生成のときと同じものを用います。

6.TapToCaptureReadAPI.csのAirTap関数で画像をキャプチャしたあと、Read APIに画像をPOSTします。すると、ResponseのHeadersに{"Operation-Location":URL}が返ってくるので、そのURLに対してGETする必要があります。しかし、MRTKのRest.csはResponseHeadersを返してくれないので、ProcessRequestAsync関数の275行目からを次のように編集します。

・・・

if (readResponseData)

{

// For Read API

Dictionary<string, string> tmp = webRequest.GetResponseHeaders();

if (tmp == null)

{

return new Response(true, webRequest.downloadHandler?.text, webRequest.downloadHandler?.data, webRequest.responseCode);

}else{

if(tmp.ContainsKey("Operation-Location")){

string responseHeaders = tmp["Operation-Location"];

string downloadHandlerText = webRequest.downloadHandler?.text;

return new Response(true, responseHeaders, webRequest.downloadHandler?.data, webRequest.responseCode);

}else{

return new Response(true, webRequest.downloadHandler?.text, webRequest.downloadHandler?.data, webRequest.responseCode);

}

}

}

else // This option can be used only if action will be triggered in the same scope as the webrequest

{

return new Response(true, () => webRequest.downloadHandler?.text, () => webRequest.downloadHandler?.data, webRequest.responseCode);

}

・・・

7.これでResponseBodyにResponseHeadersのURLが返ってくるので、そのURLに対してGETします。

8.テキスト抽出が実行中の場合は下記のようなjsonが返ってくるので、"status"が"succeeded"になるまで、1秒おきにGETします。

{"status":"running","createdDateTime":"2020-12-12T19:39:44Z","lastUpdatedDateTime":"2020-12-12T19:39:44Z"}

9."status"が"succeeded"になったら、テキスト抽出結果が次のようなjsonで返っくるので、仕様に合わせてReadクラス、AnalyzeResultクラス、ReadResultsクラス、Linesクラスを作成しました。

{"status":"succeeded","createdDateTime":"2020-12-13T03:05:57Z","lastUpdatedDateTime":"2020-12-13T03:05:58Z","analyzeResult":{"version":"3.0.0","readResults":[{"page":1,"angle":-1.4203,"width":1280,"height":720,"unit":"pixel","lines":[{"boundingBox":[417,351,481,348,482,365,417,367],"text":"BANANA","words":[{"boundingBox":[420,352,481,348,482,365,421,367],"text":"BANANA","confidence":0.595}]},{"boundingBox":[541,348,604,347,605,360,542,362],"text":"ALMOND","words":[{"boundingBox":[542,349,605,347,605,360,543,363],"text":"ALMOND","confidence":0.916}]},{"boundingBox":[644,345,724,344,724,358,645,359],"text":"STRAWBERRY","words":[{"boundingBox":[647,346,724,344,724,358,647,359],"text":"STRAWBERRY","confidence":0.638}]},{"boundingBox":[757,334,843,337,843,356,757,353],"text":"PINEAPPLE","words":[{"boundingBox":[763,335,842,338,843,355,763,353],"text":"PINEAPPLE","confidence":0.623}]},{"boundingBox":[385,382,748,374,750,465,387,475],"text":"LOOK","words":[{"boundingBox":[385,384,746,374,746,467,387,475],"text":"LOOK","confidence":0.983}]},{"boundingBox":[479,474,668,468,670,501,480,508],"text":"A La Mode","words":[{"boundingBox":[482,475,512,474,513,508,483,509],"text":"A","confidence":0.987},{"boundingBox":[519,474,567,472,567,506,520,508],"text":"La","confidence":0.987},{"boundingBox":[573,472,669,468,670,501,574,505],"text":"Mode","confidence":0.984}]},{"boundingBox":[489,508,666,501,666,518,490,526],"text":"CHOCOLATE","words":[{"boundingBox":[489,509,666,501,666,519,490,527],"text":"CHOCOLATE","confidence":0.981}]}]}]}}

10.テキスト抽出結果を音声合成に投げて読み上げます。

実行

実行動画を見てください。こんな感じで文字を読めるようになりました!割と小さい文字もいけます。名刺とかも読めるし、便利かもしれないです。日本語対応はまだなので待つしかないですね。(現在の対応言語:Dutch, English, French, German, Italian, Portuguese and Spanish)

画像から文字を読めるようになった!#Azure #CognitiveServices #OCR #Read #TTS #HoloLens2 #MRTK #Unity #AI pic.twitter.com/e37gEAQhO0

— 藤本賢志(ガチ本) (@sotongshi) December 13, 2020

ちなみにWakeワードの「ヨンシル」は「4種類」や「キャンセル」などに誤認しやすいので、変えた方がいいです。「ガチモト」は「が地元」や「合致もっと」になるので、「藤本」がいいです。。