kaggleのデータセットを使って、レントゲンの肺炎画像を診断する。

使用したデータセット: Chest X-Ray Images (Pneumonia)

今回挑戦すること

kaggleで公開されているデータセットを使用し、kaggleに慣れる。

kerasで自作モデルを作り、VGG-16転移学習モデルと比較を行う。

評価は混同行列の適合率及び再現率で行い、より再現率を重視する。

ディレクトリ構造

X-ray

├─ train

├─ NORMAL(1341)

└─ PNEMONIA(3785)

├─ test

├─ NORMAL(234)

└─ PNEMONIA(390)

└─ val

├─ NORMAL(8)

└─ PNEMONIA(8)

下準備

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from keras.utils.np_utils import to_categorical

from keras.applications.vgg16 import VGG16

from keras.layers import Dense, Dropout, Flatten, Conv2D, MaxPooling2D, Input, SeparableConv2D

from keras.layers.normalization import BatchNormalization

from keras.models import Sequential, Model

from keras.optimizers import Adam, SGD, RMSprop

from mlxtend.plotting import plot_confusion_matrix

from sklearn.metrics import confusion_matrix

import cv2

%matplotlib inline

import os

test_size = 0.8

test_dir = "../input/chest-xray-pneumonia/chest_xray/test"

train_dir = "../input/chest-xray-pneumonia/chest_xray/train"

val_dir = "../input/chest-xray-pneumonia/chest_xray/val"

def data_path(path, n_p):

'''

path: ディレクトリパス

n_p: path以下のNORMAL/PHEMONIAフォルダ

'''

# 引数からディレクトリパスを作成し、変数に格納

path = path + "/" + n_p

files = os.listdir(path)

# 画像を格納するリスト

t_list = []

# ファイルから画像を1つずつ取得

for imgfile in files:

img = path + "/" + imgfile

# 画像読み込み

img = cv2.imread(img)

# 画像サイズ変換

img = cv2.resize(img, (256, 256))

# 画像をリストに追加

t_list.append((img))

return t_list

自作モデルで学習・評価

Most Votesの方のコードを参考に作成してみました。

def my_model(shape):

# 入力層

input_img = Input(shape=shape, name="img_input")

# conv1 2回畳み込み+プーリング

x = Conv2D(128, (3,3), activation = "relu", padding= "same")(input_img)

x = Conv2D(128,(2,2), activation = "relu", padding= "same")(x)

x = MaxPooling2D((2,2))(x)

# conv2 2回畳み込み+プーリング

x = SeparableConv2D(128, (3,3), activation="relu", padding="same")(x)

x = SeparableConv2D(128, (3,3), activation="relu", padding = "same")(x)

x = MaxPooling2D((2,2))(x)

# conv3 2回畳み込み+正規化+畳み込み+プーリング

x = SeparableConv2D(256, (2,2), activation="relu", padding="same")(x)

x = SeparableConv2D(256, (2,2), activation="relu", padding="same")(x)

x = BatchNormalization()(x)

x = SeparableConv2D(256, (3, 3), activation="relu", padding = "same")(x)

x = MaxPooling2D((4,4))(x)

# conv4 畳み込み+正規化+畳み込み+正規化+畳み込み

x = SeparableConv2D(512, (3, 3), activation="relu", padding = "same")(x)

x = BatchNormalization()(x)

x = SeparableConv2D(512, (3, 3), activation="relu", padding = "same")(x)

x = BatchNormalization()(x)

x = SeparableConv2D(512, (3, 3), activation="relu", padding = "same")(x)

# 平坦化

x = Flatten()(x)

# 全結合層

x = Dense(512, activation="relu")(x)

x = Dropout(0.7)(x)

x = Dense(256, activation="relu")(x)

x = Dropout(0.6)(x)

x = Dense(64, activation="relu")(x)

x = Dropout(0.4)(x)

x = Dense(2, activation="softmax")(x)

model = Model(inputs = input_img, outputs=x)

return model

model = my_model(shape=(256, 256,3))

model.summary()

>>

Model: "model_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

img_input (InputLayer) (None, 256, 256, 3) 0

_________________________________________________________________

conv2d_1 (Conv2D) (None, 256, 256, 128) 3584

_________________________________________________________________

conv2d_2 (Conv2D) (None, 256, 256, 128) 65664

_________________________________________________________________

max_pooling2d_1 (MaxPooling2 (None, 128, 128, 128) 0

_________________________________________________________________

separable_conv2d_1 (Separabl (None, 128, 128, 128) 17664

_________________________________________________________________

separable_conv2d_2 (Separabl (None, 128, 128, 128) 17664

_________________________________________________________________

max_pooling2d_2 (MaxPooling2 (None, 64, 64, 128) 0

_________________________________________________________________

separable_conv2d_3 (Separabl (None, 64, 64, 256) 33536

_________________________________________________________________

separable_conv2d_4 (Separabl (None, 64, 64, 256) 66816

_________________________________________________________________

batch_normalization_1 (Batch (None, 64, 64, 256) 1024

_________________________________________________________________

separable_conv2d_5 (Separabl (None, 64, 64, 256) 68096

_________________________________________________________________

max_pooling2d_3 (MaxPooling2 (None, 16, 16, 256) 0

_________________________________________________________________

separable_conv2d_6 (Separabl (None, 16, 16, 512) 133888

_________________________________________________________________

batch_normalization_2 (Batch (None, 16, 16, 512) 2048

_________________________________________________________________

separable_conv2d_7 (Separabl (None, 16, 16, 512) 267264

_________________________________________________________________

batch_normalization_3 (Batch (None, 16, 16, 512) 2048

_________________________________________________________________

separable_conv2d_8 (Separabl (None, 16, 16, 512) 267264

_________________________________________________________________

flatten_1 (Flatten) (None, 131072) 0

_________________________________________________________________

dense_1 (Dense) (None, 512) 67109376

_________________________________________________________________

dropout_1 (Dropout) (None, 512) 0

_________________________________________________________________

dense_2 (Dense) (None, 256) 131328

_________________________________________________________________

dropout_2 (Dropout) (None, 256) 0

_________________________________________________________________

dense_3 (Dense) (None, 64) 16448

_________________________________________________________________

dropout_3 (Dropout) (None, 64) 0

_________________________________________________________________

dense_4 (Dense) (None, 2) 130

=================================================================

Total params: 68,203,842

Trainable params: 68,201,282

Non-trainable params: 2,560

_________________________________________________________________

def pred_img(img):

img = cv2.resize(img, (256, 256))

pred = np.argmax(model.predict(np.array([img])))

if pred == 0:

return 'Normal'

else:

return 'Pneumonia'

# trainデータのラベル化

# 0: NORMAL, 1: PNEUMONIA

'''

trainデータをtrainとtestに分割

valデータが少なく、またtrainデータに偏りがあるためtrainデータを分割して使用。

'''

train_nor = data_path(train_dir, "NORMAL")

train_pne = data_path(train_dir, "PNEUMONIA")

X = np.array(train_nor + train_pne)

Y = np.array([0]*len(train_nor) + [1]*len(train_pne))

rand_index = np.random.permutation(np.arange(len(X)))

X = X[rand_index]

Y = Y[rand_index]

X_train = X[:int(len(X)*test_size)]

Y_train = Y[:int(len(Y)*test_size)]

X_test = X[int(len(X)*test_size):]

Y_test = Y[int(len(Y)*test_size):]

Y_train = to_categorical(Y_train)

Y_test = to_categorical(Y_test)

# 損失関数は2値分類なのでbinary_crossentropyを使用

model.compile(loss="binary_crossentropy",

optimizer = Adam(lr=1e-4, decay=1e-5), metrics=['accuracy'])

model.fit(X_train, Y_train, batch_size=16, epochs = 10)

>>

Epoch 1/10

4172/4172 [==============================] - 84s 20ms/step - loss: 0.4250 - accuracy: 0.7893

Epoch 2/10

4172/4172 [==============================] - 80s 19ms/step - loss: 0.2329 - accuracy: 0.9099

Epoch 3/10

4172/4172 [==============================] - 80s 19ms/step - loss: 0.1688 - accuracy: 0.9458

Epoch 4/10

4172/4172 [==============================] - 80s 19ms/step - loss: 0.1349 - accuracy: 0.9545

Epoch 5/10

4172/4172 [==============================] - 80s 19ms/step - loss: 0.1061 - accuracy: 0.9612

Epoch 6/10

4172/4172 [==============================] - 80s 19ms/step - loss: 0.0868 - accuracy: 0.9676

Epoch 7/10

4172/4172 [==============================] - 80s 19ms/step - loss: 0.0890 - accuracy: 0.9722

Epoch 8/10

4172/4172 [==============================] - 80s 19ms/step - loss: 0.0671 - accuracy: 0.9779

Epoch 9/10

4172/4172 [==============================] - 80s 19ms/step - loss: 0.0630 - accuracy: 0.9772

Epoch 10/10

4172/4172 [==============================] - 80s 19ms/step - loss: 0.0514 - accuracy: 0.9823

scores = model.evaluate(X_test, Y_test, verbose=1)

print('Test loss:', scores[0])

print('Test accuracy:', scores[1])

>>

1044/1044 [==============================] - 5s 5ms/step

Test loss: 0.060373730071681155

Test accuracy: 0.9779693484306335

test_nor = data_path(test_dir, "NORMAL")

test_pne = data_path(test_dir, "PNEUMONIA")

X_T = np.array(test_nor + test_pne)

Y_T = np.array([0]*len(test_nor) + [1]*len(test_pne))

rand_index = np.random.permutation(np.arange(len(X_T)))

X_T = X_T[rand_index]

Y_T = Y_T[rand_index]

Y_T = to_categorical(Y_T)

path_train_pne = os.listdir("../input/chest-xray-pneumonia/chest_xray/train/PNEUMONIA/")

for i in range(3):

img = cv2.imread(train_dir + "/PNEUMONIA/" + path_train_pne[i])

# print(img)

b,g,r = cv2.split(img)

img = cv2.merge([r,g,b])

plt.imshow(img)

plt.show()

print(pred_img(img))

scores = model.evaluate(X_T, Y_T, verbose=1)

print('loss:', scores[0])

print('accuracy:', scores[1])

>>

624/624 [==============================] - 3s 4ms/step

loss: 1.051183255819174

accuracy: 0.7644230723381042

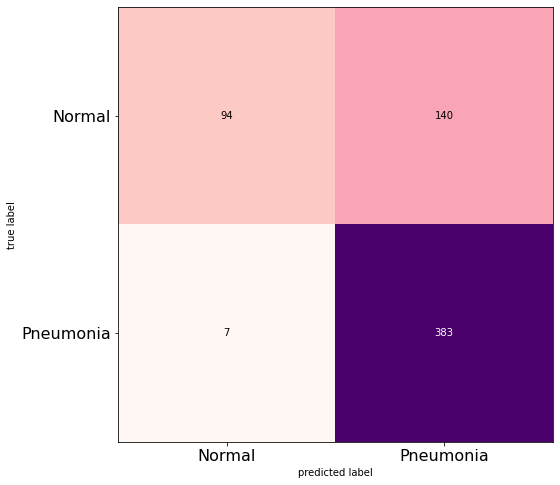

pred = model.predict(X_T)

pred = np.argmax(pred, axis=-1)

orig_test_label = np.argmax(Y_T, axis=-1)

cm = confusion_matrix(orig_test_label, pred)

plt.figure()

plot_confusion_matrix(cm,figsize=(12,8), hide_ticks=True, cmap=plt.cm.RdPu)

plt.xticks(range(2), ['Normal', 'Pneumonia'], fontsize=16)

plt.yticks(range(2), ['Normal', 'Pneumonia'], fontsize=16)

plt.show()

VGG16を用いた転移学習

input_tensor = Input(shape=(256, 256, 3))

vgg16 = VGG16(include_top=False, weights='imagenet', input_tensor=input_tensor)

top_model = Sequential()

top_model.add(Flatten(input_shape=vgg16.output_shape[1:]))

top_model.add(Dense(128, activation='relu'))

top_model.add(Dropout(0.2))

top_model.add(Dense(64, activation = "relu"))

top_model.add(Dense(2, activation = "softmax"))

model2 = Model(input=vgg16.input, output=top_model(vgg16.output))

for layer in model.layers[:15]:

layer.trainable = False

model2.compile(loss='categorical_crossentropy',

optimizer=SGD(lr=1e-4, momentum=0.9),

metrics=['accuracy'])

model2.fit(X_train, Y_train, batch_size=100, epochs=10)

>>

Epoch 1/10

4172/4172 [==============================] - 50s 12ms/step - loss: 0.3822 - accuracy: 0.8993

Epoch 2/10

4172/4172 [==============================] - 40s 10ms/step - loss: 0.0939 - accuracy: 0.9652

Epoch 3/10

4172/4172 [==============================] - 40s 10ms/step - loss: 0.0565 - accuracy: 0.9789

Epoch 4/10

4172/4172 [==============================] - 40s 10ms/step - loss: 0.0387 - accuracy: 0.9851

Epoch 5/10

4172/4172 [==============================] - 40s 10ms/step - loss: 0.0227 - accuracy: 0.9935

Epoch 6/10

4172/4172 [==============================] - 40s 10ms/step - loss: 0.0225 - accuracy: 0.9916

Epoch 7/10

4172/4172 [==============================] - 40s 10ms/step - loss: 0.0147 - accuracy: 0.9957

Epoch 8/10

4172/4172 [==============================] - 40s 10ms/step - loss: 0.0089 - accuracy: 0.9976

Epoch 9/10

4172/4172 [==============================] - 40s 10ms/step - loss: 0.0080 - accuracy: 0.9976

Epoch 10/10

4172/4172 [==============================] - 41s 10ms/step - loss: 0.0092 - accuracy: 0.9966

model2.summary()

>>

Model: "model_2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_1 (InputLayer) (None, 256, 256, 3) 0

_________________________________________________________________

block1_conv1 (Conv2D) (None, 256, 256, 64) 1792

_________________________________________________________________

block1_conv2 (Conv2D) (None, 256, 256, 64) 36928

_________________________________________________________________

block1_pool (MaxPooling2D) (None, 128, 128, 64) 0

_________________________________________________________________

block2_conv1 (Conv2D) (None, 128, 128, 128) 73856

_________________________________________________________________

block2_conv2 (Conv2D) (None, 128, 128, 128) 147584

_________________________________________________________________

block2_pool (MaxPooling2D) (None, 64, 64, 128) 0

_________________________________________________________________

block3_conv1 (Conv2D) (None, 64, 64, 256) 295168

_________________________________________________________________

block3_conv2 (Conv2D) (None, 64, 64, 256) 590080

_________________________________________________________________

block3_conv3 (Conv2D) (None, 64, 64, 256) 590080

_________________________________________________________________

block3_pool (MaxPooling2D) (None, 32, 32, 256) 0

_________________________________________________________________

block4_conv1 (Conv2D) (None, 32, 32, 512) 1180160

_________________________________________________________________

block4_conv2 (Conv2D) (None, 32, 32, 512) 2359808

_________________________________________________________________

block4_conv3 (Conv2D) (None, 32, 32, 512) 2359808

_________________________________________________________________

block4_pool (MaxPooling2D) (None, 16, 16, 512) 0

_________________________________________________________________

block5_conv1 (Conv2D) (None, 16, 16, 512) 2359808

_________________________________________________________________

block5_conv2 (Conv2D) (None, 16, 16, 512) 2359808

_________________________________________________________________

block5_conv3 (Conv2D) (None, 16, 16, 512) 2359808

_________________________________________________________________

block5_pool (MaxPooling2D) (None, 8, 8, 512) 0

_________________________________________________________________

sequential_1 (Sequential) (None, 2) 4202818

=================================================================

Total params: 18,917,506

Trainable params: 18,917,506

Non-trainable params: 0

_________________________________________________________________

scores2 = model2.evaluate(X_T, Y_T, verbose=1)

print('loss2:', scores[0])

print('accuracy2:', scores[1])

>>

624/624 [==============================] - 3s 5ms/step

loss2: 1.051183255819174

accuracy2: 0.7644230723381042

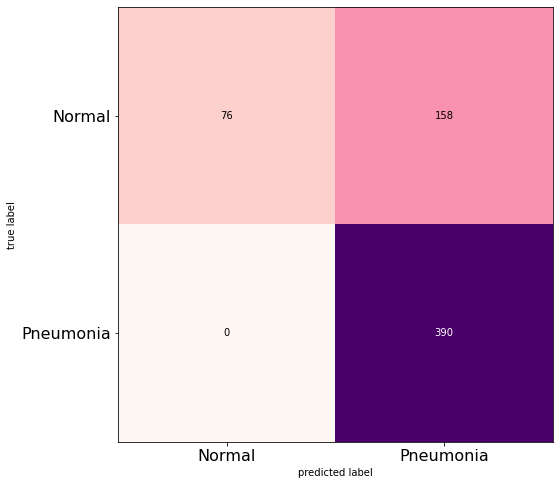

pred2 = model2.predict(X_T)

pred2 = np.argmax(pred2, axis=-1)

cm2 = confusion_matrix(orig_test_label, pred2)

plt.figure()

plot_confusion_matrix(cm2,figsize=(12,8), hide_ticks=True, cmap=plt.cm.RdPu)

plt.xticks(range(2), ['Normal', 'Pneumonia'], fontsize=16)

plt.yticks(range(2), ['Normal', 'Pneumonia'], fontsize=16)

plt.show()

'''

今回の場合

適合率(precision): 肺炎と予測した画像のうち、本当に肺炎画像である割合

再現率(recall): 肺炎画像を正しく肺炎と認識した割合

再現率が高く、適合率が低いと、画像を正しく認識していることになる。

=> 再現率と適合率はトレードオフだが、肺炎画像は見落としてはいけないので、今回の場合は再現率が重要視される。

'''

# 自作モデルの適合率と再現率

tn, fp, fn, tp = cm.ravel()

precision = tp/(tp+fp)

recall = tp/(tp+fn)

print("--my model--")

print("Recall {:.2f}".format(recall))

print("Precision {:.2f}".format(precision))

# VGG-16転移学習モデルの適合率と再現率

tn2, fp2, fn2, tp2 = cm2.ravel()

precision2 = tp2/(tp2+fp2)

recall2 = tp2/(tp2+fn2)

print("--VGG16--")

print("Recall {:.2f}".format(recall2))

print("Precision {:.2f}".format(precision2))

>>

--my model--

Recall 0.98

Precision 0.73

--VGG16--

Recall 1.00

Precision 0.71

結果

今回重要視したいRecallは、VGG-16で転移学習したモデルで100%、自作モデルは98%であった。

参考資料

Kerasで始めるModel作成方法の違い

Kerasでちょっと難しいModelやTrainingを実装するときのTips

Kerasによる2クラス分類(Pima Indians Diabetes)

具体例で覚える畳み込み計算(Conv2D、DepthwiseConv2D、SeparableConv2D、Conv2DTranspose)

混同行列(Confusion Matrix) とは 〜 2 値分類の機械学習のクラス分類について

公式

モデル

モデルについて

Functional APIのガイド

ModelクラスAPI

SequentialモデルでKerasを始めてみよう

SequentialモデルAPI