概要

Kaggleの血液検査データセットを使ってデータ分析をしてみた。

いろいろ試してみた結果、量が多くなったので分割します。

今回はその⑤ (全5回)

他の回はこちらから

①~モデルの性能比較をしてみた~

②~特徴選択をして、重要度を可視化してみた~

③~アンサンブル学習をしてみた~

④~外れ値の取り扱い~

使用したデータセット:Patient Treatment Classification (Electronic Health Record Dataset)

インドネシアの病院で集められた血液検査の結果から、患者に治療が必要かどうかを判定する

モデル

今回使用したモデルは以下の6種類

- XGBoost

- ランダムフォレスト

- ロジスティック回帰

- 決定木

- k-近傍法

データ確認

データは血液検査の結果。

データの検査方法が分からなかったため、正常値は2016年度国立がん研究センターのデータをお借りしました。(※ 正常値は測定方法などにより若干のばらつきあり)

| 列No | 検査値 | 和訳 | 正常値 | 高いと | 低いと |

|---|---|---|---|---|---|

| 1 | HAEMATOCRIT | ヘマトクリット | 男性:40.7~50.1 %, 女性:35.1~44.4 % |

多血症など | 貧血など |

| 2 | HAEMOGLOBINS | ヘモグロビン | 男性:13.7~16.8 g/dl, 女性:11.6~14.8 g/dl |

多血症など | 貧血など |

| 3 | ERYTHROCYTE | 赤血球 | 男性:4.35~5.55 x 106 /μL, 女性:3.86~4.92 x 106 /μL |

多血症など | 貧血など |

| 4 | LEUCOCYTE | 白血球 | 3.3~8.6 x 103/μL | 感染症・白血病など | 一部感染症・膠原病・貧血など |

| 5 | THROMBOCYTE | 血小板 | 158~348 x 103/μL | 血小板血症・白血病・多血症など | 貧血・紫斑病など |

| 6 | MCH | 平均赤血球ヘモグロビン量 | 27.5~33.2 pg | 巨赤芽球性貧血など | 鉄欠乏性貧血など |

| 7 | MCHC | 平均赤血球ヘモグロビン濃度 | 31.7~35.3 g/dL | 脱水・多血症など | 貧血など |

| 8 | MCV | 平均赤血球容積 | 83.6~98.2 fL | 巨赤芽球性貧血など | 鉄欠乏性貧血など |

| 9 | AGE | 年齢 | ― | ― | ― |

| 10 | SEX | 性別 | ― | ― | ― |

| 11 | SOURCE | 治療が必要か | out: 治療不要, in: 治療が必要 | ― | ― |

前処理は①と同じなので割愛

不均衡データの処理

from imblearn.over_sampling import SMOTE

from decimal import Decimal, ROUND_HALF_UP

import itertools

print(df_samp.shape)

print(df_samp["SOURCE"].value_counts())

>>

(4412, 35)

0 2628

1 1784

Name: SOURCE, dtype: int64

out: :2628データ、in: 1784データなので不均衡データとして処理する。

今回はSMOTEでOver-Samplingする。

SMOTEで水増し

data_y = df_samp["SOURCE"]

data_X = df_samp.drop("SOURCE",axis=1)

train_X, test_X, train_y, test_y = train_test_split(data_X, data_y, test_size=0.1)

sm = SMOTE()

x_resampled, y_resampled = sm.fit_resample(data_X, data_y.tolist())

# y_resampled.value_counts()

y_resampled = pd.DataFrame(y_resampled, columns=["SOURCE"])

y_resampled.value_counts()

>>

SOURCE

0 2628

1 2628

dtype: int64

水増ししたデータの性別が少数になったため、四捨五入して整数にする

#0:男性 1:女性

int_column_list = ["SEX"]

for my_col in x_resampled.columns:

# 整数にする

if my_col in int_column_list:

x_resampled[my_col] = x_resampled[my_col].map(lambda x: int(Decimal(str(x)).quantize(Decimal('0'), rounding=ROUND_HALF_UP)))

学習と結果

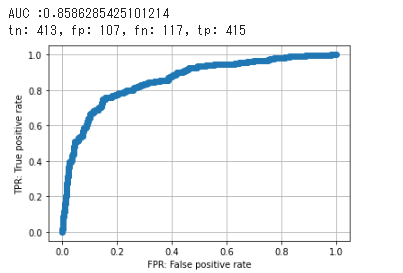

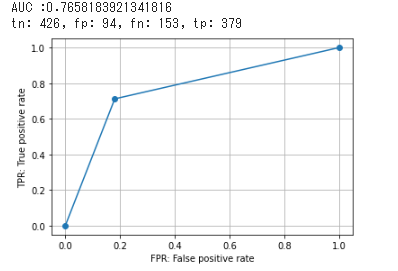

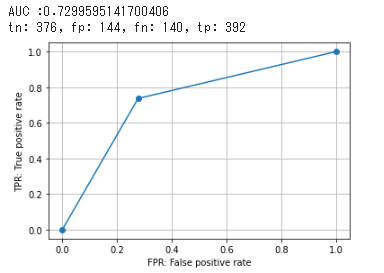

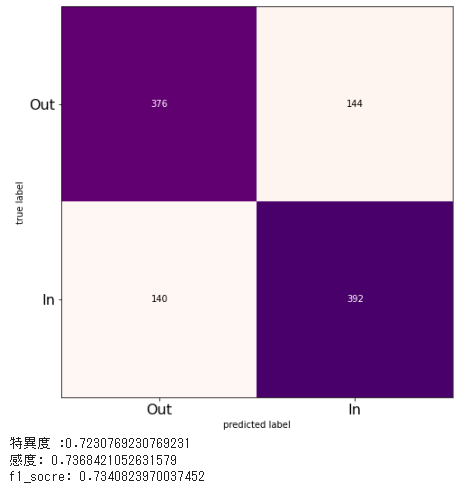

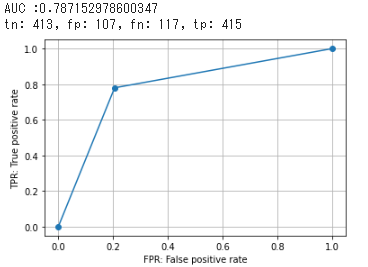

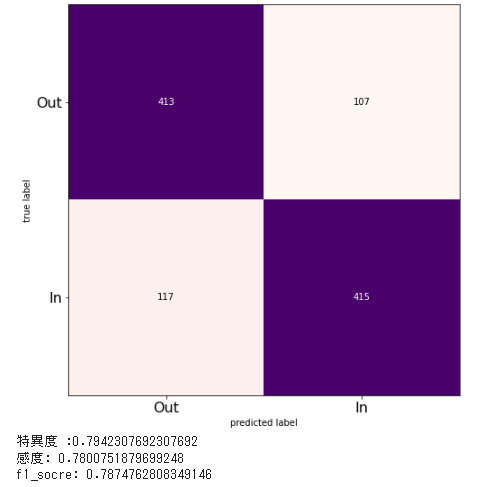

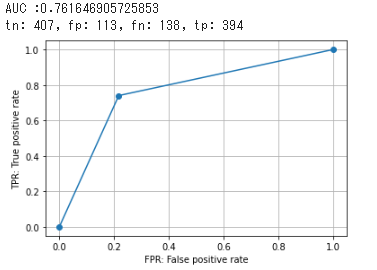

XGBoost

# 変数を訓練用とテスト用に分割

train_X, test_X, train_y, test_y = train_test_split(x_resampled, y_resampled, test_size=0.2, random_state=42)

train_X, val_X, train_y, val_y = train_test_split(train_X, train_y, test_size=0.1, random_state=42)

# GBDTで学習

dtrain = xgb.DMatrix(train_X, label=train_y)

dval = xgb.DMatrix(val_X, label=val_y)

dtest = xgb.DMatrix(test_X)

params = {'objective': 'binary:logistic', 'silent':1, 'random_state': 71, 'eval_metric': 'auc'}

num_round = 25

watchlist = [(dtrain, 'train'), (dval, 'eval')]

GBDT_model_SM = xgb.train(params, dtrain, num_round, evals=watchlist)

val_pred = GBDT_model_SM.predict(dval)

val_y = val_y.values.tolist()

val_y = list(itertools.chain.from_iterable(val_y))

score_val = log_loss(val_y, val_pred)

print(f'logloss_val: {score_val: 4f}')

pred_y = GBDT_model_SM.predict(dtest)

test_y = test_y.values.tolist()

pred_y = pred_y.tolist()

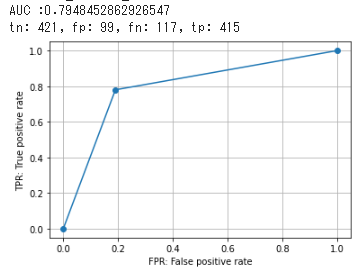

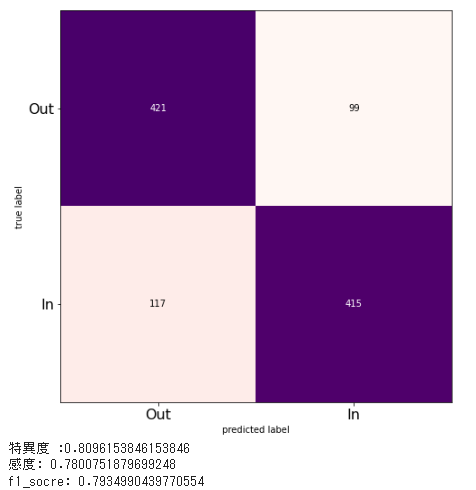

AUC_GBDT_SM = create_ROCcurve(test_y, pred_y)

tn_GBDT_SM, fp_GBDT_SM, fn_GBDT_SM, tp_GBDT_SM, specificity_GBDT_SM, recall_GBDT_SM, f1_GBDT_SM = create_cm(test_y, pred_y, True)

accuracy_GBDT_SM = accuracy_score(test_y, pred_y)

print(f'acc: {accuracy_GBDT_SM}')

AUC_list.append(["AUC_GBDT_SM:", np.round(AUC_GBDT_SM, 3)])

specificity_list.append(["specificity_GBDT_SM:", np.round(specificity_GBDT_SM, 3)])

recall_list.append(["recall_GBDT_SM:", np.round(recall_GBDT_SM, 3)])

f1_score_list.append(["f1_GBDT_SM:", np.round(f1_GBDT_SM, 3)])

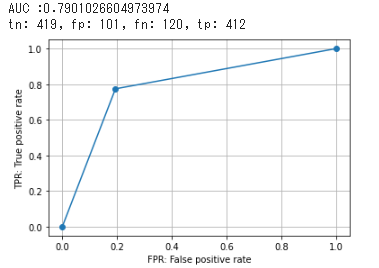

結果

ランダムフォレスト

train_X, test_X, train_y, test_y = train_test_split(x_resampled, y_resampled, test_size=0.2, random_state=42)

train_X, val_X, train_y, val_y = train_test_split(train_X, train_y, test_size=0.1, random_state=42)

train_X = np.asarray(train_X).astype(np.float32)

train_y = np.asarray(train_y).astype(np.float32)

val_X = np.asarray(val_X).astype(np.float32)

val_y = np.asarray(val_y).astype(np.float32)

test_X = np.asarray(test_X).astype(np.float32)

test_y = np.asarray(test_y).astype(np.float32)

RF_model_SM = RandomForestClassifier(max_depth=15, n_estimators=82)

RF_model_SM.fit(train_X, train_y)

RF_model_SM.score(test_X, test_y)

pred_y = RF_model_SM.predict(test_X)

AUC_RF_SM = create_ROCcurve(test_y, pred_y)

tn_RF_SM, fp_RF_SM, fn_RF_SM, tp_RF_SM, specificity_RF_SM, recall_RF_SM, f1_RF_SM = create_cm(test_y, pred_y)

AUC_list.append(["AUC_RF_SM", np.round(AUC_RF_SM, 3)])

specificity_list.append(["specificity_RF_SM", np.round(specificity_RF_SM, 3)])

recall_list.append(["recall_RF_SM", np.round(recall_RF_SM, 3)])

f1_score_list.append(["f1_RF_SM", np.round(f1_RF_SM, 3)])

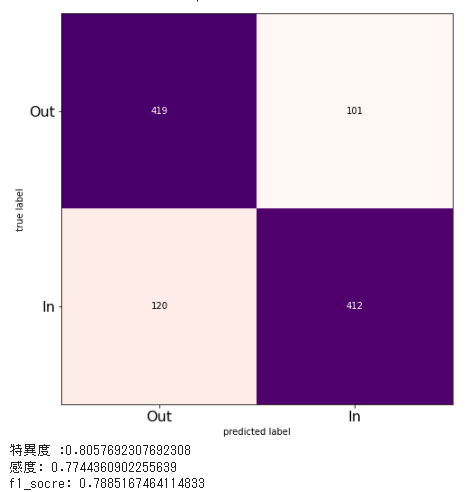

結果

ロジスティック回帰

# ロジスティック回帰

LR_model_SM = LogisticRegression(max_iter=4500, C=79.42047819026727, random_state=40)

LR_model_SM.fit(train_X, train_y)

LR_model_SM.score(test_X, test_y)

pred_y = LR_model_SM.predict(test_X)

AUC_LR_SM = create_ROCcurve(test_y, pred_y)

tn_LR_SM, fp_LR_SM, fn_LR_SM, tp_LR_SM, specificity_LR_SM, recall_LR_SM, f1_LR_SM = create_cm(test_y, pred_y)

AUC_list.append(["AUC_LR_SM", np.round(AUC_LR_SM, 3)])

specificity_list.append(["specificity_LR_SM", np.round(specificity_LR_SM, 3)])

recall_list.append(["recall_LR_SM", np.round(recall_LR_SM, 3)])

f1_score_list.append(["f1_LR_SM", np.round(f1_LR_SM, 3)])

結果

決定木

DT_model_SM = DecisionTreeClassifier(max_depth=6)

DT_model_SM.fit(train_X, train_y)

DT_model_SM.score(test_X, test_y)

pred_y = DT_model_SM.predict(test_X)

AUC_DT_SM = create_ROCcurve(test_y, pred_y)

tn_DT_SM, fp_DT_SM, fn_DT_SM, tp_DT_SM, specificity_DT_SM, recall_DT_SM, f1_DT_SM = create_cm(test_y, pred_y)

AUC_list.append(["AUC_DT_SM", np.round(AUC_DT_SM, 3)])

specificity_list.append(["specificity_DT_SM", np.round(specificity_DT_SM, 3)])

recall_list.append(["recall_DT_SM", np.round(recall_DT_SM, 3)])

f1_score_list.append(["f1_DT_SM", np.round(f1_DT_SM, 3)])

結果

k-近傍法

KN_model_SM = KNeighborsClassifier(n_neighbors=15)

KN_model_SM.fit(train_X, train_y)

KN_model_SM.score(test_X, test_y)

pred_y = KN_model_SM.predict(test_X)

AUC_KN_SM = create_ROCcurve(test_y, pred_y)

tn_KN_SM, fp_KN_SM, fn_KN_SM, tp_KN_SM, specificity_KN_SM, recall_KN_SM, f1_KN_SM = create_cm(test_y, pred_y)

AUC_list.append(["AUC_KN_SM", np.round(AUC_KN_SM, 3)])

specificity_list.append(["specificity_KN_SM", np.round(specificity_KN_SM, 3)])

recall_list.append(["recall_KN_SM", np.round(recall_KN_SM, 3)])

f1_score_list.append(["f1_KN_SM", np.round(f1_KN_SM, 3)])

結果

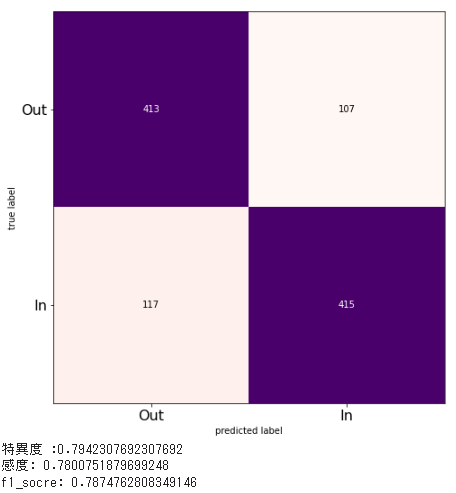

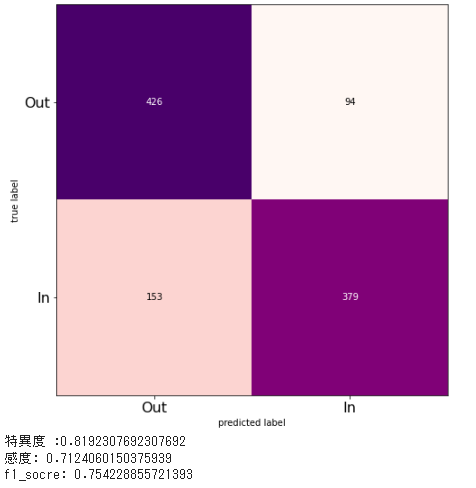

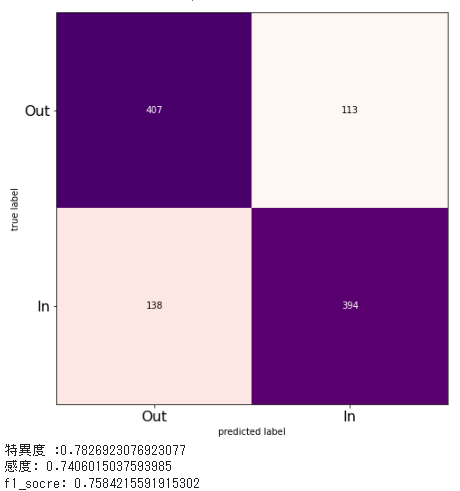

スタッキング(ランダムフォレスト、ロジスティック回帰、決定木)

estimators = [('DT', DT_model_SM), ('RF', RF_model_SM), ('LR', LR_model_SM)]

stk_clf_SM = StackingClassifier(estimators=estimators, final_estimator=LogisticRegression(solver='liblinear'))

stk_clf_SM.fit(train_X, train_y)

stk_clf_SM.score(test_X, test_y)

pred_y = stk_clf_SM.predict(test_X)

# モデル評価

AUC_STK_SM = create_ROCcurve(test_y, pred_y)

tn_STK_SM, fp_STK_SM, fn_STK_SM, tp_STK_SM, specificity_STK_SM, recall_STK_SM, f1_STK_SM = create_cm(test_y, pred_y, True)

AUC_list.append(["AUC_Stacking_SM", np.round(AUC_STK_SM, 3)])

specificity_list.append(["specificity_Stacking_SM", np.round(specificity_STK_SM, 3)])

recall_list.append(["recall_Stacking_SM", np.round(recall_STK_SM, 3)])

f1_score_list.append(["f1_Stacking_SM", np.round(f1_STK_SM, 3)])

結果

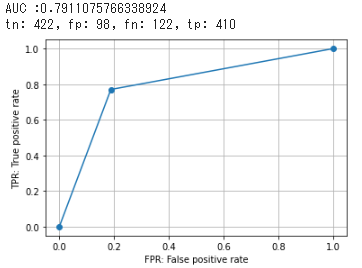

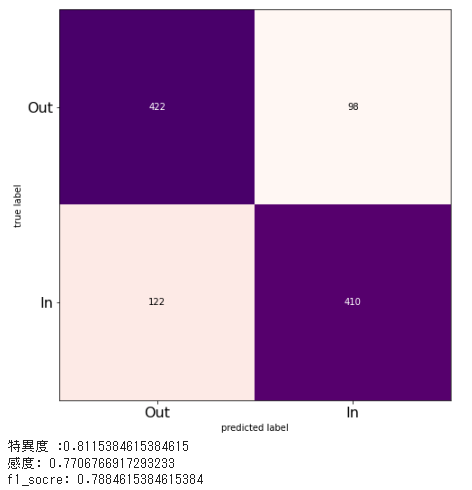

スタッキング2(ランダムフォレスト、ロジスティック回帰、決定木、k-近傍法)

estimators = [('DT', DT_model_SM), ('RF', RF_model_SM), ('LR', LR_model_SM), ('KN', KN_model_SM)]

stk_clf_SM2 = StackingClassifier(estimators=estimators, final_estimator=LogisticRegression(solver='liblinear'))

stk_clf_SM2.fit(train_X, train_y)

stk_clf_SM2.score(test_X, test_y)

pred_y = stk_clf_SM2.predict(test_X)

# モデル評価

AUC_STK_SM2 = create_ROCcurve(test_y, pred_y)

tn_STK_SM2, fp_STK_SM2, fn_STK_SM2, tp_STK_SM2, specificity_STK_SM2, recall_STK_SM2, f1_STK_SM2 = create_cm(test_y, pred_y, True)

AUC_list.append(["AUC_Stacking_SM2", np.round(AUC_STK_SM2, 3)])

specificity_list.append(["specificity_Stacking_SM2", np.round(specificity_STK_SM2, 3)])

recall_list.append(["recall_Stacking_SM2", np.round(recall_STK_SM2, 3)])

f1_score_list.append(["f1_Stacking_SM2", np.round(f1_STK_SM2, 3)])

結果

外れ値除外 & 不均衡データ解消

data_y_LOF = df_samp_LOF["SOURCE"]

data_X_LOF = df_samp_LOF.drop("SOURCE",axis=1)

train_X, test_X, train_y, test_y = train_test_split(data_X, data_y, test_size=0.1)

sm_LOF = SMOTE()

x_resampled_LOF, y_resampled_LOF = sm_LOF.fit_resample(data_X, data_y.tolist())

y_resampled_LOF = pd.DataFrame(y_resampled_LOF, columns=["SOURCE"])

y_resampled_LOF.value_counts()

int_column_list = ["SEX"]

for my_col in x_resampled_LOF.columns:

# 整数にする

if my_col in int_column_list:

x_resampled_LOF[my_col] = x_resampled_LOF[my_col].map(lambda x: int(Decimal(str(x))

.quantize(Decimal('0'), rounding=ROUND_HALF_UP)))

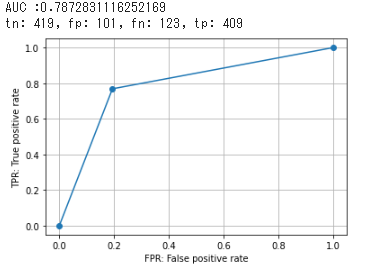

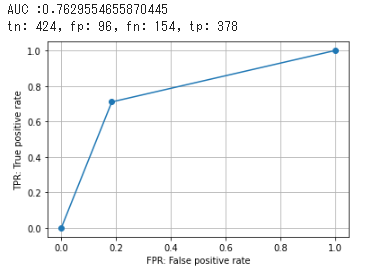

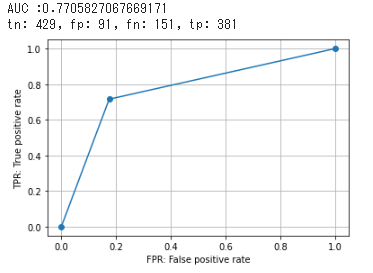

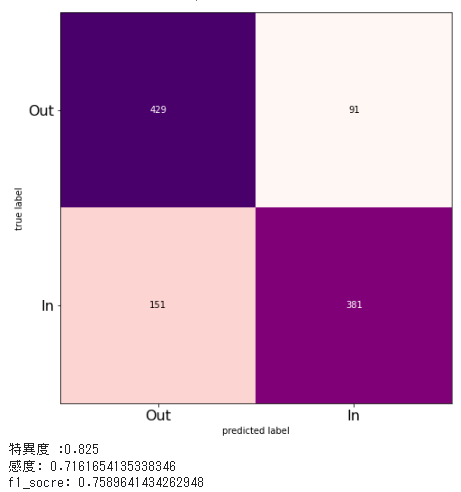

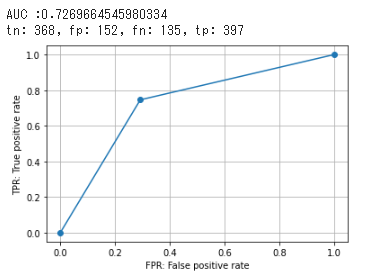

XGBoost

# 変数を訓練用とテスト用に分割

train_X, test_X, train_y, test_y = train_test_split(x_resampled_LOF, y_resampled_LOF, test_size=0.2, random_state=42)

train_X, val_X, train_y, val_y = train_test_split(train_X, train_y, test_size=0.1, random_state=42)

# GBDTで学習

dtrain = xgb.DMatrix(train_X, label=train_y)

dval = xgb.DMatrix(val_X, label=val_y)

dtest = xgb.DMatrix(test_X)

params = {'objective': 'binary:logistic', 'silent':1, 'random_state': 71, 'eval_metric': 'auc'}

num_round = 25

watchlist = [(dtrain, 'train'), (dval, 'eval')]

GBDT_model_SM_LOF = xgb.train(params, dtrain, num_round, evals=watchlist)

val_pred = GBDT_model_SM_LOF.predict(dval)

val_y = val_y.values.tolist()

val_y = list(itertools.chain.from_iterable(val_y))

score_val = log_loss(val_y, val_pred)

print(f'logloss_val: {score_val: 4f}')

pred_y = GBDT_model_SM_LOF.predict(dtest)

test_y = test_y.values.tolist()

pred_y = pred_y.tolist()

AUC_GBDT_SM_LOF = create_ROCcurve(test_y, pred_y)

tn_GBDT_SM_LOF, fp_GBDT_SM_LOF, fn_GBDT_SM_LOF, tp_GBDT_SM_LOF, specificity_GBDT_SM_LOF, recall_GBDT_SM_LOF, f1_GBDT_SM_LOF = create_cm(test_y, pred_y, True)

accuracy_GBDT_SM_LOF = accuracy_score(test_y, pred_y)

print(f'acc: {accuracy_GBDT_SM_LOF}')

AUC_list.append(["AUC_GBDT_SM_LOF:", np.round(AUC_GBDT_SM_LOF, 3)])

specificity_list.append(["specificity_GBDT_SM_LOF:", np.round(specificity_GBDT_SM_LOF, 3)])

recall_list.append(["recall_GBDT_SM_LOF:", np.round(recall_GBDT_SM_LOF, 3)])

f1_score_list.append(["f1_GBDT_SM_LOF:", np.round(f1_GBDT_SM_LOF, 3)])

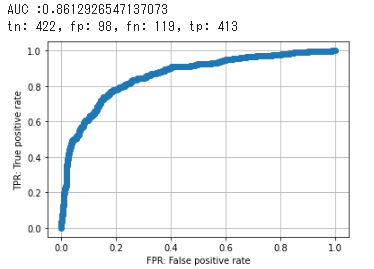

結果

ランダムフォレスト

train_X, test_X, train_y, test_y = train_test_split(x_resampled, y_resampled, test_size=0.2, random_state=42)

train_X, val_X, train_y, val_y = train_test_split(train_X, train_y, test_size=0.1, random_state=42)

train_X = np.asarray(train_X).astype(np.float32)

train_y = np.asarray(train_y).astype(np.float32)

val_X = np.asarray(val_X).astype(np.float32)

val_y = np.asarray(val_y).astype(np.float32)

test_X = np.asarray(test_X).astype(np.float32)

test_y = np.asarray(test_y).astype(np.float32)

test_y = test_y.values.tolist()

pred_y = pred_y.tolist()

AUC_GBDT_SM_LOF = create_ROCcurve(test_y, pred_y)

tn_GBDT_SM_LOF, fp_GBDT_SM_LOF, fn_GBDT_SM_LOF, tp_GBDT_SM_LOF, specificity_GBDT_SM_LOF, recall_GBDT_SM_LOF, f1_GBDT_SM_LOF = create_cm(test_y, pred_y, True)

accuracy_GBDT_SM_LOF = accuracy_score(test_y, pred_y)

print(f'acc: {accuracy_GBDT_SM_LOF}')

AUC_list.append(["AUC_GBDT_SM_LOF:", np.round(AUC_GBDT_SM_LOF, 3)])

specificity_list.append(["specificity_GBDT_SM_LOF:", np.round(specificity_GBDT_SM_LOF, 3)])

recall_list.append(["recall_GBDT_SM_LOF:", np.round(recall_GBDT_SM_LOF, 3)])

f1_score_list.append(["f1_GBDT_SM_LOF:", np.round(f1_GBDT_SM_LOF, 3)])

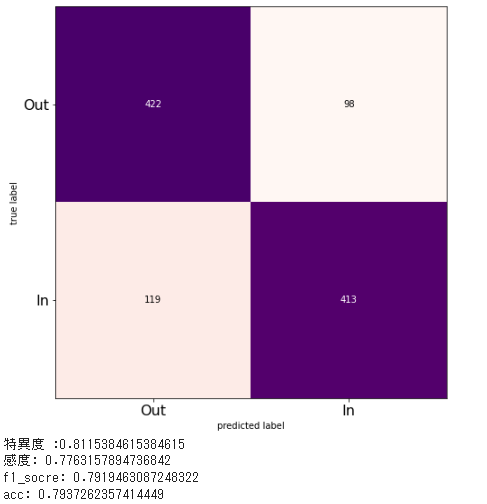

結果

ロジスティック回帰

LR_model_SM_LOF = LogisticRegression(max_iter=4500, C=79.42047819026727, random_state=40)

LR_model_SM_LOF.fit(train_X, train_y)

LR_model_SM_LOF.score(test_X, test_y)

pred_y = LR_model_SM_LOF.predict(test_X)

AUC_LR_SM_LOF = create_ROCcurve(test_y, pred_y)

tn_LR_SM_LOF, fp_LR_SM_LOF, fn_LR_SM_LOF, tp_LR_SM_LOF, specificity_LR_SM_LOF, recall_LR_SM_LOF, f1_LR_SM_LOF = create_cm(test_y, pred_y)

AUC_list.append(["AUC_LR_SM_LOF", np.round(AUC_LR_SM_LOF, 3)])

specificity_list.append(["specificity_LR_SM_LOF", np.round(specificity_LR_SM_LOF, 3)])

recall_list.append(["recall_LR_SM_LOF", np.round(recall_LR_SM_LOF, 3)])

f1_score_list.append(["f1_LR_SM_LOF", np.round(f1_LR_SM_LOF, 3)])

結果

決定木

DT_model_SM_LOF = DecisionTreeClassifier(max_depth=6)

DT_model_SM_LOF.fit(train_X, train_y)

DT_model_SM_LOF.score(test_X, test_y)

pred_y = DT_model_SM_LOF.predict(test_X)

AUC_DT_SM_LOF = create_ROCcurve(test_y, pred_y)

tn_DT_SM_LOF, fp_DT_SM_LOF, fn_DT_SM_LOF, tp_DT_SM_LOF, specificity_DT_SM_LOF, recall_DT_SM_LOF, f1_DT_SM_LOF = create_cm(test_y, pred_y)

AUC_list.append(["AUC_DT_SM_LOF", np.round(AUC_DT_SM_LOF, 3)])

specificity_list.append(["specificity_DT_SM_LOF", np.round(specificity_DT_SM_LOF, 3)])

recall_list.append(["recall_DT_SM_LOF", np.round(recall_DT_SM_LOF, 3)])

f1_score_list.append(["f1_DT_SM_LOF", np.round(f1_DT_SM_LOF, 3)])

結果

k-近傍法

KN_model_SM_LOF = KNeighborsClassifier(n_neighbors=15)

KN_model_SM_LOF.fit(train_X, train_y)

KN_model_SM_LOF.score(test_X, test_y)

pred_y = KN_model_SM_LOF.predict(test_X)

AUC_KN_SM_LOF = create_ROCcurve(test_y, pred_y)

tn_KN_SM_LOF, fp_KN_SM_LOF, fn_KN_SM_LOF, tp_KN_SM_LOF, specificity_KN_SM_LOF, recall_KN_SM_LOF, f1_KN_SM_LOF = create_cm(test_y, pred_y)

AUC_list.append(["AUC_KN_SM_LOF", np.round(AUC_KN_SM_LOF, 3)])

specificity_list.append(["specificity_KN_SM_LOF", np.round(specificity_KN_SM_LOF, 3)])

recall_list.append(["recall_KN_SM_LOF", np.round(recall_KN_SM_LOF, 3)])

f1_score_list.append(["f1_KN_SM_LOF", np.round(f1_KN_SM_LOF, 3)])

結果

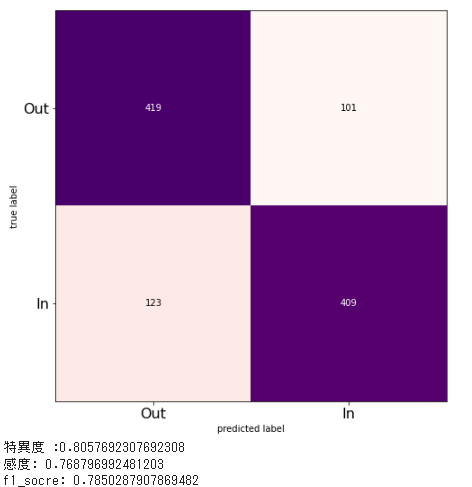

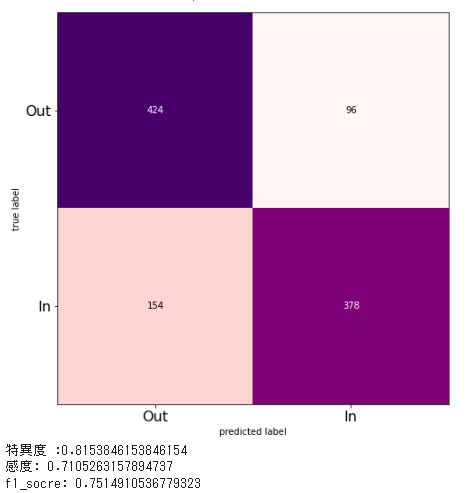

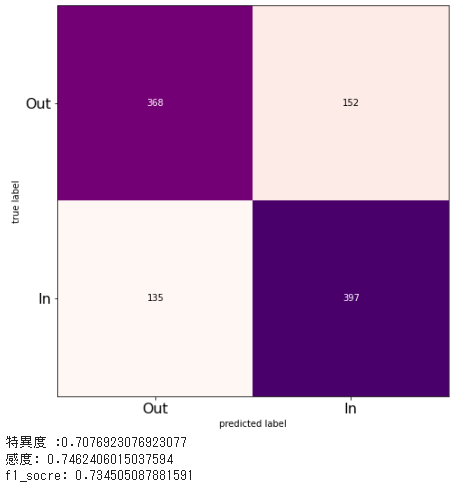

スタッキング2(ランダムフォレスト、ロジスティック回帰、決定木、k-近傍法)

estimators = [('DT', DT_model_SM_LOF), ('RF', RF_model_SM_LOF), ('LR', LR_model_SM_LOF), ('KN', KN_model_SM_LOF)]

stk_clf_SM_LOF = StackingClassifier(estimators=estimators, final_estimator=LogisticRegression(solver='liblinear'))

stk_clf_SM_LOF.fit(train_X, train_y)

stk_clf_SM_LOF.score(test_X, test_y)

pred_y = stk_clf_SM_LOF.predict(test_X)

results_SM_LOF = model_selection.cross_val_score(stk_clf, test_X, test_y, cv = kfold)

scores[('4.Stacking', 'train_score')] = results_SM_LOF.mean()

scores[('4.Stacking', 'test_score')] = stk_clf_SM_LOF.score(test_X, test_y)

# モデル評価

print(pd.Series(scores).unstack())

AUC_STK_SM_LOF = create_ROCcurve(test_y, pred_y)

tn_STK_SM_LOF, fp_STK_SM_LOF, fn_STK_SM_LOF, tp_STK_SM_LOF, specificity_STK_SM_LOF, recall_STK_SM_LOF, f1_STK_SM_LOF = create_cm(test_y, pred_y, True)

AUC_list.append(["AUC_Stacking_SM_LOF", np.round(AUC_STK_SM_LOF, 3)])

specificity_list.append(["specificity_Stacking_SM_LOF", np.round(specificity_STK_SM_LOF, 3)])

recall_list.append(["recall_Stacking_SM_LOF", np.round(recall_STK_SM_LOF, 3)])

f1_score_list.append(["f1_Stacking_SM_LOF", np.round(f1_STK_SM_LOF, 3)])

結果

評価指標

AUC_list = sorted(AUC_list, reverse=True, key=lambda x: x[1])

specificity_list = sorted(specificity_list, reverse=True, key=lambda x: x[1])

recall_list = sorted(recall_list, reverse=True, key=lambda x: x[1])

f1_score_list = sorted(f1_score_list, reverse=True, key=lambda x: x[1])

print("---ROC_AUC---")

for i in range(len(AUC_list)):

print(AUC_list[i])

print("---特異度---")

for i in range(len(specificity_list)):

print(specificity_list[i])

print("----感度----")

for i in range(len(recall_list)):

print(recall_list[i])

print("----f1値----")

for i in range(len(f1_score_list)):

print(f1_score_list[i])

| ROC_AUC | 特異度 | 感度 | f1値 |

|---|---|---|---|

| ['AUC_GBDT_SM_LOF:', 0.861] | ['specificity_RF', 0.859] | ['recall_RF_SM_LOF', 0.78] | ['f1_Stacking_SM_LOF', 0.793] |

| ['AUC_GBDT_SM:', 0.859] | ['specificity_GBDT:', 0.855] | ['recall_Stacking_SM_LOF', 0.78] | ['f1_GBDT_SM_LOF:', 0.792] |

| ['AUC_GBDT:', 0.816] | ['specificity_Stacking', 0.853] | ['recall_GBDT_SM:', 0.78] | ['f1_Stacking_SM2', 0.789] |

| ['AUC_Stacking_SM_LOF', 0.795] | ['specificity_LR', 0.837] | ['recall_GBDT_SM_LOF:', 0.776] | ['f1_Stacking_SM', 0.788] |

| ['AUC_Stacking_SM', 0.791] | ['specificity_LR_SM_LOF', 0.825] | ['recall_Stacking_SM2', 0.774] | ['f1_RF_SM_LOF', 0.787] |

| ['AUC_Stacking_SM2', 0.79] | ['specificity_LR_SM', 0.819] | ['recall_Stacking_SM', 0.771] | ['f1_GBDT_SM:', 0.787] |

| ['AUC_RF_SM', 0.787] | ['specificity_DT_SM', 0.815] | ['recall_RF_SM', 0.769] | ['f1_RF_SM', 0.785] |

| ['AUC_RF_SM_LOF', 0.787] | ['specificity_KN', 0.813] | ['recall_KN_SM_LOF', 0.746] | ['f1_LR_SM_LOF', 0.759] |

| ['AUC_LR_SM_LOF', 0.771] | ['specificity_Stacking_SM', 0.812] | ['recall_DT_SM_LOF', 0.741] | ['f1_DT_SM_LOF', 0.758] |

| ['AUC_LR_SM', 0.766] | ['specificity_GBDT_SM_LOF:', 0.812] | ['recall_KN_SM', 0.737] | ['f1_LR_SM', 0.754] |

| ['AUC_DT_SM', 0.763] | ['specificity_Stacking_SM_LOF', 0.81] | ['recall_LR_SM_LOF', 0.716] | ['f1_DT_SM', 0.751] |

| ['AUC_DT_SM_LOF', 0.762] | ['specificity_RF_SM', 0.806] | ['recall_LR_SM', 0.712] | ['f1_KN_SM_LOF', 0.735] |

| ['AUC_RF', 0.755] | ['specificity_Stacking_SM2', 0.806] | ['recall_DT_SM', 0.711] | ['f1_KN_SM', 0.734] |

| ['AUC_Stacking', 0.755] | ['specificity_RF_SM_LOF', 0.794] | ['recall_Stacking', 0.658] | ['f1_Stacking', 0.708] |

| ['AUC_KN_SM', 0.73] | ['specificity_GBDT_SM:', 0.794] | ['recall_RF', 0.652] | ['f1_RF', 0.707] |

| ['AUC_KN_SM_LOF', 0.727] | ['specificity_DT_SM_LOF', 0.783] | ['recall_GBDT:', 0.634] | ['f1_GBDT:', 0.692] |

| ['AUC_LR', 0.723] | ['specificity_DT', 0.737] | ['recall_DT', 0.618] | ['f1_LR', 0.666] |

| ['AUC_KN', 0.702] | ['specificity_KN_SM', 0.723] | ['recall_LR', 0.61] | ['f1_KN', 0.641] |

| ['AUC_DT', 0.677] | ['specificity_KN_SM_LOF', 0.708] | ['recall_KN', 0.591] | ['f1_DT', 0.625] |

考察

不均衡データをオーバーサンプリングすることで、感度に大幅な改善が見られた。

またStacikngやGBDTなどのアンサンブル学習は単体での学習結果よりもよい結果が得られた。

加えて、外れ値を除外することで精度が改善されることがわかった。

参考資料

不均衡データの扱い方と評価指標!SmoteをPythonで実装して検証していく!

機械学習における不均衡データの扱いについて解説