In this post, I inspected the data stored in etcd on a minikube environment, to deepen my understanding of how a Kubernetes cluster works.

Although I call it a Kubernetes cluster, it is a standalone (single-node) setup, so this is strictly for learning purposes...

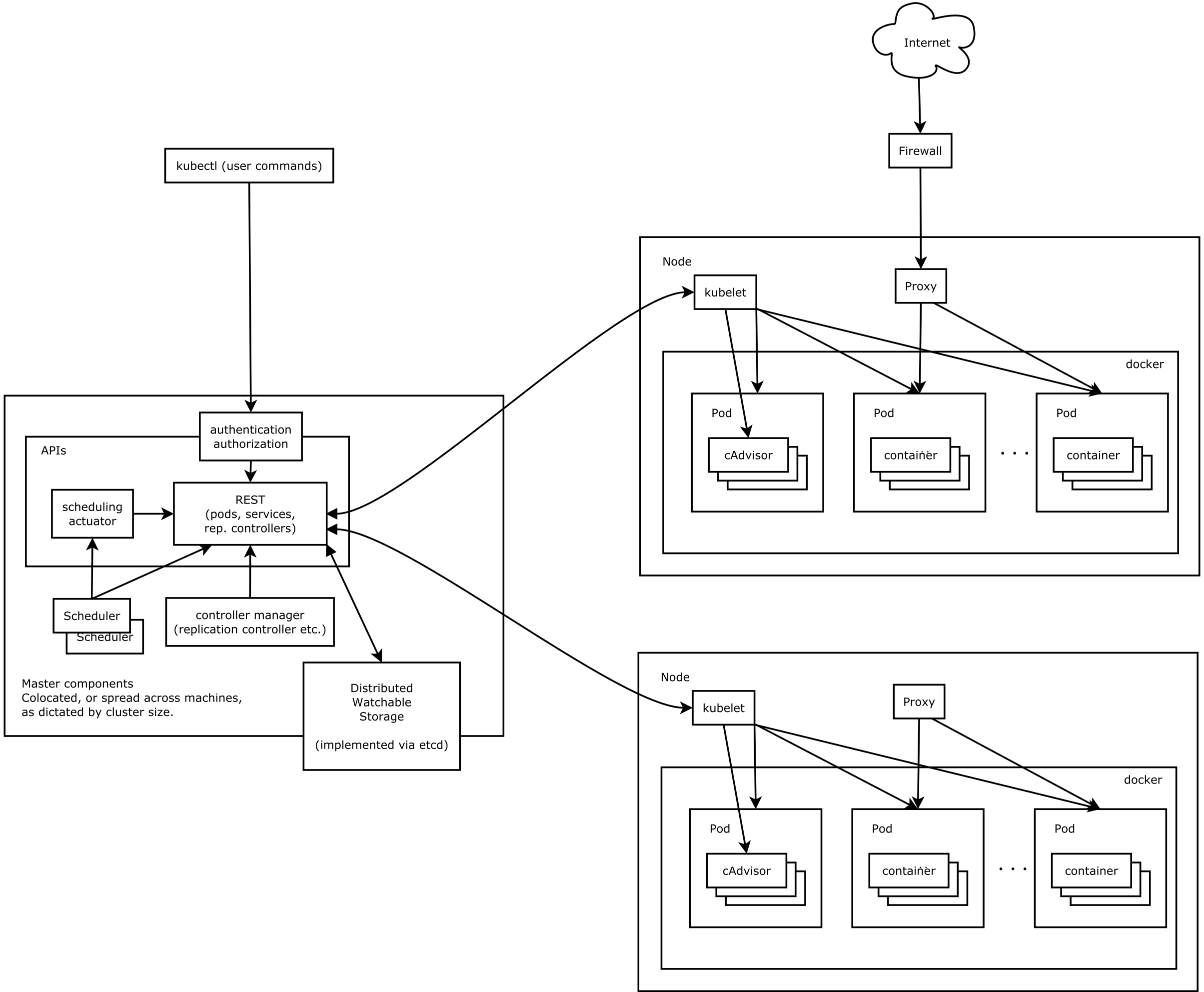

This diagram gives a good overview of the Kubernetes cluster environment.

[ Quoted from Kubernetes architecture ]

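The tricky part this time was that, with Kubernetes v1.10.0, accessing the etcd v3 data store from outside apparently requires SSL (TLS) certificate authentication, and getting that configured took quite a bit of effort.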

Also, to jump straight to the conclusion: "kube-etcd-helper" turned out to be extremely useful for inspecting the data held in etcd.

https://github.com/yamamoto-febc/kube-etcd-helper

◾️Preparing the Kubernetes cluster environment

(1) Setting up the Kubernetes cluster

- Install VirtualBox beforehand

- Install minikube

```
$ brew cask install minikube
```

- Check which Kubernetes versions minikube can run

```
$ minikube get-k8s-versions
The following Kubernetes versions are available when using the localkube bootstrapper:
  - v1.10.0
  - v1.9.4
  - v1.9.0
  - v1.8.0
  - v1.7.5
... (snip)
```

- Start minikube

```
$ minikube --vm-driver=virtualbox --kubernetes-version=v1.10.0 start
Starting local Kubernetes v1.10.0 cluster...
Starting VM...
Downloading Minikube ISO
 153.08 MB / 153.08 MB [============================================] 100.00% 0s
Getting VM IP address...
Moving files into cluster...
Downloading kubeadm v1.10.0
Downloading kubelet v1.10.0
Finished Downloading kubelet v1.10.0
Finished Downloading kubeadm v1.10.0
Setting up certs...
Connecting to cluster...
Setting up kubeconfig...
Starting cluster components...
Kubectl is now configured to use the cluster.
Loading cached images from config file.
```

Starting minikube this way appears to generate the kubeconfig file (kubectl's cluster configuration) automatically:

```
apiVersion: v1
clusters:
- cluster:
    certificate-authority: /Users/ttsubo/.minikube/ca.crt
    server: https://192.168.99.100:8443
  name: minikube
contexts:
- context:
    cluster: minikube
    user: minikube
  name: minikube
current-context: minikube
kind: Config
preferences: {}
users:
- name: minikube
  user:
    client-certificate: /Users/ttsubo/.minikube/client.crt
    client-key: /Users/ttsubo/.minikube/client.key
```

- Stop minikube once, just to try it

```
$ minikube stop
Stopping local Kubernetes cluster...
Machine stopped.
```

- Start minikube again

```
$ minikube start
Starting local Kubernetes v1.10.0 cluster...
Starting VM...
Getting VM IP address...
Moving files into cluster...
Setting up certs...
Connecting to cluster...
Setting up kubeconfig...
Starting cluster components...
Kubectl is now configured to use the cluster.
Loading cached images from config file.
```

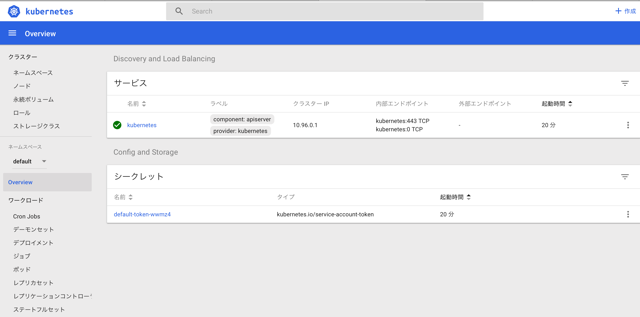

- Try out the Kubernetes Dashboard

```
$ minikube service list
|-------------|----------------------|-----------------------------|
|  NAMESPACE  |         NAME         |             URL             |
|-------------|----------------------|-----------------------------|
| default     | kubernetes           | No node port                |
| kube-system | kube-dns             | No node port                |
| kube-system | kubernetes-dashboard | http://192.168.99.100:30000 |
|-------------|----------------------|-----------------------------|
```

- Open the kubernetes-dashboard URL in a web browser

(2) Check the Kubernetes cluster configuration with kubectl

- First, look at the cluster configuration

```
$ kubectl cluster-info
Kubernetes master is running at https://192.168.99.100:8443
KubeDNS is running at https://192.168.99.100:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
```

This shows that the Kubernetes master and KubeDNS are running in the cluster.

- Confirm that the cluster is healthy

```
$ kubectl get componentstatuses
NAME                 STATUS    MESSAGE              ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health": "true"}
```

This confirms that etcd is running normally in the cluster.

- Check the state of the Kubernetes nodes

```
$ kubectl get nodes
NAME       STATUS    ROLES     AGE       VERSION
minikube   Ready     master    8m        v1.10.0
```

The cluster contains only the single "minikube" node.

minikube is meant for learning Kubernetes, so it does not actually build a multi-node cluster; everything runs in a standalone configuration.

- Check kube-proxy

```
$ kubectl get daemonSets --namespace=kube-system kube-proxy
NAME         DESIRED   CURRENT   READY     UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
kube-proxy   1         1         1         1            1           <none>          9m
```

- Check Kubernetes DNS

```
$ kubectl get deployments --namespace=kube-system kube-dns
NAME       DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
kube-dns   1         1         1            1           9m
```

- Also check the kube-dns Service

```
$ kubectl get services --namespace=kube-system kube-dns
NAME       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)         AGE
kube-dns   ClusterIP   10.96.0.10   <none>        53/UDP,53/TCP   10m
```

◾️Inspecting etcd data with etcdctl

As a first step, let's use the etcdctl command to look at the data stored in etcd.

https://github.com/coreos/etcd/tree/master/etcdctl

(1) Check the Docker environment inside minikube

- First, log in to the minikube VM

```
$ minikube ssh
_ _
_ _ ( ) ( )
___ ___ (_) ___ (_)| |/') _ _ | |_ __
/' _ ` _ `\| |/' _ `\| || , < ( ) ( )| '_`\ /'__`\
| ( ) ( ) || || ( ) || || |\`\ | (_) || |_) )( ___/
(_) (_) (_)(_)(_) (_)(_)(_) (_)`\___/'(_,__/'`\____)

$
```

- List the Docker containers running in the minikube VM

```
$ docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
262be08b3b01 4689081edb10 "/storage-provisioner" 3 minutes ago Up 3 minutes k8s_storage-provisioner_storage-provisioner_kube-system_5c5ca163-881c-11e8-a9f9-080027e54c38_3
ac07f4428c74 e94d2f21bc0c "/dashboard --insecu…" 3 minutes ago Up 3 minutes k8s_kubernetes-dashboard_kubernetes-dashboard-5498ccf677-78l9h_kube-system_5c4ed7e7-881c-11e8-a9f9-080027e54c38_3
c232dd3091c1 80cc5ea4b547 "/kube-dns --domain=…" 4 minutes ago Up 4 minutes k8s_kubedns_kube-dns-86f4d74b45-t6x44_kube-system_5bb1bf56-881c-11e8-a9f9-080027e54c38_2
7b7b84c03b0f bfc21aadc7d3 "/usr/local/bin/kube…" 4 minutes ago Up 4 minutes k8s_kube-proxy_kube-proxy-hzk9z_kube-system_3aa32216-881d-11e8-bb59-080027e54c38_0
a1a14d4cadbb k8s.gcr.io/pause-amd64:3.1 "/pause" 4 minutes ago Up 4 minutes k8s_POD_kube-proxy-hzk9z_kube-system_3aa32216-881d-11e8-bb59-080027e54c38_0
13af4461c37d 6f7f2dc7fab5 "/sidecar --v=2 --lo…" 5 minutes ago Up 5 minutes k8s_sidecar_kube-dns-86f4d74b45-t6x44_kube-system_5bb1bf56-881c-11e8-a9f9-080027e54c38_1
2470295afca9 c2ce1ffb51ed "/dnsmasq-nanny -v=2…" 5 minutes ago Up 5 minutes k8s_dnsmasq_kube-dns-86f4d74b45-t6x44_kube-system_5bb1bf56-881c-11e8-a9f9-080027e54c38_1
521ac16d4cc9 k8s.gcr.io/pause-amd64:3.1 "/pause" 5 minutes ago Up 5 minutes k8s_POD_kubernetes-dashboard-5498ccf677-78l9h_kube-system_5c4ed7e7-881c-11e8-a9f9-080027e54c38_1
2ae6545e4388 k8s.gcr.io/pause-amd64:3.1 "/pause" 5 minutes ago Up 5 minutes k8s_POD_kube-dns-86f4d74b45-t6x44_kube-system_5bb1bf56-881c-11e8-a9f9-080027e54c38_1
56ecfe2b0c47 k8s.gcr.io/pause-amd64:3.1 "/pause" 5 minutes ago Up 5 minutes k8s_POD_storage-provisioner_kube-system_5c5ca163-881c-11e8-a9f9-080027e54c38_1
7ea25f89862f 52920ad46f5b "etcd --client-cert-…" 5 minutes ago Up 5 minutes k8s_etcd_etcd-minikube_kube-system_d147900f60a89b9a088b717dd46e8468_0
a020edd0f5eb 704ba848e69a "kube-scheduler --ad…" 5 minutes ago Up 5 minutes k8s_kube-scheduler_kube-scheduler-minikube_kube-system_31cf0ccbee286239d451edb6fb511513_1
c37717e73ffc ad86dbed1555 "kube-controller-man…" 5 minutes ago Up 5 minutes k8s_kube-controller-manager_kube-controller-manager-minikube_kube-system_2b6ebab44014cdb1f032370ac66a3414_0
ecaf45ecbe7f 9c16409588eb "/opt/kube-addons.sh" 5 minutes ago Up 5 minutes k8s_kube-addon-manager_kube-addon-manager-minikube_kube-system_3afaf06535cc3b85be93c31632b765da_1
d2d6d907961c af20925d51a3 "kube-apiserver --ad…" 5 minutes ago Up 5 minutes k8s_kube-apiserver_kube-apiserver-minikube_kube-system_d580ff9e51219739e97a467bbc71404f_0
b30b1bfee43e k8s.gcr.io/pause-amd64:3.1 "/pause" 5 minutes ago Up 5 minutes k8s_POD_kube-addon-manager-minikube_kube-system_3afaf06535cc3b85be93c31632b765da_1
a95ac58713e6 k8s.gcr.io/pause-amd64:3.1 "/pause" 5 minutes ago Up 5 minutes k8s_POD_kube-scheduler-minikube_kube-system_31cf0ccbee286239d451edb6fb511513_1
918b4263d0eb k8s.gcr.io/pause-amd64:3.1 "/pause" 5 minutes ago Up 5 minutes k8s_POD_kube-apiserver-minikube_kube-system_d580ff9e51219739e97a467bbc71404f_0
dda3f5fd34f1 k8s.gcr.io/pause-amd64:3.1 "/pause" 5 minutes ago Up 5 minutes k8s_POD_kube-controller-manager-minikube_kube-system_2b6ebab44014cdb1f032370ac66a3414_0
34ffdcb640e3 k8s.gcr.io/pause-amd64:3.1 "/pause" 5 minutes ago Up 5 minutes k8s_POD_etcd-minikube_kube-system_d147900f60a89b9a088b717dd46e8468_0
```
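The container names in this listing follow a fixed pattern, which is why grepping for `k8s_etcd_etcd-minikube_kube-system` can locate the etcd container. With the Docker runtime, kubelet names each container `k8s_<container>_<pod>_<namespace>_<pod UID>_<attempt>`, and uses `POD` as the container name for each pod's pause (sandbox) container. A minimal Python sketch that splits such a name, assuming exactly that six-part layout; `KubeletContainerName` and `parse_container_name` are hypothetical names for illustration:

```python
from typing import NamedTuple


class KubeletContainerName(NamedTuple):
    container: str  # "POD" for the pod's pause (sandbox) container
    pod: str
    namespace: str
    pod_uid: str
    attempt: int    # restart attempt counter appended by kubelet


def parse_container_name(name: str) -> KubeletContainerName:
    """Split a kubelet-managed Docker container name into its parts.

    Assumes the layout k8s_<container>_<pod>_<namespace>_<uid>_<attempt>.
    Kubernetes object names cannot contain '_', so splitting on it is safe.
    """
    parts = name.split("_")
    if len(parts) != 6 or parts[0] != "k8s":
        raise ValueError(f"not a kubelet-managed container name: {name!r}")
    _, container, pod, namespace, pod_uid, attempt = parts
    return KubeletContainerName(container, pod, namespace, pod_uid, int(attempt))


if __name__ == "__main__":
    etcd = parse_container_name(
        "k8s_etcd_etcd-minikube_kube-system_d147900f60a89b9a088b717dd46e8468_0"
    )
    print(etcd.container, etcd.pod, etcd.namespace)  # etcd etcd-minikube kube-system
```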

- List the Docker images

```
$ docker images
REPOSITORY TAG IMAGE ID CREATED SIZE
k8s.gcr.io/kube-proxy-amd64 v1.10.0 bfc21aadc7d3 3 months ago 97MB
k8s.gcr.io/kube-controller-manager-amd64 v1.10.0 ad86dbed1555 3 months ago 148MB
k8s.gcr.io/kube-scheduler-amd64 v1.10.0 704ba848e69a 3 months ago 50.4MB
k8s.gcr.io/kube-apiserver-amd64 v1.10.0 af20925d51a3 3 months ago 225MB
k8s.gcr.io/etcd-amd64 3.1.12 52920ad46f5b 4 months ago 193MB
k8s.gcr.io/kube-addon-manager v8.6 9c16409588eb 4 months ago 78.4MB
k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64 1.14.8 c2ce1ffb51ed 6 months ago 41MB
k8s.gcr.io/k8s-dns-sidecar-amd64 1.14.8 6f7f2dc7fab5 6 months ago 42.2MB
k8s.gcr.io/k8s-dns-kube-dns-amd64 1.14.8 80cc5ea4b547 6 months ago 50.5MB
k8s.gcr.io/pause-amd64 3.1 da86e6ba6ca1 6 months ago 742kB
k8s.gcr.io/kubernetes-dashboard-amd64 v1.8.1 e94d2f21bc0c 7 months ago 121MB
gcr.io/k8s-minikube/storage-provisioner v1.8.1 4689081edb10 8 months ago 80.8MB
```

(2) Prepare access to etcd

- Copy the SSL files needed to open a connection to etcd out of the etcd container

```
$ docker cp $(docker ps | grep "k8s_etcd_etcd-minikube_kube-system" | awk '{print$1}'):/var/lib/localkube/certs/etcd/ca.crt $HOME/cert.pem
$ docker cp $(docker ps | grep "k8s_etcd_etcd-minikube_kube-system" | awk '{print$1}'):/var/lib/localkube/certs/etcd/ca.key $HOME/key.pem
$ docker cp $(docker ps | grep "k8s_etcd_etcd-minikube_kube-system" | awk '{print$1}'):/var/lib/localkube/certs/etcd/server.crt $HOME/server-cert.pem

$ ls -l
total 12
-rw-r--r-- 1 docker docker 1025 Jul 15 10:45 cert.pem
-rw------- 1 docker docker 1679 Jul 15 10:45 key.pem
-rw-r--r-- 1 docker docker 1078 Jul 15 10:45 server-cert.pem
```

- Create a container named "etcd_client" to act as the etcd client

```
$ docker run -itd --net host --name etcd_client \
  -e ETCDCTL_INSECURE_SKIP_TLS_VERIFY=true \
  -e ETCDCTL_API=3 \
  -e ETCDCTL_CERT=/etc/etcd/ssl/cert.pem \
  -e ETCDCTL_KEY=/etc/etcd/ssl/key.pem \
  -v $HOME:/etc/etcd/ssl \
  k8s.gcr.io/etcd-amd64:3.1.12 /bin/sh
```
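Setting TLS options through `-e` works because etcdctl v3 also reads each command-line flag from an environment variable named `ETCDCTL_` plus the flag name in upper case, with `-` replaced by `_`. `ETCDCTL_API` is the exception: it selects the API version rather than standing in for a flag. The Python sketch below reproduces that naming rule for the flags used above; `etcdctl_env_name` and `docker_env_args` are hypothetical helpers, shown only to make the mapping explicit.

```python
def etcdctl_env_name(flag: str) -> str:
    """Return the environment variable etcdctl v3 consults for a long flag."""
    # "--insecure-skip-tls-verify" -> "ETCDCTL_INSECURE_SKIP_TLS_VERIFY"
    return "ETCDCTL_" + flag.lstrip("-").upper().replace("-", "_")


def docker_env_args(flags: dict[str, str]) -> list[str]:
    """Turn {flag: value} into `docker run -e NAME=value` arguments."""
    args: list[str] = []
    for flag, value in flags.items():
        args += ["-e", f"{etcdctl_env_name(flag)}={value}"]
    return args


if __name__ == "__main__":
    flags = {
        "--insecure-skip-tls-verify": "true",
        "--cert": "/etc/etcd/ssl/cert.pem",
        "--key": "/etc/etcd/ssl/key.pem",
    }
    # ETCDCTL_API=3 is added separately: it selects the API, it is not a flag.
    print(" ".join(["-e", "ETCDCTL_API=3"] + docker_env_args(flags)))
```

Passing the same values as `--cert`/`--key` flags on each `etcdctl` invocation should be equivalent; the environment variables just save repeating them on every `docker exec`.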

(3) Access etcd

- List the members of the etcd cluster

```
$ docker exec -it etcd_client etcdctl -w table member list
+------------------+---------+---------+-----------------------+------------------------+
|        ID        |  STATUS |  NAME   |      PEER ADDRS       |      CLIENT ADDRS      |
+------------------+---------+---------+-----------------------+------------------------+
| 8e9e05c52164694d | started | default | http://localhost:2380 | https://127.0.0.1:2379 |
+------------------+---------+---------+-----------------------+------------------------+
```

- Check the endpoint status

```
$ docker exec -it etcd_client etcdctl -w table endpoint status
+----------------+------------------+---------+---------+-----------+-----------+------------+
|    ENDPOINT    |        ID        | VERSION | DB SIZE | IS LEADER | RAFT TERM | RAFT INDEX |
+----------------+------------------+---------+---------+-----------+-----------+------------+
| 127.0.0.1:2379 | 8e9e05c52164694d | 3.1.12  | 1.7 MB  | true      | 3         | 1517       |
+----------------+------------------+---------+---------+-----------+-----------+------------+
```

- List the keys stored in the etcd data store

```
$ docker exec -it etcd_client etcdctl get "" \
> --prefix --keys-only | sed '/^\s*$/d'
/registry/apiregistration.k8s.io/apiservices/v1.
/registry/apiregistration.k8s.io/apiservices/v1.apps
/registry/apiregistration.k8s.io/apiservices/v1.authentication.k8s.io
/registry/apiregistration.k8s.io/apiservices/v1.authorization.k8s.io
/registry/apiregistration.k8s.io/apiservices/v1.autoscaling
/registry/apiregistration.k8s.io/apiservices/v1.batch
/registry/apiregistration.k8s.io/apiservices/v1.networking.k8s.io
/registry/apiregistration.k8s.io/apiservices/v1.rbac.authorization.k8s.io
/registry/apiregistration.k8s.io/apiservices/v1.storage.k8s.io
... (snip)
/registry/deployments/kube-system/kube-dns
/registry/deployments/kube-system/kubernetes-dashboard
... (snip)
/registry/services/endpoints/default/kubernetes
/registry/services/endpoints/kube-system/kube-controller-manager
/registry/services/endpoints/kube-system/kube-dns
/registry/services/endpoints/kube-system/kube-scheduler
/registry/services/endpoints/kube-system/kubernetes-dashboard
/registry/services/specs/default/kubernetes
/registry/services/specs/kube-system/kube-dns
/registry/services/specs/kube-system/kubernetes-dashboard
/registry/storageclasses/standard
compact_rev_key
```
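Almost every key lives under `/registry/` (kube-apiserver's default `--etcd-prefix`), followed by the resource type; `compact_rev_key` is bookkeeping that kube-apiserver keeps for its periodic compaction. To get an overview of a long listing like this one, a minimal Python sketch can count keys by the first path segment after `/registry/`. It assumes the key list has been saved as plain lines; `count_keys_by_resource` is a hypothetical name.

```python
from collections import Counter
from typing import Iterable

REGISTRY_PREFIX = "/registry/"


def count_keys_by_resource(keys: Iterable[str]) -> Counter:
    """Count etcd keys per top-level resource segment under /registry/."""
    counts: Counter = Counter()
    for key in keys:
        key = key.strip()
        if not key.startswith(REGISTRY_PREFIX):
            continue  # skips compact_rev_key and blank lines from --keys-only
        resource = key[len(REGISTRY_PREFIX):].split("/", 1)[0]
        counts[resource] += 1
    return counts


if __name__ == "__main__":
    sample = """
/registry/deployments/kube-system/kube-dns
/registry/deployments/kube-system/kubernetes-dashboard
/registry/services/endpoints/default/kubernetes
/registry/services/specs/default/kubernetes
/registry/storageclasses/standard
compact_rev_key
"""
    for resource, n in sorted(count_keys_by_resource(sample.splitlines()).items()):
        print(resource, n)
```

Note that this groups `services/endpoints/...` and `services/specs/...` together under `services`; splitting one level deeper would separate them, at the cost of mis-grouping single-level resources such as `storageclasses`.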

- Look up the entry registered for kube-controller-manager

```
$ docker exec -it etcd_client etcdctl -w fields get \
> /registry/services/endpoints/kube-system/kube-controller-manager
"ClusterID" : 14841639068965178418
"MemberID" : 10276657743932975437
"Revision" : 1386
"RaftTerm" : 3
"Key" : "/registry/services/endpoints/kube-system/kube-controller-manager"
"CreateRevision" : 190
"ModRevision" : 1386
"Version" : 363
"Value" : "k8s\x00\n\x0f\n\x02v1\x12\tEndpoints\x12\xcd\x02\n\xca\x02\n\x17kube-controller-manager\x12\x00\x1a\vkube-system\"\x00*$57a4ef53-881c-11e8-a9f9-080027e54c382\x008\x00B\b\b\x8a̬\xda\x05\x10\x00b\xe7\x01\n(control-plane.alpha.kubernetes.io/leader\x12\xba\x01{\"holderIdentity\":\"minikube_13eebf66-881d-11e8-9401-080027e54c38\",\"leaseDurationSeconds\":15,\"acquireTime\":\"2018-07-15T10:52:18Z\",\"renewTime\":\"2018-07-15T11:01:07Z\",\"leaderTransitions\":1}z\x00\x1a\x00\"\x00"
"Lease" : 0
"More" : false
"Count" : 1
```
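The `-w fields` format prints the response header and key metadata in decimal, so it is easy to miss that `MemberID` 10276657743932975437 is the same member that `member list` showed as `8e9e05c52164694d`. The other numbers can be read using etcd's definitions: `Version` is 1 when a key is created and grows by one on every later write to it, and a `ModRevision` equal to the header's `Revision` means the most recent write in the whole store went to this key. A small Python sketch of that interpretation (`describe_kv` is a hypothetical helper; the input dict is typed in by hand from the output above):

```python
def describe_kv(fields: dict) -> dict:
    """Interpret a few numbers from `etcdctl -w fields get` output."""
    return {
        # `member list` shows IDs in hex; `-w fields` shows them in decimal
        "member_id_hex": format(fields["MemberID"], "x"),
        # the store's latest revision was produced by a write to this key
        "is_latest_write": fields["ModRevision"] == fields["Revision"],
        # Version starts at 1 on creation and +1 per later modification
        "updates_since_create": fields["Version"] - 1,
    }


if __name__ == "__main__":
    kcm = {"MemberID": 10276657743932975437, "Revision": 1386,
           "ModRevision": 1386, "Version": 363}
    print(describe_kv(kcm))
    # {'member_id_hex': '8e9e05c52164694d', 'is_latest_write': True, 'updates_since_create': 362}
```

The large `Version` fits this particular object: the kube-controller-manager Endpoints entry is rewritten every time its leader lease is renewed.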

- Look up the entry registered for kube-dns

```
$ docker exec -it etcd_client etcdctl -w fields get \
> /registry/deployments/kube-system/kube-dns
"ClusterID" : 14841639068965178418
"MemberID" : 10276657743932975437
"Revision" : 1429
"RaftTerm" : 3
"Key" : "/registry/deployments/kube-system/kube-dns"
"CreateRevision" : 169
"ModRevision" : 858
"Version" : 6
"Value" : "k8s\x00\n\x15\n\aapps/v1\x12\nDeployment\x12\x86\x11\n\x8e\x01\n\bkube-dns\x12\x00\x1a\vkube-system\"\x00*$564fbaa3-881c-11e8-a9f9-080027e54c382\x008\x01B\b\b\x88̬\xda\x05\x10\x00Z\x13\n\ak8s-app\x12\bkube-dnsb&\n!deployment.kubernetes.io/revision\x12\x011z\x00\x12\xfc\r\b\x01\x12\x15\n\x13\n\ak8s-app\x12\bkube-dns\x1a\xb1\r\n'\n\x00\x12\x00\x1a\x00\"\x00*\x002\x008\x00B\x00Z\x13\n\ak8s-app\x12\bkube-dnsz\x00\x12\x85\r\n'\n\x0fkube-dns-config\x12\x14\x9a\x01\x11\n\n\n\bkube-dns\x18\xa4\x03 \x01\x12\xe4\x03\n\akubedns\x12(k8s.gcr.io/k8s-dns-kube-dns-amd64:1.14.8\"\x17--domain=cluster.local.\"\x10--dns-port=10053\"\x1d--config-dir=/kube-dns-config\"\x05--v=2*\x002\x17\n\tdns-local\x10\x00\x18\xc5N\"\x03UDP*\x002\x1b\n\rdns-tcp-local\x10\x00\x18\xc5N\"\x03TCP*\x002\x15\n\ametrics\x10\x00\x18\xc7N\"\x03TCP*\x00:\x18\n\x0fPROMETHEUS_PORT\x12\x0510055B4\n\x11\n\x06memory\x12\a\n\x05170Mi\x12\r\n\x03cpu\x12\x06\n\x04100m\x12\x10\n\x06memory\x12\x06\n\x0470MiJ'\n\x0fkube-dns-config\x10\x00\x1a\x10/kube-dns-config\"\x00R5\n)\x12'\n\x14/healthcheck/kubedns\x12\a\b\x00\x10\xc6N\x1a\x00\x1a\x00\"\x04HTTP\x10<\x18\x05 \n(\x010\x05Z+\n\x1f\x12\x1d\n\n/readiness\x12\a\b\x00\x10\x91?\x1a\x00\x1a\x00\"\x04HTTP\x10\x03\x18\x05 \n(\x010\x03j\x14/dev/termination-logr\fIfNotPresent\x80\x01\x00\x88\x01\x00\x90\x01\x00\xa2\x01\x04File\x12\xa5\x04\n\adnsmasq\x12-k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64:1.14.8\"\x04-v=2\"\f-logtostderr\"%-configDir=/etc/k8s/dns/dnsmasq-nanny\"\x14-restartDnsmasq=true\"\x02--\"\x02-k\"\x11--cache-size=1000\"\r--no-negcache\"\x10--log-facility=-\"'--server=/cluster.local/127.0.0.1#10053\"&--server=/in-addr.arpa/127.0.0.1#10053\"\"--server=/ip6.arpa/127.0.0.1#10053*\x002\x10\n\x03dns\x10\x00\x185\"\x03UDP*\x002\x14\n\adns-tcp\x10\x00\x185\"\x03TCP*\x00B!\x12\r\n\x03cpu\x12\x06\n\x04150m\x12\x10\n\x06memory\x12\x06\n\x0420MiJ1\n\x0fkube-dns-config\x10\x00\x1a\x1a/etc/k8s/dns/dnsmasq-nanny\"\x00R5\n)\x12'\n\x14/healthcheck/dnsmasq\x12\a\b\x00\x10\xc6N\x1a\x00\x1a\x00\"\x04HTTP\x10<\x18\x05 \n(\x010\x05j\x14/dev/termination-logr\fIfNotPresent\x80\x01\x00\x88\x01\x00\x90\x01\x00\xa2\x01\x04File\x12\xf7\x02\n\asidecar\x12'k8s.gcr.io/k8s-dns-sidecar-amd64:1.14.8\"\x05--v=2\"\r--logtostderr\"J--probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local,5,SRV\"G--probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local,5,SRV*\x002\x15\n\ametrics\x10\x00\x18\xc6N\"\x03TCP*\x00B \x12\f\n\x03cpu\x12\x05\n\x0310m\x12\x10\n\x06memory\x12\x06\n\x0420MiR)\n\x1d\x12\x1b\n\b/metrics\x12\a\b\x00\x10\xc6N\x1a\x00\x1a\x00\"\x04HTTP\x10<\x18\x05 \n(\x010\x05j\x14/dev/termination-logr\fIfNotPresent\x80\x01\x00\x88\x01\x00\x90\x01\x00\xa2\x01\x04File\x1a\x06Always \x1e2\aDefaultB\bkube-dnsJ\bkube-dnsR\x00X\x00`\x00h\x00r\x00\x82\x01\x00\x8a\x01\x00\x92\x01,\n*\n(\n&\n$\n\x17beta.kubernetes.io/arch\x12\x02In\x1a\x05amd64\x9a\x01\x11default-scheduler\xb2\x01 \n\x12CriticalAddonsOnly\x12\x06Exists\x1a\x00\"\x00\xb2\x010\n\x1enode-role.kubernetes.io/master\x12\x00\x1a\x00\"\nNoSchedule\xc2\x01\x00\"$\n\rRollingUpdate\x12\x13\n\x06\b\x00\x10\x00\x1a\x00\x12\t\b\x01\x10\x00\x1a\x0310%(\x000\n8\x00H\xd8\x04\x1a\xf3\x01\b\x01\x10\x01\x18\x01 \x01(\x002~\n\vProgressing\x12\x04True\"\x16NewReplicaSetAvailable*=ReplicaSet \"kube-dns-86f4d74b45\" has successfully progressed.2\b\b\xce̬\xda\x05\x10\x00:\b\b\x91̬\xda\x05\x10\x002e\n\tAvailable\x12\x04True\"\x18MinimumReplicasAvailable*$Deployment has minimum availability.2\b\b\xa1Ϭ\xda\x05\x10\x00:\b\b\xa1Ϭ\xda\x05\x10\x008\x01\x1a\x00\"\x00"
"Lease" : 0
"More" : false
"Count" : 1
```

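Recent versions of Kubernetes use etcd v3, but etcdctl cannot parse the binary-encoded contents of the Value field and can only print them as escaped bytes. For reading the information you actually need out of etcd, etcdctl does not seem very useful.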
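The reason the Value is unreadable is that kube-apiserver stores objects in etcd v3 using Kubernetes' protobuf encoding by default. Each value starts with the 4-byte magic `k8s\x00`, followed by a `runtime.Unknown` message: field 1 carries the type metadata (apiVersion, kind), field 2 carries the object itself (again protobuf), and the trailing `\x1a\x00\"\x00` seen above is the two empty optional fields contentEncoding and contentType. The Python sketch below decodes only that outer envelope with a hand-written protobuf field reader. It is an illustration under those assumptions, not how kube-etcd-helper is implemented; decoding the inner object would need the generated Kubernetes protobuf types.

```python
K8S_MAGIC = b"k8s\x00"


def _read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode a protobuf base-128 varint at pos; return (value, new_pos)."""
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def _fields(buf: bytes):
    """Yield (field_number, wire_type, value) for each top-level field."""
    pos = 0
    while pos < len(buf):
        tag, pos = _read_varint(buf, pos)
        number, wire = tag >> 3, tag & 0x07
        if wire == 0:    # varint
            value, pos = _read_varint(buf, pos)
        elif wire == 2:  # length-delimited: strings, bytes, nested messages
            length, pos = _read_varint(buf, pos)
            value, pos = buf[pos:pos + length], pos + length
        elif wire == 1:  # fixed 64-bit
            value, pos = buf[pos:pos + 8], pos + 8
        elif wire == 5:  # fixed 32-bit
            value, pos = buf[pos:pos + 4], pos + 4
        else:
            raise ValueError(f"unsupported wire type {wire}")
        yield number, wire, value


def decode_envelope(value: bytes) -> tuple[str, str, bytes]:
    """Return (apiVersion, kind, raw object bytes) from a k8s protobuf value."""
    if not value.startswith(K8S_MAGIC):
        raise ValueError("value does not start with the k8s\\x00 magic prefix")
    api_version, kind, raw = "", "", b""
    for number, wire, data in _fields(value[len(K8S_MAGIC):]):
        if number == 1 and wire == 2:    # typeMeta
            for sub_number, sub_wire, sub_data in _fields(data):
                if sub_number == 1 and sub_wire == 2:
                    api_version = sub_data.decode()
                elif sub_number == 2 and sub_wire == 2:
                    kind = sub_data.decode()
        elif number == 2 and wire == 2:  # raw: the object, still protobuf
            raw = data
    return api_version, kind, raw


if __name__ == "__main__":
    # Start of the kube-dns value above, with the object bytes replaced by b"..."
    sample = b"k8s\x00\n\x15\n\x07apps/v1\x12\nDeployment\x12\x03..."
    print(decode_envelope(sample))  # ('apps/v1', 'Deployment', b'...')
```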

◾️Inspecting etcd data with kube-etcd-helper

Next, as an alternative, let's use "kube-etcd-helper" to look at the data stored in etcd.

https://github.com/yamamoto-febc/kube-etcd-helper

(1) Set up the kube-etcd-helper command inside minikube

- First, log in to the minikube VM

```
$ minikube ssh
_ _
_ _ ( ) ( )
___ ___ (_) ___ (_)| |/') _ _ | |_ __
/' _ ` _ `\| |/' _ `\| || , < ( ) ( )| '_`\ /'__`\
| ( ) ( ) || || ( ) || || |\`\ | (_) || |_) )( ___/
(_) (_) (_)(_)(_) (_)(_)(_) (_)`\___/'(_,__/'`\____)

$
```

- Download kube-etcd-helper

```
$ curl -L -o kube-etcd-helper_linux_amd64 \
> https://github.com/yamamoto-febc/kube-etcd-helper/releases/download/0.0.4/kube-etcd-helper_linux_amd64
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100   619    0   619    0     0    785      0 --:--:-- --:--:-- --:--:--   784
100 22.4M  100 22.4M    0     0   812k      0  0:00:28  0:00:28 --:--:--  973k

$ ls -l
total 22984
-rw-r--r-- 1 docker docker     1025 Jul 15 10:45 cert.pem
-rw------- 1 docker docker     1679 Jul 15 10:45 key.pem
-rwxr-xr-x 1 docker docker 23522912 Jul 15 11:08 kube-etcd-helper_linux_amd64
-rw-r--r-- 1 docker docker     1078 Jul 15 10:45 server-cert.pem
```

- Give kube-etcd-helper execute permission

```
$ chmod 755 kube-etcd-helper_linux_amd64
```

- Set the environment variables used to access etcd

```
$ export ETCD_KEY=key.pem
$ export ETCD_CERT=cert.pem
$ export ETCD_CA_CERT=server-cert.pem
$ export ETCD_ENDPOINT=https://127.0.0.1:2379
```

(2) Access etcd

- List the keys stored in the etcd data store

```
$ ./kube-etcd-helper_linux_amd64 ls
/registry/apiregistration.k8s.io/apiservices/v1.
/registry/apiregistration.k8s.io/apiservices/v1.apps
/registry/apiregistration.k8s.io/apiservices/v1.authentication.k8s.io
/registry/apiregistration.k8s.io/apiservices/v1.authorization.k8s.io
/registry/apiregistration.k8s.io/apiservices/v1.autoscaling
/registry/apiregistration.k8s.io/apiservices/v1.batch
/registry/apiregistration.k8s.io/apiservices/v1.networking.k8s.io
/registry/apiregistration.k8s.io/apiservices/v1.rbac.authorization.k8s.io
/registry/apiregistration.k8s.io/apiservices/v1.storage.k8s.io
... (snip)
/registry/deployments/kube-system/kube-dns
/registry/deployments/kube-system/kubernetes-dashboard
... (snip)
/registry/services/endpoints/default/kubernetes
/registry/services/endpoints/kube-system/kube-controller-manager
/registry/services/endpoints/kube-system/kube-dns
/registry/services/endpoints/kube-system/kube-scheduler
/registry/services/endpoints/kube-system/kubernetes-dashboard
/registry/services/specs/default/kubernetes
/registry/services/specs/kube-system/kube-dns
/registry/services/specs/kube-system/kubernetes-dashboard
/registry/storageclasses/standard
compact_rev_key
```

- Look up the entry registered for kube-controller-manager

```
$ ./kube-etcd-helper_linux_amd64 get --pretty \
> /registry/services/endpoints/kube-system/kube-controller-manager
/v1, Kind=Endpoints
{
  "metadata": {
    "annotations": {
      "control-plane.alpha.kubernetes.io/leader": "{\"holderIdentity\":\"minikube_13eebf66-881d-11e8-9401-080027e54c38\",\"leaseDurationSeconds\":15,\"acquireTime\":\"2018-07-15T10:52:18Z\",\"renewTime\":\"2018-07-15T11:13:33Z\",\"leaderTransitions\":1}"
    },
    "creationTimestamp": "2018-07-15T10:46:34Z",
    "name": "kube-controller-manager",
    "namespace": "kube-system",
    "uid": "57a4ef53-881c-11e8-a9f9-080027e54c38"
  },
  "subsets": null
}
```

Nice, it shows up perfectly. Excellent!!
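Now the content is readable: this Endpoints object has no `subsets` because, in this Kubernetes version, kube-controller-manager uses it as its leader-election lock, and the lock state is the JSON record in the `control-plane.alpha.kubernetes.io/leader` annotation. A rough Python sketch, assuming that record format, estimates when the current leader's lease would lapse if it stopped renewing (`renewTime` + `leaseDurationSeconds`). This is a simplification: client-go's leader election actually times the lease from when a candidate last observed the record change, which avoids relying on clocks agreeing across nodes. `lease_deadline` is a hypothetical name.

```python
import json
from datetime import datetime, timedelta, timezone


def lease_deadline(record_json: str) -> datetime:
    """Return renewTime + leaseDurationSeconds as an aware UTC datetime."""
    record = json.loads(record_json)
    renew = datetime.strptime(record["renewTime"], "%Y-%m-%dT%H:%M:%SZ")
    renew = renew.replace(tzinfo=timezone.utc)
    return renew + timedelta(seconds=record["leaseDurationSeconds"])


if __name__ == "__main__":
    # The annotation value above, with the JSON string escaping removed
    annotation = (
        '{"holderIdentity":"minikube_13eebf66-881d-11e8-9401-080027e54c38",'
        '"leaseDurationSeconds":15,"acquireTime":"2018-07-15T10:52:18Z",'
        '"renewTime":"2018-07-15T11:13:33Z","leaderTransitions":1}'
    )
    print(lease_deadline(annotation).isoformat())  # 2018-07-15T11:13:48+00:00
```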

- Look up the entry registered for kube-dns

```
$ ./kube-etcd-helper_linux_amd64 get --pretty \
> /registry/deployments/kube-system/kube-dns
apps/v1, Kind=Deployment
{
  "metadata": {
    "annotations": {
      "deployment.kubernetes.io/revision": "1"
    },
    "creationTimestamp": "2018-07-15T10:46:32Z",
    "generation": 1,
    "labels": {
      "k8s-app": "kube-dns"
    },
    "name": "kube-dns",
    "namespace": "kube-system",
    "uid": "564fbaa3-881c-11e8-a9f9-080027e54c38"
  },
  "spec": {
    "progressDeadlineSeconds": 600,
    "replicas": 1,
    "revisionHistoryLimit": 10,
    "selector": {
      "matchLabels": {
        "k8s-app": "kube-dns"
      }
    },
    "strategy": {
      "rollingUpdate": {
        "maxSurge": "10%",
        "maxUnavailable": 0
      },
      "type": "RollingUpdate"
    },
    "template": {
      "metadata": {
        "creationTimestamp": null,
        "labels": {
          "k8s-app": "kube-dns"
        }
      },
      "spec": {
        "affinity": {
          "nodeAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": {
              "nodeSelectorTerms": [
                {
                  "matchExpressions": [
                    {
                      "key": "beta.kubernetes.io/arch",
                      "operator": "In",
                      "values": [
                        "amd64"
                      ]
                    }
                  ]
                }
              ]
            }
          }
        },
        "containers": [
          {
            "args": [
              "--domain=cluster.local.",
              "--dns-port=10053",
              "--config-dir=/kube-dns-config",
              "--v=2"
            ],
            "env": [
              {
                "name": "PROMETHEUS_PORT",
                "value": "10055"
              }
            ],
            "image": "k8s.gcr.io/k8s-dns-kube-dns-amd64:1.14.8",
            "imagePullPolicy": "IfNotPresent",
            "livenessProbe": {
              "failureThreshold": 5,
              "httpGet": {
                "path": "/healthcheck/kubedns",
                "port": 10054,
                "scheme": "HTTP"
              },
              "initialDelaySeconds": 60,
              "periodSeconds": 10,
              "successThreshold": 1,
              "timeoutSeconds": 5
            },
            "name": "kubedns",
            "ports": [
              {
                "containerPort": 10053,
                "name": "dns-local",
                "protocol": "UDP"
              },
              {
                "containerPort": 10053,
                "name": "dns-tcp-local",
                "protocol": "TCP"
              },
              {
                "containerPort": 10055,
                "name": "metrics",
                "protocol": "TCP"
              }
            ],
            "readinessProbe": {
              "failureThreshold": 3,
              "httpGet": {
                "path": "/readiness",
                "port": 8081,
                "scheme": "HTTP"
              },
              "initialDelaySeconds": 3,
              "periodSeconds": 10,
              "successThreshold": 1,
              "timeoutSeconds": 5
            },
            "resources": {
              "limits": {
                "memory": "170Mi"
              },
              "requests": {
                "cpu": "100m",
                "memory": "70Mi"
              }
            },
            "terminationMessagePath": "/dev/termination-log",
            "terminationMessagePolicy": "File",
            "volumeMounts": [
              {
                "mountPath": "/kube-dns-config",
                "name": "kube-dns-config"
              }
            ]
          },
          {
            "args": [
              "-v=2",
              "-logtostderr",
              "-configDir=/etc/k8s/dns/dnsmasq-nanny",
              "-restartDnsmasq=true",
              "--",
              "-k",
              "--cache-size=1000",
              "--no-negcache",
              "--log-facility=-",
              "--server=/cluster.local/127.0.0.1#10053",
              "--server=/in-addr.arpa/127.0.0.1#10053",
              "--server=/ip6.arpa/127.0.0.1#10053"
            ],
            "image": "k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64:1.14.8",
            "imagePullPolicy": "IfNotPresent",
            "livenessProbe": {
              "failureThreshold": 5,
              "httpGet": {
                "path": "/healthcheck/dnsmasq",
                "port": 10054,
                "scheme": "HTTP"
              },
              "initialDelaySeconds": 60,
              "periodSeconds": 10,
              "successThreshold": 1,
              "timeoutSeconds": 5
            },
            "name": "dnsmasq",
            "ports": [
              {
                "containerPort": 53,
                "name": "dns",
                "protocol": "UDP"
              },
              {
                "containerPort": 53,
                "name": "dns-tcp",
                "protocol": "TCP"
              }
            ],
            "resources": {
              "requests": {
                "cpu": "150m",
                "memory": "20Mi"
              }
            },
            "terminationMessagePath": "/dev/termination-log",
            "terminationMessagePolicy": "File",
            "volumeMounts": [
              {
                "mountPath": "/etc/k8s/dns/dnsmasq-nanny",
                "name": "kube-dns-config"
              }
            ]
          },
          {
            "args": [
              "--v=2",
              "--logtostderr",
              "--probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local,5,SRV",
              "--probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local,5,SRV"
            ],
            "image": "k8s.gcr.io/k8s-dns-sidecar-amd64:1.14.8",
            "imagePullPolicy": "IfNotPresent",
            "livenessProbe": {
              "failureThreshold": 5,
              "httpGet": {
                "path": "/metrics",
                "port": 10054,
                "scheme": "HTTP"
              },
              "initialDelaySeconds": 60,
              "periodSeconds": 10,
              "successThreshold": 1,
              "timeoutSeconds": 5
            },
            "name": "sidecar",
            "ports": [
              {
                "containerPort": 10054,
                "name": "metrics",
                "protocol": "TCP"
              }
            ],
            "resources": {
              "requests": {
                "cpu": "10m",
                "memory": "20Mi"
              }
            },
            "terminationMessagePath": "/dev/termination-log",
            "terminationMessagePolicy": "File"
          }
        ],
        "dnsPolicy": "Default",
        "restartPolicy": "Always",
        "schedulerName": "default-scheduler",
        "securityContext": {},
        "serviceAccount": "kube-dns",
        "serviceAccountName": "kube-dns",
        "terminationGracePeriodSeconds": 30,
        "tolerations": [
          {
            "key": "CriticalAddonsOnly",
            "operator": "Exists"
          },
          {
            "effect": "NoSchedule",
            "key": "node-role.kubernetes.io/master"
          }
        ],
        "volumes": [
          {
            "configMap": {
              "defaultMode": 420,
              "name": "kube-dns",
              "optional": true
            },
            "name": "kube-dns-config"
          }
        ]
      }
    }
  },
  "status": {
    "availableReplicas": 1,
    "conditions": [
      {
        "lastTransitionTime": "2018-07-15T10:46:41Z",
        "lastUpdateTime": "2018-07-15T10:47:42Z",
        "message": "ReplicaSet \"kube-dns-86f4d74b45\" has successfully progressed.",
        "reason": "NewReplicaSetAvailable",
        "status": "True",
        "type": "Progressing"
      },
      {
        "lastTransitionTime": "2018-07-15T10:53:21Z",
        "lastUpdateTime": "2018-07-15T10:53:21Z",
        "message": "Deployment has minimum availability.",
        "reason": "MinimumReplicasAvailable",
        "status": "True",
        "type": "Available"
      }
    ],
    "observedGeneration": 1,
    "readyReplicas": 1,
    "replicas": 1,
    "updatedReplicas": 1
  }
}
```

This one can be inspected without any problems as well!!
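With the Deployment decoded, its rollout settings can be read directly: `replicas: 1`, `maxSurge: "10%"`, `maxUnavailable: 0`. Kubernetes documents that a percentage `maxSurge` is rounded up and a percentage `maxUnavailable` is rounded down, so a rolling update of this Deployment may briefly run 2 kube-dns pods and never drops below 1 available pod. A minimal Python sketch of just that arithmetic (`resolve_int_or_percent` is a hypothetical helper, and it ignores other edge cases the Deployment controller handles):

```python
from typing import Union


def resolve_int_or_percent(value: Union[int, str], replicas: int, round_up: bool) -> int:
    """Resolve a Deployment int-or-percent field against the replica count."""
    if isinstance(value, int):
        return value
    if value.endswith("%"):
        scaled = int(value[:-1]) * replicas  # still multiplied by 100
        # integer ceil/floor division avoids float rounding surprises
        return -(-scaled // 100) if round_up else scaled // 100
    raise ValueError(f"expected an int or a percentage, got {value!r}")


if __name__ == "__main__":
    replicas = 1
    surge = resolve_int_or_percent("10%", replicas, round_up=True)     # maxSurge
    unavailable = resolve_int_or_percent(0, replicas, round_up=False)  # maxUnavailable
    print("max pods during rollout:", replicas + surge)     # 2
    print("min available pods:", replicas - unavailable)    # 1
```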

◾️Closing thoughts

Keeping an environment where you can inspect the data stored in a Kubernetes cluster's etcd at any time should be very useful for troubleshooting and similar work.

Unfortunately, it turned out that on recent Kubernetes clusters, etcdctl is of little use for reading the stored objects.

"kube-etcd-helper" makes up for that shortcoming and looks handy for troubleshooting and more.