These are my notes on the sites and other resources I referred to while reading "Deep Learning from Scratch" (ゼロから作るDeep Learning, by Koki Saitoh, published by O'Reilly Japan).

DeepConvNet

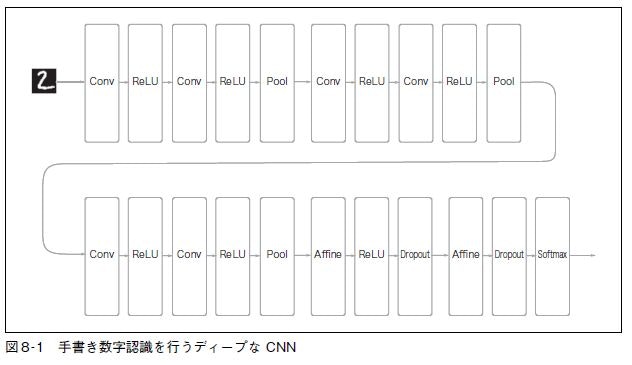

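Here I build the DeepConvNet described from p. 241 of the book, using Keras.

The network has far more layers than before, and I assume that is what makes it "deep", but I have no idea why that improves recognition accuracy.

Still, I can at least copy the sample script and get it running, so I gave it a try.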

from google.colab import drive

drive.mount('/content/drive')

import sys, os

sys.path.append('/content/drive/My Drive/Colab Notebooks/deep_learning/common')

sys.path.append('/content/drive/My Drive/Colab Notebooks/deep_learning/dataset')

# Import TensorFlow and tf.keras

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

from tensorflow.keras.layers import Dense, Activation, Flatten, Conv2D, MaxPooling2D, Dropout

# Import helper libraries

import numpy as np

import matplotlib.pyplot as plt

from mnist import load_mnist

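# Load the data (load_mnist comes from the book's dataset/mnist.py)

(x_train, t_train), (x_test, t_test) = load_mnist(flatten=False)

# flatten=False gives images shaped (N, 1, 28, 28), i.e. channels first.

# Keras Conv2D expects channels last by default, so reorder the axes to (N, 28, 28, 1).

X_train = x_train.transpose(0,2,3,1)

X_test = x_test.transpose(0,2,3,1)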

input_shape=(28,28,1)

filter_num = 16

filter_size = 3

filter_stride = 1

filter_num2 = 32

filter_num3 = 64

pool_size_h=2

pool_size_w=2

pool_stride=2

d_rate = 0.5

hidden_size=100

output_size=10

model = keras.Sequential(name="DeepConvNet")

model.add(keras.Input(shape=input_shape))

model.add(Conv2D(filter_num, filter_size, strides=filter_stride, padding="same", activation="relu", kernel_initializer='he_normal'))

model.add(Conv2D(filter_num, filter_size, strides=filter_stride, padding="same", activation="relu", kernel_initializer='he_normal'))

model.add(MaxPooling2D(pool_size=(pool_size_h, pool_size_w),strides=pool_stride))

model.add(Conv2D(filter_num2, filter_size, strides=filter_stride, padding="same", activation="relu", kernel_initializer='he_normal'))

model.add(Conv2D(filter_num2, filter_size, strides=filter_stride, padding="same", activation="relu", kernel_initializer='he_normal'))

model.add(MaxPooling2D(pool_size=(pool_size_h, pool_size_w),strides=pool_stride))

model.add(Conv2D(filter_num3, filter_size, strides=filter_stride, padding="same", activation="relu", kernel_initializer='he_normal'))

model.add(Conv2D(filter_num3, filter_size, strides=filter_stride, padding="same", activation="relu", kernel_initializer='he_normal'))

model.add(MaxPooling2D(pool_size=(pool_size_h, pool_size_w),strides=pool_stride))

model.add(keras.layers.Flatten())

model.add(Dense(hidden_size, activation="relu", kernel_initializer='he_normal'))

model.add(Dropout(d_rate))

model.add(Dense(output_size))

model.add(Dropout(d_rate))

model.add(Activation("softmax"))

# Compile the model

model.compile(loss="sparse_categorical_crossentropy",

              optimizer="adam",

              metrics=["accuracy"])

Specifying padding="same" zero-pads the input so that, with a stride of 1, each convolution's output has the same height and width as its input.
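The size arithmetic can be checked without TensorFlow. The sketch below is my own helper, not part of Keras (the names conv_output_size and trace_sizes are hypothetical). It assumes the usual rules that "same" padding gives ceil(n / stride) and "valid" padding gives floor((n - kernel) / stride) + 1, and it traces the 28x28 input through the three conv/conv/pool stages used in this model.

```python
import math

def conv_output_size(n, kernel, stride=1, padding="valid"):
    """Output length along one spatial axis of a conv or pooling layer."""
    if padding == "same":
        return math.ceil(n / stride)
    if padding == "valid":
        return (n - kernel) // stride + 1
    raise ValueError(f"unknown padding: {padding!r}")

def trace_sizes(size=28, stages=3):
    """Follow the height/width through conv, conv, pool repeated `stages` times."""
    sizes = [size]
    for _ in range(stages):
        size = conv_output_size(size, kernel=3, stride=1, padding="same")   # Conv2D
        size = conv_output_size(size, kernel=3, stride=1, padding="same")   # Conv2D
        size = conv_output_size(size, kernel=2, stride=2, padding="valid")  # MaxPooling2D
        sizes.append(size)
    return sizes

print(trace_sizes())                        # [28, 14, 7, 3]
print(conv_output_size(28, 3, 1, "valid"))  # 26
```

Only the pooling layers shrink the image here. With padding="valid" instead, every 3x3 convolution would trim two pixels off each side length (28 to 26, and so on).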

model.summary()

Model: "DeepConvNet"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv2d (Conv2D) | (None, 28, 28, 16) | 160 |
| conv2d_1 (Conv2D) | (None, 28, 28, 16) | 2320 |
| max_pooling2d (MaxPooling2D) | (None, 14, 14, 16) | 0 |
| conv2d_2 (Conv2D) | (None, 14, 14, 32) | 4640 |
| conv2d_3 (Conv2D) | (None, 14, 14, 32) | 9248 |
| max_pooling2d_1 (MaxPooling2 | (None, 7, 7, 32) | 0 |
| conv2d_4 (Conv2D) | (None, 7, 7, 64) | 18496 |
| conv2d_5 (Conv2D) | (None, 7, 7, 64) | 36928 |
| max_pooling2d_2 (MaxPooling2 | (None, 3, 3, 64) | 0 |
| flatten (Flatten) | (None, 576) | 0 |
| dense (Dense) | (None, 100) | 57700 |
| dropout (Dropout) | (None, 100) | 0 |
| dense_1 (Dense) | (None, 10) | 1010 |
| dropout_1 (Dropout) | (None, 10) | 0 |
| activation (Activation) | (None, 10) | 0 |

Total params: 130,502

Trainable params: 130,502

Non-trainable params: 0
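The parameter counts in the summary can be reproduced by hand. The following is a small sketch in plain Python (the helper names conv2d_params and dense_params are my own, not Keras functions). It assumes each layer has one bias per filter or unit, which is the Keras default.

```python
def conv2d_params(kernel, in_channels, filters):
    """Weights (kernel x kernel x in_channels per filter) plus one bias per filter."""
    return kernel * kernel * in_channels * filters + filters

def dense_params(in_features, units):
    """One weight per input/unit pair plus one bias per unit."""
    return in_features * units + units

# (input channels, filters) for the six 3x3 convolutions in the model
conv_channels = [(1, 16), (16, 16), (16, 32), (32, 32), (32, 64), (64, 64)]
conv_counts = [conv2d_params(3, cin, cout) for cin, cout in conv_channels]

# Flatten yields 3 * 3 * 64 = 576 features, feeding Dense(100) and then Dense(10)
dense_counts = [dense_params(3 * 3 * 64, 100), dense_params(100, 10)]

total = sum(conv_counts) + sum(dense_counts)
print(conv_counts)   # [160, 2320, 4640, 9248, 18496, 36928]
print(dense_counts)  # [57700, 1010]
print(total)         # 130502
```

Pooling, Flatten, Dropout and Activation have no weights, which is why they show 0 in the summary.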

model.fit(X_train, t_train, epochs=5, batch_size=128)

test_loss, test_acc = model.evaluate(X_test, t_test, verbose=2)

print('\nTest accuracy:', test_acc)

313/313 - 6s - loss: 0.0313 - accuracy: 0.9902

Test accuracy: 0.9901999831199646

Test accuracy comes out at about 99.0% after 5 epochs, so it appears to be working correctly.
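For reference, this is roughly what the reported loss and accuracy mean. The sketch below uses NumPy only, with made-up probabilities rather than output from the model above, and the function names are my own. It assumes integer class labels, which is what load_mnist returns by default and why the "sparse" variant of the loss is used.

```python
import numpy as np

def sparse_categorical_crossentropy(probs, labels, eps=1e-7):
    """Mean of -log(p) where p is the probability assigned to the true class."""
    probs = np.clip(probs, eps, 1.0)                # avoid log(0)
    picked = probs[np.arange(len(labels)), labels]  # probability of each true class
    return float(np.mean(-np.log(picked)))

def accuracy(probs, labels):
    """Fraction of samples whose most probable class equals the label."""
    return float(np.mean(np.argmax(probs, axis=1) == labels))

# Three hypothetical softmax outputs over three classes
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.3, 0.4, 0.3]])
labels = np.array([0, 1, 2])  # integer labels, not one-hot vectors

loss = sparse_categorical_crossentropy(probs, labels)
acc = accuracy(probs, labels)
print(f"loss={loss:.4f} accuracy={acc:.4f}")  # loss=0.5946 accuracy=0.6667
```

With one-hot labels the equivalent Keras loss would be categorical_crossentropy instead.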

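Saving the model (weights only)

Note that recent Keras releases (Keras 3) require the path passed to save_weights to end in .weights.h5. The extension-less path below relies on the older tf.keras behaviour.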

save_file = '/content/drive/My Drive/Colab Notebooks/deep_learning/dataset/DeepConvNet_weight'

model.save_weights(save_file)

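Restoring the saved model

Since save_weights stores only the weights, the network is first rebuilt with the same architecture and the saved weights are then loaded into it.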

# Import TensorFlow and tf.keras

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

from tensorflow.keras.layers import Dense, Activation, Flatten, Conv2D, MaxPooling2D, Dropout

# Import helper libraries

import numpy as np

import matplotlib.pyplot as plt

def create_model():

    # Build and compile the same DeepConvNet architecture that was trained above

    input_shape=(28,28,1)

    filter_num = 16

    filter_size = 3

    filter_stride = 1

    filter_num2 = 32

    filter_num3 = 64

    pool_size_h=2

    pool_size_w=2

    pool_stride=2

    hidden_size=100

    output_size=10

    model = keras.Sequential(name="DeepConvNet")

    model.add(keras.Input(shape=input_shape))

    model.add(Conv2D(filter_num, filter_size, strides=filter_stride, padding="same", activation="relu", kernel_initializer='he_normal'))

    model.add(Conv2D(filter_num, filter_size, strides=filter_stride, padding="same", activation="relu", kernel_initializer='he_normal'))

    model.add(MaxPooling2D(pool_size=(pool_size_h, pool_size_w),strides=pool_stride))

    model.add(Conv2D(filter_num2, filter_size, strides=filter_stride, padding="same", activation="relu", kernel_initializer='he_normal'))

    model.add(Conv2D(filter_num2, filter_size, strides=filter_stride, padding="same", activation="relu", kernel_initializer='he_normal'))

    model.add(MaxPooling2D(pool_size=(pool_size_h, pool_size_w),strides=pool_stride))

    model.add(Conv2D(filter_num3, filter_size, strides=filter_stride, padding="same", activation="relu", kernel_initializer='he_normal'))

    model.add(Conv2D(filter_num3, filter_size, strides=filter_stride, padding="same", activation="relu", kernel_initializer='he_normal'))

    model.add(MaxPooling2D(pool_size=(pool_size_h, pool_size_w),strides=pool_stride))

    model.add(keras.layers.Flatten())

    model.add(Dense(hidden_size, activation="relu", kernel_initializer='he_normal'))

    model.add(Dropout(0.5))

    model.add(Dense(output_size))

    model.add(Dropout(0.5))

    model.add(Activation("softmax"))

    # Compile the model

    model.compile(loss="sparse_categorical_crossentropy",

                  optimizer="adam",

                  metrics=["accuracy"])

    return model

model = create_model()

save_file = '/content/drive/My Drive/Colab Notebooks/deep_learning/dataset/DeepConvNet_weight'

model.load_weights(save_file)

model.summary()
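model.summary() only shows the architecture, so it does not confirm that the weights came back. One way to check is to compare predictions before and after a save/load round trip. The sketch below does this on a tiny stand-in network, not the DeepConvNet above. build_tiny_model and the file name tiny.weights.h5 are hypothetical, and the .weights.h5 suffix is chosen on the assumption that it is accepted by both older tf.keras and Keras 3.

```python
import os
import tempfile

import numpy as np
from tensorflow import keras

def build_tiny_model():
    # Hypothetical stand-in for create_model(), kept small so it runs quickly.
    return keras.Sequential([
        keras.Input(shape=(4,)),
        keras.layers.Dense(3, activation="softmax"),
    ])

x = np.random.rand(5, 4).astype("float32")

original = build_tiny_model()
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "tiny.weights.h5")
    original.save_weights(path)

    # A fresh model gets new random weights until the saved ones are loaded.
    restored = build_tiny_model()
    restored.load_weights(path)

same = np.allclose(original.predict(x, verbose=0),
                   restored.predict(x, verbose=0))
print(same)  # True when the weights were restored
```

The same comparison should work for the real model by calling predict on a few rows of X_test before saving and again after load_weights.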