Overview

Build an API that retrieves restaurant information.

Implementation

Introduction

https://qiita.com/itaya/items/0cd39400e274b926d5be

https://qiita.com/itaya/items/d6918a17d9f546a4ea60

https://qiita.com/itaya/items/52dcc8a38c16c0a48d1a

This post turns the code written in these articles into an API, so if you have not read them yet, please do.

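Creating the information-retrieval classes

Creating the restaurant-information classes only means wrapping the code from the articles listed above in classes.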

gnavi_crawler.rb

require 'uri'

require 'nokogiri'

require 'kconv'

require 'net/http'

class GnaviCrawler

def main(area, keyword)

area = URI.encode_www_form_component(area)

keyword = URI.encode_www_form_component(keyword)

uri = URI.parse("https://r.gnavi.co.jp/area/jp/rs/?fwp=#{area}&date=&fw=#{keyword}")

request = Net::HTTP::Get.new(uri)

request["Authority"] = "r.gnavi.co.jp"

request["Accept"] = "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9"

request["Accept-Language"] = "ja"

request["Cache-Control"] = "max-age=0"

request["Cookie"] = "_td_global=2c7201bb-65d9-447f-8871-5c8aef70e6f0; _ra=1664900409701|e581f62a-e16f-4c02-97dc-b7ab203924e6; GU=e9f8c8528b624ea8cb1a55aadf705bd0; gt=GT139faa476002ac1e4ae520fpfeoIzpEqTDWQ1Rwc2xzV; ds=622474de466075942cc80e90a606c14bc6c474c7b09327535e48d587017aa4c0; gUser=03139faa476003ac1e4ae520L_I2dpFCb1q8eMMN-5-LmU; gcom=%7B%22login_type%22%3A1%2C%22guser_type%22%3A0%7D; _gid=GA1.3.151665219.1669743686; __gads=ID=61224817bcdcc5e2:T=1669743685:S=ALNI_MYQItLoO9f62eC3t0aHssBPge-pXQ; __gpi=UID=00000b86e8eecc21:T=1669743685:RT=1669743685:S=ALNI_MZ324J7fmISVdaU-A1IuoqdhZBTIQ; _ts_yjad=1669743686386; _fbp=fb.2.1669743686439.1159228644; _gcl_au=1.1.1560200380.1669743686; __pp_uid=INDH6tuKhtkFKURk66AXkbVTWOoJTybu; __lt__cid=c5331b8a-2798-4df1-9a8c-afb6b1af0c3c; __lt__sid=ab800c27-56441560; _dctagfq=1318:1670338799.0.0|1319:1670338799.0.0|1320:1670338799.0.0|1321:1670338799.0.0|1536:1670338799.0.0|1841:1670338799.0.0|1856:1670338799.0.0|1871:1670338799.0.0|1886:1670338799.0.0|1901:1670338799.0.0|1916:1670338799.0.0|1931:1670338799.0.0; ___o2u_o2id=0793b44a-526d-4f6f-bbfb-c923eb5be40f; rtosrch=gg7t002%3Dhttps%253A%252F%252Fr.gnavi.co.jp%252Farea%252Fjp%252Frs%252F%253Ffwp%253D%2525E6%2525A8%2525AA%2525E6%2525B5%25259C%2526date%253D%2526fw%253D%2525E3%252581%25259F%2525E3%252581%252593%2525E7%252584%2525BC%2525E3%252581%25258D%26a567203%3Dhttps%253A%252F%252Fr.gnavi.co.jp%252Farea%252Fjp%252Frs%252F%253Ffw%253D%2525E5%2525B1%252585%2525E9%252585%252592%2525E5%2525B1%25258B%2526fwp%253D%2525E6%2525A8%2525AA%2525E6%2525B5%25259C; GHistory=a567203%3A110%3A1669744542%2Cgg7t002%3A110%3A1669744522; ghistory_reserve=a567203%3A1669744542%2Cgg7t002%3A1669744522; __cribnotes_prm=__t_1669744547013_%7B%22uuid%22%3A%227a38921c-4d2e-4d30-bcb9-80d0686da7f0%22%7D; cto_bundle=sGp-Ll9kUDVaaHJYMHN3SkFtYyUyQkFmUTh2QWdka0pTQ0xqWlhHVFpETzB3ckh4dG1ya0J4NTB1Y0Z1V09mOUtMRDJWRlBKbDNSU2FTMWFDRXgyOFU4Q0l6UzAzYWFoWE9TMVd0WmVqOFZVcjBmQ3JHVHJyNkFodzhVcDlKTGdRYTBIWXNEdHdET3RzeENSc0Q0eDRGdUlwJTJGS3pWJTJGaXk1QW9sajk1ZUhJRHNaelpaYSUyRllHR0klMkZlNnhWWXIlMkJYTXB3eU00eUw; s_sess=%20sc_oncook%3D%3B%20sc_prop1%3Dr%3B%20cpnt_referer%3D%3B; _dc_gtm_UA-43329175-1=1; _td=639fb236-c732-4fb7-b246-0a6ed66c2a87; _ga_L9BHK8C28C=GS1.1.1669743685.1.1.1669744820.51.0.0; _ga=GA1.1.111137152.1669743686"

request["Sec-Ch-Ua"] = "\"Google Chrome\";v=\"105\", \"Not)A;Brand\";v=\"8\", \"Chromium\";v=\"105\""

request["Sec-Ch-Ua-Mobile"] = "?0"

request["Sec-Ch-Ua-Platform"] = "\"macOS\""

request["Sec-Fetch-Dest"] = "document"

request["Sec-Fetch-Mode"] = "navigate"

request["Sec-Fetch-Site"] = "same-origin"

request["Sec-Fetch-User"] = "?1"

request["Upgrade-Insecure-Requests"] = "1"

request["User-Agent"] = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36"

req_options = {

use_ssl: uri.scheme == "https",

}

response = Net::HTTP.start(uri.hostname, uri.port, req_options) do |http|

http.request(request)

end

doc = Nokogiri::HTML.parse(response.body.toutf8, nil, 'utf-8')

doc.css('.style_restaurantNameWrap__wvXSR').map do |target|

target.text

end

end

end
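The query-string handling in `GnaviCrawler#main` can be tried without sending a request. The following is a minimal sketch, assuming a hypothetical helper named `gnavi_search_url` (it is not part of the original class) that reproduces only the encoding step:

```ruby
require 'uri'

# Hypothetical helper for illustration: builds the same search URL that
# GnaviCrawler#main requests, without performing any HTTP call.
def gnavi_search_url(area, keyword)
  # Percent-encode each value as UTF-8 so Japanese text is safe in a URL.
  area = URI.encode_www_form_component(area)
  keyword = URI.encode_www_form_component(keyword)
  "https://r.gnavi.co.jp/area/jp/rs/?fwp=#{area}&date=&fw=#{keyword}"
end

puts gnavi_search_url('横浜', '居酒屋')
```

Because both values are encoded before interpolation, characters such as spaces or `&` in the input cannot break the query string.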

retty_crawler.rb

require 'uri'

require 'nokogiri'

require 'kconv'

require 'open-uri'

require 'yaml'

class RettyCrawler

def main(area, keyword)

area = URI.encode_www_form_component(area)

keyword = URI.encode_www_form_component(keyword)

file = URI.open("https://retty.me/restaurant-search/search-result/?latlng=35.466195%2C139.622704&free_word_area=#{area}&free_word_category=#{keyword}")

doc = Nokogiri::HTML.parse(file.read, nil, 'utf-8')

puts "### Fetching restaurant names ###"

restaurants_str = doc.css('div[is="restaurant-list"]')[0][':restaurants'].gsub('\n\n','')

YAML.load(restaurants_str).map do |restaurant|

restaurant['name'].gsub(/\\u([\da-fA-F]{4})/) { [$1].pack('H*').unpack('n*').pack('U*') }

end

end

end
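The `gsub` at the end of `RettyCrawler#main` turns literal `\uXXXX` escape sequences into the characters they stand for. As a minimal sketch, assuming a hypothetical helper named `unescape_unicode` (not in the original code), the same expression can be tried on a plain string:

```ruby
# Hypothetical helper for illustration: the same gsub used in
# RettyCrawler#main, extracted so it can be run on any string.
def unescape_unicode(text)
  text.gsub(/\\u([\da-fA-F]{4})/) do
    # "5c45" -> two raw bytes -> one 16-bit code unit -> UTF-8 character
    [$1].pack('H*').unpack('n*').pack('U*')
  end
end

# Single quotes keep the backslashes literal, as in the scraped attribute.
puts unescape_unicode('\u5c45\u9152\u5c4b')
```

Each match is converted on its own, so characters outside the Basic Multilingual Plane, which are escaped as two consecutive `\uXXXX` surrogates, would not be combined by this approach.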

tabelog_crawler.rb

require 'uri'

require 'nokogiri'

require 'mechanize'

require 'kconv'

class TabelogCrawler

def main(area, keyword)

agent = Mechanize.new

area = URI.encode_www_form_component(area)

keyword = URI.encode_www_form_component(keyword)

page = agent.get("https://tabelog.com/rst/rstsearch/?LstKind=1&voluntary_search=1&lid=top_navi1&sa=%E5%A4%A7%E9%98%AA%E5%B8%82&sk=#{keyword}&vac_net=&search_date=2022%2F11%2F29%28%E7%81%AB%29&svd=20221129&svt=1900&svps=2&hfc=1&form_submit=&area_datatype=MajorMunicipal&area_id=27100&key_datatype=Genre3&key_id=40&sa_input=#{area}")

doc = Nokogiri::HTML.parse(page.body.toutf8, nil, 'utf-8')

doc.css('.list-rst__rst-name-target').map do |target|

target.text

end

end

end

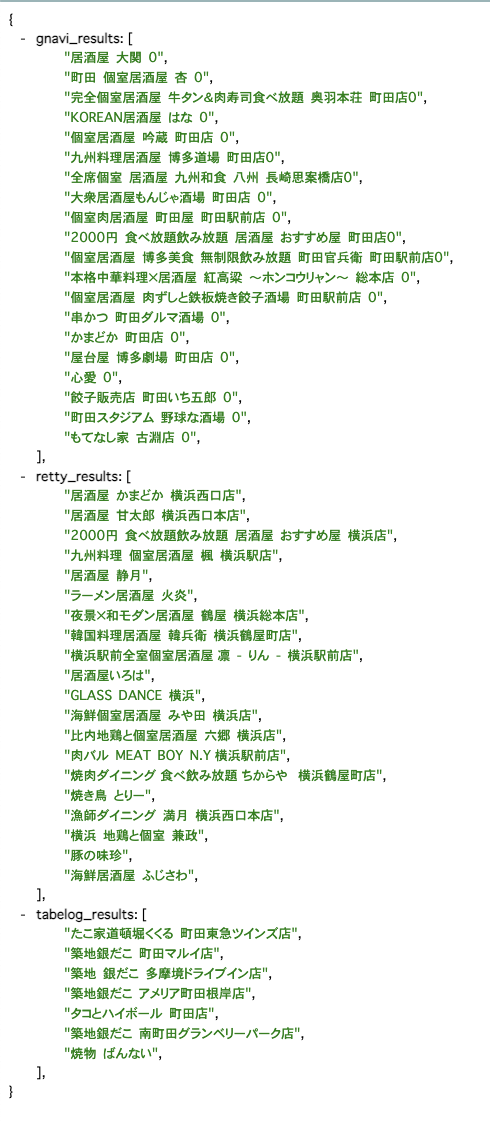

API implementation

The API itself only calls the classes above, so it is fairly simple.

require 'sinatra'

require 'sinatra/json'

require 'active_support/all'

require './gnavi_crawler'

require './retty_crawler'

require './tabelog_crawler'

set :show_exceptions, false

class InvalidError < StandardError; end

gnavi_crawler = GnaviCrawler.new

retty_crawler = RettyCrawler.new

tabelog_crawler = TabelogCrawler.new

get '/' do

keyword = params['keyword']

area = params['area']

raise InvalidError if keyword.blank? || area.blank?

gnavi_results = gnavi_crawler.main(area, keyword)

retty_results = retty_crawler.main(area, keyword)

tabelog_results = tabelog_crawler.main(area, keyword)

data = {

gnavi_results: gnavi_results,

retty_results: retty_results,

tabelog_results: tabelog_results,

}

json data

end

error InvalidError do

status 400

data = {result: 'Invalid input'}

json data

end

error 500 do

data = {result: 'Unexpected error'}

json data

end
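To show how the endpoint might be called, here is a minimal client sketch. It assumes the app runs locally on Sinatra's default port 4567; the names `API_BASE`, `search_uri`, and `search` are hypothetical and introduced only for illustration.

```ruby
require 'uri'
require 'net/http'
require 'json'

# Assumption: the Sinatra app is running locally on its default port.
API_BASE = 'http://localhost:4567/'

# Builds the request URI with both required query parameters encoded.
def search_uri(area, keyword, base: API_BASE)
  uri = URI.parse(base)
  uri.query = URI.encode_www_form(area: area, keyword: keyword)
  uri
end

# Sends the GET request and parses the JSON body into a Hash.
def search(area, keyword)
  response = Net::HTTP.get_response(search_uri(area, keyword))
  JSON.parse(response.body)
end
```

With the server running, `search('横浜', '居酒屋')` should return a Hash with the keys `gnavi_results`, `retty_results`, and `tabelog_results`. If either parameter is blank, the `InvalidError` handler responds with status 400 and a JSON body containing `result`.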