Introduction

This is a log of trying out a blog post published on IBM Power Data and AI.

It builds a RAG system on Linux on IBM Power11 and creates a chat UI that generates answers from PDF files.

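RAG (Retrieval-Augmented Generation) is an AI technique that combines retrieval and generation. Passages relevant to the question are first retrieved from a document collection, and a large language model then generates the answer using those passages as context.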
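Here is a minimal, dependency-free Python sketch that shows the two stages. It is not the code in the RAG_public repository. Retrieval here uses bag-of-words cosine similarity instead of the neural embeddings stored in ChromaDB. The generation step is stubbed out as building the prompt that would be passed to the LLM. The sample chunks are made up for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Lowercase word tokens (a crude stand-in for a real tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list, k: int = 2) -> list:
    """Retrieval step: return the k chunks most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list) -> str:
    """Generation step (stubbed): the retrieved chunks become the LLM's context."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\n\nContext:\n{joined}\n\nQuestion: {query}\nAnswer:"


# Hypothetical sample chunks standing in for text extracted from PDFs
chunks = [
    "Power11 LPARs can be provisioned on Power Virtual Server.",
    "ChromaDB stores document embeddings for similarity search.",
    "Gradio builds a web UI for chatting with the model.",
]
question = "Where are document embeddings stored?"
print(build_prompt(question, retrieve(question, chunks, k=1)))
```

In the actual setup, `retrieve` corresponds to a similarity query against ChromaDB. The prompt would be sent to the Granite model through llama-cpp-python instead of being printed.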

Reference procedure:

Environment

Operation was verified in the following environment.

Power11 LPAR (using Power Virtual Server)

OS: RHEL 9.6

1 CPU / 32 GB memory

(Note: the IBM Spyre accelerator is not used.)

# cat /etc/os-release

NAME="Red Hat Enterprise Linux"

VERSION="9.6 (Plow)"

ID="rhel"

ID_LIKE="fedora"

VERSION_ID="9.6"

PLATFORM_ID="platform:el9"

PRETTY_NAME="Red Hat Enterprise Linux 9.6 (Plow)"

ANSI_COLOR="0;31"

LOGO="fedora-logo-icon"

CPE_NAME="cpe:/o:redhat:enterprise_linux:9::baseos"

HOME_URL="https://www.redhat.com/"

DOCUMENTATION_URL="https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/9"

BUG_REPORT_URL="https://issues.redhat.com/"

REDHAT_BUGZILLA_PRODUCT="Red Hat Enterprise Linux 9"

REDHAT_BUGZILLA_PRODUCT_VERSION=9.6

REDHAT_SUPPORT_PRODUCT="Red Hat Enterprise Linux"

REDHAT_SUPPORT_PRODUCT_VERSION="9.6"
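Before following the remaining steps on another host, you can check it against this environment (RHEL 9.6 on ppc64le). The sketch below does this by parsing the `KEY=value` format shown above. It is a convenience sketch, not part of the original procedure. The function names `parse_os_release` and `check_platform` are hypothetical.

```python
import platform
import shlex


def parse_os_release(text: str) -> dict:
    """Parse the KEY=value lines of /etc/os-release into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # shlex.split strips shell-style quotes: '"9.6"' -> '9.6'
        parts = shlex.split(value)
        info[key] = parts[0] if parts else ""
    return info


def check_platform(info: dict, machine: str, version_id: str = "9.6") -> list:
    """Return a list of mismatches against RHEL <version_id> on ppc64le."""
    problems = []
    if info.get("ID") != "rhel":
        problems.append(f"ID is {info.get('ID')!r}, expected 'rhel'")
    if info.get("VERSION_ID") != version_id:
        problems.append(f"VERSION_ID is {info.get('VERSION_ID')!r}, expected {version_id!r}")
    if machine != "ppc64le":
        problems.append(f"machine is {machine!r}, expected 'ppc64le'")
    return problems


if __name__ == "__main__":
    try:
        with open("/etc/os-release", encoding="utf-8") as f:
            info = parse_os_release(f.read())
    except OSError as exc:
        print(f"cannot read /etc/os-release: {exc}")
    else:
        print(check_platform(info, platform.machine()) or "OK")
```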

The following components are used.

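| Component | Description |
|---|---|
| Power LPAR | Target deployment environment (a logical partition on an IBM Power system) |
| Micromamba + Python | Lightweight package and environment management tool |
| Gradio (streamlit) | Web-based UI for interacting with the chatbot |
| ChromaDB | Vector database that stores document embeddings |
| HuggingFace Granite (4-bit, GGUF) | Large language model (LLM) used for inference |
| LangChain + Docling | Libraries for document chunking and RAG integration |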
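In this stack, LangChain and Docling handle chunking. The sketch below shows what chunking means using a simplified, character-based splitter. It is not LangChain's or Docling's API, and the `chunk_size`/`overlap` defaults are arbitrary values chosen for illustration. Because the windows overlap, text near a chunk boundary appears in both neighbouring chunks and is less likely to be cut off from its context.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list:
    """Split text into fixed-size character windows that overlap by `overlap` chars."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window reaches the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks
```

For example, `chunk_text("abcdefghij", chunk_size=4, overlap=2)` returns `['abcd', 'cdef', 'efgh', 'ghij']`. Real splitters usually cut at paragraph or sentence boundaries rather than at fixed character offsets.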

Build

Installing prerequisite packages

dnf is already configured with the RHEL 9 BaseOS, AppStream, and Supplementary repositories.

# dnf repolist

Updating Subscription Management repositories.

This system has release set to 9.6 and it receives updates only for this release.

repo id repo name

rhel-9-for-ppc64le-appstream-rpms Red Hat Enterprise Linux 9 for Power, little endian - AppStream (RPMs)

rhel-9-for-ppc64le-baseos-rpms Red Hat Enterprise Linux 9 for Power, little endian - BaseOS (RPMs)

rhel-9-for-ppc64le-supplementary-rpms Red Hat Enterprise Linux 9 for Power, little endian - Supplementary (RPMs)

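Following the procedure, install git, mesa-libGL, bzip2, gcc, g++, zlib-devel, vim, and gcc-toolset-12. As the log shows, dnf resolves `g++` to the gcc-c++ package and `vim` to vim-enhanced.

- Run `dnf install git mesa-libGL bzip2 gcc g++ zlib-devel vim gcc-toolset-12`

Execution log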

[root@rag-test work]# dnf install git mesa-libGL bzip2 gcc g++ zlib-devel vim gcc-toolset-12

Updating Subscription Management repositories.

This system has release set to 9.6 and it receives updates only for this release.

Red Hat Enterprise Linux 9 for Power, little endian - BaseOS (RPMs) 129 MB/s | 51 MB 00:00

Red Hat Enterprise Linux 9 for Power, little endian - AppStream (RPMs) 148 MB/s | 63 MB 00:00

Red Hat Enterprise Linux 9 for Power, little endian - Supplementary (RPMs) 24 kB/s | 3.4 kB 00:00

Dependencies resolved.

============================================================================================================================================

Package Architecture Version Repository Size

============================================================================================================================================

Installing:

bzip2 ppc64le 1.0.8-10.el9_5 rhel-9-for-ppc64le-baseos-rpms 60 k

gcc ppc64le 11.5.0-5.el9_5 rhel-9-for-ppc64le-appstream-rpms 28 M

gcc-c++ ppc64le 11.5.0-5.el9_5 rhel-9-for-ppc64le-appstream-rpms 11 M

gcc-toolset-12 ppc64le 12.0-6.el9 rhel-9-for-ppc64le-appstream-rpms 11 k

git ppc64le 2.47.3-1.el9_6 rhel-9-for-ppc64le-appstream-rpms 51 k

mesa-libGL ppc64le 24.2.8-3.el9_6 rhel-9-for-ppc64le-appstream-rpms 187 k

vim-enhanced ppc64le 2:8.2.2637-22.el9_6.1 rhel-9-for-ppc64le-appstream-rpms 1.9 M

zlib-devel ppc64le 1.2.11-40.el9 rhel-9-for-ppc64le-appstream-rpms 47 k

Installing dependencies:

boost-regex ppc64le 1.75.0-10.el9 rhel-9-for-ppc64le-appstream-rpms 290 k

cpp ppc64le 11.5.0-5.el9_5 rhel-9-for-ppc64le-appstream-rpms 9.2 M

emacs-filesystem noarch 1:27.2-14.el9_6.2 rhel-9-for-ppc64le-appstream-rpms 8.9 k

environment-modules ppc64le 5.3.0-1.el9 rhel-9-for-ppc64le-baseos-rpms 592 k

gcc-toolset-12-annobin-docs noarch 11.08-2.el9 rhel-9-for-ppc64le-appstream-rpms 95 k

gcc-toolset-12-annobin-plugin-gcc ppc64le 11.08-2.el9 rhel-9-for-ppc64le-appstream-rpms 895 k

gcc-toolset-12-binutils ppc64le 2.38-19.el9 rhel-9-for-ppc64le-appstream-rpms 6.2 M

gcc-toolset-12-binutils-gold ppc64le 2.38-19.el9 rhel-9-for-ppc64le-appstream-rpms 1.0 M

gcc-toolset-12-dwz ppc64le 0.14-4.el9 rhel-9-for-ppc64le-appstream-rpms 138 k

gcc-toolset-12-gcc ppc64le 12.2.1-7.7.el9_4 rhel-9-for-ppc64le-appstream-rpms 37 M

gcc-toolset-12-gcc-c++ ppc64le 12.2.1-7.7.el9_4 rhel-9-for-ppc64le-appstream-rpms 12 M

gcc-toolset-12-gcc-gfortran ppc64le 12.2.1-7.7.el9_4 rhel-9-for-ppc64le-appstream-rpms 12 M

gcc-toolset-12-gdb ppc64le 11.2-4.el9 rhel-9-for-ppc64le-appstream-rpms 4.2 M

gcc-toolset-12-libquadmath-devel ppc64le 12.2.1-7.7.el9_4 rhel-9-for-ppc64le-appstream-rpms 180 k

gcc-toolset-12-libstdc++-devel ppc64le 12.2.1-7.7.el9_4 rhel-9-for-ppc64le-appstream-rpms 3.4 M

gcc-toolset-12-runtime ppc64le 12.0-6.el9 rhel-9-for-ppc64le-appstream-rpms 62 k

git-core ppc64le 2.47.3-1.el9_6 rhel-9-for-ppc64le-appstream-rpms 5.2 M

git-core-doc noarch 2.47.3-1.el9_6 rhel-9-for-ppc64le-appstream-rpms 3.0 M

glibc-devel ppc64le 2.34-168.el9_6.20 rhel-9-for-ppc64le-appstream-rpms 562 k

gpm-libs ppc64le 1.20.7-29.el9 rhel-9-for-ppc64le-appstream-rpms 24 k

kernel-headers ppc64le 5.14.0-570.58.1.el9_6 rhel-9-for-ppc64le-appstream-rpms 3.5 M

libX11 ppc64le 1.7.0-11.el9 rhel-9-for-ppc64le-appstream-rpms 699 k

libX11-common noarch 1.7.0-11.el9 rhel-9-for-ppc64le-appstream-rpms 209 k

libX11-xcb ppc64le 1.7.0-11.el9 rhel-9-for-ppc64le-appstream-rpms 12 k

libXau ppc64le 1.0.9-8.el9 rhel-9-for-ppc64le-appstream-rpms 34 k

libXext ppc64le 1.3.4-8.el9 rhel-9-for-ppc64le-appstream-rpms 43 k

libXfixes ppc64le 5.0.3-16.el9 rhel-9-for-ppc64le-appstream-rpms 22 k

libXxf86vm ppc64le 1.1.4-18.el9 rhel-9-for-ppc64le-appstream-rpms 21 k

libasan ppc64le 11.5.0-5.el9_5 rhel-9-for-ppc64le-appstream-rpms 435 k

libdrm ppc64le 2.4.123-2.el9 rhel-9-for-ppc64le-appstream-rpms 114 k

libgfortran ppc64le 11.5.0-5.el9_5 rhel-9-for-ppc64le-baseos-rpms 510 k

libglvnd ppc64le 1:1.3.4-1.el9 rhel-9-for-ppc64le-appstream-rpms 136 k

libglvnd-glx ppc64le 1:1.3.4-1.el9 rhel-9-for-ppc64le-appstream-rpms 150 k

libmpc ppc64le 1.2.1-4.el9 rhel-9-for-ppc64le-appstream-rpms 70 k

libpkgconf ppc64le 1.7.3-10.el9 rhel-9-for-ppc64le-baseos-rpms 42 k

libquadmath ppc64le 11.5.0-5.el9_5 rhel-9-for-ppc64le-baseos-rpms 193 k

libstdc++-devel ppc64le 11.5.0-5.el9_5 rhel-9-for-ppc64le-appstream-rpms 2.4 M

libubsan ppc64le 11.5.0-5.el9_5 rhel-9-for-ppc64le-appstream-rpms 202 k

libwayland-server ppc64le 1.21.0-1.el9 rhel-9-for-ppc64le-appstream-rpms 47 k

libxcb ppc64le 1.13.1-9.el9 rhel-9-for-ppc64le-appstream-rpms 263 k

libxcrypt-devel ppc64le 4.4.18-3.el9 rhel-9-for-ppc64le-appstream-rpms 32 k

libxshmfence ppc64le 1.3-10.el9 rhel-9-for-ppc64le-appstream-rpms 14 k

llvm-libs ppc64le 19.1.7-2.el9 rhel-9-for-ppc64le-appstream-rpms 29 M

make ppc64le 1:4.3-8.el9 rhel-9-for-ppc64le-baseos-rpms 554 k

mesa-dri-drivers ppc64le 24.2.8-3.el9_6 rhel-9-for-ppc64le-appstream-rpms 7.4 M

mesa-filesystem ppc64le 24.2.8-3.el9_6 rhel-9-for-ppc64le-appstream-rpms 11 k

mesa-libgbm ppc64le 24.2.8-3.el9_6 rhel-9-for-ppc64le-appstream-rpms 41 k

mesa-libglapi ppc64le 24.2.8-3.el9_6 rhel-9-for-ppc64le-appstream-rpms 44 k

perl-DynaLoader ppc64le 1.47-481.1.el9_6 rhel-9-for-ppc64le-appstream-rpms 25 k

perl-Error noarch 1:0.17029-7.el9 rhel-9-for-ppc64le-appstream-rpms 46 k

perl-File-Find noarch 1.37-481.1.el9_6 rhel-9-for-ppc64le-appstream-rpms 25 k

perl-Git noarch 2.47.3-1.el9_6 rhel-9-for-ppc64le-appstream-rpms 38 k

perl-TermReadKey ppc64le 2.38-11.el9 rhel-9-for-ppc64le-appstream-rpms 41 k

pkgconf ppc64le 1.7.3-10.el9 rhel-9-for-ppc64le-baseos-rpms 45 k

pkgconf-m4 noarch 1.7.3-10.el9 rhel-9-for-ppc64le-baseos-rpms 16 k

pkgconf-pkg-config ppc64le 1.7.3-10.el9 rhel-9-for-ppc64le-baseos-rpms 12 k

scl-utils ppc64le 1:2.0.3-4.el9 rhel-9-for-ppc64le-appstream-rpms 44 k

source-highlight ppc64le 3.1.9-12.el9 rhel-9-for-ppc64le-appstream-rpms 695 k

tcl ppc64le 1:8.6.10-7.el9 rhel-9-for-ppc64le-baseos-rpms 1.2 M

vim-common ppc64le 2:8.2.2637-22.el9_6.1 rhel-9-for-ppc64le-appstream-rpms 7.0 M

vim-filesystem noarch 2:8.2.2637-22.el9_6.1 rhel-9-for-ppc64le-baseos-rpms 13 k

Transaction Summary

============================================================================================================================================

Install 69 Packages

Total download size: 191 M

Installed size: 655 M

Is this ok [y/N]: y

Downloading Packages:

(1/69): pkgconf-1.7.3-10.el9.ppc64le.rpm 9.0 kB/s | 45 kB 00:05

~ omitted ~

(69/69): kernel-headers-5.14.0-570.58.1.el9_6.ppc64le.rpm 56 MB/s | 3.5 MB 00:00

--------------------------------------------------------------------------------------------------------------------------------------------

Total 29 MB/s | 191 MB 00:06

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Installing : libmpc-1.2.1-4.el9.ppc64le 1/69

~ omitted ~

Verifying : kernel-headers-5.14.0-570.58.1.el9_6.ppc64le 69/69

Installed products updated.

Installed:

boost-regex-1.75.0-10.el9.ppc64le

~ omitted ~

zlib-devel-1.2.11-40.el9.ppc64le

Complete!

[root@rag-test work]#
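After the install, you can look up the command-line tools on PATH to confirm they are available. The Python sketch below does this with `shutil.which`. The command list is an assumption derived from the packages above; mesa-libGL and zlib-devel provide libraries and headers rather than commands, so they are not checked.

gcc-toolset-12 installs GCC 12 under /opt/rh/gcc-toolset-12 and does not put it on PATH by default. As a result, `gcc` resolves to the system GCC 11.5 unless the toolset is enabled, for example with `scl enable gcc-toolset-12 bash`.

```python
import shutil
from typing import Callable, List, Optional

# Commands expected after the dnf install above. g++ is provided by the
# gcc-c++ package and vim by vim-enhanced (see the resolved package list).
EXPECTED_COMMANDS = ["git", "bzip2", "gcc", "g++", "vim"]


def missing_commands(commands: List[str],
                     which: Callable[[str], Optional[str]] = shutil.which) -> List[str]:
    """Return the commands that cannot be found on PATH."""
    return [cmd for cmd in commands if which(cmd) is None]


if __name__ == "__main__":
    missing = missing_commands(EXPECTED_COMMANDS)
    print("missing:", ", ".join(missing) if missing else "none")
```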

Next, clone the repository to the server.

- Clone the git repository https://github.com/HenrikMader/RAG_public

[root@rag-test work]# git clone https://github.com/HenrikMader/RAG_public

Cloning into 'RAG_public'...

remote: Enumerating objects: 587, done.

remote: Counting objects: 100% (143/143), done.

remote: Compressing objects: 100% (91/91), done.

remote: Total 587 (delta 81), reused 91 (delta 50), pack-reused 444 (from 2)

Receiving objects: 100% (587/587), 641.65 MiB | 40.49 MiB/s, done.

Resolving deltas: 100% (231/231), done.

Updating files: 100% (133/133), done.

[root@rag-test work]#

[root@rag-test work]# ls -l RAG_public/

total 128

-rw-r--r--. 1 root root 4734 Nov 2 23:41 README.md

-rw-r--r--. 1 root root 7347 Nov 2 23:41 admin_database.py

drwxr-xr-x. 2 root root 188 Nov 2 23:41 ansible

-rw-r--r--. 1 root root 50439 Nov 2 23:41 architecture_redbooks.png

-rw-r--r--. 1 root root 1417 Nov 2 23:41 check_retrieval.py

-rw-r--r--. 1 root root 6473 Nov 2 23:41 chromaDB_md.py

-rw-r--r--. 1 root root 2350 Nov 2 23:41 converter_docling.py

drwxr-xr-x. 4 root root 116 Nov 2 23:41 db

drwxr-xr-x. 8 root root 128 Nov 2 23:42 files_for_database

-rw-r--r--. 1 root root 3239 Nov 2 23:42 requirements.txt

-rw-r--r--. 1 root root 4564 Nov 2 23:42 run_model_openai_backend.py

-rw-r--r--. 1 root root 13784 Nov 2 23:42 streamlit.py

-rw-r--r--. 1 root root 13784 Nov 2 23:42 streamlit_adv.py

drwxr-xr-x. 2 root root 53 Nov 2 23:42 systemd

drwxr-xr-x. 3 root root 20 Nov 2 23:42 work_in_progress

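The repository has been cloned.

Installing micromamba

micromamba is a lightweight package manager for managing Python virtual environments. It is a minimal, standalone version of mamba, which is itself a faster reimplementation of conda. The following command downloads the latest linux-ppc64le build and extracts only bin/micromamba into the current directory (/work).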

[root@rag-test work]# curl -Ls https://micro.mamba.pm/api/micromamba/linux-ppc64le/latest | tar -xvj bin/micromamba

bin/micromamba

[root@rag-test work]# echo $?

0

- Add the micromamba command to PATH

Because it was extracted under /work, the directory to add is /work/bin.

(Check)

[root@rag-test work]# ls -l bin

total 25904

-rwxrwxr-x. 1 root root 26522328 Oct 17 11:31 micromamba

(Check PATH before the change)

[root@rag-test work]# echo $PATH

/root/.local/bin:/root/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin

(Add the directory to PATH)

[root@rag-test work]# export PATH=$PATH:/work/bin/

(Check)

[root@rag-test work]# which micromamba

/work/bin/micromamba
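`export` only changes PATH for the current shell session. To keep /work/bin on PATH after logging in again, add the same `export` line to ~/.bashrc. The Python sketch below shows the idea of appending a directory to PATH only when it is not already present, so that repeating the step does not add duplicates. It then locates micromamba with `shutil.which`, which accepts an explicit search path. The helper name `append_to_path` is hypothetical.

```python
import os
import shutil


def append_to_path(path_value: str, new_dir: str) -> str:
    """Append new_dir to a PATH string unless an equivalent entry is already there."""
    entries = [p for p in path_value.split(os.pathsep) if p]
    # normpath treats "/work/bin/" and "/work/bin" as the same directory
    existing = {os.path.normpath(p) for p in entries}
    if os.path.normpath(new_dir) not in existing:
        entries.append(new_dir)
    return os.pathsep.join(entries)


if __name__ == "__main__":
    search_path = append_to_path(os.environ.get("PATH", ""), "/work/bin")
    # Prints the first micromamba found on the search path, or None if there is none
    print(shutil.which("micromamba", path=search_path))
```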

- Load micromamba's shell settings into the current Bash shell (this is what makes `micromamba activate` work)

[root@rag-test work]# eval "$(micromamba shell hook --shell bash)"

[root@rag-test work]# echo $?

0

[root@rag-test work]# micromamba --version

2.3.3

Creating a Python virtual environment (rag_env) with micromamba

Run `micromamba create -n rag_env python=3.11`.

Execution log

[root@rag-test work]# micromamba create -n rag_env python=3.11

conda-forge/noarch 23.1MB @ 38.4MB/s 0.3s

conda-forge/linux-ppc64le 17.0MB @ 1.8MB/s 9.5s

Transaction

Prefix: /root/.local/share/mamba/envs/rag_env

Updating specs:

- python=3.11

Package Version Build Channel Size

─────────────────────────────────────────────────────────────────────────────────

Install:

─────────────────────────────────────────────────────────────────────────────────

+ _libgcc_mutex 0.1 conda_forge conda-forge 3kB

+ _openmp_mutex 4.5 2_gnu conda-forge 24kB

+ bzip2 1.0.8 heb0841c_8 conda-forge 205kB

+ ca-certificates 2025.10.5 hbd8a1cb_0 conda-forge 156kB

+ ld_impl_linux-ppc64le 2.44 h01e97ec_4 conda-forge 919kB

+ libexpat 2.7.1 hf512061_0 conda-forge 84kB

+ libffi 3.5.2 h4197a55_0 conda-forge 62kB

+ libgcc 15.2.0 h0d7acf9_7 conda-forge 738kB

+ libgcc-ng 15.2.0 hfdc3801_7 conda-forge 29kB

+ libgomp 15.2.0 h0d7acf9_7 conda-forge 456kB

+ liblzma 5.8.1 h190368a_2 conda-forge 140kB

+ libnsl 2.0.1 h190368a_1 conda-forge 36kB

+ libsqlite 3.50.4 h8cc42db_0 conda-forge 1MB

+ libstdcxx 15.2.0 h262982c_7 conda-forge 4MB

+ libuuid 2.41.2 h10e6d09_0 conda-forge 44kB

+ libxcrypt 4.4.36 ha17a0cc_1 conda-forge 124kB

+ libzlib 1.3.1 h190368a_2 conda-forge 69kB

+ ncurses 6.5 h8645e7e_3 conda-forge 982kB

+ openssl 3.5.4 hb9a03e6_0 conda-forge 3MB

+ pip 25.2 pyh8b19718_0 conda-forge 1MB

+ python 3.11.14 h4314784_2_cpython conda-forge 16MB

+ readline 8.2 hf4ca6f9_2 conda-forge 307kB

+ setuptools 80.9.0 pyhff2d567_0 conda-forge 749kB

+ tk 8.6.13 noxft_he73cbed_102 conda-forge 4MB

+ tzdata 2025b h78e105d_0 conda-forge 123kB

+ wheel 0.45.1 pyhd8ed1ab_1 conda-forge 63kB

+ zstd 1.5.7 h53ff00b_2 conda-forge 621kB

Summary:

Install: 27 packages

Total download: 35MB

─────────────────────────────────────────────────────────────────────────────────

Confirm changes: [Y/n] Y

Transaction starting

pip 1.2MB @ 4.9MB/s 0.2s

openssl 3.3MB @ 10.0MB/s 0.2s

tk 3.6MB @ 8.9MB/s 0.2s

libsqlite 1.0MB @ ??.?MB/s 0.1s

ld_impl_linux-ppc64le 919.2kB @ 3.1MB/s 0.1s

python 15.9MB @ 39.2MB/s 0.3s

setuptools 748.8kB @ ??.?MB/s 0.1s

libgcc 737.7kB @ 2.5MB/s 0.1s

libgomp 456.2kB @ 5.4MB/s 0.1s

readline 307.4kB @ ??.?MB/s 0.1s

zstd 621.1kB @ ??.?MB/s 0.1s

bzip2 204.6kB @ 2.1MB/s 0.1s

ncurses 981.7kB @ ??.?MB/s 0.2s

libxcrypt 124.1kB @ ??.?MB/s 0.1s

ca-certificates 155.9kB @ ??.?MB/s 0.1s

tzdata 123.0kB @ ??.?MB/s 0.1s

libexpat 84.0kB @ ??.?MB/s 0.0s

liblzma 140.1kB @ ??.?MB/s 0.1s

wheel 62.9kB @ ??.?MB/s 0.1s

libzlib 69.4kB @ ??.?MB/s 0.1s

libuuid 43.9kB @ ??.?MB/s 0.1s

libnsl 36.2kB @ ??.?MB/s 0.1s

_openmp_mutex 23.7kB @ ??.?MB/s 0.1s

libffi 61.9kB @ ??.?MB/s 0.2s

libgcc-ng 29.2kB @ ??.?MB/s 0.1s

_libgcc_mutex 2.5kB @ ??.?MB/s 0.1s

libstdcxx 4.1MB @ 1.7MB/s 2.3s

Linking tzdata-2025b-h78e105d_0

~ omitted ~

Transaction finished

To activate this environment, use:

micromamba activate rag_env

Or to execute a single command in this environment, use:

micromamba run -n rag_env mycommand

[root@rag-test work]#

Next, start using the Python virtual environment.

- Activate the rag_env virtual environment

[root@rag-test work]# micromamba activate rag_env

(rag_env) [root@rag-test work]#
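Once the environment is active, `python` should come from rag_env. According to the log above, its prefix is /root/.local/share/mamba/envs/rag_env. A small Python sketch can confirm both the interpreter prefix and the 3.11 version. You could save it as a hypothetical check_env.py and run it with `python check_env.py`. The names `check_env` and `check_env.py` are illustrative only.

```python
import sys
from pathlib import Path
from typing import List, Tuple


def check_env(prefix: str, version: Tuple[int, ...], env_name: str = "rag_env",
              expected: Tuple[int, int] = (3, 11)) -> List[str]:
    """Return problems if the interpreter is not the expected env/version."""
    problems = []
    if Path(prefix).name != env_name:
        problems.append(f"active prefix is {prefix}, not {env_name}")
    if tuple(version[:2]) != expected:
        problems.append(f"Python {version[0]}.{version[1]} found, expected {expected[0]}.{expected[1]}")
    return problems


if __name__ == "__main__":
    issues = check_env(sys.prefix, tuple(sys.version_info[:3]))
    print("OK" if not issues else "\n".join(issues))
```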

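Install packages such as pytorch-cpu, pyyaml, httptools, onnxruntime, "pandas<1.6.0", and tokenizers into the virtual environment from the rocketce and defaults channels.

Run `micromamba install -c rocketce -c defaults pytorch-cpu pyyaml httptools onnxruntime "pandas<1.6.0" tokenizers`. As the log shows, resolving these packages also removes or downgrades some conda-forge packages, including Python (3.11.14 from conda-forge to 3.11.8 from rocketce).

micromamba install execution log (long)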

[root@rag-test work]# micromamba install -c rocketce -c defaults pytorch-cpu pyyaml httptools onnxruntime "pandas<1.6.0" tokenizers

warning libmamba 'repo.anaconda.com', a commercial channel hosted by Anaconda.com, is used.

warning libmamba Please make sure you understand Anaconda Terms of Services.

warning libmamba See: https://legal.anaconda.com/policies/en/

pkgs/r/linux-ppc64le ??.?MB @ ??.?MB/s 0.1s

pkgs/r/noarch 2.0MB @ 6.2MB/s 0.2s

rocketce/noarch ??.?MB @ ??.?MB/s 0.2s

rocketce/linux-ppc64le 197.1kB @ 367.2kB/s 0.4s

pkgs/main/linux-ppc64le 3.5MB @ 5.4MB/s 0.4s

pkgs/main/noarch 794.8kB @ 1.4MB/s 0.5s

Pinned packages:

- python=3.11

Transaction

Prefix: /root/.local/share/mamba/envs/rag_env

Updating specs:

- pytorch-cpu

- pyyaml

- httptools

- onnxruntime

- pandas<1.6.0

- tokenizers

Package Version Build Channel Size

─────────────────────────────────────────────────────────────────────────────────────────────────

Install:

─────────────────────────────────────────────────────────────────────────────────────────────────

+ _pytorch_select 1.0 cpu_2 rocketce 4kB

+ av 10.0.0 py311h6b2f95f_2 rocketce 859kB

+ blas 1.0 openblas rocketce 10kB

+ bottleneck 1.3.5 py311h34f6284_0 pkgs/main 143kB

+ brotli-python 1.0.9 py311h4a02239_7 pkgs/main 350kB

+ certifi 2023.11.17 py311h6ffa863_0 pkgs/main 161kB

+ cffi 1.15.1 py311hf118e41_3 pkgs/main 304kB

+ charset-normalizer 3.3.2 pyhd3eb1b0_0 pkgs/main 46kB

+ click 8.1.7 py311h6ffa863_0 pkgs/main 221kB

+ coloredlogs 15.0.1 py311h6ffa863_1 pkgs/main 77kB

+ cryptography 43.0.3 opence_py311_h0780ff5_1 rocketce 2MB

+ ffmpeg 4.2.2 opence_0 rocketce 49MB

+ filelock 3.9.0 py311h6ffa863_0 pkgs/main 21kB

+ flatbuffers 23.1.21 h8171b20_0 rocketce 1MB

+ freetype 2.12.1 hd23a775_0 pkgs/main 771kB

+ fsspec 2023.10.0 pyh6818f58_0 rocketce 124kB

+ giflib 5.2.1 hf118e41_3 pkgs/main 91kB

+ gmp 6.2.1 h29c3540_3 pkgs/main 612kB

+ gmpy2 2.1.2 py311hb5ca514_0 pkgs/main 192kB

+ httptools 0.5.0 py311hf118e41_1 pkgs/main 131kB

+ huggingface_hub 0.20.0 pyh8d54cd5_0 rocketce 217kB

+ humanfriendly 10.0 py311h6ffa863_1 pkgs/main 155kB

+ idna 3.4 py311h6ffa863_0 pkgs/main 97kB

+ jinja2 3.1.2 py311h6ffa863_0 pkgs/main 297kB

+ jpeg 9e hf118e41_1 pkgs/main 414kB

+ lame 3.100 h69ee22e_1003 rocketce 399kB

+ lcms2 2.12 h2045e0b_0 pkgs/main 367kB

+ lerc 3.0 h29c3540_0 pkgs/main 218kB

+ leveldb 1.23 h70bd564_2 rocketce 263kB

+ libdeflate 1.17 hf118e41_1 pkgs/main 76kB

+ libgfortran-ng 11.2.0 hb3889a9_1 pkgs/main 20kB

+ libgfortran5 11.2.0 h1234567_1 pkgs/main 2MB

+ libopenblas 0.3.27 openmp_h1234567_1 rocketce 5MB

+ libopus 1.3.1 h3275034_1 rocketce 336kB

+ libpng 1.6.39 h4177371_0 rocketce 311kB

+ libprotobuf 3.21.12 h1776448_0 rocketce 2MB

+ libstdcxx-ng 11.2.0 h1234567_1 pkgs/main 875kB

+ libtiff 4.5.1 h4a02239_0 pkgs/main 657kB

+ libvpx 1.13.1 h4a02239_0 pkgs/main 2MB

+ libwebp 1.3.2 h0f96ee2_0 pkgs/main 102kB

+ libwebp-base 1.3.2 hf118e41_0 pkgs/main 442kB

+ llvm-openmp 14.0.6 h38e9386_0 rocketce 1MB

+ lmdb 0.9.29 h29c3540_0 pkgs/main 527kB

+ lz4-c 1.9.4 h4a02239_0 pkgs/main 192kB

+ markupsafe 2.1.1 py311hf118e41_0 pkgs/main 26kB

+ mpc 1.1.0 h107f33c_1 pkgs/main 122kB

+ mpfr 4.0.2 hb69a4c5_1 pkgs/main 508kB

+ mpmath 1.3.0 py311h6ffa863_0 pkgs/main 1MB

+ networkx 3.1 py311h6ffa863_0 pkgs/main 3MB

+ numactl 2.0.16 hba61f60_1 rocketce 121kB

+ numexpr 2.8.7 py311hc46fc55_0 pkgs/main 174kB

+ numpy 1.26.0 py311he13ab3c_0 rocketce 10kB

+ numpy-base 1.26.0 py311h65089e6_0 rocketce 7MB

+ onnxruntime 1.16.3 h8a242e7_cpu_py311_pb4.21.12_2 rocketce 7MB

+ openjpeg 2.4.0 hfe35807_0 pkgs/main 394kB

+ packaging 23.2 pyhee63e60_0 rocketce 49kB

+ pandas 1.5.3 py311hefa2406_0 pkgs/main 13MB

+ pillow 10.0.1 py311he33076b_0 pkgs/main 960kB

+ protobuf 4.21.12 py311ha7baec7_1 rocketce 390kB

+ pycparser 2.21 pyhd3eb1b0_0 pkgs/main 97kB

+ pyopenssl 24.2.1 pyh6fd39a9_2 rocketce 128kB

+ pysocks 1.7.1 py311h6ffa863_0 pkgs/main 36kB

+ python-dateutil 2.8.2 pyhd3eb1b0_0 pkgs/main 238kB

+ python-flatbuffers 23.1.21 pyh3ef9d41_0 rocketce 34kB

+ pytorch-base 2.1.2 cpu_py311_pb4.21.12_8 rocketce 81MB

+ pytorch-cpu 2.1.2 py311_1 rocketce 4kB

+ pytz 2023.3.post1 py311h6ffa863_0 pkgs/main 225kB

+ pyyaml 6.0.1 py311hf118e41_0 pkgs/main 213kB

+ re2 2022.04.01 h29c3540_0 pkgs/main 276kB

+ requests 2.31.0 py311h6ffa863_0 pkgs/main 127kB

+ scipy 1.11.3 py311he4133b3_0 pkgs/main 25MB

+ sentencepiece 0.1.99 h8067ab1_py311_pb4.21.12_2 rocketce 3MB

+ six 1.16.0 pyhd3eb1b0_1 pkgs/main 19kB

+ snappy 1.1.9 h29c3540_0 pkgs/main 674kB

+ sqlite 3.41.2 hf118e41_0 pkgs/main 2MB

+ sympy 1.11.1 py311h6ffa863_0 pkgs/main 17MB

+ tabulate 0.8.10 py311h6ffa863_0 pkgs/main 58kB

+ tokenizers 0.15.2 opence_py311_hb3c3cb4_0 rocketce 2MB

+ torchdata 0.7.1 py311_0 rocketce 302kB

+ torchtext-base 0.16.2 cpu_py311_3 rocketce 2MB

+ torchvision-base 0.16.2 cpu_py311_1 rocketce 1MB

+ tqdm 4.65.0 py311h7837921_0 pkgs/main 164kB

+ typing-extensions 4.11.0 0 rocketce 9kB

+ typing_extensions 4.11.0 pyh7b35c80_0 rocketce 37kB

+ urllib3 1.26.18 py311h6ffa863_0 pkgs/main 257kB

+ xz 5.4.6 h25e8235_0 rocketce 670kB

+ yaml 0.2.5 h7b6447c_0 pkgs/main 83kB

+ zlib 1.2.13 hb9dc296_0 rocketce 108kB

Remove:

─────────────────────────────────────────────────────────────────────────────────────────────────

- liblzma 5.8.1 h190368a_2 conda-forge Cached

- libsqlite 3.50.4 h8cc42db_0 conda-forge Cached

- libstdcxx 15.2.0 h262982c_7 conda-forge Cached

- libzlib 1.3.1 h190368a_2 conda-forge Cached

Downgrade:

─────────────────────────────────────────────────────────────────────────────────────────────────

- ld_impl_linux-ppc64le 2.44 h01e97ec_4 conda-forge Cached

+ ld_impl_linux-ppc64le 2.38 hec883e6_1 pkgs/main 890kB

- libffi 3.5.2 h4197a55_0 conda-forge Cached

+ libffi 3.4.4 h4a02239_0 pkgs/main 146kB

- libuuid 2.41.2 h10e6d09_0 conda-forge Cached

+ libuuid 1.41.5 h7e908c5_0 rocketce 33kB

- python 3.11.14 h4314784_2_cpython conda-forge Cached

+ python 3.11.8 h8d86120_0 rocketce 31MB

- tk 8.6.13 noxft_he73cbed_102 conda-forge Cached

+ tk 8.6.12 h7e00dab_0 pkgs/main 3MB

- zstd 1.5.7 h53ff00b_2 conda-forge Cached

+ zstd 1.5.5 h57e4825_0 pkgs/main 740kB

Summary:

Install: 88 packages

Remove: 4 packages

Downgrade: 6 packages

Total download: 282MB

─────────────────────────────────────────────────────────────────────────────────────────────────

Confirm changes: [Y/n] Y

Transaction starting

sympy 17.2MB @ 11.1MB/s 1.1s

scipy 24.7MB @ 12.4MB/s 1.4s

python 31.3MB @ 15.1MB/s 1.8s

pandas 13.2MB @ 9.4MB/s 1.1s

ffmpeg 49.0MB @ 20.9MB/s 2.2s

numpy-base 7.2MB @ 5.4MB/s 1.3s

pytorch-base 80.7MB @ 28.7MB/s 2.8s

onnxruntime 7.1MB @ 5.3MB/s 1.2s

networkx 3.5MB @ 4.7MB/s 0.5s

tk 3.5MB @ 2.3MB/s 0.9s

libopenblas 4.9MB @ 3.8MB/s 1.2s

sentencepiece 3.4MB @ 2.9MB/s 1.1s

libprotobuf 2.5MB @ 1.3MB/s 1.1s

sqlite 1.6MB @ 1.2MB/s 0.9s

tokenizers 2.2MB @ 1.1MB/s 1.1s

torchtext-base 1.8MB @ 998.8kB/s 1.2s

libvpx 1.6MB @ 3.2MB/s 0.4s

libgfortran5 1.6MB @ 1.5MB/s 0.9s

torchvision-base 1.5MB @ 871.5kB/s 0.9s

flatbuffers 1.4MB @ 833.0kB/s 1.0s

cryptography 1.5MB @ 689.8kB/s 1.2s

mpmath 1.1MB @ 872.5kB/s 0.8s

llvm-openmp 1.2MB @ 1.1MB/s 1.1s

pillow 959.9kB @ 1.5MB/s 0.5s

ld_impl_linux-ppc64le 890.0kB @ 1.1MB/s 0.5s

freetype 771.4kB @ 1.1MB/s 0.4s

snappy 674.4kB @ 1.0MB/s 0.3s

libstdcxx-ng 875.0kB @ 280.6kB/s 0.8s

zstd 740.0kB @ 490.2kB/s 0.7s

av 859.2kB @ 789.9kB/s 1.1s

libtiff 657.2kB @ 200.3kB/s 0.7s

mpfr 508.2kB @ 658.1kB/s 0.3s

gmp 612.4kB @ 326.6kB/s 0.9s

lmdb 527.3kB @ 162.7kB/s 0.8s

libwebp-base 442.5kB @ 325.5kB/s 0.4s

xz 670.3kB @ 363.8kB/s 1.3s

jpeg 414.4kB @ ??.?MB/s 0.6s

lame 398.6kB @ 234.2kB/s 0.5s

openjpeg 393.9kB @ 325.5kB/s 0.4s

lcms2 367.2kB @ 523.0kB/s 0.5s

brotli-python 349.8kB @ ??.?MB/s 0.6s

protobuf 390.0kB @ 404.3kB/s 0.9s

libopus 336.4kB @ 221.0kB/s 0.8s

cffi 304.4kB @ 217.0kB/s 0.6s

jinja2 296.9kB @ 875.7kB/s 0.3s

libpng 311.2kB @ 267.2kB/s 0.9s

python-dateutil 238.1kB @ 871.5kB/s 0.2s

urllib3 257.1kB @ ??.?MB/s 0.4s

torchdata 302.1kB @ 352.1kB/s 0.8s

click 221.3kB @ 709.7kB/s 0.2s

pytz 224.9kB @ ??.?MB/s 0.3s

re2 275.6kB @ 48.3kB/s 0.7s

gmpy2 192.1kB @ ??.?MB/s 0.2s

leveldb 263.0kB @ 196.2kB/s 0.9s

huggingface_hub 216.7kB @ 93.0kB/s 0.4s

pyyaml 213.0kB @ ??.?MB/s 0.4s

numexpr 173.9kB @ 184.6kB/s 0.2s

tqdm 164.4kB @ 259.6kB/s 0.1s

certifi 160.5kB @ 491.4kB/s 0.2s

libffi 146.3kB @ ??.?MB/s 0.2s

lerc 217.8kB @ 131.8kB/s 0.8s

lz4-c 191.6kB @ ??.?MB/s 0.5s

httptools 131.0kB @ ??.?MB/s 0.3s

humanfriendly 154.8kB @ ??.?MB/s 0.5s

requests 126.8kB @ ??.?MB/s 0.3s

bottleneck 143.3kB @ ??.?MB/s 0.7s

mpc 122.3kB @ 212.9kB/s 0.5s

pyopenssl 127.7kB @ 83.2kB/s 0.9s

zlib 108.4kB @ 83.4kB/s 0.4s

fsspec 124.0kB @ 99.6kB/s 0.7s

pycparser 96.6kB @ 354.9kB/s 0.1s

numactl 120.7kB @ 93.0kB/s 0.8s

libwebp 101.8kB @ ??.?MB/s 0.3s

idna 97.0kB @ ??.?MB/s 0.2s

giflib 91.4kB @ ??.?MB/s 0.2s

tabulate 58.4kB @ 77.9kB/s 0.2s

charset-normalizer 45.5kB @ ??.?MB/s 0.1s

coloredlogs 77.4kB @ ??.?MB/s 0.4s

libdeflate 75.6kB @ ??.?MB/s 0.4s

yaml 83.1kB @ 19.4kB/s 0.6s

pysocks 35.7kB @ ??.?MB/s 0.3s

packaging 48.9kB @ 27.3kB/s 0.6s

typing_extensions 37.0kB @ 40.9kB/s 0.7s

filelock 20.5kB @ ??.?MB/s 0.2s

markupsafe 25.7kB @ ??.?MB/s 0.3s

six 18.9kB @ ??.?MB/s 0.1s

python-flatbuffers 33.8kB @ 29.8kB/s 0.6s

libuuid 32.8kB @ 27.3kB/s 0.6s

typing-extensions 9.4kB @ ??.?MB/s 0.3s

numpy 9.9kB @ 11.3kB/s 0.4s

libgfortran-ng 20.2kB @ ??.?MB/s 0.5s

blas 9.6kB @ 15.4kB/s 0.5s

_pytorch_select 4.3kB @ 5.3kB/s 0.3s

pytorch-cpu 4.5kB @ ??.?MB/s 0.5s

~ omitted ~

Transaction finished

(rag_env) [root@rag-test work]#

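Next, use pip to install the libraries needed for machine learning and data processing in one step. This builds a lightweight environment for inference, NLP, and data processing.

- Run `pip install -U --extra-index-url https://repo.fury.io/mgiessing --prefer-binary streamlit chromadb transformers psutil langchain sentence_transformers gradio==3.50.2 llama-cpp-python scikit-learn docling einops openai`.

The options work as follows:

- `--extra-index-url` adds https://repo.fury.io/mgiessing as an additional package index alongside PyPI. As the log below shows, prebuilt ppc64le wheels such as chromadb, psutil, llama_cpp_python, and scikit_learn are downloaded from it.
- `--prefer-binary` makes pip prefer prebuilt wheels over source distributions.
- `-U` upgrades the specified packages to the newest available versions.

For reference, the preceding micromamba install command:

Channels: packages are fetched from rocketce and defaults.

Main libraries:

pytorch-cpu: CPU build of PyTorch (no GPU required)

onnxruntime: inference engine for ONNX models

tokenizers: fast tokenizers for NLP

pandas <1.6.0: data-processing library (version constrained)

pyyaml: reading and writing YAML files

httptools: fast HTTP parser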

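| Package | Description |
|---|---|
| streamlit | Web UI library for quickly building data applications; well suited to data visualization and dashboards |
| chromadb | Vector database for storing embeddings and running similarity search |
| transformers | Hugging Face library for working with pretrained models such as BERT and GPT |
| psutil | Utility for monitoring system resources (CPU, memory, disk, etc.) |
| langchain | Framework for building applications that use large language models (LLMs) |
| sentence_transformers | Library that converts sentences into embedding vectors; used for similarity search, classification, and clustering |
| gradio==3.50.2 | Library for quickly building interactive UIs for machine learning models; version 3.50.2 is pinned |
| llama-cpp-python | Python bindings for llama.cpp, a C/C++ LLM inference engine originally built for Meta's LLaMA models; runs GGUF models such as the quantized Granite model used here |
| scikit-learn | Standard machine learning library (classification, regression, clustering, etc.) |
| docling | Document conversion library that turns PDFs and other formats into structured output such as Markdown or JSON |
| einops | Library for writing deep learning tensor operations concisely |
| openai | Official Python library for the OpenAI API (ChatGPT, GPT-4, etc.) |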
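Some of these packages are imported under a different name than the one passed to pip. For example, llama-cpp-python is imported as `llama_cpp` and scikit-learn as `sklearn`. Run inside rag_env, the following sketch checks that each package can be located with `importlib.util.find_spec`. It does not actually import the packages, so the check stays quick even for heavy packages such as transformers. The mapping is written for this package list and is not an official table.

```python
import importlib.util
from typing import Callable, Dict, List

# pip distribution name -> module name used in "import ..."
PACKAGES: Dict[str, str] = {
    "streamlit": "streamlit",
    "chromadb": "chromadb",
    "transformers": "transformers",
    "psutil": "psutil",
    "langchain": "langchain",
    "sentence_transformers": "sentence_transformers",
    "gradio": "gradio",
    "llama-cpp-python": "llama_cpp",
    "scikit-learn": "sklearn",
    "docling": "docling",
    "einops": "einops",
    "openai": "openai",
}


def missing_modules(packages: Dict[str, str],
                    find_spec: Callable = importlib.util.find_spec) -> List[str]:
    """Return pip names whose top-level module cannot be located (nothing is imported)."""
    return [pkg for pkg, module in packages.items() if find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_modules(PACKAGES)
    print("missing:", ", ".join(missing) if missing else "none")
```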

pip install execution log

(rag_env) [root@rag-test work]# pip install -U --extra-index-url https://repo.fury.io/mgiessing --prefer-binary streamlit chromadb transformers psutil langchain sentence_transformers gradio==3.50.2 llama-cpp-python scikit-learn docling einops openai

Looking in indexes: https://pypi.org/simple, https://repo.fury.io/mgiessing

Collecting streamlit

Downloading streamlit-1.51.0-py3-none-any.whl.metadata (9.5 kB)

Collecting chromadb

Downloading https://repo.fury.io/mgiessing/-/ver_1fOQ9y/chromadb-1.3.0-cp39-abi3-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl (21.5 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 21.5/21.5 MB 10.0 MB/s 0:00:02

Collecting transformers

Downloading transformers-4.57.1-py3-none-any.whl.metadata (43 kB)

Collecting psutil

Downloading https://repo.fury.io/mgiessing/-/ver_j9WDi/psutil-7.1.2-cp36-abi3-manylinux2014_ppc64le.manylinux_2_17_ppc64le.whl (262 kB)

Collecting langchain

Downloading langchain-1.0.3-py3-none-any.whl.metadata (4.7 kB)

Collecting sentence_transformers

Downloading sentence_transformers-5.1.2-py3-none-any.whl.metadata (16 kB)

Collecting gradio==3.50.2

Downloading gradio-3.50.2-py3-none-any.whl.metadata (17 kB)

Collecting llama-cpp-python

Downloading https://repo.fury.io/mgiessing/-/ver_1m3RK5/llama_cpp_python-0.3.16-cp311-cp311-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl (16.3 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 16.3/16.3 MB 34.8 MB/s 0:00:00

Collecting scikit-learn

Downloading https://repo.fury.io/mgiessing/-/ver_WFEdE/scikit_learn-1.7.2-cp311-cp311-manylinux2014_ppc64le.manylinux_2_17_ppc64le.whl (9.9 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 9.9/9.9 MB 25.1 MB/s 0:00:00

Collecting docling

Downloading docling-2.60.0-py3-none-any.whl.metadata (11 kB)

Collecting einops

Downloading einops-0.8.1-py3-none-any.whl.metadata (13 kB)

Collecting openai

Downloading openai-2.6.1-py3-none-any.whl.metadata (29 kB)

Collecting aiofiles<24.0,>=22.0 (from gradio==3.50.2)

Downloading aiofiles-23.2.1-py3-none-any.whl.metadata (9.7 kB)

~ omitted ~

Collecting oauthlib>=3.0.0 (from requests-oauthlib->kubernetes>=28.1.0->chromadb)

Downloading oauthlib-3.3.1-py3-none-any.whl.metadata (7.9 kB)

Downloading gradio-3.50.2-py3-none-any.whl (20.3 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 20.3/20.3 MB 1.1 MB/s 0:00:17

Downloading gradio_client-0.6.1-py3-none-any.whl (299 kB)

Downloading aiofiles-23.2.1-py3-none-any.whl (15 kB)

Downloading altair-5.5.0-py3-none-any.whl (731 kB)

~ omitted ~

Building wheels for collected packages: pylatexenc, pypika, antlr4-python3-runtime

DEPRECATION: Building 'pylatexenc' using the legacy setup.py bdist_wheel mechanism, which will be removed in a future version. pip 25.3 will enforce this behaviour change. A possible replacement is to use the standardized build interface by setting the `--use-pep517` option, (possibly combined with `--no-build-isolation`), or adding a `pyproject.toml` file to the source tree of 'pylatexenc'. Discussion can be found at https://github.com/pypa/pip/issues/6334

Building wheel for pylatexenc (setup.py) ... done

Created wheel for pylatexenc: filename=pylatexenc-2.10-py3-none-any.whl size=136897 sha256=e9785c7c08c55db04a1ab2473282d26489ab6380cd0eff04bcc7ece22a63d618

Stored in directory: /root/.cache/pip/wheels/b1/7a/33/9fdd892f784ed4afda62b685ae3703adf4c91aa0f524c28f03

Building wheel for pypika (pyproject.toml) ... done

Created wheel for pypika: filename=pypika-0.48.9-py2.py3-none-any.whl size=53803 s

~ omitted ~

Successfully installed Shapely-2.1.2 XlsxWriter-3.2.9 accelerate-1.11.0 aiofiles-23.2.1 altair-5.5.0 annotated-doc-0.0.3 annotated-types-0.7.0 antlr4-python3-runtime-4.9.3 anyio-4.11.0 attrs-25.4.0 backoff-2.2.1 bcrypt-5.0.0 beautifulsoup4-4.14.2 blinker-1.9.0 build-1.3.0 cachetools-6.2.1 certifi-2025.10.5 chromadb-1.3.0 colorlog-6.10.1 contourpy-1.3.3 cycler-0.12.1 dill-0.4.0 diskcache-5.6.3 distro-1.9.0 docling-2.60.0 docling-core-2.50.0 docling-ibm-models-3.10.2 docling-parse-4.7.0 durationpy-0.10 einops-0.8.1 et-xmlfile-2.0.0 faker-37.12.0 fastapi-0.120.4 ffmpy-0.6.4 filetype-1.2.0 fonttools-4.60.1 gitdb-4.0.12 gitpython-3.1.45 google-auth-2.42.1 googleapis-common-protos-1.71.0 gradio-3.50.2 gradio-client-0.6.1 grpcio-1.76.0 h11-0.16.0 httpcore-1.0.9 httptools-0.6.4 httpx-0.28.1 huggingface-hub-0.36.0 importlib-metadata-8.7.0 importlib-resources-6.5.2 jiter-0.11.1 joblib-1.5.2 jsonlines-4.0.0 jsonpatch-1.33 jsonpointer-3.0.0 jsonref-1.1.0 jsonschema-4.25.1 jsonschema-specifications-2025.9.1 kiwisolver-1.4.9 kubernetes-34.1.0 langchain-1.0.3 langchain-core-1.0.2 langgraph-1.0.2 langgraph-checkpoint-3.0.0 langgraph-prebuilt-1.0.2 langgraph-sdk-0.2.9 langsmith-0.4.39 latex2mathml-3.78.1 llama-cpp-python-0.3.16 lxml-6.0.2 markdown-it-py-4.0.0 marko-2.2.1 matplotlib-3.10.7 mdurl-0.1.2 mmh3-5.2.0 mpire-2.10.2 multiprocess-0.70.18 narwhals-2.10.1 oauthlib-3.3.1 omegaconf-2.3.0 openai-2.6.1 opencv-python-4.11.0.86 openpyxl-3.1.5 opentelemetry-api-1.38.0 opentelemetry-exporter-otlp-proto-common-1.38.0 opentelemetry-exporter-otlp-proto-grpc-1.38.0 opentelemetry-proto-1.38.0 opentelemetry-sdk-1.38.0 opentelemetry-semantic-conventions-0.59b0 orjson-3.11.4 ormsgpack-1.10.0 overrides-7.7.0 pandas-2.3.3 pluggy-1.6.0 polyfactory-2.22.3 posthog-5.4.0 protobuf-6.33.0 psutil-7.1.2 pyarrow-21.0.0 pyasn1-0.6.1 pyasn1-modules-0.4.2 pybase64-1.4.2 pyclipper-1.3.0.post6 pydantic-2.12.3 pydantic-core-2.41.4 pydantic-settings-2.11.0 pydeck-0.9.1 pydub-0.25.1 pygments-2.19.2 
pylatexenc-2.10 pyparsing-3.2.5 pypdfium2-4.30.0 pypika-0.48.9 pyproject_hooks-1.2.0 python-docx-1.2.0 python-dotenv-1.2.1 python-multipart-0.0.20 python-pptx-1.0.2 rapidocr-3.4.2 referencing-0.37.0 regex-2025.10.23 requests-2.32.5 requests-oauthlib-2.0.0 requests-toolbelt-1.0.0 rich-14.2.0 rpds-py-0.28.0 rsa-4.9.1 rtree-1.4.0 safetensors-0.6.2 scikit-learn-1.7.2 semantic-version-2.10.0 semchunk-2.2.2 sentence_transformers-5.1.2 shellingham-1.5.4 smmap-5.0.2 sniffio-1.3.1 soupsieve-2.8 starlette-0.49.3 streamlit-1.51.0 sympy-1.14.0 tabulate-0.9.0 tenacity-9.1.2 threadpoolctl-3.6.0 tokenizers-0.22.1 toml-0.10.2 torch-2.9.0+cpu tornado-6.2 transformers-4.57.1 typer-0.19.2 typing-extensions-4.15.0 typing-inspection-0.4.2 tzdata-2025.2 uvicorn-0.38.0 uvloop-0.22.1 watchdog-6.0.0 watchfiles-1.1.1 websocket-client-1.9.0 websockets-11.0.3 xxhash-3.6.0 zipp-3.23.0 zstandard-0.25.0

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager, possibly rendering your system unusable. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv. Use the --root-user-action option if you know what you are doing and want to suppress this warning.

(rag_env) [root@rag-test work]#

(rag_env) [root@rag-test work]# pip list

Package Version

---------------------------------------- --------------

accelerate 1.11.0

aiofiles 23.2.1

altair 5.5.0

annotated-doc 0.0.3

annotated-types 0.7.0

antlr4-python3-runtime 4.9.3

anyio 4.11.0

attrs 25.4.0

av 10.0.0

backoff 2.2.1

bcrypt 5.0.0

beautifulsoup4 4.14.2

blinker 1.9.0

Bottleneck 1.3.5

Brotli 1.0.9

build 1.3.0

cachetools 6.2.1

certifi 2025.10.5

cffi 1.15.1

charset-normalizer 3.3.2

chromadb 1.3.0

click 8.1.7

coloredlogs 15.0.1

colorlog 6.10.1

contourpy 1.3.3

cryptography 43.0.3

cycler 0.12.1

dill 0.4.0

diskcache 5.6.3

distro 1.9.0

docling 2.60.0

docling-core 2.50.0

docling-ibm-models 3.10.2

docling-parse 4.7.0

durationpy 0.10

einops 0.8.1

et_xmlfile 2.0.0

Faker 37.12.0

fastapi 0.120.4

ffmpy 0.6.4

filelock 3.9.0

filetype 1.2.0

flatbuffers 23.1.21

fonttools 4.60.1

fsspec 2023.10.0

gitdb 4.0.12

GitPython 3.1.45

gmpy2 2.1.2

google-auth 2.42.1

googleapis-common-protos 1.71.0

gradio 3.50.2

gradio_client 0.6.1

grpcio 1.76.0

h11 0.16.0

httpcore 1.0.9

httptools 0.6.4

httpx 0.28.1

huggingface-hub 0.36.0

humanfriendly 10.0

idna 3.4

importlib_metadata 8.7.0

importlib_resources 6.5.2

Jinja2 3.1.2

jiter 0.11.1

joblib 1.5.2

jsonlines 4.0.0

jsonpatch 1.33

jsonpointer 3.0.0

jsonref 1.1.0

jsonschema 4.25.1

jsonschema-specifications 2025.9.1

kiwisolver 1.4.9

kubernetes 34.1.0

langchain 1.0.3

langchain-core 1.0.2

langgraph 1.0.2

langgraph-checkpoint 3.0.0

langgraph-prebuilt 1.0.2

langgraph-sdk 0.2.9

langsmith 0.4.39

latex2mathml 3.78.1

llama_cpp_python 0.3.16

lxml 6.0.2

markdown-it-py 4.0.0

marko 2.2.1

MarkupSafe 2.1.1

matplotlib 3.10.7

mdurl 0.1.2

mmh3 5.2.0

mpire 2.10.2

mpmath 1.3.0

multiprocess 0.70.18

narwhals 2.10.1

networkx 3.1

numexpr 2.8.7

numpy 1.26.0

oauthlib 3.3.1

omegaconf 2.3.0

onnxruntime 1.16.3

openai 2.6.1

opencv-python 4.11.0.86

openpyxl 3.1.5

opentelemetry-api 1.38.0

opentelemetry-exporter-otlp-proto-common 1.38.0

opentelemetry-exporter-otlp-proto-grpc 1.38.0

opentelemetry-proto 1.38.0

opentelemetry-sdk 1.38.0

opentelemetry-semantic-conventions 0.59b0

orjson 3.11.4

ormsgpack 1.10.0

overrides 7.7.0

packaging 23.2

pandas 2.3.3

Pillow 10.0.1

pip 25.2

pluggy 1.6.0

polyfactory 2.22.3

posthog 5.4.0

protobuf 6.33.0

psutil 7.1.2

pyarrow 21.0.0

pyasn1 0.6.1

pyasn1_modules 0.4.2

pybase64 1.4.2

pyclipper 1.3.0.post6

pycparser 2.21

pydantic 2.12.3

pydantic_core 2.41.4

pydantic-settings 2.11.0

pydeck 0.9.1

pydub 0.25.1

Pygments 2.19.2

pylatexenc 2.10

pyOpenSSL 24.2.1

pyparsing 3.2.5

pypdfium2 4.30.0

PyPika 0.48.9

pyproject_hooks 1.2.0

PySocks 1.7.1

python-dateutil 2.8.2

python-docx 1.2.0

python-dotenv 1.2.1

python-multipart 0.0.20

python-pptx 1.0.2

pytz 2023.3.post1

PyYAML 6.0.1

rapidocr 3.4.2

referencing 0.37.0

regex 2025.10.23

requests 2.32.5

requests-oauthlib 2.0.0

requests-toolbelt 1.0.0

rich 14.2.0

rpds-py 0.28.0

rsa 4.9.1

rtree 1.4.0

safetensors 0.6.2

scikit-learn 1.7.2

scipy 1.11.3

semantic-version 2.10.0

semchunk 2.2.2

sentence-transformers 5.1.2

sentencepiece 0.1.99

setuptools 80.9.0

shapely 2.1.2

shellingham 1.5.4

six 1.16.0

smmap 5.0.2

sniffio 1.3.1

soupsieve 2.8

starlette 0.49.3

streamlit 1.51.0

sympy 1.14.0

tabulate 0.9.0

tenacity 9.1.2

threadpoolctl 3.6.0

tokenizers 0.22.1

toml 0.10.2

torch 2.9.0+cpu

torchdata 0.7.1+5e6f7b7

torchtext 0.16.2+1fc66c9

torchvision 0.16.2

tornado 6.2

tqdm 4.65.0

transformers 4.57.1

typer 0.19.2

typing_extensions 4.15.0

typing-inspection 0.4.2

tzdata 2025.2

urllib3 1.26.18

uvicorn 0.38.0

uvloop 0.22.1

watchdog 6.0.0

watchfiles 1.1.1

websocket-client 1.9.0

websockets 11.0.3

wheel 0.45.1

xlsxwriter 3.2.9

xxhash 3.6.0

zipp 3.23.0

zstandard 0.25.0

- Convert the NIM A to Z PDF file with Docling.

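Docling is an open-source framework for document processing and structuring. It is designed to convert complex documents such as PDF, Word, PowerPoint, and HTML into a structured object model, and then to perform chunking (splitting) and text extraction on that model.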

- Docling is an open-source project developed by the AI for Knowledge team at IBM Research Zurich. It is now hosted as part of Linux Foundation AI & Data (LF AI & Data Foundation).

Reference: GitHub docling-project
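This article does not reproduce the contents of converter_docling.py. Going only by the prompts and messages in its log below (an input folder, an output folder, one .md file per PDF), a loop of that shape might look like the following. The function names are hypothetical. The Docling calls (`DocumentConverter().convert()` and `document.export_to_markdown()`) come from Docling's Python API. The converter is passed in as a parameter so the loop can be tried without Docling installed.

```python
from functools import lru_cache
from pathlib import Path
from typing import Callable, List


@lru_cache(maxsize=1)
def _docling_converter():
    # Imported lazily and created once: building the Docling pipeline is expensive.
    from docling.document_converter import DocumentConverter
    return DocumentConverter()


def docling_to_markdown(pdf_path: Path) -> str:
    """Convert one PDF to Markdown text with Docling (requires `pip install docling`)."""
    result = _docling_converter().convert(str(pdf_path))
    return result.document.export_to_markdown()


def convert_folder(
    input_dir: Path,
    output_dir: Path,
    convert: Callable[[Path], str] = docling_to_markdown,
) -> List[Path]:
    """Write <name>.md to output_dir for every <name>.pdf found in input_dir."""
    output_dir.mkdir(parents=True, exist_ok=True)
    written: List[Path] = []
    for pdf in sorted(input_dir.glob("*.pdf")):
        print(f"Processing file: {pdf}")
        md_path = output_dir / f"{pdf.stem}.md"
        md_path.write_text(convert(pdf), encoding="utf-8")
        print(f"Saved Markdown: {md_path}")
        written.append(md_path)
    return written
```

To mirror the paths used in this run, you would call `convert_folder(Path("/work/PDF"), Path("/work/test1"))`.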

- Run python converter_docling.py

The directory containing the PDF is specified as /work/PDF.

The output directory for the resulting Markdown file is specified as /work/test1.

"python converter_docling.py" execution log

(rag_env) [root@rag-test RAG_public]# python converter_docling.py

/root/.local/share/mamba/envs/rag_env/lib/python3.11/site-packages/pandas/core/arrays/masked.py:61: UserWarning: Pandas requires version '1.3.6' or newer of 'bottleneck' (version '1.3.5' currently installed).

from pandas.core import (

/root/.local/share/mamba/envs/rag_env/lib/python3.11/site-packages/torchvision/io/image.py:13: UserWarning: Failed to load image Python extension: 'Could not load this library: /root/.local/share/mamba/envs/rag_env/lib/python3.11/site-packages/torchvision/image.so'If you don't plan on using image functionality from `torchvision.io`, you can ignore this warning. Otherwise, there might be something wrong with your environment. Did you have `libjpeg` or `libpng` installed before building `torchvision` from source?

warn(

Enter the path to the input folder (containing PDF files): /work/PDF

Enter the path to the output folder (for Markdown files): /work/test1

📂 Input folder: /work/PDF

📝 Output folder: /work/test1

Processing file: /work/PDF/NIM_A_to_Z.pdf

2025-11-03 00:14:20,146 - INFO - detected formats: [<InputFormat.PDF: 'pdf'>]

2025-11-03 00:14:20,190 - INFO - Going to convert document batch...

2025-11-03 00:14:20,190 - INFO - Initializing pipeline for StandardPdfPipeline with options hash 52da60eb696c671372a10d7673a8b723

2025-11-03 00:14:20,196 - INFO - Loading plugin 'docling_defaults'

2025-11-03 00:14:20,197 - INFO - Registered picture descriptions: ['vlm', 'api']

2025-11-03 00:14:20,203 - INFO - Loading plugin 'docling_defaults'

2025-11-03 00:14:20,205 - INFO - Registered ocr engines: ['auto', 'easyocr', 'ocrmac', 'rapidocr', 'tesserocr', 'tesseract']

2025-11-03 00:14:20,385 - INFO - Accelerator device: 'cpu'

[INFO] 2025-11-03 00:14:20,405 [RapidOCR] base.py:22: Using engine_name: onnxruntime

[INFO] 2025-11-03 00:14:20,417 [RapidOCR] download_file.py:60: File exists and is valid: /root/.local/share/mamba/envs/rag_env/lib/python3.11/site-packages/rapidocr/models/ch_PP-OCRv4_det_infer.onnx

[INFO] 2025-11-03 00:14:20,418 [RapidOCR] main.py:53: Using /root/.local/share/mamba/envs/rag_env/lib/python3.11/site-packages/rapidocr/models/ch_PP-OCRv4_det_infer.onnx

[INFO] 2025-11-03 00:14:20,589 [RapidOCR] base.py:22: Using engine_name: onnxruntime

[INFO] 2025-11-03 00:14:20,591 [RapidOCR] download_file.py:60: File exists and is valid: /root/.local/share/mamba/envs/rag_env/lib/python3.11/site-packages/rapidocr/models/ch_ppocr_mobile_v2.0_cls_infer.onnx

[INFO] 2025-11-03 00:14:20,591 [RapidOCR] main.py:53: Using /root/.local/share/mamba/envs/rag_env/lib/python3.11/site-packages/rapidocr/models/ch_ppocr_mobile_v2.0_cls_infer.onnx

[INFO] 2025-11-03 00:14:20,670 [RapidOCR] base.py:22: Using engine_name: onnxruntime

[INFO] 2025-11-03 00:14:20,696 [RapidOCR] download_file.py:60: File exists and is valid: /root/.local/share/mamba/envs/rag_env/lib/python3.11/site-packages/rapidocr/models/ch_PP-OCRv4_rec_infer.onnx

[INFO] 2025-11-03 00:14:20,696 [RapidOCR] main.py:53: Using /root/.local/share/mamba/envs/rag_env/lib/python3.11/site-packages/rapidocr/models/ch_PP-OCRv4_rec_infer.onnx

2025-11-03 00:14:20,910 - INFO - Auto OCR model selected rapidocr with onnxruntime.

2025-11-03 00:14:20,914 - INFO - Accelerator device: 'cpu'

Xet Storage is enabled for this repo, but the 'hf_xet' package is not installed. Falling back to regular HTTP download. For better performance, install the package with: `pip install huggingface_hub[hf_xet]` or `pip install hf_xet`

2025-11-03 00:14:21,514 - WARNING - Xet Storage is enabled for this repo, but the 'hf_xet' package is not installed. Falling back to regular HTTP download. For better performance, install the package with: `pip install huggingface_hub[hf_xet]` or `pip install hf_xet`

Xet Storage is enabled for this repo, but the 'hf_xet' package is not installed. Falling back to regular HTTP download. For better performance, install the package with: `pip install huggingface_hub[hf_xet]` or `pip install hf_xet`

2025-11-03 00:14:30,214 - WARNING - Xet Storage is enabled for this repo, but the 'hf_xet' package is not installed. Falling back to regular HTTP download. For better performance, install the package with: `pip install huggingface_hub[hf_xet]` or `pip install hf_xet`

Xet Storage is enabled for this repo, but the 'hf_xet' package is not installed. Falling back to regular HTTP download. For better performance, install the package with: `pip install huggingface_hub[hf_xet]` or `pip install hf_xet`

2025-11-03 00:14:30,222 - WARNING - Xet Storage is enabled for this repo, but the 'hf_xet' package is not installed. Falling back to regular HTTP download. For better performance, install the package with: `pip install huggingface_hub[hf_xet]` or `pip install hf_xet`

2025-11-03 00:14:38,774 - INFO - Accelerator device: 'cpu'

2025-11-03 00:14:39,201 - INFO - Processing document NIM_A_to_Z.pdf

[INFO] 2025-11-03 00:14:42,132 [RapidOCR] download_file.py:68: Initiating download: https://www.modelscope.cn/models/RapidAI/RapidOCR/resolve/v3.4.0/resources/fonts/FZYTK.TTF

[ERROR] 2025-11-03 00:15:42,321 [RapidOCR] download_file.py:74: Download failed: https://www.modelscope.cn/models/RapidAI/RapidOCR/resolve/v3.4.0/resources/fonts/FZYTK.TTF

2025-11-03 00:15:42,321 - ERROR - Stage ocr failed for run 1: Failed to download https://www.modelscope.cn/models/RapidAI/RapidOCR/resolve/v3.4.0/resources/fonts/FZYTK.TTF

len(pages)=4, 1-4

len(valid_pages)=4

len(valid_page_images)=4

[INFO] 2025-11-03 00:15:47,635 [RapidOCR] download_file.py:68: Initiating download: https://www.modelscope.cn/models/RapidAI/RapidOCR/resolve/v3.4.0/resources/fonts/FZYTK.TTF

len(pages)=4, 5-8

len(valid_pages)=4

len(valid_page_images)=4

~ omitted ~ The document runs to more than 670 pages and is processed in batches of 4 pages.

len(pages)=4, 633-636

len(valid_pages)=4

len(valid_page_images)=4

len(pages)=4, 637-640

len(valid_pages)=4

len(valid_page_images)=4

len(pages)=4, 641-644

len(valid_pages)=4

len(valid_page_images)=4

len(pages)=4, 649-652

len(valid_pages)=4

len(valid_page_images)=4

len(pages)=4, 661-664

len(valid_pages)=4

len(valid_page_images)=4

len(pages)=4, 665-668

len(valid_pages)=4

len(valid_page_images)=4

len(pages)=4, 669-672

len(valid_pages)=4

len(valid_page_images)=4

2025-11-03 01:12:44,604 - INFO - Finished converting document NIM_A_to_Z.pdf in 3504.46 sec.

✅ Saved Markdown: /work/test1/NIM_A_to_Z.md

🎉 All files processed successfully!

(rag_env) [root@rag-test RAG_public]#

Docling finished successfully, but it took about an hour (3504.46 sec, roughly 58 minutes, according to the log).
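To put the hour in perspective, the log's own numbers give a rough per-page cost. This assumes the document has 672 pages (the last batch in the log is 669-672) and that Docling processes 4 pages per batch, as the `len(pages)=4` lines show.

```python
import math


def docling_throughput(total_seconds: float, pages: int, pages_per_batch: int) -> dict:
    """Rough per-page and per-batch cost derived from a Docling conversion log."""
    batches = math.ceil(pages / pages_per_batch)
    return {
        "minutes": total_seconds / 60,
        "batches": batches,
        "sec_per_page": total_seconds / pages,
        "sec_per_batch": total_seconds / batches,
    }


# Figures from the log above; 672 pages is an assumption (last batch: 669-672).
stats = docling_throughput(total_seconds=3504.46, pages=672, pages_per_batch=4)
for key, value in stats.items():
    print(f"{key:>14}: {value:.2f}" if isinstance(value, float) else f"{key:>14}: {value}")
```

On this 1-CPU LPAR that works out to about 58 minutes for 168 batches, or roughly 5.2 s per page and 21 s per 4-page batch. The total includes at least the one-minute wait for the RapidOCR font download that failed.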

The .md file was created in the specified directory.

(rag_env) [root@rag-test test1]# ls -l /work/test1

total 1172

-rw-r--r--. 1 root root 1196926 Nov 3 01:12 NIM_A_to_Z.md

A quick look at the contents:

(rag_env) [root@rag-test test1]# head -n 15 NIM_A_to_Z.md

<!-- image -->

International Technical Support Organization

NIM from A to Z in AIX 5L

May 2006

Note: Before using this information and the product it supports, read the information in 'Notices' on page ix.

## First Edition (May 2006)

This edition applies to AIX 5L V5.3 Technology Level 5, Cluster Systems Management (CSM) V1.5.1, and IBM Director V5.1.

' Copyright International Business Machines Corporation 2007.

The beginning of the PDF file is shown.

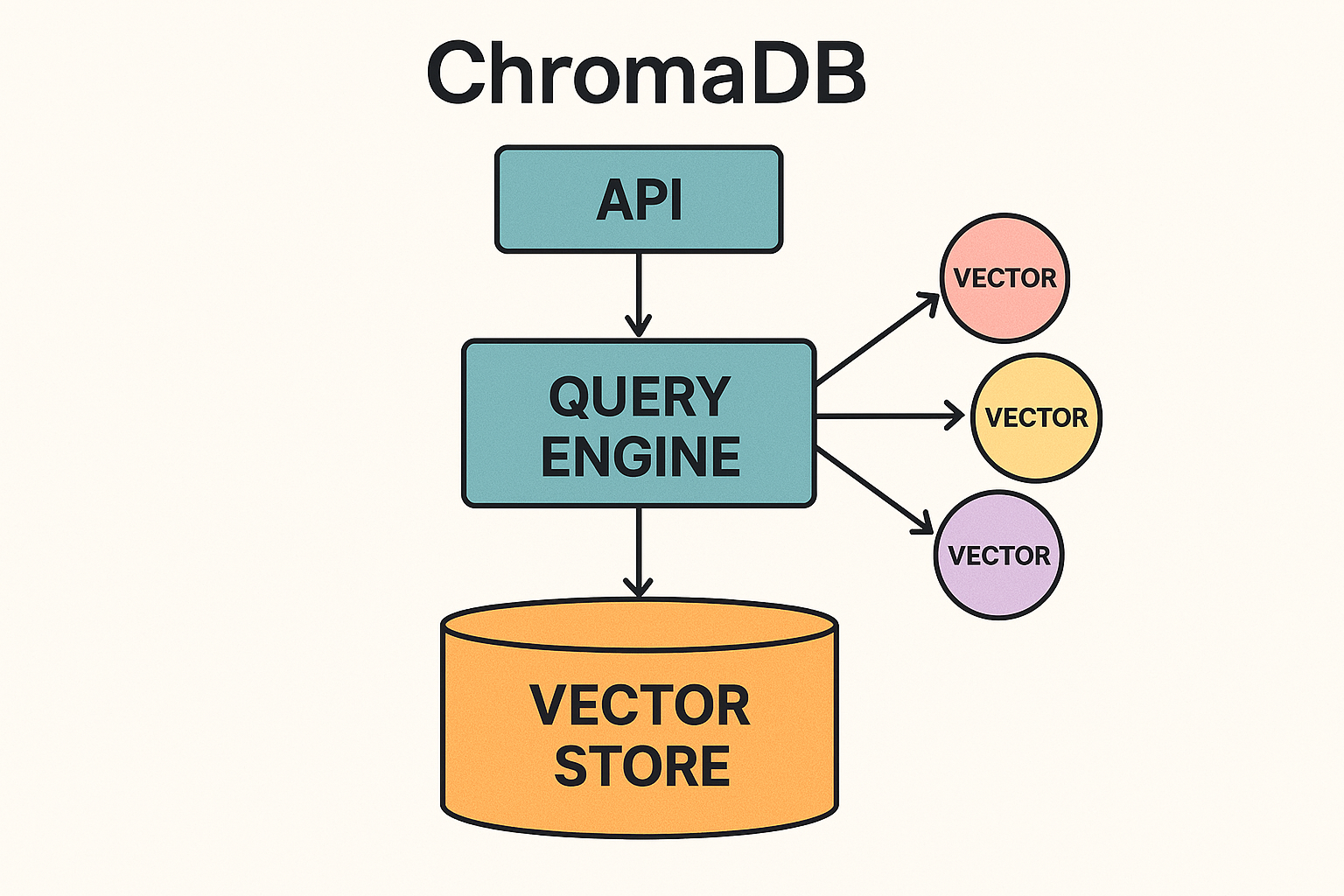

Creating the vector database

Create a vector store using ChromaDB.

(Conceptual diagram generated by AI)
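The scripts do not show ChromaDB's internals, but the idea behind a vector store fits in a few lines. Each chunk is stored with an embedding vector, and a query returns the chunks whose vectors are most similar to the query vector (here by cosine similarity). This is a toy in-memory stand-in, not ChromaDB's API. The small hand-written vectors in the usage are placeholders for real embeddings, such as those from the all-mpnet-base-v2 model that appears in the chromaDB_md.py log.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class ToyVectorStore:
    """In-memory illustration of a vector DB collection (not ChromaDB itself)."""

    def __init__(self) -> None:
        self._items: Dict[str, Tuple[List[float], str]] = {}

    def add(self, ids: List[str], embeddings: List[List[float]], documents: List[str]) -> None:
        if not (len(ids) == len(embeddings) == len(documents)):
            raise ValueError("ids, embeddings and documents must have the same length")
        for i, emb, doc in zip(ids, embeddings, documents):
            self._items[i] = (list(emb), doc)

    def query(self, embedding: Sequence[float], n_results: int = 5) -> List[Tuple[str, float, str]]:
        """Return (id, similarity, document) for the n most similar chunks."""
        scored = [(i, cosine(embedding, emb), doc) for i, (emb, doc) in self._items.items()]
        scored.sort(key=lambda t: t[1], reverse=True)
        return scored[:n_results]
```

The "Example query results" in the log below have the same shape: the ids and documents of the top 5 chunks for a query.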

- Delete the existing db, as described in the procedure

(rag_env) [root@rag-test test1]# cd /work/RAG_public/

(rag_env) [root@rag-test RAG_public]# rm -rf db

(rag_env) [root@rag-test RAG_public]#

- Run python chromaDB_md.py

"python chromaDB_md.py" execution log

(rag_env) [root@rag-test RAG_public]# python chromaDB_md.py

/root/.local/share/mamba/envs/rag_env/lib/python3.11/site-packages/pandas/core/arrays/masked.py:61: UserWarning: Pandas requires version '1.3.6' or newer of 'bottleneck' (version '1.3.5' currently installed).

from pandas.core import (

/root/.local/share/mamba/envs/rag_env/lib/python3.11/site-packages/torchvision/io/image.py:13: UserWarning: Failed to load image Python extension: 'Could not load this library: /root/.local/share/mamba/envs/rag_env/lib/python3.11/site-packages/torchvision/image.so'If you don't plan on using image functionality from `torchvision.io`, you can ignore this warning. Otherwise, there might be something wrong with your environment. Did you have `libjpeg` or `libpng` installed before building `torchvision` from source?

warn(

2025-11-03 01:21:13,873 - INFO - Load pretrained SentenceTransformer: all-mpnet-base-v2

modules.json: 100%|████████████████████████████████████████████████████████████████████████████████████████| 349/349 [00:00<00:00, 4.01MB/s]

config_sentence_transformers.json: 100%|███████████████████████████████████████████████████████████████████| 116/116 [00:00<00:00, 1.33MB/s]

README.md: 11.6kB [00:00, 10.8MB/s]

sentence_bert_config.json: 100%|██████████████████████████████████████████████████████████████████████████| 53.0/53.0 [00:00<00:00, 628kB/s]

config.json: 100%|█████████████████████████████████████████████████████████████████████████████████████████| 571/571 [00:00<00:00, 7.30MB/s]

Xet Storage is enabled for this repo, but the 'hf_xet' package is not installed. Falling back to regular HTTP download. For better performance, install the package with: `pip install huggingface_hub[hf_xet]` or `pip install hf_xet`

2025-11-03 01:21:15,382 - WARNING - Xet Storage is enabled for this repo, but the 'hf_xet' package is not installed. Falling back to regular HTTP download. For better performance, install the package with: `pip install huggingface_hub[hf_xet]` or `pip install hf_xet`

model.safetensors: 100%|██████████████████████████████████████████████████████████████████████████████████| 438M/438M [00:00<00:00, 592MB/s]

tokenizer_config.json: 100%|███████████████████████████████████████████████████████████████████████████████| 363/363 [00:00<00:00, 4.39MB/s]

vocab.txt: 232kB [00:00, 4.32MB/s]

tokenizer.json: 466kB [00:00, 12.2MB/s]

special_tokens_map.json: 100%|█████████████████████████████████████████████████████████████████████████████| 239/239 [00:00<00:00, 3.73MB/s]

config.json: 100%|█████████████████████████████████████████████████████████████████████████████████████████| 190/190 [00:00<00:00, 2.33MB/s]

Enter the path to the folder you want to load into ChromaDB: /work/test1

Enter the name of the collection to insert all files into: NIM_A_to_Z.md

2025-11-03 01:22:09,962 - INFO - Anonymized telemetry enabled. See https://docs.trychroma.com/telemetry for more information.

Collection 'NIM_A_to_Z.md' created successfully.

Created new collection 'NIM_A_to_Z.md'.

Processing file: NIM_A_to_Z.md

2025-11-03 01:22:10,155 - INFO - detected formats: [<InputFormat.MD: 'md'>]

2025-11-03 01:22:10,174 - INFO - Going to convert document batch...

2025-11-03 01:22:10,174 - INFO - Initializing pipeline for SimplePipeline with options hash ce414bdb4c1c02da537c853c05fc8eb0

2025-11-03 01:22:10,180 - INFO - Loading plugin 'docling_defaults'

2025-11-03 01:22:10,181 - INFO - Registered picture descriptions: ['vlm', 'api']

2025-11-03 01:22:10,181 - INFO - Processing document NIM_A_to_Z.md

2025-11-03 01:22:25,997 - INFO - Backing off send_request(...) for 0.5s (requests.exceptions.ReadTimeout: HTTPSConnectionPool(host='us.i.posthog.com', port=443): Read timed out. (read timeout=15))

2025-11-03 01:22:42,050 - INFO - Backing off send_request(...) for 0.5s (requests.exceptions.ReadTimeout: HTTPSConnectionPool(host='us.i.posthog.com', port=443): Read timed out. (read timeout=15))

2025-11-03 01:22:57,781 - INFO - Backing off send_request(...) for 1.3s (requests.exceptions.ReadTimeout: HTTPSConnectionPool(host='us.i.posthog.com', port=443): Read timed out. (read timeout=15))

2025-11-03 01:23:14,259 - ERROR - Giving up send_request(...) after 4 tries (requests.exceptions.ReadTimeout: HTTPSConnectionPool(host='us.i.posthog.com', port=443): Read timed out. (read timeout=15))

2025-11-03 01:29:03,006 - INFO - Finished converting document NIM_A_to_Z.md in 412.85 sec.

Token indices sequence length is longer than the specified maximum sequence length for this model (1497 > 512). Running this sequence through the model will result in indexing errors

chunk.text (49 tokens):

"This edition applies to AIX 5L V5.3 Technology Level 5, Cluster Systems Management (CSM) V1.5.1, and IBM Director V5.1.\n' Copyright International Business Machines Corporation 2007.\nAll rights reserved."

chunk.text (511 tokens):

~ omitted ~

chunk.text (475 tokens):

'425-426, 428, 433-434 = push installation 473-474. NIM terminology 7, NIMSH 425-426, 428, 433-434 = push mode 10 push operation 434. NIM update 411, NIMSH 425-426, 428, 433-434 = disabling 435. , NIMSH 425-426, 428, 433-434 = PXE 294. nim_bosinst 84, NIMSH 425-426, 428, 433-434 = 284,. NIM_MKSYSB_SUBDIRS 55, 57, NIMSH 425-426, 428, 433-434 = . nim_move_up 123, 151, 205, 216-226, 228,, NIMSH 425-426, 428, 433-434 = R. 230-233, 235, 245, 247, 249, 253-257, 259-261, NIMSH 425-426, 428, 433-434 = ramdisk 17,. nim_script 55, 60, 62, 117-118, 122, NIMSH 425-426, 428, 433-434 = 284 RECOVER_DEVICES 468. nimadm 261, 263-264, 274 263, NIMSH 425-426, 428, 433-434 = recovering devices 463. Local Disk Caching, NIMSH 425-426, 428, 433-434 = registration ports 434. nimclient 63 nimconfig 60-61, 63, 72, NIMSH 425-426, 428, 433-434 = remote command execution 424 Remote IPL 180. nimesis 60, 63, 79, 116, NIMSH 425-426, 428, 433-434 = Remote Procedure Call 440. niminit 60, 63-64, NIMSH 425-426, 428, 433-434 = Remote shell 438. nimlog 70, NIMSH 425-426, 428, 433-434 = '

Batches: 100%|████████████████████████████████████████████████████████████████████████████████████████████████| 1/1 [00:15<00:00, 15.85s/it]

✅ Inserted 'NIM_A_to_Z.md' into 'NIM_A_to_Z.md'

Setup completed.

Batches: 100%|████████████████████████████████████████████████████████████████████████████████████████████████| 1/1 [00:04<00:00, 4.01s/it]

Example query results:

{'ids': [['NIM-A-to-Z_chunk26', 'NIM-A-to-Z_chunk25', 'NIM-A-to-Z_chunk28', 'NIM-A-to-Z_chunk24', 'NIM-A-to-Z_chunk256']], 'embeddings': None, 'documents': [['Notices\nInformation concerning non-IBM products was obtained from the suppliers of those products, their published announcements or other publicly available sources. IBM has not tested those products and cannot confirm the accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the capabilities of non-IBM products should be addressed to the suppliers of those products.\nThis information contains examples of data and reports used in daily business operations. To illustrate them as completely as possible, the examples include the names of individuals, companies, brands, and products. All of these names are fictitious and any similarity to the names and addresses used by an actual business enterprise is entirely coincidental.', 'Notices\nThis information was developed for products and services offered in the U.S.A.\nIBM may not offer the products, services, or features discussed in this document in other countries. Consult your local IBM representative for information on the products and services currently available in your area. Any reference to an IBM product, program, or service is not intended to state or imply that only that IBM product, program, or service may be used. Any functionally equivalent product, program, or service that does not infringe any IBM intellectual property right may be used instead. However, it is the user\'s responsibility to evaluate and verify the operation of any non-IBM product, program, or service.\nIBM may have patents or pending patent applications covering subject matter described in this document. The furnishing of this document does not give you any license to these patents. 
You can send license inquiries, in writing, to:\nIBM Director of Licensing, IBM Corporation, North Castle Drive, Armonk, NY 10504-1785 U.S.A.\nThe following paragraph does not apply to the United Kingdom or any other country where such provisions are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION PROVIDES THIS PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT, MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of express or implied warranties in certain transactions, therefore, this statement may not apply to you.\nThis information could include technical inaccuracies or typographical errors. Changes are periodically made to the information herein; these changes will be incorporated in new editions of the publication. IBM may make improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time without notice.\nAny references in this information to non-IBM Web sites are provided for convenience only and do not in any manner serve as an endorsement of those Web sites. The materials at those Web sites are not part of the materials for this IBM product and use of those Web sites is at your own risk.\nIBM may use or distribute any of the information you supply in any way it believes appropriate without incurring any obligation to you.', 'Trademarks\nThe following terms are trademarks of the International Business Machines Corporation in the United States, other countries, or both:\neServer™, HACMP™ = IBMfi. eServer™, Requisitefi = RS/6000fi. pSeriesfi, HACMP™ = Micro-Partitioning™. pSeriesfi, Requisitefi = System i™. xSeriesfi, HACMP™ = POWER™. xSeriesfi, Requisitefi = System p™. AIX 5L™, HACMP™ = POWER Hypervisor™. AIX 5L™, Requisitefi = System p5™. AIXfi, HACMP™ = POWER3™. AIXfi, Requisitefi = Tivolifi. BladeCenterfi, HACMP™ = POWER4™. 
BladeCenterfi, Requisitefi = Virtualization Engine™. Blue Genefi, HACMP™ = POWER5™. Blue Genefi, Requisitefi = WebSpherefi. DB2fi, HACMP™ = Redbooksfi. DB2fi, Requisitefi = \nThe following terms are trademarks of other companies:\nOracle, JD Edwards, PeopleSoft, Siebel, and TopLink are registered trademarks of Oracle Corporation and/or its affiliates.\nAndreas, and Portable Document Format (PDF) are either registered trademarks or trademarks of Adobe Systems Incorporated in the United States, other countries, or both.\ni386, Intel, Intel logo, Intel Inside logo, and Intel Centrino logo are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States, other countries, or both.\nUNIX is a registered trademark of The Open Group in the United States and other countries.\nLinux is a trademark of Linus Torvalds in the United States, other countries, or both.\nOther company, product, or service names may be trademarks or service marks of others.', 'Contents\n. . . . . . . . . . . . . . . . . . . . . . ., . 618 = . 645. Related publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ., . 618 = . 649. IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ., . 618 = . 649. Other publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ., . 618 = . 649. Online resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ., . 618 = . 649. How to get IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ., . 618 = . 650. Help from IBM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ., . 618 = . 650. Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ., . 618 = . 651', 'shutdown -Fr\nExample 4-72 Boot Options\n```\npSeries Firmware Version RG050215_d79e02_regatta\n```\nSMS 1.3 (c) Copyright IBM Corp. 
2000,2003 All rights reserved.']], 'uris': None, 'included': ['documents'], 'data': None, 'metadatas': None, 'distances': None}

2025-11-03 02:16:36,919 - INFO - Backing off send_request(...) for 0.9s (requests.exceptions.ReadTimeout: HTTPSConnectionPool(host='us.i.posthog.com', port=443): Read timed out. (read timeout=15))

2025-11-03 02:16:53,022 - INFO - Backing off send_request(...) for 0.1s (requests.exceptions.ReadTimeout: HTTPSConnectionPool(host='us.i.posthog.com', port=443): Read timed out. (read timeout=15))

2025-11-03 02:17:08,196 - INFO - Backing off send_request(...) for 3.1s (requests.exceptions.ReadTimeout: HTTPSConnectionPool(host='us.i.posthog.com', port=443): Read timed out. (read timeout=15))

2025-11-03 02:17:26,442 - ERROR - Giving up send_request(...) after 4 tries (requests.exceptions.ReadTimeout: HTTPSConnectionPool(host='us.i.posthog.com', port=443): Read timed out. (read timeout=15))

(rag_env) [root@rag-test RAG_public]# echo $?

0

# date

Mon Nov 3 02:23:47 EST 2025

This step also took about an hour (from about 01:21 to 02:17 in the log).
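The log above shows chunks of 49, 511 and 475 tokens, and a warning that one sequence (1497 tokens) exceeds the model's 512-token limit. The chunking in chromaDB_md.py appears to be done by Docling (the log shows Docling converting the .md file just before the chunks are printed), and that code is not reproduced here. As a simplified illustration of the general idea only, the sketch below packs paragraphs greedily into chunks that stay under a token budget and splits any paragraph that is too large on its own. Whitespace-separated words stand in for model tokens. That is an assumption: a real tokenizer usually produces more tokens than words.

```python
from typing import List


def count_tokens(text: str) -> int:
    # Assumption: whitespace words approximate tokens; a real tokenizer differs.
    return len(text.split())


def chunk_text(text: str, max_tokens: int = 512) -> List[str]:
    """Greedily pack blank-line-separated paragraphs into chunks of <= max_tokens."""
    chunks: List[str] = []
    current: List[str] = []
    current_len = 0

    def flush() -> None:
        nonlocal current, current_len
        if current:
            chunks.append("\n".join(current))
            current, current_len = [], 0

    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        words = para.split()
        if len(words) > max_tokens:
            # An oversized paragraph becomes consecutive fixed-size word windows.
            flush()
            for start in range(0, len(words), max_tokens):
                chunks.append(" ".join(words[start:start + max_tokens]))
            continue
        if current_len + len(words) > max_tokens:
            flush()
        current.append(para)
        current_len += len(words)
    flush()
    return chunks
```

Keeping every chunk under the embedding model's limit avoids the truncation the warning refers to. The trade-off is that long paragraphs get split at arbitrary word boundaries.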

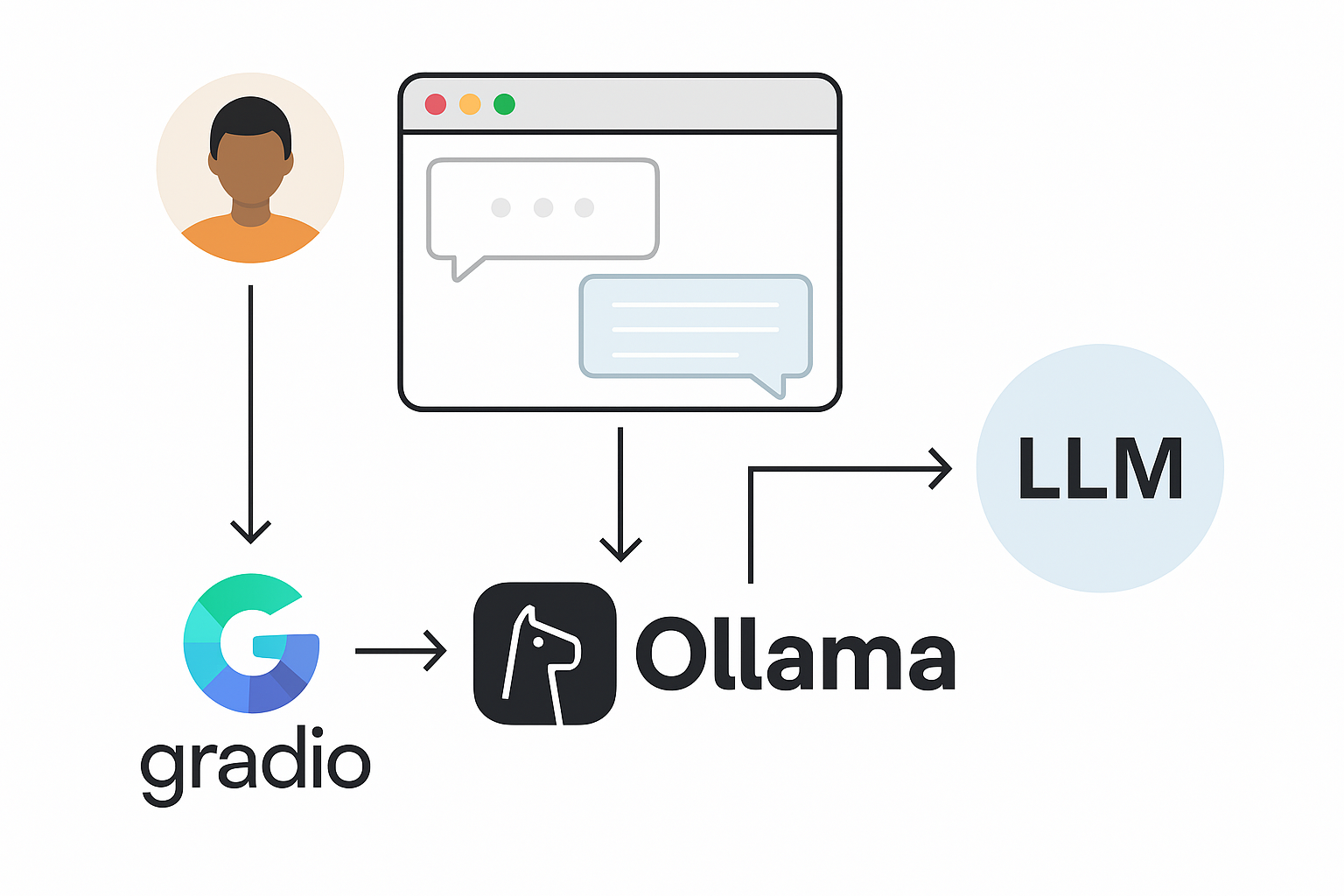

Ollama setup

Run the LLM with Ollama and interact with it through the Gradio (Streamlit) web UI.

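Ollama is an open-source tool for easily running and managing large language models (LLMs) in a local environment. Because the models run on a local server, it offers advantages such as privacy protection, lower cost, and offline use.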
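The container started below publishes Ollama's API on port 11434. The standard-library code below is a minimal sketch of talking to it directly, outside the Streamlit UI. It posts a prompt to Ollama's /api/generate endpoint with streaming disabled and reads the "response" field of the reply. The base URL and model name are assumptions matching this setup, and the RAG scripts themselves may call Ollama differently.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: the port published by podman below


def build_generate_request(model: str, prompt: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 600.0) -> str:
    """Send the prompt and return the generated text (needs a running Ollama server)."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

Once the model has been pulled, you could call `generate("granite4:tiny-h", "What is NIM in AIX?")`. The timeout is generous because inference runs on a single CPU here.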

- Start Ollama with podman.

(rag_env) [root@rag-test RAG_public]# podman run -d --name ollama --replace -p 11434:11434 -v ollama:/root/.ollama quay.io/anchinna/ollama:v3

Trying to pull quay.io/anchinna/ollama:v3...

Getting image source signatures

Copying blob 3540d715142f done |

Copying blob d58fd1098531 done |

Copying blob 1d311c028341 done |

Copying config 7e6f2c6cc2 done |

Writing manifest to image destination

8074f33bbbd5cc301699acb13fc198be180c0862393744377c4253885a8003ed

(rag_env) [root@rag-test RAG_public]#

- Pull the granite4:tiny-h model

granite4 is reportedly the world's first OSS model to obtain ISO 42001 certification.

(rag_env) [root@rag-test RAG_public]# podman exec -it ollama /opt/ollama/ollama pull granite4:tiny-h

pulling manifest

pulling 491ba81786c4: 100% ▕██████████████████████████████████████████████████████████████████████████████▏ 4.2 GB

pulling 0f6ec9740c76: 100% ▕██████████████████████████████████████████████████████████████████████████████▏ 7.1 KB

pulling cfc7749b96f6: 100% ▕██████████████████████████████████████████████████████████████████████████████▏ 11 KB

pulling 3b1ca2fbeaf6: 100% ▕██████████████████████████████████████████████████████████████████████████████▏ 429 B

verifying sha256 digest

writing manifest

success

Testing with the granite4:tiny-h model

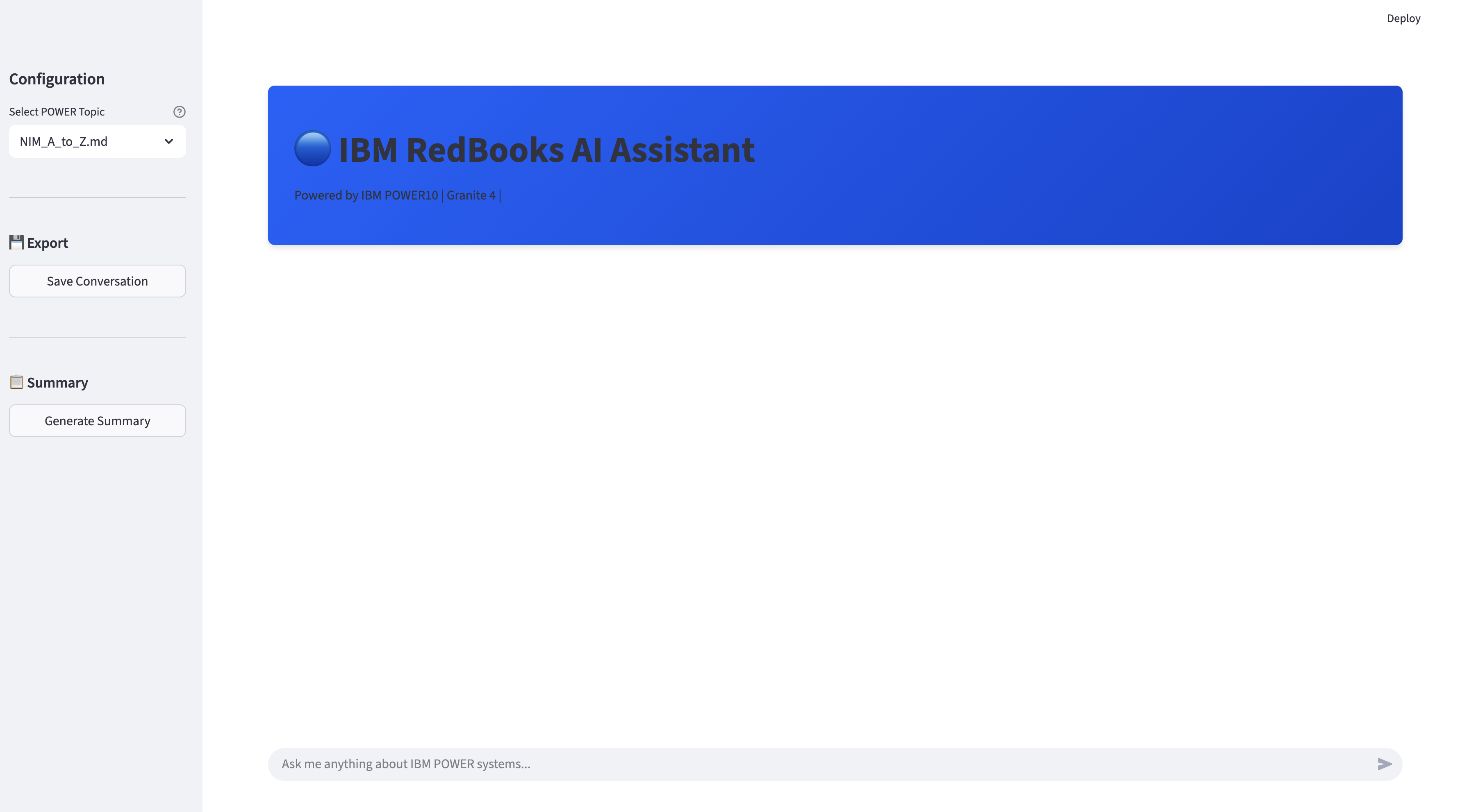

The setup is now mostly complete. From here, start the GUI and check that everything works.

- Start the UI with streamlit run streamlit_adv.py --server.port 7680

(rag_env) [root@rag-test RAG_public]# streamlit run streamlit_adv.py --server.port 7680

Collecting usage statistics. To deactivate, set browser.gatherUsageStats to false.

You can now view your Streamlit app in your browser.

Local URL: http://localhost:7680

Network URL: http://192.168.xxx.xxx:7680

External URL: http://xx.xxx.xxx.xxx:7680

I set up port forwarding from my local PC to the IP address in the Network URL and opened localhost in a browser.

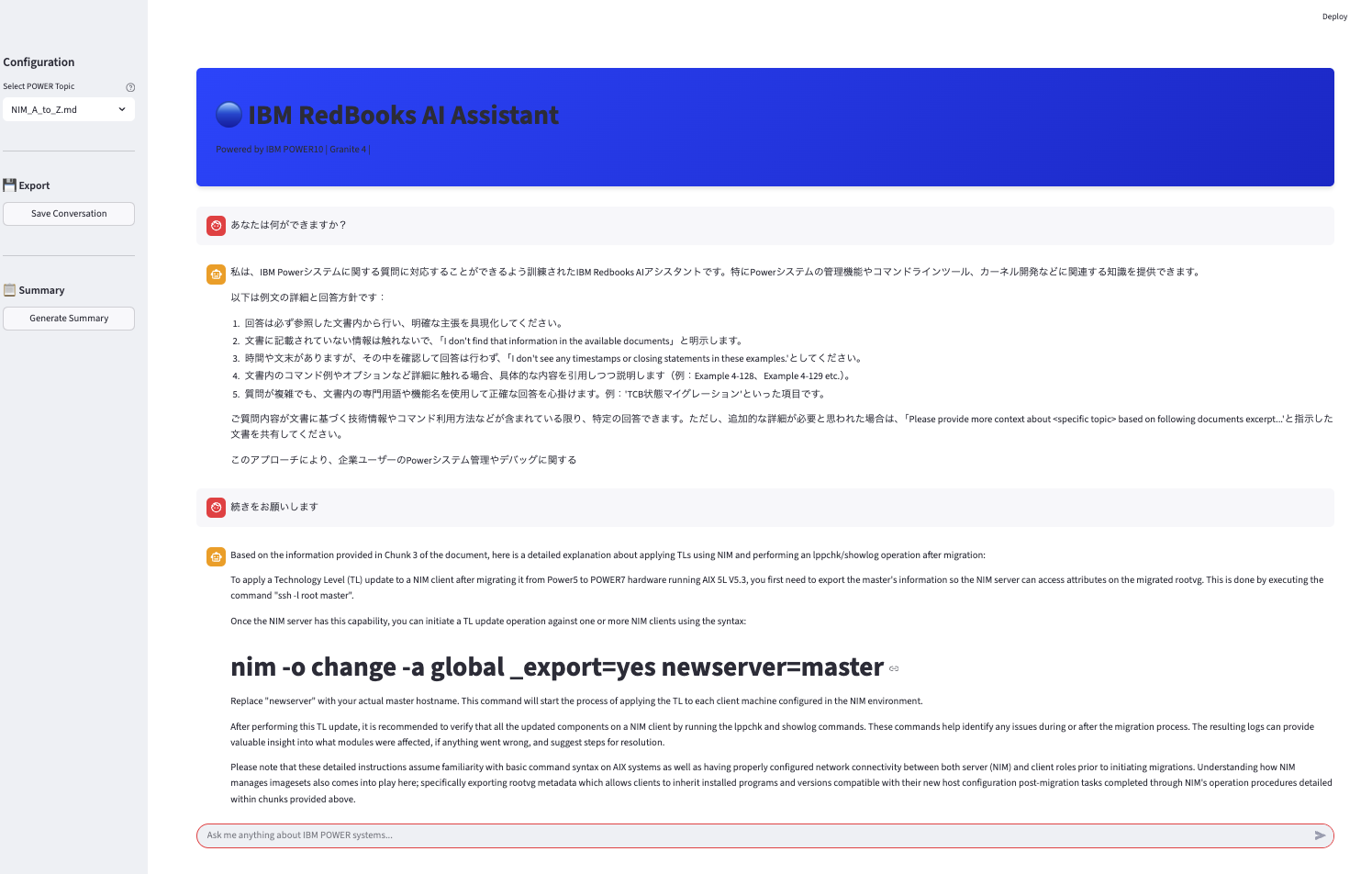

This is the UI:

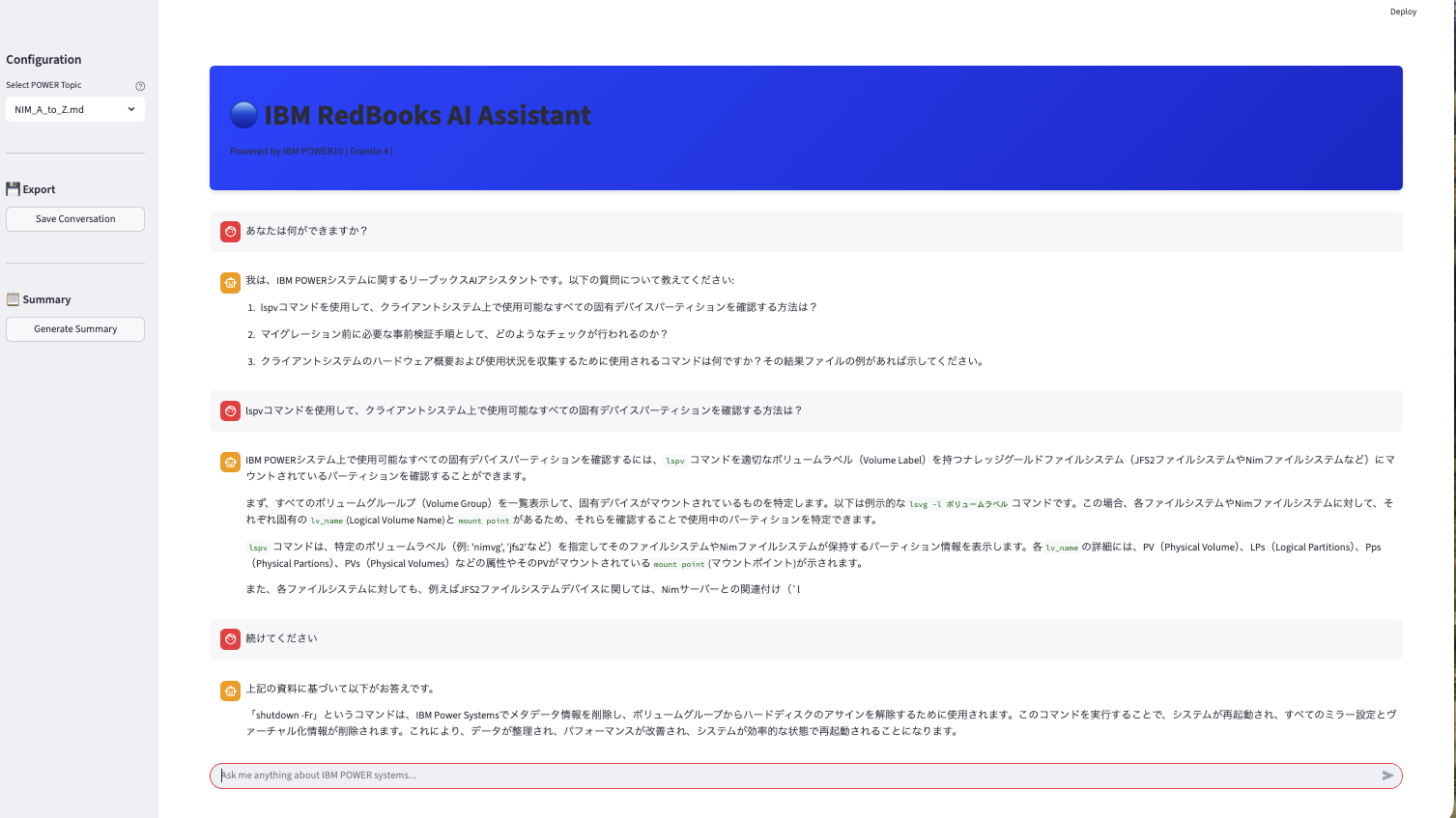

I asked it what it can do.

The Japanese phrasing felt a little unnatural. The model may be stronger in English.

The answer seems to be cut off by an output token limit setting (this can probably be changed).
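This article does not show the scripts' generation parameters. One script is named run_model_openai_backend.py, and the grep output later shows `model=...` arguments in an OpenAI-style call. If the scripts talk to Ollama through its OpenAI-compatible endpoint, the answer length is capped by a `max_tokens` field in the request. The sketch below builds such a request body and checks the `finish_reason` field of the OpenAI response format, which is "length" when generation stopped at the limit. The value 2048 is only an example. Larger values allow longer answers but take longer on a 1-CPU LPAR.

```python
import json
from typing import Any, Dict, List


def chat_completion_body(model: str, messages: List[Dict[str, str]], max_tokens: int = 2048) -> bytes:
    """JSON body for POST http://localhost:11434/v1/chat/completions.

    max_tokens caps the number of generated tokens; if it is too small the
    answer stops mid-sentence. 2048 is an example value, not a recommendation.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be a positive integer")
    payload = {"model": model, "messages": messages, "max_tokens": max_tokens}
    return json.dumps(payload).encode("utf-8")


def was_truncated(response: Dict[str, Any]) -> bool:
    """True if the first choice stopped because it hit the token limit (OpenAI format)."""
    return response["choices"][0].get("finish_reason") == "length"
```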

Changing the model running on Ollama

The original procedure is configured for Granite, but here I modify the Python code and try a different model (gpt-oss:latest).

First, stop granite4:tiny-h, which is running in Ollama.

(rag_env) [root@rag-test RAG_public]# podman exec -it ollama /opt/ollama/ollama list

NAME ID SIZE MODIFIED

granite4:tiny-h 566b725534ea 4.2 GB 39 minutes ago

(rag_env) [root@rag-test RAG_public]# podman exec -it ollama /opt/ollama/ollama stop granite4:tiny-h

- Pull gpt-oss:latest into Ollama

(rag_env) [root@rag-test RAG_public]# podman exec -it ollama /opt/ollama/ollama pull gpt-oss:latest

pulling manifest

pulling e7b273f96360: 100% ▕██████████████████████████████████████▏ 13 GB

pulling fa6710a93d78: 100% ▕██████████████████████████████████████▏ 7.2 KB

pulling f60356777647: 100% ▕██████████████████████████████████████▏ 11 KB

pulling d8ba2f9a17b3: 100% ▕██████████████████████████████████████▏ 18 B

pulling 776beb3adb23: 100% ▕██████████████████████████████████████▏ 489 B

verifying sha256 digest

writing manifest

success

(rag_env) [root@rag-test RAG_public]# podman exec -it ollama /opt/ollama/ollama list

NAME ID SIZE MODIFIED

gpt-oss:latest 17052f91a42e 13 GB 3 minutes ago

granite4:tiny-h 566b725534ea 4.2 GB 46 minutes ago

The Python scripts were written to run with Granite, so modify the places in the scripts where Granite is hard-coded.

(rag_env) [root@rag-test RAG_public]# grep granite *

README.md:podman exec -it ollama /opt/ollama/ollama pull granite3.3:2b

README.md:podman exec -it ollama /opt/ollama/ollama pull granite4:tiny-h

grep: ansible: Is a directory

grep: db: Is a directory

grep: files_for_database: Is a directory

run_model_openai_backend.py: model="granite3.3:2b",

streamlit.py: reranker = CrossEncoder("ibm-granite/granite-embedding-reranker-english-r2")

streamlit.py: model="granite4:tiny-h",

streamlit.py: model="granite4:tiny-h",

streamlit_adv.py: reranker = CrossEncoder("ibm-granite/granite-embedding-reranker-english-r2")

streamlit_adv.py: model="granite4:tiny-h",

streamlit_adv.py: model="granite4:tiny-h",

Change model="granite4:tiny-h" in streamlit.py.

- Back up the file before changing it

(rag_env) [root@rag-test RAG_public]# cp -p streamlit.py streamlit.py_granite

- Replace all occurrences with sed

(rag_env) [root@rag-test RAG_public]# sed -i 's/granite4:tiny-h/gpt-oss:latest/g' streamlit.py

- Verify

The value has been replaced with model="gpt-oss:latest".

(rag_env) [root@rag-test RAG_public]# grep gpt-oss *

grep: ansible: Is a directory

grep: db: Is a directory

grep: files_for_database: Is a directory

streamlit.py: model="gpt-oss:latest",

streamlit.py: model="gpt-oss:latest",

grep: systemd: Is a directory
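Rewriting the model name with sed works, but it has to be repeated for every script and every model change. One alternative, offered as a suggestion rather than a description of how the scripts are written, is to read the model name from an environment variable and fall back to Granite. The variable name RAG_LLM_MODEL is hypothetical.

```python
import os

DEFAULT_MODEL = "granite4:tiny-h"  # the model the scripts use out of the box


def get_model_name(env_var: str = "RAG_LLM_MODEL") -> str:
    """Return the LLM name from a (hypothetical) environment variable, else the default."""
    value = os.environ.get(env_var, "").strip()
    return value or DEFAULT_MODEL
```

The script would then pass `model=get_model_name()` instead of the literal string, and the model could be switched at launch, e.g. `RAG_LLM_MODEL=gpt-oss:latest streamlit run streamlit.py --server.port 7680`.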

Testing with the gpt-oss:latest model

I asked "What can you do?" (in Japanese), and the Japanese in the reply felt more natural than before.

The answer is cut off partway because of the max_tokens limit.

When I then asked "Please continue.", the reply had no connection to the earlier one, so the previous answer is evidently not retained.
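A chat model only "remembers" what is sent to it in each request. For a follow-up like "Please continue." to work, the app has to resend the earlier turns, including the assistant's previous (truncated) answer. The sketch below keeps that history as an OpenAI-style messages list. In a Streamlit app it would typically be stored in st.session_state. That is an assumption about how the UI could be changed, not a description of streamlit.py.

```python
from typing import Dict, List

Message = Dict[str, str]


class ChatHistory:
    """Conversation memory: every request resends the most recent turns."""

    def __init__(self, system_prompt: str, max_turns: int = 10) -> None:
        if max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        self.system: Message = {"role": "system", "content": system_prompt}
        self.turns: List[Message] = []
        self.max_turns = max_turns  # user/assistant pairs kept, to bound prompt size

    def add_user(self, text: str) -> None:
        self.turns.append({"role": "user", "content": text})

    def add_assistant(self, text: str) -> None:
        self.turns.append({"role": "assistant", "content": text})

    def messages(self) -> List[Message]:
        """System prompt plus the most recent turns, ready for a chat request."""
        return [self.system] + self.turns[-2 * self.max_turns:]
```

After each reply, call `add_assistant(reply)`. The next "Please continue." is then sent together with the truncated answer it refers to. Capping the number of turns keeps the prompt from growing without bound on a small CPU.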

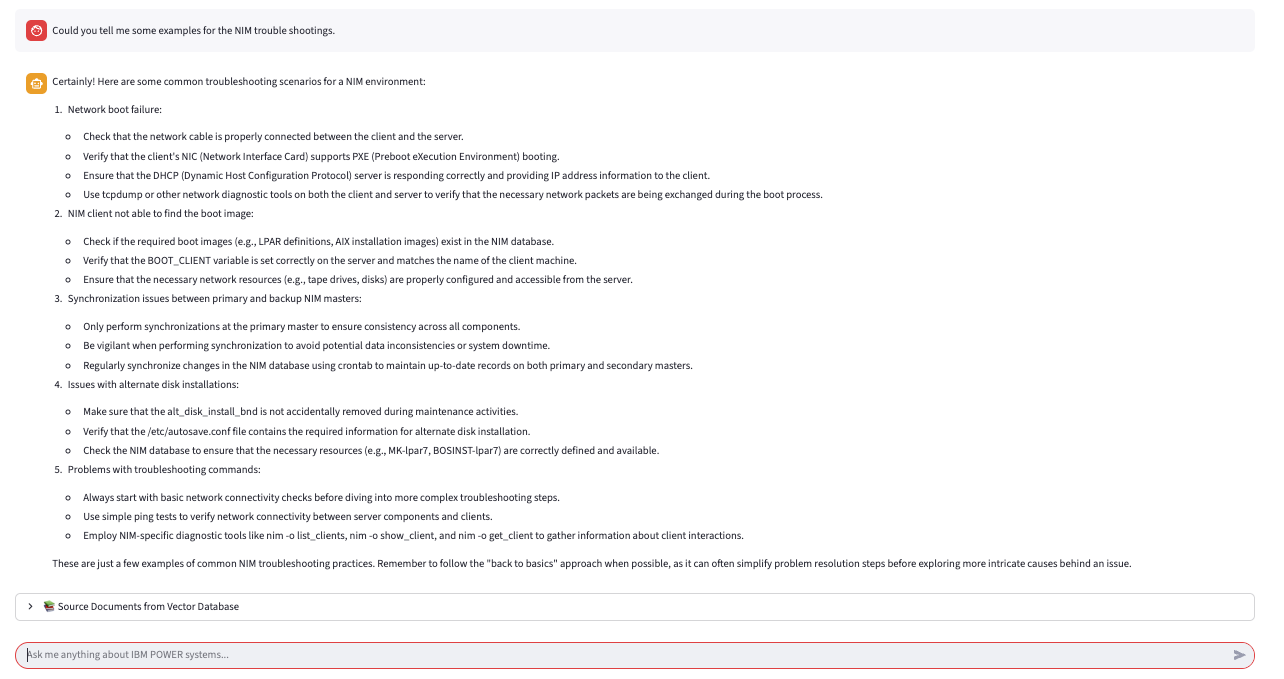

Below, I asked the question in English.

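It takes about a minute before the answer starts, but once it starts writing, the output is fast.

Given how small the resource allocation is, I think this is not bad.

(Apologies: I did not measure this with any specific tool.)

Out of concern for PowerVS charges I have not tried it yet, but I would like to change the resource allocation and check again separately.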

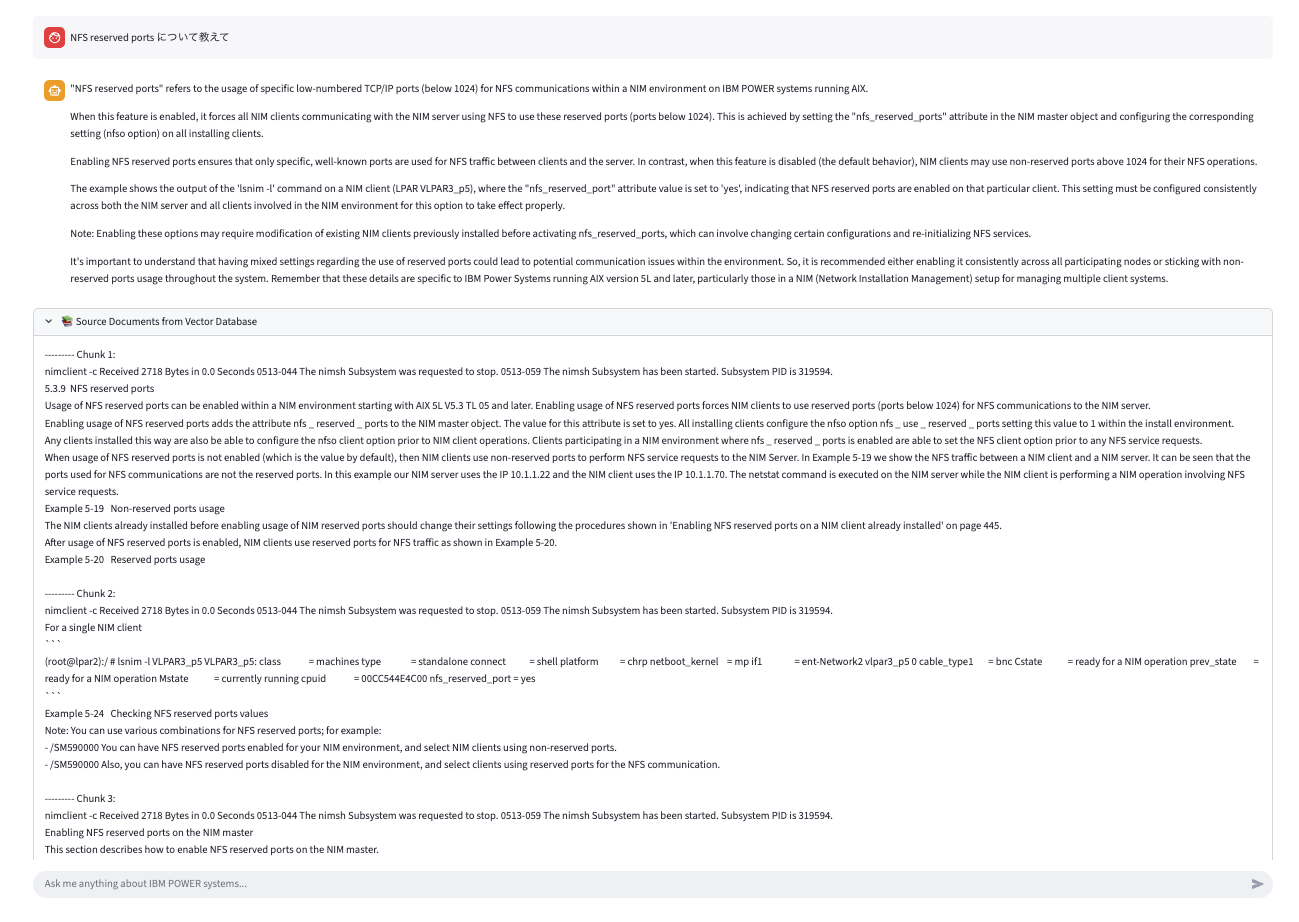

Next, I asked about NFS reserved ports, which are described in the Redbook I loaded.

The answer may not be entirely accurate. It would have been a better answer if it had returned specific port numbers.

On the other hand, the UI shows which parts of the vector database were consulted, and I liked that the referenced locations are clearly visible.

Tips: Running micromamba in another terminal requires shell setup first

To run micromamba activate in another terminal, I first had to run eval "$(micromamba shell hook --shell bash)".

[root@rag-test work]# eval "$(micromamba shell hook --shell bash)"

[root@rag-test work]# micromamba activate rag_env

(rag_env) [root@rag-test work]#

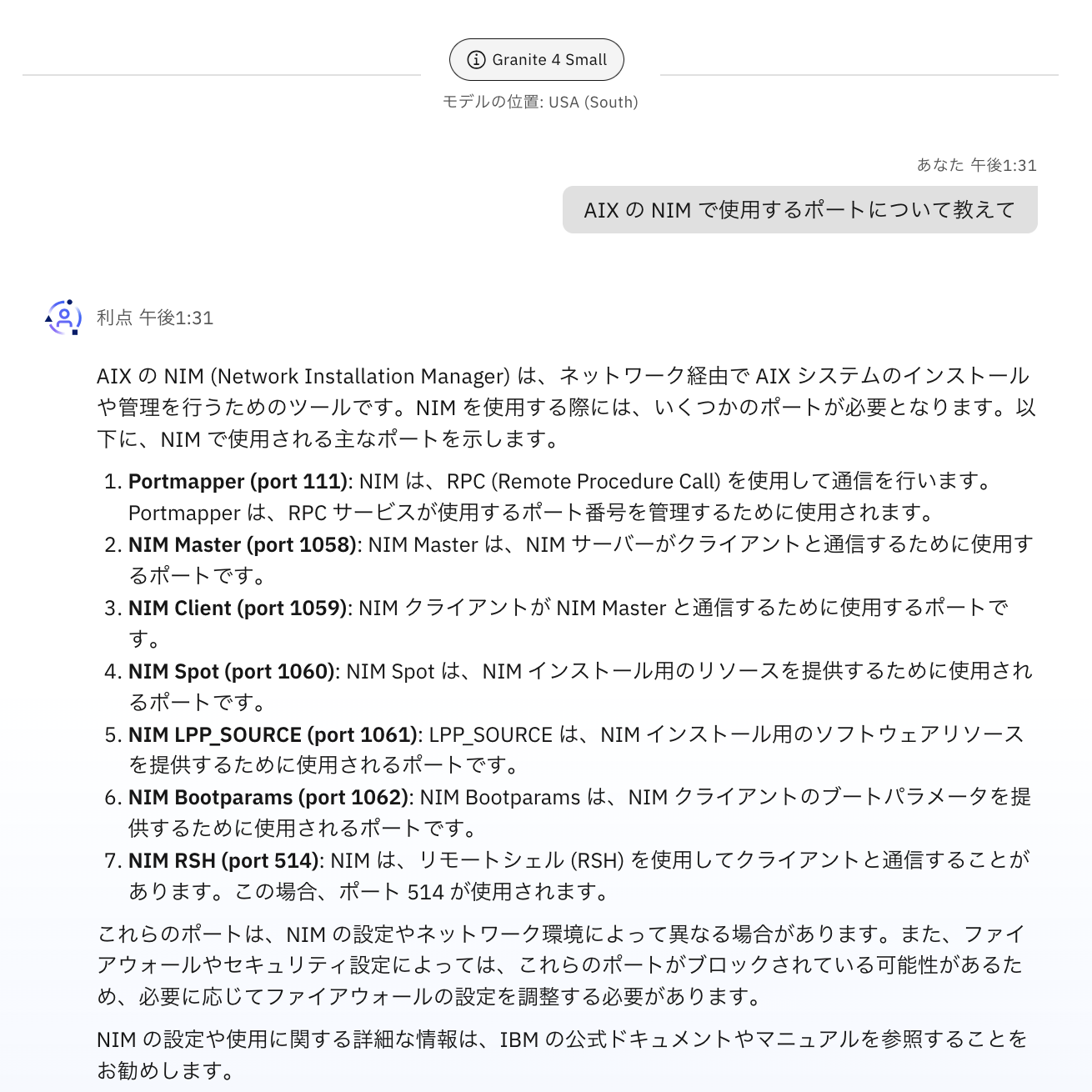

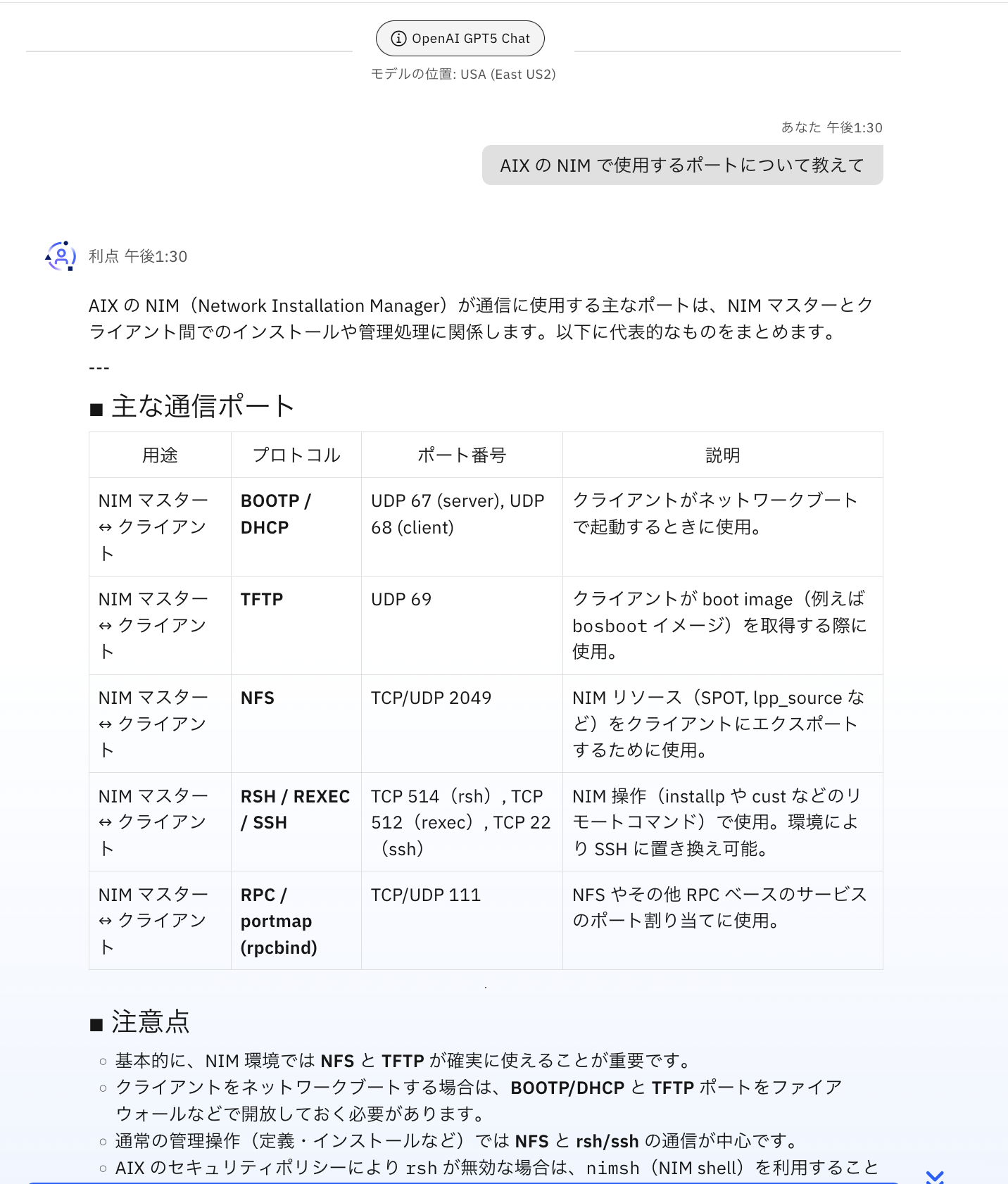

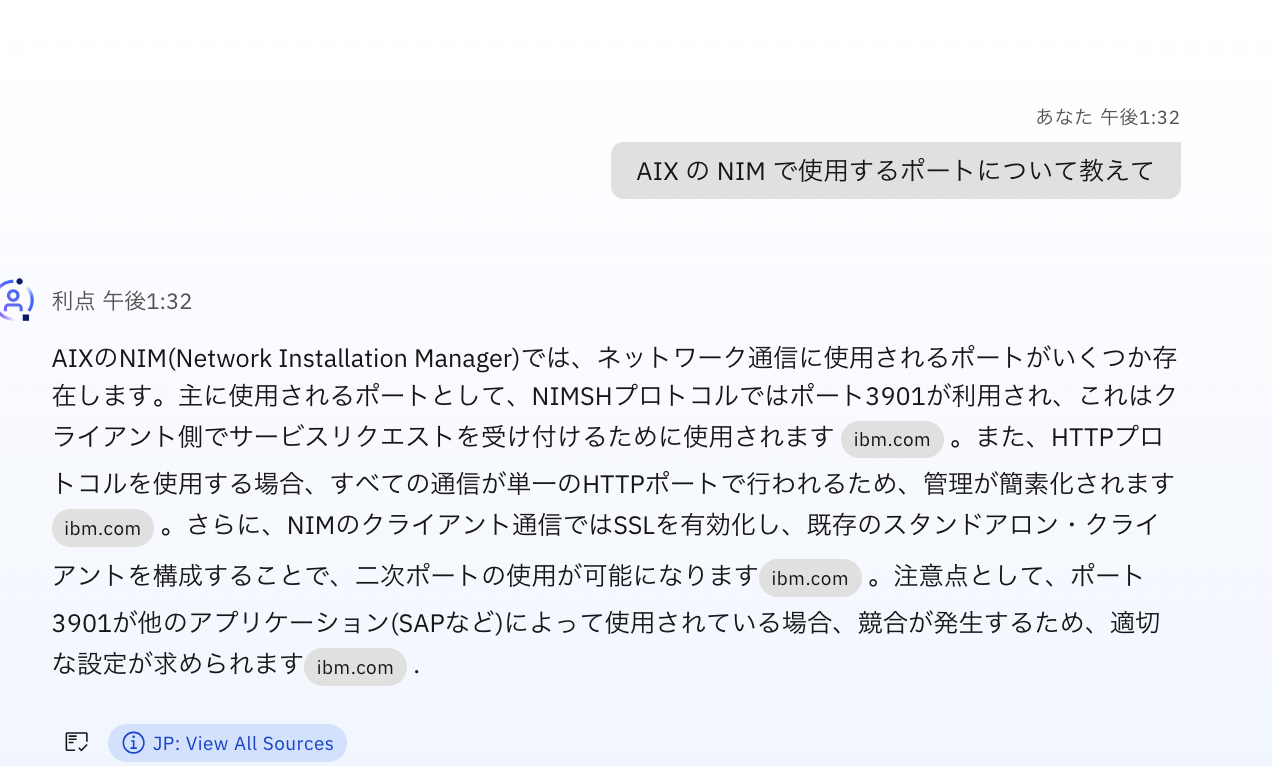

(参考) RAGなしで質問した場合の確認(モデル性能のみ)

IBM Consulting Advantage で granite4 Small を指定して NIM のポートについて聞いてみました。

- Granite 4 Small

With web search enabled, it included a link to the relevant Technote in its answer.

OpenAI GPT5 Chat, for its part, presents the output as a table, among other things, which I felt gives it an edge in readability.

- OpenAI GPT5 Chat

AI technology is advancing rapidly, and I hope open-source models will improve in these respects as well.

Conclusion

Docling and some other steps took a while, but I was grateful that the provided Python code ran without errors.

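Some questions get a proper answer even without RAG. I am still unsure whether accuracy is best improved through prompting, through setting up RAG, or by relying on the underlying model's own accuracy.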

Reference paper

The following paper compares fine-tuning with retrieval-augmented generation (RAG) as ways of injecting knowledge into LLMs. It concludes that RAG outperforms (unsupervised) fine-tuning.

Fine-Tuning or Retrieval? Comparing Knowledge Injection in LLMs

That's all.