I have been studying machine learning (Scikit-learn, TensorFlow) through MOOCs (Udacity, Coursera).

MOOC

- [Neural Networks for Machine Learning | Coursera](https://www.coursera.org/learn/neural-networks/home/welcome)

Andrew Ng's course is the famous one, but Google's Deep Learning course on Udacity is appealing because it covers Scikit-learn and TensorFlow.

Neural Networks for Machine Learning

- Taught by Prof. Hinton
- The assignments run in Octave
- I gave in and installed Octave, but that was as far as I got this time

Deep Learning - Udacity

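- The assignments are provided as Jupyter Notebooks, which is great (they actually run!)
- The theory side may feel a little thin
- Recommended, because there are several moments where you think "ah, so they struggle with this too"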

Deep Learning - Udacity

Setup

- Setting up the environment by hand was tedious, so I used Docker
- TensorFlow v1.0 had been released, so I updated to it

Scikit-learn

- The first assignment was "do logistic regression with Scikit-learn"
- tensorflow/1_notmnist.ipynb at master · tensorflow/tensorflow
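
As a rough illustration of what such an assignment involves, here is a minimal sketch of fitting `LogisticRegression` to flattened 28x28 images. The data is synthetic and has only 3 classes, which is an assumption made to keep the example self-contained. The real notebook works on the notMNIST letters, and the variable names here are hypothetical.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

# Synthetic stand-in for notMNIST (assumption): 3 classes of 28x28 "images"
# whose pixel means differ by class. The real assignment uses 10 letter classes.
rng = np.random.RandomState(0)
n_per_class, image_size, n_classes = 60, 28, 3
images = np.concatenate([
    rng.normal(loc=c, scale=1.0, size=(n_per_class, image_size, image_size))
    for c in range(n_classes)
])
labels = np.repeat(np.arange(n_classes), n_per_class)

# Scikit-learn expects 2-D input, so flatten each image into a 784-vector.
X = images.reshape(len(images), -1)

# Shuffle, then hold out the last 30 samples for evaluation.
order = rng.permutation(len(X))
X, labels = X[order], labels[order]
X_train, y_train = X[:-30], labels[:-30]
X_test, y_test = X[-30:], labels[-30:]

clf = LogisticRegression(max_iter=200)
clf.fit(X_train, y_train)
test_accuracy = clf.score(X_test, y_test)
print("test accuracy:", test_accuracy)
```

The only step specific to image data is the `reshape`, since the estimator takes one row per sample.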

Scikit-learn

- scikit-learn/scikit-learn: scikit-learn: machine learning in Python
- sklearn.linear_model.LogisticRegression — scikit-learn 0.18.1 documentation
- L1 Penalty and Sparsity in Logistic Regression — scikit-learn 0.18.1 documentation

Python: extracting common elements

    v = [1, 2, 3]
    w = [2, 3, 4]
    [x for x in v if x in w]  # [2, 3]
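
The list comprehension scans `w` once for every element of `v`, which gets slow for long lists. A possible variant, assuming the elements are hashable, converts `w` to a set first. The helper name `common_elements` is hypothetical.

```python
def common_elements(v, w):
    """Return the items of v that also appear in w, keeping v's order."""
    w_set = set(w)  # membership tests on a set are O(1) on average
    return [x for x in v if x in w_set]


v = [1, 2, 3]
w = [2, 3, 4]
print(common_elements(v, w))  # [2, 3]
```

Unlike `set(v) & set(w)`, this keeps the order and any duplicates of `v`.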

TensorFlow

The next assignment was to solve the same task with TensorFlow.

- tensorflow/2_fullyconnected.ipynb at master · tensorflow/tensorflow
- Assignment 2: 2 hidden layers and NaN loss - Courses / Deep Learning - Udacity Discussion Forum

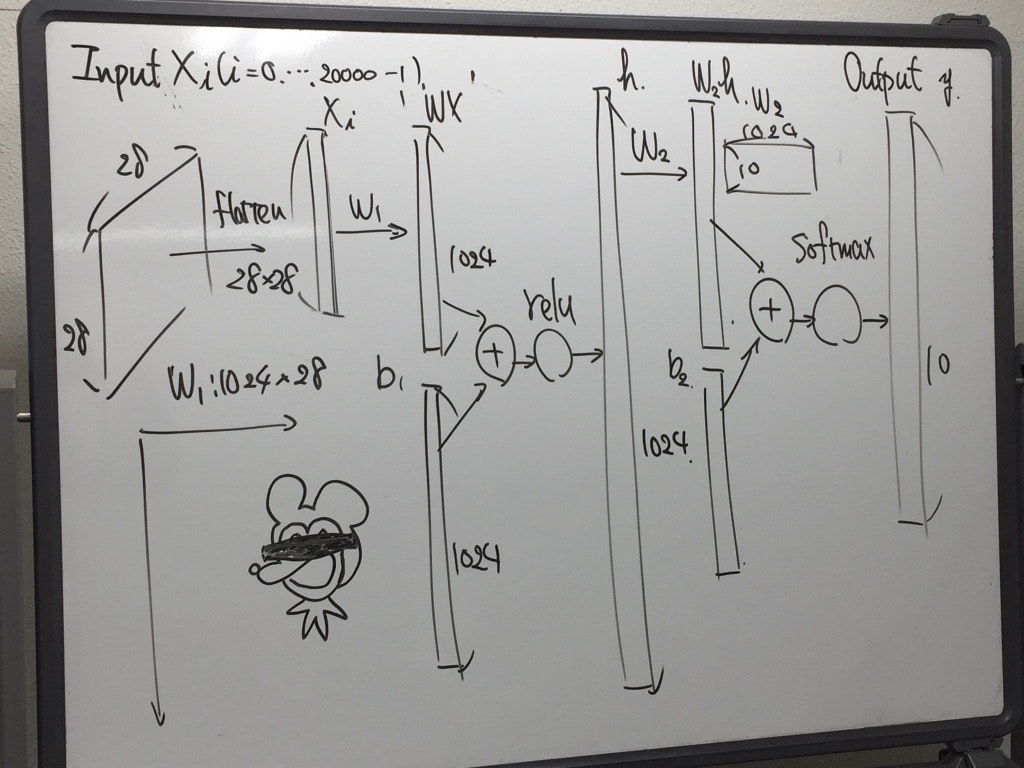

I built a 3-layer NN like this and reached 94.2% accuracy, so I was satisfied.

An excerpt of the code looks like this.

    import tensorflow as tf  # TensorFlow 1.x API

    # image_size, num_labels, valid_dataset and test_dataset are defined
    # earlier in the assignment notebook and are not part of this excerpt.

    def weight_variable(shape):
        # Truncated-normal initial weights, scaled down by the layer sizes.
        initial = tf.truncated_normal(shape, stddev=1.732/sum(shape))
        return tf.Variable(initial)

    def bias_variable(shape):
        # Small positive constant as the initial bias.
        initial = tf.constant(0.1, shape=shape)
        return tf.Variable(initial)

    batch_size = 128
    hidden_layer_size = 1024
    input_layer_size = 28*28
    output_layer_size = 10

    graph = tf.Graph()
    with graph.as_default():
        # Input data. For the training data, we use a placeholder that will be fed
        # at run time with a training minibatch.
        tf_train_dataset = tf.placeholder(tf.float32, shape=(batch_size, image_size * image_size))
        tf_train_labels = tf.placeholder(tf.float32, shape=(batch_size, num_labels))
        tf_valid_dataset = tf.constant(valid_dataset)
        tf_test_dataset = tf.constant(test_dataset)

        # Variables (input -> hidden)
        weight1 = weight_variable((input_layer_size, hidden_layer_size))
        bias1 = bias_variable([hidden_layer_size])

        # Hidden layer
        hidden_layer = tf.nn.relu(tf.matmul(tf_train_dataset, weight1) + bias1)

        # Variables (hidden -> output)
        weight2 = weight_variable((hidden_layer_size, output_layer_size))
        bias2 = bias_variable([output_layer_size])

        logits = tf.matmul(hidden_layer, weight2) + bias2
        loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=logits, labels=tf_train_labels))

        # Optimizer
        optimizer = tf.train.AdamOptimizer(0.001).minimize(loss)
        # optimizer = tf.train.GradientDescentOptimizer(0.5).minimize(loss)

        # Predictions for the training, validation and test data
        train_prediction = tf.nn.softmax(logits)
        valid_prediction = tf.nn.softmax(tf.matmul(tf.nn.relu(tf.matmul(tf_valid_dataset, weight1) + bias1), weight2) + bias2)
        test_prediction = tf.nn.softmax(tf.matmul(tf.nn.relu(tf.matmul(tf_test_dataset, weight1) + bias1), weight2) + bias2)
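
To make the prediction expressions easier to follow, here is a NumPy sketch of the same forward pass (ReLU hidden layer, then softmax) along with an accuracy helper. It is an illustration under assumptions, not the notebook's code: the weights are random rather than trained, the inputs are random, and the helper names `softmax`, `predict` and `accuracy` are hypothetical. The softmax subtracts the row-wise maximum before exponentiating, a common way to avoid overflow.

```python
import numpy as np


def softmax(logits):
    # Subtract the row-wise max before exponentiating to avoid overflow.
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def predict(x, weight1, bias1, weight2, bias2):
    # Same structure as valid_prediction / test_prediction in the graph.
    hidden = np.maximum(x @ weight1 + bias1, 0.0)  # ReLU hidden layer
    return softmax(hidden @ weight2 + bias2)


def accuracy(predictions, labels):
    # Percentage of rows whose argmax matches the one-hot label.
    return 100.0 * np.mean(np.argmax(predictions, 1) == np.argmax(labels, 1))


# Random, untrained parameters with the layer sizes used in the excerpt.
rng = np.random.RandomState(0)
n_in, n_hidden, n_out = 28 * 28, 1024, 10
weight1 = rng.normal(scale=1.732 / (n_in + n_hidden), size=(n_in, n_hidden))
bias1 = np.full(n_hidden, 0.1)
weight2 = rng.normal(scale=1.732 / (n_hidden + n_out), size=(n_hidden, n_out))
bias2 = np.full(n_out, 0.1)

x = rng.rand(5, n_in)                             # 5 fake flattened images
labels = np.eye(n_out)[rng.randint(0, n_out, 5)]  # 5 fake one-hot labels
probs = predict(x, weight1, bias1, weight2, bias2)
print(probs.shape, accuracy(probs, labels))
```

With trained weights, calling `accuracy` on the validation or test predictions gives the kind of figure quoted above.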