Foreword

This is a private reviewing note about A3C and its dependent research papers.

Introduction

Title: Asynchronous Methods for Deep Reinforcement Learning

Authors:

Volodymyr Mnih, Adrià Puigdomènech Badia, Mehdi Mirza, Alex Graves, Tim Harley, Timothy P. Lillicrap, David Silver, Koray Kavukcuoglu

Publish Year: 2016

Link: https://arxiv.org/pdf/1602.01783.pdf

Main Contribution

They have proposed the more efficient and stable way of learning, which is an asynchronous actor-learners learning method in RL, compared to DQN which was known as the state-of-the-art performance at that time.

Related Work

According to them, Gorila architecture in Massively Parallel Methods for Deep Reinforcement Learning inspired this work so much. So please refer to my other note, https://qiita.com/Rowing0914/items/bbd9854af7c464baae52

However historically speaking, parallel learning has quite long paradigm. In 2011, Li&Schuurmans discovered the map reduce framework to parallelising batch RL with linear approximation function. Actually parallelism geared the learning speed. Also, dated back to 1994, Tsitsiklis has found that the asynchronous optimisation setting in Q-learning.

Model Architecture ~A3C~

Indeed, they have combined with asynchronous format and four off-line RL methods and then evaluated the performance. However, in this section, I would like to cover A3C which has surpassed the performance of DQN and the most successful one among four tested approaches.

Actor-Critic Model in nutshell

$s_t$ : state at time step $t$

$a_t$ : action at time step $t$

$r\in[0,1]$ : discount rate

R_t = \sum^{\infty}_{k=0} \gamma^k r_{t+k}\\

Q^π = E[R_t|s_t,a_t]\\

V^π(s) = E[R_t|s]

Since this method uses function approximation, we can replace action-value function with $Q(s, a;\theta)$. Then let us define the loss function for updating the parameters used in func approximation.

L_i(\theta_i) = E \big[r+\gamma max_{a'} Q(s', a';\theta_{i-1}) - Q(s,a;\theta_i) \big]^2

By now, we remember the actor-critic method! Then let's move on to the asynchronous format of actor-critic learning.

In fact, it's not just an asynchronous version of actor-critic, but also they have merged the Advantage function inside.

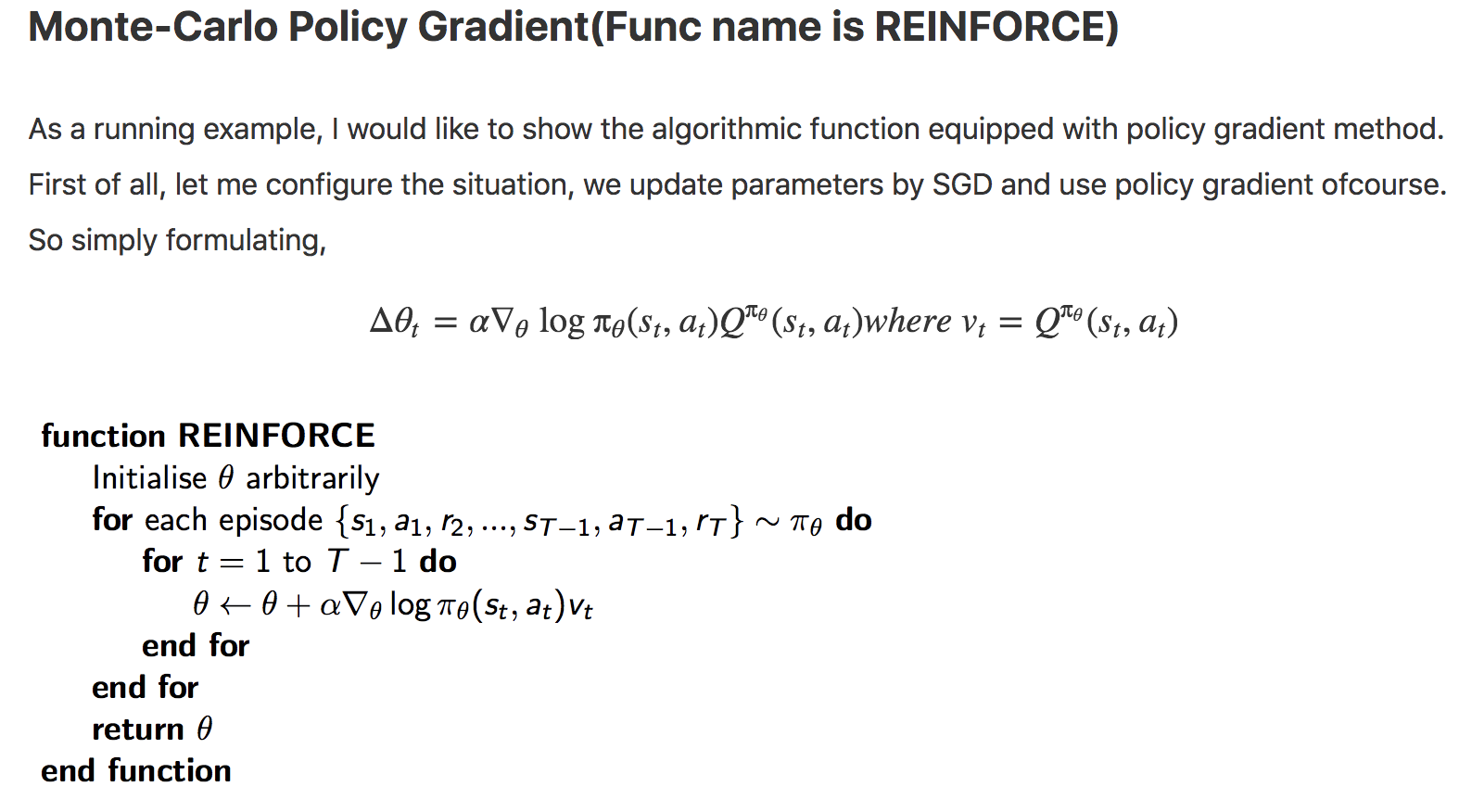

REINFORCE algo in nutshell

source:https://qiita.com/Rowing0914/items/90a1b5f8c3e2b5a05a5f#monte-carlo-policy-gradientfunc-name-is-reinforce

source:https://qiita.com/Rowing0914/items/90a1b5f8c3e2b5a05a5f#monte-carlo-policy-gradientfunc-name-is-reinforce

So all in all, A3C will be like below.

Implementation

I am trying follow this!

https://qiita.com/sugulu/items/acbc909dd9b74b043e45#%E5%AE%9F%E8%A3%85%E3%82%B3%E3%83%BC%E3%83%89%E3%81%AE%E3%82%AF%E3%83%A9%E3%82%B9%E6%A7%8B%E6%88%90

References

-

Massively Parallel Methods for Deep Reinforcement Learning

Note: https://qiita.com/Rowing0914/items/bbd9854af7c464baae52 -

Neural Fitted Q Iteration - First Experiences

with a Data Efficient Neural Reinforcement

Learning Method

Note : https://qiita.com/Rowing0914/items/3d1b9a6ad4fe0a53cf5b -

Human-level control through deep reinforcement

learning

Note : https://qiita.com/Rowing0914/items/d1edf7df1df559792f62 - [Deep reinforcement learning with double q-learning] (https://arxiv.org/pdf/1509.06461.pdf)

Note : https://qiita.com/Rowing0914/items/323464f2675fe5c9b6e7