Introduction

This is the branch article about Deep Reinforcement Learning.

Main contribution is here.

https://qiita.com/Rowing0914/items/9c5b4ffeb15f4fc12340

In this article, I would like to summarise a famous research paper about DDQN.

Also, i would like to share the implementation as well!

Hope you will like it!

Link: double q network

https://papers.nips.cc/paper/3964-double-q-learning.pdf

double dqn

https://arxiv.org/pdf/1509.06461.pdf

Main Contributor: Hado van Hasselt

Overestimation of Q-learning

What we have seen in the deep q network was quite brilliant achievement.

Especially the generalisation for various games of Atari-26000, it outperformed the previous benchmark significantly. However, Hasselt et al have found the huge overestimation arising in value estimation as well. To avoid this overestimation, they have introduced DDQN(deep double q network). Indeed, this was not the first time for the overestimation to be reported, actually this problem has certainly long history since Q-Learning was introduced by Watkins in 1989. Since then many researchers have been tried to extend this immense algorithm to reach higher goal.

And Hasselt was one of them, and found that the optimism arise in the step of maximisatoin of the value function in some stochastic environments using q-learning. And even the novel approach introduced by Mnih et al., 2015 has caused the same issue as well.

So with the foundation of double q learning, they have approached this issue.

As you can see from this chart, orange bars indicate the bias in a single Q learning and on the other hand the blue ones show the one in double q learning. It is evident that the bias increase with the number of actions.

As you can see from this chart, orange bars indicate the bias in a single Q learning and on the other hand the blue ones show the one in double q learning. It is evident that the bias increase with the number of actions.

Double Q Learning algo

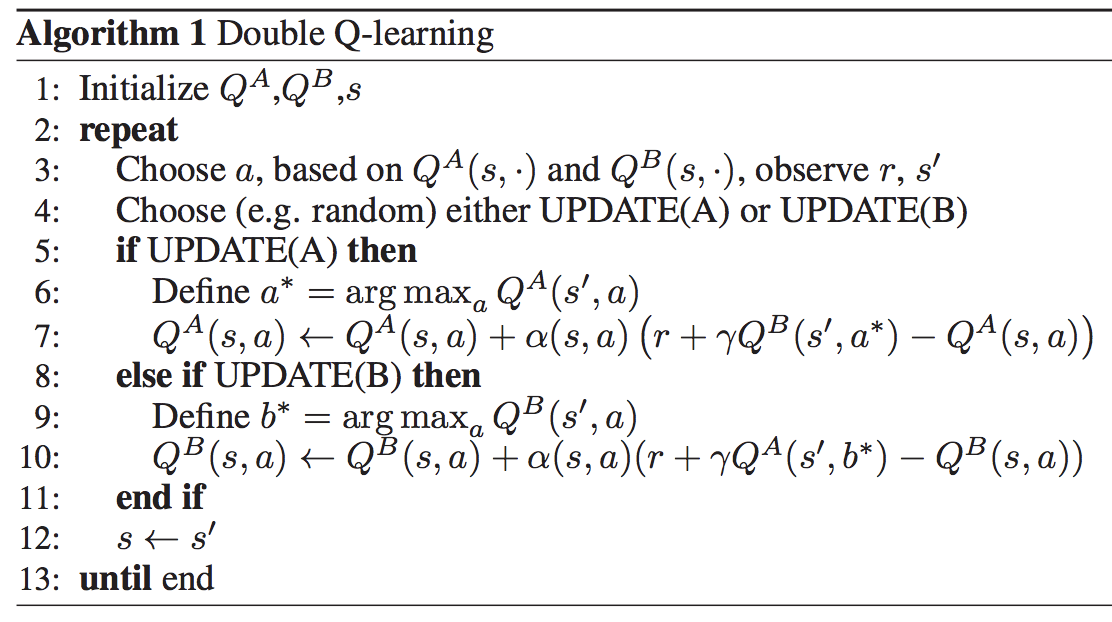

In double q learning, they have approached the issue of overestimation which caused by the usage of the value function for updating and selecting action.

To avoid this, they used two different action-value function for the process of maximisation of the expected values in next step. Each Q function is updated with a value from the other Q function for the next state.

Deep Double Q Learning

Simply replacing the q function with the approximated estimate neural network.

The update algo is same as DQN.

Implementation

Try this!

it's using keras and well implemented!

Mind this point below.

def run_experiment(self, agent):

self.env.monitor.start('/tmp/cartpole', force=True)

for n in range(N_EPISODES):

self.run_episode(agent)

self.env.monitor.close()

pass

this should be

def run_experiment(self, agent):

for n in range(N_EPISODES):

self.run_episode(agent)

pass