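About this page

This page is part of a collection of development recipes written to help you get up and running quickly at hackathons. The recipe summary page is a collection of "first recipes" for developers who are new to IBM Cloud.

These recipes are published purely as a personal activity, in accordance with the IBM Social Computing Guidelines.

That is, the content of this site represents my own views and does not necessarily represent IBM's positions, strategies, or opinions.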

Introduction

IBM Cloud is the cloud service provided by IBM.

Rather than learning what the cloud is on paper, it is faster to understand it by actually using it.

This recipe therefore walks through Speech To Text, a speech recognition service.

Trying out Speech To Text

Creating the environment to run the app

- If a Node-RED environment has already been set up, you can skip this step.

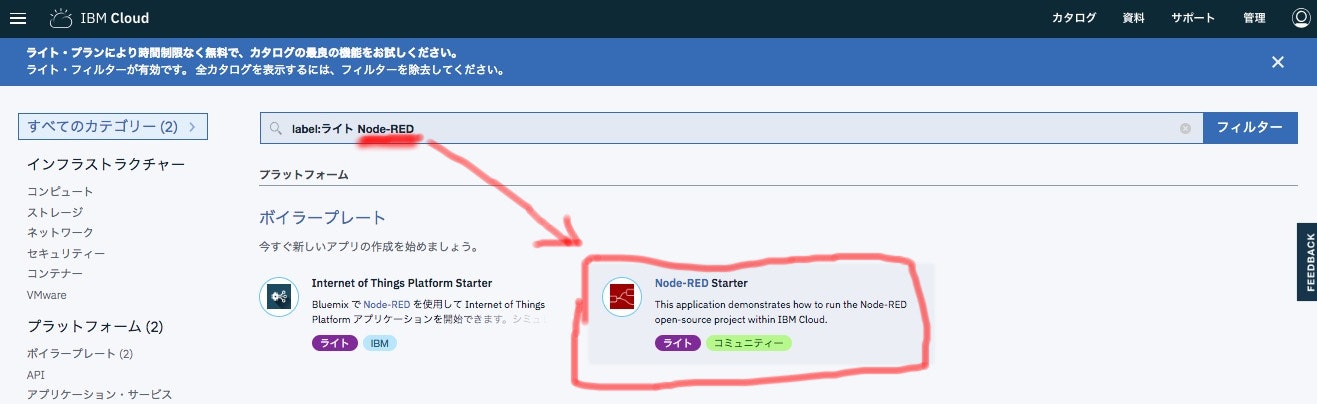

Select Catalog to open the catalog screen.

Type "Node-RED" in the search box and select the Node-RED Starter.

Adding Speech To Text

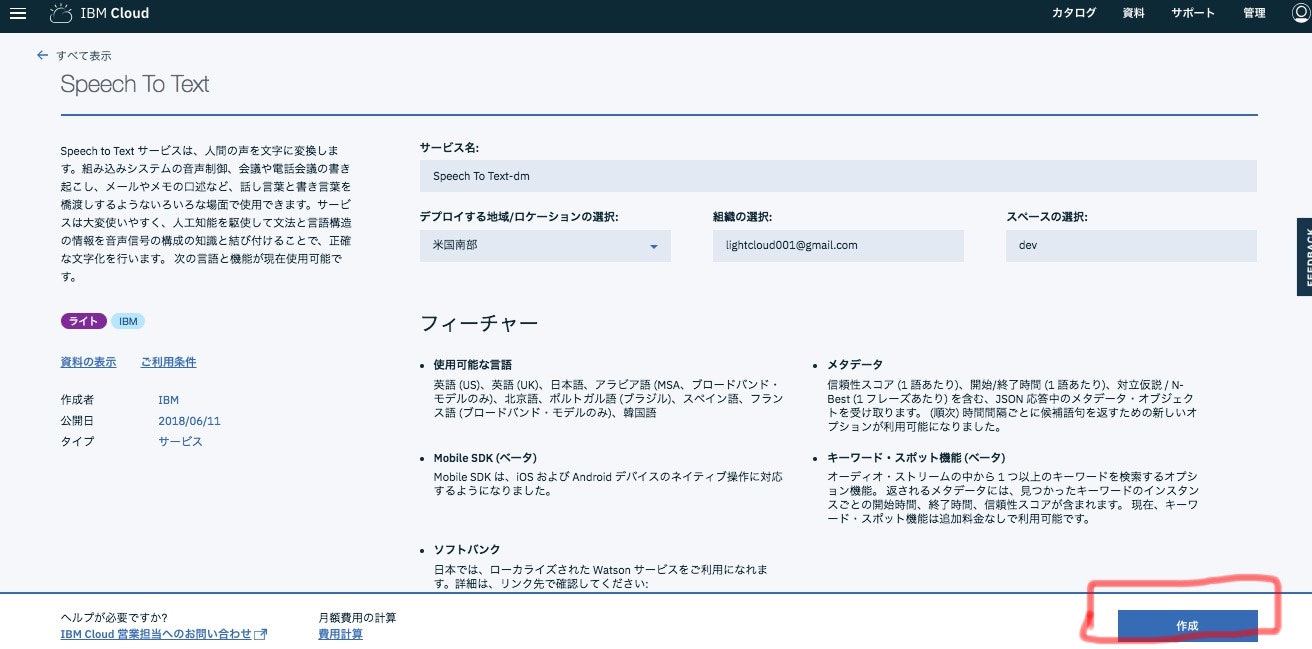

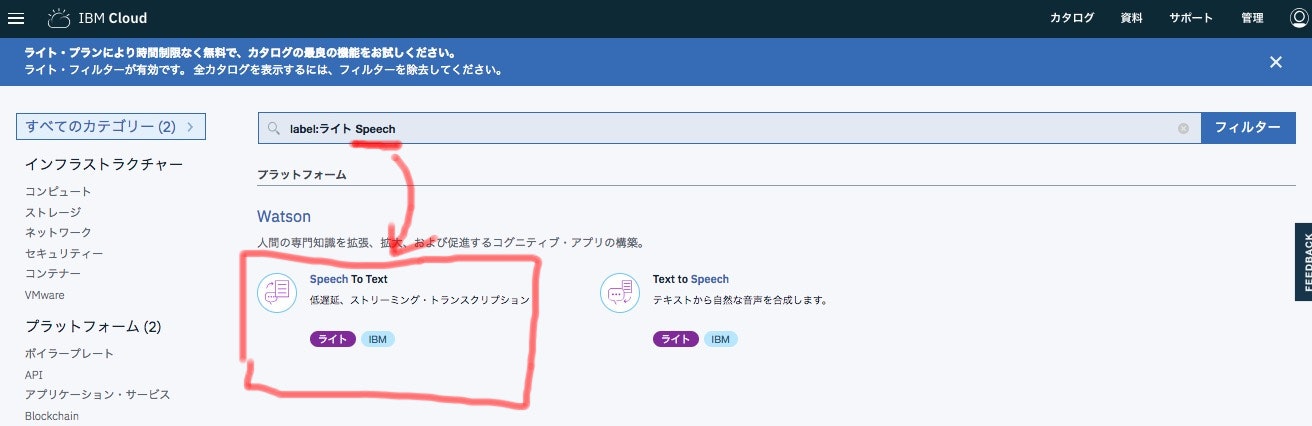

Select Catalog to open the catalog screen.

Type "Speech" in the search box and select "Speech To Text".

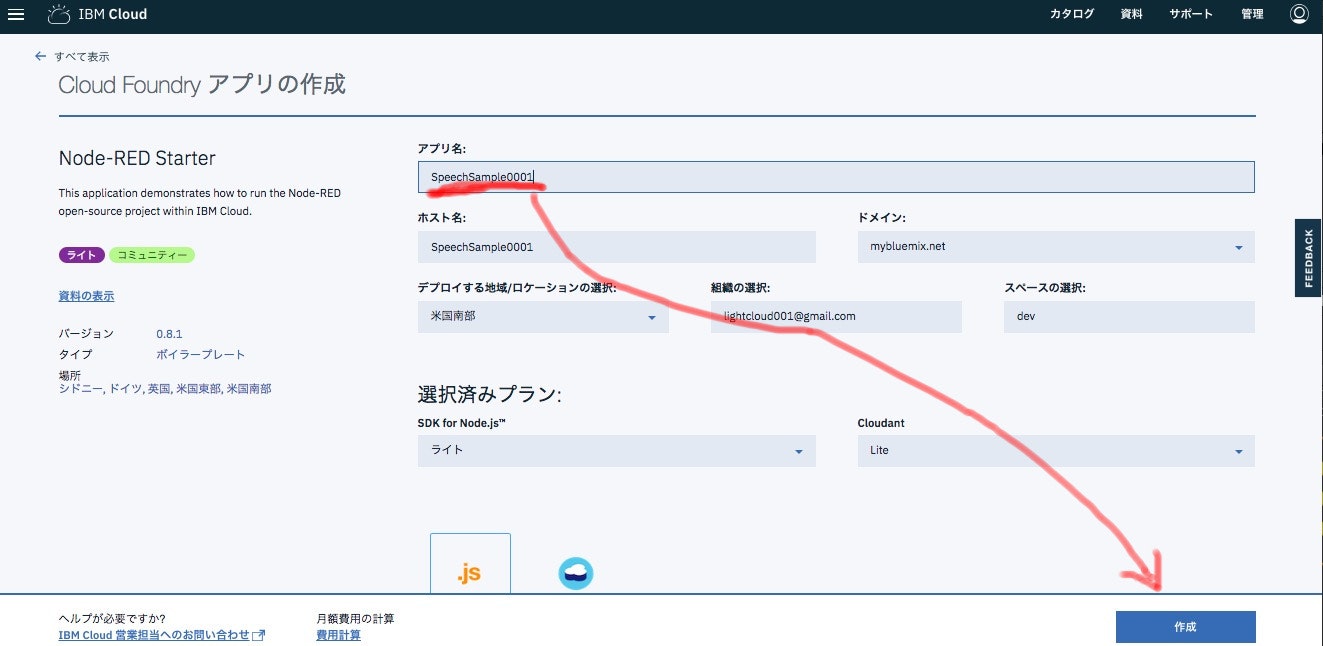

Enter an app name and click the "Create" button.

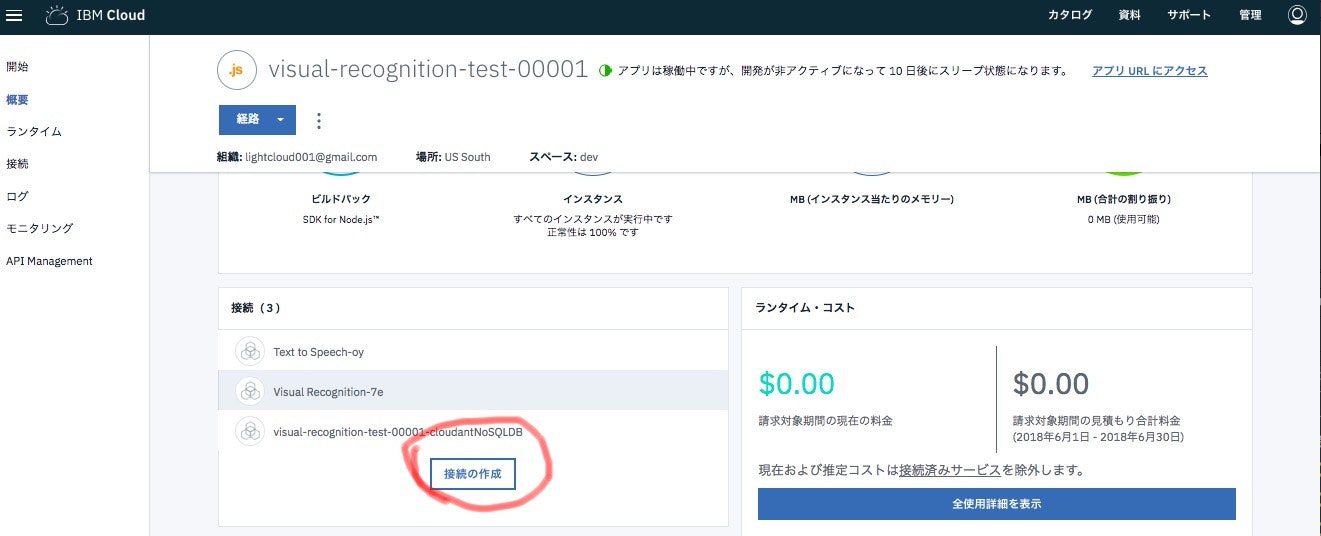

Connecting Node-RED and Speech To Text

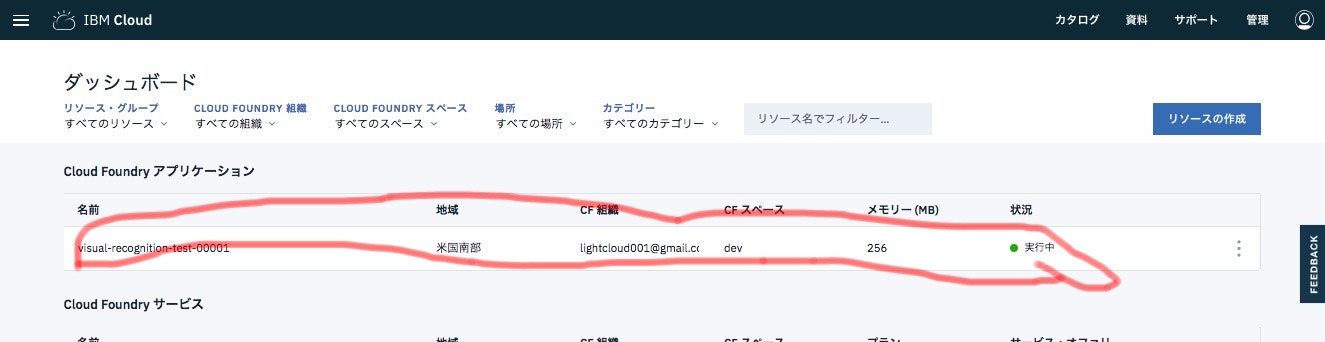

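From the menu in the upper left, choose "Dashboard" to go to the dashboard screen.

Under Cloud Foundry applications on the dashboard, click Node-RED.

Hover the mouse cursor over the Speech To Text service you created earlier.

A "Connect" button appears; click it.

"Restage" rebuilds the Node-RED environment so that the newly connected Speech To Text service takes effect. If you are asked to restage, confirm it.

The connection is now complete.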
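
For readers wondering what the connection actually changes: on Cloud Foundry, the credentials of bound services are exposed to the app through the `VCAP_SERVICES` environment variable as JSON. The following is a minimal sketch of reading them in Node.js. The helper name `findServiceCredentials`, the service label `speech_to_text`, and the sample credential fields are assumptions for illustration; check the actual value in your own environment.

```javascript
// Sketch: look up the credentials of a bound service in VCAP_SERVICES.
// "speech_to_text" is an assumed service label; yours may differ.
function findServiceCredentials(env, label) {
  if (!env.VCAP_SERVICES) return null; // not on Cloud Foundry, or nothing bound
  let services;
  try {
    services = JSON.parse(env.VCAP_SERVICES);
  } catch (err) {
    return null; // the variable did not contain valid JSON
  }
  const instances = services[label];
  if (!Array.isArray(instances) || instances.length === 0) return null;
  return instances[0].credentials || null;
}

// Hypothetical, hand-written environment value used only for this example.
const sampleEnv = {
  VCAP_SERVICES: JSON.stringify({
    speech_to_text: [
      { name: "my-stt", credentials: { apikey: "dummy-key", url: "https://example.invalid/api" } }
    ]
  })
};

const creds = findServiceCredentials(sampleEnv, "speech_to_text");
console.log(creds ? creds.url : "no Speech To Text binding found");
```

In a deployed app you would pass `process.env` instead of `sampleEnv`. In this recipe you do not need to write this yourself, because the Node-RED node is expected to pick up the bound service.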

Implementing the app

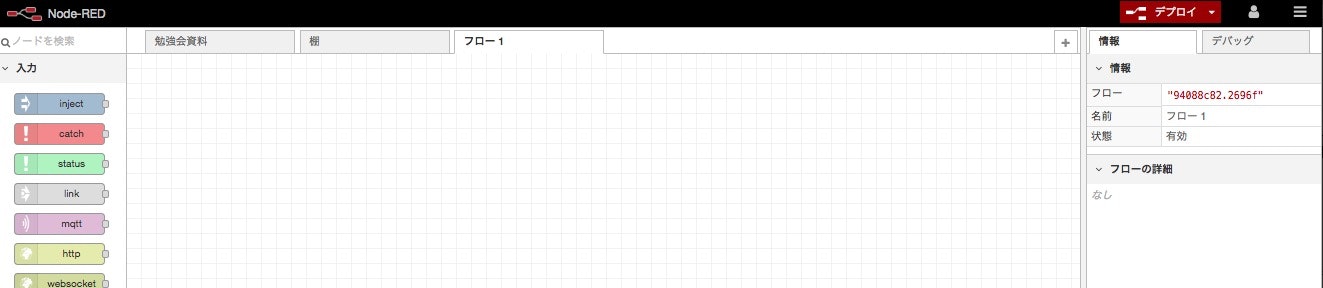

Open the Node-RED screen.

First, return to the dashboard and select the same Cloud Foundry application as before.

Click the ▲ on the Routes pull-down.

A URL appears; click it.

The Node-RED screen opens in a separate window.

If you are not logged in, log in.

Import the program.

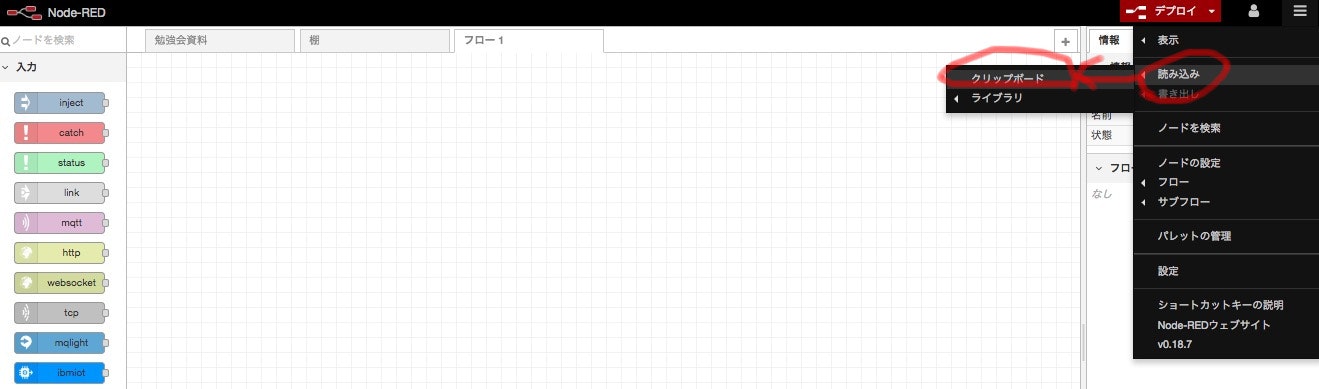

First, from the menu in the upper right of Node-RED, click "Import" and then "Clipboard".

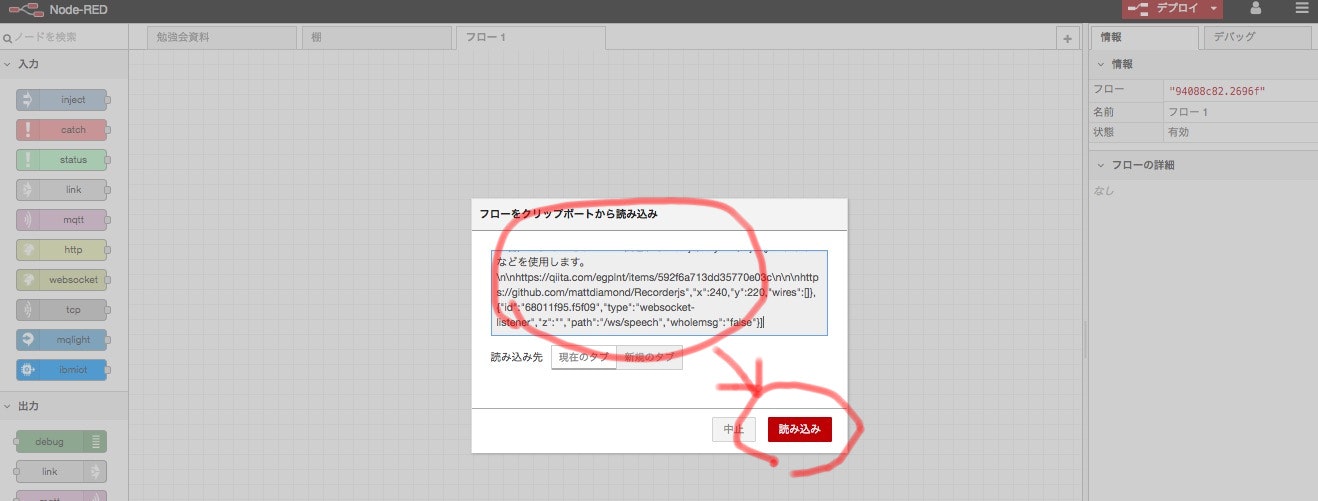

Copy and paste the program below into the text area.

Then click the "Import" button.

[{"id":"523c1a1.6ce25e4","type":"tab","label":"勉強会資料","disabled":false,"info":""},{"id":"974c4091.b93e3","type":"template","z":"523c1a1.6ce25e4","name":"HTML","field":"payload","fieldType":"msg","syntax":"mustache","template":"\n(function(f){if(typeof exports===\"object\"&&typeof module!==\"undefined\"){module.exports=f()}else if(typeof define===\"function\"&&define.amd){define([],f)}else{var g;if(typeof window!==\"undefined\"){g=window}else if(typeof global!==\"undefined\"){g=global}else if(typeof self!==\"undefined\"){g=self}else{g=this}g.Recorder = f()}})(function(){var define,module,exports;return (function e(t,n,r){function s(o,u){if(!n[o]){if(!t[o]){var a=typeof require==\"function\"&&require;if(!u&&a)return a(o,!0);if(i)return i(o,!0);var f=new Error(\"Cannot find module '\"+o+\"'\");throw f.code=\"MODULE_NOT_FOUND\",f}var l=n[o]={exports:{}};t[o][0].call(l.exports,function(e){var n=t[o][1][e];return s(n?n:e)},l,l.exports,e,t,n,r)}return n[o].exports}var i=typeof require==\"function\"&&require;for(var o=0;o<r.length;o++)s(r[o]);return s})({1:[function(require,module,exports){\n\"use strict\";\n\nnavigator.getUserMedia = navigator.mozGetUserMedia;\n\nmodule.exports = require(\"./recorder\").Recorder;\n\n},{\"./recorder\":2}],2:[function(require,module,exports){\n'use strict';\n\nvar _createClass = (function () {\n function defineProperties(target, props) {\n for (var i = 0; i < props.length; i++) {\n var descriptor = props[i];descriptor.enumerable = descriptor.enumerable || false;descriptor.configurable = true;if (\"value\" in descriptor) descriptor.writable = true;Object.defineProperty(target, descriptor.key, descriptor);\n }\n }return function (Constructor, protoProps, staticProps) {\n if (protoProps) defineProperties(Constructor.prototype, protoProps);if (staticProps) defineProperties(Constructor, staticProps);return Constructor;\n };\n})();\n\nObject.defineProperty(exports, \"__esModule\", {\n value: true\n});\nexports.Recorder = undefined;\n\nvar 
_inlineWorker = require('inline-worker');\n\nvar _inlineWorker2 = _interopRequireDefault(_inlineWorker);\n\nfunction _interopRequireDefault(obj) {\n return obj && obj.__esModule ? obj : { default: obj };\n}\n\nfunction _classCallCheck(instance, Constructor) {\n if (!(instance instanceof Constructor)) {\n throw new TypeError(\"Cannot call a class as a function\");\n }\n}\n\nvar Recorder = exports.Recorder = (function () {\n function Recorder(source, cfg) {\n var _this = this;\n\n _classCallCheck(this, Recorder);\n\n this.config = {\n bufferLen: 4096,\n numChannels: 2,\n mimeType: 'audio/wav'\n };\n this.recording = false;\n this.callbacks = {\n getBuffer: [],\n exportWAV: []\n };\n\n Object.assign(this.config, cfg);\n this.context = source.context;\n this.node = (this.context.createScriptProcessor || this.context.createJavaScriptNode).call(this.context, this.config.bufferLen, this.config.numChannels, this.config.numChannels);\n\n this.node.onaudioprocess = function (e) {\n if (!_this.recording) return;\n\n var buffer = [];\n for (var channel = 0; channel < _this.config.numChannels; channel++) {\n buffer.push(e.inputBuffer.getChannelData(channel));\n }\n _this.worker.postMessage({\n command: 'record',\n buffer: buffer\n });\n };\n\n source.connect(this.node);\n this.node.connect(this.context.destination); //this should not be necessary\n\n var self = {};\n this.worker = new _inlineWorker2.default(function () {\n var recLength = 0,\n recBuffers = [],\n sampleRate = undefined,\n numChannels = undefined;\n\n self.onmessage = function (e) {\n switch (e.data.command) {\n case 'init':\n init(e.data.config);\n break;\n case 'record':\n record(e.data.buffer);\n break;\n case 'exportWAV':\n exportWAV(e.data.type);\n break;\n case 'getBuffer':\n getBuffer();\n break;\n case 'clear':\n clear();\n break;\n }\n };\n\n function init(config) {\n sampleRate = config.sampleRate;\n numChannels = config.numChannels;\n initBuffers();\n }\n\n function record(inputBuffer) {\n for (var 
channel = 0; channel < numChannels; channel++) {\n recBuffers[channel].push(inputBuffer[channel]);\n }\n recLength += inputBuffer[0].length;\n }\n\n function exportWAV(type) {\n var buffers = [];\n for (var channel = 0; channel < numChannels; channel++) {\n buffers.push(mergeBuffers(recBuffers[channel], recLength));\n }\n var interleaved = undefined;\n if (numChannels === 2) {\n interleaved = interleave(buffers[0], buffers[1]);\n } else {\n interleaved = buffers[0];\n }\n var dataview = encodeWAV(interleaved);\n var audioBlob = new Blob([dataview], { type: type });\n\n self.postMessage({ command: 'exportWAV', data: audioBlob });\n }\n\n function getBuffer() {\n var buffers = [];\n for (var channel = 0; channel < numChannels; channel++) {\n buffers.push(mergeBuffers(recBuffers[channel], recLength));\n }\n self.postMessage({ command: 'getBuffer', data: buffers });\n }\n\n function clear() {\n recLength = 0;\n recBuffers = [];\n initBuffers();\n }\n\n function initBuffers() {\n for (var channel = 0; channel < numChannels; channel++) {\n recBuffers[channel] = [];\n }\n }\n\n function mergeBuffers(recBuffers, recLength) {\n var result = new Float32Array(recLength);\n var offset = 0;\n for (var i = 0; i < recBuffers.length; i++) {\n result.set(recBuffers[i], offset);\n offset += recBuffers[i].length;\n }\n return result;\n }\n\n function interleave(inputL, inputR) {\n var length = inputL.length + inputR.length;\n var result = new Float32Array(length);\n\n var index = 0,\n inputIndex = 0;\n\n while (index < length) {\n result[index++] = inputL[inputIndex];\n result[index++] = inputR[inputIndex];\n inputIndex++;\n }\n return result;\n }\n\n function floatTo16BitPCM(output, offset, input) {\n for (var i = 0; i < input.length; i++, offset += 2) {\n var s = Math.max(-1, Math.min(1, input[i]));\n output.setInt16(offset, s < 0 ? 
s * 0x8000 : s * 0x7FFF, true);\n }\n }\n\n function writeString(view, offset, string) {\n for (var i = 0; i < string.length; i++) {\n view.setUint8(offset + i, string.charCodeAt(i));\n }\n }\n\n function encodeWAV(samples) {\n var buffer = new ArrayBuffer(44 + samples.length * 2);\n var view = new DataView(buffer);\n\n /* RIFF identifier */\n writeString(view, 0, 'RIFF');\n /* RIFF chunk length */\n view.setUint32(4, 36 + samples.length * 2, true);\n /* RIFF type */\n writeString(view, 8, 'WAVE');\n /* format chunk identifier */\n writeString(view, 12, 'fmt ');\n /* format chunk length */\n view.setUint32(16, 16, true);\n /* sample format (raw) */\n view.setUint16(20, 1, true);\n /* channel count */\n view.setUint16(22, numChannels, true);\n /* sample rate */\n view.setUint32(24, sampleRate, true);\n /* byte rate (sample rate * block align) */\n view.setUint32(28, sampleRate * 4, true);\n /* block align (channel count * bytes per sample) */\n view.setUint16(32, numChannels * 2, true);\n /* bits per sample */\n view.setUint16(34, 16, true);\n /* data chunk identifier */\n writeString(view, 36, 'data');\n /* data chunk length */\n view.setUint32(40, samples.length * 2, true);\n\n floatTo16BitPCM(view, 44, samples);\n\n return view;\n }\n }, self);\n\n this.worker.postMessage({\n command: 'init',\n config: {\n sampleRate: this.context.sampleRate,\n numChannels: this.config.numChannels\n }\n });\n\n this.worker.onmessage = function (e) {\n var cb = _this.callbacks[e.data.command].pop();\n if (typeof cb == 'function') {\n cb(e.data.data);\n }\n };\n }\n\n _createClass(Recorder, [{\n key: 'record',\n value: function record() {\n this.recording = true;\n }\n }, {\n key: 'stop',\n value: function stop() {\n this.recording = false;\n }\n }, {\n key: 'clear',\n value: function clear() {\n this.worker.postMessage({ command: 'clear' });\n }\n }, {\n key: 'getBuffer',\n value: function getBuffer(cb) {\n cb = cb || this.config.callback;\n if (!cb) throw new Error('Callback not 
set');\n\n this.callbacks.getBuffer.push(cb);\n\n this.worker.postMessage({ command: 'getBuffer' });\n }\n }, {\n key: 'exportWAV',\n value: function exportWAV(cb, mimeType) {\n mimeType = mimeType || this.config.mimeType;\n cb = cb || this.config.callback;\n if (!cb) throw new Error('Callback not set');\n\n this.callbacks.exportWAV.push(cb);\n\n this.worker.postMessage({\n command: 'exportWAV',\n type: mimeType\n });\n }\n }], [{\n key: 'forceDownload',\n value: function forceDownload(blob, filename) {\n var url = (window.URL || window.webkitURL).createObjectURL(blob);\n var link = window.document.createElement('a');\n link.href = url;\n link.download = filename || 'output.wav';\n var click = document.createEvent(\"Event\");\n click.initEvent(\"click\", true, true);\n link.dispatchEvent(click);\n }\n }]);\n\n return Recorder;\n})();\n\nexports.default = Recorder;\n\n},{\"inline-worker\":3}],3:[function(require,module,exports){\n\"use strict\";\n\nmodule.exports = require(\"./inline-worker\");\n},{\"./inline-worker\":4}],4:[function(require,module,exports){\n(function (global){\n\"use strict\";\n\nvar _createClass = (function () { function defineProperties(target, props) { for (var key in props) { var prop = props[key]; prop.configurable = true; if (prop.value) prop.writable = true; } Object.defineProperties(target, props); } return function (Constructor, protoProps, staticProps) { if (protoProps) defineProperties(Constructor.prototype, protoProps); if (staticProps) defineProperties(Constructor, staticProps); return Constructor; }; })();\n\nvar _classCallCheck = function (instance, Constructor) { if (!(instance instanceof Constructor)) { throw new TypeError(\"Cannot call a class as a function\"); } };\n\nvar WORKER_ENABLED = !!(global === global.window && global.URL && global.Blob && global.Worker);\n\nvar InlineWorker = (function () {\n function InlineWorker(func, self) {\n var _this = this;\n\n _classCallCheck(this, InlineWorker);\n\n if (WORKER_ENABLED) {\n var 
functionBody = func.toString().trim().match(/^function\\s*\\w*\\s*\\([\\w\\s,]*\\)\\s*{([\\w\\W]*?)}$/)[1];\n var url = global.URL.createObjectURL(new global.Blob([functionBody], { type: \"text/javascript\" }));\n\n return new global.Worker(url);\n }\n\n this.self = self;\n this.self.postMessage = function (data) {\n setTimeout(function () {\n _this.onmessage({ data: data });\n }, 0);\n };\n\n setTimeout(function () {\n func.call(self);\n }, 0);\n }\n\n _createClass(InlineWorker, {\n postMessage: {\n value: function postMessage(data) {\n var _this = this;\n\n setTimeout(function () {\n _this.self.onmessage({ data: data });\n }, 0);\n }\n }\n });\n\n return InlineWorker;\n})();\n\nmodule.exports = InlineWorker;\n}).call(this,typeof global !== \"undefined\" ? global : typeof self !== \"undefined\" ? self : typeof window !== \"undefined\" ? window : {})\n},{}]},{},[1])(1)\n});","x":410,"y":280,"wires":[["fd1c8f57.32df5"]]},{"id":"c1cd2e63.a2a21","type":"http in","z":"523c1a1.6ce25e4","name":"","url":"/recorder.js","method":"get","upload":false,"swaggerDoc":"","x":250,"y":280,"wires":[["974c4091.b93e3"]]},{"id":"fd1c8f57.32df5","type":"http response","z":"523c1a1.6ce25e4","name":"","x":540,"y":280,"wires":[]},{"id":"840ddd70.4f396","type":"template","z":"523c1a1.6ce25e4","name":"HTML","field":"payload","fieldType":"msg","syntax":"mustache","template":"<!DOCTYPE html>\n<html lang=\"ja\" class=\"no-js\">\n<head>\n <meta charset=\"UTF-8\" />\n <meta http-equiv=\"X-UA-Compatible\" content=\"IE=edge\" />\n <script src=\"recorder.js\"></script>\n</head>\n<body>\n <h3>音声の登録</h3>\n <div onclick=\"startRecording(this);\" style=\"height:40px;width:200px;background-color:#DDF\">音声入力</div>\n <br>\n <div onclick=\"stopRecording(this);\" style=\"height:40px;width:200px;background-color:#DFD\">停止</div>\n\n <h3>録音ファイル</h3>\n <ul id=\"recordingslist\"></ul>\n\n <h3>認識結果</h3>\n <div id=\"stt_result\"></div>\n </div>\n</div>\n\n<script type=\"text/javascript\">\nvar audio_context;\nvar 
recorder;\n\n// 音声を録音する\nfunction startUserMedia(stream)\n{\n var input = audio_context.createMediaStreamSource(stream);\n recorder = new Recorder(input);\n}\n// 録音開始時の処理\nfunction startRecording(button)\n{\n recorder && recorder.record();\n button.disabled = true;\n button.nextElementSibling.disabled = false;\n}\n// 録音終了時の処理\nfunction stopRecording(button)\n{\n recorder && recorder.stop();\n button.disabled = true;\n button.previousElementSibling.disabled = false;\n\n // ダウンロードリンクを作る\n createDownloadLink();\n recorder.clear();\n}\n\n//録音した音声のダウンロードリンクを作る\nfunction createDownloadLink()\n{\n recorder && recorder.exportWAV(function(blob) {\n var url = URL.createObjectURL(blob);\n var li = document.createElement('li');\n var au = document.createElement('audio');\n var hf = document.createElement('a');\n au.controls = true;\n au.src = url;\n hf.href = url;\n hf.download = new Date().toISOString() + '.wav';\n hf.innerHTML = hf.download;\n socketFunc(blob);\n li.appendChild(au);\n li.appendChild(hf);\n\n recordingslist.appendChild(li);\n });\n}\nwindow.onload = function init()\n{\n // 音声を録音するための初期化処理\n window.AudioContext = window.AudioContext || window.webkitAudioContext;\n navigator.getUserMedia = navigator.getUserMedia || navigator.webkitGetUserMedia;\n window.URL = window.URL || window.webkitURL;\n audio_context = new AudioContext;\n\n navigator.getUserMedia({audio: true}, startUserMedia, function(e) {});\n};\n\n// データ通信を行う処理\nfunction socketFunc(blob) {\n var socketaddy = \"wss://\" + window.location.host + \"/ws/speech\";\n\n socket = new WebSocket(socketaddy);\n socket.binaryType = 'arraybuffer';\n socket.onopen = function () {\n // 音声データを送る\n socket.send(blob);\n }\n socket.onmessage = function (e) {\n var stt_result_element = document.getElementById('stt_result');\n var tLabel = document.createTextNode(e.data);\n console.log(e.data);\n\n var p = document.createElement('p');\n p.setAttribute('id', e.data);\n p.appendChild(tLabel);\n\n var classesBox = 
document.createElement('div');\n classesBox.appendChild(p);\n\n // add child\n stt_result_element.appendChild(classesBox);\n\n socket.onclose = function () {\n socket.close();\n }\n };\n};\n</script>\n\n</body>\n</html>\n","x":410,"y":140,"wires":[["7e22ca7a.bb3a04"]]},{"id":"c3bc7173.9e7a6","type":"http in","z":"523c1a1.6ce25e4","name":"","url":"/sample","method":"get","upload":false,"swaggerDoc":"","x":240,"y":140,"wires":[["840ddd70.4f396"]]},{"id":"7e22ca7a.bb3a04","type":"http response","z":"523c1a1.6ce25e4","name":"","x":540,"y":140,"wires":[]},{"id":"aae14c21.10fb7","type":"comment","z":"523c1a1.6ce25e4","name":"画面とかプログラムとか","info":"","x":190,"y":80,"wires":[]},{"id":"dccaac49.e43db","type":"watson-speech-to-text","z":"523c1a1.6ce25e4","name":"STT","alternatives":"","speakerlabels":false,"smartformatting":false,"lang":"ja-JP","langhidden":"ja-JP","langcustomhidden":"","band":"NarrowbandModel","bandhidden":"","password":"","apikey":"","payload-response":true,"streaming-mode":false,"streaming-mute":false,"auto-connect":false,"discard-listening":false,"disable-precheck":false,"default-endpoint":false,"service-endpoint":"https://stream.watsonplatform.net/speech-to-text/api","x":410,"y":400,"wires":[["ea18a21d.9c6b8","81e04e6e.25a89"]]},{"id":"2533639e.80c8ac","type":"websocket in","z":"523c1a1.6ce25e4","name":"","server":"68011f95.f5f09","client":"","x":260,"y":400,"wires":[["dccaac49.e43db"]]},{"id":"81e04e6e.25a89","type":"websocket out","z":"523c1a1.6ce25e4","name":"","server":"68011f95.f5f09","client":"","x":600,"y":400,"wires":[]},{"id":"9c21236a.9dddc","type":"comment","z":"523c1a1.6ce25e4","name":"音声認識機能","info":"","x":150,"y":340,"wires":[]},{"id":"ea18a21d.9c6b8","type":"debug","z":"523c1a1.6ce25e4","name":"","active":true,"console":"false","complete":"false","x":590,"y":460,"wires":[]},{"id":"9ee16d3e.ef28f","type":"comment","z":"523c1a1.6ce25e4","name":"外部ライブラリ(ここの中身は考えない)","info":"Chrome, Firefox, Edge における音声ファイルの生成、及び転送\nChrome 
などのモダンブラウザでは同じ手法でWAVファイルの生成、サーバへの転送を行うことが可能です。またRecorder.jsと呼ばれる録音、WAVファイルの生成を行うための JavaScript のライブラリが存在し、このライブラリを利用することで、比較的簡潔に処理を実装することが可能です。\nrecorder.js\nhttps://github.com/mattdiamond/Recorderjs\n\n生成した音声ファイルをサーバへ転送するには jQuery の $.ajax() メソッドなどを使用します。\n\nhttps://qiita.com/egplnt/items/592f6a713dd35770e03c\n\n\nhttps://github.com/mattdiamond/Recorderjs","x":240,"y":220,"wires":[]},{"id":"68011f95.f5f09","type":"websocket-listener","z":"","path":"/ws/speech","wholemsg":"false"}]

Click the Deploy button.
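
The page served by the imported flow sends the recorded WAV data to the flow's `/ws/speech` WebSocket listener, using a hard-coded `wss://` prefix. As a small sketch of that step, the hypothetical helper `buildSocketUrl` below (not part of the imported flow) derives the WebSocket URL from the page location and falls back to `ws://` when the page is served over plain HTTP, for example from a local Node-RED. The host names are made up.

```javascript
// Sketch: build the WebSocket URL for the flow's "/ws/speech" listener.
// buildSocketUrl is a hypothetical helper, not part of the imported flow.
function buildSocketUrl(loc, path) {
  // Use a secure WebSocket when the page itself was loaded over HTTPS.
  const scheme = loc.protocol === "https:" ? "wss://" : "ws://";
  return scheme + loc.host + path;
}

// In a browser you would pass window.location; a plain object is used here
// so that the sketch also runs under Node.js.
const url = buildSocketUrl({ protocol: "https:", host: "myapp.example.com" }, "/ws/speech");
console.log(url);

// Browser-only usage, left as comments:
// const socket = new WebSocket(buildSocketUrl(window.location, "/ws/speech"));
// socket.onopen = () => socket.send(blob);        // blob: the recorded WAV data
// socket.onmessage = (e) => console.log(e.data);  // recognition result from the flow
```

The flow works as pasted on an HTTPS route; this variant only matters if you try the same page over HTTP.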

Running the app

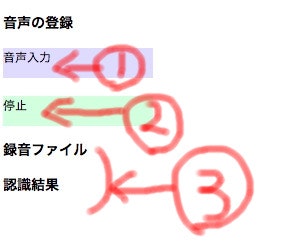

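Open the `/sample` path on your Node-RED URL in a browser; the imported flow serves the page there. You should see a screen like the one in the screenshot.

Click the ① "音声入力" (voice input) button on the screen.

Then say "こんにちは" (hello).

After that, click the ② "停止" (stop) button.

The audio file and the recognition result (in Japanese) should then appear in area ③.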
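
The imported page prints whatever arrives over the WebSocket as-is, so the result may show up as raw JSON rather than plain text. The sketch below pulls only the transcript text out of such a message. It assumes the message follows the Speech To Text response shape (`results[].alternatives[].transcript`); whether your flow actually delivers that shape depends on the node settings, so treat `extractTranscripts` as a hypothetical helper and the sample message as made-up data.

```javascript
// Sketch: extract transcript strings from a recognition result message.
// Assumes the Speech To Text response shape; plain-text messages are
// returned unchanged as a single transcript.
function extractTranscripts(data) {
  let parsed;
  try {
    parsed = typeof data === "string" ? JSON.parse(data) : data;
  } catch (err) {
    return [String(data)]; // not JSON: treat the text itself as the transcript
  }
  if (!parsed || !Array.isArray(parsed.results)) return [];
  return parsed.results
    // Take the first (best) alternative of each result, if present.
    .map((r) => r.alternatives && r.alternatives[0] && r.alternatives[0].transcript)
    .filter((t) => typeof t === "string")
    .map((t) => t.trim());
}

// Made-up sample message for illustration.
const sampleMessage = JSON.stringify({
  results: [
    { alternatives: [{ transcript: "こんにちは ", confidence: 0.9 }], final: true }
  ],
  result_index: 0
});

console.log(extractTranscripts(sampleMessage).join("\n"));
```

In the page's `socket.onmessage` handler, you could pass `e.data` to this function and display the returned strings instead of the raw message.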