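What is an Ingress Controller?

- A Pod that creates an ALB whenever one is needed, that is, when an Ingress resource is created.

- It runs on one of the Nodes.

- On GCP (GKE) you do not have to think about it when using Ingress, but on AWS you have to deploy it manually as described here.

- There also seems to be an NGINX Ingress option, which exposes services by deploying NGINX inside the cluster instead of creating an ALB.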


Create the cluster

eksctl create cluster --name=k8s-cluster --nodes=2 --node-type=t2.medium

Create the service account and bind the role

kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.0.0/docs/examples/rbac-role.yaml

Output

clusterrole.rbac.authorization.k8s.io/alb-ingress-controller configured

clusterrolebinding.rbac.authorization.k8s.io/alb-ingress-controller configured

serviceaccount/alb-ingress created

Deploy the AWS ALB Ingress Controller

Download the manifest file

curl -sS "https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.0.0/docs/examples/alb-ingress-controller.yaml" > alb-ingress-controller.yaml

Change the cluster-name value to the name of the cluster you are deploying to

    # Name of your cluster. Used when naming resources created
    # by the ALB Ingress Controller, providing distinction between
    # clusters.
    - --cluster-name=k8s-cluster

Deploy

kubectl apply -f alb-ingress-controller.yaml

Verify the deployment

A Pod for the AWS ALB Ingress Controller has been created

kubectl get pod --all-namespaces | grep alb-ingress

kube-system alb-ingress-controller-574db97b5f-2j7hb 1/1 Running 0 32s

Deploy the sample application

The application is deployed to EKS via ECR

Create the ECR repository

aws ecr create-repository --repository-name eks-test-app

Output

    {
        "repository": {
            "repositoryUri": "xxxxxxxxxxxx.dkr.ecr.us-east-2.amazonaws.com/eks-test-app",
            "registryId": "xxxxxxxxxxxx",
            "imageTagMutability": "MUTABLE",
            "repositoryArn": "arn:aws:ecr:us-east-2:xxxxxxxxxxxx:repository/eks-test-app",
            "repositoryName": "eks-test-app",
            "createdAt": 1571584240.0
        }
    }

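Get the ECR login command (this is the AWS CLI v1 form; AWS CLI v2 removed get-login in favor of aws ecr get-login-password)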

aws ecr get-login --no-include-email

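Run the command it prints. The printed command ends with the registry URL; without it, docker login targets Docker Hub instead of ECR.

docker login -u AWS -p XXXXXX https://XXXXXXXXXXXX.dkr.ecr.us-east-2.amazonaws.com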

Create the sample application locally

server.go

    package main

    import (
        "fmt"
        "log"
        "net/http"
        "os"
    )

    func main() {
        // "/" matches every path without a more specific handler.
        http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
            fmt.Fprint(w, "healthy!")
        })
        // Returns the Pod name so we can see which Pod served the request.
        http.HandleFunc("/target1", func(w http.ResponseWriter, r *http.Request) {
            fmt.Fprintf(w, "/target1:%s", os.Getenv("POD_NAME"))
        })
        log.Fatal(http.ListenAndServe(":8080", nil))
    }

Dockerfile

    FROM golang
    ADD . /go/src/
    EXPOSE 8080
    CMD ["/usr/local/go/bin/go", "run", "/go/src/server.go"]

Build

docker build -t eks-test-app:target1 .

Retag the image for ECR

docker tag eks-test-app:target1 XXXXXXXXXXXX.dkr.ecr.us-east-2.amazonaws.com/eks-test-app:target1

Push to ECR

docker push XXXXXXXXXXXX.dkr.ecr.us-east-2.amazonaws.com/eks-test-app:target1

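Build the target2 app with the same steps, after changing the handler path and response prefix in server.go from /target1 to /target2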

docker build -t eks-test-app:target2 .

docker tag eks-test-app:target2 XXXXXXXXXXXX.dkr.ecr.us-east-2.amazonaws.com/eks-test-app:target2

docker push XXXXXXXXXXXX.dkr.ecr.us-east-2.amazonaws.com/eks-test-app:target2
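The image names used in the tag and push commands follow the ECR pattern `<account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>`. As a small sketch of how the pieces fit together (the helper `ecrImageURI` is a hypothetical name, not part of any AWS SDK), note that the region segment has to be the region where the repository was created, here us-east-2:

```go
package main

import "fmt"

// ecrImageURI builds <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>.
func ecrImageURI(accountID, region, repo, tag string) string {
	return fmt.Sprintf("%s.dkr.ecr.%s.amazonaws.com/%s:%s", accountID, region, repo, tag)
}

func main() {
	// "XXXXXXXXXXXX" is the masked account ID used throughout this post.
	for _, tag := range []string{"target1", "target2"} {
		fmt.Println(ecrImageURI("XXXXXXXXXXXX", "us-east-2", "eks-test-app", tag))
	}
}
```

The same string is what the `image:` field of each Deployment has to reference.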

Both tags should now be listed in the eks-test-app repository in ECR.

Create the manifest (test-app.yaml)

    apiVersion: v1
    kind: Namespace
    metadata:
      name: "test-app"
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: "test-app-deployment-target1"
      namespace: "test-app"
    spec:
      replicas: 2
      template:
        metadata:
          labels:
            app: "test-app-target1"
        spec:
          containers:
          # The region must match the ECR repository created above (us-east-2).
          - image: XXXXXXXXXXXX.dkr.ecr.us-east-2.amazonaws.com/eks-test-app:target1
            imagePullPolicy: Always
            name: "test-app-target1"
            ports:
            - containerPort: 8080
            env:
            # Expose the Pod name to the app through the Downward API.
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: "test-app-deployment-target2"
      namespace: "test-app"
    spec:
      replicas: 2
      template:
        metadata:
          labels:
            app: "test-app-target2"
        spec:
          containers:
          - image: XXXXXXXXXXXX.dkr.ecr.us-east-2.amazonaws.com/eks-test-app:target2
            imagePullPolicy: Always
            name: "test-app-target2"
            ports:
            - containerPort: 8080
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: "test-app-service-target1"
      namespace: "test-app"
    spec:
      ports:
      - port: 80
        targetPort: 8080
        protocol: TCP
      type: NodePort
      selector:
        app: "test-app-target1"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: "test-app-service-target2"
      namespace: "test-app"
    spec:
      ports:
      - port: 80
        targetPort: 8080
        protocol: TCP
      type: NodePort
      selector:
        app: "test-app-target2"

Deploy the application

kubectl apply -f test-app.yaml

Output

namespace/test-app created

deployment.extensions/test-app-deployment-target1 created

deployment.extensions/test-app-deployment-target2 created

service/test-app-service-target1 created

service/test-app-service-target2 created

Verify the deployment

kubectl get all --all-namespaces | grep test-app

Output

test-app pod/test-app-deployment-target1-66498868db-67zdw 1/1 Running 0 56s

test-app pod/test-app-deployment-target1-66498868db-tvfgx 1/1 Running 0 56s

test-app pod/test-app-deployment-target2-646fd4978f-282qj 1/1 Running 0 55s

test-app pod/test-app-deployment-target2-646fd4978f-xjqw7 1/1 Running 0 55s

test-app service/test-app-service-target1 NodePort 10.100.82.136 <none> 80:31922/TCP 55s

test-app service/test-app-service-target2 NodePort 10.100.208.75 <none> 80:32280/TCP 55s

test-app deployment.apps/test-app-deployment-target1 2/2 2 2 57s

test-app deployment.apps/test-app-deployment-target2 2/2 2 2 56s

test-app replicaset.apps/test-app-deployment-target1-66498868db 2 2 2 57s

test-app replicaset.apps/test-app-deployment-target2-646fd4978f 2 2 2 56s

Create the ALB

ingress.yaml

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: "ingress"
      namespace: "test-app"
      annotations:
        kubernetes.io/ingress.class: alb
        alb.ingress.kubernetes.io/scheme: internet-facing
      labels:
        app: test-app
    spec:
      rules:
      - http:
          paths:
          - path: /target1
            backend:
              serviceName: "test-app-service-target1"
              servicePort: 80
          - path: /target2
            backend:
              serviceName: "test-app-service-target2"
              servicePort: 80
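The two path rules in ingress.yaml amount to a small routing table from request path to backend Service. The following is a rough sketch of that idea, not the controller's implementation. It assumes that a path without wildcards is matched exactly, which is how ALB path-pattern conditions behave, and it reports unmatched paths as "no rule" rather than modeling the ALB default action. The `rule` type and `route` function are hypothetical names.

```go
package main

import "fmt"

// rule pairs a path with a backend Service name,
// like one entry under spec.rules[].http.paths in ingress.yaml.
type rule struct {
	path    string
	service string
}

// route returns the Service of the first rule whose path equals reqPath.
// Assumption: exact matching, since the paths contain no wildcards.
func route(rules []rule, reqPath string) (string, bool) {
	for _, r := range rules {
		if r.path == reqPath {
			return r.service, true
		}
	}
	return "", false
}

func main() {
	rules := []rule{
		{"/target1", "test-app-service-target1"},
		{"/target2", "test-app-service-target2"},
	}
	for _, p := range []string{"/target1", "/target2", "/other"} {
		if svc, ok := route(rules, p); ok {
			fmt.Printf("%s -> %s:80\n", p, svc)
		} else {
			fmt.Printf("%s -> no rule\n", p)
		}
	}
}
```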

Apply the Ingress manifest

kubectl apply -f ingress.yaml

The ALB does not seem to get created

Check the controller logs

kubectl logs -n kube-system alb-ingress-controller-5bfd896bd9-hctqk

A suspicious error

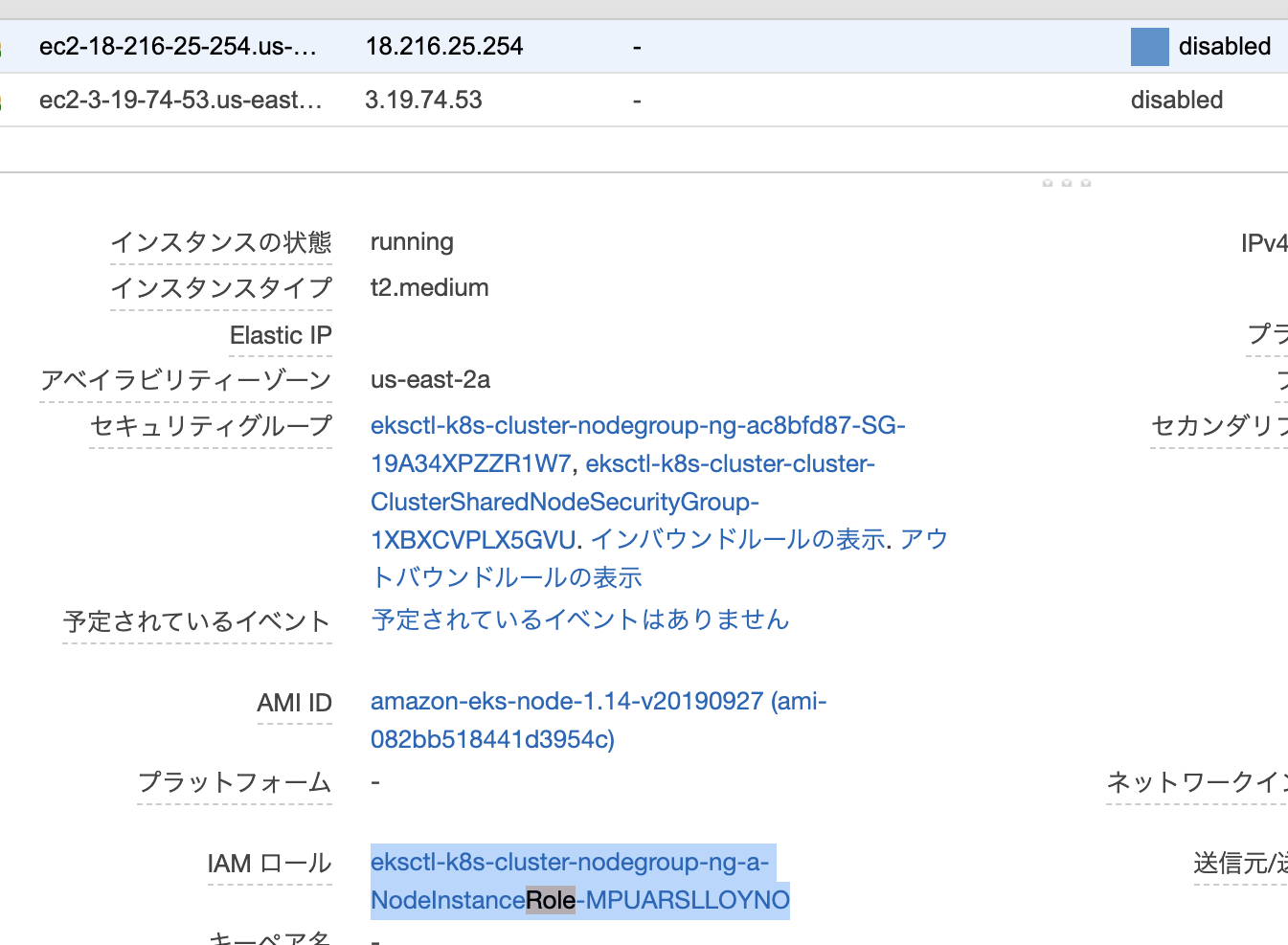

E1020 16:46:07.820806 1 :0] kubebuilder/controller "msg"="Reconciler error" "error"="failed to build LoadBalancer configuration due to failed to get AWS tags. Error: AccessDeniedException: User: arn:aws:sts::241161305159:assumed-role/eksctl-k8s-cluster-nodegroup-ng-a-NodeInstanceRole-MPUARSLLOYNO/i-0c18a25d7a5c8e53c is not authorized to perform: tag:GetResources\n\tstatus code: 400, request id: 7225892f-f803-4a10-86a3-c4769ff83a2d" "Controller"="alb-ingress-controller" "Request"={"Namespace":"test-app","Name":"ingress"}

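The node instance role is not authorized to call tag:GetResources, so create the IAM policy published with the controller and attach it to the node role.

export REGION=us-east-2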

export ALB_POLICY_ARN=$(aws iam create-policy --region=$REGION --policy-name aws-alb-ingress-controller --policy-document "https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/master/docs/examples/iam-policy.json" --query "Policy.Arn" | sed 's/"//g')

export NODE_ROLE_NAME=eksctl-k8s-cluster-nodegroup-ng-a-NodeInstanceRole-MPUARSLLOYNO

aws iam attach-role-policy --region=$REGION --role-name=$NODE_ROLE_NAME --policy-arn=$ALB_POLICY_ARN
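The value of NODE_ROLE_NAME above is the middle segment of the assumed-role ARN in the error message. As an illustration of where it comes from, here is a minimal sketch that pulls the role name out of such an ARN; `roleNameFromAssumedRoleARN` is a hypothetical helper, and it assumes the `arn:aws:sts::<account>:assumed-role/<role>/<session>` layout seen in the log.

```go
package main

import (
	"fmt"
	"strings"
)

// roleNameFromAssumedRoleARN extracts <role> from
// arn:aws:sts::<account>:assumed-role/<role>/<session>.
func roleNameFromAssumedRoleARN(arn string) (string, error) {
	parts := strings.SplitN(arn, ":", 6)
	if len(parts) != 6 {
		return "", fmt.Errorf("not an ARN: %q", arn)
	}
	res := strings.Split(parts[5], "/")
	if len(res) != 3 || res[0] != "assumed-role" {
		return "", fmt.Errorf("not an assumed-role ARN: %q", arn)
	}
	return res[1], nil
}

func main() {
	// ARN copied from the controller log above.
	arn := "arn:aws:sts::241161305159:assumed-role/eksctl-k8s-cluster-nodegroup-ng-a-NodeInstanceRole-MPUARSLLOYNO/i-0c18a25d7a5c8e53c"
	role, err := roleNameFromAssumedRoleARN(arn)
	if err != nil {
		panic(err)
	}
	fmt.Println(role)
}
```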

Redeploy the controller

kubectl delete pod alb-ingress-controller-5bfd896bd9-hctqk -n kube-system

kubectl apply -f alb-ingress-controller.yaml

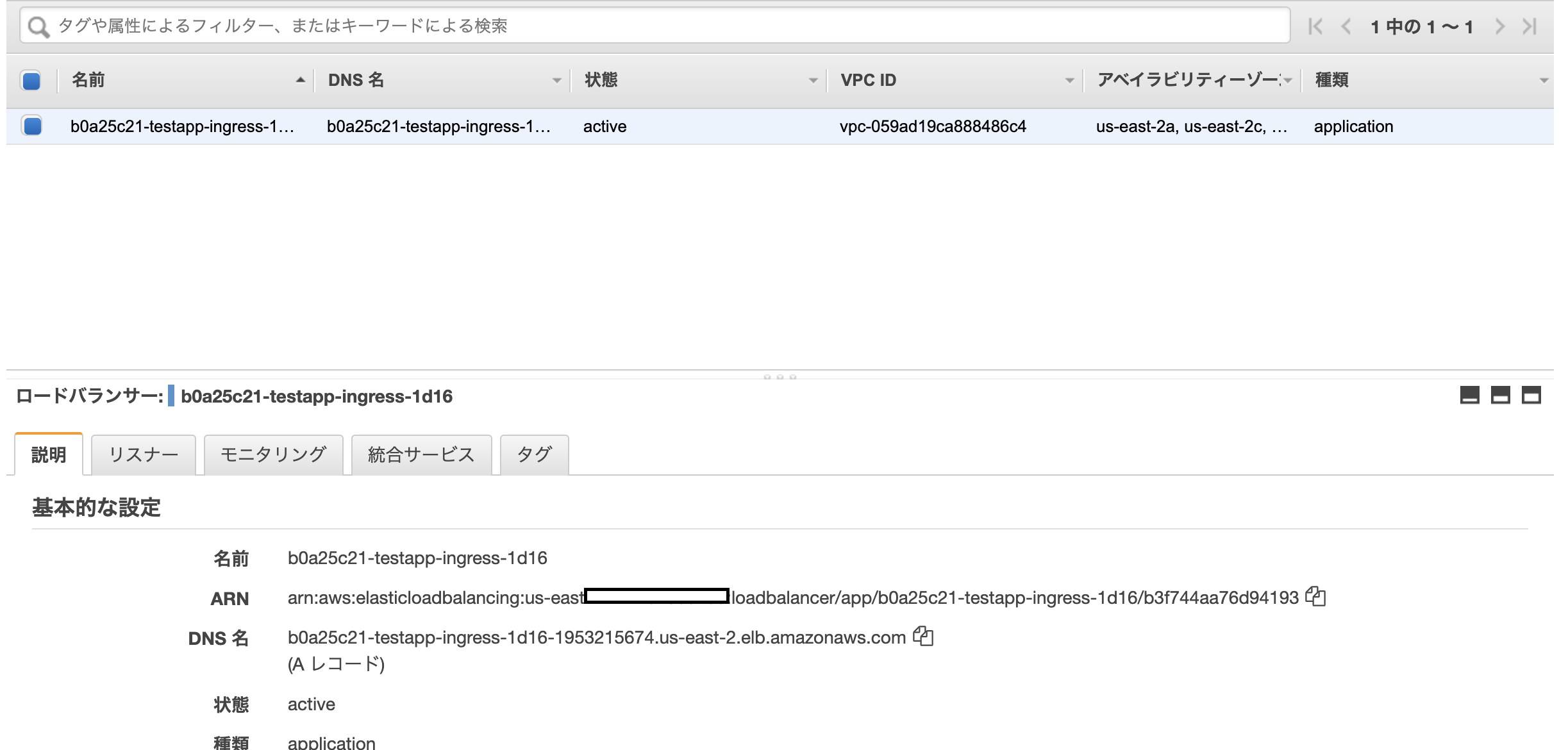

Verification

Access target1

~/D/ingress ❯❯❯ curl http://b0a25c21-testapp-ingress-1d16-1953215674.us-east-2.elb.amazonaws.com/target1

/target1:test-app-deployment-target1-66498868db-tvfgx%

~/D/ingress ❯❯❯ curl http://b0a25c21-testapp-ingress-1d16-1953215674.us-east-2.elb.amazonaws.com/target1

/target1:test-app-deployment-target1-66498868db-67zdw%

Access target2

~/D/ingress ❯❯❯ curl http://b0a25c21-testapp-ingress-1d16-1953215674.us-east-2.elb.amazonaws.com/target2

/target2:test-app-deployment-target2-646fd4978f-xjqw7%

~/D/ingress ❯❯❯ curl http://b0a25c21-testapp-ingress-1d16-1953215674.us-east-2.elb.amazonaws.com/target2

/target2:test-app-deployment-target2-646fd4978f-282qj%