Sample code: tf.stop_gradient()

    import tensorflow as tf  # TensorFlow 1.x API

    tf.reset_default_graph()

    with tf.variable_scope('test'):
        # Scalar variable; constant_initializer() defaults to 0
        x = tf.get_variable('x', shape=(), initializer=tf.constant_initializer())
        a = 0.5 * x
        # Treat a as a constant when differentiating; remove this line for the
        # "without stop gradient" case
        a = tf.stop_gradient(a)
        f = (x - a - 2.0) ** 2

    opt = tf.train.GradientDescentOptimizer(learning_rate=0.1).minimize(f)

    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        print('initial')
        print(sess.run(x))
        print(sess.run(f))

        sess.run(opt)
        print('-' * 10)
        print('1st operation')
        print(sess.run(x))
        print(sess.run(f))

        sess.run(opt)
        print('-' * 10)
        print('2nd operation')
        print(sess.run(x))
        print(sess.run(f))

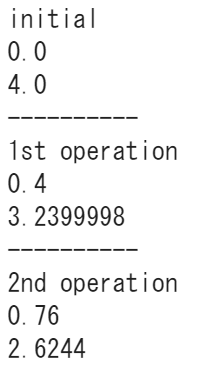

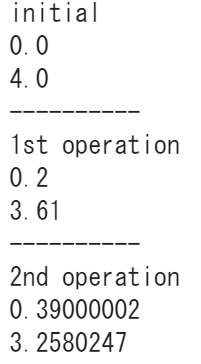

Output results

1. with stop gradient
2. without stop gradient
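The two cases can also be checked by hand. The following is a minimal sketch in plain Python (no TensorFlow), assuming the gradients written in the comments; the helper name `descend` is hypothetical and not part of the original sample.

```python
def descend(stop_gradient, steps=2, lr=0.1):
    """Gradient descent on f = (x - a - 2)^2 with a = 0.5 * x, starting at x = 0."""
    x = 0.0
    history = []
    for _ in range(steps):
        a = 0.5 * x
        if stop_gradient:
            # a is treated as a constant: df/dx = 2 * (x - a - 2)
            grad = 2.0 * (x - a - 2.0)
        else:
            # a depends on x: f = (0.5 * x - 2)^2, so df/dx = 0.5 * x - 2
            grad = 0.5 * x - 2.0
        x -= lr * grad
        f = (x - 0.5 * x - 2.0) ** 2  # loss re-evaluated at the updated x
        history.append((x, f))
    return history


for label, flag in (('with stop gradient', True), ('without stop gradient', False)):
    for step, (x, f) in enumerate(descend(flag), start=1):
        print('{}: step {}, x = {:.4f}, f = {:.4f}'.format(label, step, x, f))
```

Under these assumptions x moves 0 → 0.4 → 0.76 with the stopped gradient and 0 → 0.2 → 0.39 without it, because the stopped version ignores the factor 0.5 that `a` contributes to the derivative.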

Sample code: tf.clip_by_value()

    import tensorflow as tf  # TensorFlow 1.x API

    tf.reset_default_graph()

    with tf.variable_scope('test_1'):
        init_const = tf.constant_initializer(value=0.0, dtype=tf.float32)
        x = tf.get_variable('x', shape=[], initializer=init_const)
        y = tf.get_variable('y', shape=[], initializer=init_const)
        # y is clipped to [0.0, 2.0] before it enters the loss
        y_clipped = tf.clip_by_value(y, 0.0, 2.0)
        f = (x - 2.0)**2 + (y_clipped - 3)**2

    # Collect the trainable variables created under the 'test_1' scope
    t_vars = tf.trainable_variables('test_1')
    opt = tf.train.GradientDescentOptimizer(0.3).minimize(f, var_list=t_vars)

    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        print('initial')
        print('x: {:.4f}'.format(sess.run(x)))
        print('y: {:.4f}'.format(sess.run(y)))
        print('y_clipped: {:.4f}'.format(sess.run(y_clipped)))
        print('f: {:.4f}'.format(sess.run(f)))

        for i in range(5):
            sess.run(opt)
            print('-' * 15)
            print('iteration: {}'.format(i + 1))
            print('x: {:.4f}'.format(sess.run(x)))
            print('y: {:.4f}'.format(sess.run(y)))
            print('y_clipped: {:.4f}'.format(sess.run(y_clipped)))
            print('f: {:.4f}'.format(sess.run(f)))

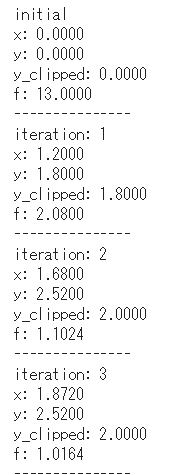

Output results
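To see why `y` stops moving once it leaves the clipping range, the same loop can be traced in plain Python. This is a rough sketch, not TensorFlow code: it assumes the gradient reaches `y` only while `y` lies inside or on the bounds and is zero outside them, and the names `clip` and `run_clipped_descent` are hypothetical.

```python
def clip(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(value, high))


def run_clipped_descent(steps=5, lr=0.3, low=0.0, high=2.0):
    """Minimize f = (x - 2)^2 + (clip(y) - 3)^2 starting from x = y = 0."""
    x, y = 0.0, 0.0
    trace = []
    for _ in range(steps):
        y_clipped = clip(y, low, high)
        grad_x = 2.0 * (x - 2.0)
        # Assumption: the clip passes the gradient only while low <= y <= high
        grad_y = 2.0 * (y_clipped - 3.0) if low <= y <= high else 0.0
        x -= lr * grad_x
        y -= lr * grad_y
        trace.append((x, y, clip(y, low, high)))
    return trace


for i, (x, y, y_clipped) in enumerate(run_clipped_descent(), start=1):
    f = (x - 2.0) ** 2 + (y_clipped - 3.0) ** 2
    print('iteration {}: x = {:.4f}, y = {:.4f}, y_clipped = {:.4f}, f = {:.4f}'.format(
        i, x, y, y_clipped, f))
```

In this sketch `y` jumps to 1.8, then overshoots to 2.52 and stays there, since the clipped value 2.0 no longer depends on `y`. The loss therefore cannot fall below (2 - 3)^2 = 1 while `x` keeps converging toward 2.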

Sample code: tf.trainable_variables()

    import tensorflow as tf  # TensorFlow 1.x API

    tf.reset_default_graph()

    with tf.variable_scope('var_1'):
        init_const = tf.constant_initializer(value=0.0, dtype=tf.float32)
        x = tf.get_variable('x', shape=[], initializer=init_const)

    with tf.variable_scope('var_2'):
        init_const = tf.constant_initializer(value=0.0, dtype=tf.float32)
        y = tf.get_variable('y', shape=[], initializer=init_const)

    f = (x - 2.0)**2 + (y - 3)**2

    # Select trainable variables by scope name
    t_vars_1 = tf.trainable_variables('var_1')
    t_vars_2 = tf.trainable_variables('var_2')
    t_vars_all = t_vars_1 + t_vars_2

    # Only the variables in var_list are updated; pass t_vars_all to update both
    opt = tf.train.GradientDescentOptimizer(0.3).minimize(f, var_list=t_vars_1)

    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        print('initial')
        print('x: {:.4f}'.format(sess.run(x)))
        print('y: {:.4f}'.format(sess.run(y)))
        print('f: {:.4f}'.format(sess.run(f)))

        for i in range(5):
            sess.run(opt)
            print('-' * 15)
            print('iteration: {}'.format(i + 1))
            print('x: {:.4f}'.format(sess.run(x)))
            print('y: {:.4f}'.format(sess.run(y)))
            print('f: {:.4f}'.format(sess.run(f)))

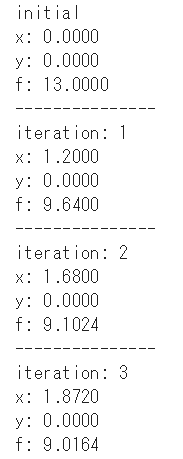

Output results

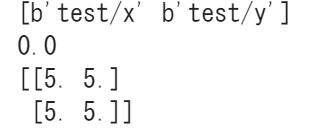
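The effect of restricting `var_list` can be mimicked without TensorFlow. The sketch below is only an illustration: the function `minimize_subset` and the dictionary layout are hypothetical, and the gradients are written out by hand for f = (x - 2)^2 + (y - 3)^2.

```python
def minimize_subset(values, var_list, steps=5, lr=0.3):
    """Gradient descent on f = (x - 2)^2 + (y - 3)^2, updating only names in var_list."""
    targets = {'x': 2.0, 'y': 3.0}
    values = dict(values)  # work on a copy so the caller's dict is unchanged
    for _ in range(steps):
        # Compute gradients only for the selected variables
        grads = {name: 2.0 * (values[name] - targets[name]) for name in var_list}
        for name, grad in grads.items():
            values[name] -= lr * grad
    return values


start = {'x': 0.0, 'y': 0.0}
print(minimize_subset(start, ['x']))       # y is left at its initial value
print(minimize_subset(start, ['x', 'y']))  # both variables move toward the minimum
```

With only `'x'` selected, `y` stays at 0 and the loss cannot go below (0 - 3)^2 = 9, which is the behaviour the `var_list = t_vars_1` setting is meant to demonstrate.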

Sample code: tf.variables_initializer()

    import tensorflow as tf  # TensorFlow 1.x API

    tf.reset_default_graph()

    with tf.variable_scope('test'):
        init_const_1 = tf.constant_initializer(value=0.0, dtype=tf.float32)
        init_const_2 = tf.constant_initializer(value=5.0, dtype=tf.float32)
        x = tf.get_variable('x', shape=[], initializer=init_const_1)
        y = tf.get_variable('y', shape=[2, 2], initializer=init_const_2)

    with tf.Session() as sess:
        # List the variables that have not been initialized in this session
        u_vars = tf.report_uninitialized_variables()
        print(sess.run(u_vars))

        # Initialize the variables in the 'test' scope one at a time
        test_vars = tf.trainable_variables('test')
        for var in test_vars:
            sess.run(tf.variables_initializer([var]))

        print(sess.run(x))
        print(sess.run(y))
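The bookkeeping behind this sample can be pictured with a toy model in plain Python. This is not how TensorFlow is implemented; `ToySession` and its methods are hypothetical names used only to illustrate that a variable has no value in a session until an initializer for it has run.

```python
class ToySession:
    """Hypothetical stand-in that only tracks which variables hold a value."""

    def __init__(self, initial_values):
        self._initial_values = dict(initial_values)
        self._values = {}

    def report_uninitialized(self):
        # Names of variables that have not been given a value yet
        return [name for name in self._initial_values if name not in self._values]

    def initialize(self, names):
        # Assign the initial value to the listed variables only
        for name in names:
            self._values[name] = self._initial_values[name]

    def read(self, name):
        if name not in self._values:
            raise RuntimeError('variable {} is uninitialized'.format(name))
        return self._values[name]


sess = ToySession({'test/x': 0.0, 'test/y': [[5.0, 5.0], [5.0, 5.0]]})
print(sess.report_uninitialized())  # both names are listed before initialization
for name in ['test/x', 'test/y']:
    sess.initialize([name])         # one variable at a time, as in the sample
print(sess.report_uninitialized())  # nothing left to initialize
print(sess.read('test/x'))
print(sess.read('test/y'))
```

Initializing variables one by one, or a chosen subset at once, is useful when some variables already hold values that should not be reset.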