Introduction

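In my CornerNet-Lite article I said I would cover CenterNet next, but I then got sidetracked by the semantic segmentation networks LEDNet and BiSeNet, so some time has passed. Still, for the sake of my own memory I think CenterNet (and, if possible, LEDNet and BiSeNet too) deserves a write-up, so here it is.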

1.What is CenterNet?

This is the "Objects as Points" one, not "CenterNet: Keypoint Triplets for Object Detection". The two papers appeared one day apart, so the name clash was unlucky for both. (It confused me too.)

Rather than have me give an off-target explanation of CenterNet, please see the DL reading group (DL輪講会) slides on SlideShare and then go to the original paper.

As with CornerNet, the crux is how to output the single most plausible box without the anchor boxes and NMS that conventional methods rely on so heavily.
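To make that idea a little more concrete, here is a toy sketch (not the authors' code). As far as I understand the paper, each object is represented by a peak in a per-class heatmap, and a cell is kept as a center when none of its 8 neighbors scores higher, which is what takes the place of NMS. The name `find_peaks`, the threshold value, and the 4x4 heatmap are all hypothetical and exist only for illustration.

```python
def find_peaks(heatmap, threshold=0.3):
    """Return (row, col, score) for cells that are local maxima of a 2D heatmap.

    A cell counts as a peak when its score is at least `threshold` and no
    8-connected neighbour has a higher score.
    """
    height = len(heatmap)
    width = len(heatmap[0]) if height else 0
    peaks = []
    for y in range(height):
        for x in range(width):
            score = heatmap[y][x]
            if score < threshold:
                continue
            # Collect the up-to-8 neighbours, clipping at the heatmap border.
            neighbours = [
                heatmap[ny][nx]
                for ny in range(max(0, y - 1), min(height, y + 2))
                for nx in range(max(0, x - 1), min(width, x + 2))
                if (ny, nx) != (y, x)
            ]
            if all(score >= n for n in neighbours):
                peaks.append((y, x, score))
    # Highest-scoring centres first.
    peaks.sort(key=lambda p: p[2], reverse=True)
    return peaks


# Hypothetical heatmap for one class: two objects, centred at (1, 1) and (2, 3).
toy_heatmap = [
    [0.0, 0.1, 0.0, 0.0],
    [0.1, 0.9, 0.2, 0.0],
    [0.0, 0.2, 0.1, 0.6],
    [0.0, 0.0, 0.1, 0.2],
]
peaks = find_peaks(toy_heatmap)
```

In the real network the box size would then be read from a separate regression output at each peak location; this sketch stops at finding the centers.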

Now let's clone the repository from GitHub and run inference.

2.Installation

The paper authors' GitHub repository has an INSTALL.md, so let's follow it.

2.0.Creating the virtual environment

(base) F:\Users\sounansu\Anaconda3>conda create --name CenterNet python=3.6

Collecting package metadata (current_repodata.json): done

Solving environment: done

## Package Plan ##

environment location: F:\Users\sounansu\Anaconda3\envs\CenterNet

added / updated specs:

- python=3.6

The following packages will be downloaded:

package | build

---------------------------|-----------------

certifi-2019.6.16 | py36_0 151 KB

pip-19.1.1 | py36_0 1.6 MB

python-3.6.8 | h9f7ef89_7 15.9 MB

setuptools-41.0.1 | py36_0 521 KB

sqlite-3.28.0 | he774522_0 616 KB

vc-14.1 | h0510ff6_4 6 KB

vs2015_runtime-14.15.26706 | h3a45250_4 1.1 MB

wheel-0.33.4 | py36_0 57 KB

wincertstore-0.2 | py36h7fe50ca_0 14 KB

------------------------------------------------------------

Total: 20.0 MB

The following NEW packages will be INSTALLED:

certifi pkgs/main/win-64::certifi-2019.6.16-py36_0

pip pkgs/main/win-64::pip-19.1.1-py36_0

python pkgs/main/win-64::python-3.6.8-h9f7ef89_7

setuptools pkgs/main/win-64::setuptools-41.0.1-py36_0

sqlite pkgs/main/win-64::sqlite-3.28.0-he774522_0

vc pkgs/main/win-64::vc-14.1-h0510ff6_4

vs2015_runtime pkgs/main/win-64::vs2015_runtime-14.15.26706-h3a45250_4

wheel pkgs/main/win-64::wheel-0.33.4-py36_0

wincertstore pkgs/main/win-64::wincertstore-0.2-py36h7fe50ca_0

Proceed ([y]/n)? y

Downloading and Extracting Packages

pip-19.1.1 | 1.6 MB | ############################################################ | 100%

vs2015_runtime-14.15 | 1.1 MB | ############################################################ | 100%

python-3.6.8 | 15.9 MB | ############################################################ | 100%

sqlite-3.28.0 | 616 KB | ############################################################ | 100%

wheel-0.33.4 | 57 KB | ############################################################ | 100%

setuptools-41.0.1 | 521 KB | ############################################################ | 100%

certifi-2019.6.16 | 151 KB | ############################################################ | 100%

vc-14.1 | 6 KB | ############################################################ | 100%

wincertstore-0.2 | 14 KB | ############################################################ | 100%

Preparing transaction: done

Verifying transaction: done

Executing transaction: done

#

# To activate this environment, use

#

# $ conda activate CenterNet

#

# To deactivate an active environment, use

#

# $ conda deactivate

(base) F:\Users\sounansu\Anaconda3>conda activate CenterNet

2.1.Installing PyTorch 0.4.1

Here I install PyTorch with version 0.4.1 specified, but...

(CenterNet) F:\Users\sounansu\Anaconda3>conda install pytorch=0.4.1 torchvision -c pytorch

(output omitted)

done

(CenterNet) F:\Users\sounansu\Anaconda3>

Next, for the step

And disable cudnn batch normalization

INSTALL.md gives the following:

# PYTORCH=/path/to/pytorch # usually ~/anaconda3/envs/CenterNet/lib/python3.6/site-packages/

# for pytorch v0.4.1

sed -i "1254s/torch\.backends\.cudnn\.enabled/False/g" ${PYTORCH}/torch/nn/functional.py

In my Windows environment, however, functional.py was located at

F:\Users\sounansu\Anaconda3\envs\CenterNet\Lib\site-packages\torch\nn\functional.py

and the target was line 1254. (It seems that by this point I already had PyTorch 1.1 rather than PyTorch 0.4.1.)
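Since `sed` is not normally available in a Windows command prompt, the same one-line substitution can be sketched in Python. This is only an illustration: `replace_on_line` is a hypothetical helper, and the line number 1254 applies to PyTorch 0.4.1 as stated in INSTALL.md, so it should be checked against whichever version is actually installed.

```python
import re


def replace_on_line(path, line_number, pattern, replacement):
    """Apply a regex substitution to one 1-indexed line of a text file, in place.

    Returns True if the line changed, False if the pattern was not found there.
    """
    # newline="" keeps the file's original line endings untouched.
    with open(path, encoding="utf-8", newline="") as f:
        lines = f.readlines()
    if not 1 <= line_number <= len(lines):
        raise ValueError("file has only %d lines" % len(lines))
    original = lines[line_number - 1]
    updated = re.sub(pattern, replacement, original)
    if updated == original:
        return False
    lines[line_number - 1] = updated
    with open(path, "w", encoding="utf-8", newline="") as f:
        f.writelines(lines)
    return True


# Example call (path taken from this article; adjust it to your environment):
# replace_on_line(
#     r"F:\Users\sounansu\Anaconda3\envs\CenterNet\Lib\site-packages\torch\nn\functional.py",
#     1254, r"torch\.backends\.cudnn\.enabled", "False")
```

A return value of False is a useful warning sign: it means the expected text was not on that line, which is what one would expect if the installed PyTorch is not the version the line number was written for.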

2.2.Installing the COCO API

Next comes the COCO API. I want to put it inside the CenterNet directory, so I clone CenterNet first. (git was not installed, so I ran conda install git beforehand.)

2.3.Cloning

(CenterNet) F:\Users\sounansu\Anaconda3>git clone https://github.com/xingyizhou/CenterNet CenterNet

Cloning into 'CenterNet'...

remote: Enumerating objects: 311, done.

remote: Total 311 (delta 0), reused 0 (delta 0), pack-reused 311

Receiving objects: 100% (311/311), 6.25 MiB | 625.00 KiB/s, done.

Resolving deltas: 100% (120/120), done.

(CenterNet) F:\Users\sounansu\Anaconda3>

Now, back to installing the COCO API:

(CenterNet) F:\Users\sounansu\Anaconda3>cd CenterNet

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet>git clone https://github.com/cocodataset/cocoapi.git

Cloning into 'cocoapi'...

remote: Enumerating objects: 953, done.

remote: Total 953 (delta 0), reused 0 (delta 0), pack-reused 953

Receiving objects: 100% (953/953), 11.70 MiB | 1.74 MiB/s, done.

Resolving deltas: 100% (565/565), done.

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet>cd cocoapi\PythonAPI

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\cocoapi\PythonAPI>make

'make' is not recognized as an internal or external command,

operable program or batch file.

Trying to get make with conda install make fails because the package is not in the channels, so I looked inside the Makefile instead:

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\cocoapi\PythonAPI>type Makefile

all:

# install pycocotools locally

python setup.py build_ext --inplace

rm -rf build

install:

# install pycocotools to the Python site-packages

python setup.py build_ext install

rm -rf build

The all: target is the one I want, so:

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\cocoapi\PythonAPI>python setup.py build_ext --inplace

running build_ext

cythoning pycocotools/_mask.pyx to pycocotools\_mask.c

f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages\Cython\Compiler\Main.py:367: FutureWarning: Cython directive 'language_level' not set, using 2 for now (Py2). This will change in a later release! File: F:\Users\sounansu\Anaconda3\CenterNet\cocoapi\PythonAPI\pycocotools\_mask.pyx

tree = Parsing.p_module(s, pxd, full_module_name)

building 'pycocotools._mask' extension

creating build

creating build\temp.win-amd64-3.6

creating build\temp.win-amd64-3.6\common

creating build\temp.win-amd64-3.6\Release

creating build\temp.win-amd64-3.6\Release\pycocotools

C:\Program Files (x86)\Microsoft Visual Studio\2017\Community\VC\Tools\MSVC\14.16.27023\bin\HostX86\x64\cl.exe /c /nologo /Ox /W3 /GL /DNDEBUG /MD -If:\users\sounansu\anaconda3\envs\centernet\lib\site-packages\numpy\core\include -I../common -IF:\Users\sounansu\Anaconda3\envs\CenterNet\include -IF:\Users\sounansu\Anaconda3\envs\CenterNet\include "-IC:\Program Files (x86)\Microsoft Visual Studio\2017\Community\VC\Tools\MSVC\14.16.27023\ATLMFC\include" "-IC:\Program Files (x86)\Microsoft Visual Studio\2017\Community\VC\Tools\MSVC\14.16.27023\include" "-IC:\Program Files (x86)\Windows Kits\NETFXSDK\4.6.1\include\um" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.17763.0\ucrt" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.17763.0\shared" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.17763.0\um" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.17763.0\winrt" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.17763.0\cppwinrt" "-IC:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0\include\include" /Tc../common/maskApi.c /Fobuild\temp.win-amd64-3.6\Release\../common/maskApi.obj -Wno-cpp -Wno-unused-function -std=c99

cl : Command line error D8021 : invalid numeric argument '/Wno-cpp'

error: command 'C:\\Program Files (x86)\\Microsoft Visual Studio\\2017\\Community\\VC\\Tools\\MSVC\\14.16.27023\\bin\\HostX86\\x64\\cl.exe' failed with exit status 2

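This produces a familiar error (invalid argument `/Wno-cpp`). In fact it is exactly the situation from "1. Installing the MS COCO APIs" in my article "Training CornerNet-Lite on Windows 10 (finally training)", so I applied this change: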

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\cocoapi\PythonAPI>git diff

diff --git a/PythonAPI/setup.py b/PythonAPI/setup.py

index dbf0093..cd37940 100644

--- a/PythonAPI/setup.py

+++ b/PythonAPI/setup.py

@@ -9,7 +9,7 @@ ext_modules = [

'pycocotools._mask',

sources=['../common/maskApi.c', 'pycocotools/_mask.pyx'],

include_dirs = [np.get_include(), '../common'],

- extra_compile_args=['-Wno-cpp', '-Wno-unused-function', '-std=c99'],

+ extra_compile_args={'gcc': ['/Qstd=c99']},

)

]

This is the same change I made for CornerNet-Lite.

Then, once more:

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\cocoapi\PythonAPI>python setup.py build_ext install

(output omitted)

Finished processing dependencies for pycocotools==2.0

The COCO API installed successfully.
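The setup.py edit above hard-codes a Windows-only value. A gentler variant, sketched here under the assumption that the Windows build uses MSVC (as in the log above, where cl.exe rejected the GCC-style flags), is to choose the flags by platform so the same file still builds on Linux. `extra_compile_args_for` is a hypothetical helper, not something that exists in cocoapi.

```python
import sys


def extra_compile_args_for(platform=None):
    """Pick C compiler flags for a given sys.platform value."""
    if platform is None:
        platform = sys.platform
    if platform.startswith("win"):
        # MSVC's cl.exe does not understand GCC-style flags such as -Wno-cpp,
        # so pass no extra flags at all on Windows.
        return []
    # Flags from the original cocoapi setup.py, meant for GCC/Clang.
    return ["-Wno-cpp", "-Wno-unused-function", "-std=c99"]


# In setup.py this would be used as:
#   Extension(..., extra_compile_args=extra_compile_args_for())
```

The same idea would apply to any other setup.py that passes `-Wno-cpp` unconditionally.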

2.4.Installing the requirements

Because I reversed the order relative to INSTALL.md, the next step is installing requirements.txt.

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet>pip install -r requirements.txt

Requirement already satisfied: opencv-python in f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages (from -r requirements.txt (line 1)) (4.1.0.25)

Requirement already satisfied: Cython in f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages (from -r requirements.txt (line 2)) (0.29.7)

Requirement already satisfied: numba in f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages (from -r requirements.txt (line 3)) (0.43.1)

Requirement already satisfied: progress in f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages (from -r requirements.txt (line 4)) (1.5)

Requirement already satisfied: matplotlib in f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages (from -r requirements.txt (line 5)) (3.1.0)

Collecting easydict (from -r requirements.txt (line 6))

Downloading https://files.pythonhosted.org/packages/4c/c5/5757886c4f538c1b3f95f6745499a24bffa389a805dee92d093e2d9ba7db/easydict-1.9.tar.gz

Collecting scipy (from -r requirements.txt (line 7))

Using cached https://files.pythonhosted.org/packages/9e/fd/9a995b7fc18c6c17ce570b3cfdabffbd2718e4f1830e94777c4fd66e1179/scipy-1.3.0-cp36-cp36m-win_amd64.whl

Requirement already satisfied: numpy>=1.11.3 in f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages (from opencv-python->-r requirements.txt (line 1)) (1.16.3)

Requirement already satisfied: llvmlite>=0.28.0dev0 in f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages (from numba->-r requirements.txt (line 3)) (0.28.0)

Requirement already satisfied: pyparsing!=2.0.4,!=2.1.2,!=2.1.6,>=2.0.1 in f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages (from matplotlib->-r requirements.txt (line 5)) (2.4.0)

Requirement already satisfied: python-dateutil>=2.1 in f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages (from matplotlib->-r requirements.txt (line 5)) (2.8.0)

Requirement already satisfied: cycler>=0.10 in f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages (from matplotlib->-r requirements.txt (line 5)) (0.10.0)

Requirement already satisfied: kiwisolver>=1.0.1 in f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages (from matplotlib->-r requirements.txt (line 5)) (1.1.0)

Requirement already satisfied: six>=1.5 in f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages (from python-dateutil>=2.1->matplotlib->-r requirements.txt (line 5)) (1.12.0)

Requirement already satisfied: setuptools in f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages (from kiwisolver>=1.0.1->matplotlib->-r requirements.txt (line 5)) (41.0.1)

Building wheels for collected packages: easydict

Building wheel for easydict (setup.py) ... done

Stored in directory: C:\Users\sounansu\AppData\Local\pip\Cache\wheels\9a\88\ec\085d92753646b0eda1b7df49c7afe51a6ecc496556d3012e2e

Successfully built easydict

Installing collected packages: easydict, scipy

Successfully installed easydict-1.9 scipy-1.3.0

2.5.Installing deformable convolution (DCNv2)

Next is the DCNv2 installation. Running make.sh opened a separate window and I could not tell what was going on, so I ran the commands in make.sh one at a time.

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks\DCNv2>type make.sh

# !/usr/bin/env bash

cd src/cuda

# compile dcn

nvcc -c -o dcn_v2_im2col_cuda.cu.o dcn_v2_im2col_cuda.cu -x cu -Xcompiler -fPIC

nvcc -c -o dcn_v2_im2col_cuda_double.cu.o dcn_v2_im2col_cuda_double.cu -x cu -Xcompiler -fPIC

# compile dcn-roi-pooling

nvcc -c -o dcn_v2_psroi_pooling_cuda.cu.o dcn_v2_psroi_pooling_cuda.cu -x cu -Xcompiler -fPIC

nvcc -c -o dcn_v2_psroi_pooling_cuda_double.cu.o dcn_v2_psroi_pooling_cuda_double.cu -x cu -Xcompiler -fPIC

cd -

python build.py

python build_double.py

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks\DCNv2>cd src\cuda

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks\DCNv2\src\cuda>nvcc -c -o dcn_v2_im2col_cuda.cu.o dcn_v2_im2col_cuda.cu -x cu -Xcompiler -fPIC

(output omitted)

F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks\DCNv2\src\cuda>nvcc -c -o dcn_v2_im2col_cuda_double.cu.o dcn_v2_im2col_cuda_double.cu -x cu -Xcompiler -fPIC

(output omitted)

F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks\DCNv2\src\cuda>nvcc -c -o dcn_v2_psroi_pooling_cuda.cu.o dcn_v2_psroi_pooling_cuda.cu -x cu -Xcompiler -fPIC

(output omitted)

F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks\DCNv2\src\cuda>nvcc -c -o dcn_v2_psroi_pooling_cuda_double.cu.o dcn_v2_psroi_pooling_cuda_double.cu -x cu -Xcompiler -fPIC

(output omitted)

F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks\DCNv2\src\cuda>cd ..\..\

F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks\DCNv2>python build.py

Traceback (most recent call last):

File "build.py", line 3, in <module>

from torch.utils.ffi import create_extension

File "f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages\torch\utils\ffi\__init__.py", line 1, in <module>

raise ImportError("torch.utils.ffi is deprecated. Please use cpp extensions instead.")

ImportError: torch.utils.ffi is deprecated. Please use cpp extensions instead.

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks\DCNv2>

Oh dear, an error. According to the issues, this error appears when the PyTorch version is too new, and in that case you are told to fetch a newer DCNv2. I thought I had properly installed PyTorch 0.4.1, though...

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks\DCNv2>python

Python 3.6.8 |Anaconda, Inc.| (default, Feb 21 2019, 18:30:04) [MSC v.1916 64 bit (AMD64)] on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> import torch

>>> print(torch.__version__)

1.1.0

>>>

When did that happen...?

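With PyTorch 1.1, as described in issue 7, it seems you have to fetch DCNv2 from its original repository. (If I had simply pointed to issue 7 from the start, this article would not be needed...)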
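The surprise above could have been caught earlier by checking the installed version before building. The sketch below assumes, consistent with the ImportError shown above and as far as I know, that `torch.utils.ffi` only works on PyTorch releases before 1.0. `parse_version` and `uses_legacy_ffi` are hypothetical helper names.

```python
def parse_version(version):
    """Turn '1.1.0' or '0.4.1.post2' into a tuple of its leading integers."""
    parts = []
    # Drop any local build suffix such as '+cu100' before splitting.
    for piece in version.split("+")[0].split("."):
        if not piece.isdigit():
            break
        parts.append(int(piece))
    return tuple(parts)


def uses_legacy_ffi(torch_version):
    """True if this PyTorch version predates 1.0 (old torch.utils.ffi build path)."""
    return parse_version(torch_version) < (1, 0)


# Typical use, with PyTorch installed:
#   import torch
#   if not uses_legacy_ffi(torch.__version__):
#       print("Bundled DCNv2 will not build; fetch the upstream DCNv2 instead.")
```

Running `conda list pytorch` right after the install step would reveal the same thing without any code.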

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet>cd src\lib\models\networks

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks>mv DCNv2 DCNv2_old

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks>git clone https://github.com/CharlesShang/DCNv2

Cloning into 'DCNv2'...

remote: Enumerating objects: 177, done.

remote: Total 177 (delta 0), reused 0 (delta 0), pack-reused 177

Receiving objects: 100% (177/177), 1.39 MiB | 278.00 KiB/s, done.

Resolving deltas: 100% (106/106), done.

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks>

Here I modified the file as described in issue 7:

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks>cd DCNv2

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks\DCNv2>git diff

diff --git a/src/cuda/dcn_v2_cuda.cu b/src/cuda/dcn_v2_cuda.cu

index 767ed8f..5a6f7f4 100644

--- a/src/cuda/dcn_v2_cuda.cu

+++ b/src/cuda/dcn_v2_cuda.cu

@@ -8,7 +8,8 @@

#include <THC/THCAtomics.cuh>

#include <THC/THCDeviceUtils.cuh>

-extern THCState *state;

+//extern THCState *state;

+THCState *state = at::globalContext().lazyInitCUDA();

// author: Charles Shang

// https://github.com/torch/cunn/blob/master/lib/THCUNN/generic/SpatialConvolutionMM.cu

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src\lib\models\networks\DCNv2>python setup.py build develop

This prints an enormous amount of log output, but it appears to have worked.

(Skipping 2.6.)

2.7.Getting the pretrained weights

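The pretrained weights are linked from MODEL_ZOO and appear to be stored on Google Drive, so you can download whichever ones you like. In my case, fetching them one at a time was a hassle, so I downloaded all of them.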

That completes the preparation.

3.Let's get started

I have already downloaded the MS COCO and PASCAL VOC data. If you have not, download them by following Dataset preparation.

3.1.Benchmark evaluation

Let's try the COCO entry from Benchmark evaluation.

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src>cd

F:\Users\sounansu\Anaconda3\CenterNet\src

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src>python test.py ctdet --exp_id coco_dla --keep_res --load_model ..\models\ctdet_coco_dla_2x.pth

Traceback (most recent call last):

File "test.py", line 15, in <module>

from external.nms import soft_nms

ModuleNotFoundError: No module named 'external.nms'

I am fairly sure compiling nms was described as optional, though...

Let's compile it by following "1. build nms" in issue 7.

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src>cd lib\external

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src\lib\external>python setup.py build_ext --inplace

Compiling nms.pyx because it changed.

[1/1] Cythonizing nms.pyx

f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages\Cython\Compiler\Main.py:367: FutureWarning: Cython directive 'language_level' not set, using 2 for now (Py2). This will change in a later release! File: F:\Users\sounansu\Anaconda3\CenterNet\src\lib\external\nms.pyx

tree = Parsing.p_module(s, pxd, full_module_name)

running build_ext

building 'nms' extension

creating build

creating build\temp.win-amd64-3.6

creating build\temp.win-amd64-3.6\Release

C:\Program Files (x86)\Microsoft Visual Studio\2017\Community\VC\Tools\MSVC\14.16.27023\bin\HostX86\x64\cl.exe /c /nologo /Ox /W3 /GL /DNDEBUG /MD -If:\users\sounansu\anaconda3\envs\centernet\lib\site-packages\numpy\core\include -IF:\Users\sounansu\Anaconda3\envs\CenterNet\include -IF:\Users\sounansu\Anaconda3\envs\CenterNet\include "-IC:\Program Files (x86)\Microsoft Visual Studio\2017\Community\VC\Tools\MSVC\14.16.27023\ATLMFC\include" "-IC:\Program Files (x86)\Microsoft Visual Studio\2017\Community\VC\Tools\MSVC\14.16.27023\include" "-IC:\Program Files (x86)\Windows Kits\NETFXSDK\4.6.1\include\um" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.17763.0\ucrt" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.17763.0\shared" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.17763.0\um" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.17763.0\winrt" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.17763.0\cppwinrt" "-IC:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0\include\include" /Tcnms.c /Fobuild\temp.win-amd64-3.6\Release\nms.obj -Wno-cpp -Wno-unused-function

cl : Command line error D8021 : invalid numeric argument '/Wno-cpp'

error: command 'C:\\Program Files (x86)\\Microsoft Visual Studio\\2017\\Community\\VC\\Tools\\MSVC\\14.16.27023\\bin\\HostX86\\x64\\cl.exe' failed with exit status 2

Well, this is an error you see often when working on Windows. As issue 7 suggests, comment out extra_compile_args.

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src\lib\external>git diff setup.py

diff --git a/src/lib/external/setup.py b/src/lib/external/setup.py

index c4d2571..3b140cb 100644

--- a/src/lib/external/setup.py

+++ b/src/lib/external/setup.py

@@ -7,7 +7,7 @@ extensions = [

Extension(

"nms",

["nms.pyx"],

- extra_compile_args=["-Wno-cpp", "-Wno-unused-function"]

+# extra_compile_args=["-Wno-cpp", "-Wno-unused-function"]

)

]

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src\lib\external>python setup.py build_ext --inplace

running build_ext

(succeeded, so most output omitted)

Creating library build\temp.win-amd64-3.6\Release\nms.cp36-win_amd64.lib and object build\temp.win-amd64-3.6\Release\nms.cp36-win_amd64.exp

Generating code

Finished generating code

Now let's try the evaluation.

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src>python test.py ctdet --exp_id coco_dla --keep_res --load_model ../models/ctdet_coco_dla_2x.pth

(lots of errors)

There were far too many errors, so I tried the demo.py command from issue 7 instead:

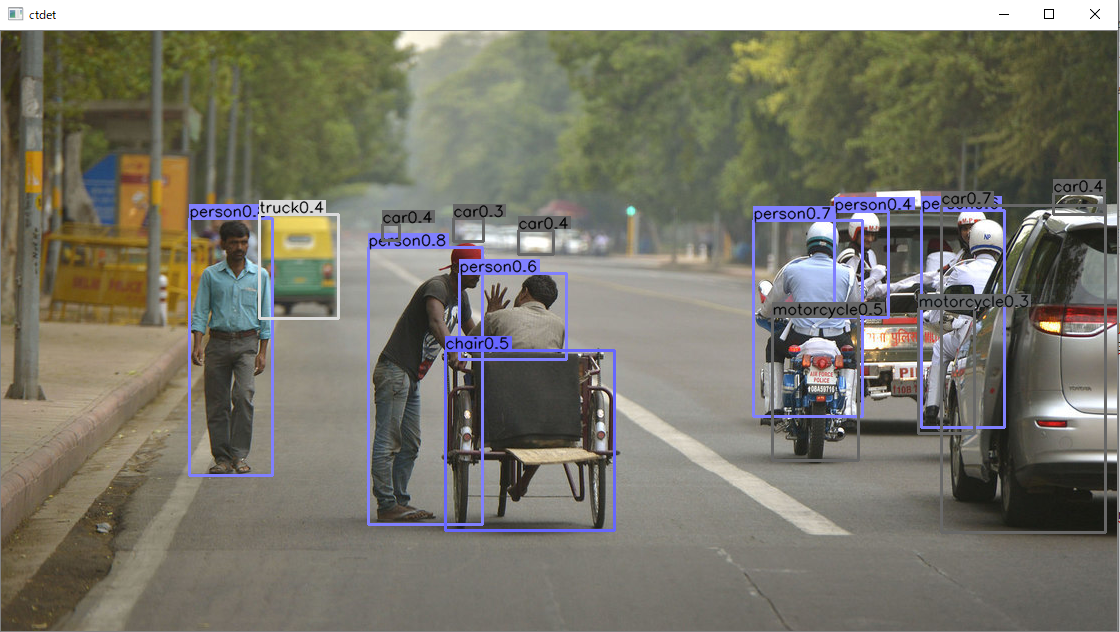

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src>python demo.py ctdet --demo ../images/17790319373_bd19b24cfc_k.jpg --load_model ../models/ctdet_coco_dla_2x.pth --debug 2

Fix size testing.

training chunk_sizes: [1]

The output will be saved to F:\Users\sounansu\Anaconda3\CenterNet\src\lib\..\..\exp\ctdet\default

heads {'hm': 80, 'wh': 2, 'reg': 2}

Creating model...

loaded ../models/ctdet_coco_dla_2x.pth, epoch 230

tot 2.330s |load 0.000s |pre 0.031s |net 2.217s |dec 0.004s |post 0.078s |merge 0.000s |

With the evaluation not working I was at a loss and could not even post this to Qiita. I did ask in issue 126, in clumsy English, and was told to add

--not_prefetch_test

Issue 126 reads as if that alone makes it work, but as-is it still fails with an error.

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src>python test.py ctdet --not_prefetch_test --exp_id coco_dla --keep_res --load_model ..\models\ctdet_coco_dla_2x.pth

Keep resolution testing.

training chunk_sizes: [32]

The output will be saved to F:\Users\sounansu\Anaconda3\CenterNet\src\lib\..\..\exp\ctdet\coco_dla

heads {'hm': 80, 'wh': 2, 'reg': 2}

Namespace(K=100, aggr_weight=0.0, agnostic_ex=False, arch='dla_34', aug_ddd=0.5, aug_rot=0, batch_size=32, cat_spec_wh=False, center_thresh=0.1, chunk_sizes=[32], data_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\data', dataset='coco', debug=0, debug_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\exp\\ctdet\\coco_dla\\debug', debugger_theme='white', demo='', dense_hp=False, dense_wh=False, dep_weight=1, dim_weight=1, down_ratio=4, eval_oracle_dep=False, eval_oracle_hm=False, eval_oracle_hmhp=False, eval_oracle_hp_offset=False, eval_oracle_kps=False, eval_oracle_offset=False, eval_oracle_wh=False, exp_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\exp\\ctdet', exp_id='coco_dla', fix_res=False, flip=0.5, flip_test=False, gpus=[0], gpus_str='0', head_conv=256, heads={'hm': 80, 'wh': 2, 'reg': 2}, hide_data_time=False, hm_hp=True, hm_hp_weight=1, hm_weight=1, hp_weight=1, input_h=512, input_res=512, input_w=512, keep_res=True, kitti_split='3dop', load_model='..\\models\\ctdet_coco_dla_2x.pth', lr=0.000125, lr_step=[90, 120], master_batch_size=32, mean=array([[[0.40789655, 0.44719303, 0.47026116]]], dtype=float32), metric='loss', mse_loss=False, nms=False, no_color_aug=False, norm_wh=False, not_cuda_benchmark=False, not_hm_hp=False, not_prefetch_test=True, not_rand_crop=False, not_reg_bbox=False, not_reg_hp_offset=False, not_reg_offset=False, num_classes=80, num_epochs=140, num_iters=-1, num_stacks=1, num_workers=4, off_weight=1, output_h=128, output_res=128, output_w=128, pad=31, peak_thresh=0.2, print_iter=0, rect_mask=False, reg_bbox=True, reg_hp_offset=True, reg_loss='l1', reg_offset=True, resume=False, root_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..', rot_weight=1, rotate=0, save_all=False, save_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\exp\\ctdet\\coco_dla', scale=0.4, scores_thresh=0.1, seed=317, shift=0.1, std=array([[[0.2886383 , 0.27408165, 0.27809834]]], 
dtype=float32), task='ctdet', test=False, test_scales=[1.0], trainval=False, val_intervals=5, vis_thresh=0.3, wh_weight=0.1)

==> initializing coco 2017 val data.

loading annotations into memory...

Done (t=0.71s)

creating index...

index created!

Loaded val 5000 samples

Creating model...

loaded ..\models\ctdet_coco_dla_2x.pth, epoch 230

coco_dlaTraceback (most recent call last):

File "test.py", line 124, in <module>

test(opt)

File "test.py", line 108, in test

ret = detector.run(img_path)

File "F:\Users\sounansu\Anaconda3\CenterNet\src\lib\detectors\base_detector.py", line 105, in run

images, meta = self.pre_process(image, scale, meta)

File "F:\Users\sounansu\Anaconda3\CenterNet\src\lib\detectors\base_detector.py", line 38, in pre_process

height, width = image.shape[0:2]

AttributeError: 'NoneType' object has no attribute 'shape'

Why is there no shape...? In my experience this kind of error means a wrong path or file name, so I used print() to check data_dir and img_dir in src\lib\datasets\dataset\coco.py:

F:\Users\sounansu\Anaconda3\CenterNet\src\lib\..\..\data\coco

F:\Users\sounansu\Anaconda3\CenterNet\src\lib\..\..\data\coco\val2017

Huh, that differs from Dataset preparation. (I am not sure whether Windows is to blame.) I changed coco.py to match Dataset preparation:

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src>git diff lib\datasets\dataset\coco.py

diff --git a/src/lib/datasets/dataset/coco.py b/src/lib/datasets/dataset/coco.py

index d0efc53..a89628c 100644

--- a/src/lib/datasets/dataset/coco.py

+++ b/src/lib/datasets/dataset/coco.py

@@ -21,7 +21,10 @@ class COCO(data.Dataset):

def __init__(self, opt, split):

super(COCO, self).__init__()

self.data_dir = os.path.join(opt.data_dir, 'coco')

- self.img_dir = os.path.join(self.data_dir, '{}2017'.format(split))

+ self.img_dir = os.path.join(self.data_dir, 'images')

+ self.img_dir = os.path.join(self.img_dir, '{}2017'.format(split))

if split == 'test':

self.annot_path = os.path.join(

self.data_dir, 'annotations',
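The `'NoneType' object has no attribute 'shape'` error arises because `cv2.imread` returns None for a file it cannot read instead of raising an exception. A small pre-flight check along these lines would surface a wrong image directory immediately. This is only a sketch: `find_missing_images` is a hypothetical helper and is not part of CenterNet.

```python
import os


def find_missing_images(img_dir, file_names):
    """Return the file names that do not exist as files under img_dir."""
    return [
        name for name in file_names
        if not os.path.isfile(os.path.join(img_dir, name))
    ]


# Example: check the first few file names before running the full evaluation.
# The directory and file list here are placeholders to be filled in.
#   missing = find_missing_images(img_dir, first_few_file_names)
#   if missing:
#       print("Image directory looks wrong; missing:", missing)
```

If every checked file is reported missing, the directory layout (here, the extra images level) is the likely culprit rather than the dataset itself.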

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src>python test.py ctdet --not_prefetch_test --exp_id coco_dla --keep_res --load_model ..\models\ctdet_coco_dla_2x.pth

Keep resolution testing.

training chunk_sizes: [32]

The output will be saved to F:\Users\sounansu\Anaconda3\CenterNet\src\lib\..\..\exp\ctdet\coco_dla

heads {'hm': 80, 'wh': 2, 'reg': 2}

Namespace(K=100, aggr_weight=0.0, agnostic_ex=False, arch='dla_34', aug_ddd=0.5, aug_rot=0, batch_size=32, cat_spec_wh=False, center_thresh=0.1, chunk_sizes=[32], data_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\data', dataset='coco', debug=0, debug_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\exp\\ctdet\\coco_dla\\debug', debugger_theme='white', demo='', dense_hp=False, dense_wh=False, dep_weight=1, dim_weight=1, down_ratio=4, eval_oracle_dep=False, eval_oracle_hm=False, eval_oracle_hmhp=False, eval_oracle_hp_offset=False, eval_oracle_kps=False, eval_oracle_offset=False, eval_oracle_wh=False, exp_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\exp\\ctdet', exp_id='coco_dla', fix_res=False, flip=0.5, flip_test=False, gpus=[0], gpus_str='0', head_conv=256, heads={'hm': 80, 'wh': 2, 'reg': 2}, hide_data_time=False, hm_hp=True, hm_hp_weight=1, hm_weight=1, hp_weight=1, input_h=512, input_res=512, input_w=512, keep_res=True, kitti_split='3dop', load_model='..\\models\\ctdet_coco_dla_2x.pth', lr=0.000125, lr_step=[90, 120], master_batch_size=32, mean=array([[[0.40789655, 0.44719303, 0.47026116]]], dtype=float32), metric='loss', mse_loss=False, nms=False, no_color_aug=False, norm_wh=False, not_cuda_benchmark=False, not_hm_hp=False, not_prefetch_test=True, not_rand_crop=False, not_reg_bbox=False, not_reg_hp_offset=False, not_reg_offset=False, num_classes=80, num_epochs=140, num_iters=-1, num_stacks=1, num_workers=4, off_weight=1, output_h=128, output_res=128, output_w=128, pad=31, peak_thresh=0.2, print_iter=0, rect_mask=False, reg_bbox=True, reg_hp_offset=True, reg_loss='l1', reg_offset=True, resume=False, root_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..', rot_weight=1, rotate=0, save_all=False, save_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\exp\\ctdet\\coco_dla', scale=0.4, scores_thresh=0.1, seed=317, shift=0.1, std=array([[[0.2886383 , 0.27408165, 0.27809834]]], 
dtype=float32), task='ctdet', test=False, test_scales=[1.0], trainval=False, val_intervals=5, vis_thresh=0.3, wh_weight=0.1)

==> initializing coco 2017 val data.

loading annotations into memory...

Done (t=0.70s)

creating index...

index created!

Loaded val 5000 samples

Creating model...

loaded ..\models\ctdet_coco_dla_2x.pth, epoch 230

coco_dla |################################| [4999/5000]|Tot: 0:08:14 |ETA: 0:00:01 |tot 0.098 |load 0.022 |pre 0.025 |net 0.045 |dec 0.004 |post 0.003 |merge 0.000

Loading and preparing results...

DONE (t=2.97s)

creating index...

index created!

Running per image evaluation...

Evaluate annotation type *bbox*

DONE (t=54.50s).

Accumulating evaluation results...

DONE (t=11.94s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.374

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.551

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.408

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.206

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.420

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.506

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.317

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.521

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.551

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.336

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.594

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.737

This matches the 37.4% listed in MODEL_ZOO.
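For readers unfamiliar with the `IoU=0.50:0.95` notation in the table above: the headline AP is averaged over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below shows the IoU computation for boxes in COCO's `[x, y, width, height]` format. `box_iou` is a hypothetical helper for illustration and is not the pycocotools implementation.

```python
def box_iou(box_a, box_b):
    """IoU of two boxes given in COCO's [x, y, width, height] format."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    # Overlap along each axis, clamped at zero when the boxes are disjoint.
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    intersection = inter_w * inter_h
    union = aw * ah + bw * bh - intersection
    return intersection / union if union > 0 else 0.0


# The ten IoU thresholds behind "IoU=0.50:0.95".
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]
```

A detection counts as correct at a given threshold only if its IoU with a ground-truth box reaches that threshold, which is why the AP at IoU=0.50 (0.551) is much higher than the averaged figure (0.374).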

3.2.Training

I followed Training, but since I have only one GPU I changed the command as follows and ran it:

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src>python main.py ctdet --exp_id coco_dla --batch_size 32 --master_batch 15 --lr 1.25e-4 --gpus 0

Fix size testing.

training chunk_sizes: [15]

The output will be saved to F:\Users\sounansu\Anaconda3\CenterNet\src\lib\..\..\exp\ctdet\coco_dla

heads {'hm': 80, 'wh': 2, 'reg': 2}

Namespace(K=100, aggr_weight=0.0, agnostic_ex=False, arch='dla_34', aug_ddd=0.5, aug_rot=0, batch_size=32, cat_spec_wh=False, center_thresh=0.1, chunk_sizes=[15], data_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\data', dataset='coco', debug=0, debug_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\exp\\ctdet\\coco_dla\\debug', debugger_theme='white', demo='', dense_hp=False, dense_wh=False, dep_weight=1, dim_weight=1, down_ratio=4, eval_oracle_dep=False, eval_oracle_hm=False, eval_oracle_hmhp=False, eval_oracle_hp_offset=False, eval_oracle_kps=False, eval_oracle_offset=False, eval_oracle_wh=False, exp_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\exp\\ctdet', exp_id='coco_dla', fix_res=True, flip=0.5, flip_test=False, gpus=[0], gpus_str='0', head_conv=256, heads={'hm': 80, 'wh': 2, 'reg': 2}, hide_data_time=False, hm_hp=True, hm_hp_weight=1, hm_weight=1, hp_weight=1, input_h=512, input_res=512, input_w=512, keep_res=False, kitti_split='3dop', load_model='', lr=0.000125, lr_step=[90, 120], master_batch_size=15, mean=array([[[0.40789655, 0.44719303, 0.47026116]]], dtype=float32), metric='loss', mse_loss=False, nms=False, no_color_aug=False, norm_wh=False, not_cuda_benchmark=False, not_hm_hp=False, not_prefetch_test=False, not_rand_crop=False, not_reg_bbox=False, not_reg_hp_offset=False, not_reg_offset=False, num_classes=80, num_epochs=140, num_iters=-1, num_stacks=1, num_workers=4, off_weight=1, output_h=128, output_res=128, output_w=128, pad=31, peak_thresh=0.2, print_iter=0, rect_mask=False, reg_bbox=True, reg_hp_offset=True, reg_loss='l1', reg_offset=True, resume=False, root_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..', rot_weight=1, rotate=0, save_all=False, save_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\exp\\ctdet\\coco_dla', scale=0.4, scores_thresh=0.1, seed=317, shift=0.1, std=array([[[0.2886383 , 0.27408165, 0.27809834]]], dtype=float32), task='ctdet', 
test=False, test_scales=[1.0], trainval=False, val_intervals=5, vis_thresh=0.3, wh_weight=0.1)

Creating model...

Setting up data...

F:\Users\sounansu\Anaconda3\CenterNet\src\lib\..\..\data\coco

F:\Users\sounansu\Anaconda3\CenterNet\src\lib\..\..\data\coco\images\val2017

==> initializing coco 2017 val data.

loading annotations into memory...

Done (t=0.68s)

creating index...

index created!

Loaded val 5000 samples

F:\Users\sounansu\Anaconda3\CenterNet\src\lib\..\..\data\coco

F:\Users\sounansu\Anaconda3\CenterNet\src\lib\..\..\data\coco\images\train2017

==> initializing coco 2017 train data.

loading annotations into memory...

Done (t=17.81s)

creating index...

index created!

Loaded train 118287 samples

Starting training...

ctdet/coco_dlaTraceback (most recent call last):

File "main.py", line 102, in <module>

main(opt)

File "main.py", line 70, in main

log_dict_train, _ = trainer.train(epoch, train_loader)

File "F:\Users\sounansu\Anaconda3\CenterNet\src\lib\trains\base_trainer.py", line 119, in train

return self.run_epoch('train', epoch, data_loader)

File "F:\Users\sounansu\Anaconda3\CenterNet\src\lib\trains\base_trainer.py", line 61, in run_epoch

for iter_id, batch in enumerate(data_loader):

File "f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages\torch\utils\data\dataloader.py", line 193, in __iter__

return _DataLoaderIter(self)

File "f:\users\sounansu\anaconda3\envs\centernet\lib\site-packages\torch\utils\data\dataloader.py", line 469, in __init__

w.start()

File "F:\Users\sounansu\Anaconda3\envs\CenterNet\lib\multiprocessing\process.py", line 105, in start

self._popen = self._Popen(self)

File "F:\Users\sounansu\Anaconda3\envs\CenterNet\lib\multiprocessing\context.py", line 223, in _Popen

return _default_context.get_context().Process._Popen(process_obj)

File "F:\Users\sounansu\Anaconda3\envs\CenterNet\lib\multiprocessing\context.py", line 322, in _Popen

return Popen(process_obj)

File "F:\Users\sounansu\Anaconda3\envs\CenterNet\lib\multiprocessing\popen_spawn_win32.py", line 65, in __init__

reduction.dump(process_obj, to_child)

File "F:\Users\sounansu\Anaconda3\envs\CenterNet\lib\multiprocessing\reduction.py", line 60, in dump

ForkingPickler(file, protocol).dump(obj)

AttributeError: Can't pickle local object 'get_dataset.<locals>.Dataset'

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "F:\Users\sounansu\Anaconda3\envs\CenterNet\lib\multiprocessing\spawn.py", line 105, in spawn_main

exitcode = _main(fd)

File "F:\Users\sounansu\Anaconda3\envs\CenterNet\lib\multiprocessing\spawn.py", line 115, in _main

self = reduction.pickle.load(from_parent)

EOFError: Ran out of input
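The `AttributeError` at the end of the first traceback can be reproduced without PyTorch. On Windows, worker processes are started with spawn (note `popen_spawn_win32.py` in the traceback), so whatever is handed to a worker has to be pickled, and pickle cannot serialize a class that is defined inside a function. The following is a minimal sketch of that behaviour only: `get_dataset` here is a hypothetical stand-in that copies the name from the error message, not the repository's actual code, and `TopLevelDataset` and `can_pickle` are names made up for illustration.

```python
import pickle


def get_dataset():
    # Hypothetical stand-in: the class is defined inside the function body,
    # so its qualified name becomes 'get_dataset.<locals>.Dataset'.
    class Dataset:
        pass

    return Dataset


class TopLevelDataset:
    """Defined at module level, so pickle can look it up by name."""


def can_pickle(obj):
    """Return (True, None) if obj can be pickled, else (False, error text)."""
    try:
        pickle.dumps(obj)
        return True, None
    except (AttributeError, pickle.PicklingError) as exc:
        return False, str(exc)


# Instance of a function-local class: pickling fails.
local_ok, local_err = can_pickle(get_dataset()())
# Instance of a module-level class: pickling works when run as a script.
top_ok, _ = can_pickle(TopLevelDataset())

print("local class picklable:", local_ok, "|", local_err)
print("top-level class picklable:", top_ok)
```

With `--num_workers 0` the data is loaded in the main process, so no worker process is spawned and nothing has to be pickled.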

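This one, too, I resolved based on the answer I got when I asked in issue 126. I also lowered the batch size to fit the memory of my RTX 2070 and ran: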

(CenterNet) F:\Users\sounansu\Anaconda3\CenterNet\src>python main.py ctdet --exp_id coco_dla --batch_size 11 --master_batch 11 --lr 1.25e-4 --gpus 0 --num_workers 0

Fix size testing.

training chunk_sizes: [11]

The output will be saved to F:\Users\sounansu\Anaconda3\CenterNet\src\lib\..\..\exp\ctdet\coco_dla

heads {'hm': 80, 'wh': 2, 'reg': 2}

Namespace(K=100, aggr_weight=0.0, agnostic_ex=False, arch='dla_34', aug_ddd=0.5, aug_rot=0, batch_size=11, cat_spec_wh=False, center_thresh=0.1, chunk_sizes=[11], data_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\data', dataset='coco', debug=0, debug_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\exp\\ctdet\\coco_dla\\debug', debugger_theme='white', demo='', dense_hp=False, dense_wh=False, dep_weight=1, dim_weight=1, down_ratio=4, eval_oracle_dep=False, eval_oracle_hm=False, eval_oracle_hmhp=False, eval_oracle_hp_offset=False, eval_oracle_kps=False, eval_oracle_offset=False, eval_oracle_wh=False, exp_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\exp\\ctdet', exp_id='coco_dla', fix_res=True, flip=0.5, flip_test=False, gpus=[0], gpus_str='0', head_conv=256, heads={'hm': 80, 'wh': 2, 'reg': 2}, hide_data_time=False, hm_hp=True, hm_hp_weight=1, hm_weight=1, hp_weight=1, input_h=512, input_res=512, input_w=512, keep_res=False, kitti_split='3dop', load_model='', lr=0.000125, lr_step=[90, 120], master_batch_size=11, mean=array([[[0.40789655, 0.44719303, 0.47026116]]], dtype=float32), metric='loss', mse_loss=False, nms=False, no_color_aug=False, norm_wh=False, not_cuda_benchmark=False, not_hm_hp=False, not_prefetch_test=False, not_rand_crop=False, not_reg_bbox=False, not_reg_hp_offset=False, not_reg_offset=False, num_classes=80, num_epochs=140, num_iters=-1, num_stacks=1, num_workers=0, off_weight=1, output_h=128, output_res=128, output_w=128, pad=31, peak_thresh=0.2, print_iter=0, rect_mask=False, reg_bbox=True, reg_hp_offset=True, reg_loss='l1', reg_offset=True, resume=False, root_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..', rot_weight=1, rotate=0, save_all=False, save_dir='F:\\Users\\sounansu\\Anaconda3\\CenterNet\\src\\lib\\..\\..\\exp\\ctdet\\coco_dla', scale=0.4, scores_thresh=0.1, seed=317, shift=0.1, std=array([[[0.2886383 , 0.27408165, 0.27809834]]], dtype=float32), task='ctdet', 
test=False, test_scales=[1.0], trainval=False, val_intervals=5, vis_thresh=0.3, wh_weight=0.1)

Creating model...

Setting up data...

F:\Users\sounansu\Anaconda3\CenterNet\src\lib\..\..\data\coco

F:\Users\sounansu\Anaconda3\CenterNet\src\lib\..\..\data\coco\images\val2017

==> initializing coco 2017 val data.

loading annotations into memory...

Done (t=0.66s)

creating index...

index created!

Loaded val 5000 samples

F:\Users\sounansu\Anaconda3\CenterNet\src\lib\..\..\data\coco

F:\Users\sounansu\Anaconda3\CenterNet\src\lib\..\..\data\coco\images\train2017

==> initializing coco 2017 train data.

loading annotations into memory...

Done (t=17.87s)

creating index...

index created!

Loaded train 118287 samples

Starting training...

ctdet/coco_dlaf:\users\sounansu\anaconda3\envs\centernet\lib\site-packages\torch\nn\_reduction.py:46: UserWarning: size_average and reduce args will be deprecated, please use reduction='sum' instead.

warnings.warn(warning.format(ret))

ctdet/coco_dla | | train: [1][3/10753]|Tot: 0:00:15 |ETA: 13:28:07 |loss 245.5500 |hm_loss 242.5728 |wh_loss 25.6510 |off_loss 0.4120 |Data 0.412s(0.408s) |Net 3.776s

Training started without any problems.
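As a sanity check, the `10753` in the progress line `[3/10753]` matches the number of full batches of 11 that fit into the 118287 training samples. The sketch below just redoes that arithmetic. That the loader drops the last incomplete batch is my assumption, inferred from the number in the log, and `iterations_per_epoch` is a helper name made up for this example.

```python
def iterations_per_epoch(num_samples, batch_size, drop_last=True):
    """Number of batches in one pass over the data."""
    full_batches, remainder = divmod(num_samples, batch_size)
    if remainder and not drop_last:
        # Keep the final, smaller batch as one extra iteration.
        full_batches += 1
    return full_batches


TRAIN_SAMPLES = 118287  # "Loaded train 118287 samples" in the log
BATCH_SIZE = 11         # --batch_size 11

iters = iterations_per_epoch(TRAIN_SAMPLES, BATCH_SIZE)
iters_keep_last = iterations_per_epoch(TRAIN_SAMPLES, BATCH_SIZE, drop_last=False)
print(iters, iters_keep_last)
```

Keeping the last partial batch would give one more iteration than the log shows, which is why I assume it is dropped.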

4. Conclusion

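To be honest, I had given up partway through, but I somehow managed to get as far as training. What I like most about CenterNet is probably that it can also do pose estimation. As far as I understand it, the per-class hot spots (heatmap peaks), the offsets, and the box sizes used for object detection are reassigned to things like joints. It still feels a little mysterious to me.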
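To make the "heads" idea a bit more concrete, here is a rough sketch of the output shapes implied by the log: `heads={'hm': 80, 'wh': 2, 'reg': 2}`, `input_res=512`, `down_ratio=4`, `output_res=128`. The detection values are taken from the `Namespace` dump above. The pose layout (`pose_heads`) is only my illustrative assumption, built from the 17 COCO person keypoints, and is not checked against the repository. `head_shapes` is a helper name made up for this example.

```python
def head_shapes(heads, input_res=512, down_ratio=4):
    """Map each head name to a (channels, height, width) output shape."""
    out_res = input_res // down_ratio  # 512 // 4 = 128, as in the log
    return {name: (channels, out_res, out_res) for name, channels in heads.items()}


# Detection heads, copied from the log:
#   hm  = one heatmap per class (80 COCO classes)
#   wh  = box width and height
#   reg = sub-pixel offset of the center
ctdet_heads = {"hm": 80, "wh": 2, "reg": 2}

# Illustrative assumption for pose estimation: a single "person" class,
# plus an (x, y) displacement from the center for each joint.
NUM_JOINTS = 17  # COCO person keypoints
pose_heads = {"hm": 1, "wh": 2, "reg": 2, "hps": 2 * NUM_JOINTS}

ctdet_shapes = head_shapes(ctdet_heads)
pose_shapes = head_shapes(pose_heads)
for name, shape in ctdet_shapes.items():
    print("ctdet", name, shape)
for name, shape in pose_shapes.items():
    print("pose ", name, shape)
```

Seen this way, pose estimation reuses the same center-point machinery and only adds more channels that are regressed at each center.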

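I will pause object detection with CenterNet for now and move on to recent semantic segmentation models next (in fact, I am already working with LEDNet and BiSeNet). Having worked with models like SegNet, the speed of the newer ones looks nothing short of astonishing to me.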

That's all for now.