This content was translated by AI and may contain inaccuracies. Please refer to the original version written in Japanese if necessary.

1. Selecting a Migration Approach

When migrating from a VMware environment to IBM Cloud VSI for VPC, there are various considerations. Even simply migrating servers requires many tasks, such as image conversion and installing virtio drivers.

Before starting such a migration, please first read Scott Moonen’s article (the Japanese translation is available here). It concisely covers the essential points of server migration.

In general, migration methods can be broadly classified into the following two approaches.

(a) Creating a Custom Image from a VM Image First, and Provisioning VSI for VPC Using That Custom Image

- This approach creates a reusable Custom Image and deploys VSI for VPC based on it. It is a common method described in IBM Cloud Docs, but personally I find some drawbacks. With this method, one Custom Image is required for each VM migration, and that Custom Image is used only once during migration. Originally, the purpose of a Custom Image is to serve as a template for repeatedly provisioning servers with the same configuration. However, in the context of migration, it becomes a template created only for a server that will be provisioned once, which I feel deviates from its original purpose.

- To build a Custom Image, it is not sufficient to simply convert it to the qcow2 format. As just one example of the required tasks, in a Linux environment you may need to uninstall VMware Tools, install virtio drivers, modify kernel parameters, regenerate initramfs/initrd, modify /etc/fstab, install and configure cloud-init, and change the network configuration to DHCP. In a Windows environment, for example, prior preparation such as installing virtio drivers, installing and configuring cloudbase-init, and running sysprep is mandatory. To reiterate, these are only examples of the required tasks; for instance, when using LVM on RHEL 9 or later, device filtering via /etc/lvm/devices/system.devices must also be taken into account. In addition, in Linux environments, cloud-init may unintentionally modify existing SSH settings or root password configurations, and in Windows environments, sysprep can have an impact on system configuration. Therefore, it is necessary to fully understand these effects before performing the work.

- Since the boot disk is associated with a Custom Image, for example, migrating 100 VMs requires creating 100 Custom Images. In addition, there is a restriction that the source Custom Image cannot be deleted as long as the VSI provisioned from it has not been deleted.

- Therefore, while Custom Images are a very powerful mechanism when deploying multiple servers as templates, I believe that using them solely for migration purposes does not align with their original intent, and personally I do not favor this approach.

(b) Migrating by Writing the Converted VM Image Data Directly to the Boot Disk (Block Storage)

- This method achieves migration by directly writing the converted image to the target boot disk without creating a Custom Image. Unlike the Custom Image approach, this method significantly reduces the risk of unintentionally overwriting existing server settings.

- If you are already bringing your own OS licenses in the VMware environment and plan to continue using those licenses on IBM Cloud, there is no need to register them with IBM Cloud, and therefore even installing cloud-init/cloudbase-init is unnecessary. However, if you want to use IBM Cloud–provided RHEL/Windows licenses, you need to install cloud-init/cloudbase-init in advance. Also, if you later want to create a Custom Image based on the migrated VSI for VPC, you will still need to install cloud-init/cloudbase-init.

- There are several approaches to implementing this method, but in this article I would like to focus in particular on “Method 4: Direct copy to VPC volumes using VDDK” introduced in Scott’s article. The reason is that with this method, as long as you can connect to vCenter and the target VM is safely powered off, there is almost no need to log in to the VM beforehand to perform any preparation work. This method uses a tool called virt-v2v, and if the environment is set up correctly, it automates a series of tasks such as:

  - Uninstalling VMware Tools (however, as noted in the official virt-v2v documentation, this often does not work well in Windows environments. You may need to uninstall it in advance or manually remove it after migration)

  - Installing virtio drivers

  - Boot configuration (for Linux, regenerating initramfs/initrd and modifying /etc/fstab; for Windows, modifying registry values and registering driver services, etc.)

  - Network configuration

  - Installing qemu-guest-agent

  - Image conversion

  These processes are automated, allowing most of the migration work to be completed with almost “a single command.” Especially in Windows environments, simply installing the virtio drivers is not sufficient for them to function properly. When virtio devices are assigned to a server, additional steps are required to make the OS recognize them, which becomes a time-consuming process. Resetting the association between existing devices and device drivers and allowing new devices to be recognized by the OS can generally be automated using sysprep, but sysprep is a command with a wide system impact. Because virt-v2v configures the virtio drivers so that they can be used as PnP devices at the first boot, it also handles the process up to the point where virtio devices are automatically recognized during the initial startup of the VSI for VPC. I have tried both approaches (a) and (b), and I have attempted manual migrations without using either sysprep or virt-v2v even with approach (b). However, there are several pitfalls, and carrying out such procedures while carefully accounting for OS- and version-specific differences was a fairly demanding task. Since virt-v2v automates this entire series of operations, I consider it to be a highly effective migration approach.

- In this migration method, we adopt an approach that connects directly to vCenter to enable migration with a single command. However, in environments such as IBM Cloud VCF as a Service, where end users cannot directly connect to vCenter or ESXi, it is also possible to first export VM-related files to a Converter VSI and then run virt-v2v to convert from local files. However, since this procedure only involves changing virt-v2v options and should be almost self-explanatory if you understand the approach described here, this article omits detailed explanations of that process.

- IBM Cloud VCF for Classic assumes BYOL for guest OS licenses. If you want to switch to IBM Cloud–provided licenses at the time of migration to VSI for VPC, installing cloud-init/cloudbase-init in advance will trigger IBM Cloud–specific initialization at boot time from the boot disk. This article does not go into detail on this topic either.

2. List of Operating Systems Verified for Migration from VMware Environments (VCF for Classic on IBM Cloud) Using virt-v2v + VDDK

Using this method, I have confirmed that migration from VMware environments (VCF for Classic on IBM Cloud) to VSI for VPC works successfully for the following OS environments.

- Linux

  - RHEL9

  - RHEL8

  - RHEL7

  - RHEL6-64bit

  - RHEL6-32bit

  - RHEL5.11 (64bit)

- Windows

  - Windows Server 2025

  - Windows Server 2025 (Domain Controller)

  - Windows Server 2022

  - Windows Server 2019

  - Windows Server 2016

It may be possible to make this work in other environments by modifying the scripts described later, but due to the difficulty of preparing test environments and time constraints, I have not personally been able to verify other variations. If I am able to test additional operating systems in the future, I plan to update this article.

3. Overview of the Migration Flow

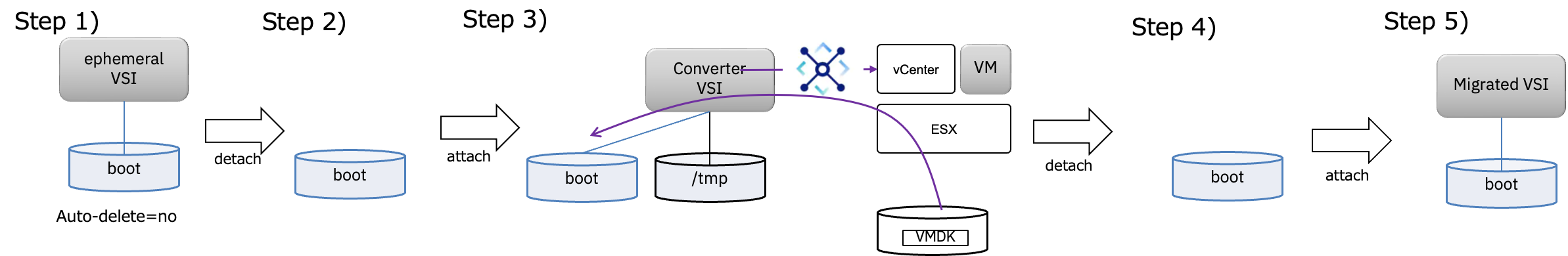

The following diagram illustrates the overall migration flow.

- Step 1: Provision a temporary VSI for VPC in order to create a boot disk for migration. Since IBM Cloud does not provide a feature to order only a standalone boot disk, you must first provision a VSI for VPC. This VSI for VPC is a temporary environment created solely to generate the boot disk and will not actually be used.

- Step 2: Delete the temporarily created VSI for VPC and obtain the boot disk.

- Step 3: Attach the boot disk to a Converter VSI, then execute the virt-v2v command on the Converter VSI to connect to vCenter. Internally, by using VDDK, it efficiently accesses the VMDK files in the VMware environment and starts transferring data from the ESX host where the target VM is located to the Converter VSI. After performing conversion tasks such as installing virtio drivers, virt-v2v writes the contents of the VMware VM to the boot disk. This process can basically be executed with a single command.

  In addition, a prerequisite for this process is that the VMware-side VM must be properly powered off. It is also assumed that connectivity between the VPC environment and the IBM Cloud VMware environment has been established in advance via a Transit Gateway.

- Step 4: Detach the converted boot disk.

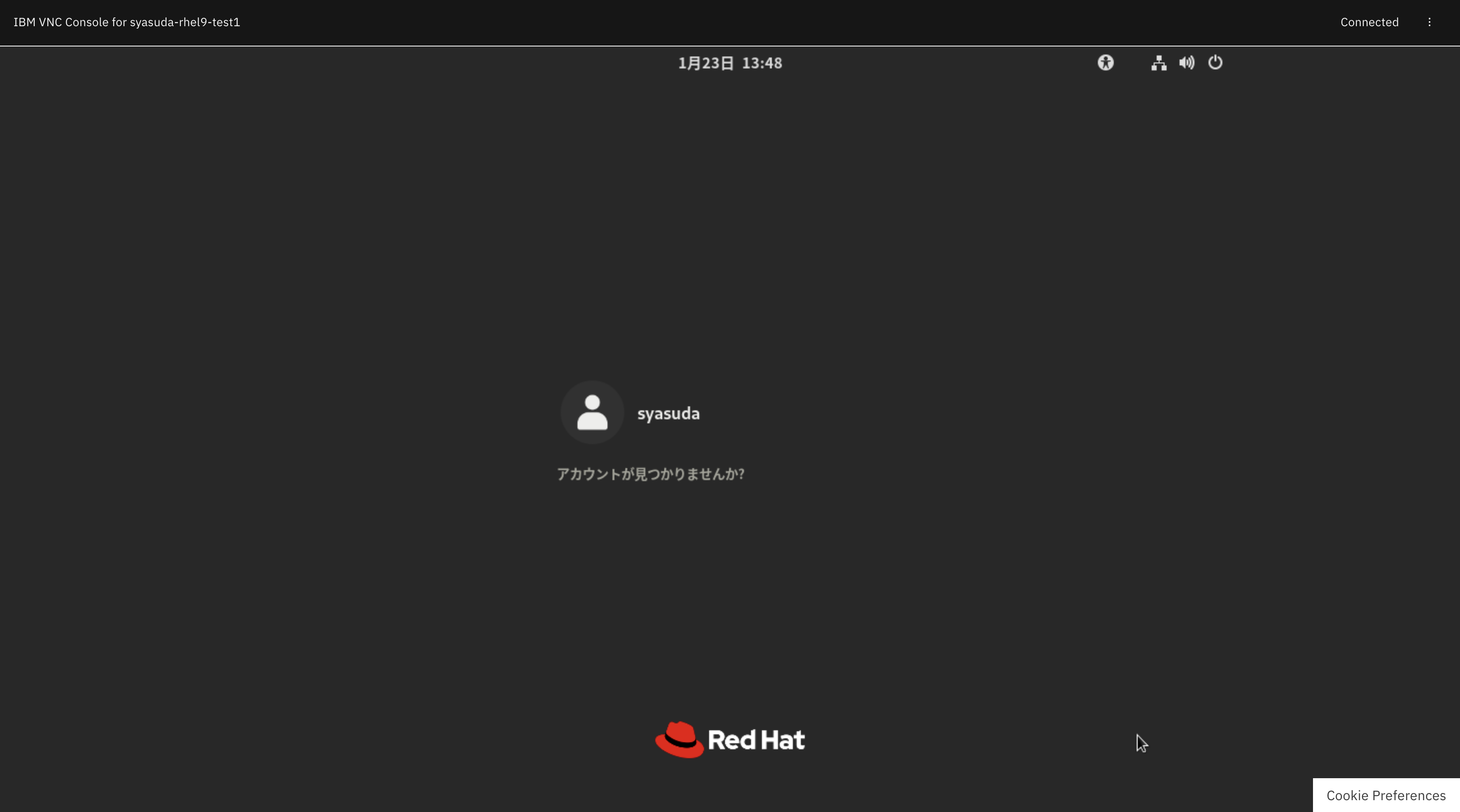

- Step 5: Provision a VSI for VPC using this boot disk. In Windows environments, multiple reboots occur during the first startup, and tasks such as setting up virtio drivers are automatically performed.

In the following sections, the points to note for each step will be explained in detail.

4. Preparing the Converter VSI

4-1. Preparing a Fedora Environment

virt-v2v requires the --block-driver virtio-scsi option for IBM Cloud VPC Windows Server environments, and it also requires that the VMware Virtual Disk Development Kit (VDDK) plugin be supported by nbdkit. The build images for virt-v2v are available in RHEL-based and Ubuntu-based variants. However, the former does not include --block-driver virtio-scsi, while the latter may not include the VDDK plugin due to licensing issues, making it difficult to satisfy both requirements at the same time. Red Hat appears to have intentionally removed "--block-driver virtio-scsi", and without this option, I was unable to successfully run Windows Server on VSI for VPC.

https://access.redhat.com/errata/RHBA-2023:6376

https://bugzilla.redhat.com/show_bug.cgi?id=2190387

SLES supports both of these requirements, but since it does not natively support the vpx driver, it cannot connect to vCenter. To meet all these requirements, it may be necessary to compile virt-v2v from source code, but attempting to do so in practice turns out to be a very demanding task.

After trying various operating systems available as standard images on IBM Cloud (article link), I found that Fedora satisfies all of these requirements. However, IBM Cloud only provides Fedora CoreOS. Although I have confirmed that the conversion process actually works with Fedora CoreOS, it must be used with caution because of various restrictions, such as not being able to write to certain directories like /usr. I personally use a standard Fedora installation that I set up myself on a KVM environment and run it as a VSI for VPC, but since that topic deviates from the main subject, I would like to cover it in a separate article if I get the chance. While I recommend building and using a standard Fedora environment without such restrictions, Fedora CoreOS can also be used, so an example configuration using Fedora CoreOS is described below.

In addition, network speed is extremely important when running virt-v2v. If the latency from the Converter VSI to vCenter or ESX is not within approximately 2 ms, an appropriate Transit Gateway may not be in use. For example, a Global Transit Gateway may be routing traffic through another region, or the Converter VSI may not be deployed in the appropriate region. It is strongly recommended to identify and resolve these issues in advance.
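As a rough way to check the latency guideline above before starting a conversion, the following sketch parses the summary line printed by Linux ping and compares the average RTT with a threshold. The helper names avg_rtt_ms and rtt_within are hypothetical names introduced here, and the 2 ms limit is only the approximate figure mentioned above, not an official requirement.

```shell
# Print the average RTT in ms from the summary line of Linux (iputils) ping,
# e.g. "rtt min/avg/max/mdev = 0.512/1.234/2.100/0.300 ms". Reads stdin.
avg_rtt_ms() {
  awk -F'= ' '/min\/avg\/max/ { split($2, parts, "/"); print parts[2] }'
}

# Succeed when the RTT ($1, in ms) is non-empty and not above the limit ($2, in ms).
rtt_within() {
  [ -n "$1" ] || return 1
  awk -v rtt="$1" -v limit="$2" 'BEGIN { exit !(rtt + 0 <= limit + 0) }'
}

# Example (vCenter hostname as used in this article):
#   rtt=$(ping -c 5 -q vcsosv-vc.acs.japan.local | avg_rtt_ms)
#   rtt_within "$rtt" 2 || echo "latency ${rtt:-unknown} ms exceeds 2 ms" >&2
```

An empty value (for example, when ping cannot reach the host) is treated as a failure, so an unreachable vCenter is not mistaken for a fast one.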

4-2. Installing Modules

When connecting to Fedora CoreOS via SSH, note that you must use the core user instead of the root user.

core@syasuda-converter-fedora:~$ sudo su -

root@syasuda-converter-fedora:~# systemctl stop zincati.service

root@syasuda-converter-fedora:~# rpm-ostree upgrade

root@syasuda-converter-fedora:~# rpm-ostree install virt-v2v

root@syasuda-converter-fedora:~# rpm-ostree install screen tcpdump iftop nc

root@syasuda-converter-fedora:~# systemctl reboot

root@syasuda-converter-fedora:~# cat /etc/os-release

NAME="Fedora Linux"

VERSION="43.20260105.3.0 (CoreOS)"

RELEASE_TYPE=stable

ID=fedora

VERSION_ID=43

VERSION_CODENAME=""

PRETTY_NAME="Fedora CoreOS 43.20260105.3.0"

ANSI_COLOR="0;38;2;60;110;180"

LOGO=fedora-logo-icon

CPE_NAME="cpe:/o:fedoraproject:fedora:43"

HOME_URL="https://getfedora.org/coreos/"

DOCUMENTATION_URL="https://docs.fedoraproject.org/en-US/fedora-coreos/"

SUPPORT_URL="https://github.com/coreos/fedora-coreos-tracker/"

BUG_REPORT_URL="https://github.com/coreos/fedora-coreos-tracker/"

REDHAT_BUGZILLA_PRODUCT="Fedora"

REDHAT_BUGZILLA_PRODUCT_VERSION=43

REDHAT_SUPPORT_PRODUCT="Fedora"

REDHAT_SUPPORT_PRODUCT_VERSION=43

SUPPORT_END=2026-12-02

VARIANT="CoreOS"

VARIANT_ID=coreos

OSTREE_VERSION='43.20260105.3.0'

IMAGE_VERSION='43.20260105.3.0'

root@syasuda-converter-fedora:~# uname -a

Linux syasuda-converter-fedora 6.17.12-300.fc43.x86_64 #1 SMP PREEMPT_DYNAMIC Sat Dec 13 05:06:24 UTC 2025 x86_64 GNU/Linux

root@syasuda-converter-fedora:~# virt-v2v --version

virt-v2v 2.10.0fedora=43,release=1.fc43

root@syasuda-converter-fedora:~# virt-v2v --help | grep "block"

--block-driver <driver> Prefer 'virtio-blk' or 'virtio-scsi'

root@syasuda-converter-fedora:~# rpm -qa|grep vddk

nbdkit-vddk-plugin-1.46.1-1.fc43.x86_64

root@syasuda-converter-fedora:~# nbdkit vddk --dump-plugin

path=/usr/lib64/nbdkit/plugins/nbdkit-vddk-plugin.so

name=vddk

version=1.46.1

api_version=2

struct_size=384

max_thread_model=parallel

thread_model=parallel

errno_is_preserved=0

magic_config_key=file

has_longname=1

has_unload=1

has_dump_plugin=1

has_config=1

has_config_complete=1

has_config_help=1

has_get_ready=1

has_after_fork=1

has_open=1

has_close=1

has_get_size=1

has_block_size=1

has_can_flush=1

has_can_extents=1

has_can_fua=1

has_pread=1

has_pwrite=1

has_flush=1

has_extents=1

vddk_default_libdir=/usr/lib64/vmware-vix-disklib

vddk_has_nfchostport=1

vddk_has_fnm_pathname=0
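The two checks above (the --block-driver option and the VDDK plugin) can be wrapped into small helpers so that they are easy to repeat on another candidate OS. This is only a sketch; has_block_driver_option and has_vddk_plugin are hypothetical names, and the commands they rely on are the same ones shown above.

```shell
# Succeed if the virt-v2v help text supplied on stdin mentions --block-driver.
has_block_driver_option() {
  grep -q -e '--block-driver'
}

# Succeed if nbdkit can load its VDDK plugin (same command as shown above).
has_vddk_plugin() {
  nbdkit vddk --dump-plugin >/dev/null 2>&1
}

# Example:
#   virt-v2v --help | has_block_driver_option || echo "no --block-driver option" >&2
#   has_vddk_plugin || echo "nbdkit VDDK plugin is missing" >&2
```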

4-3. Installing VDDK

In this case, since the VMware environment to be migrated was vSphere 8, VMware Virtual Disk Development Kit (VDDK) 8.0.3 for Linux is used.

- VDDK v9.0: https://developer.broadcom.com/sdks/vmware-virtual-disk-development-kit-vddk/latest

- VDDK v8.0: https://developer.broadcom.com/sdks/vmware-virtual-disk-development-kit-vddk/8.0

- VDDK v7.0: https://developer.broadcom.com/sdks/vmware-virtual-disk-development-kit-vddk/7.0

- VDDK v6.7: https://developer.broadcom.com/sdks/vmware-virtual-disk-development-kit-vddk/6.7

root@syasuda-converter-fedora:~# tar -xvzf VMware-vix-disklib-*.x86_64.tar.gz -C /opt

root@syasuda-converter-fedora:~# chown -R root:root /opt/vmware-vix-disklib-distrib/

root@syasuda-converter-fedora:~# ls -l /opt/vmware-vix-disklib-distrib/

total 16

-rw-r--r--. 1 root root 8034 May 27 2024 FILES

drwxr-xr-x. 2 root root 73 Jan 23 02:47 bin64

drwxr-xr-x. 6 root root 4096 Jan 23 02:47 doc

drwxr-xr-x. 2 root root 95 Jan 23 02:47 include

drwxr-xr-x. 2 root root 6 May 27 2024 lib32

drwxr-xr-x. 2 root root 4096 Jan 23 02:47 lib64
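Before passing the directory to virt-v2v as vddk-libdir, it can be worth confirming that the archive was unpacked as expected. The following sketch only checks that lib64 contains a libvixDiskLib.so file; vddk_libdir_ok is a hypothetical helper name, and the check says nothing about whether the VDDK version matches your vSphere version.

```shell
# Succeed if $1 looks like an unpacked VDDK tree, i.e. its lib64/ directory
# holds at least one libvixDiskLib.so* file.
vddk_libdir_ok() {
  for _lib in "$1"/lib64/libvixDiskLib.so*; do
    # When nothing matches, the pattern stays literal and -e fails.
    [ -e "$_lib" ] && return 0
  done
  return 1
}

# Example:
#   vddk_libdir_ok /opt/vmware-vix-disklib-distrib || echo "VDDK not found" >&2
```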

4-4. Configuring /etc/hosts

Name resolution must be available for the vCenter/ESX hostnames.

# Loopback entries; do not change.

# For historical reasons, localhost precedes localhost.localdomain:

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

# See hosts(5) for proper format and other examples:

# 192.168.1.10 foo.example.org foo

# 192.168.1.13 bar.example.org bar

10.173.1.3 vcsosv-vc.acs.japan.local

10.173.166.232 host-km000.acs.japan.local

10.173.166.227 host-km001.acs.japan.local

10.173.166.198 host-km002.acs.japan.local
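Because a missing ESX host entry only surfaces once virt-v2v starts transferring data, a quick check of the hosts file can save a failed run. The sketch below lists the names that have no entry in a given hosts file; missing_from_hosts is a hypothetical helper, and it inspects only the file itself (names resolvable through DNS would still be reported as missing).

```shell
# Print each name (arguments 2..n) that has no entry in the hosts file $1.
# The body runs in a subshell so its variables do not leak into the caller.
missing_from_hosts() (
  hosts_file=$1
  shift
  for name in "$@"; do
    awk -v n="$name" '
      { sub(/#.*/, "")                          # ignore comments
        for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }
    ' "$hosts_file" || printf '%s\n' "$name"
  done
)

# Example:
#   missing_from_hosts /etc/hosts vcsosv-vc.acs.japan.local host-km000.acs.japan.local
```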

4-5. Checking the vCenter Fingerprint

root@syasuda-converter-fedora:~# echo | openssl s_client -connect vcsosv-vc.acs.japan.local:443 2>/dev/null | openssl x509 -noout -fingerprint -sha1

sha1 Fingerprint=D2:AE:CF:26:22:8C:89:13:A4:67:89:19:7F:64:1C:97:35:C8:11:54
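The value after Fingerprint= is what is later passed to virt-v2v as vddk-thumbprint. If you prefer to capture it in a variable rather than copy it by hand, a small filter such as the following can be used. thumbprint_only is a hypothetical helper name, and the sketch assumes the output format shown above.

```shell
# Print only the colon-separated digest from "openssl x509 -fingerprint"
# output such as "sha1 Fingerprint=D2:AE:...". Reads stdin.
thumbprint_only() {
  sed -n 's/^.*Fingerprint=//p'
}

# Example:
#   THUMBPRINT=$(echo | openssl s_client -connect vcsosv-vc.acs.japan.local:443 2>/dev/null \
#     | openssl x509 -noout -fingerprint -sha1 | thumbprint_only)
```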

4-6. Storing the vCenter Password in a File

root@syasuda-converter-fedora:~# echo <"vCenter Password"> > vcenter_passwd.txt

root@syasuda-converter-fedora:~# chmod 600 vcenter_passwd.txt
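As an optional variation on the two commands above, the following sketch reads the password from standard input, so that it does not end up in the shell history, and creates the file with owner-only permissions from the start. write_secret_file is a hypothetical helper name. It also writes the password without a trailing newline, on the assumption that the file should contain only the password itself.

```shell
# Read one line from stdin and store it in file $1, readable by the owner only.
write_secret_file() {
  IFS= read -r _secret || true      # keep the text even without a final newline
  ( umask 077 && printf '%s' "$_secret" > "$1" )
  chmod 600 "$1"                    # also tighten a file that already existed
  unset _secret
}

# Example (type the password, then press Enter; it is echoed on the terminal
# but not stored in the shell history):
#   write_secret_file vcenter_passwd.txt
```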

4-7. Preparing virtio-win (Required for Windows Environments)

In Windows environments, virtio drivers must be installed, so an ISO file containing them is required. You can download the latest virtio drivers from the Fedora website, and some environments are known to work fine with these drivers. However, there is also information scattered across the web suggesting that the drivers signed by Red Hat are more reliable for stable operation. For this reason, I provision RHEL 9 on IBM Cloud and run dnf install virtio-win, which places the ISO image under /usr/share/virtio-win/. I then copy this ISO image to the Converter VSI.

root@syasuda-converter-fedora:~# ls -l virtio-win-1.9.50.iso

-rw-r--r--. 1 root root 645939200 Jan 23 02:52 virtio-win-1.9.50.iso

5. Details of Each Migration Step

Step 1: Order an Ephemeral VSI to Create a Boot Disk

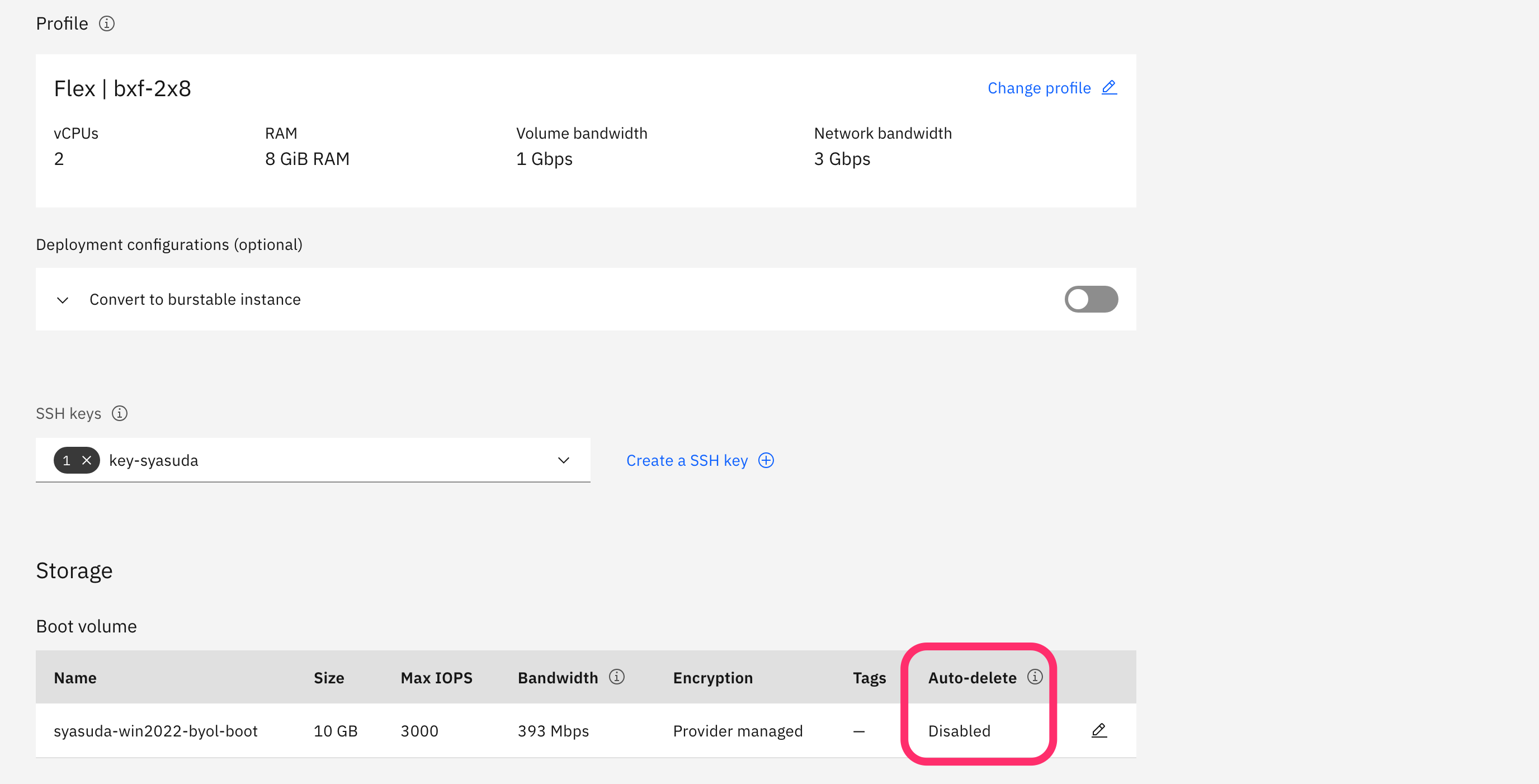

- In this migration method, the converted image is written directly to the boot disk of the VSI for VPC. However, IBM Cloud does not provide a feature to create a bootable disk as a standalone resource. Therefore, when first creating a temporary VSI (hereafter referred to as the ephemeral VSI), set the Auto-delete attribute of the boot disk to No. Then, immediately after the provisioning is complete, delete this ephemeral VSI to leave only the boot disk. Since this boot disk will be overwritten in subsequent steps, the ephemeral VSI does not need to boot successfully. For this reason, I usually press the Stop button immediately after ordering the ephemeral VSI, then execute Force stop to shut down the server, and finally delete the VSI. This allows the entire process to proceed more quickly.

-

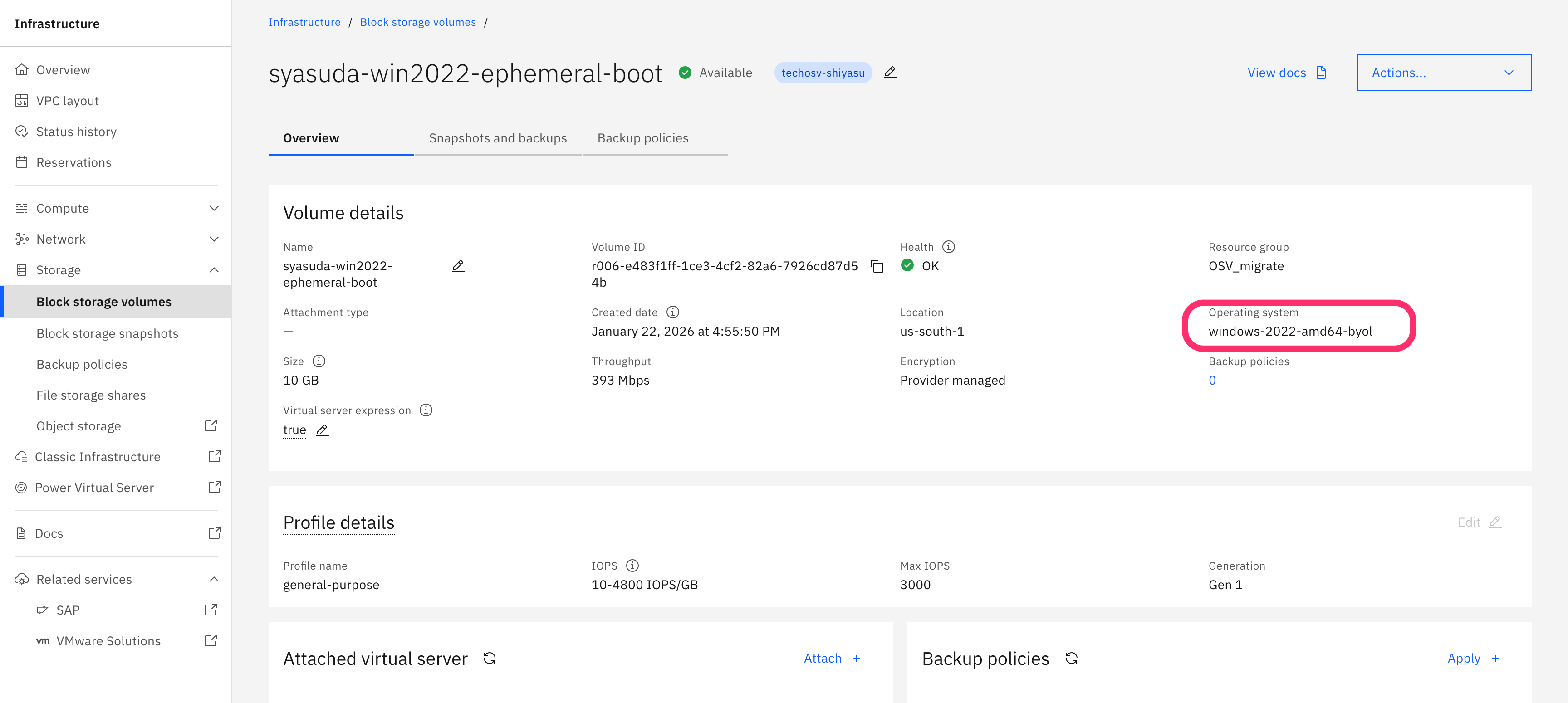

A boot disk retains OS attribute information even after the VSI is deleted. VSI for VPC is considered to internally construct the libvirt domain XML using this information. For example, in Windows environments, the system should boot using

virtio-scsi, but if the OS attribute of the boot disk is set to Linux, IBM Cloud may internally configure it to usevirtio-blkinstead. Therefore, when creating a boot disk, it is preferable that the ephemeral VSI reflect the OS environment you intend to migrate. Running Fedora or RHEL on a boot disk with a CentOS 9 attribute did not cause any particular issues, but attempting to run Windows Server on such a boot disk generally fails. In fact, at first I assumed that any boot disk would work, so I created an ephemeral VSI using the IBM Cloud–provided standard imagecentos-stream-9-amd64and attempted to migrate Windows Server using that boot disk, which led me to encounter numerous problems. For example, I experienced issues such as thevirtiodrivers not being automatically recognized, and a strange situation where Windows Server would not boot when Secure Boot was disabled, but would boot when Secure Boot was enabled.

-

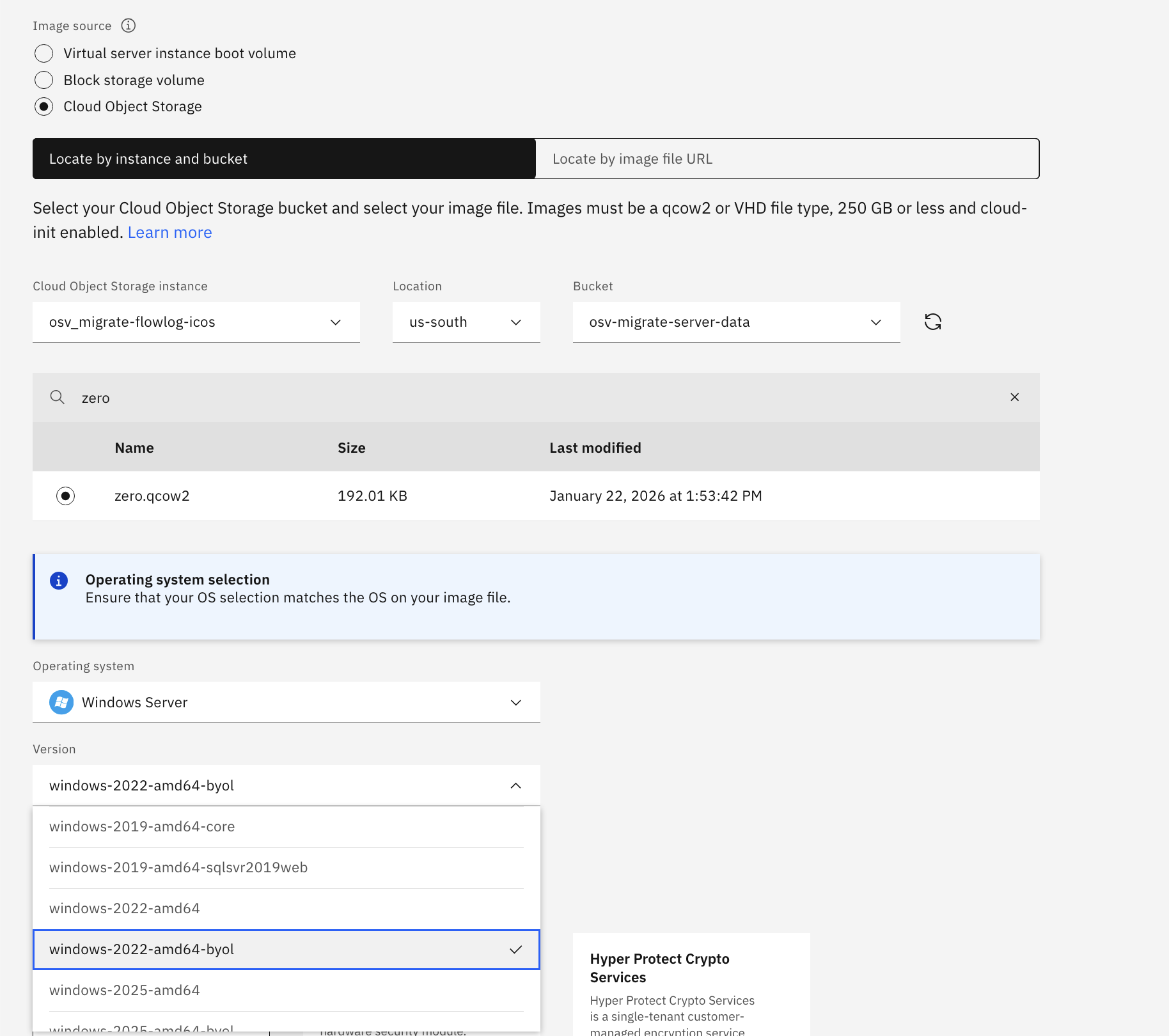

Another important point to note regarding boot disk attributes is whether BYOL is applied. Typically, in IBM Cloud VMware environments (such as VCF for Classic), guest OS licenses are BYOL. However, if you use IBM Cloud–provided standard images, license fees from IBM will be charged. Therefore, to migrate this environment while keeping it as BYOL, the boot disk must also have the BYOL attribute; otherwise, additional charges may apply. Since a Custom Image does not need to be actually bootable, you can create a qcow2 format file as shown below, upload it to ICOS, and then create a Custom Image using this file. When selecting the OS type, be sure to choose one that is marked as BYOL.

[root@fedora ~]# dd if=/dev/zero bs=1M count=1 of=zero.img

[root@fedora ~]# qemu-img convert -f raw -O qcow2 zero.img zero.qcow2

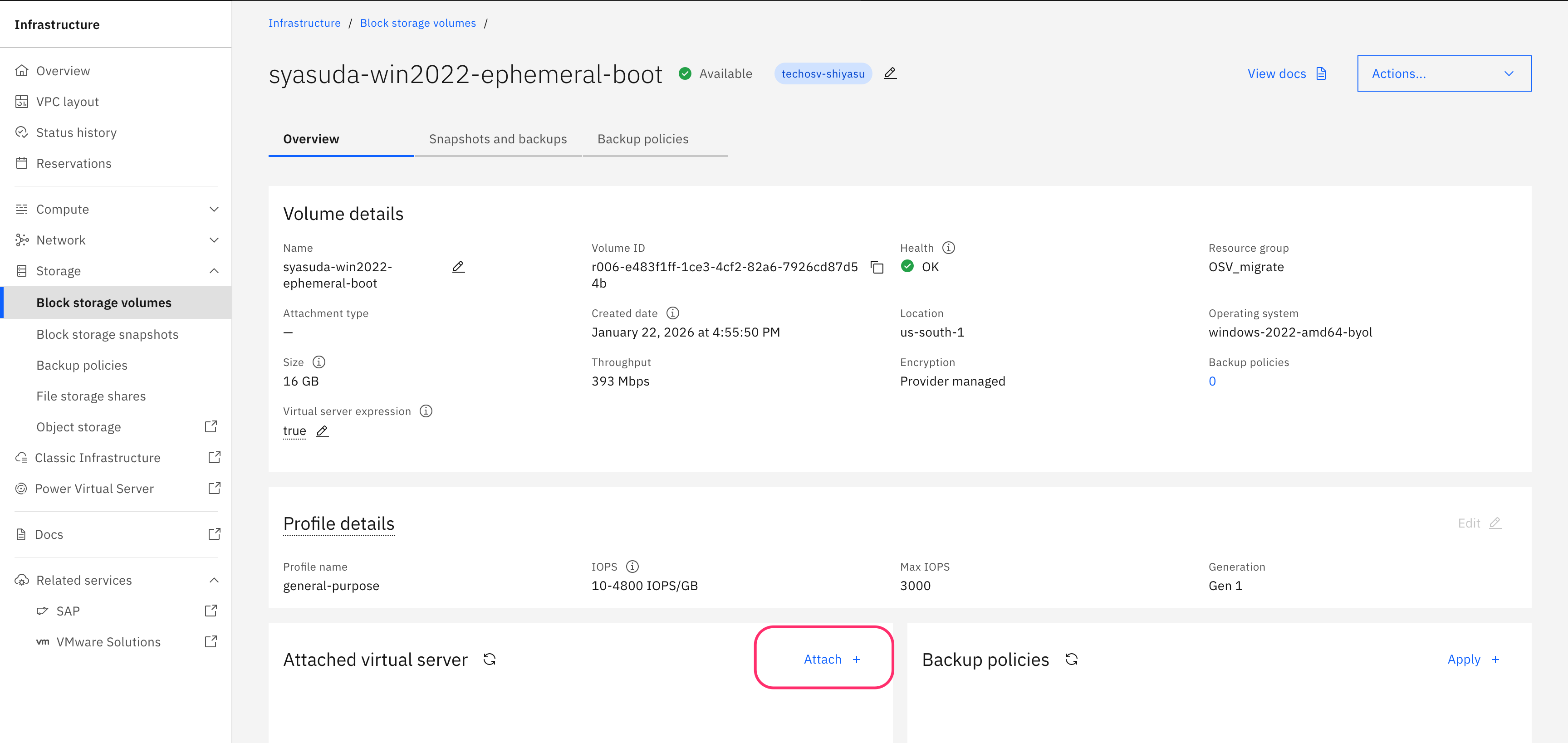

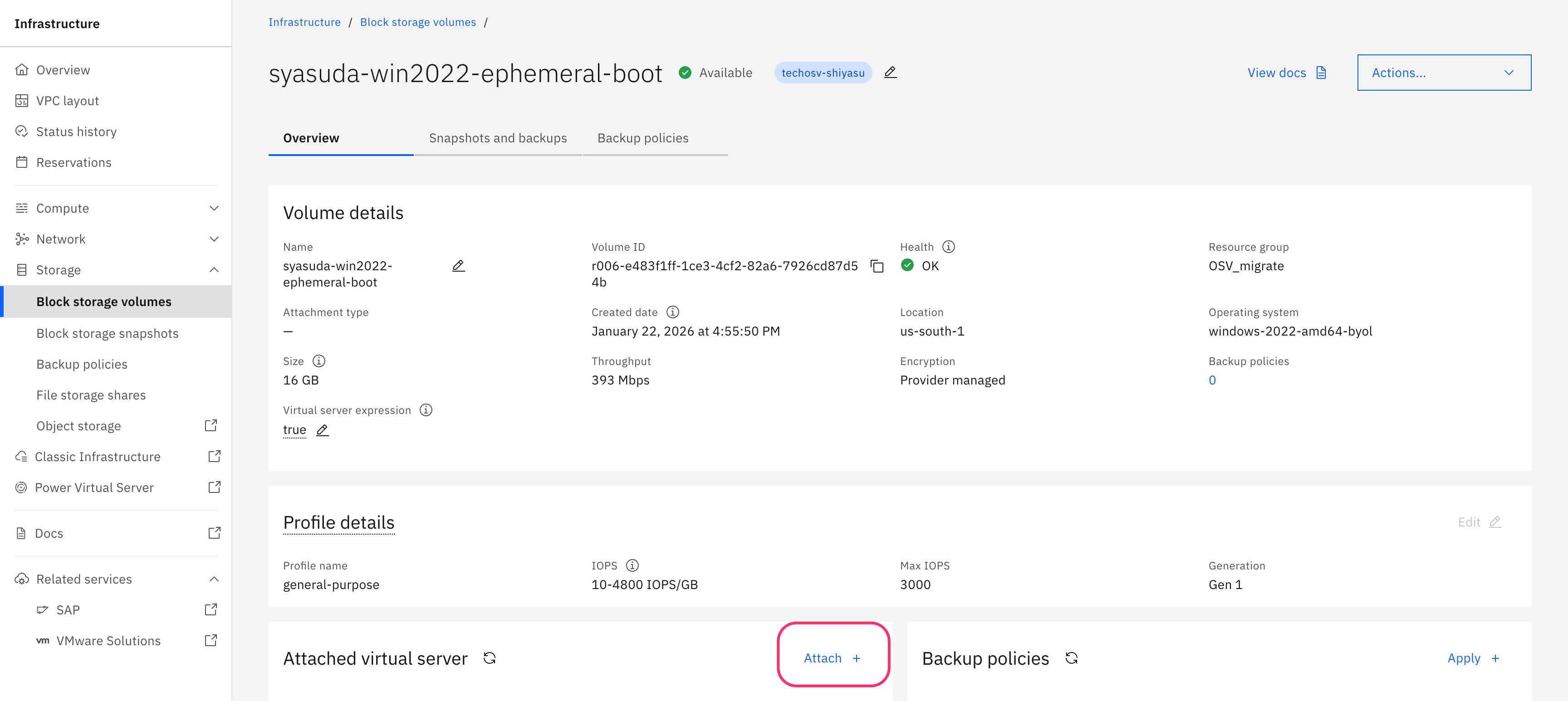

Step 2: Obtain a Boot Disk That Is Not Associated with a VSI

Delete the ephemeral VSI. Before deleting it, check once again that the Auto-delete attribute of the boot disk is set to No; otherwise, the boot disk will be deleted together with the VSI. After this operation, you will have a boot disk that is not attached to any VSI.

Step 3: Attach the Boot Disk to the Converter VSI and Run virt-v2v

Step 3-1. Expanding the Boot Disk Size (Optional)

Increase the size as needed. If the boot disk was created based on the Custom Image created following the steps above, it should be configured with the minimum size of 10 GB. When using an IBM Cloud–provided default image, the minimum boot disk size is 100 GB, but with this approach, you can change it to any size between 10 GB and 250 GB. In this migration, the target VM on the VMware side is using 16 GB, so in that case, the boot disk must be expanded to at least 16 GB.

However, as of 2026/01/22, note that the maximum size of a boot disk is 250 GB. Also note that once the size is increased, it cannot be reduced. If the disk size is increased beyond 250 GB, it will no longer be recognized as a boot disk, as shown below. This limitation is expected to be resolved in the near future.

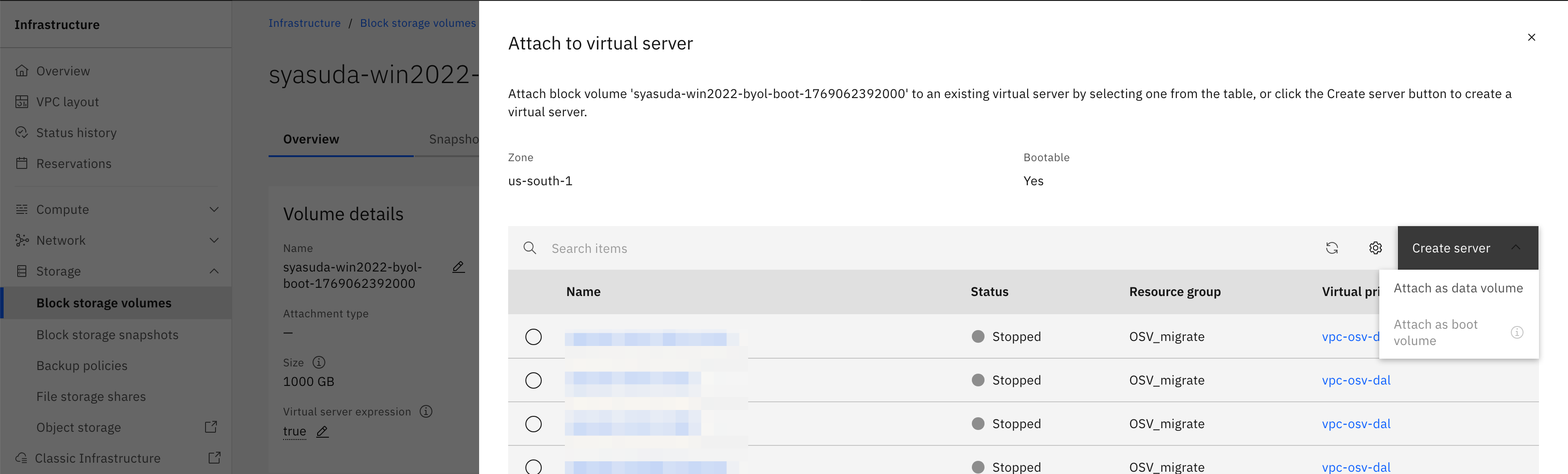

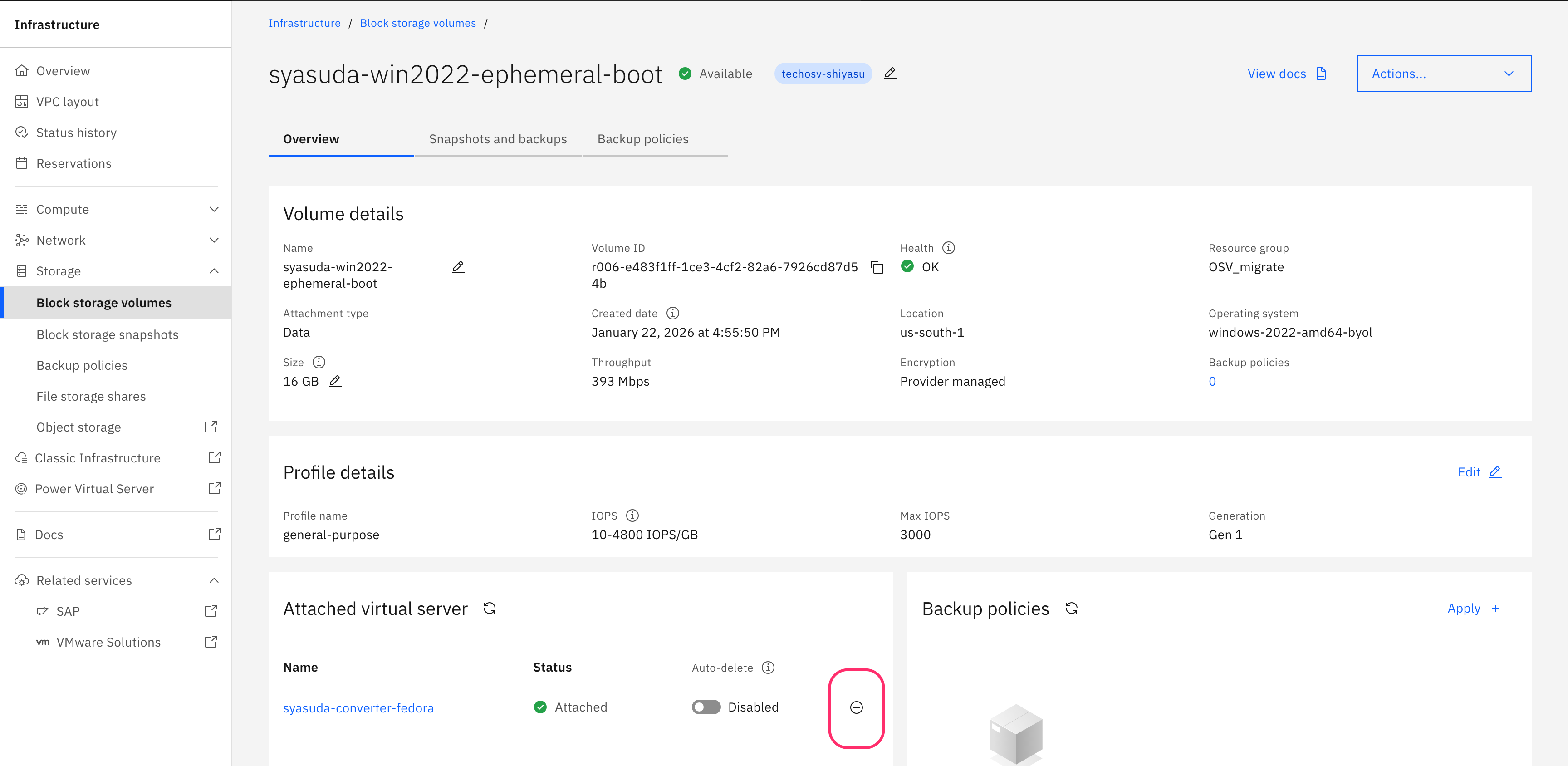

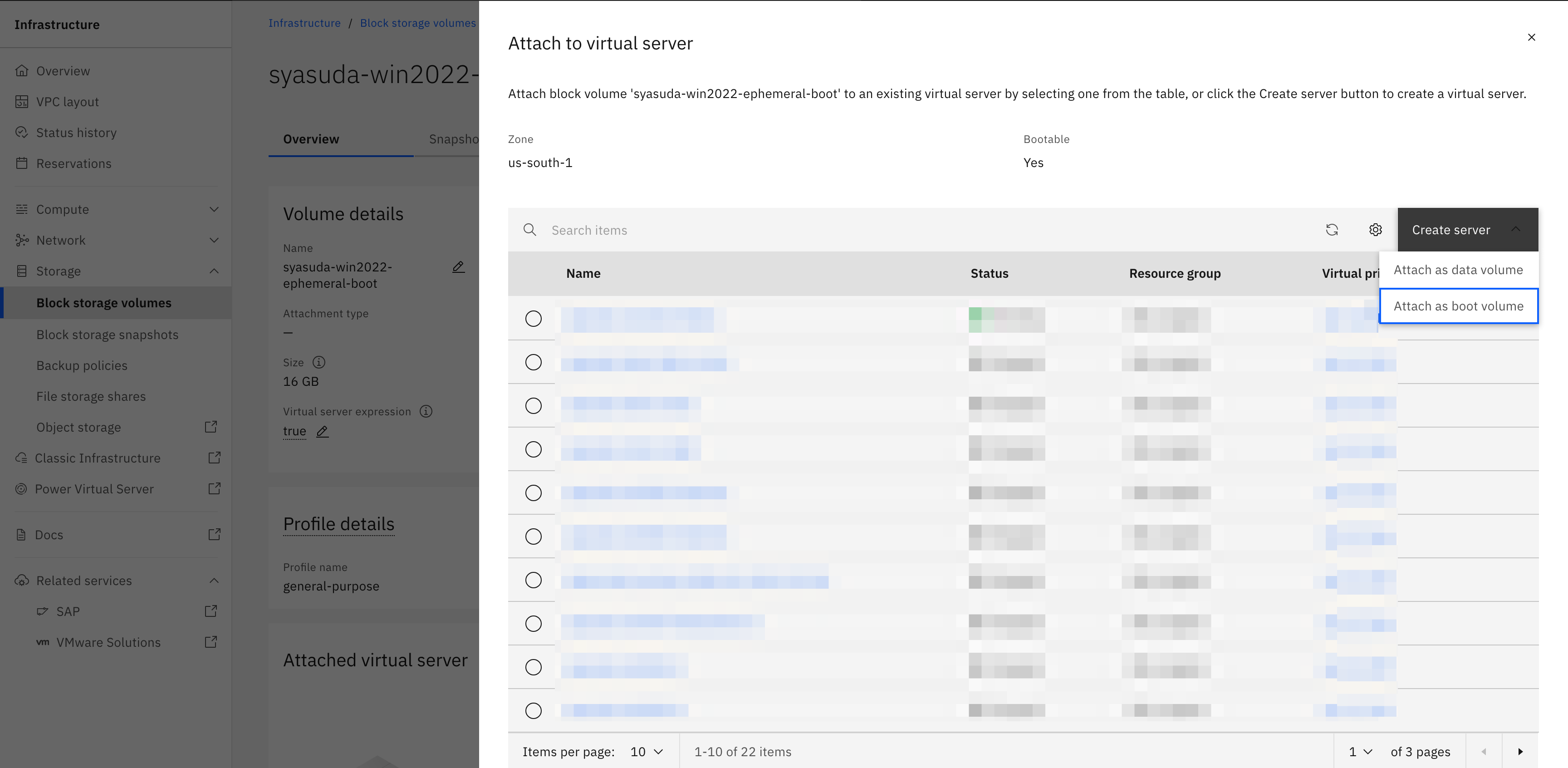
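The sizing rule described above can be written down as a small helper, which is handy when many VMs are checked in a loop. This is a minimal sketch: boot_disk_size_gb is a hypothetical name, sizes are whole GB, and the 10 GB and 250 GB bounds are simply the values stated above as of 2026/01/22.

```shell
# Print the boot disk size (GB) to request for a source disk of $1 GB,
# or fail when the source does not fit into the current 250 GB limit.
boot_disk_size_gb() {
  if [ "$1" -gt 250 ]; then
    echo "source disk ($1 GB) exceeds the 250 GB boot disk limit" >&2
    return 1
  elif [ "$1" -lt 10 ]; then
    echo 10                 # boot disks cannot be smaller than 10 GB
  else
    echo "$1"
  fi
}

# Example:
#   boot_disk_size_gb 16    # the 16 GB VM used in this article
```

Since a boot disk cannot be shrunk once expanded, it seems safer to compute the size first and expand only as far as needed.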

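Step 3-2. Attaching the Boot Disk to the Converter VSI

Attach the previously created boot disk to the Converter VSI. In the example below, it appears as /dev/vdd, a new device with no file system.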

root@syasuda-converter-fedora:~# lsblk -pf

NAME FSTYPE FSVER LABEL UUID FSAVAIL FSUSE% MOUNTPOINTS

/dev/vda

├─/dev/vda1

├─/dev/vda2 vfat FAT16 EFI-SYSTEM 7B77-95E7

├─/dev/vda3 ext4 1.0 boot 99d3b227-8df4-44aa-9e03-c7f3dbc6b118 44.4M 81% /boot

└─/dev/vda4 xfs root d0463b38-8446-453c-83bf-8d7a8a6f8b60 91.4G 8% /var

/sysroot/ostree/deploy/fedora-coreos/var

/sysroot

/etc

/dev/vdb iso9660 Joliet Extension cidata 2026-01-23-02-05-26-00

/dev/vdc swap 1 SWAP-xvdb1 7cd086aa-8f57-4ef4-a5d0-53dae14dc808

/dev/vdd

Step 3-3. Create a Link

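As described in Scott’s article and the virt-v2v guidance, virt-v2v allows you to specify an output directory, but not the output file name. With -o disk -os /tmp, the disk image is written to a file in /tmp named after the VM with the suffix -sda (for example, /tmp/syasuda-win2022-1-sda). Therefore, create a symbolic link with that name that points to the newly attached boot disk (in this case, /dev/vdd), for example with a script like the following, so that the output is written directly to the boot disk.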

VM_NAME=syasuda-win2022-1

TARGET_DEV=/dev/vdd

SYMLINK="/tmp/${VM_NAME}-sda"

ln -fs "${TARGET_DEV}" "${SYMLINK}"

ls -l "${SYMLINK}"

root@syasuda-converter-fedora:~# bash createlink_win2022-1.sh

lrwxrwxrwx. 1 root root 8 Jan 23 03:41 /tmp/syasuda-win2022-1-sda -> /dev/vdd

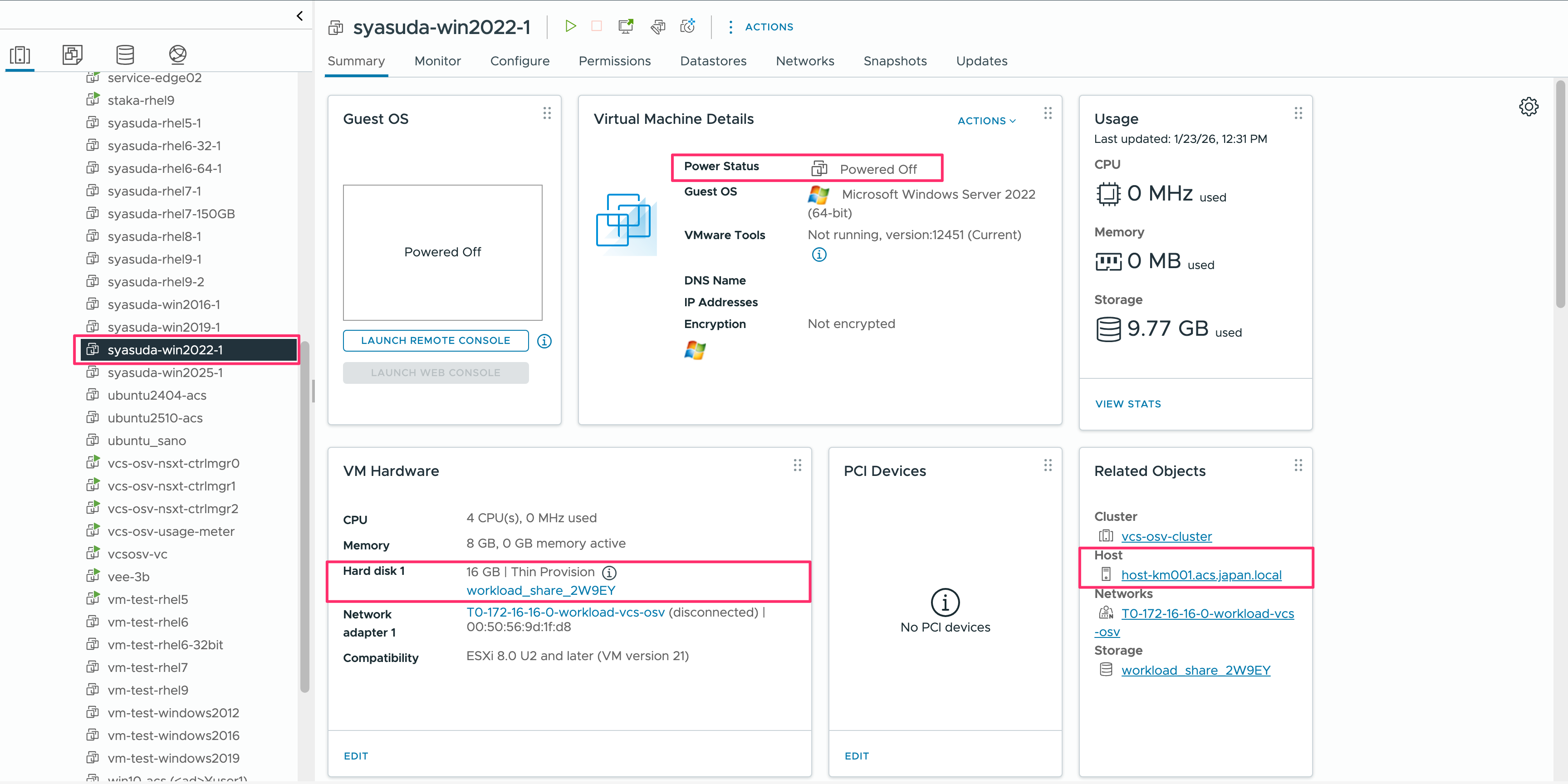
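Because virt-v2v overwrites whatever the link points to, a mistyped device name here could destroy a disk of the Converter VSI itself. The following sketch adds two guards before creating the link: the target must be a block device, and neither it nor its partitions may be mounted. link_v2v_output is a hypothetical helper name; the link naming follows the script above.

```shell
# Create OUT_DIR/VM_NAME-sda as a symlink to the block device $2, but only when
# $2 is a block device and neither it nor its partitions are mounted.
# $1: VM name, $2: target device, $3: output directory (default /tmp)
link_v2v_output() {
  _vm=$1 _dev=$2 _dir=${3:-/tmp}
  if [ ! -b "$_dev" ]; then
    echo "not a block device: $_dev" >&2
    return 1
  fi
  # lsblk prints one MOUNTPOINT line per device/partition; any non-blank
  # line (including [SWAP]) means the disk is in use.
  if lsblk -n -o MOUNTPOINT "$_dev" | grep -q '[^[:space:]]'; then
    echo "refusing to use a mounted device: $_dev" >&2
    return 1
  fi
  ln -fs "$_dev" "$_dir/${_vm}-sda"
}

# Example:
#   link_v2v_output syasuda-win2022-1 /dev/vdd /tmp
```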

Step 3-4. Gracefully Shut Down the VM in the VMware Environment

Make sure that the VM has been properly shut down.

Also ensure that processes such as Windows Update or driver updates are not running during the conversion. In my case, due to poor timing when stopping automatic Windows Updates, the system was in the middle of attempting to download and install updates. As a result, Windows Update tried to run at every reboot, which caused the VSI for VPC to enter a reboot loop after the conversion.

Step 3-5. Executing the Conversion (RHEL)

- When executing the command, the hostname of the ESX host where the VM resides must be specified.

- In the Linux environment of VSI for VPC, the boot disk uses virtio-blk, so you MUST NOT specify the --block-driver virtio-scsi option.

- A custom script called nwscript-update.sh is executed.

  - For RHEL, in order to match the configuration of the standard VSI for VPC image, the setup uses traditional interface names (e.g., eth0, eth1, etc.) instead of predictable interface names (e.g., enp0s1, ens192, etc.). In addition, to apply recommended parameters and reset network configurations, the script listed after the conversion log below is specified when running virt-v2v.

  - Executing this script is not strictly required, but if such a script is not used, you may need to log in later via the VNC Console and manually configure the network settings. Since I want to be able to connect to the migrated VSI immediately after provisioning without performing additional configuration, I configure the process to execute this type of script.

  - This script has only been verified on RHEL, and it likely will not work as-is on Ubuntu or other operating systems, but I believe it can be easily extended.

root@syasuda-converter-fedora:~# export LIBGUESTFS_BACKEND=direct

root@syasuda-converter-fedora:~# virt-v2v -ic 'vpx://vsphere.local%5CAdministrator@vcsosv-vc.acs.japan.local/IBMCloud/vcs-osv-cluster/host-km000.acs.japan.local?no_verify=1' -it vddk -io vddk-libdir=/opt/vmware-vix-disklib-distrib/ -io vddk-thumbprint=D2:AE:CF:26:22:8C:89:13:A4:67:89:19:7F:64:1C:97:35:C8:11:54 "syasuda-rhel9-2" -ip vcenter_passwd.txt -o disk -os /tmp --run nwscript-update.sh

[ 0.0] Setting up the source: -i libvirt -ic vpx://vsphere.local%5CAdministrator@vcsosv-vc.acs.japan.local/IBMCloud/vcs-osv-cluster/host-km000.acs.japan.local?no_verify=1 -it vddk syasuda-rhel9-2

[ 1.6] Opening the source

[ 10.3] Checking filesystem integrity before conversion

[ 31.5] Detecting if this guest uses BIOS or UEFI to boot

[ 31.8] Inspecting the source

[ 35.0] Detecting the boot device

[ 35.1] Checking for sufficient free disk space in the guest

[ 35.1] Converting Red Hat Enterprise Linux 9.7 (Plow) (rhel9.7) to run on KVM

virt-v2v: This guest has virtio drivers installed.

[ 85.9] Setting a random seed

[ 86.0] Running: nwscript-update.sh

[ 89.8] SELinux relabelling

[ 96.6] Mapping filesystem data to avoid copying unused and blank areas

[ 97.4] Checking filesystem integrity after conversion

[ 99.6] Closing the overlay

[ 99.6] Assigning disks to buses

[ 99.6] Checking if the guest needs BIOS or UEFI to boot

virt-v2v: This guest requires UEFI on the target to boot.

[ 99.6] Setting up the destination: -o disk -os /tmp

[ 101.1] Copying disk 1/1

█ 100% [****************************************]

[ 450.4] Creating output metadata

virt-v2v: warning: get_osinfo_id: unknown guest operating system: rhel9.7

[ 450.4] Finishing off

The contents of nwscript-update.sh are as follows.

#!/bin/bash

set -e

############################################

# CentOS/RHEL 7+ (update kernel parameter)

############################################

if grep -qE "release (7|8|9|10)" /etc/redhat-release; then

GRUB_CFG="/etc/default/grub"

CMDLINE=$(grep '^GRUB_CMDLINE_LINUX=' "$GRUB_CFG" | cut -d'"' -f2)

CMDLINE_DEFAULT=$(grep '^GRUB_CMDLINE_LINUX_DEFAULT=' "$GRUB_CFG" | cut -d'"' -f2)

[[ "$CMDLINE_DEFAULT" != *net.ifnames=0* && "$CMDLINE" != *net.ifnames=0* ]] && CMDLINE="$CMDLINE net.ifnames=0"

[[ "$CMDLINE_DEFAULT" != *biosdevname=0* && "$CMDLINE" != *biosdevname=0* ]] && CMDLINE="$CMDLINE biosdevname=0"

[[ "$CMDLINE_DEFAULT" != *vga=normal* && "$CMDLINE" != *vga=normal* ]] && CMDLINE="$CMDLINE vga=normal"

[[ "$CMDLINE_DEFAULT" != *console=tty1* && "$CMDLINE" != *console=tty1* ]] && CMDLINE="$CMDLINE console=tty1"

[[ "$CMDLINE_DEFAULT" != *console=ttyS0* && "$CMDLINE" != *console=ttyS0* ]] && CMDLINE="$CMDLINE console=ttyS0"

sed -i "s|^GRUB_CMDLINE_LINUX=.*|GRUB_CMDLINE_LINUX=\"$CMDLINE\"|" "$GRUB_CFG"

# For RHEL7

grub2-mkconfig -o /boot/grub2/grub.cfg 2>/dev/null || true

# For RHEL8+

grub2-mkconfig -o /boot/grub2/grub.cfg --update-bls-cmdline 2>/dev/null || true

fi

############################################

# Network Settings

############################################

rm -f /etc/NetworkManager/system-connections/* || true

rm -f /etc/sysconfig/network-scripts/ifcfg-* || true

rm -f /etc/sysconfig/network-scripts/route-* || true

rm -f /etc/udev/rules.d/70-persistent-net.rules || true

#keyfile for Network Manager

if grep -qE "release (7|8|9|10)" /etc/redhat-release; then

cat <<EOF >/etc/NetworkManager/system-connections/dhcp-any.nmconnection

[connection]

id=dhcp-any

type=ethernet

autoconnect=true

[ipv4]

method=auto

[ipv6]

method=disabled

EOF

chmod 600 /etc/NetworkManager/system-connections/dhcp-any.nmconnection

fi

#ifcfg for Network Manager or Legacy RHEL/CentOS

IFCFG_FILE="/etc/sysconfig/network-scripts/ifcfg-eth0"

cat > "$IFCFG_FILE" <<EOF

BOOTPROTO=dhcp

DEVICE=eth0

ONBOOT=yes

TYPE=Ethernet

EOF

chmod 600 "$IFCFG_FILE"
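The kernel parameter part of the script repeats the same "append only if missing" test for each parameter. If you extend the script to other distributions, that logic could be factored into a helper along the following lines. This is only a sketch and not part of the script above: append_param is a hypothetical name, and unlike the substring match used above it compares whole space-separated words.

```shell
# Print the kernel command line $1 with parameter $2 appended,
# unless $2 is already present as a whole word.
append_param() {
  case " $1 " in
    *" $2 "*) printf '%s\n' "$1" ;;
    *)        printf '%s\n' "${1:+$1 }$2" ;;
  esac
}

# Example:
#   CMDLINE=$(append_param "$CMDLINE" net.ifnames=0)
#   CMDLINE=$(append_param "$CMDLINE" biosdevname=0)
```

Running the helper twice with the same parameter leaves the command line unchanged, so the script stays safe to re-run.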

Step 3-5. Executing the Conversion (Windows)

- When executing the command, the hostname of the ESX host where the VM resides must be specified.

- Set the VIRTIO_WIN environment variable to explicitly specify the location of the virtio drivers.

- In the Windows environment of VSI for VPC, the boot disk uses virtio-scsi, so you MUST specify the --block-driver virtio-scsi option.

root@syasuda-converter-fedora:~# export LIBGUESTFS_BACKEND=direct

root@syasuda-converter-fedora:~# export VIRTIO_WIN=/root/virtio-win-1.9.50.iso

root@syasuda-converter-fedora:~# virt-v2v -ic 'vpx://vsphere.local%5CAdministrator@vcsosv-vc.acs.japan.local/IBMCloud/vcs-osv-cluster/host-km001.acs.japan.local?no_verify=1' -it vddk -io vddk-libdir=/opt/vmware-vix-disklib-distrib/ -io vddk-thumbprint=D2:AE:CF:26:22:8C:89:13:A4:67:89:19:7F:64:1C:97:35:C8:11:54 "syasuda-win2022-1" -ip vcenter_passwd.txt --block-driver virtio-scsi -o disk -os /tmp

[ 0.0] Setting up the source: -i libvirt -ic vpx://vsphere.local%5CAdministrator@vcsosv-vc.acs.japan.local/IBMCloud/vcs-osv-cluster/host-km001.acs.japan.local?no_verify=1 -it vddk syasuda-win2022-1

[ 1.6] Opening the source

[ 11.1] Checking filesystem integrity before conversion

[ 11.7] Detecting if this guest uses BIOS or UEFI to boot

[ 12.2] Inspecting the source

[ 15.6] Detecting the boot device

[ 15.6] Checking for sufficient free disk space in the guest

[ 15.6] Converting Windows Server 2022 Standard Evaluation (win2k22) to run on KVM

virt-v2v: This guest has virtio drivers installed.

[ 30.8] Setting a random seed

virt-v2v: warning: random seed could not be set for this type of guest

[ 30.8] SELinux relabelling

[ 31.0] Fixing NTFS permissions

[ 31.0] Mapping filesystem data to avoid copying unused and blank areas

[ 31.8] Checking filesystem integrity after conversion

[ 32.3] Closing the overlay

[ 32.4] Assigning disks to buses

[ 32.4] Checking if the guest needs BIOS or UEFI to boot

virt-v2v: This guest requires UEFI on the target to boot.

[ 32.4] Setting up the destination: -o disk -os /tmp

[ 34.0] Copying disk 1/1

█ 100% [****************************************]

[ 383.6] Creating output metadata

[ 383.6] Finishing off
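The RHEL and Windows commands above differ only in their last options, so when migrating many VMs it may help to assemble the command line in one place. The following is a minimal sketch, not a tested migration tool: v2v_migrate, DRY_RUN, VDDK_LIBDIR, OUT_DIR and RUN_SCRIPT are hypothetical names introduced here, while every virt-v2v option is taken from the two commands above. For Windows guests, VIRTIO_WIN still has to be exported by the caller.

```shell
# Usage: v2v_migrate VPX_URI THUMBPRINT VM_NAME PASSWORD_FILE linux|windows
# With DRY_RUN=1 the command is printed instead of executed.
v2v_migrate() (
  uri=$1 thumbprint=$2 vm=$3 passfile=$4 family=$5
  # Options common to both cases, kept as positional parameters so that
  # arguments containing special characters stay intact.
  set -- -ic "$uri" -it vddk \
         -io "vddk-libdir=${VDDK_LIBDIR:-/opt/vmware-vix-disklib-distrib/}" \
         -io "vddk-thumbprint=$thumbprint" \
         "$vm" -ip "$passfile" -o disk -os "${OUT_DIR:-/tmp}"
  case "$family" in
    windows) set -- "$@" --block-driver virtio-scsi ;;   # boot disk uses virtio-scsi
    linux)   set -- "$@" --run "${RUN_SCRIPT:-nwscript-update.sh}" ;;
    *)       echo "unknown OS family: $family" >&2; exit 2 ;;
  esac
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "virt-v2v $*"
  else
    LIBGUESTFS_BACKEND=direct virt-v2v "$@"
  fi
)

# Example:
#   DRY_RUN=1 v2v_migrate "$VPX_URI" "$THUMBPRINT" syasuda-rhel9-2 vcenter_passwd.txt linux
```

Printing the command first with DRY_RUN=1 makes it easy to confirm that the ESX host in the URI and the OS family are the intended ones before any data is written to the boot disk.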

Step 4: Detach the Boot Disk

Use blockdev --flushbufs to reliably flush the page cache associated with the target block device, and then detach the boot disk to which the converted image was written.

root@syasuda-converter-fedora:~# blockdev --flushbufs /dev/vdd

Step 5: Provision a New VSI Using the Boot Disk

Provision a VSI using this boot disk.

At the time of this initial provisioning, do not enable Secure Boot.

Also pay attention to the profile of the destination server.

- The bx2 profile (Cascade Lake) boots only in BIOS mode. It cannot boot in UEFI mode. Therefore, if the source environment assumes UEFI, this profile cannot be used to boot the system.

- The bx3 profile (Sapphire Rapids) and the bxf profile (Flex Profile) automatically detect the OS configuration and boot in either BIOS mode or UEFI mode.
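The virt-v2v log already tells you which firmware the guest needs ("This guest requires UEFI on the target to boot."), so the profile rule above can be checked mechanically. The sketch below encodes only the three profile families mentioned here; guest_firmware_from_log and profile_can_boot are hypothetical names, and treating a log without the UEFI message as a BIOS guest is an assumption.

```shell
# Print "uefi" if the virt-v2v log on stdin says the guest needs UEFI,
# otherwise "bios" (assumption: no such message means a BIOS guest).
guest_firmware_from_log() {
  if grep -q 'This guest requires UEFI on the target to boot'; then
    echo uefi
  else
    echo bios
  fi
}

# Return 0 if the profile ($1) can boot the firmware type ($2), 1 if not,
# and 2 for profile families not covered in this article.
profile_can_boot() {
  case "$1" in
    bx2*)      [ "$2" = bios ] ;;    # boots only in BIOS mode
    bx3*|bxf*) return 0 ;;           # detects BIOS or UEFI automatically
    *)         return 2 ;;
  esac
}

# Example (v2v.log is a saved copy of the virt-v2v output):
#   fw=$(guest_firmware_from_log < v2v.log)
#   profile_can_boot bx2-2x8 "$fw" || echo "choose another profile for $fw" >&2
```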

Results are as follows.