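Purpose

Mickey's face changed on Tuesday, March 26, 2019.

!?

Which one is before and which is after?

I can't reliably tell them apart by eye, so I'll try classifying them with a machine learning model.

(Answer: left is after, right is before.)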

Preparation

The code draws heavily on the articles listed in the references.

Environment

macOS High Sierra 10.13.6

Processor: 2.3 GHz Intel Core i5

Memory: 8 GB 2133 MHz LPDDR3

Graphics: Intel Iris Plus Graphics 640 1536 MB

Prepare the images.

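1. Install google_images_download

pip install google_images_download

2. Download the images

The following command collects the images.

googleimagesdownload -k "search keyword"

Crop the images as shown below.

(opencv-python could not detect Mickey's face, so I cropped the faces by hand. If you have a Mickey face model, please share it. On a Mac, taking screenshots with command+shift+4 makes this easier.)

Install the machine learning library TensorFlow

pip install tensorflow==2.0.0-alpha0

Install the other libraries (mac)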

pip install matplotlib

pip install numpy

Code

Store the images as 256x256 JPEG files. The folder layout is as follows.

(Read new_micky_2 as "after" and pre_micky_2 as "before". Sorry for the confusing names.)

- data
  - train
    - new_micky_2
    - pre_micky_2
  - validation
    - new_micky_2
    - pre_micky_2
  - evaluation (used for the final evaluation)
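Cropping to a square and resizing to 256x256 can also be scripted. The sketch below is one possible way to do it with Pillow. The helper names center_square_box and save_as_256_jpeg are hypothetical and are not part of the original workflow, where the faces were cropped by hand.

```python
def center_square_box(width, height):
    """Return (left, upper, right, lower) of the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    upper = (height - side) // 2
    return (left, upper, left + side, upper + side)


def save_as_256_jpeg(src_path, dst_path, size=256):
    """Crop to a centered square, resize to size x size, and save as JPEG."""
    # Pillow is imported lazily so the box helper above has no dependency.
    from PIL import Image

    with Image.open(src_path) as img:
        box = center_square_box(*img.size)
        img.convert("RGB").crop(box).resize((size, size)).save(dst_path, "JPEG")
```

Note that flow_from_directory also resizes images to target_size when loading, so this step mainly keeps the files on disk uniform.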

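Because there are few images of the after Mickey, I generated additional images with rotations and similar transforms, following the augmentation article listed in the references.

In the end I prepared the following numbers of images, and split them roughly 4:1 into train/validation.

After Mickey images: 660

Before Mickey images: 551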
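The article does not say how the 4:1 split was performed. As a sketch only, the function below assumes the split happens after augmentation and that augmented files follow the `<stem>_rNN.jpg` naming used by the augmentation script. It keeps all variants of one source image on the same side of the split, so that the validation set does not contain rotated copies of training images. The names source_stem and split_by_source are hypothetical.

```python
import random
import re
from collections import defaultdict

# Matches the "_r01" ... "_r30" suffix added by the augmentation script.
_AUG_SUFFIX = re.compile(r"_r\d{2}$")


def source_stem(file_name):
    """Strip the extension and the augmentation suffix from a file name."""
    stem = file_name.rsplit(".", 1)[0]
    return _AUG_SUFFIX.sub("", stem)


def split_by_source(file_names, val_ratio=0.2, seed=0):
    """Split file names into (train, validation), grouped by source image."""
    groups = defaultdict(list)
    for name in file_names:
        groups[source_stem(name)].append(name)

    stems = sorted(groups)
    random.Random(seed).shuffle(stems)
    n_val = max(1, round(len(stems) * val_ratio))
    val_stems = set(stems[:n_val])

    train = [n for s in stems if s not in val_stems for n in groups[s]]
    val = [n for s in stems if s in val_stems for n in groups[s]]
    return train, val
```

The returned lists can then be copied into data/train and data/validation with shutil.copy.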

Image augmentation code (source: the augmentation article in the references)

in: pre_micky folder --(augment)--> out: pre_micky_2 folder

# -*- coding: utf-8 -*-
import os

from PIL import Image, ImageFilter

# Input and output folders. Swap in the commented lines to process the "after" images.
IN_DIR = "./pre_micky/"
OUT_DIR = "./pre_micky_2/"
# IN_DIR = "./new_micky/"
# OUT_DIR = "./new_micky_2/"


def build_transforms():
    """Return the 30 transforms in the order of the _r01 ... _r30 suffixes."""
    # r01-r05: flips and right-angle rotations
    transposes = [Image.FLIP_LEFT_RIGHT, Image.FLIP_TOP_BOTTOM,
                  Image.ROTATE_90, Image.ROTATE_180, Image.ROTATE_270]
    # r06-r25: every 15 degrees, skipping the multiples of 90 covered above
    angles = [a for a in range(15, 360, 15) if a % 90 != 0]
    # r26-r30: edge and sharpening filters
    filters = [ImageFilter.FIND_EDGES,
               ImageFilter.EDGE_ENHANCE,
               ImageFilter.EDGE_ENHANCE_MORE,
               ImageFilter.UnsharpMask(radius=5, percent=150, threshold=2),
               ImageFilter.UnsharpMask(radius=10, percent=200, threshold=5)]
    return ([lambda im, m=m: im.transpose(m) for m in transposes]
            + [lambda im, a=a: im.rotate(a) for a in angles]
            + [lambda im, f=f: im.filter(f) for f in filters])


def main():
    transforms = build_transforms()
    for file_name in os.listdir(IN_DIR):
        root, ext = os.path.splitext(file_name)
        # Only process image files.
        if ext.lower() not in ('.png', '.jpeg', '.jpg'):
            continue
        img = Image.open(os.path.join(IN_DIR, file_name))
        for i, transform in enumerate(transforms, start=1):
            tmp = transform(img)
            # JPEG cannot store alpha or palette modes, so convert to RGB first.
            if tmp.mode != "RGB":
                tmp = tmp.convert("RGB")
            tmp.save(os.path.join(OUT_DIR, '{0}_r{1:02d}.jpg'.format(root, i)))


if __name__ == '__main__':
    main()

Training code

from tensorflow.python.keras import layers

from tensorflow.python.keras import models

from tensorflow.python.keras import optimizers

from tensorflow.python.keras.preprocessing.image import ImageDataGenerator

import matplotlib.pyplot as plt

import numpy as np

from PIL import Image

import glob

# モデルの定義

model = models.Sequential()

model.add(layers.Conv2D(16, (3, 3), activation='relu', input_shape=(256, 256, 3)))

model.add(layers.Conv2D(16, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(32, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Flatten())

model.add(layers.Dense(512, activation='relu'))

model.add(layers.Dense(256, activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

# モデルのコンパイル

model.compile(loss='binary_crossentropy',

optimizer=optimizers.RMSprop(lr=1e-4),

metrics=['acc'])

model.summary()

# クラス宣言

train_datagen = ImageDataGenerator(

rescale=1.0 / 255,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True)

test_datagen = ImageDataGenerator(rescale=1.0 / 255)

# フォルダ指定

batch_size=23

train_generator = train_datagen.flow_from_directory(

'data/train',

target_size=(256, 256),

batch_size=batch_size,

class_mode='binary')

validation_generator = test_datagen.flow_from_directory(

'data/validation',

target_size=(256, 256),

batch_size=batch_size,

class_mode='binary')

print(train_generator.class_indices)

# 学習

history = model.fit_generator(train_generator,

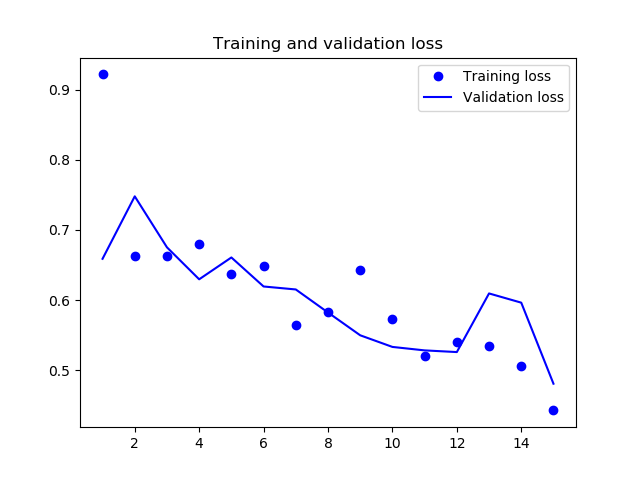

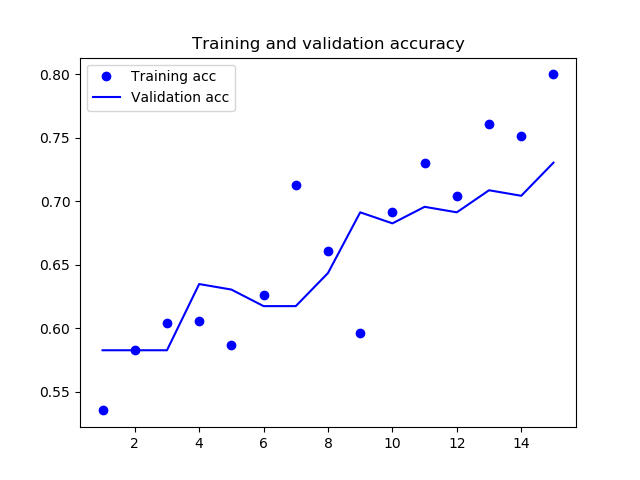

steps_per_epoch=10,

epochs=15,

validation_data=validation_generator,

validation_steps=10)

# model.save('./data/model-mickey.h5')

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(1, len(acc) + 1)

print(acc);

print(val_acc);

print(loss);

print(val_loss);

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.savefig("figure-acc.png")

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.savefig("figure-loss.png")

# evaluation

paths = glob.glob("./data/evaluation/*.jpg")

for path in paths:

img = Image.open(path).convert('RGB')

img = img.resize((256, 256))

x = np.array(img)

x = x / 255.

x = x[None, ...]

pred_prob = model.predict(x, batch_size=1, verbose=0)

# 2値分類なので[0,1]の確率を出力する。0.5以上なら1とする。

pred = np.round(pred_prob, 0)

print("---{0}---".format(path))

print(pred_prob)

print("{0}の予測結果は{1}です.".format(path, pred))
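The evaluation loop prints a rounded number rather than a class name. As a small sketch, the helper below turns the sigmoid output into the folder name using the class_indices mapping printed by the training script. The function name label_from_prob and the explicit threshold parameter are illustrative assumptions. An explicit comparison also avoids the rounding question: Python's round and np.round both round halves to the nearest even value, so exactly 0.5 becomes 0.

```python
def label_from_prob(prob, class_indices, threshold=0.5):
    """Map a sigmoid output to the class name given Keras-style class_indices."""
    index_to_name = {index: name for name, index in class_indices.items()}
    return index_to_name[1 if prob >= threshold else 0]


# Mapping as printed by train_generator.class_indices in this article.
class_indices = {'new_micky_2': 0, 'pre_micky_2': 1}

print(label_from_prob(0.01404036, class_indices))  # new_micky_2
print(label_from_prob(0.89546007, class_indices))  # pre_micky_2
print(round(0.5))  # 0, because halves round to the nearest even value
```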

Test results

Run the training code.

One run took about 10 minutes.

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d (Conv2D)              (None, 254, 254, 16)      160
_________________________________________________________________
conv2d_1 (Conv2D)            (None, 252, 252, 16)      2320
_________________________________________________________________
max_pooling2d (MaxPooling2D) (None, 126, 126, 16)      0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 124, 124, 32)      4640
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 62, 62, 32)        0
_________________________________________________________________
flatten (Flatten)            (None, 123008)            0
_________________________________________________________________
dense (Dense)                (None, 512)               62980608
_________________________________________________________________
dense_1 (Dense)              (None, 256)               131328
_________________________________________________________________
dense_2 (Dense)              (None, 1)                 257
=================================================================
Total params: 63,119,313
Trainable params: 63,119,313
Non-trainable params: 0
_________________________________________________________________

Found 719 images belonging to 2 classes.

Found 452 images belonging to 2 classes.

{'new_micky_2': 0, 'pre_micky_2': 1}

Epoch 1/15

10/10 [==============================] - 22s 2s/step - loss: 0.9005 - acc: 0.5352 - val_loss: 0.6590 - val_acc: 0.5826

Epoch 2/15

10/10 [==============================] - 21s 2s/step - loss: 0.6633 - acc: 0.5826 - val_loss: 0.7479 - val_acc: 0.5826

Epoch 3/15

10/10 [==============================] - 19s 2s/step - loss: 0.6628 - acc: 0.6043 - val_loss: 0.6753 - val_acc: 0.5826

Epoch 4/15

10/10 [==============================] - 22s 2s/step - loss: 0.6604 - acc: 0.6056 - val_loss: 0.6298 - val_acc: 0.6348

Epoch 5/15

10/10 [==============================] - 21s 2s/step - loss: 0.6367 - acc: 0.5870 - val_loss: 0.6609 - val_acc: 0.6304

Epoch 6/15

10/10 [==============================] - 18s 2s/step - loss: 0.6484 - acc: 0.6261 - val_loss: 0.6195 - val_acc: 0.6174

Epoch 7/15

10/10 [==============================] - 20s 2s/step - loss: 0.5648 - acc: 0.7130 - val_loss: 0.6153 - val_acc: 0.6174

Epoch 8/15

10/10 [==============================] - 22s 2s/step - loss: 0.5836 - acc: 0.6609 - val_loss: 0.5825 - val_acc: 0.6435

Epoch 9/15

10/10 [==============================] - 20s 2s/step - loss: 0.6334 - acc: 0.5962 - val_loss: 0.5500 - val_acc: 0.6913

Epoch 10/15

10/10 [==============================] - 21s 2s/step - loss: 0.5732 - acc: 0.6913 - val_loss: 0.5334 - val_acc: 0.6826

Epoch 11/15

10/10 [==============================] - 21s 2s/step - loss: 0.5201 - acc: 0.7304 - val_loss: 0.5285 - val_acc: 0.6957

Epoch 12/15

10/10 [==============================] - 19s 2s/step - loss: 0.5405 - acc: 0.7043 - val_loss: 0.5261 - val_acc: 0.6913

Epoch 13/15

10/10 [==============================] - 19s 2s/step - loss: 0.5479 - acc: 0.7606 - val_loss: 0.6096 - val_acc: 0.7087

Epoch 14/15

10/10 [==============================] - 20s 2s/step - loss: 0.5019 - acc: 0.7512 - val_loss: 0.5965 - val_acc: 0.7043

Epoch 15/15

10/10 [==============================] - 21s 2s/step - loss: 0.4430 - acc: 0.8000 - val_loss: 0.4810 - val_acc: 0.7304
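The parameter counts in the model summary above can be reproduced by hand, which is a useful check on where the 63 million parameters come from (almost all of them sit in the first Dense layer). The helper names below are hypothetical. One observation from the arithmetic: the first layer's 160 parameters match a single input channel, whereas input_shape=(256, 256, 3) as written in the training code would give 448. This suggests the logged run may have used a one-channel input, although the article does not say so.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Weights plus one bias per filter."""
    return (kernel_h * kernel_w * in_channels + 1) * filters


def dense_params(in_units, out_units):
    """Weights plus one bias per output unit."""
    return (in_units + 1) * out_units


layer_params = [
    conv2d_params(3, 3, 1, 16),          # conv2d: 160 (would be 448 with 3 channels)
    conv2d_params(3, 3, 16, 16),         # conv2d_1: 2320
    conv2d_params(3, 3, 16, 32),         # conv2d_2: 4640
    dense_params(62 * 62 * 32, 512),     # dense: 62980608
    dense_params(512, 256),              # dense_1: 131328
    dense_params(256, 1),                # dense_2: 257
]
print(sum(layer_params))  # 63119313
```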

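[Figure: Training and validation accuracy]

At this point the validation accuracy is around 70% (at least more accurate than my own eyes).

For comparison, a Disney fan I asked scored an accuracy of 0.77. An expert's eye is not to be underestimated, and at the same time

I am struck that we now live in an era where a model like this can be built in such a short time.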
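It helps to check how many images each epoch actually uses. With batch_size=23, steps_per_epoch=10 and validation_steps=10, one epoch draws at most 230 training images and 230 validation images, out of the 719 and 452 reported in the log. The sketch below only works through that arithmetic; the variable names are illustrative.

```python
import math

batch_size = 23
steps_per_epoch = 10
validation_steps = 10
n_train, n_val = 719, 452  # "Found ... images" lines in the log

images_per_epoch = batch_size * steps_per_epoch       # 230
val_images_per_epoch = batch_size * validation_steps  # 230

# Steps needed to see every image once per epoch.
full_train_steps = math.ceil(n_train / batch_size)    # 32
full_val_steps = math.ceil(n_val / batch_size)        # 20

# A val_acc of 0.5826 in the log corresponds to 134 correct out of 230.
print(round(134 / val_images_per_epoch, 4))  # 0.5826
```

Because validation accuracy is measured on only 230 images per epoch, a single misclassified image moves val_acc by about 0.0043.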

Final evaluation results

Labels

0 : 'new_micky_2'

1 : 'pre_micky_2'

If you have an eye for this, please try predicting by sight.

---./data/evaluation/1.jpg---

[[0.01404036]]

Prediction for ./data/evaluation/1.jpg: [[0.]]

(Correct answer: 0 new)

---./data/evaluation/2.jpg---

[[0.0665699]]

Prediction for ./data/evaluation/2.jpg: [[0.]]

(Correct answer: 0 new)

---./data/evaluation/3.jpg---

[[0.07572532]]

Prediction for ./data/evaluation/3.jpg: [[0.]]

(Correct answer: 0 new)

---./data/evaluation/4.jpg---

[[0.89546007]]

Prediction for ./data/evaluation/4.jpg: [[1.]]

(Correct answer: 1 pre)

---./data/evaluation/5.jpg---

[[0.8607153]]

Prediction for ./data/evaluation/5.jpg: [[1.]]

(Correct answer: 1 pre)

---./data/evaluation/6.jpg---

[[0.85950744]]

Prediction for ./data/evaluation/6.jpg: [[1.]]

(Correct answer: 1 pre)

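The model got all 6 of the 6 evaluation images right.

The test images may simply have been too easy for the model.

I, however, still cannot tell before from after.

If you have suggestions on improving accuracy, on evaluation metrics, or on anything else,

I would appreciate your guidance.

(Gaining a deeper understanding of CNNs and ImageDataGenerator is left as future work.)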

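Addendum (2019/4/14)

I realized that the model is probably overfitting. I think the current approach generalizes poorly.

・How the data is fed to the model

・The training procedure

At the very least, these two points need rethinking.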
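One commonly used adjustment to the training procedure is early stopping: stop when the validation loss has not improved for a given number of epochs. The article does not use it, so the following is only a sketch of the idea in plain Python, applied to the val_loss values from the 15-epoch log above. The function name early_stopping_epoch is hypothetical. In Keras itself, the EarlyStopping callback with its monitor, patience and restore_best_weights arguments serves this purpose.

```python
def early_stopping_epoch(val_losses, patience):
    """Return (epoch where training would stop, epoch with the best val_loss)."""
    best = float("inf")
    best_epoch = 0
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_losses), best_epoch


# val_loss per epoch, copied from the 15-epoch training log.
val_losses = [0.6590, 0.7479, 0.6753, 0.6298, 0.6609, 0.6195, 0.6153, 0.5825,
              0.5500, 0.5334, 0.5285, 0.5261, 0.6096, 0.5965, 0.4810]

print(early_stopping_epoch(val_losses, patience=2))  # (3, 1)
print(early_stopping_epoch(val_losses, patience=3))  # (15, 15)
```

With validation loss this noisy, a patience of 2 stops far too early, so the patience value would need tuning against the fluctuations seen in the log.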

Troubleshooting coding errors

Exception: A target array with shape (xxx, x) was passed for an output of shape (None, 1)

This occurred when class_mode did not match the model's output layer. Setting class_mode='binary' resolved it.
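The mismatch comes from the label shape. With class_mode='binary' the generator yields one number per image, which fits a Dense(1) sigmoid output, while class_mode='categorical' yields a one-hot vector per image, which needs one output unit per class. The sketch below imitates the two encodings in plain Python to make the shapes visible; encode_labels is a hypothetical helper, not a Keras function.

```python
def encode_labels(class_ids, class_mode, num_classes=2):
    """Imitate the label layout produced for each class_mode."""
    if class_mode == 'binary':
        # Shape (batch,): matches a single sigmoid output unit.
        return [float(c) for c in class_ids]
    if class_mode == 'categorical':
        # Shape (batch, num_classes): one-hot, needs num_classes output units.
        return [[1.0 if i == c else 0.0 for i in range(num_classes)]
                for c in class_ids]
    raise ValueError("unsupported class_mode: {0}".format(class_mode))


print(encode_labels([0, 1, 1], 'binary'))       # [0.0, 1.0, 1.0]
print(encode_labels([0, 1, 1], 'categorical'))  # [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
```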

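ImportError: cannot import name 'models' from 'tensorflow'

This occurred when I used an outdated Keras import style. Use the current interface.

In my case the import statement was the problem; the training code above imports models as follows.

from tensorflow.python.keras import models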

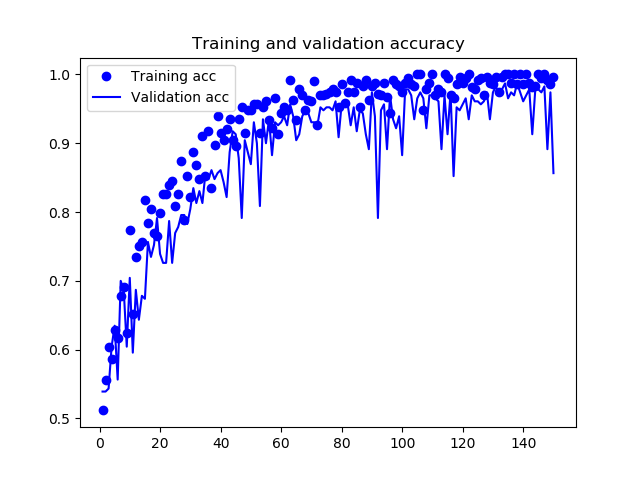

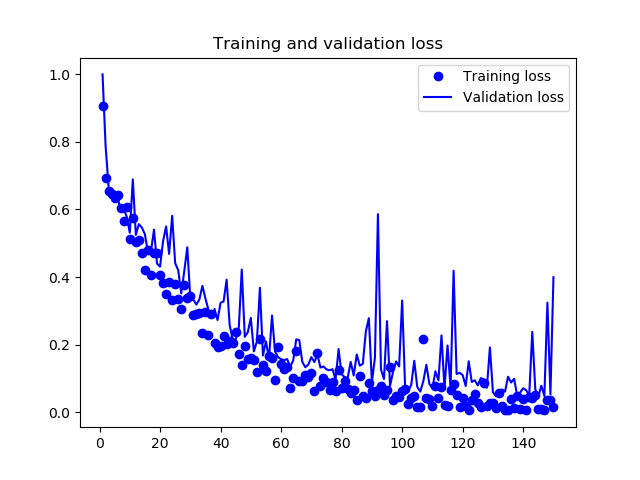

Addendum (2019/4/4)

I increased the number of epochs from 15 to 150 and re-ran the evaluation.

Epoch 1/150

10/10 [==============================] - 21s 2s/step - loss: 0.8748 - acc: 0.5117 - val_loss: 0.9994 - val_acc: 0.5391

Epoch 2/150

10/10 [==============================] - 19s 2s/step - loss: 0.6923 - acc: 0.5565 - val_loss: 0.7888 - val_acc: 0.5391

Epoch 3/150

10/10 [==============================] - 19s 2s/step - loss: 0.6538 - acc: 0.6043 - val_loss: 0.6662 - val_acc: 0.5435

Epoch 4/150

10/10 [==============================] - 20s 2s/step - loss: 0.6446 - acc: 0.5870 - val_loss: 0.6353 - val_acc: 0.6043

Epoch 5/150

10/10 [==============================] - 18s 2s/step - loss: 0.6443 - acc: 0.6291 - val_loss: 0.6254 - val_acc: 0.6348

Epoch 6/150

10/10 [==============================] - 17s 2s/step - loss: 0.6435 - acc: 0.6174 - val_loss: 0.6368 - val_acc: 0.5565

Epoch 7/150

10/10 [==============================] - 19s 2s/step - loss: 0.6046 - acc: 0.6783 - val_loss: 0.5926 - val_acc: 0.7000

Epoch 8/150

10/10 [==============================] - 19s 2s/step - loss: 0.5660 - acc: 0.6913 - val_loss: 0.6009 - val_acc: 0.6870

Epoch 9/150

10/10 [==============================] - 17s 2s/step - loss: 0.6128 - acc: 0.6244 - val_loss: 0.5784 - val_acc: 0.6043

Epoch 10/150

10/10 [==============================] - 19s 2s/step - loss: 0.5116 - acc:

---

(omitted)

---

Epoch 50/150

10/10 [==============================] - 19s 2s/step - loss: 0.1624 - acc: 0.9478 - val_loss: 0.2794 - val_acc: 0.8696

Epoch 51/150

10/10 [==============================] - 684s 68s/step - loss: 0.1539 - acc: 0.9565 - val_loss: 0.1804 - val_acc: 0.9304

Epoch 52/150

10/10 [==============================] - 24s 2s/step - loss: 0.1200 - acc: 0.9565 - val_loss: 0.2099 - val_acc: 0.9000

Epoch 53/150

10/10 [==============================] - 18s 2s/step - loss: 0.2662 - acc: 0.9155 - val_loss: 0.3686 - val_acc: 0.8087

Epoch 54/150

10/10 [==============================] - 17s 2s/step - loss: 0.1405 - acc: 0.9522 - val_loss: 0.1684 - val_acc: 0.9348

Epoch 55/150

10/10 [==============================] - 19s 2s/step - loss: 0.1212 - acc: 0.9609 - val_loss: 0.2098 - val_acc: 0.9000

Epoch 56/150

10/10 [==============================] - 18s 2s/step - loss: 0.1852 - acc: 0.9343 - val_loss: 0.1590 - val_acc: 0.9348

Epoch 57/150

10/10 [==============================] - 17s 2s/step - loss: 0.1600 - acc: 0.9217 - val_loss: 0.2864 - val_acc: 0.8826

Epoch 58/150

10/10 [==============================] - 19s 2s/step - loss: 0.0964 - acc: 0.9652 - val_loss: 0.1801 - val_acc: 0.9304

Epoch 59/150

10/10 [==============================] - 18s 2s/step - loss: 0.1942 - acc: 0.9130 - val_loss: 0.1634 - val_acc: 0.9261

---

(omitted)

---

Epoch 90/150

10/10 [==============================] - 19s 2s/step - loss: 0.0646 - acc: 0.9826 - val_loss: 0.0888 - val_acc: 0.9739

Epoch 91/150

10/10 [==============================] - 19s 2s/step - loss: 0.0477 - acc: 0.9870 - val_loss: 0.1615 - val_acc: 0.9391

Epoch 92/150

10/10 [==============================] - 17s 2s/step - loss: 0.0634 - acc: 0.9718 - val_loss: 0.5863 - val_acc: 0.7913

Epoch 93/150

10/10 [==============================] - 18s 2s/step - loss: 0.0783 - acc: 0.9696 - val_loss: 0.1271 - val_acc: 0.9478

Epoch 94/150

10/10 [==============================] - 19s 2s/step - loss: 0.0525 - acc: 0.9870 - val_loss: 0.0987 - val_acc: 0.9565

Epoch 95/150

10/10 [==============================] - 18s 2s/step - loss: 0.0629 - acc: 0.9671 - val_loss: 0.2698 - val_acc: 0.8913

Epoch 96/150

10/10 [==============================] - 18s 2s/step - loss: 0.1338 - acc: 0.9435 - val_loss: 0.0786 - val_acc: 0.9696

Epoch 97/150

10/10 [==============================] - 19s 2s/step - loss: 0.0356 - acc: 0.9913 - val_loss: 0.1204 - val_acc: 0.9348

Epoch 98/150

10/10 [==============================] - 18s 2s/step - loss: 0.0477 - acc: 0.9859 - val_loss: 0.1508 - val_acc: 0.9217

Epoch 99/150

10/10 [==============================] - 17s 2s/step - loss: 0.0461 - acc: 0.9826 - val_loss: 0.1359 - val_acc: 0.9391

Epoch 100/150

10/10 [==============================] - 20s 2s/step - loss: 0.0621 - acc: 0.9739 - val_loss: 0.3308 - val_acc: 0.8826

---

(omitted)

---

Epoch 145/150

10/10 [==============================] - 19s 2s/step - loss: 0.0113 - acc: 1.0000 - val_loss: 0.0474 - val_acc: 0.9783

Epoch 146/150

10/10 [==============================] - 19s 2s/step - loss: 0.0091 - acc: 0.9953 - val_loss: 0.0789 - val_acc: 0.9739

Epoch 147/150

10/10 [==============================] - 16s 2s/step - loss: 0.0075 - acc: 1.0000 - val_loss: 0.0487 - val_acc: 0.9826

Epoch 148/150

10/10 [==============================] - 19s 2s/step - loss: 0.0381 - acc: 0.9913 - val_loss: 0.3247 - val_acc: 0.8913

Epoch 149/150

10/10 [==============================] - 18s 2s/step - loss: 0.0348 - acc: 0.9859 - val_loss: 0.0532 - val_acc: 0.9739

Epoch 150/150

10/10 [==============================] - 17s 2s/step - loss: 0.0148 - acc: 0.9957 - val_loss: 0.3998 - val_acc: 0.8565

---./data/evaluation/1.jpg---

[[1.1617852e-11]]

Prediction for ./data/evaluation/1.jpg: [[0.]]

---./data/evaluation/2.jpg---

[[1.0098709e-08]]

Prediction for ./data/evaluation/2.jpg: [[0.]]

---./data/evaluation/3.jpg---

[[3.072831e-10]]

Prediction for ./data/evaluation/3.jpg: [[0.]]

---./data/evaluation/4.jpg---

[[0.9999826]]

Prediction for ./data/evaluation/4.jpg: [[1.]]

---./data/evaluation/5.jpg---

[[0.9847676]]

Prediction for ./data/evaluation/5.jpg: [[1.]]

---./data/evaluation/6.jpg---

[[0.9999534]]

Prediction for ./data/evaluation/6.jpg: [[1.]]

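Accuracy reached 90% at around epoch 50.

However, it is doubtful whether this reflects real generalization performance. To improve generalization,

perhaps the input data needs denoising, or simply more images?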

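References

[Breaking] Mickey's face has changed to a new face!

Building an image recognition "XX classifier" with TensorFlow

Install TensorFlow with pip

haarcascades

Augmenting a small set of color images to increase training data and improve accuracy!?

A CNN for two-class classification using Keras

[Keras/TensorFlow] Training and prediction on your own data with Keras

I tried a CNN with Keras

[Intro to NumPy: np.round] How to round array elements, and the question of whether 0.5 becomes 0 or 1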