AWS WAF logs

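AWS WAF outputs logs like the following (the sample below is a WAF log as displayed in Datadog).

Logging is off by default, and at the time of writing the officially supported delivery method is Kinesis Firehose.

Let's build infrastructure that makes these logs viewable in both Athena and Datadog.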

```json
{
  "id": "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz1234567890",
  "content": {
    "timestamp": "2021-05-15T00:00:00.000Z",
    "tags": [
      "aws_account:123456789012",
      "env:production",
      "region:ap-northeast-1",
      "service:waf",
      "source:waf",
      "sourcecategory:aws"
    ],
    "service": "waf",
    "attributes": {
      "http": {
        "url_details": {
          "path": "/"
        },
        "method": "POST",
        "request_id": "abcdefghij1234567890"
      },
      "webaclId": "arn:aws:wafv2:ap-northeast-1:1234567890:regional/webacl/Sample/abcdefghij1234567890",
      "httpSourceId": "123456789012:abcdefghij1234567890:production",
      "httpSourceName": "APIGW",
      "system": {
        "action": "ALLOW"
      },
      "network": {
        "client": {
          "ip": "100.100.100.100"
        }
      },
      "httpRequest": {
        "country": "JP",
        "httpVersion": "HTTP/1.1",
        "args": "",
        "headers": [
          {
            "name": "X-Forwarded-For",
            "value": "100.100.100.100"
          },
          {
            "name": "X-Forwarded-Proto",
            "value": "https"
          },
          {
            "name": "X-Forwarded-Port",
            "value": "443"
          },
          ...(omitted)
        ]
      },
      "ruleGroupList": [
        {
          "ruleGroupId": "AWS#AWSManagedRulesCommonRuleSet"
        }
      ],
      "terminatingRuleId": "Default_Action",
      "terminatingRuleType": "REGULAR",
      "formatVersion": 1,
      "timestamp": 1621036800
    }
  }
}
```

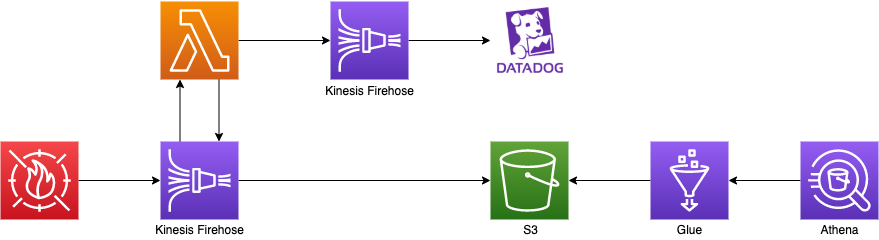

Architecture diagram

The architecture is as follows.

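Infrastructure definition

Everything is defined in Terraform.

The same setup can also be built in the management console; if you are following along that way, map each Terraform setting to its console equivalent.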

S3

First, create the S3 bucket that will store the WAF logs.

```hcl
resource "aws_s3_bucket" "log_bucket" {
  bucket = "xxxxxxxxxxxxxxxxxxxx" // bucket name
  acl    = "private"
}
```

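Glue table

To make the logs queryable from Athena, create a table in Glue.

Logs are stored under paths following this pattern (the pattern itself is defined in the Firehose delivery settings described later):

s3://xxxxxxxxxxxxxxxxxxxx/waf/year=2021/month=05/day=15/hour=00/XXXXXXXXXX.parquet

Partitions are configured with Athena's Partition Projection feature, using this location pattern:

year=${year}/month=${month}/day=${day}/hour=${hour}

Year, month, day, and hour are each a partition key.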

```hcl
resource "aws_glue_catalog_database" "database" {
  name = "${var.service_name}_logs"
}

resource "aws_glue_catalog_table" "waf_catalog_table" {
  database_name = aws_glue_catalog_database.database.name
  name          = "waf"

  parameters = {
    classification = "parquet"

    "projection.enabled" = true

    "projection.year.type"     = "integer"
    "projection.year.digits"   = "4"
    "projection.year.interval" = "1"
    "projection.year.range"    = "2021,2099"

    "projection.month.type"   = "enum"
    "projection.month.values" = "01,02,03,04,05,06,07,08,09,10,11,12"

    "projection.day.type"   = "enum"
    "projection.day.values" = "01,02,03,04,05,06,07,08,09,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31"

    // Firehose's !{timestamp:HH} produces 00-23, so the hour list must start at 00
    "projection.hour.type"   = "enum"
    "projection.hour.values" = "00,01,02,03,04,05,06,07,08,09,10,11,12,13,14,15,16,17,18,19,20,21,22,23"

    // $${...} escapes Terraform interpolation, leaving a literal ${year} etc. for Athena
    "storage.location.template" = "s3://${aws_s3_bucket.log_bucket.bucket}/waf/year=$${year}/month=$${month}/day=$${day}/hour=$${hour}"
  }

  partition_keys {
    name = "year"
    type = "string"
  }

  partition_keys {
    name = "month"
    type = "string"
  }

  partition_keys {
    name = "day"
    type = "string"
  }

  partition_keys {
    name = "hour"
    type = "string"
  }

  storage_descriptor {
    // aws_s3_bucket exposes the bucket name as .bucket (there is no .name attribute)
    location      = "s3://${aws_s3_bucket.log_bucket.bucket}/waf/"
    input_format  = "org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat"
    output_format = "org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat"

    ser_de_info {
      name                  = "waf"
      serialization_library = "org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe"

      parameters = {
        "serialization.format" = 1
      }
    }

    columns {
      name = "timestamp"
      type = "bigint"
    }

    columns {
      name = "formatversion"
      type = "int"
    }

    columns {
      name = "webaclid"
      type = "string"
    }

    columns {
      name = "terminatingruleid"
      type = "string"
    }

    columns {
      name = "terminatingruletype"
      type = "string"
    }

    columns {
      name = "action"
      type = "string"
    }

    columns {
      name = "terminatingrulematchdetails"
      type = "array<struct<conditiontype:string,location:string,matcheddata:array<string>>>"
    }

    columns {
      name = "httpsourcename"
      type = "string"
    }

    columns {
      name = "httpsourceid"
      type = "string"
    }

    columns {
      name = "rulegrouplist"
      type = "array<struct<rulegroupid:string,terminatingrule:struct<ruleid:string,action:string>,nonterminatingmatchingrules:array<struct<action:string,ruleid:string>>,excludedrules:array<struct<exclusiontype:string,ruleid:string>>>>"
    }

    columns {
      name = "ratebasedrulelist"
      type = "array<struct<ratebasedruleid:string,limitkey:string,maxrateallowed:int>>"
    }

    columns {
      name = "nonterminatingmatchingrules"
      type = "array<struct<ruleid:string,action:string>>"
    }

    columns {
      name = "httprequest"
      type = "struct<clientIp:string,country:string,headers:array<struct<name:string,value:string>>,uri:string,args:string,httpVersion:string,httpMethod:string,requestId:string>"
    }
  }
}
```
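Partition Projection only finds data whose partition values appear in the configured lists, so each `enum` list has to match exactly what Firehose writes: `MM` gives 01 to 12, `dd` gives 01 to 31, and `HH` gives 00 to 23 (there is no hour 24). As a sketch, a hypothetical helper `zeroPaddedValues` (not part of the original setup) can generate these lists instead of typing them by hand:

```javascript
// Hypothetical helper: builds a comma-separated list of zero-padded integers
// from `from` to `to` (inclusive), in the format used by the
// projection.<key>.values parameters of the Glue table.
function zeroPaddedValues(from, to, digits = 2) {
  const values = [];
  for (let n = from; n <= to; n++) {
    values.push(String(n).padStart(digits, '0'));
  }
  return values.join(',');
}

// Lists matching Firehose's !{timestamp:MM}, !{timestamp:dd}, !{timestamp:HH}
const projectionValues = {
  month: zeroPaddedValues(1, 12),
  day: zeroPaddedValues(1, 31),
  hour: zeroPaddedValues(0, 23),
};

console.log(projectionValues.hour);
```

Paste the printed strings into the corresponding `projection.*.values` parameters.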

Kinesis Firehose

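The Firehose delivery stream name must start with aws-waf-logs-.

We define two Firehose delivery streams: one that delivers to S3 and one that delivers to Datadog.

The S3 delivery stream invokes a Lambda function as a record processor, and that Lambda also forwards the records to the Datadog delivery stream. (The Lambda implementation is described later.)

How to supply variable values such as the KMS key is up to you.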
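Because WAF only accepts delivery streams whose names start with `aws-waf-logs-`, a quick check before `terraform apply` can catch a typo. The sketch below is a hypothetical helper; besides the prefix rule from the text, it assumes Firehose's general stream-name constraint of 1 to 64 characters from `[a-zA-Z0-9_.-]`.

```javascript
// Hypothetical pre-flight check for a WAF log delivery stream name.
// Assumption: Firehose stream names are 1-64 characters of [a-zA-Z0-9_.-].
const WAF_LOG_PREFIX = 'aws-waf-logs-';
const FIREHOSE_NAME_PATTERN = /^[a-zA-Z0-9_.-]{1,64}$/;

function isValidWafLogStreamName(name) {
  return name.startsWith(WAF_LOG_PREFIX) && FIREHOSE_NAME_PATTERN.test(name);
}

// A stream name used in this article, and one missing the required prefix
console.log(isValidWafLogStreamName('aws-waf-logs-datadog-forwarder'));
console.log(isValidWafLogStreamName('waf-logs-datadog-forwarder'));
```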

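Firehose for S3 delivery

The key point is the S3 prefix under which the logs are written.

This prefix produces the partition keys of the Glue table defined above, so define it as follows:

```hcl
prefix = "waf/year=!{timestamp:yyyy}/month=!{timestamp:MM}/day=!{timestamp:dd}/hour=!{timestamp:HH}/"
```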

```hcl
resource "aws_iam_role" "firehose_role" {
  name               = "${var.service_name}-Firehose-Role"
  assume_role_policy = data.aws_iam_policy_document.firehose_assume_role.json
}

data "aws_iam_policy_document" "firehose_assume_role" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["firehose.amazonaws.com"]
    }

    condition {
      test     = "StringEquals"
      variable = "sts:ExternalId"
      values = [
        var.aws_account_id
      ]
    }
  }
}

resource "aws_iam_policy" "firehose_policy" {
  name = "${var.service_name}-Firehose-Policy"

  // aws_s3_bucket exposes the bucket name as .bucket (there is no .name attribute)
  policy = <<POLICY
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "",
      "Effect": "Allow",
      "Action": [
        "glue:GetTable",
        "glue:GetTableVersion",
        "glue:GetTableVersions"
      ],
      "Resource": "*"
    },
    {
      "Sid": "",
      "Effect": "Allow",
      "Action": [
        "s3:AbortMultipartUpload",
        "s3:GetBucketLocation",
        "s3:GetObject",
        "s3:ListBucket",
        "s3:ListBucketMultipartUploads",
        "s3:PutObject"
      ],
      "Resource": [
        "arn:aws:s3:::${aws_s3_bucket.log_bucket.bucket}",
        "arn:aws:s3:::${aws_s3_bucket.log_bucket.bucket}/*",
        "arn:aws:s3:::%FIREHOSE_BUCKET_NAME%",
        "arn:aws:s3:::%FIREHOSE_BUCKET_NAME%/*"
      ]
    },
    {
      "Sid": "",
      "Effect": "Allow",
      "Action": [
        "lambda:InvokeFunction",
        "lambda:GetFunctionConfiguration"
      ],
      "Resource": "arn:aws:lambda:ap-northeast-1:${var.aws_account_id}:function:*:*"
    },
    {
      "Sid": "",
      "Effect": "Allow",
      "Action": [
        "logs:PutLogEvents"
      ],
      "Resource": [
        "*"
      ]
    },
    {
      "Sid": "",
      "Effect": "Allow",
      "Action": [
        "kinesis:DescribeStream",
        "kinesis:GetShardIterator",
        "kinesis:GetRecords",
        "kinesis:ListShards"
      ],
      "Resource": "arn:aws:kinesis:ap-northeast-1:${var.aws_account_id}:stream/%FIREHOSE_STREAM_NAME%"
    },
    {
      "Effect": "Allow",
      "Action": [
        "kms:Decrypt"
      ],
      "Resource": [
        "arn:aws:kms:ap-northeast-1:${var.aws_account_id}:key/%SSE_KEY_ID%"
      ],
      "Condition": {
        "StringEquals": {
          "kms:ViaService": "kinesis.%REGION_NAME%.amazonaws.com"
        },
        "StringLike": {
          "kms:EncryptionContext:aws:kinesis:arn": "arn:aws:kinesis:%REGION_NAME%:${var.aws_account_id}:stream/%FIREHOSE_STREAM_NAME%"
        }
      }
    }
  ]
}
POLICY
}

resource "aws_iam_role_policy_attachment" "firehose_policy_0" {
  policy_arn = aws_iam_policy.firehose_policy.arn
  role       = aws_iam_role.firehose_role.name
}

resource "aws_kinesis_firehose_delivery_stream" "waf_firehose" {
  destination = "extended_s3"
  name        = "aws-waf-logs-${var.service_name}"

  extended_s3_configuration {
    role_arn            = aws_iam_role.firehose_role.arn
    bucket_arn          = aws_s3_bucket.log_bucket.arn
    prefix              = "waf/year=!{timestamp:yyyy}/month=!{timestamp:MM}/day=!{timestamp:dd}/hour=!{timestamp:HH}/"
    error_output_prefix = "waf/errors/!{firehose:random-string}/!{firehose:error-output-type}/!{timestamp:yyyy-MM-dd}/"
    // Parquet output is compressed by the serializer, so S3-level compression stays off
    compression_format = "UNCOMPRESSED"
    buffer_interval    = 300
    // Record format conversion requires a buffer size of at least 64 MB
    buffer_size = 128
    kms_key_arn = var.kms_key_arn

    processing_configuration {
      enabled = true

      processors {
        type = "Lambda"

        parameters {
          parameter_name  = "LambdaArn"
          parameter_value = "arn:aws:lambda:ap-northeast-1:${var.aws_account_id}:function:${var.waf_firehose_lambda_name}:$LATEST"
        }
      }
    }

    cloudwatch_logging_options {
      enabled         = true
      log_group_name  = "/aws/kinesisfirehose/aws-waf-logs-${var.service_name}"
      log_stream_name = "S3Delivery"
    }

    // Convert incoming JSON to Parquet using the Glue table schema
    data_format_conversion_configuration {
      input_format_configuration {
        deserializer {
          open_x_json_ser_de {}
        }
      }

      output_format_configuration {
        serializer {
          parquet_ser_de {}
        }
      }

      schema_configuration {
        database_name = aws_glue_catalog_database.database.name
        table_name    = aws_glue_catalog_table.waf_catalog_table.name
        role_arn      = aws_iam_role.firehose_role.arn
      }
    }
  }

  server_side_encryption {
    enabled  = true
    key_type = "AWS_OWNED_CMK"
  }
}
```
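In the `prefix` above, Firehose evaluates each `!{timestamp:...}` expression in UTC, against the arrival time of the oldest record in the S3 object being written. A log received at 09:30 JST therefore lands under `hour=00`. The hypothetical function `wafPrefixFor` below mimics that expansion for a given time; it is an illustration, not Firehose's own code.

```javascript
// Mimics how the prefix
//   waf/year=!{timestamp:yyyy}/month=!{timestamp:MM}/day=!{timestamp:dd}/hour=!{timestamp:HH}/
// expands for a given time. All fields are taken in UTC.
function wafPrefixFor(date) {
  const pad = (n) => String(n).padStart(2, '0');
  return [
    'waf',
    `year=${date.getUTCFullYear()}`,
    `month=${pad(date.getUTCMonth() + 1)}`,
    `day=${pad(date.getUTCDate())}`,
    `hour=${pad(date.getUTCHours())}`,
  ].join('/') + '/';
}

// 2021-05-15 09:30 JST (UTC+9) is 2021-05-15 00:30 UTC
console.log(wafPrefixFor(new Date('2021-05-15T09:30:00+09:00')));
// → waf/year=2021/month=05/day=15/hour=00/
```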

Firehose for Datadog delivery

To send logs to Datadog, use Firehose's HTTP endpoint delivery.

The Datadog API key is passed in through a variable.

```hcl
// Resource labels are prefixed with datadog_ so they don't collide with the
// S3 delivery stream's role and policy when both live in the same configuration
resource "aws_iam_role" "datadog_firehose_role" {
  name               = "DatadogForwarder-Firehose-Role"
  assume_role_policy = data.aws_iam_policy_document.datadog_firehose_assume_role.json
}

data "aws_iam_policy_document" "datadog_firehose_assume_role" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["firehose.amazonaws.com"]
    }

    condition {
      test     = "StringEquals"
      variable = "sts:ExternalId"
      values = [
        var.aws_account_id
      ]
    }
  }
}

resource "aws_iam_policy" "datadog_firehose_policy" {
  name = "DatadogForwarder-Firehose-Policy"

  policy = <<POLICY
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "",
      "Effect": "Allow",
      "Action": [
        "glue:GetTable",
        "glue:GetTableVersion",
        "glue:GetTableVersions"
      ],
      "Resource": "*"
    },
    {
      "Sid": "",
      "Effect": "Allow",
      "Action": [
        "s3:AbortMultipartUpload",
        "s3:GetBucketLocation",
        "s3:GetObject",
        "s3:ListBucket",
        "s3:ListBucketMultipartUploads",
        "s3:PutObject"
      ],
      "Resource": [
        "arn:aws:s3:::${aws_s3_bucket.log_bucket.bucket}",
        "arn:aws:s3:::${aws_s3_bucket.log_bucket.bucket}/*",
        "arn:aws:s3:::%FIREHOSE_BUCKET_NAME%",
        "arn:aws:s3:::%FIREHOSE_BUCKET_NAME%/*"
      ]
    },
    {
      "Sid": "",
      "Effect": "Allow",
      "Action": [
        "lambda:InvokeFunction",
        "lambda:GetFunctionConfiguration"
      ],
      "Resource": "arn:aws:lambda:ap-northeast-1:${var.aws_account_id}:function:*:*"
    },
    {
      "Sid": "",
      "Effect": "Allow",
      "Action": [
        "logs:PutLogEvents"
      ],
      "Resource": [
        "*"
      ]
    },
    {
      "Sid": "",
      "Effect": "Allow",
      "Action": [
        "kinesis:DescribeStream",
        "kinesis:GetShardIterator",
        "kinesis:GetRecords",
        "kinesis:ListShards"
      ],
      "Resource": "arn:aws:kinesis:ap-northeast-1:${var.aws_account_id}:stream/%FIREHOSE_STREAM_NAME%"
    },
    {
      "Effect": "Allow",
      "Action": [
        "kms:Decrypt"
      ],
      "Resource": [
        "arn:aws:kms:ap-northeast-1:${var.aws_account_id}:key/%SSE_KEY_ID%"
      ],
      "Condition": {
        "StringEquals": {
          "kms:ViaService": "kinesis.%REGION_NAME%.amazonaws.com"
        },
        "StringLike": {
          "kms:EncryptionContext:aws:kinesis:arn": "arn:aws:kinesis:%REGION_NAME%:${var.aws_account_id}:stream/%FIREHOSE_STREAM_NAME%"
        }
      }
    }
  ]
}
POLICY
}

resource "aws_iam_role_policy_attachment" "datadog_firehose_policy_0" {
  policy_arn = aws_iam_policy.datadog_firehose_policy.arn
  role       = aws_iam_role.datadog_firehose_role.name
}

resource "aws_kinesis_firehose_delivery_stream" "waf_datadog_forwarder" {
  name        = "aws-waf-logs-datadog-forwarder"
  destination = "http_endpoint"

  http_endpoint_configuration {
    name               = "Datadog"
    url                = "https://aws-kinesis-http-intake.logs.datadoghq.com/v1/input"
    access_key         = var.datadog_api_key_value
    role_arn           = aws_iam_role.datadog_firehose_role.arn
    buffering_interval = 60
    buffering_size     = 4
    retry_duration     = 60

    processing_configuration {
      enabled = false
    }

    request_configuration {
      content_encoding = "GZIP"

      common_attributes {
        name  = "env"
        value = var.stage
      }
    }

    // Only records that fail to reach Datadog are backed up to S3
    s3_backup_mode = "FailedDataOnly"
  }

  s3_configuration {
    bucket_arn         = aws_s3_bucket.log_bucket.arn
    prefix             = "/firehose/aws-waf-logs-datadog-forwarder"
    compression_format = "GZIP"
    kms_key_arn        = var.kms_key_arn
    role_arn           = aws_iam_role.datadog_firehose_role.arn
  }

  server_side_encryption {
    enabled  = true
    key_type = "AWS_OWNED_CMK"
  }
}
```
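With HTTP endpoint delivery, Firehose POSTs each batch to the configured URL and sends `access_key` in a request header, which is how the Datadog API key reaches Datadog; `common_attributes` such as `env` travel in another header. The sketch below builds a request of that shape for local experiments (for example, against a mock endpoint). The header and body field names follow my understanding of the Firehose HTTP endpoint delivery request format and should be checked against the AWS documentation; `buildFirehoseHttpRequest` itself is hypothetical.

```javascript
const zlib = require('zlib');
const crypto = require('crypto');

// Hypothetical helper: builds a request shaped like the POST that Firehose's
// HTTP endpoint delivery sends (verify field names against the AWS docs).
function buildFirehoseHttpRequest(rawRecords, accessKey, commonAttributes) {
  const requestId = crypto.randomUUID();
  const payload = {
    requestId,
    timestamp: Date.now(), // milliseconds since the epoch
    // Each record's bytes are base64-encoded inside the JSON body
    records: rawRecords.map((r) => ({ data: Buffer.from(r).toString('base64') })),
  };
  return {
    headers: {
      'Content-Type': 'application/json',
      'Content-Encoding': 'gzip', // content_encoding = "GZIP" in the Terraform
      'X-Amz-Firehose-Request-Id': requestId,
      'X-Amz-Firehose-Access-Key': accessKey, // access_key, i.e. the Datadog API key
      'X-Amz-Firehose-Common-Attributes': JSON.stringify({ commonAttributes }),
    },
    body: zlib.gzipSync(JSON.stringify(payload)),
  };
}

// Dummy key and a one-record batch
const req = buildFirehoseHttpRequest(['{"action":"ALLOW"}'], 'dummy-api-key', { env: 'production' });
console.log(req.headers);
```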

Lambda

A Lambda function lets the S3 delivery stream also send each batch to the Datadog delivery stream.

To the S3 delivery stream itself, the function returns the received records unchanged.

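```javascript
'use strict';

// AWS SDK for JavaScript v2 (preinstalled in the Node.js 16.x and earlier Lambda runtimes)
const AWS = require('aws-sdk');

// Datadog delivery stream defined above
const deliveryStreamName = 'aws-waf-logs-datadog-forwarder';

// PutRecordBatch accepts at most 500 records per call
const MAX_RECORDS_PER_BATCH = 500;

const firehose = new AWS.Firehose({
  region: 'ap-northeast-1',
});

module.exports.forwarder = async (event) => {
  // Firehose hands records to the Lambda base64-encoded; decode them back to raw bytes
  const records = event.records.map((record) => ({
    Data: Buffer.from(record.data, 'base64'),
  }));

  // Await each batch: an async handler that returns early can be frozen
  // before the PutRecordBatch request is actually sent
  for (let i = 0; i < records.length; i += MAX_RECORDS_PER_BATCH) {
    try {
      const result = await firehose.putRecordBatch({
        DeliveryStreamName: deliveryStreamName,
        Records: records.slice(i, i + MAX_RECORDS_PER_BATCH),
      }).promise();
      if (result.FailedPutCount > 0) {
        console.error(`${result.FailedPutCount} record(s) were not forwarded to Datadog`);
      }
    } catch (err) {
      // A Datadog forwarding failure should not block delivery to S3
      console.error(err, err.stack);
    }
  }

  // Return every record unchanged so the S3 delivery stream stores it as-is
  return {
    records: event.records.map((record) => ({
      recordId: record.recordId,
      result: 'Ok',
      data: record.data,
    })),
  };
};
```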

Don't forget to grant the Lambda's execution role permission to write to Firehose:

```json
{
  "Action": [
    "firehose:PutRecordBatch"
  ],
  "Resource": [
    "arn:aws:firehose:ap-northeast-1:123456789012:deliverystream/*"
  ],
  "Effect": "Allow"
}
```
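A `putRecordBatch` call can succeed as a whole while still rejecting individual records: the response's `FailedPutCount` is non-zero, and the rejected entries in `RequestResponses` (index-aligned with the request's `Records`) carry an `ErrorCode`. The Lambda above only logs the count. If you want to retry, a helper like the hypothetical `failedRecords` below (not part of the original setup) picks out the records to resend.

```javascript
// Returns the request records whose matching response entry has an ErrorCode.
// RequestResponses is index-aligned with the Records sent in the request.
function failedRecords(requestRecords, response) {
  if (!response.FailedPutCount) {
    return [];
  }
  return requestRecords.filter((_, i) => {
    const entry = response.RequestResponses[i];
    return Boolean(entry && entry.ErrorCode);
  });
}

// Possible use inside the forwarder (AWS SDK for JavaScript v2), retrying once:
//   const res = await firehose.putRecordBatch({ DeliveryStreamName: deliveryStreamName, Records: batch }).promise();
//   const retry = failedRecords(batch, res);
//   if (retry.length > 0) {
//     await firehose.putRecordBatch({ DeliveryStreamName: deliveryStreamName, Records: retry }).promise();
//   }
```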

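WAF-side configuration

When you enable WAF logging, you only need to select the Firehose delivery stream as the destination. No complicated settings are involved, so this article does not cover them.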
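For reference, enabling logging amounts to associating the web ACL with the delivery stream's ARN (in Terraform, the `aws_wafv2_web_acl_logging_configuration` resource with `resource_arn` and `log_destination_configs`). A minimal sketch of the parameters for the WAFV2 `PutLoggingConfiguration` API, assuming the AWS SDK for JavaScript v2; the helper name and both ARNs are placeholders.

```javascript
// Hypothetical helper: parameters for WAFV2 PutLoggingConfiguration, which
// attaches a Firehose delivery stream to a web ACL as its log destination.
function buildLoggingConfiguration(webAclArn, deliveryStreamArn) {
  return {
    LoggingConfiguration: {
      ResourceArn: webAclArn, // the web ACL to log
      LogDestinationConfigs: [deliveryStreamArn], // a single Firehose stream ARN
    },
  };
}

// Placeholder ARNs
const params = buildLoggingConfiguration(
  'arn:aws:wafv2:ap-northeast-1:123456789012:regional/webacl/Sample/abcdefghij1234567890',
  'arn:aws:firehose:ap-northeast-1:123456789012:deliverystream/aws-waf-logs-sample',
);
console.log(JSON.stringify(params, null, 2));

// Sending it with the AWS SDK for JavaScript v2 (inside an async function):
//   const AWS = require('aws-sdk');
//   const wafv2 = new AWS.WAFV2({ region: 'ap-northeast-1' });
//   await wafv2.putLoggingConfiguration(params).promise();
```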

Conclusion

With the setup above, WAF logs are delivered to both Athena and Datadog.

When querying in Athena, filter on the partition keys.

Example:

```sql
select * from waf where year = '2021' and month = '05' and day = '15' and hour = '00'
```
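The partition values come from the Firehose prefix and are therefore in UTC, so a time window in JST has to be converted before it can be expressed with the partition keys. A sketch with two hypothetical helpers: `hourPartitions` lists the UTC hour partitions overlapping a window, and `partitionPredicate` turns them into a `WHERE` clause in the same style as the example query.

```javascript
// Lists the UTC hour partitions that overlap the window [start, end).
function hourPartitions(start, end) {
  const pad = (n) => String(n).padStart(2, '0');
  const partitions = [];
  // Round the start down to the top of its UTC hour
  const t = new Date(start.getTime());
  t.setUTCMinutes(0, 0, 0);
  for (; t < end; t.setUTCHours(t.getUTCHours() + 1)) {
    partitions.push({
      year: String(t.getUTCFullYear()),
      month: pad(t.getUTCMonth() + 1),
      day: pad(t.getUTCDate()),
      hour: pad(t.getUTCHours()),
    });
  }
  return partitions;
}

// OR-s together one partition-key clause per hour
function partitionPredicate(partitions) {
  return partitions
    .map((p) => `(year = '${p.year}' and month = '${p.month}' and day = '${p.day}' and hour = '${p.hour}')`)
    .join(' or ');
}

// 2021-05-15 08:30-10:00 JST covers UTC hour 23 on 05-14 and hour 00 on 05-15
const parts = hourPartitions(new Date('2021-05-15T08:30:00+09:00'), new Date('2021-05-15T10:00:00+09:00'));
console.log(`select * from waf where ${partitionPredicate(parts)}`);
```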