Gradients in TensorFlow

I am currently studying A3C, one of the main models in reinforcement learning.

Gradients appear in its equations, so I explored how they can be expressed in TensorFlow.

Gradients

This post focuses on displaying histograms of gradients, so I looked at how to obtain the gradients from an MNIST program.

Reference links

TensorBoard: Visualizing Learning

TensorBoard: How to plot histogram for gradients?

This article is based on the links above.

Simple MNIST model

First, build a simple TensorFlow convolutional model.

Two convolution layers are followed by a fully connected layer, which produces the outputs for the 10 digits.

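    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    def weight_variable(shape):
        # Weights start from a truncated normal with stddev 0.1.
        initial_value = tf.truncated_normal(shape, stddev=0.1)
        W = tf.get_variable("W", initializer=initial_value)
        return W

    def bias_variable(shape):
        # Biases start near zero (mean 0.0, stddev 0.001).
        initial_value = tf.truncated_normal(shape, 0.0, 0.001)
        b = tf.get_variable("b", initializer=initial_value)
        return b

    def conv2d(x, W, name="conv"):
        # Stride-1 convolution; 'SAME' padding keeps the spatial size.
        with tf.variable_scope(name):
            return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

    def max_pool_2x2(x):
        # 2x2 max pooling with stride 2 halves the spatial size.
        return tf.nn.max_pool(x, ksize=[1, 2, 2, 1],
                              strides=[1, 2, 2, 1], padding='SAME')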

    def proc1():
        mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)
        x = tf.placeholder(tf.float32, [None, 784])
        y_ = tf.placeholder(tf.float32, [None, 10])

        with tf.variable_scope("mnist") as scope:
            name = "conv1"
            with tf.variable_scope(name) as scope:
                W_conv1 = weight_variable([5, 5, 1, 32])
                b_conv1 = bias_variable([32])
                x_image = tf.reshape(x, [-1,28,28,1])
                h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1, name) + b_conv1)
                h_pool1 = max_pool_2x2(h_conv1)

            name = "conv2"
            with tf.variable_scope(name) as scope:
                W_conv2 = weight_variable([5, 5, 32, 64])
                b_conv2 = bias_variable([64])
                h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2, name) + b_conv2)
                h_pool2 = max_pool_2x2(h_conv2)

            name = "fc1"
            with tf.variable_scope(name) as scope:
                W_fc1 = weight_variable([7 * 7 * 64, 1024])
                b_fc1 = bias_variable([1024])
                h_pool2_flat = tf.reshape(h_pool2, [-1, 7*7*64])
                h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)
                keep_prob = tf.placeholder(tf.float32)
                h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

            name = "fc2"
            with tf.variable_scope(name) as scope:
                W_fc2 = weight_variable([1024, 10])
                b_fc2 = bias_variable([10])

            with tf.variable_scope("logits_pred") as scope:
                #logits = tf.matmul(x, W) + b
                #logits = tf.nn.relu(logits)
                logits = tf.matmul(h_fc1_drop, W_fc2) + b_fc2
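The `7 * 7 * 64` in the `fc1` layer follows from how the spatial size changes through the two convolution and pooling layers. As a rough check, the plain-Python sketch below traces those sizes without TensorFlow. The helper names `same_out` and `trace_shapes` are made up for this illustration; the only rule assumed is that `padding='SAME'` gives an output length of `ceil(size / stride)` along each axis.

```python
import math

def same_out(size, stride):
    """Output length along one axis for padding='SAME': ceil(size / stride)."""
    return math.ceil(size / stride)

def trace_shapes(side=28):
    """Return (layer, side, channels) after each conv and pool layer."""
    # (layer name, stride, output channels), matching the model listing.
    layers = [("conv1", 1, 32), ("pool1", 2, 32),
              ("conv2", 1, 64), ("pool2", 2, 64)]
    shapes = []
    for name, stride, channels in layers:
        side = same_out(side, stride)
        shapes.append((name, side, channels))
    return shapes

shapes = trace_shapes()
for name, side, channels in shapes:
    print(name, (side, side, channels))

# The flattened size is what the first fully connected layer receives.
_, last_side, last_channels = shapes[-1]
flat_size = last_side * last_side * last_channels
print("flattened size:", flat_size)
```

The stride-1 convolutions keep the 28x28 size and each 2x2 pooling halves it, so the value fed to `fc1` matches the reshape in the model.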

Trainable_Variables

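To get the weights of each layer, collect the trainable variables under the "mnist" scope:

    var = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES,"mnist")

To check what the retrieved weights currently look like:

    for v in var:
        if "W" in v.name:
            print(v)

This gives a quick check of each variable's name, shape, and dtype. It is only a rough check.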
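The `"W" in v.name` test is a substring match, so it would also pick up any variable whose scope path happens to contain a "W". A slightly stricter filter can be sketched in plain Python as follows. The names in the list are the ones the scopes in the model listing are expected to produce (`scope/scope/name:0`); `is_weight` and the `mnist/Wide/b:0` name are hypothetical and only serve the illustration.

```python
# Names expected from the nested variable scopes in the model listing.
names = [
    "mnist/conv1/W:0", "mnist/conv1/b:0",
    "mnist/conv2/W:0", "mnist/conv2/b:0",
    "mnist/fc1/W:0", "mnist/fc1/b:0",
    "mnist/fc2/W:0", "mnist/fc2/b:0",
]

def is_weight(name):
    """True when the last path component, without the ':0' suffix, is 'W'."""
    leaf = name.split("/")[-1]
    return leaf.split(":")[0] == "W"

weights = [n for n in names if is_weight(n)]
for n in weights:
    print(n)

# A hypothetical bias under a scope called "Wide": the substring test
# matches it, the stricter test does not.
tricky = "mnist/Wide/b:0"
print("W" in tricky, is_weight(tricky))
```

For the model in this post both tests select the same four variables, so the simple substring check is enough here.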

Training

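Create a train scope and, inside it, use TensorFlow's histogram summary to output the gradients.

    with tf.variable_scope("train") as scope:
        # `cost` is not defined in the original listing; per-example softmax
        # cross-entropy on the logits is assumed here.
        cost = tf.nn.softmax_cross_entropy_with_logits(labels=y_, logits=logits)
        grads = tf.gradients(cost, var)
        gradients = list(zip(grads, var))

        # `Ws` is also undefined in the original; the weight variables
        # (names containing "W") are assumed.
        Ws = [v for v in var if "W" in v.name]
        regularizer = 0.0
        for w in Ws:
            regularizer += tf.nn.l2_loss(w)
        #
        #print(gradients)
        beta = 0.01
        # Note: `loss` is built here, but the gradients below are taken
        # with respect to `cost`, as in the original.
        loss = tf.reduce_mean(cost + beta * regularizer)
        opt = tf.train.GradientDescentOptimizer(1e-4)
        #train_op = tf.train.AdamOptimizer(1e-4).minimize(loss)
        g_and_v = opt.compute_gradients(cost, var)
        #p = 1.
        #eta = opt._learning_rate
        #my_grads_and_vars = [(g-(1/eta)*p, v) for g, v in grads_and_vars]
        train_op = opt.apply_gradients(grads_and_vars=g_and_v)

        # One histogram per (gradient, variable) pair.
        for grad, v in g_and_v:
            tf.summary.histogram("{}-grad".format(v.name), grad)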
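`compute_gradients` returns a list of (gradient, variable) pairs and `apply_gradients` consumes that list; with plain gradient descent each variable moves by the learning rate times its gradient, in the negative direction. The sketch below mimics that split in plain Python on a toy loss, without TensorFlow. The functions are stand-ins written for this illustration, not the library's implementation, and the toy loss is the same quantity `tf.nn.l2_loss` computes, `sum(w ** 2) / 2`, whose gradient is `w` itself.

```python
def l2_loss(w):
    """Same definition as tf.nn.l2_loss: sum(w ** 2) / 2."""
    return sum(x * x for x in w) / 2.0

def compute_gradients(w):
    """Gradient of l2_loss with respect to w, which is w itself."""
    return list(w)

def apply_gradients(w, grads, learning_rate):
    """One gradient descent step: w <- w - learning_rate * grad."""
    return [x - learning_rate * g for x, g in zip(w, grads)]

def numeric_gradient(f, w, eps=1e-6):
    """Central finite differences, used only to sanity-check the gradient."""
    out = []
    for i in range(len(w)):
        hi = w[:i] + [w[i] + eps] + w[i + 1:]
        lo = w[:i] + [w[i] - eps] + w[i + 1:]
        out.append((f(hi) - f(lo)) / (2 * eps))
    return out

w = [1.0, -2.0, 0.5]                  # toy parameter values (assumed)
grads = compute_gradients(w)          # the values a histogram would summarize
w_next = apply_gradients(w, grads, learning_rate=0.1)

print("gradients:", grads)
print("loss before:", l2_loss(w), "loss after:", l2_loss(w_next))
```

Keeping the two steps separate is what makes it possible to inspect the gradients, or write them to a histogram, before they are applied.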

tf.Session()

Finally, inside tf.Session, the merged summary op is run and its output is written out with summary_writer.

    # Imports needed by this snippet (normally placed at the top of the file).
    import logging
    import os
    from time import gmtime, strftime

    merged_summary_op = tf.summary.merge_all()
    save_path = None

    with tf.Session() as sess:
        if save_path is None:
            save_path = 'experiments/' + \
                strftime("%d-%m-%Y-%H:%M:%S/model", gmtime())
            print("No save path specified, so saving to", save_path)
        if not os.path.exists(save_path):
            logging.debug("%s doesn't exist, so creating" , save_path)
            os.makedirs(save_path)

        init_op = tf.group(tf.global_variables_initializer(),
                           tf.local_variables_initializer())
        sess.run(init_op)
        saver = tf.train.Saver()
        summary_writer = tf.summary.FileWriter(save_path, sess.graph)

        for it in range(10000):
            data,labels = mnist.train.next_batch(32)
            #print(data.shape, labels.shape)
            feeds = {x:data, y_:labels, keep_prob: 0.5}
            # Run one training step, then evaluate and record the summaries.
            train_op.run(feed_dict=feeds)
            summary_str = sess.run(merged_summary_op, feed_dict=feeds)
            summary_writer.add_summary(summary_str, it)
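Each histogram summary written in the loop above records how the values of one gradient tensor are distributed at that step. As a rough picture of what that means, the plain-Python sketch below counts values into buckets. Equal-width buckets are used for simplicity, which is an assumption of this sketch and not TensorBoard's actual bucketing scheme; the function name and the sample gradient values are also made up.

```python
def histogram(values, num_buckets=5):
    """Count values into equal-width buckets between min and max."""
    lo, hi = min(values), max(values)
    # Fall back to a width of 1.0 when all values are identical.
    width = (hi - lo) / num_buckets or 1.0
    counts = [0] * num_buckets
    for v in values:
        # The maximum value belongs to the last bucket.
        idx = min(int((v - lo) / width), num_buckets - 1)
        counts[idx] += 1
    return lo, hi, counts

# Hypothetical gradient values for one variable at one training step.
grad_values = [-0.5, -0.25, 0.0, 0.125, 0.25, 0.75]
lo, hi, counts = histogram(grad_values)

print("range:", lo, hi)
print("counts per bucket:", counts)
```

Viewed over many steps, a stack of such counts shows whether the gradients are shrinking toward zero or spreading out during training.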

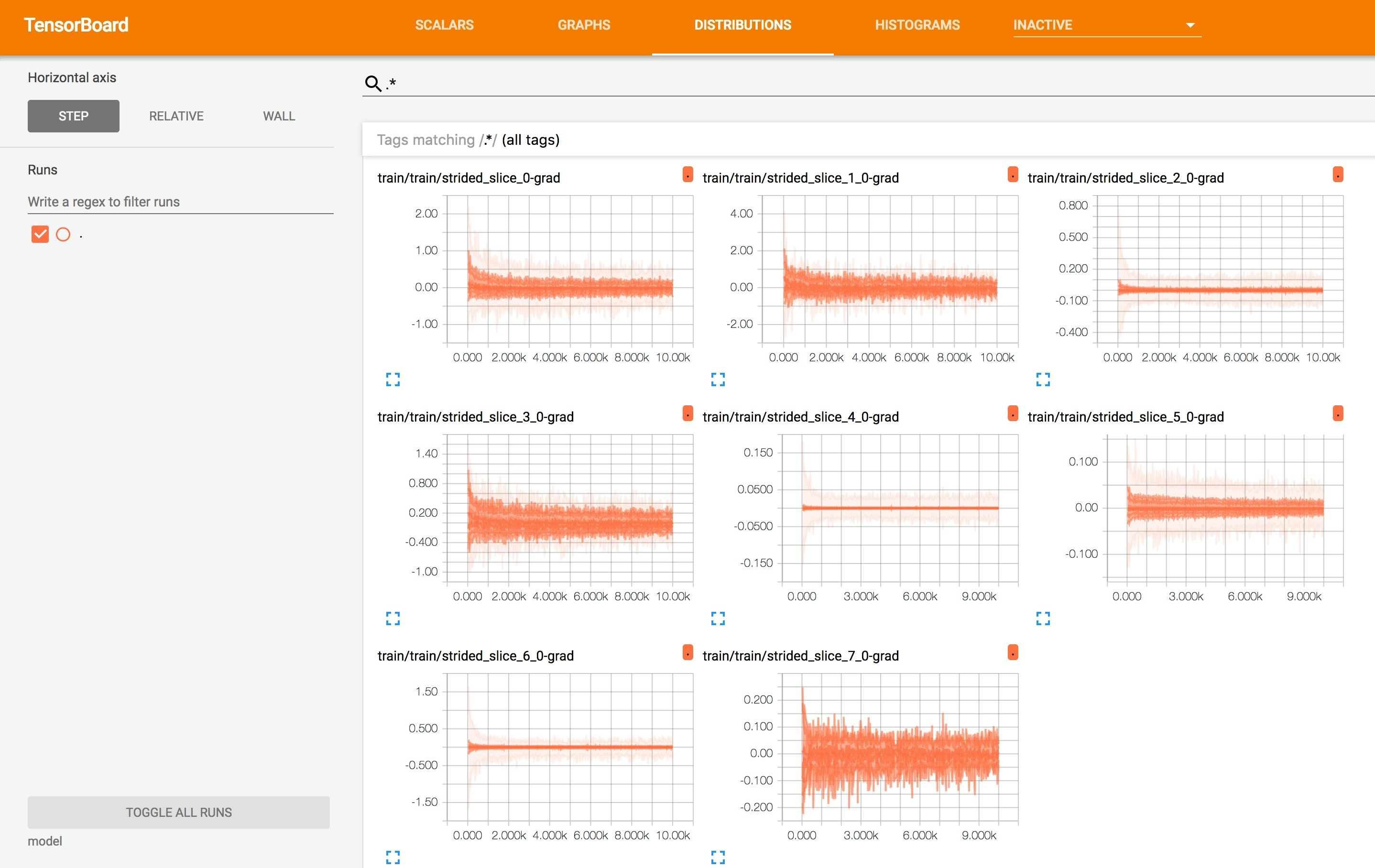

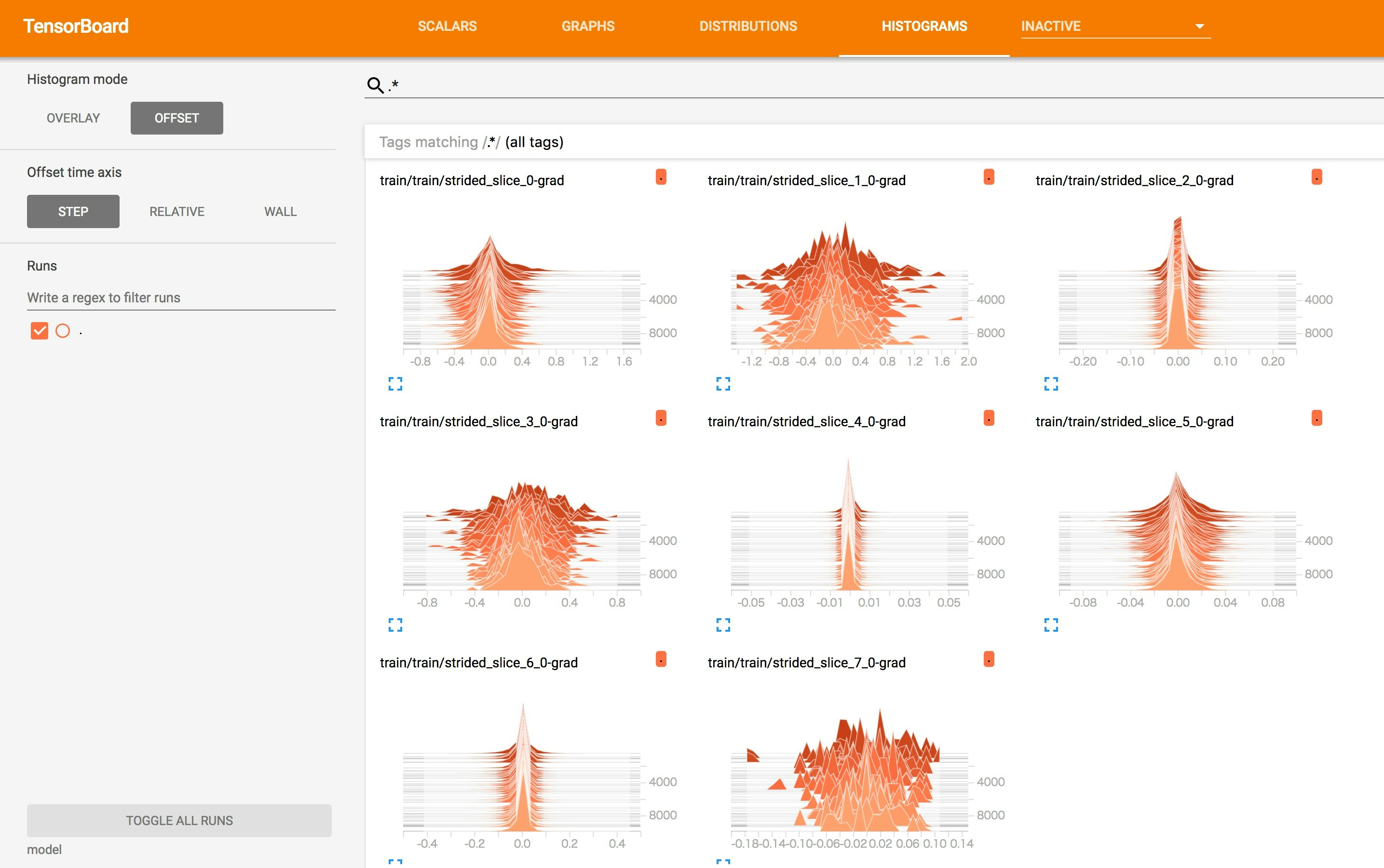

Results in TensorBoard

Screenshots of the results as displayed in TensorBoard are included here.

Execution environment:

- Ubuntu 16 LTS

- TensorFlow 1.4

- GTX1080Ti x 1

Note that the 10,000 iterations took more than 30 minutes.