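I may be late to the trend, but I have been reading up on machine learning. To get a rough sense of what it can do, I classified the handwritten-digit images of the MNIST dataset with several different methods.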

from sklearn import datasets

from sklearn.linear_model import LogisticRegression, LinearRegression

from sklearn.metrics import accuracy_score, f1_score

from sklearn.model_selection import train_test_split

from sklearn.neural_network import MLPClassifier

from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import StandardScaler

from sklearn.svm import LinearSVC, SVC

from tensorflow import keras

import matplotlib.pyplot as plt

import numpy as np

import tensorflow as tf

Downloading and preprocessing the data

First, download the digit data with scikit-learn. This takes a little while. X receives the image data and y receives the correct labels.

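# Load data from https://www.openml.org/d/554

# as_frame=False (scikit-learn 0.22+) returns NumPy arrays; newer releases default to a pandas DataFrame, which breaks X[0] below.

%time X, y = datasets.fetch_openml('mnist_784', version=1, return_X_y=True, as_frame=False)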

CPU times: user 23.4 s, sys: 1.88 s, total: 25.3 s

Wall time: 25.5 s

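The image data is an array of 70,000 * 784 values. In NumPy, the shape of an array is written from the outermost dimension to the innermost, as in [70000, 784].

- Each pixel is a value from 0 to 255.

- Each digit consists of 28 * 28 = 784 pixels.

- The dataset contains 70,000 digit images in total.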
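As a rough illustration of the layout described above, the following sketch shows how a flat run of 784 pixels maps onto a 28 x 28 grid in row-major order. It uses plain Python lists rather than NumPy, and `to_grid` and the sample data are hypothetical names made up for this example.

```python
# Sketch: how a flat list of 784 pixels maps onto a 28 x 28 grid (row-major order).
WIDTH = 28
HEIGHT = 28


def to_grid(flat, height=HEIGHT, width=WIDTH):
    """Split a flat pixel list into `height` rows of `width` pixels each."""
    if len(flat) != height * width:
        raise ValueError("pixel count does not match height * width")
    return [flat[r * width:(r + 1) * width] for r in range(height)]


# Hypothetical image: every pixel stores its own flat index.
flat = list(range(HEIGHT * WIDTH))
grid = to_grid(flat)

print(len(grid), len(grid[0]))  # 28 28
print(grid[1][0])               # 28: the second row starts at flat index 28
```

In other words, the pixel at row `r` and column `c` sits at flat index `r * 28 + c`, which is the same mapping that reshaping a 784-element row into 28 x 28 relies on.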

print(f'正解データ: {y}')

print(f'画像の次元: {X.shape}')

print(f'最初のデータ: \n{X[0]}')

# 画像データは 784 個のピクセルが一列に並んでいるので、

# 表示するには X[0].reshape(28,28) のように reshape で 28 x 28 の二次元配列に変換します。

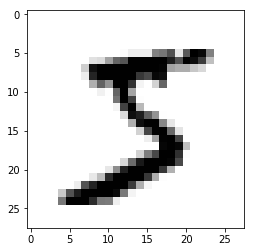

print(f'最初のデータ画像')

plt.imshow(X[0].reshape(28,28), cmap=plt.cm.gray_r)

正解データ: ['5' '0' '4' ... '4' '5' '6']

画像の次元: (70000, 784)

最初のデータ:

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 3. 18.

18. 18. 126. 136. 175. 26. 166. 255. 247. 127. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 30. 36. 94. 154. 170. 253.

253. 253. 253. 253. 225. 172. 253. 242. 195. 64. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 49. 238. 253. 253. 253. 253. 253.

253. 253. 253. 251. 93. 82. 82. 56. 39. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 18. 219. 253. 253. 253. 253. 253.

198. 182. 247. 241. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 80. 156. 107. 253. 253. 205.

11. 0. 43. 154. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 14. 1. 154. 253. 90.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 139. 253. 190.

2. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 11. 190. 253.

70. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 35. 241.

225. 160. 108. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 81.

240. 253. 253. 119. 25. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

45. 186. 253. 253. 150. 27. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 16. 93. 252. 253. 187. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 249. 253. 249. 64. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

46. 130. 183. 253. 253. 207. 2. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 39. 148.

229. 253. 253. 253. 250. 182. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 24. 114. 221. 253.

253. 253. 253. 201. 78. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 23. 66. 213. 253. 253. 253.

253. 198. 81. 2. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 18. 171. 219. 253. 253. 253. 253. 195.

80. 9. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 55. 172. 226. 253. 253. 253. 253. 244. 133. 11.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 136. 253. 253. 253. 212. 135. 132. 16. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

First image

Next, split the data into training and test sets. At the same time, scale the pixel values to the range 0 to 1. The labels are strings, so convert them directly to integers.

X_train, X_test, y_train, y_test = train_test_split(

    X / 255,  # scale pixel values to the range 0 to 1

    y.astype('int64'),  # convert the string labels to integers

    stratify=y,  # keep the class proportions equal in both sets

    random_state=0)

print(f'Length of X_train: {len(X_train)}')

print(f'Length of X_test: {len(X_test)}')

print(f'Contents of X_train: {X_train}')

print(f'Contents of X_test: {X_test}')

print(f'Contents of y_train: {y_train}')

print(f'Contents of y_test: {y_test}')

Length of X_train: 52500

Length of X_test: 17500

Contents of X_train: [[0. 0. 0. ... 0. 0. 0.]

[0. 0. 0. ... 0. 0. 0.]

[0. 0. 0. ... 0. 0. 0.]

...

[0. 0. 0. ... 0. 0. 0.]

[0. 0. 0. ... 0. 0. 0.]

[0. 0. 0. ... 0. 0. 0.]]

Contents of X_test: [[0. 0. 0. ... 0. 0. 0.]

[0. 0. 0. ... 0. 0. 0.]

[0. 0. 0. ... 0. 0. 0.]

...

[0. 0. 0. ... 0. 0. 0.]

[0. 0. 0. ... 0. 0. 0.]

[0. 0. 0. ... 0. 0. 0.]]

Contents of y_train: [2 1 2 ... 6 5 5]

Contents of y_test: [7 2 7 ... 3 7 6]
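The split above puts 75% of the samples (52,500) into the training set and 25% (17,500) into the test set, and passes the labels as the stratification key. The following is a minimal pure-Python sketch of what stratifying means: each class is split separately, so both sets keep the original class proportions. `stratified_split` and the toy labels are hypothetical and are not how scikit-learn implements it internally.

```python
import random
from collections import Counter, defaultdict


def stratified_split(labels, test_fraction=0.25, seed=0):
    """Return (train_indices, test_indices), sampling each class separately."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)
    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return train, test


# Hypothetical imbalanced labels: 80 zeros and 20 ones.
labels = [0] * 80 + [1] * 20
train, test = stratified_split(labels)

train_counts = Counter(labels[i] for i in train)
test_counts = Counter(labels[i] for i in test)
print(train_counts)  # 60 zeros and 15 ones
print(test_counts)   # 20 zeros and 5 ones
```

Both sets end up with the same 4:1 ratio as the full label list, which is the property that matters when some digits are rarer than others.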

Logistic regression with scikit-learn

Let's start with basic logistic regression. Even though it simply predicts from the 784 pixel values, it holds up surprisingly well at 92.5%.
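To make "simply predicts from the 784 pixel values" a little more concrete, here is a small sketch of the prediction step, assuming the multinomial (softmax) formulation: one weighted sum of the pixels per class, turned into probabilities. The weights, biases, and the 3-pixel, 2-class setup are made-up toy values, not anything learned from MNIST.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(pixels, weights, biases):
    """One weighted sum per class, followed by softmax."""
    scores = [sum(w * x for w, x in zip(row, pixels)) + b
              for row, b in zip(weights, biases)]
    return softmax(scores)


# Hypothetical toy problem: 3 "pixels" and 2 classes instead of 784 and 10.
pixels = [0.0, 0.5, 1.0]
weights = [[1.0, 0.0, -1.0],
           [-1.0, 0.0, 1.0]]
biases = [0.0, 0.0]

probs = predict_proba(pixels, weights, biases)
predicted = probs.index(max(probs))
print(probs, predicted)  # class 1 gets the higher probability
```

Training is then a matter of finding the weights and biases that make these probabilities match the labels as closely as possible.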

def sklearn_logistic():

    clf = LogisticRegression(solver='lbfgs', multi_class='auto')

    clf.fit(X_train, y_train)  # train

    print('accuracy_score: %.3f' % clf.score(X_test, y_test))  # evaluate

%time sklearn_logistic()

accuracy_score: 0.925

CPU times: user 54.8 s, sys: 2.46 s, total: 57.3 s

Wall time: 14.8 s

/Users/tyamamiya/.local/share/virtualenvs/predictor-VCYBZ8Xn/lib/python3.6/site-packages/sklearn/linear_model/logistic.py:758: ConvergenceWarning: lbfgs failed to converge. Increase the number of iterations.

"of iterations.", ConvergenceWarning)

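Support Vector Machines (SVC) with scikit-learn

Next, try SVC in the same way. It takes an extremely long time (over 11 minutes on my machine). Even though it also simply predicts from the 784 pixel values, it reaches 94.6%.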

def sklearn_svc():

    clf = SVC(kernel='rbf', gamma='auto', random_state=0, C=2)

    clf.fit(X_train, y_train)

    print('accuracy_score: %.3f' % clf.score(X_test, y_test))

%time sklearn_svc()

accuracy_score: 0.946

CPU times: user 11min 30s, sys: 1.91 s, total: 11min 32s

Wall time: 11min 33s
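The SVC call above uses the RBF kernel with `gamma='auto'`, which scikit-learn interprets as 1 / n_features. As a rough sketch of what that kernel measures, the code below computes the similarity between two flattened images. `rbf_kernel` here is a hand-written illustration (not the scikit-learn function of the same name), and the all-black and all-white images are hypothetical.

```python
import math


def rbf_kernel(a, b, gamma):
    """RBF similarity: 1.0 for identical inputs, approaching 0 as they move apart."""
    squared_distance = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-gamma * squared_distance)


n_features = 784
gamma = 1.0 / n_features  # the value gamma='auto' stands for

# Hypothetical flattened images with pixel values already scaled to 0 - 1.
blank = [0.0] * n_features
also_blank = [0.0] * n_features
bright = [1.0] * n_features

k_same = rbf_kernel(blank, also_blank, gamma)
k_far = rbf_kernel(blank, bright, gamma)
print(k_same)  # 1.0
print(k_far)   # about 0.368, i.e. exp(-1)
```

Part of the long training time comes from the fact that kernel methods compare many pairs of training samples in this way.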

Neural network with scikit-learn

scikit-learn provides MLPClassifier, which makes it easy to try out a neural network. It reaches 97.6%. Impressive!

def sklearn_mlp():

    clf = MLPClassifier(hidden_layer_sizes=(128,), solver='adam', max_iter=20, verbose=10, random_state=0)

    clf.fit(X_train, y_train)

    print('accuracy_score: %.3f' % clf.score(X_test, y_test))

%time sklearn_mlp()

Iteration 1, loss = 0.42688226

Iteration 2, loss = 0.20116195

Iteration 3, loss = 0.15006803

Iteration 4, loss = 0.11915271

Iteration 5, loss = 0.09875778

Iteration 6, loss = 0.08330753

Iteration 7, loss = 0.07107738

Iteration 8, loss = 0.06181587

Iteration 9, loss = 0.05333600

Iteration 10, loss = 0.04795776

Iteration 11, loss = 0.04195001

Iteration 12, loss = 0.03634990

Iteration 13, loss = 0.03223744

Iteration 14, loss = 0.02872861

Iteration 15, loss = 0.02420542

Iteration 16, loss = 0.02191879

Iteration 17, loss = 0.01904267

Iteration 18, loss = 0.01703576

Iteration 19, loss = 0.01456136

Iteration 20, loss = 0.01302070

accuracy_score: 0.976

CPU times: user 1min 4s, sys: 10.1 s, total: 1min 14s

Wall time: 21.9 s

/Users/tyamamiya/.local/share/virtualenvs/predictor-VCYBZ8Xn/lib/python3.6/site-packages/sklearn/neural_network/multilayer_perceptron.py:562: ConvergenceWarning: Stochastic Optimizer: Maximum iterations (20) reached and the optimization hasn't converged yet.

% self.max_iter, ConvergenceWarning)
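The network above has a single hidden layer of 128 units. As a minimal sketch of what one forward pass through such a network looks like, the following uses a tiny made-up network (2 inputs, 2 hidden units, 2 outputs) with ReLU in the hidden layer, which is MLPClassifier's default activation. All weights are hypothetical, and the final softmax step is left out to keep the example short.

```python
def relu(values):
    """Replace negative values with zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


# Hypothetical tiny network: 2 inputs -> 2 hidden units -> 2 output scores.
hidden_w = [[1.0, -1.0], [-1.0, 1.0]]
hidden_b = [0.0, 0.0]
output_w = [[1.0, 0.0], [0.0, 1.0]]
output_b = [0.0, 0.0]

x = [0.2, 0.9]
hidden = relu(dense(x, hidden_w, hidden_b))
scores = dense(hidden, output_w, output_b)
print(hidden)  # first unit is clipped to 0.0, second is about 0.7
print(scores)
```

The hidden layer is what lets the model combine pixels non-linearly, which plain logistic regression cannot do.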

Neural network with TensorFlow

Build a similar network with TensorFlow. At 97.7%, the result is about the same.

def tensorflow_mlp():

    model = keras.Sequential([

        keras.layers.Dense(128, activation=tf.nn.relu),

        keras.layers.Dense(10, activation=tf.nn.softmax)

    ])

    # 'adam' works in both TensorFlow 1.x and 2.x; tf.train.AdamOptimizer() exists only in 1.x

    model.compile(optimizer='adam',

                  loss='sparse_categorical_crossentropy',

                  metrics=['accuracy'])

    model.fit(X_train, y_train, epochs=20)

    y_test_predict_list = model.predict(X_test)

    y_test_predict = np.argmax(y_test_predict_list, axis=1)

    print('accuracy_score: %.3f' % accuracy_score(y_test, y_test_predict))

%time tensorflow_mlp()

Epoch 1/20

52500/52500 [==============================] - 3s 63us/step - loss: 0.2745 - acc: 0.9220

Epoch 2/20

52500/52500 [==============================] - 3s 52us/step - loss: 0.1233 - acc: 0.9634

Epoch 3/20

52500/52500 [==============================] - 3s 52us/step - loss: 0.0834 - acc: 0.9747

Epoch 4/20

52500/52500 [==============================] - 3s 53us/step - loss: 0.0621 - acc: 0.9805

Epoch 5/20

52500/52500 [==============================] - 3s 53us/step - loss: 0.0473 - acc: 0.9851

Epoch 6/20

52500/52500 [==============================] - 3s 54us/step - loss: 0.0368 - acc: 0.9887

Epoch 7/20

52500/52500 [==============================] - 3s 54us/step - loss: 0.0290 - acc: 0.9911

Epoch 8/20

52500/52500 [==============================] - 3s 53us/step - loss: 0.0239 - acc: 0.9929

Epoch 9/20

52500/52500 [==============================] - 3s 54us/step - loss: 0.0194 - acc: 0.9938

Epoch 10/20

52500/52500 [==============================] - 3s 55us/step - loss: 0.0141 - acc: 0.9960

Epoch 11/20

52500/52500 [==============================] - 3s 54us/step - loss: 0.0140 - acc: 0.9956

Epoch 12/20

52500/52500 [==============================] - 3s 52us/step - loss: 0.0114 - acc: 0.9962

Epoch 13/20

52500/52500 [==============================] - 3s 53us/step - loss: 0.0096 - acc: 0.9971

Epoch 14/20

52500/52500 [==============================] - 3s 54us/step - loss: 0.0082 - acc: 0.9974

Epoch 15/20

52500/52500 [==============================] - 3s 54us/step - loss: 0.0079 - acc: 0.9974

Epoch 16/20

52500/52500 [==============================] - 3s 55us/step - loss: 0.0077 - acc: 0.9977

Epoch 17/20

52500/52500 [==============================] - 3s 52us/step - loss: 0.0054 - acc: 0.9985

Epoch 18/20

52500/52500 [==============================] - 3s 52us/step - loss: 0.0066 - acc: 0.9981

Epoch 19/20

52500/52500 [==============================] - 3s 54us/step - loss: 0.0018 - acc: 0.9998

Epoch 20/20

52500/52500 [==============================] - 3s 51us/step - loss: 0.0068 - acc: 0.9975

accuracy_score: 0.977

CPU times: user 1min 26s, sys: 15.8 s, total: 1min 42s

Wall time: 57.1 s
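In the function above, the model returns ten probabilities per image, and the predicted digit is the index of the largest one. The following pure-Python sketch shows that argmax step and the accuracy calculation on made-up probabilities; `argmax` and `accuracy` are hypothetical stand-ins written for this example.

```python
def argmax(values):
    """Index of the largest value (the first one on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Hypothetical softmax outputs for 4 samples and 3 classes.
probabilities = [
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.3, 0.4],
    [0.6, 0.3, 0.1],
]
y_true = [0, 1, 2, 1]

y_pred = [argmax(row) for row in probabilities]
print(y_pred)                    # [0, 1, 2, 0]
print(accuracy(y_true, y_pred))  # 0.75
```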

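CNN with TensorFlow

The simple neural network already reached 97.7%, but let's also try a CNN, one of the deep learning techniques that is popular right now. It reaches 99.2%. We did it!

So far, training has treated all the pixels as one undifferentiated lump. The key point of a convolution layer is that it builds features from neighboring pixels.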
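As a rough sketch of what "building features from neighboring pixels" means, the code below slides a small kernel over an image and sums each neighborhood, using zero padding so the output keeps the input size (the idea behind `padding='same'`). Like Keras's Conv2D, it does not flip the kernel. The 4 x 4 image and the all-ones 3 x 3 kernel are made up for illustration; a real layer learns its kernel values and has many filters.

```python
def conv2d_same(image, kernel):
    """Slide `kernel` over `image` with zero padding so the output keeps the input size."""
    height, width = len(image), len(image[0])
    k = len(kernel)  # assumes a square kernel with an odd side length
    pad = k // 2
    output = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            total = 0.0
            for i in range(k):
                for j in range(k):
                    rr, cc = r + i - pad, c + j - pad
                    # Positions outside the image count as zero.
                    if 0 <= rr < height and 0 <= cc < width:
                        total += image[rr][cc] * kernel[i][j]
            output[r][c] = total
    return output


# Hypothetical 4 x 4 image with a bright 2 x 2 square in the middle.
image = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
kernel = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]  # sums each 3 x 3 neighborhood

result = conv2d_same(image, kernel)
for row in result:
    print(row)
```

Each output value depends only on a small patch of the input, so the same kernel can detect the same local pattern anywhere in the image.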

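Convolution layers are fairly expensive to compute, so training is a bit of a chore without a GPU. You can work around this by using something like https://colab.research.google.com. Conversely, for the models without convolution layers, that was not much faster than computing on my own Mac. The key points are as follows.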

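- The convolution layers use keras.layers.Conv2D, whose input shape is fixed as (height, width, channels). Even for monochrome images you need to add a dimension for the single channel, as in (28, 28, 1).

- When calling model.fit, change the shape to match what Conv2D needs, as in X_train.reshape(-1, 28, 28, 1). Specifying -1 makes NumPy infer that dimension from the total size of the array.

- The score was surprisingly hard to raise, but adding keras.layers.Dropout, a mechanism that prevents overfitting, just barely got it past 99%.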
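The `-1` in `reshape(-1, 28, 28, 1)` is worked out by dividing the total number of elements by the product of the known dimensions. The sketch below reproduces that arithmetic for the training and test set sizes used in this post; `infer_shape` is a hypothetical helper written for illustration, not a NumPy function.

```python
def infer_shape(total_size, shape):
    """Replace the single -1 in `shape` with the value that makes the sizes match."""
    if shape.count(-1) != 1:
        raise ValueError("expected exactly one -1 in the shape")
    known = 1
    for dim in shape:
        if dim != -1:
            known *= dim
    if total_size % known != 0:
        raise ValueError("total size is not divisible by the known dimensions")
    return tuple(total_size // known if dim == -1 else dim for dim in shape)


# 52,500 training images and 17,500 test images of 784 pixels each.
train_shape = infer_shape(52500 * 784, (-1, 28, 28, 1))
test_shape = infer_shape(17500 * 784, (-1, 28, 28, 1))
print(train_shape)  # (52500, 28, 28, 1)
print(test_shape)   # (17500, 28, 28, 1)
```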

def tensorflow_cnn():

    model = keras.Sequential([

        keras.layers.Conv2D(32, activation=tf.nn.relu, kernel_size=(5, 5), padding='same', input_shape=(28, 28, 1)),

        keras.layers.MaxPooling2D(pool_size=(2, 2)),

        keras.layers.Conv2D(32, activation=tf.nn.relu, kernel_size=(5, 5), padding='same'),

        keras.layers.MaxPooling2D(pool_size=(2, 2)),

        keras.layers.Flatten(),

        keras.layers.Dense(128, activation=tf.nn.relu),

        keras.layers.Dropout(0.2),

        keras.layers.Dense(10, activation=tf.nn.softmax),

    ])

    # 'adam' works in both TensorFlow 1.x and 2.x; tf.train.AdamOptimizer() exists only in 1.x

    model.compile(optimizer='adam',

                  loss='sparse_categorical_crossentropy',

                  metrics=['accuracy'])

    model.summary()

    model.fit(X_train.reshape(-1, 28, 28, 1), y_train, epochs=10)

    y_test_predict_list = model.predict(X_test.reshape(-1, 28, 28, 1))

    y_test_predict = np.argmax(y_test_predict_list, axis=1)

    print('accuracy_score: %.3f' % accuracy_score(y_test, y_test_predict))

%time tensorflow_cnn()

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_33 (Conv2D) (None, 28, 28, 32) 832

_________________________________________________________________

max_pooling2d_22 (MaxPooling (None, 14, 14, 32) 0

_________________________________________________________________

conv2d_34 (Conv2D) (None, 14, 14, 32) 25632

_________________________________________________________________

max_pooling2d_23 (MaxPooling (None, 7, 7, 32) 0

_________________________________________________________________

flatten_36 (Flatten) (None, 1568) 0

_________________________________________________________________

dense_46 (Dense) (None, 128) 200832

_________________________________________________________________

dropout_1 (Dropout) (None, 128) 0

_________________________________________________________________

dense_47 (Dense) (None, 10) 1290

=================================================================

Total params: 228,586

Trainable params: 228,586

Non-trainable params: 0

_________________________________________________________________

Epoch 1/10

52500/52500 [==============================] - 58s 1ms/step - loss: 0.1440 - acc: 0.9545

Epoch 2/10

52500/52500 [==============================] - 54s 1ms/step - loss: 0.0495 - acc: 0.9847

Epoch 3/10

52500/52500 [==============================] - 52s 994us/step - loss: 0.0345 - acc: 0.9888

Epoch 4/10

52500/52500 [==============================] - 52s 984us/step - loss: 0.0273 - acc: 0.9911

Epoch 5/10

52500/52500 [==============================] - 52s 995us/step - loss: 0.0208 - acc: 0.9934

Epoch 6/10

52500/52500 [==============================] - 55s 1ms/step - loss: 0.0182 - acc: 0.9940

Epoch 7/10

52500/52500 [==============================] - 53s 1ms/step - loss: 0.0145 - acc: 0.9954

Epoch 8/10

52500/52500 [==============================] - 53s 1ms/step - loss: 0.0136 - acc: 0.9955

Epoch 9/10

52500/52500 [==============================] - 53s 1ms/step - loss: 0.0116 - acc: 0.9963

Epoch 10/10

52500/52500 [==============================] - 53s 1ms/step - loss: 0.0109 - acc: 0.9966

accuracy_score: 0.992

CPU times: user 30min 52s, sys: 3min 22s, total: 34min 15s

Wall time: 8min 59s
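The numbers in the Param # column of the model summary above can be reproduced by hand: each filter or unit has one weight per input value plus one bias. The sketch below redoes that arithmetic; `conv2d_params` and `dense_params` are hypothetical helper names, and the layer sizes are taken from the summary.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Weights per filter (kernel area * input channels) plus one bias, times the filter count."""
    return (kernel_h * kernel_w * in_channels + 1) * filters


def dense_params(inputs, units):
    """One weight per input plus one bias, for every unit."""
    return (inputs + 1) * units


conv1 = conv2d_params(5, 5, 1, 32)    # first Conv2D on the 1-channel input
conv2 = conv2d_params(5, 5, 32, 32)   # second Conv2D on 32 feature maps
flattened = 7 * 7 * 32                # size after two 2 x 2 poolings: 28 -> 14 -> 7
dense1 = dense_params(flattened, 128)
dense2 = dense_params(128, 10)
total = conv1 + conv2 + dense1 + dense2

print(conv1, conv2, flattened, dense1, dense2)  # 832 25632 1568 200832 1290
print(total)                                    # 228586
```

Most of the parameters sit in the first dense layer rather than in the convolution layers, even though the convolutions account for most of the computation time.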

Summary

- Logistic regression with scikit-learn: 92.5%

- Support Vector Machines (SVC) with scikit-learn: 94.6%

- Neural network with scikit-learn: 97.6%

- Neural network with TensorFlow: 97.7%

- CNN with TensorFlow: 99.2%