Table of contents

- A bit of context

- About this presentation

- Overview of Kubernetes on AWS

- Detailed guide for kube-aws

A bit of context

- Kubernetes

- AWS

- Why Kubernetes on AWS

- Why they matter to you

Kubernetes in a nutshell

An open-source system for automating deployment, scaling, and management of containerized applications.

http://kubernetes.io/

- Hosted at https://github.com/kubernetes/kubernetes

Kubernetes does

- Automatically keeps your applications running according to your requirements

  - Types of application: long-running/one-shot, stateful/stateless, standalone/distributed

  - Required resources such as CPU, memory, storage, machines, network capabilities, permissions, etc.

Kubernetes does

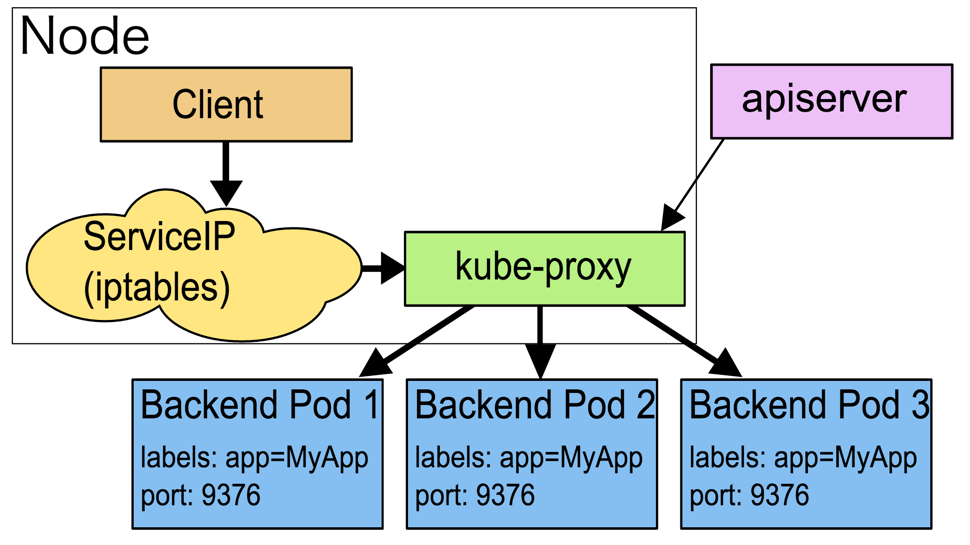

- Provides APIs to CRUD desired states of your applications

- Converges your applications to their desired states
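To make "converging to a desired state" concrete, here is a minimal sketch of a single reconcile step in Python. It is not Kubernetes code: the function name `reconcile`, the pod names, and the action tuples are all made up for illustration, and a real controller watches the API server instead of receiving plain lists.

```python
def reconcile(desired_replicas, running_pods):
    """Return the actions that move the observed state toward the desired state.

    Hypothetical helper for illustration only; not part of any Kubernetes API.
    """
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods are running: start the missing ones.
        return [("start", f"pod-new-{i}") for i in range(diff)]
    if diff < 0:
        # Too many pods are running: stop the surplus (the last -diff pods).
        return [("stop", name) for name in running_pods[diff:]]
    # The observed state already matches the desired state.
    return []


if __name__ == "__main__":
    print(reconcile(3, ["pod-a"]))
    print(reconcile(1, ["pod-a", "pod-b", "pod-c"]))
```

Running such a step repeatedly is what lets the system recover on its own: whatever changed since the last run, the next run only looks at the gap between desired and observed state.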

Kubernetes Alternatives

- Docker Swarm

- HashiCorp Nomad

- Kontena

- AWS ECS (EC2 Container Service)

- Mesos

- etc.

3 catchphrases of Kubernetes

- Planet Scale

- Never Outgrow

- Runs Anywhere

"Planet Scale" with Kubernetes

Designed on the same principles that allows Google to run billions of containers a week

http://kubernetes.io/

You "Never Outgrow" Kubernetes

Whether testing locally or running a global enterprise, Kubernetes flexibility grows with you to deliver your applications consistently and easily no matter how complex your need is

http://kubernetes.io/

How can we never outgrow it?

- Model your application the Kubernetes way:

  - Containers, Pods, Jobs, ReplicaSets, Deployments, StatefulSets, etc.

Kubernetes Models 1/4

- Containers = cgroups + namespaces w/ copy-on-write

- Pods = VMs in the container era w/ shared CPU, memory, volumes, permissions

Kubernetes Models 2/4

- Services = Named sets of pods, load balanced via Kubernetes internal DNS

Kubernetes Models 3/4

- Jobs = One-shot or long-running jobs backed by pod(s), with optional parallelism

- ReplicaSets = A set of identical pods whose count is kept at the desired number

Kubernetes Models 4/4

- Deployments = Desired state of your ReplicaSet

- StatefulSets = A set of named, persistent pods to host stateful distributed systems such as databases and filesystems

Kubernetes "Runs Anywhere"

Kubernetes is open source giving you the freedom to take advantage of on-premise, hybrid, or public cloud infrastructure, letting you effortlessly move workloads to where it matters to you.

http://kubernetes.io/

Anywhere = ?

- Run your Kubernetes locally for development with minikube

- On bare metal and on IaaSes including GCP, AWS, OpenStack, etc.

- On Ubuntu, CentOS, CoreOS, etc.

Context: AWS

- Kubernetes

- AWS

- Why Kubernetes on AWS

- Why they matter to you

AWS is a leader in IaaS

AWS is IaaS

- VPS

- IaaS

- PaaS

- SaaS

VPS

- Virtual Private Server

- Equipped with a common set of services (including preinstalled software, public IPs, storage, CPU, memory, etc.)

- e.g. DigitalOcean, Sakura VPS (さくらのVPS)

IaaS

- Infrastructure as a Service

- Provides a set of resources and APIs to build your own virtual data centers

- e.g. Azure, GCP, AWS, etc

PaaS

- Platform as a Service

- Provides a platform to run your applications without having to manage the infrastructure

- e.g. Heroku, Google App Engine

SaaS

- Software as a Service

- Provides software or managed applications that assist part of your business

Context: Why Kubernetes on AWS

- Kubernetes

- AWS

- Why Kubernetes on AWS

- Why they matter to you

Recap: What is Kubernetes?

- Kubernetes is all about running your application in a unified way regardless of where it runs: from home to a datacenter, from a laptop to a rack of blade servers

Kubernetes on What?

- VPS can be used to run Kubernetes

- There are PaaSes that host your Kubernetes clusters

- There are SaaSes hosted on Kubernetes

- There are IaaSes that provide the foundations to build and host your Kubernetes clusters

So: Why Kubernetes on IaaS?

- IaaS allows us to build our own PaaS based on Kubernetes to host our SaaS according to our requirements

Context: Why they matter to you

- Kubernetes

- AWS

- Why Kubernetes on AWS

- Why they matter to you

builderscon is

- IMHO builderscon is mostly a conference for programmers.

- Why do all those things matter to us programmers?

From a programmer's viewpoint: API

- Kubernetes provides a rich set of APIs to manage Kubernetes resources

- AWS provides a rich set of APIs to manage AWS resources

From a programmer's viewpoint: Kubernetes and AWS

- Kubernetes is just a framework to program how my applications are managed

- AWS is just a framework to program how my Kubernetes clusters are managed

I'd like to program apps, not manage them

- Kubernetes allows us to focus on programming

- Also: Kubernetes and its surrounding ecosystem are themselves interesting things to program against

Now, you understand the context

- Kubernetes

- AWS

- Why they matter to you

Let's keep going

About this presentation

- Topics explained in this presentation

- Questions answered in this presentation

Topics explained in this presentation

- Overview of Kubernetes on AWS

- Detailed guide for kube-aws

- Related personal experience(s) from the presenter

Questions answered in this presentation

- How to achieve high scalability w/ Kubernetes on AWS?

- How to achieve high availability w/ Kubernetes on AWS?

- Are there any tool(s) to deploy and manage Kubernetes clusters?

- How to select tool(s) for your use?

- Requirements which arise frequently today

- What is kube-aws? How to use it?

- What's my use-case?

- Requirements

- Tools

Overview of Kubernetes on AWS

- How to achieve high availability and scalability

- Tools

- How to select those tools

High availability with Kubernetes on AWS

- High availability in a nutshell

- How to achieve it

High availability in a nutshell

Little or no downtime when one or more nodes or pods shut down, whether expected or not

- Pods = Sets of containers running on EC2 instances

- Nodes = EC2 instances

High availability and Redundancy

- Pods = Sets of containers

  - 2 or more identical pods

  - ReplicaSets in Kubernetes

- Nodes = EC2 instances

  - 2 or more nodes usable to host the same set of pods

  - Auto Scaling Group and/or Spot Fleet in AWS
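The two kinds of redundancy only help together: two identical pods on the same node still go down with that node. The following sketch checks a pod placement for that case. The function name and the pod/node names are hypothetical, and the placement is passed in as a plain dictionary rather than read from a cluster.

```python
def survives_any_single_node_failure(pod_to_node):
    """Return True if at least one replica keeps running when any one node is lost.

    pod_to_node maps a pod name to the node it runs on (illustrative data only).
    """
    nodes_in_use = set(pod_to_node.values())
    # Replicas on two or more distinct nodes cannot all be lost with one node.
    return len(nodes_in_use) >= 2


if __name__ == "__main__":
    print(survives_any_single_node_failure({"web-1": "node-a", "web-2": "node-b"}))
    print(survives_any_single_node_failure({"web-1": "node-a", "web-2": "node-a"}))
```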

High scalability with Kubernetes on AWS

- High scalability in a nutshell

- How to achieve it

High scalability in a nutshell

No wasted cost and no user-visible degradation as the workload shrinks or grows

- Pods = Sets of containers running on EC2 instances

- Nodes = EC2 instances

Cost = Number of nodes * unit price

Number of nodes = Total resources required by pods / Resources per node (rounded up)
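As a worked example of this arithmetic, the sketch below estimates a node count and an hourly cost. All numbers (pod requests, node size, unit price) are invented for illustration, and the result is only a lower bound because it ignores how pods actually pack onto nodes.

```python
import math


def nodes_needed(pod_requests, node_cpu_millicores, node_memory_mib):
    """Lower bound on the node count from the pods' total CPU and memory requests.

    pod_requests is a list of (cpu_millicores, memory_mib) tuples.
    """
    total_cpu = sum(cpu for cpu, _ in pod_requests)
    total_memory = sum(memory for _, memory in pod_requests)
    # The scarcer of the two resources decides how many nodes are needed.
    return max(
        math.ceil(total_cpu / node_cpu_millicores),
        math.ceil(total_memory / node_memory_mib),
    )


def hourly_cost(node_count, unit_price_per_hour):
    """Cost = number of nodes * unit price."""
    return node_count * unit_price_per_hour


if __name__ == "__main__":
    # Ten pods, each requesting 500m CPU and 1024 MiB (hypothetical values).
    pods = [(500, 1024)] * 10
    count = nodes_needed(pods, node_cpu_millicores=2000, node_memory_mib=4096)
    print(count, hourly_cost(count, unit_price_per_hour=0.10))
```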

High scalability for pods

- Pods = Sets of containers

  - Fewer pods when there is less workload, more pods when there is more

  - Kubernetes's Horizontal Pod Autoscaler

  - kube-sqs-autoscaler from Wattpad
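The scaling rule behind the Horizontal Pod Autoscaler can be sketched roughly as follows. This is a simplified reading of the documented rule (desired replicas = ceil(current replicas * current metric / target metric), clamped to a minimum and maximum); it leaves out details such as tolerances and pod readiness, and the function and parameter names are my own.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas, max_replicas):
    """Simplified horizontal scaling rule, clamped to [min_replicas, max_replicas]."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 pods at 90% average CPU with a 60% target (hypothetical values).
    print(desired_replicas(4, 90, 60, min_replicas=1, max_replicas=10))
```

The same shape works for other metrics, such as a queue length per pod, as long as the metric grows with the workload.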

High scalability for nodes

- Nodes = EC2 instances

  - Fewer nodes when there are fewer pods, more nodes when there are more pods

  - Kubernetes's cluster-autoscaler

    - Adds nodes until there is enough capacity to schedule all the pending pods

  - AWS Auto Scaling

    - Adds/removes nodes according to CPU and/or memory usage
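To illustrate the "enough capacity for all pending pods" idea, here is a small sketch that counts how many new nodes a batch of pending pods would need. It uses a first-fit-decreasing packing on CPU requests only, which is a stand-in I chose for brevity; the real cluster-autoscaler simulates the scheduler and considers far more than CPU. All names and numbers are hypothetical.

```python
def extra_nodes_for(pending_cpu_millicores, node_cpu_millicores):
    """Count the new nodes needed to place every pending pod (CPU only)."""
    free = []  # remaining CPU on each node added so far
    for request in sorted(pending_cpu_millicores, reverse=True):
        if request > node_cpu_millicores:
            raise ValueError(f"pod requesting {request}m does not fit on any node")
        for index, remaining in enumerate(free):
            if request <= remaining:
                # Place the pod on the first node that still has room.
                free[index] = remaining - request
                break
        else:
            # No existing node has room: add a node and place the pod there.
            free.append(node_cpu_millicores - request)
    return len(free)


if __name__ == "__main__":
    print(extra_nodes_for([1500, 1000, 500, 500], node_cpu_millicores=2000))
```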

Are there any tool(s) to deploy and manage Kubernetes clusters?

- Yes, a lot

- klondike, kubernetes-anywhere, kops, kube-aws, kope, kube-cluster, kubeadm-aws, halcyon-kubernetes, tack, etc.

An overview of those tools

How to select tool(s) for your use?

Selecting a tool is all about:

- knowing your requirements,

- investing in tools, and then

- connecting them

Knowing your requirements

Requirements which arise frequently today:

- Highly available

- Highly scalable

- Highly secure

- Locked in only to technologies that give you higher leverage

Lock-in to what gives you higher leverage?

- An IaaS that frequently reduces its prices

- An IaaS that frequently adds new features, APIs, SDKs, etc., which are ready to be used for your business

- // AWS for me

Detailed guide for kube-aws

- For all:

  - kube-aws in a nutshell

- For users:

  - Usage guide for kube-aws

- For developers:

  - Development guide for kube-aws

- For potential contributors:

  - Contribution guide for kube-aws

kube-aws in a nutshell

- A single binary to create/update/destroy Kubernetes cluster(s) on AWS

- Infrastructure as Code achieved via AWS CloudFormation + Golang

- Originally developed by CoreOS, currently maintained by the community

https://github.com/coreos/kube-aws

Under the hood of kube-aws

- Standard Golang application based on Golang 1.7, glide, cobra, etc., coupled w/

  - CoreOS, which has official support for running Kubernetes

  - Kubernetes 1.4.x, with upstream releases followed in a timely manner

  - Various AWS services

    - VPC, EC2, ELB, Route53, KMS, CloudFormation

kube-aws does not use

- Terraform

- Ansible

- Chef, Puppet, Saltstack, etc.

Usage guide for kube-aws

Create your first Kubernetes cluster on AWS

- Install `kube-aws`

- Generate `cluster.yaml`

- Customize your cluster

- Render the contents of the assets directory

- Validate your configuration

- Updating the cluster without downtime

- Destroying the cluster

Creating your first Kubernetes cluster on AWS

- Create keys

- Install the latest version of the `kube-aws` command

- Customizing the cluster

Prepare keys

- EC2 Keypair

  - Used to authenticate SSH access to your server

- KMS key

  - Used to encrypt/decrypt TLS assets generated by kube-aws

Install the kube-aws command

$ platform=darwin-amd64

$ version=v0.9.2-rc.1

$ curl -L https://github.com/coreos/kube-aws/releases/download/${version}/kube-aws-${platform}.tar.gz | tar zxv ${platform}/kube-aws && \
  mv ${platform}/kube-aws /usr/local/bin/kube-aws && \
  chmod +x /usr/local/bin/kube-aws

$ kube-aws version

kube-aws version v0.9.2-rc.1

https://github.com/coreos/kube-aws/releases

Customizing the cluster

Generate a cluster.yaml:

$ mkdir my-cluster

$ cd my-cluster

$ kube-aws init \
  --cluster-name=my-cluster-name \
  --external-dns-name=my-cluster-endpoint \
  --region=us-west-1 \
  --availability-zone=us-west-1c \
  --key-name=key-pair-name \
  --kms-key-arn="arn:aws:kms:us-west-1:xxxxxxxxxx:key/xxxxxxxxxxxxxxxxxxx"

Customizing the cluster

cluster.yaml:

clusterName: my-cluster-name

externalDNSName: my-cluster-name-api.my.domain

releaseChannel: stable

createRecordSet: true

recordSetTTL: 300

hostedZoneId: "XASDASDASD" # DEV private only route53 zone

keyName: my-ssh-keypair

region: ap-northeast-1

availabilityZone: ap-northeast-1c

kmsKeyArn: "arn:aws:kms:ap-northeast-1:0w123456789:key/d345fcd1-c77c-4fca-acdc-asdasdf3234232"

controllerCount: 1

controllerInstanceType: m3.medium

controllerRootVolumeSize: 30

controllerRootVolumeType: gp2

workerCount: 1

workerInstanceType: m3.medium

workerRootVolumeSize: 30

workerRootVolumeType: gp2

etcdCount: 1

etcdInstanceType: m3.medium

etcdRootVolumeSize: 30

etcdDataVolumeSize: 30

vpcId: vpc-xxxccc45

routeTableId: "rtb-aaabbb12" # main external no NAT uses internet gateway

vpcCIDR: "10.1.0.0/16"

instanceCIDR: "10.1.10.0/24"

serviceCIDR: "10.3.0.0/24"

podCIDR: "10.2.0.0/16"

dnsServiceIP: 10.3.0.10

stackTags:

  Name: "my-cluster-name"

  Environment: "development"

Render the contents of the assets directory

$ kube-aws render

$ tree

.
├── cluster.yaml
├── credentials
│   ├── admin-key.pem
│   ├── admin.pem
│   ├── apiserver-key.pem
│   ├── apiserver.pem
│   ├── ca-key.pem
│   ├── ca.pem
│   ├── worker-key.pem
│   ├── worker.pem
│   ├── etcd-key.pem
│   ├── etcd.pem
│   ├── etcd-client-key.pem
│   └── etcd-client.pem
├── kubeconfig
├── stack-template.json
└── userdata
    ├── cloud-config-controller
    └── cloud-config-worker

Credential files

- `admin*.pem`, used on your local machine and/or build servers

- `worker*.pem`, used in worker nodes

- `apiserver*.pem`, used in controller nodes

- `etcd-client*.pem`, used in worker and controller nodes

- `etcd*.pem`, used in etcd nodes

Credential file naming

- `foo-key.pem`: the private key for `foo` access

- `foo.pem`: the certificate (public key) for `foo` access
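Given this naming rule, a quick sanity check of a rendered `credentials` directory could look like the sketch below. The helper names are hypothetical and this is not something kube-aws ships; the list of names is taken from the credentials directory listing shown earlier.

```python
from pathlib import Path

# Names taken from the credentials directory listing earlier in this guide.
CREDENTIAL_NAMES = ("admin", "apiserver", "ca", "worker", "etcd", "etcd-client")


def expected_credential_files(names=CREDENTIAL_NAMES):
    """Apply the naming rule: each name has a private key and a certificate."""
    files = []
    for name in names:
        files.append(f"{name}-key.pem")  # private key
        files.append(f"{name}.pem")      # certificate (public key)
    return files


def missing_credential_files(directory, names=CREDENTIAL_NAMES):
    """Return the expected file names that do not exist in the directory."""
    directory = Path(directory)
    return [name for name in expected_credential_files(names)
            if not (directory / name).is_file()]


if __name__ == "__main__":
    print(missing_credential_files("credentials"))
```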

That's a lot!

- Any small mistake in the configuration can result in a non-functional cluster

- That's why we need `kube-aws validate`

Validate your configuration

$ kube-aws validate

- Fails only when there are syntax and/or semantic errors in your configuration files

  - Especially in:

    - cloud-config

    - CloudFormation templates

- No need to wait for minutes until you find easy mistakes like typos
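To give a feel for what a "semantic error" means here, the sketch below checks a few relationships between the network settings of a `cluster.yaml`. It is an illustration only: the function name and the three rules are my own assumptions, not kube-aws's actual validation code, and the settings are passed in as an already-parsed dictionary.

```python
import ipaddress


def check_network_settings(config):
    """Return a list of problems found; an empty list means none were found."""
    vpc = ipaddress.ip_network(config["vpcCIDR"])
    instance = ipaddress.ip_network(config["instanceCIDR"])
    service = ipaddress.ip_network(config["serviceCIDR"])
    pod = ipaddress.ip_network(config["podCIDR"])
    dns_ip = ipaddress.ip_address(config["dnsServiceIP"])

    problems = []
    if not instance.subnet_of(vpc):
        problems.append(f"instanceCIDR {instance} is not inside vpcCIDR {vpc}")
    if dns_ip not in service:
        problems.append(f"dnsServiceIP {dns_ip} is not inside serviceCIDR {service}")
    if pod.overlaps(service):
        problems.append(f"podCIDR {pod} overlaps serviceCIDR {service}")
    return problems


if __name__ == "__main__":
    # Values copied from the example cluster.yaml in this guide.
    example = {
        "vpcCIDR": "10.1.0.0/16",
        "instanceCIDR": "10.1.10.0/24",
        "serviceCIDR": "10.3.0.0/24",
        "podCIDR": "10.2.0.0/16",
        "dnsServiceIP": "10.3.0.10",
    }
    print(check_network_settings(example))
```

Checks like these run in well under a second, which is the point of validating before launching anything on AWS.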

It is just "validating"

- `kube-aws validate` doesn't (and can't) test the functional correctness of the resulting Kubernetes cluster

- That is covered by our end-to-end testing

Launch your cluster

$ kube-aws up

Creating AWS resources. This should take around 5 minutes.

Success! Your AWS resources have been created:

Cluster Name: my-cluster-name

The containers that power your cluster are now being downloaded.

You should be able to access the Kubernetes API once the containers finish downloading.

Or just export your cluster

$ kube-aws up --export

- Generates a CloudFormation stack template

- A template is a JSON file which defines which AWS resources to create and how they're connected.

Destroy your cluster

$ kube-aws destroy

Development guide for kube-aws

- Understanding how kube-aws is built

- Build your own `kube-aws`

- Make targets

- End-to-end testing in kube-aws

Understanding how kube-aws is built

- golang 1.7.x

- glide for package management

- Alternatives: Godep, trash, govendor, etc.

- `go generate` + `go run` for code generation

  - To embed the default configuration files into kube-aws binaries

- `go test` for unit testing

- `gofmt` for code formatting and validation

Build your own kube-aws

- Install required tools

- git clone

- make build

Installing required tools

brew update

brew install go

brew install glide

brew install make

Clone the repository to an appropriate path

mkdir -p $GOPATH/src/github.com/coreos/kube-aws

git clone git@github.com:coreos/kube-aws.git \
  $GOPATH/src/github.com/coreos/kube-aws

Build a kube-aws binary

$ make build

./build

Building kube-aws 102cb5b8c9d71af1a5074b77955c4913e7d0b84d

$ bin/kube-aws version

kube-aws version 102cb5b8c9d71af1a5074b77955c4913e7d0b84d

FYI: Make targets

- `make build` to build `bin/kube-aws`

- `make format` to automatically format all the code of kube-aws

- `make test` to run all the unit tests

End-to-end testing in kube-aws

- Create a main cluster

- Create a node pool

- Update the main cluster

- Run Kubernetes conformance tests

Kubernetes conformance tests

- are a subset of Kubernetes E2E tests

- confirm that the minimum set of features required for a cluster to count as a Kubernetes cluster works properly

Kubernetes E2E tests

End-to-end (e2e) tests for Kubernetes provide a mechanism to test end-to-end behavior of the system, and is the last signal to ensure end user operations match developer specifications.

End to end testing in Kubernetes

Why E2E tests are needed

Although unit and integration tests provide a good signal, in a distributed system like Kubernetes it is not uncommon that a minor change may pass all unit and integration tests, but cause unforeseen changes at the system level.

End to end testing in Kubernetes

Running End-to-end tests

- `cd e2e && KUBE_AWS_CLUSTER_NAME=my-cluster-name ./run.sh all`

- It takes approximately 1 hour to run each test case

- The good news is that we run it against multiple combinations of settings and options

  - Before each new kube-aws release

  - For you

Contribution guide for kube-aws

- Where to start: GitHub issues

- What is discussed and answered

- Please file your own issue(s)!

Where to start

Our GitHub issues page is the single place to start your contribution:

Discussed and answered in GitHub issues

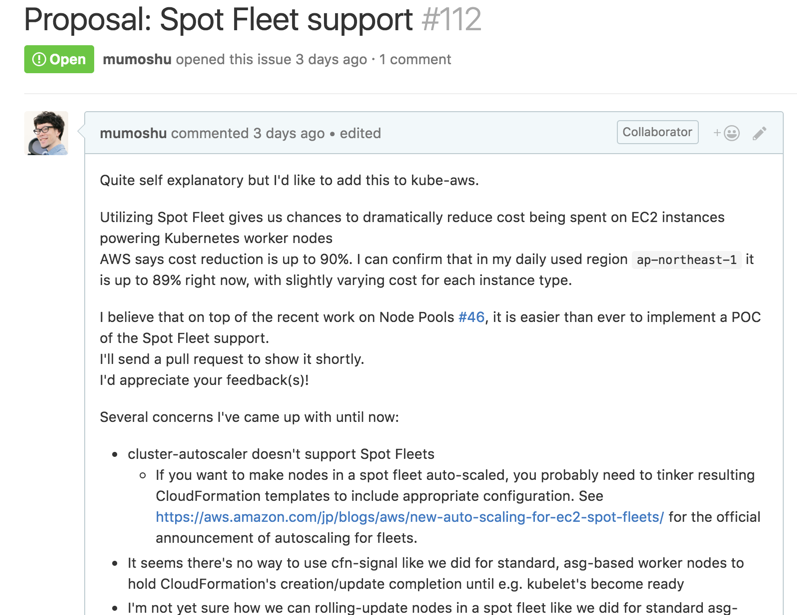

- Feature Request

- Proposal

  - For possible features

  - For possible improvement(s) in the documentation

- Bug reports

- Questions

What a proposal would look like

Please file your own issue(s)!

All feedback, however small, is welcome and helps shape our goals and plans toward production-ready Kubernetes clusters on AWS

Fin.

Thanks for your attention and interest in Kubernetes on AWS.