#1. Quick start: for those who want to use this right away

「PythonとKerasによるディープラーニング」 (Deep Learning with Python and Keras), by Francois Chollet

https://book.mynavi.jp/ec/products/detail/id=90124

<This article is a work in progress. I will add to it over time.>

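How to use this document

This article explains how to use docker to run the source code published on GitHub.

Some of the programs provided as Jupyter notebooks can also be used as a single Python program if all of their cells are concatenated and run together.

For the 8.1 example, I actually concatenated the cells into one Python program and confirmed that it runs.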
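As a rough illustration of what concatenating a notebook involves, the sketch below reads the notebook JSON with Python's standard json module and joins the source of every code cell. The function names notebook_to_script and convert_file are hypothetical, and IPython magics such as %matplotlib inline would still have to be removed by hand. The jupyter nbconvert --to script command is the usual tool for this job; this is only a minimal sketch of the idea.

```python
import json


def notebook_to_script(notebook):
    """Join the source of every code cell of a parsed .ipynb into one script."""
    chunks = []
    for cell in notebook.get("cells", []):
        if cell.get("cell_type") != "code":
            continue  # skip markdown and raw cells
        source = cell.get("source", "")
        # The notebook format stores source either as one string or as a list of lines
        if isinstance(source, list):
            source = "".join(source)
        if source.strip():
            chunks.append(source.rstrip("\n"))
    return "\n\n".join(chunks) + "\n"


def convert_file(ipynb_path, py_path):
    """Read an .ipynb file and write its concatenated code cells to py_path."""
    with open(ipynb_path, encoding="utf-8") as f:
        notebook = json.load(f)
    with open(py_path, "w", encoding="utf-8") as f:
        f.write(notebook_to_script(notebook))
```

For example, convert_file("8.1-text-generation-with-lstm.ipynb", "26.py") would produce a script that can then be run with python 26.py, assuming the notebook contains no magics.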

docker

Install docker, and on Windows and Mac make sure docker is running.

On Windows, docker may fail to start unless Intel Virtualization is enabled in the BIOS.

Security warnings may also appear.

docker on M.S. Windows

https://qiita.com/kaizen_nagoya/items/db25c0493170cb8cbcf6

docker pull and run

$ docker pull kaizenjapan/anaconda-francois

$ docker run -it -p 8888:8888 kaizenjapan/anaconda-francois /bin/bash

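In the shell sessions below,

(base) root@f19e2f06eabb:/# is the command prompt. The ID part (f19e2f06eabb here) may differ in your environment. Type what appears to the right of the # on those lines.

All other lines are output. If you find errors or differences in the output, I would appreciate hearing about it in the comments section.

Move to the folder for each chapter.

When the prompt inside docker looks similar to the shell prompt of the OS that started docker, it is easy to mistake which one you are operating in. Pay attention to the docker command prompt.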
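To make the prompt convention concrete, here is a small sketch that separates the lines typed at the container prompt from everything else in a pasted session. The regular expression assumes the prompt shape seen in this article, (base) root@ followed by a 12-digit hexadecimal container ID; the names CONTAINER_PROMPT and split_session are hypothetical, and a different image may use a different prompt.

```python
import re

# Assumed prompt shape: (base) root@<12 hex digits>:<current directory>#
CONTAINER_PROMPT = re.compile(
    r"^\(base\) root@(?P<container>[0-9a-f]{12}):(?P<cwd>\S*)# ?(?P<command>.*)$"
)


def split_session(lines):
    """Return (commands typed inside the container, all other non-empty lines)."""
    commands, others = [], []
    for line in lines:
        match = CONTAINER_PROMPT.match(line)
        if match:
            commands.append(match.group("command"))
        elif line.strip():
            others.append(line)
    return commands, others
```

Lines that start with a host prompt, such as the MacBook prompt that appears later in this article, do not match and end up in the second list, which is one way to notice that a command was typed on the host rather than inside the container.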

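File sharing or copying

Between docker and the OS that started docker, either share files or copy them, and then view the generated files in a browser or similar tool on the host. URLs describing how to do this are listed in the references section.

For copying, I ran the command on the side of the OS that started docker. Replace the container ID with your own. I opened the copied files in a browser and checked their contents.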
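The copy command itself does not appear in the text above. As a hedged sketch, the standard form is docker cp <container>:<path in container> <path on host>, run on the host OS. The helper below only assembles that command line; the function names, the example notebook path, and the destination directory are illustrative assumptions. Replace the container ID with the one shown by docker ps.

```python
import shlex
import subprocess


def docker_cp_command(container_id, path_in_container, path_on_host="."):
    """Build the argv that copies a file out of a container to the host."""
    return ["docker", "cp", f"{container_id}:{path_in_container}", path_on_host]


def copy_from_container(container_id, path_in_container, path_on_host="."):
    """Run docker cp on the host; raises CalledProcessError if the copy fails."""
    subprocess.run(
        docker_cp_command(container_id, path_in_container, path_on_host),
        check=True,
    )


if __name__ == "__main__":
    cmd = docker_cp_command(
        "19b116a46da8",  # example container ID taken from the session in this article
        "/deep-learning-with-python-notebooks/2.1-a-first-look-at-a-neural-network.ipynb",
    )
    # Print the command instead of running it, so it can be checked first
    print(" ".join(shlex.quote(arg) for arg in cmd))
```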

(base) root@19b116a46da8:/# ls

bin deep-learning-with-keras-ja dev home lib64 mnt proc run srv tmp var

boot deep-learning-with-python-notebooks etc lib media opt root sbin sys usr

(base) root@19b116a46da8:/# cd deep-learning-with-python-notebooks/

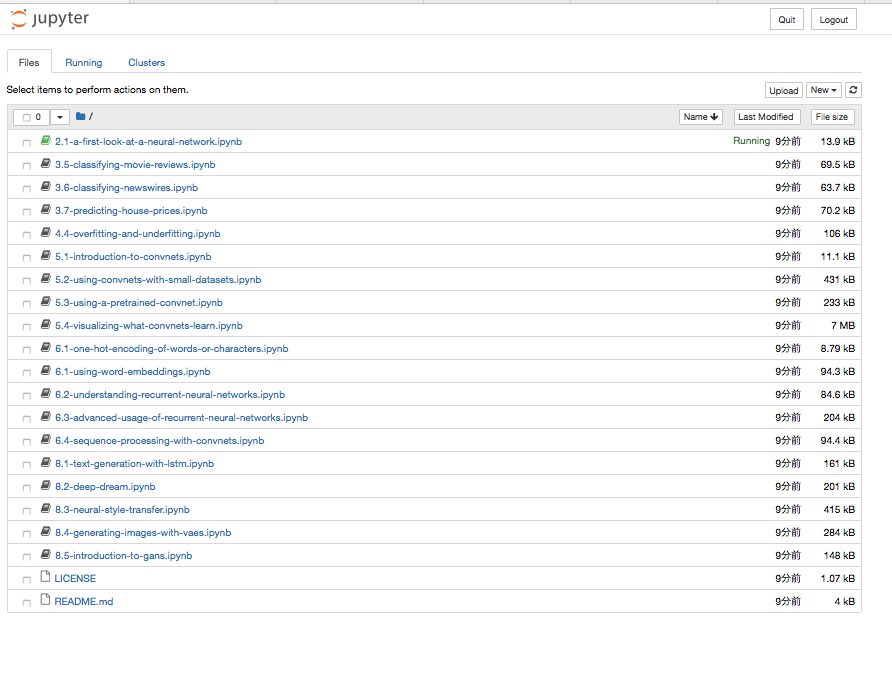

(base) root@19b116a46da8:/deep-learning-with-python-notebooks# ls

2.1-a-first-look-at-a-neural-network.ipynb 5.3-using-a-pretrained-convnet.ipynb 8.1-text-generation-with-lstm.ipynb

3.5-classifying-movie-reviews.ipynb 5.4-visualizing-what-convnets-learn.ipynb 8.2-deep-dream.ipynb

3.6-classifying-newswires.ipynb 6.1-one-hot-encoding-of-words-or-characters.ipynb 8.3-neural-style-transfer.ipynb

3.7-predicting-house-prices.ipynb 6.1-using-word-embeddings.ipynb 8.4-generating-images-with-vaes.ipynb

4.4-overfitting-and-underfitting.ipynb 6.2-understanding-recurrent-neural-networks.ipynb 8.5-introduction-to-gans.ipynb

5.1-introduction-to-convnets.ipynb 6.3-advanced-usage-of-recurrent-neural-networks.ipynb LICENSE

5.2-using-convnets-with-small-datasets.ipynb 6.4-sequence-processing-with-convnets.ipynb README.md

jupyter notebook

(base) root@19b116a46da8:/deep-learning-with-python-notebooks# jupyter notebook --ip=0.0.0.0 --allow-root

[I 04:47:52.531 NotebookApp] Writing notebook server cookie secret to /root/.local/share/jupyter/runtime/notebook_cookie_secret

[I 04:47:52.776 NotebookApp] JupyterLab beta preview extension loaded from /opt/conda/lib/python3.6/site-packages/jupyterlab

[I 04:47:52.776 NotebookApp] JupyterLab application directory is /opt/conda/share/jupyter/lab

[I 04:47:52.785 NotebookApp] Serving notebooks from local directory: /deep-learning-with-python-notebooks

[I 04:47:52.786 NotebookApp] 0 active kernels

[I 04:47:52.786 NotebookApp] The Jupyter Notebook is running at:

[I 04:47:52.786 NotebookApp] http://19b116a46da8:8888/?token=5ca23859604dcac80e266f93ec2194c802e98f432729aa5d

[I 04:47:52.786 NotebookApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).

[W 04:47:52.787 NotebookApp] No web browser found: could not locate runnable browser.

[C 04:47:52.787 NotebookApp]

Copy/paste this URL into your browser when you connect for the first time,

to login with a token:

http://19b116a46da8:8888/?token=5ca23859604dcac80e266f93ec2194c802e98f432729aa5d&token=5ca23859604dcac80e266f93ec2194c802e98f432729aa5d

[I 04:48:11.426 NotebookApp] 302 GET / (172.17.0.1) 0.64ms

[W 04:48:11.433 NotebookApp] Clearing invalid/expired login cookie username-localhost-8888

[W 04:48:11.434 NotebookApp] Clearing invalid/expired login cookie username-localhost-8888

[I 04:48:11.435 NotebookApp] 302 GET /tree? (172.17.0.1) 2.66ms

[I 04:48:16.289 NotebookApp] 302 POST /login?next=%2Ftree%3F (172.17.0.1) 1.77ms

[I 04:48:21.752 NotebookApp] Writing notebook-signing key to /root/.local/share/jupyter/notebook_secret

[W 04:48:21.757 NotebookApp] Notebook 2.1-a-first-look-at-a-neural-network.ipynb is not trusted

[I 04:48:22.837 NotebookApp] Kernel started: fe0e9fe5-2acc-488a-b574-315edf559da0

[I 04:48:23.453 NotebookApp] Adapting to protocol v5.1 for kernel fe0e9fe5-2acc-488a-b574-315edf559da0

[I 04:50:22.814 NotebookApp] Saving file at /2.1-a-first-look-at-a-neural-network.ipynb

[W 04:50:22.818 NotebookApp] Notebook 2.1-a-first-look-at-a-neural-network.ipynb is not trusted

[W 04:50:27.598 NotebookApp] Notebook 2.1-a-first-look-at-a-neural-network.ipynb is not trusted

[I 04:50:28.635 NotebookApp] Adapting to protocol v5.1 for kernel fe0e9fe5-2acc-488a-b574-315edf559da0

In a browser, open

localhost:8888

In the case above, enter

5ca23859604dcac80e266f93ec2194c802e98f432729aa5d

as the token.
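The URL printed in the log uses the container ID as its host name, which a browser on the host normally cannot resolve; with the -p 8888:8888 mapping, localhost is used instead. The sketch below pulls the token out of a logged URL with the standard urllib.parse module and builds the localhost form. The function name browser_url is hypothetical, and the default port 8888 is an assumption matching the docker run command in this article.

```python
from urllib.parse import urlsplit, parse_qs


def browser_url(logged_url, host="localhost"):
    """Turn the URL printed by the notebook server into one usable on the host.

    Returns (url, token).
    """
    parts = urlsplit(logged_url)
    # The log may repeat the token parameter; the first value is enough
    token = parse_qs(parts.query)["token"][0]
    port = parts.port or 8888
    return f"http://{host}:{port}/?token={token}", token
```

Passing the URL from the log above returns a localhost URL that can be pasted into the browser directly, so the token does not have to be typed separately.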

Warnings and other messages

How to use TensorBoard is still under investigation.

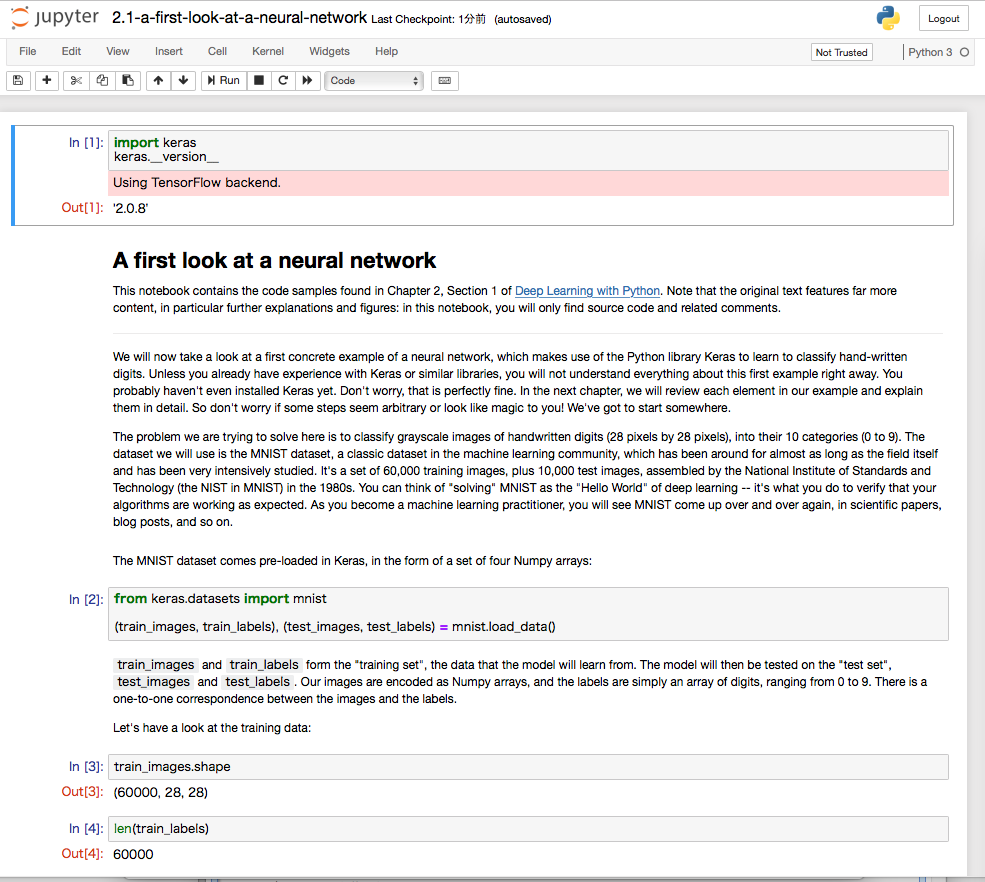

2.1-a-first-look-at-a-neural-network

Using TensorFlow backend.

##3.5-classifying-movie-reviews.ipynb

No warnings

3.6-classifying-newswires.ipynb

Using TensorFlow backend.

##3.7-predicting-house-prices.ipynb

Using TensorFlow backend.

4.4-overfitting-and-underfitting.ipynb

Using TensorFlow backend.

5.1-introduction-to-convnets.ipynb

Using TensorFlow backend.

5.2-using-convnets-with-small-datasets.ipynb

Using TensorFlow backend.

5.3-using-a-pretrained-convnet.ipynb

Using TensorFlow backend.

5.4-visualizing-what-convnets-learn

Using TensorFlow backend.

6.1-one-hot-encoding-of-words-or-characters

6.1-using-word-embeddings

Using TensorFlow backend.

6.2-understanding-recurrent-neural-networks

Using TensorFlow backend.

6.3-advanced-usage-of-recurrent-neural-networks

Using TensorFlow backend.

6.4-sequence-processing-with-convnets

Using TensorFlow backend.

8.1-text-generation-with-lstm

/usr/local/lib/python3.5/dist-packages/ipykernel_launcher.py:3: RuntimeWarning: divide by zero encountered in log

This is separate from the ipykernel package so we can avoid doing imports until

{

"name": "stderr",

"output_type": "stream",

"text": [

"/usr/local/lib/python3.5/dist-packages/ipykernel_launcher.py:3: RuntimeWarning: divide by zero encountered in log\n",

" This is separate from the ipykernel package so we can avoid doing imports until\n"

]

},

import keras
import numpy as np

path = keras.utils.get_file(
    'nietzsche.txt',
    origin='https://s3.amazonaws.com/text-datasets/nietzsche.txt')
text = open(path).read().lower()
print('Corpus length:', len(text))

# Length of extracted character sequences
maxlen = 60

# We sample a new sequence every `step` characters
step = 3

# This holds our extracted sequences
sentences = []

# This holds the targets (the follow-up characters)
next_chars = []

for i in range(0, len(text) - maxlen, step):
    sentences.append(text[i: i + maxlen])
    next_chars.append(text[i + maxlen])
print('Number of sequences:', len(sentences))

# List of unique characters in the corpus
chars = sorted(list(set(text)))
print('Unique characters:', len(chars))
# Dictionary mapping unique characters to their index in `chars`
char_indices = dict((char, chars.index(char)) for char in chars)

# Next, one-hot encode the characters into binary arrays.
# The builtin bool is used because the np.bool alias was removed in NumPy 1.24.
print('Vectorization...')
x = np.zeros((len(sentences), maxlen, len(chars)), dtype=bool)
y = np.zeros((len(sentences), len(chars)), dtype=bool)
for i, sentence in enumerate(sentences):
    for t, char in enumerate(sentence):
        x[i, t, char_indices[char]] = 1
    y[i, char_indices[next_chars[i]]] = 1

from keras import layers

model = keras.models.Sequential()
model.add(layers.LSTM(128, input_shape=(maxlen, len(chars))))
model.add(layers.Dense(len(chars), activation='softmax'))

# Newer Keras releases name this argument learning_rate instead of lr
optimizer = keras.optimizers.RMSprop(lr=0.01)
model.compile(loss='categorical_crossentropy', optimizer=optimizer)

def sample(preds, temperature=1.0):
    preds = np.asarray(preds).astype('float64')
    preds = np.log(preds) / temperature
    exp_preds = np.exp(preds)
    preds = exp_preds / np.sum(exp_preds)
    probas = np.random.multinomial(1, preds, 1)
    return np.argmax(probas)

import random
import sys

for epoch in range(1, 60):
    print('epoch', epoch)
    # Fit the model for 1 epoch on the available training data
    model.fit(x, y,
              batch_size=128,
              epochs=1)

    # Select a text seed at random
    start_index = random.randint(0, len(text) - maxlen - 1)
    generated_text = text[start_index: start_index + maxlen]
    print('--- Generating with seed: "' + generated_text + '"')

    for temperature in [0.2, 0.5, 1.0, 1.2]:
        print('------ temperature:', temperature)
        sys.stdout.write(generated_text)

        # We generate 400 characters
        for i in range(400):
            sampled = np.zeros((1, maxlen, len(chars)))
            for t, char in enumerate(generated_text):
                sampled[0, t, char_indices[char]] = 1.

            preds = model.predict(sampled, verbose=0)[0]
            next_index = sample(preds, temperature)
            next_char = chars[next_index]

            generated_text += next_char
            generated_text = generated_text[1:]

            sys.stdout.write(next_char)
            sys.stdout.flush()
        print()
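The "divide by zero encountered in log" warning quoted earlier most likely comes from np.log(preds) inside sample when the softmax output contains an exact zero. That is my reading of the code, not something the notebook states. Below is a minimal sketch of the same temperature reweighting that avoids the warning by clipping the probabilities before taking the logarithm. The names reweight and sample_stable and the eps value are assumptions for illustration; this is not the book's implementation.

```python
import numpy as np


def reweight(preds, temperature=1.0, eps=1e-12):
    """Reweight a probability vector by temperature without taking log(0)."""
    preds = np.asarray(preds, dtype='float64')
    # Clip exact zeros up to eps so the logarithm stays finite
    logits = np.log(np.clip(preds, eps, None)) / temperature
    # Subtracting the maximum keeps the exponentials in a safe range
    logits = logits - logits.max()
    exp_logits = np.exp(logits)
    return exp_logits / exp_logits.sum()


def sample_stable(preds, temperature=1.0):
    """Draw one index from the reweighted distribution."""
    probs = reweight(preds, temperature)
    return int(np.random.choice(len(probs), p=probs))
```

A temperature below 1.0 sharpens the distribution toward the most likely character, and a temperature above 1.0 flattens it, which matches the increasingly erratic text in the output below as the temperature rises from 0.2 to 1.2.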

$ python 26.py

epoch 1

Traceback (most recent call last):

File "26.py", line 7, in <module>

model.fit(x, y,

NameError: name 'model' is not defined

OgawaKiyoshi-no-MacBook-Pro:deep-learning-with-python-notebooks ogawakiyoshi$ vi 26.py

OgawaKiyoshi-no-MacBook-Pro:deep-learning-with-python-notebooks ogawakiyoshi$ python 26.py

Using TensorFlow backend.

Downloading data from https://s3.amazonaws.com/text-datasets/nietzsche.txt

606208/600901 [==============================] - 1s 2us/step

Corpus length: 600893

Number of sequences: 200278

Unique characters: 57

Vectorization...

epoch 1

Epoch 1/1

2018-10-18 16:23:32.775346: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: SSE4.1 SSE4.2 AVX AVX2 FMA

2018-10-18 16:23:32.775689: I tensorflow/core/common_runtime/process_util.cc:69] Creating new thread pool with default inter op setting: 4. Tune using inter_op_parallelism_threads for best performance.

200278/200278 [==============================] - 868s 4ms/step - loss: 1.9877

--- Generating with seed: "he race and not to him who has arrived at the goal.

somethin"

------ temperature: 0.2

he race and not to him who has arrived at the goal.

something the spection of the seept the action of the sout the present the spirit to the spection of the present the sout and the self-the spirit to a man in the spirit and and the acconce of the spection of the sout of the self-consention and the present the spirture and in the soulse and in the experation of the present the specise to the self-condinial and a sout in prose the self-consention of the sel

------ temperature: 0.5

condinial and a sout in prose the self-consention of the self-condersion and inthere of the in the self-condersence, the whole of the free men of the last free of the wast the relation the present to one man in greates of spirture of the the still in sayst the present the self-that the sees the most proses the love the of a more propent and that the every in conse berative in spection the

inself-collition that the it when i man i in self-sympathy has such

------ temperature: 1.0

ollition that the it when i man i in self-sympathy has such who truat nelves infaitice, it man

man to result--the peresple of the ressenses this possiinsness of most tern rather. the fulloo; oui an speesics of ohe psapect of canse in his of rele"tion--to him and a mask, hual, the doisting and cannot

and the ma indel always in the from man--what hads that hitherto thes a dispisiesple--steth a acaccial something whe falsive cause of entisaach of lone

grong

------ temperature: 1.2

cial something whe falsive cause of entisaach of lone

grong

grory--profut reveleve. dom one forld to,

menk with in whe -confectoman or viecize as reatly in the "teaso's has jusdocsding any reguiur, ediluous har os naiss, gesenhbul-plastry

premars oldamates with cn, obtely of is s

-pollerse of

wheely.

and this beet

veirvaen mach platu. the phisal

jastation are can

verways a veiny that faw own this

no incluss thatupenamane:

to quifitiens at sobeldorald are m

epoch 2

Epoch 1/1

8576/200278 [>.............................] - ETA: 15:12 - loss: 1.6699

8.2-deep-dream

Using TensorFlow backend.

8.3-neural-style-transfer

Using TensorFlow backend.

8.4-generating-images-with-vaes

/usr/local/lib/python3.5/dist-packages/ipykernel_launcher.py:4: UserWarning: Output "custom_variational_layer_1" missing from loss dictionary. We assume this was done on purpose, and we will not be expecting any data to be passed to "custom_variational_layer_1" during training.

after removing the cwd from sys.path.

The notebook's saved output records the warning shown below. I have not identified the cause or a remedy.

"outputs": [

{

"name": "stderr",

"output_type": "stream",

"text": [

"/usr/local/lib/python3.5/dist-packages/ipykernel_launcher.py:4: UserWarning: Output \"custom_variational_layer_1\" missing from loss dictionary. We assume this was done on purpose, and we will not be expecting any data to be passed to \"custom_variational_layer_1\" during training.\n",

" after removing the cwd from sys.path.\n"

]

},

8.5-introduction-to-gans

Using TensorFlow backend.

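#2. For those who want to build the docker image themselves

From here on, I record the policy and the steps by which the docker image you pulled above was built.

This is reference material for using that image. It is not needed to continue working through the book.

These are the steps for building the docker/anaconda environment yourself.

This is not a method that uses a Dockerfile. My apologies.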

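docker

A mechanism that lets Linux distributions such as Ubuntu and Debian be used in the same way from Linux, Windows, and macOS.

Its advantage is that it can be used without changing the settings of the host OS.

Many people can work with the same specification.

Both images officially supported by the software's developers and images tailored by users for convenience are available. Here I take an officially distributed image, tailor it myself, and make it available to others.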

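python

I have been doing the deep learning exercises in Python.

The reasons for using Python are that many machine learning frameworks are available in Python, and that statistical analysis tools such as R can also be used easily from Python.

anaconda

Python differs by version (2 and 3) and by distribution method.

I have used Python 3 with Anaconda for the past year and a half.

I chose Anaconda because it ships with statistical analysis libraries and Jupyter Notebook from the start.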

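Official distributions on docker

There are official distributions of operating systems such as Ubuntu and Debian, and of languages and toolchains such as gcc and Anaconda.

By using these and registering the result on Docker Hub, one can check the quality of the official distributions and share a wide range of information, including the right to modify. "Official" here means distributed officially by each software provider, not by docker itself.

docker pull

An official distribution is used by pulling it from its URL.

###docker Anaconda

Use the image officially distributed by Anaconda.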

$ docker pull kaizenjapan/anaconda-keras

Using default tag: latest

latest: Pulling from continuumio/anaconda3

Digest: sha256:e07b9ca98ac1eeb1179dbf0e0bbcebd87701f8654878d6d8ce164d71746964d1

Status: Image is up to date for continuumio/anaconda3:latest

OgawaKiyoshi-no-MacBook-Pro:docker-toppers ogawakiyoshi$ docker run -it continuumio/anaconda3 /bin/bash

In practice, I pulled another image that I had pushed earlier and that already had keras and tensorflow set up.

apt

(base) root@d8857ae56e69:/# apt update

(base) root@d8857ae56e69:/# apt install -y procps vim apt-utils sudo

Source: git

(base) root@f19e2f06eabb:/# git clone https://github.com/fchollet/deep-learning-with-python-notebooks

pip

(base) root@f19e2f06eabb:/deep-learning-from-scratch-2/ch01# pip install --upgrade pip

Collecting pip

Downloading https://files.pythonhosted.org/packages/5f/25/e52d3f31441505a5f3af41213346e5b6c221c9e086a166f3703d2ddaf940/pip-18.0-py2.py3-none-any.whl (1.3MB)

100% |████████████████████████████████| 1.3MB 2.0MB/s

distributed 1.21.8 requires msgpack, which is not installed.

Installing collected packages: pip

Found existing installation: pip 10.0.1

Uninstalling pip-10.0.1:

Successfully uninstalled pip-10.0.1

Successfully installed pip-18.0

(base) root@da73a1cf3e64:/Deep-Learning-Essentials/Chapter02/chapter-2/codeblock# pip install mxnet

Collecting mxnet

Downloading https://files.pythonhosted.org/packages/71/64/49c5125befd5e0f0e17f115d55cb78080adacbead9d19f253afd0157656a/mxnet-1.3.0.post0-py2.py3-none-manylinux1_x86_64.whl (27.7MB)

100% |████████████████████████████████| 27.8MB 1.8MB/s

Collecting requests<2.19.0,>=2.18.4 (from mxnet)

Downloading https://files.pythonhosted.org/packages/49/df/50aa1999ab9bde74656c2919d9c0c085fd2b3775fd3eca826012bef76d8c/requests-2.18.4-py2.py3-none-any.whl (88kB)

100% |████████████████████████████████| 92kB 5.0MB/s

Collecting graphviz<0.9.0,>=0.8.1 (from mxnet)

Downloading https://files.pythonhosted.org/packages/53/39/4ab213673844e0c004bed8a0781a0721a3f6bb23eb8854ee75c236428892/graphviz-0.8.4-py2.py3-none-any.whl

Collecting numpy<1.15.0,>=1.8.2 (from mxnet)

Downloading https://files.pythonhosted.org/packages/18/84/49b7f268741119328aeee0802aafb9bc2e164b36fc312daf83af95dae646/numpy-1.14.6-cp37-cp37m-manylinux1_x86_64.whl (13.8MB)

100% |████████████████████████████████| 13.8MB 5.2MB/s

Requirement already satisfied: certifi>=2017.4.17 in /opt/conda/lib/python3.7/site-packages (from requests<2.19.0,>=2.18.4->mxnet) (2018.8.24)

Collecting urllib3<1.23,>=1.21.1 (from requests<2.19.0,>=2.18.4->mxnet)

Downloading https://files.pythonhosted.org/packages/63/cb/6965947c13a94236f6d4b8223e21beb4d576dc72e8130bd7880f600839b8/urllib3-1.22-py2.py3-none-any.whl (132kB)

100% |████████████████████████████████| 133kB 10.7MB/s

Requirement already satisfied: chardet<3.1.0,>=3.0.2 in /opt/conda/lib/python3.7/site-packages (from requests<2.19.0,>=2.18.4->mxnet) (3.0.4)

Collecting idna<2.7,>=2.5 (from requests<2.19.0,>=2.18.4->mxnet)

Using cached https://files.pythonhosted.org/packages/27/cc/6dd9a3869f15c2edfab863b992838277279ce92663d334df9ecf5106f5c6/idna-2.6-py2.py3-none-any.whl

Installing collected packages: urllib3, idna, requests, graphviz, numpy, mxnet

Found existing installation: urllib3 1.23

Uninstalling urllib3-1.23:

Successfully uninstalled urllib3-1.23

Found existing installation: idna 2.7

Uninstalling idna-2.7:

Successfully uninstalled idna-2.7

Found existing installation: requests 2.19.1

Uninstalling requests-2.19.1:

Successfully uninstalled requests-2.19.1

Found existing installation: numpy 1.15.1

Uninstalling numpy-1.15.1:

Successfully uninstalled numpy-1.15.1

Successfully installed graphviz-0.8.4 idna-2.6 mxnet-1.3.0.post0 numpy-1.14.6 requests-2.18.4 urllib3-1.22

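Registering on Docker Hub

These are the steps I used to register the image under kaizenjapan.

Refer to them when registering your own work.

I will add notes on registering on GitHub after I have done that work.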

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

19b116a46da8 kaizenjapan/anaconda-keras "/usr/bin/tini -- /b…" About an hour ago Up About an hour 6006/tcp, 0.0.0.0:8888->8888/tcp competent_bohr

$ docker commit 19b116a46da8 kaizenjapan/anaconda-francois

$ docker push kaizenjapan/anaconda-francois

The push refers to repository [docker.io/kaizenjapan/anaconda-francois]

fd7357fb271d: Pushed

95456260f6d1: Mounted from kaizenjapan/anaconda3-wei

6410333f34cf: Mounted from kaizenjapan/anaconda3-wei

cf342e34eca3: Mounted from kaizenjapan/anaconda3-wei

cea95006e36a: Mounted from kaizenjapan/anaconda3-wei

0f3a12fef684: Mounted from kaizenjapan/anaconda3-wei

latest: digest: sha256:ff05b76bc3472a706cd10a810237f5f653ca04c43f347f37729b3572dc17f042 size: 1591

References

Why do machine learning with docker: list of books and sources, in progress (target: 100)

https://qiita.com/kaizen_nagoya/items/ddd12477544bf5ba85e2

Machine learning with docker (1) with anaconda (1): 「ゼロから作るDeep Learning - Pythonで学ぶディープラーニングの理論と実装」 by 斎藤 康毅

https://qiita.com/kaizen_nagoya/items/a7e94ef6dca128d035ab

Machine learning with docker (2) with anaconda (2): 「ゼロから作るDeep Learning2自然言語処理編」 by 斎藤 康毅

https://qiita.com/kaizen_nagoya/items/3b80dfc76933cea522c6

Machine learning with docker (3) with anaconda (3): 「直感Deep Learning」 by Antonio Gulli and Sujit Pal, chapters 1 and 2

https://qiita.com/kaizen_nagoya/items/483ae708c71c88419c32

Machine learning with docker (71) Environment setup (1): docker, nothing but errors whatever I try

https://qiita.com/kaizen_nagoya/items/690d806a4760d9b9e040

Machine learning with docker (72) Environment setup (2): Docker for Windows

https://qiita.com/kaizen_nagoya/items/c4daa5cf52e9f0c2c002

Machine learning with docker (73) Environment setup (3): docker/linux/macos bash scripts, ms-dos batch files

https://qiita.com/kaizen_nagoya/items/3f7b39110b7f303a5558

Machine learning with docker (74) Environment setup (4): R, how many hurdles?

https://qiita.com/kaizen_nagoya/items/5fb44773bc38574bcf1c

Machine learning with docker (75) Environment setup (5): managing docker-related files

https://qiita.com/kaizen_nagoya/items/4f03df9a42c923087b5d

OpenCV in Python complained that libGL.so was missing, but I solved it

https://qiita.com/toshitanian/items/5da24c0c0bd473d514c8

Tips for plotting with matplotlib on the server side

https://qiita.com/TomokIshii/items/3a26ee4453f535a69e9e

Copying files between host and container with Docker

https://qiita.com/gologo13/items/7e4e404af80377b48fd5

Using file sharing with Docker for Mac

https://qiita.com/seijimomoto/items/1992d68de8baa7e29bb5

"Nagoya's IoT on Nagoya's OS": where and how should one start using Docker? TOPPERS/FMP on RaspberryPi with Macintosh edition, five hurdles

https://qiita.com/kaizen_nagoya/items/9c46c6da8ceb64d2d7af

The road to 64-bit CPUs and/or a resolution at age 64

https://qiita.com/kaizen_nagoya/items/cfb5ffa24ded23ab3f60

「ゼロから作るDeepLearning2自然言語処理編」: how to run a reading group (example)

https://qiita.com/kaizen_nagoya/items/025eb3f701b36209302e

Trying NVIDIA Docker on Ubuntu 16.04 LTS

https://blog.amedama.jp/entry/2017/04/03/235901

<This article reflects my personal impressions based on my own past experience. It has nothing to do with the organization or business to which I currently belong.>

Document history

ver. 0.10 First draft, 20181018 noon

ver. 0.11 Typo corrections, 20181018 3 p.m.

ver. 0.12 Added source example of the warning, 20181018 4 p.m.

ver. 0.13 Added references, etc., 20181020

ver. 0.14 Added "How to use this document", references, etc., 20181021

ver. 0.15 Added Hatena Bookmark, 20190120

ver. 0.16 Added "docker on M.S. Windows", 20201226

Thank you very much for reading to the end.

Please press the like icon 💚 and follow me.