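Background

I got my hands on a PC with an NPU.

Now that I have an AI PC, there's only one thing to do!

I decided to try running an LLM locally, so I set one up.

From a quick look around, Docker Model Runner seems like something I'll be using a lot from now on, so I'll try it here.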

Specs

PC

Name: HP EliteBook X G1i 14 inch Notebook Next Gen AI PC

Memory: 32GB

CPU: Intel(R) Core(TM) Ultra 7

NPU: Intel(R) AI Boost

Human

name: gnt0608

AI beginner

A developer who has barely touched LLMs

Setup

For now, I'll go as far as launching SmolLM3 in Docker Desktop.

I'll pull the model while following the official docs.

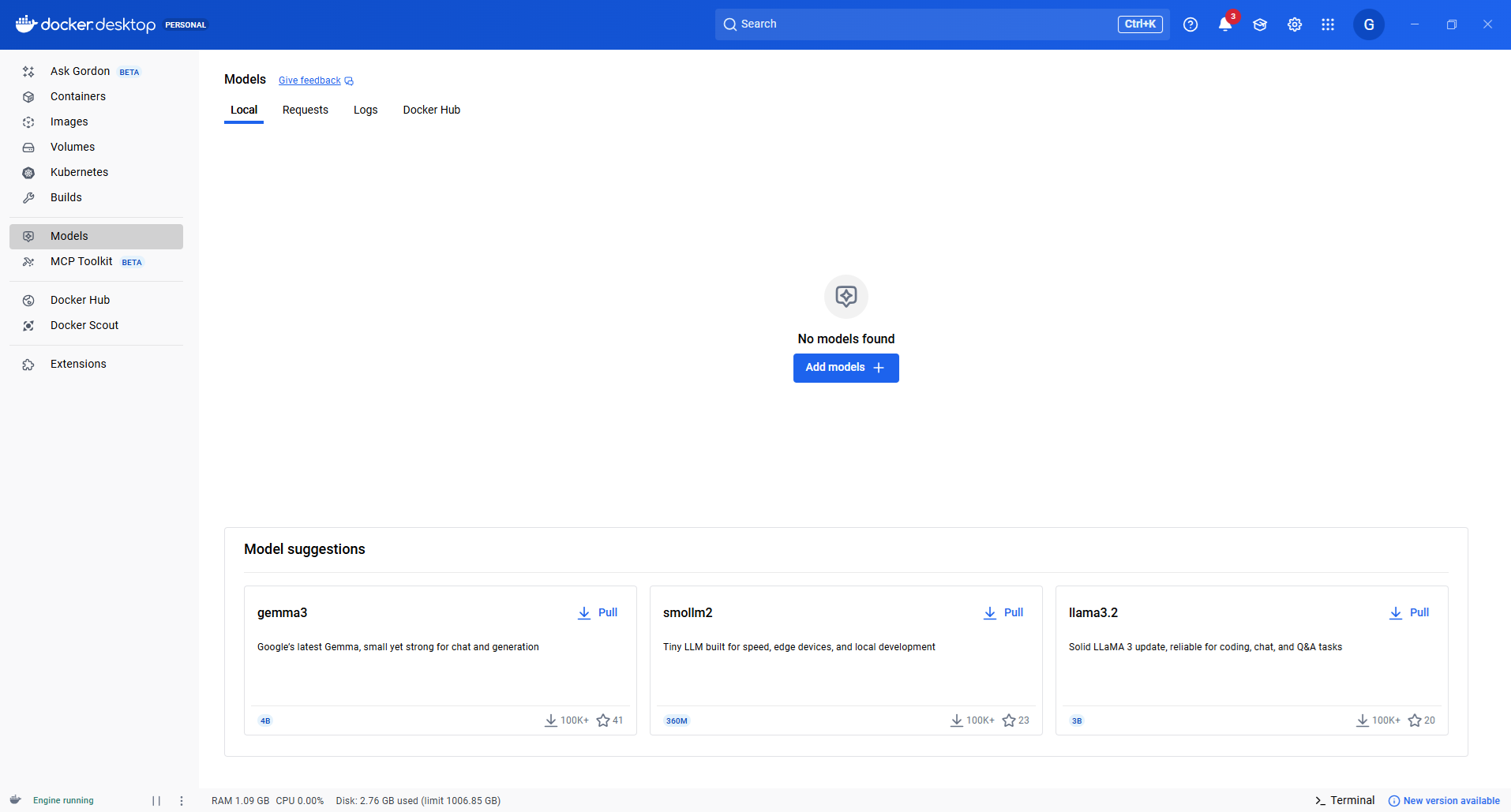

1. Open Docker Desktop

I had just installed Docker Desktop fresh after switching PCs, so the "Model" tab was already there.

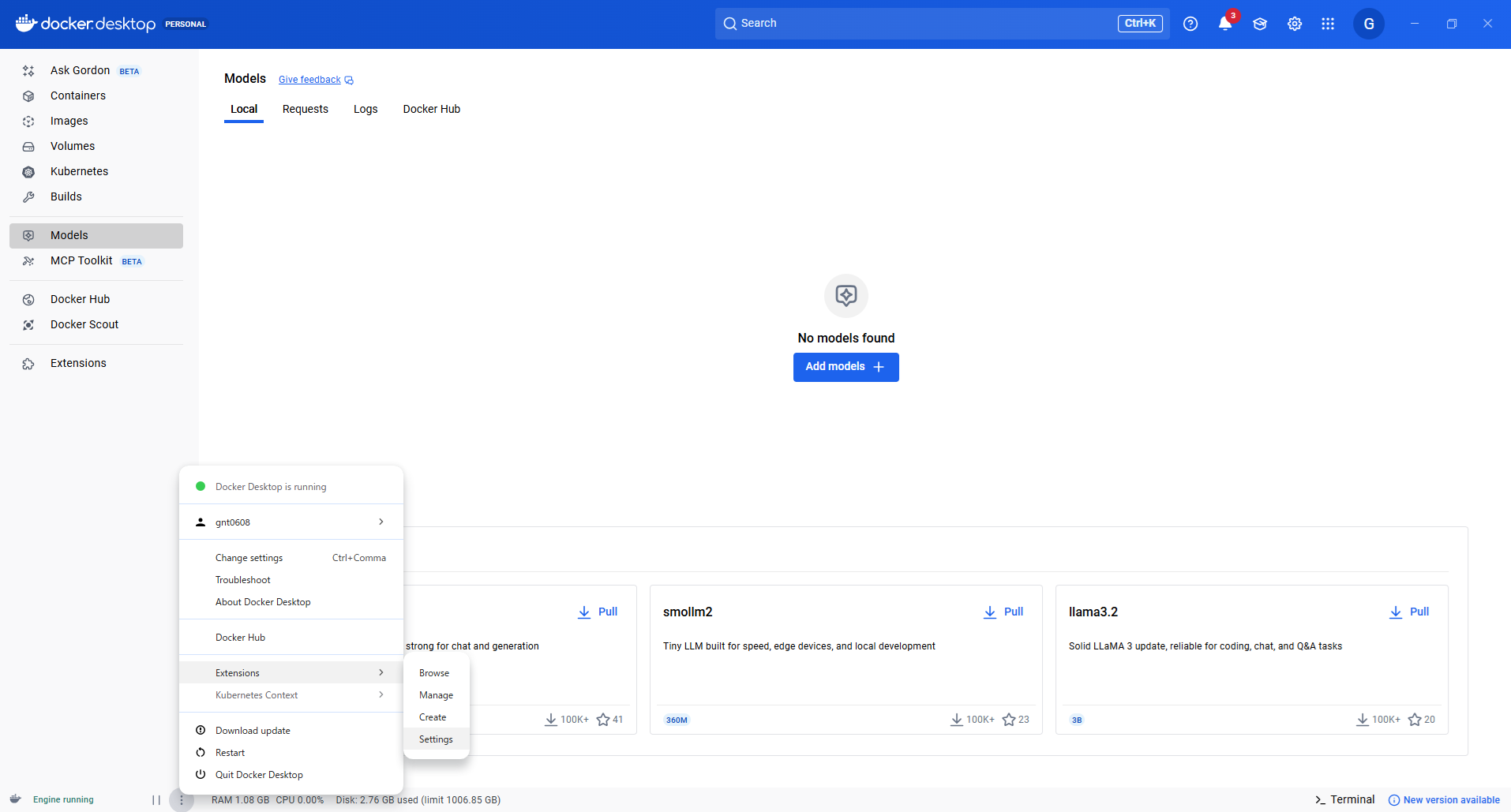

2. Open Settings

Open Settings to enable the AI features.

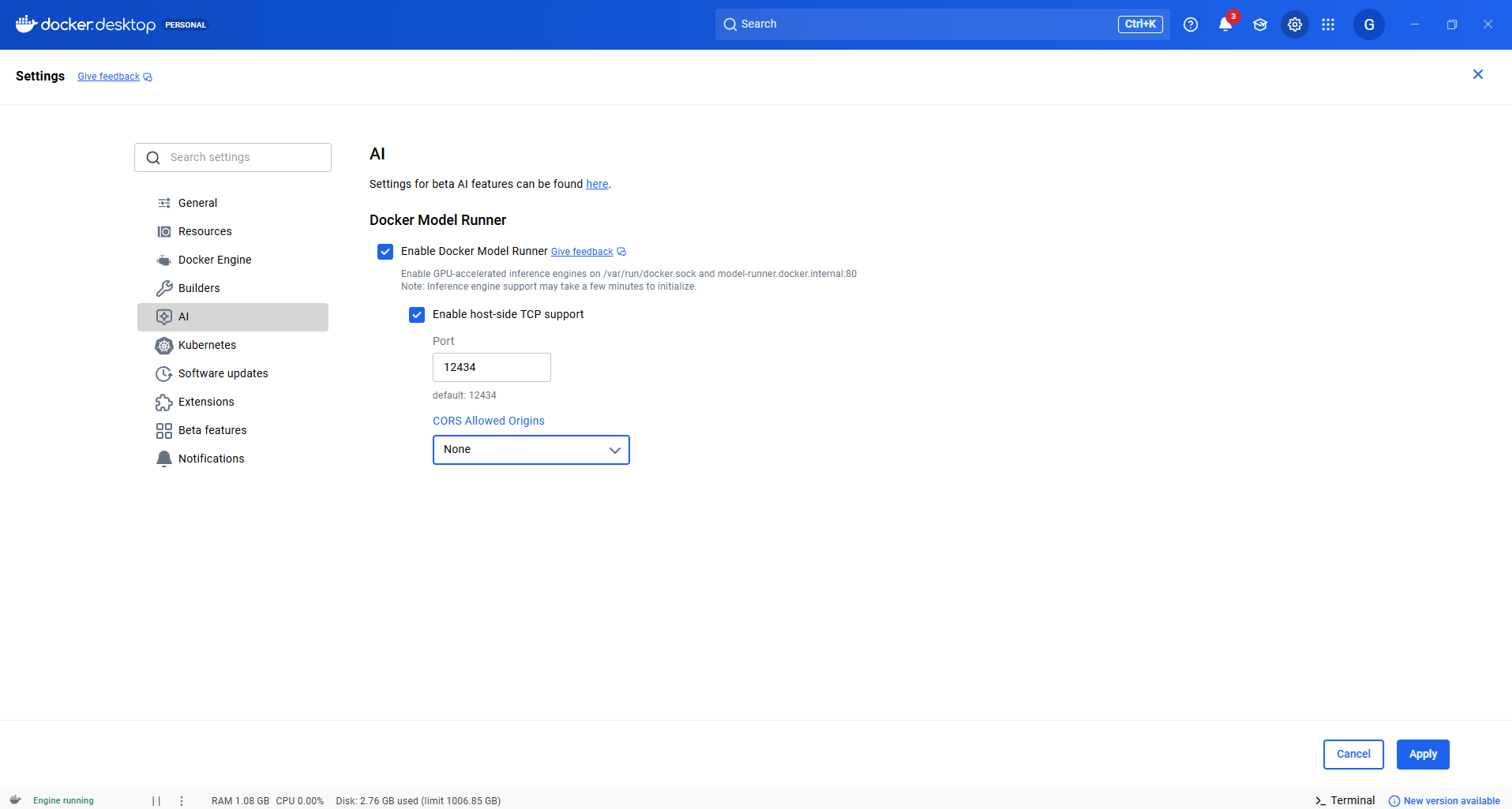

3. Enable the AI settings

In my case, "Enable Docker Model Runner" was already turned on.

Turn on "Enable host side TCP support" and click Apply.
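With host-side TCP support turned on, Model Runner should be reachable on a local TCP port. Below is a minimal Python sketch for checking that something is listening there. It assumes port 12434, which is the port used in the API examples later in this post. `is_port_open` is a hypothetical helper, and it only tests the TCP connection, not the API itself.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False
```

For example, `is_port_open("localhost", 12434)` should return True once the setting is applied and Docker Desktop is running. This assumes 12434 is the configured port.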

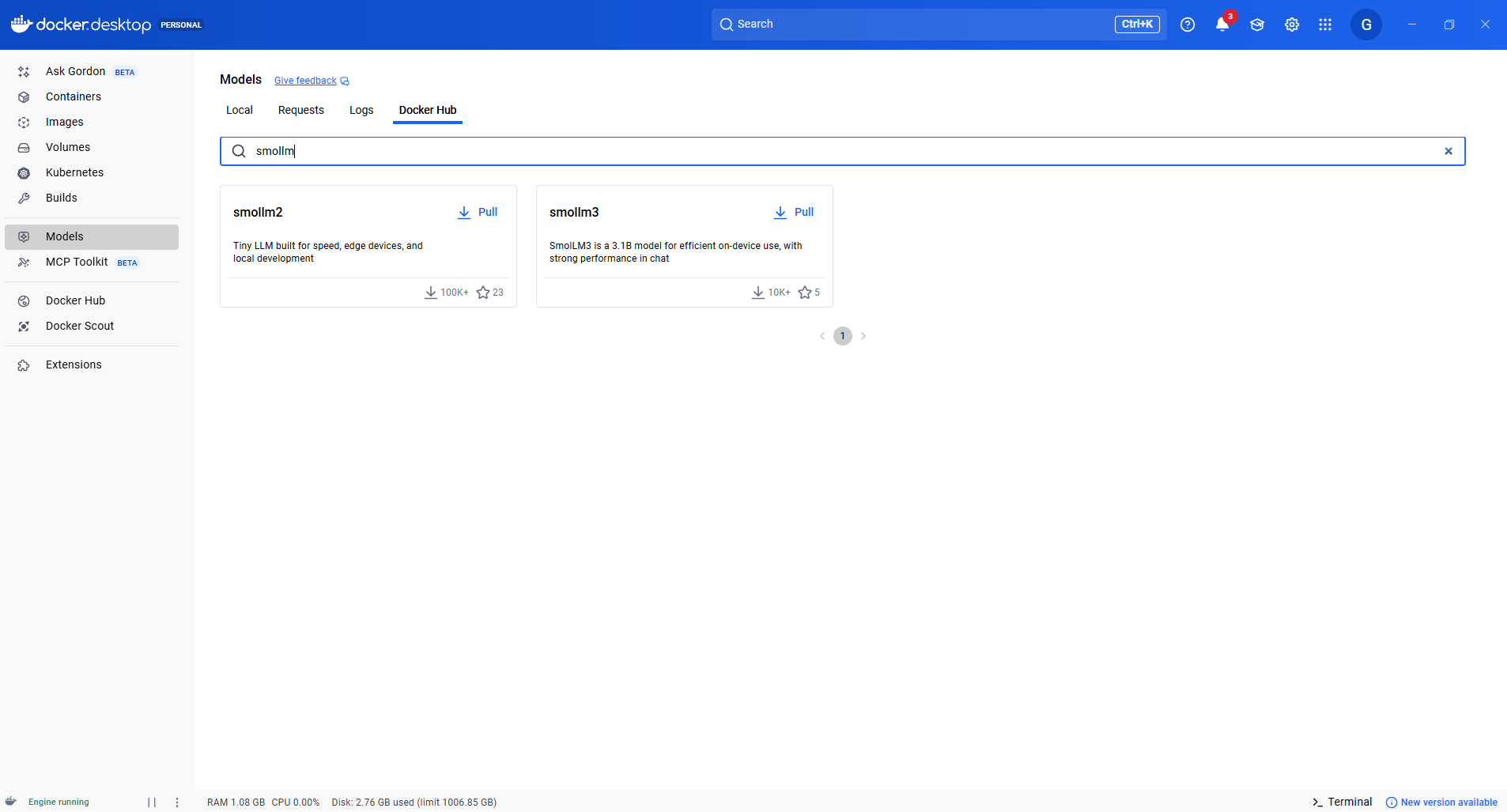

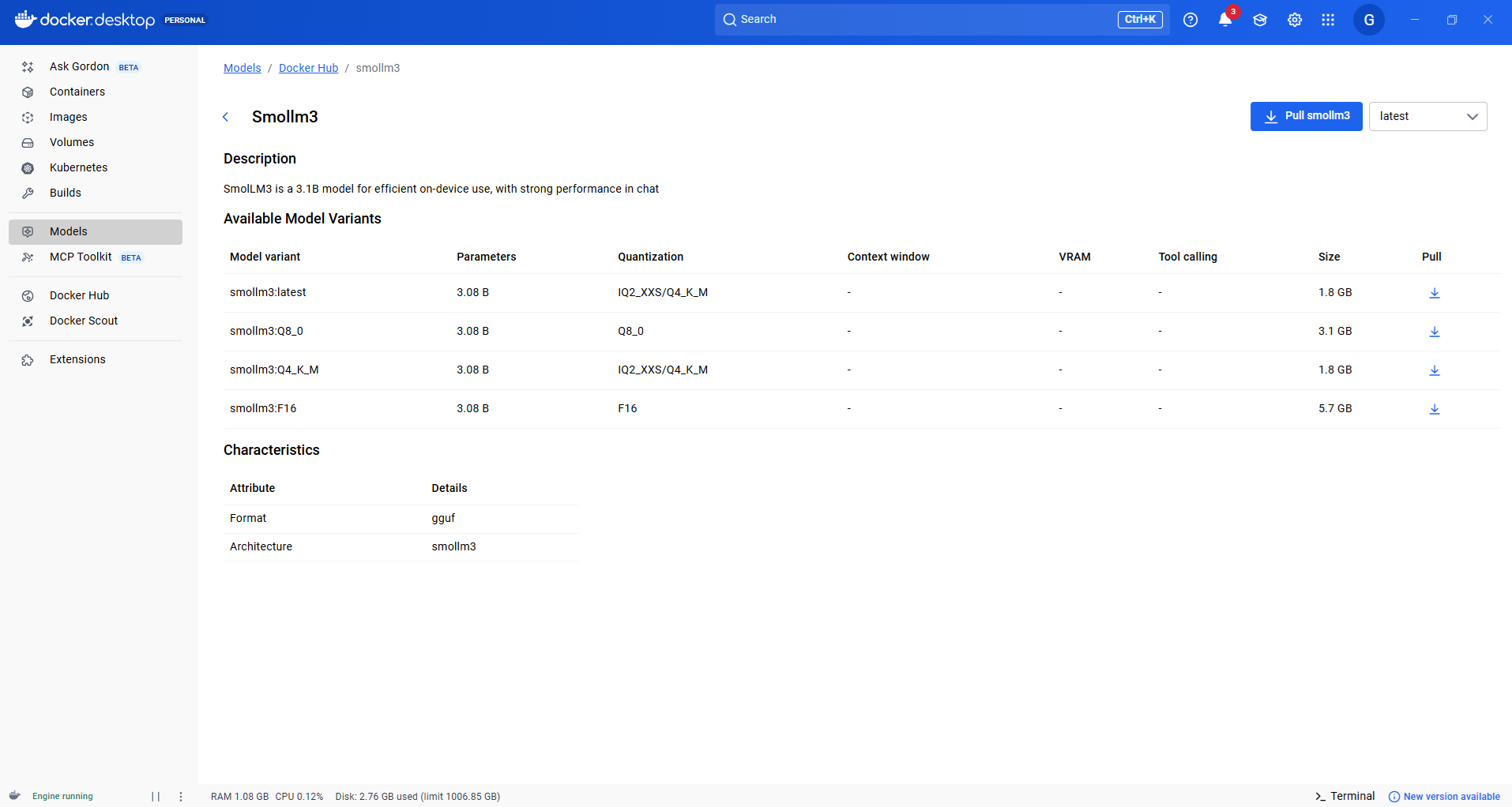

4. Pull the model from Docker Hub

Search for the model in the search bar and install Q4_K_M, the variant that corresponds to latest.
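Here is a rough sanity check on what Q4_K_M means for the download size. SmolLM3 is published as a model of roughly 3 billion parameters. Assume Q4_K_M averages somewhere around 4.5 to 5 bits per weight; this is an approximation, since llama.cpp's K-quants mix bit widths across tensors. The file size then works out to:

$$
\text{size} \approx N_\text{params} \times \frac{\text{bits per weight}}{8}
\approx 3\times10^{9} \times \frac{4.5\ \text{to}\ 5}{8}\ \text{bytes}
\approx 1.7\ \text{to}\ 1.9\ \text{GB}
$$

That is in the same range as the 1.78 GB size Docker Desktop lists for SmolLM3 in the appendix.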

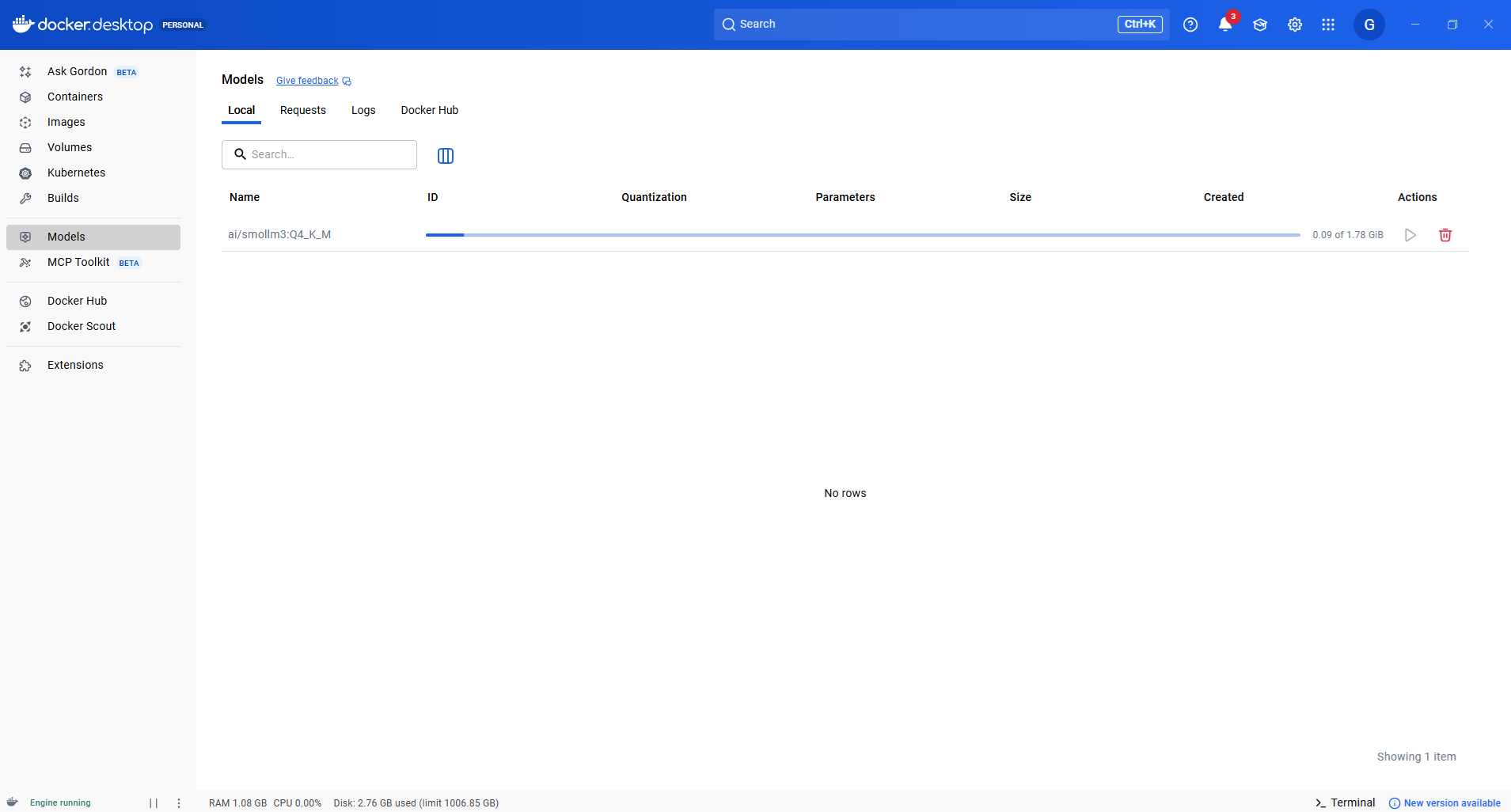

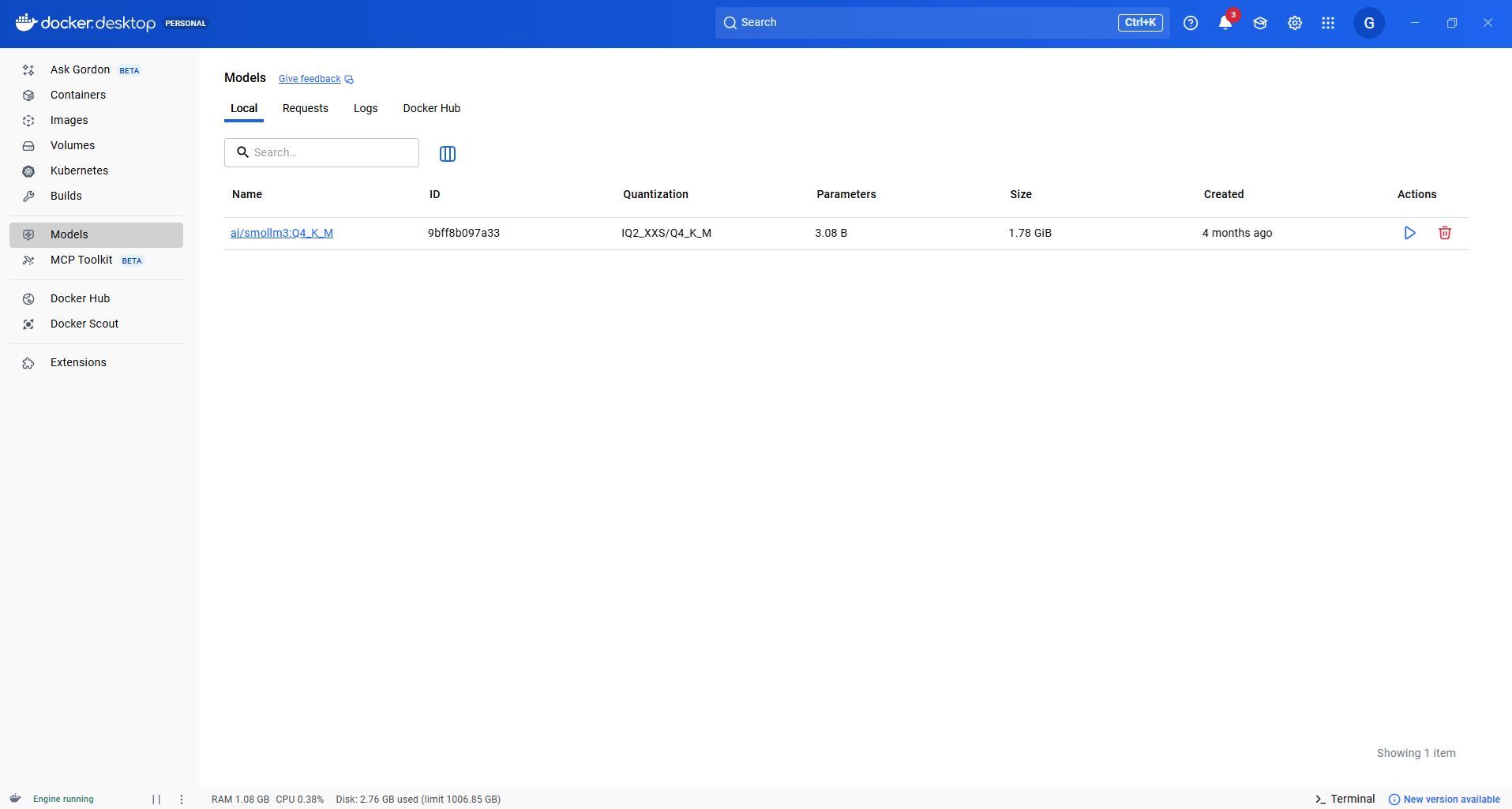

5. Model pull complete

Setup is done.

About 10 minutes to get this far.

Running it

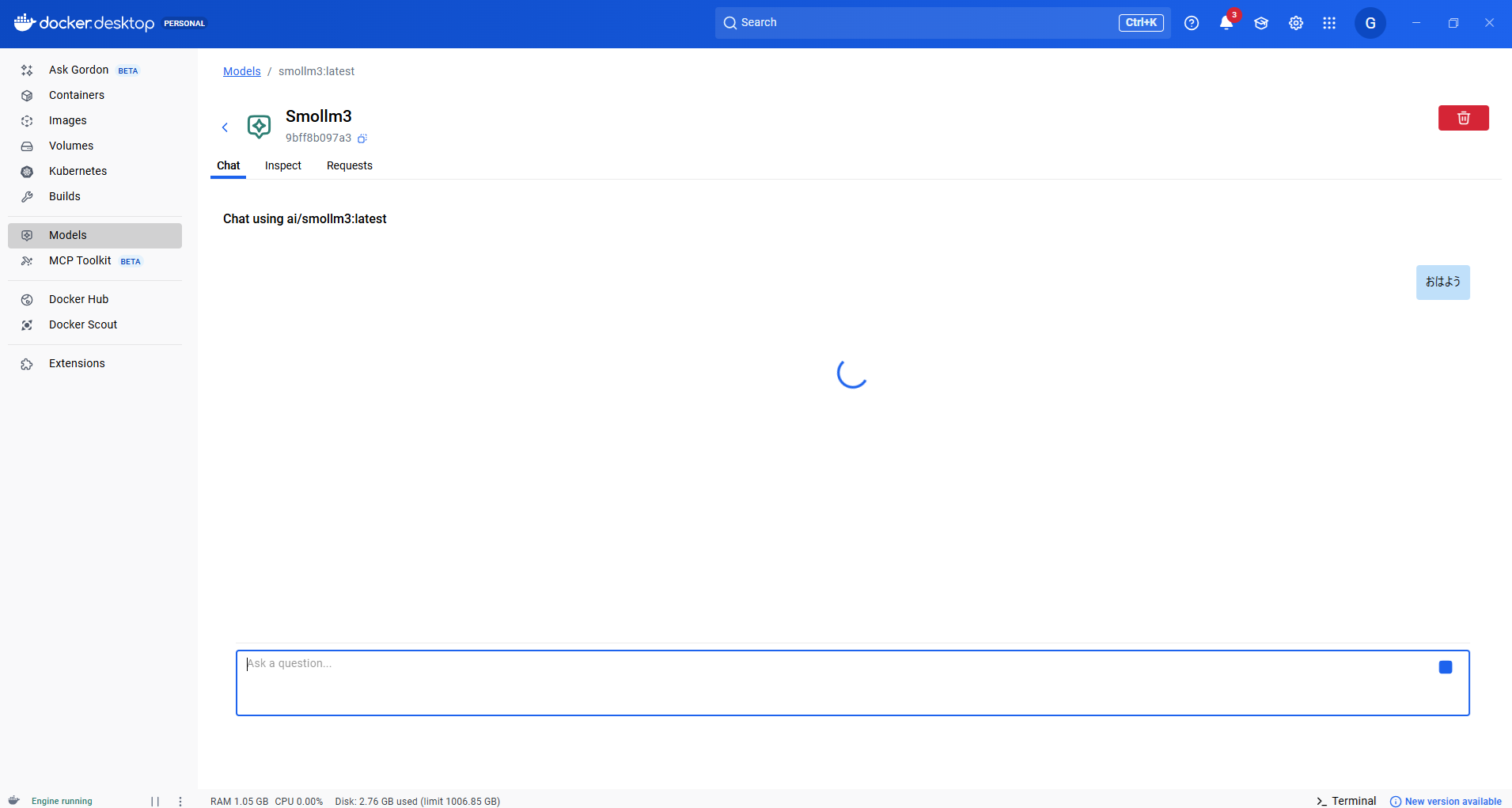

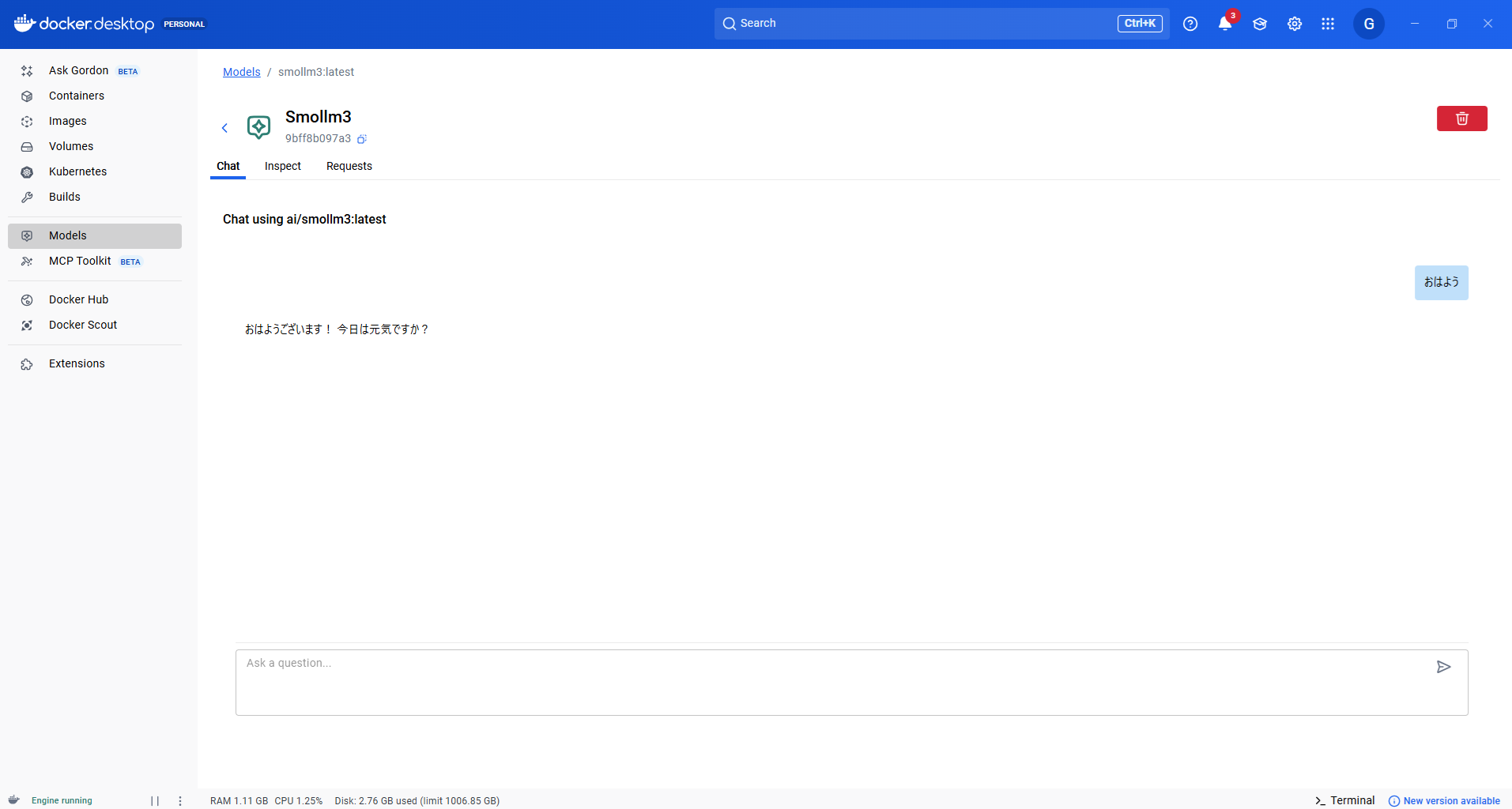

First, let's just say hello.

1. Run

Once you open the model, you can send actual messages from the Chat tab.

2. Check the PC's status

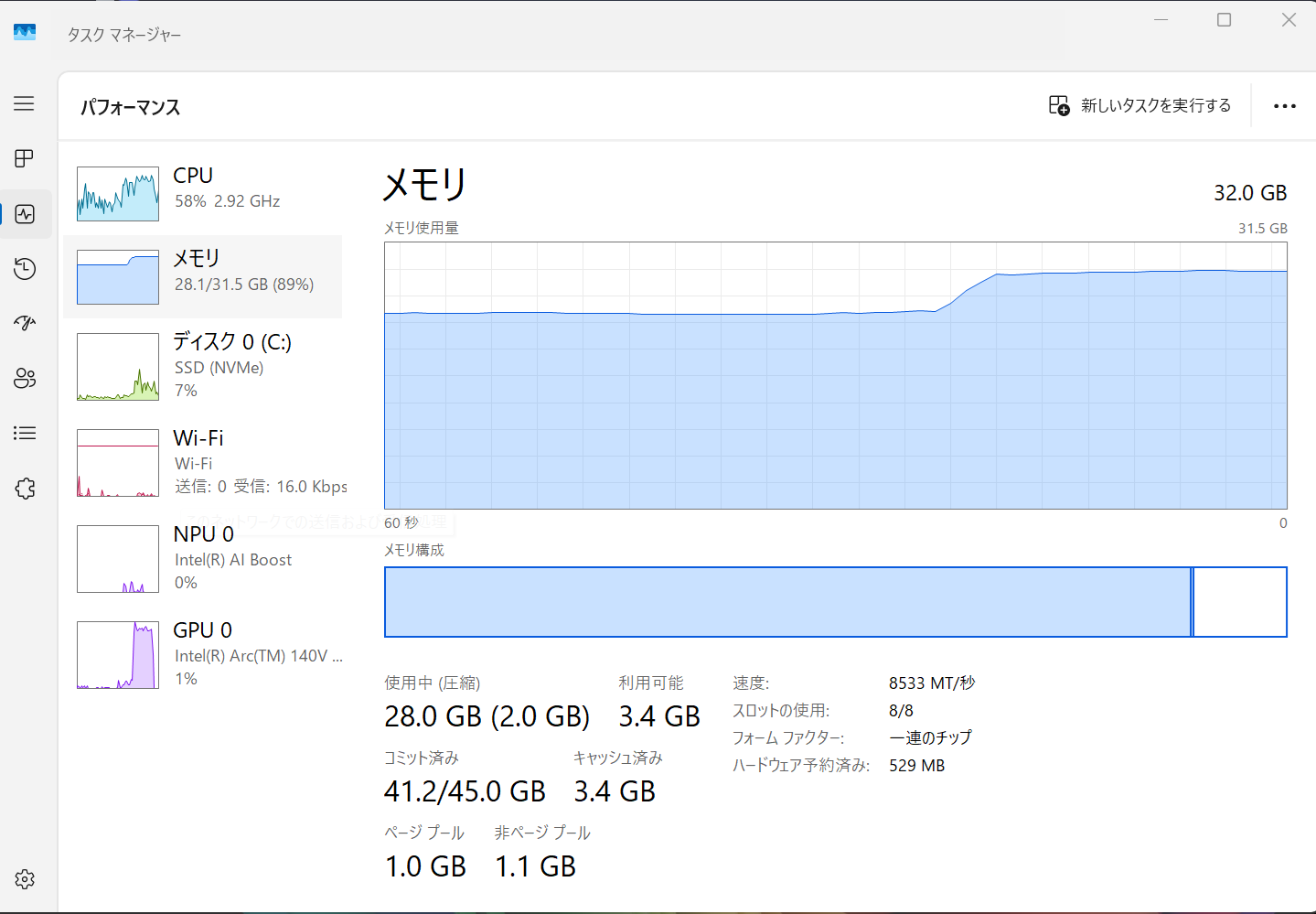

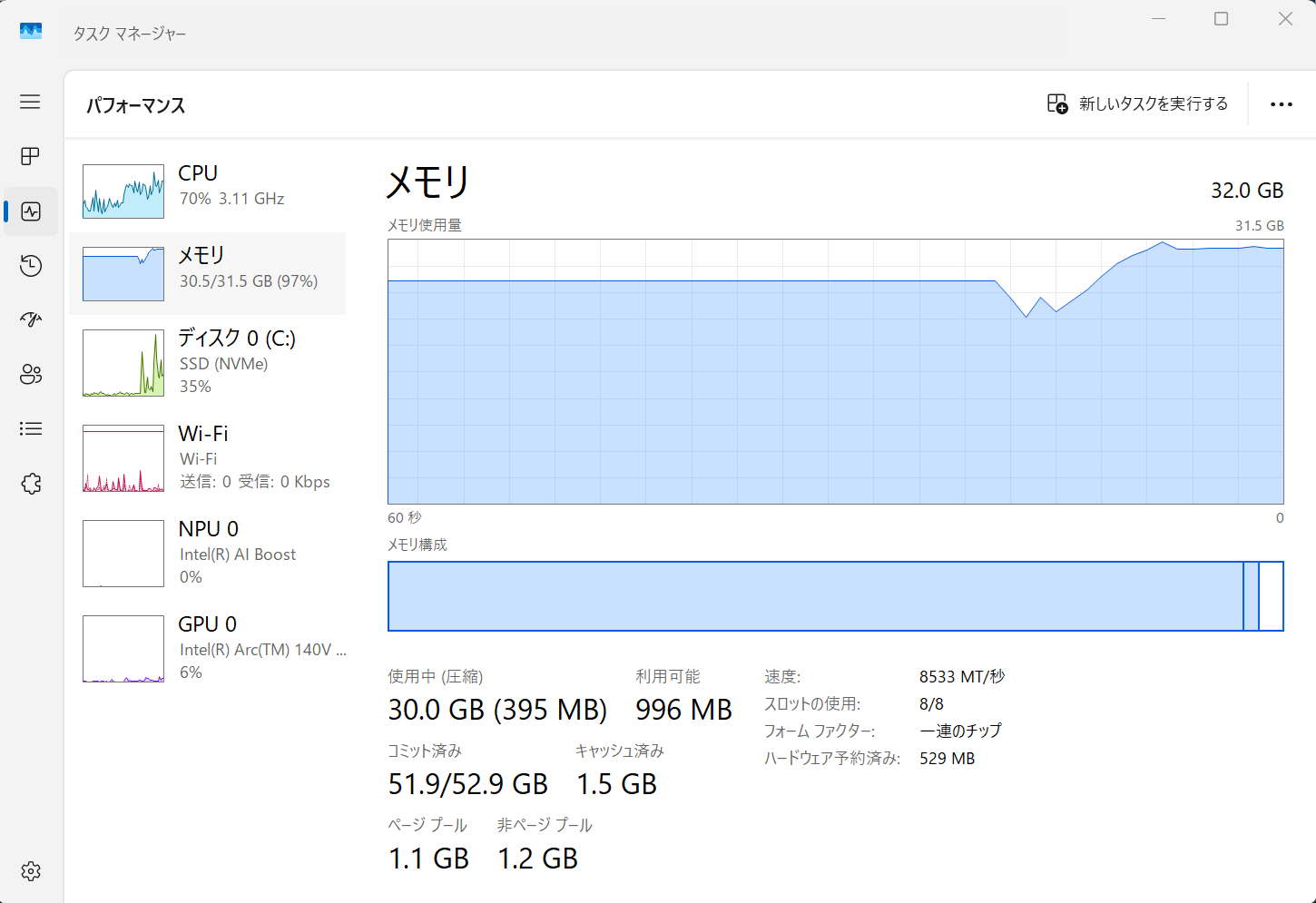

Memory

It was already using about 20 GB before I ran anything, but...

usage did go up.

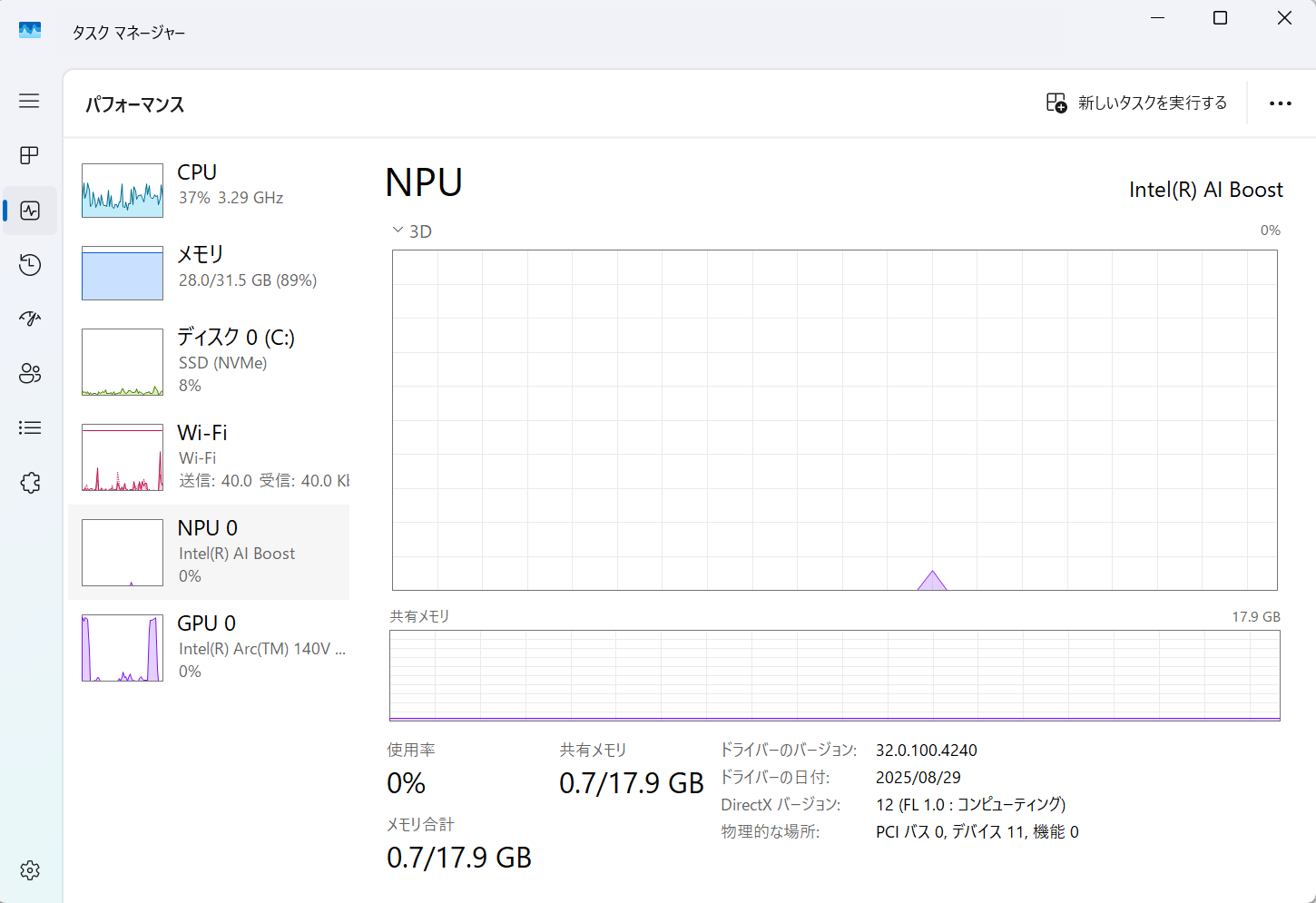

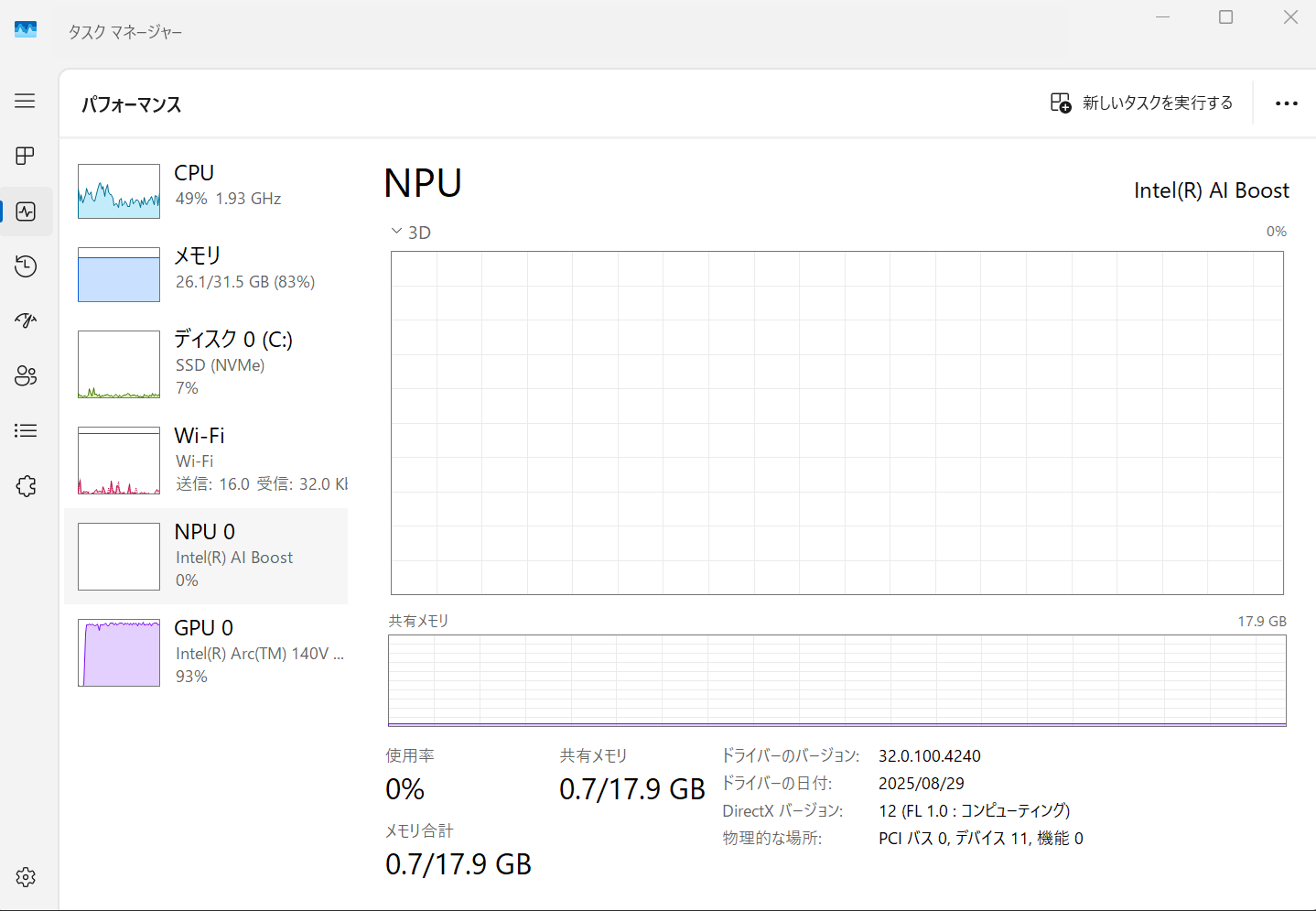

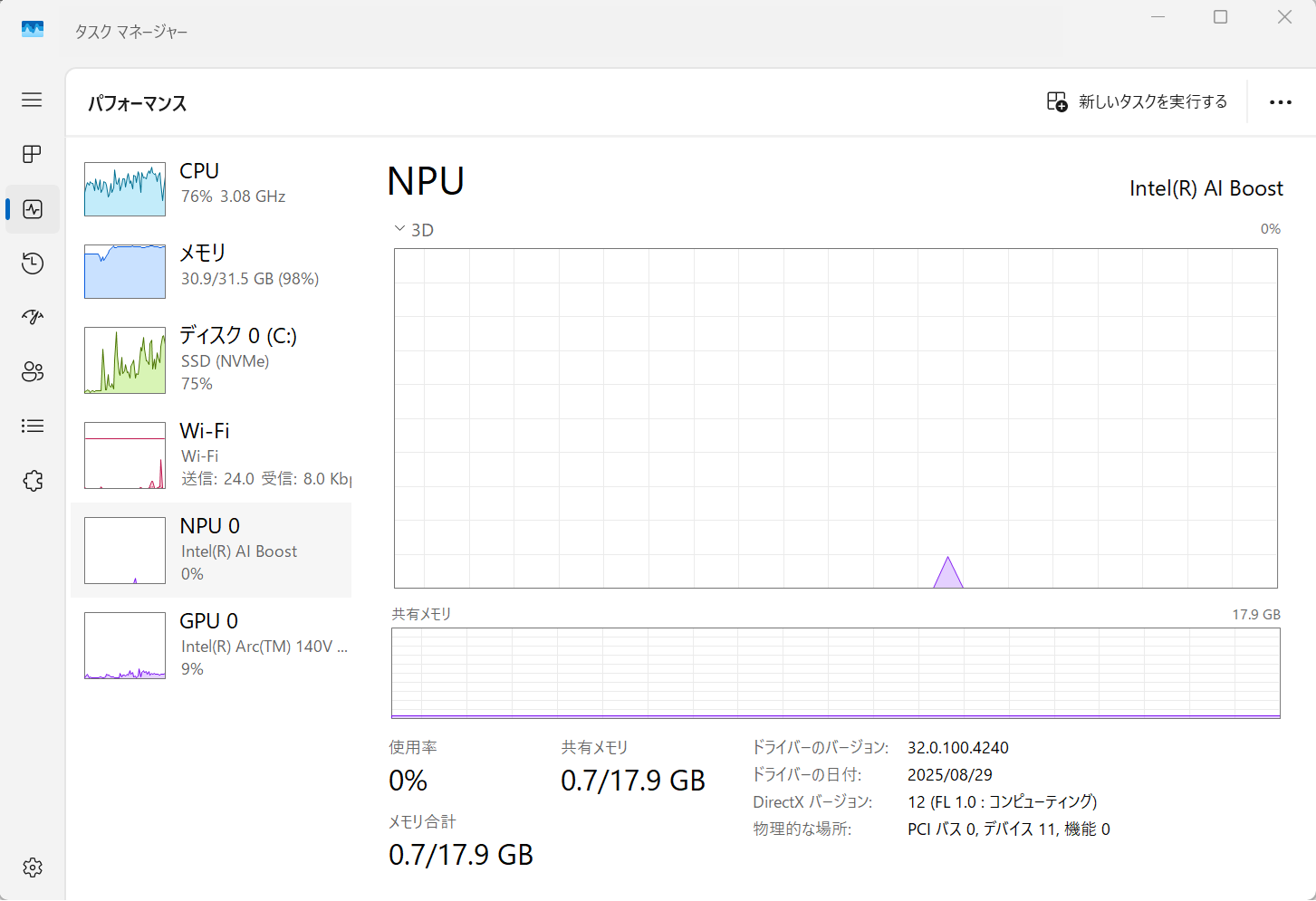

NPU

...Huh???

It's not doing anything at all.

3. Look at the response

Meanwhile, the answer came back.

I tried it several times; each response took about 5 seconds.

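Running it via the API

As described on Docker's official page, it can also be run through a REST API.

The endpoints live under http://localhost:(port set in Settings)/engines/llama.cpp/v1/; chat requests go to /engines/llama.cpp/v1/chat/completions.

The port is set in Settings (12434 here).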

curl http://localhost:12434/engines/llama.cpp/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "ai/smollm3:Q4_K_M",
    "messages": [
      {"role": "system", "content": "You are a helpful assistant."},
      {"role": "user", "content": "Please write 500 words about the fall of Rome."}
    ]
  }'
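The same chat-completions request can be sketched with Python's standard library. The sketch assumes the endpoint and port from the curl command above and the OpenAI-style request and response shape shown in this post. `build_chat_request` and `chat` are hypothetical helper names.

```python
import json
import urllib.request

# Port 12434 is the one configured in Settings for this post.
BASE_URL = "http://localhost:12434/engines/llama.cpp/v1"


def build_chat_request(model: str, messages: list[dict],
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible chat completions endpoint."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, messages: list[dict], timeout: float = 120.0) -> dict:
    """Send the request to the local Model Runner and return the decoded JSON."""
    req = build_chat_request(model, messages)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Usage would look like `chat("ai/smollm3:Q4_K_M", [{"role": "user", "content": "Good Morning"}])["choices"][0]["message"]["content"]`. The long default timeout is deliberate, since the 500-word request in this post took over 30 seconds.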

Sending it with curl returned a response:

{"choices":[{"finish_reason":"stop","index":0,"message":{"role":"assistant","content":"The fall of the Roman Empire, often considered one of the most pivotal events in human history, was a complex and multifaceted process that spanned centuries. The Roman Empire, once the dominant force in Europe, North Africa, and parts of the Middle East, gradually declined and eventually collapsed in the 5th century AD. The reasons for this decline are numerous and have been debated by historians for centuries.\n\nOne of the primary causes of the fall of Rome was the economic decline of the empire. The Roman economy, once robust and prosperous, began to suffer due to a series of factors, including heavy taxation, inflation, and a decline in agricultural production. The empire's extensive network of roads and infrastructure had made trade and commerce thrive, but as the empire's wealth and power waned, so too did its ability to maintain and repair these vital systems. This decline in economic prosperity meant that the empire could no longer sustain its vast military and administrative apparatus, leading to a gradual weakening of its grip on its territories.\n\nPolitical instability also played a significant role in the fall of Rome. The empire's leadership was marked by frequent changes in emperors, often resulting in civil wars and power struggles. The Praetorian Guard, the elite guard of the emperor, frequently turned against their own emperors, leading to a cycle of violent succession. This instability made it difficult for the empire to maintain stability and cohesion, allowing external forces to exploit the empire's weaknesses.\n\nAnother critical factor was the impact of external invasions. The Roman Empire had long maintained a Pax Romana, a period of peace and security, but this stability was increasingly challenged by barbarian invasions from the north and east. The Huns, led by Attila, ravaged large parts of Europe in the 5th century, while the Visigoths, Vandals, and Ostrogoths increasingly encroached upon Roman territories. The inability of the Roman army to repel these invasions and the decline of Roman military strength further weakened the empire.\n\nThe rise of Christianity also contributed to the fall of Rome. While Christianity had been tolerated by the Roman Empire since the 4th century, it eventually became a dominant force in the empire, often challenging the traditional Roman values and authority. The church, with its emphasis on a spiritual rather than a temporal ruler, began to exert a significant influence over the Roman elite, leading to a decline in the emperor's power and authority. The conversion of Emperor Constantine to Christianity in the 4th century marked a significant turning point, as Christianity became the official state religion, leading to a gradual erosion of the traditional Roman values.\n\nThe fall of Rome was also influenced by internal social and cultural changes. The empire's population, once characterized by a strong sense of civic duty and loyalty to the state, began to shift towards a more individualistic and consumerist ethos. The rise of cities and the growth of a merchant class led to an increased demand for goods and services, which in turn created a need for greater economic and administrative complexity. However, this increased complexity also led to a greater strain on the empire's resources and a weakening of its traditional values.\n\nIn conclusion, the fall of Rome was the result of a complex interplay of internal and external factors. The economic decline, political instability, invasions from external forces, the rise of Christianity, and social and cultural changes all contributed to the empire's gradual collapse. The fall of Rome marked the end of a golden era, one that had shaped the course of human history for centuries, and the beginning of a new era in which the world was dominated by new powers and empires. Today, the legacy of Rome continues to influence the world, serving as a reminder of the power and complexity of a civilization that once reigned supreme."}}],"created":1761641879,"model":"ai/smollm3:Q4_K_M","system_fingerprint":"b1-7a50cf3","object":"chat.completion","usage":{"completion_tokens":770,"prompt_tokens":62,"total_tokens":832},"id":"chatcmpl-Yl8J9WvNFkDc0hrt2gpHKL6hrhtHp8BN","timings":{"cache_n":0,"prompt_n":62,"prompt_ms":449.422,"prompt_per_token_ms":7.248741935483872,"prompt_per_second":137.95497327678663,"predicted_n":770,"predicted_ms":33304.415,"predicted_per_token_ms":43.25248701298701,"predicted_per_second":23.120057806149724}}

The timings object at the end of the JSON looks usable as execution metrics.

predicted_ms is close to the time it took to get the response.

The response took about 35 seconds.
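Here is a small sketch for pulling the `timings` object out of a response and deriving throughput from it. The field names are the ones in the responses shown in this post. Treating `prompt_ms + predicted_ms` as the server-side processing time is my own assumption. For the fall-of-Rome request, that sum comes to about 33.8 s, in line with the roughly 35 s wait.

```python
import json


def summarize_timings(response_json: str) -> dict:
    """Extract llama.cpp timing metrics from a chat completion response."""
    t = json.loads(response_json)["timings"]
    server_ms = t["prompt_ms"] + t["predicted_ms"]
    return {
        "prompt_tokens": t["prompt_n"],
        "generated_tokens": t["predicted_n"],
        # Throughput recomputed from token counts and milliseconds.
        "prompt_tok_per_s": t["prompt_n"] / t["prompt_ms"] * 1000,
        "gen_tok_per_s": t["predicted_n"] / t["predicted_ms"] * 1000,
        # Time spent on prompt processing plus generation, in seconds.
        "server_s": server_ms / 1000,
    }
```

The recomputed rates should match the `prompt_per_second` and `predicted_per_second` fields the server already reports, which makes this a quick way to cross-check them.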

$ time curl http://localhost:12434/engines/llama.cpp/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "ai/smollm3:Q4_K_M",
    "messages": [
      {"role": "system", "content": "You are a helpful assistant."},
      {"role": "user", "content": "Good Morning"}
    ]
  }'

{"choices":[{"finish_reason":"stop","index":0,"message":{"role":"assistant","content":"Good morning! How can I help you today?"}}],"created":1761642520,"model":"ai/smollm3:Q4_K_M","system_fingerprint":"b1-7a50cf3","object":"chat.completion","usage":{"completion_tokens":15,"prompt_tokens":53,"total_tokens":68},"id":"chatcmpl-vMGhyUul1wzwoGsIyjAOyhUN8DxYTuBk","timings":{"cache_n":52,"prompt_n":1,"prompt_ms":41.761,"prompt_per_token_ms":41.761,"prompt_per_second":23.9457867388233,"predicted_n":15,"predicted_ms":496.55,"predicted_per_token_ms":33.10333333333333,"predicted_per_second":30.20843822374383}}

real 0m5.572s
user 0m0.015s
sys 0m0.000s

Saying hello through the API also got a response in about 5 seconds.

predicted_ms is only about 500 ms, so there seems to be roughly 5 s of overhead?
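The overhead estimate can be written out as the wall-clock time from `time` minus the server-side `prompt_ms` and `predicted_ms`. This is a rough sketch. It assumes that whatever remains is spent outside token processing, for example on request handling or model loading, which this post doesn't measure directly. `estimate_overhead_s` is a hypothetical helper.

```python
def estimate_overhead_s(real_s: float, prompt_ms: float, predicted_ms: float) -> float:
    """Wall-clock seconds not accounted for by prompt processing and generation."""
    return real_s - (prompt_ms + predicted_ms) / 1000


# Values from the "Good Morning" request above (real 0m5.572s).
overhead = estimate_overhead_s(5.572, prompt_ms=41.761, predicted_ms=496.55)
print(f"{overhead:.2f} s")  # prints "5.03 s"
```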

Summary

At least I got Docker Model Runner running.

It isn't using the NPU or the GPU at all, so my next goal is to get it to use them.

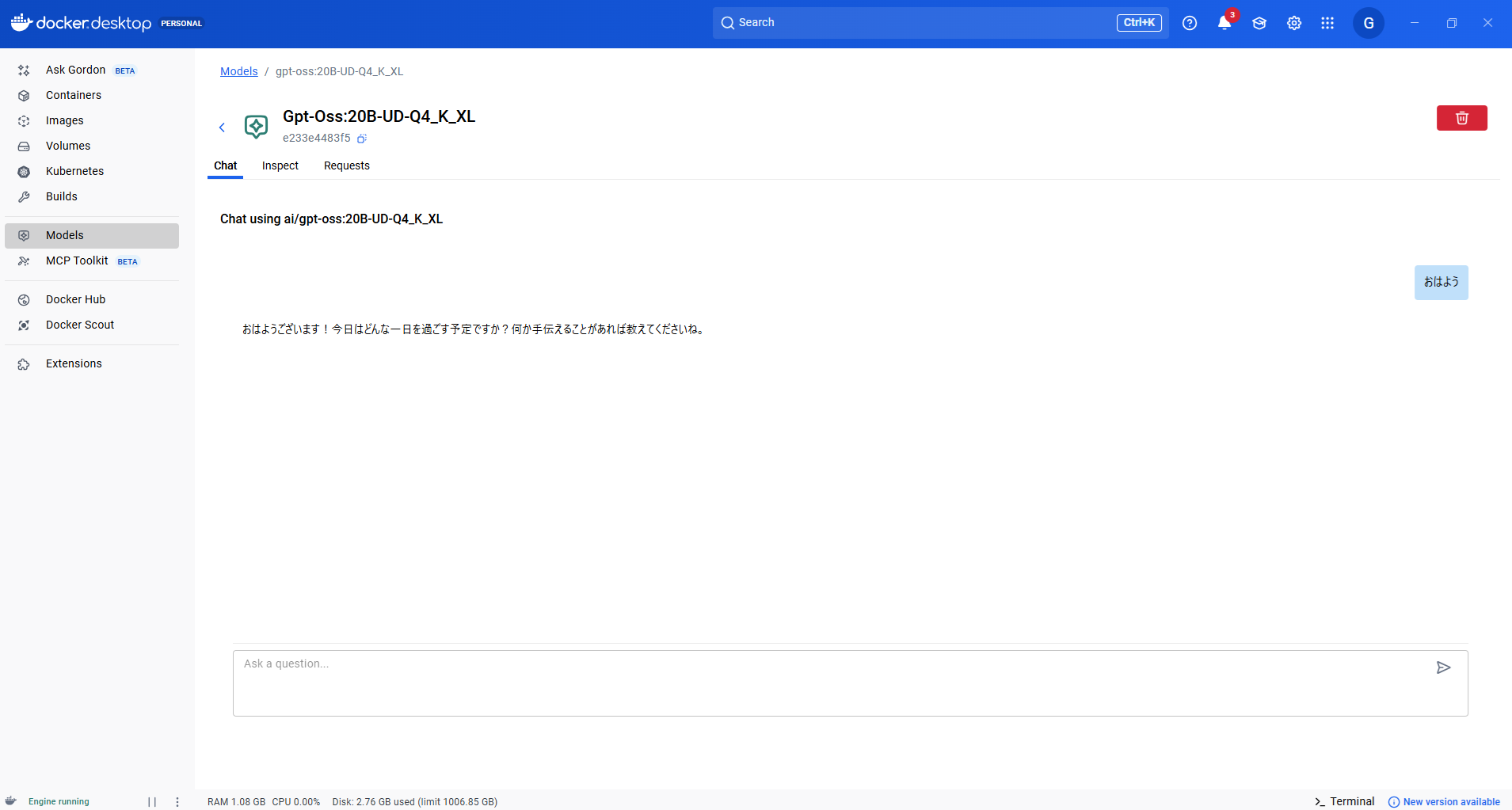

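Appendix: I also tried gpt-oss

While I was at it, I also ran GPT (gpt-oss).

So heavy!!!

It took quite a while to answer, but the reply feels a bit warmer than SmolLM3's...

I timed this one via the API as well: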

$ time curl http://localhost:12434/engines/llama.cpp/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "ai/gpt-oss:20B-UD-Q4_K_XL",
    "messages": [
      {"role": "system", "content": "You are a helpful assistant."},
      {"role": "user", "content": "Good Morning"}
    ]
  }'

{"choices":[{"finish_reason":"stop","index":0,"message":{"role":"assistant","reasoning_content":"User says \"Good Morning\". We respond politely. Probably greet.","content":"Good morning! ☀️ How can I help you today?"}}],"created":1761642800,"model":"ai/gpt-oss:20B-UD-Q4_K_XL","system_fingerprint":"b1-7a50cf3","object":"chat.completion","usage":{"completion_tokens":36,"prompt_tokens":82,"total_tokens":118},"id":"chatcmpl-cRO80D7dSZMKeFIv9af94DuwWNl4IcYb","timings":{"cache_n":62,"prompt_n":20,"prompt_ms":1588.651,"prompt_per_token_ms":79.43255,"prompt_per_second":12.589297460549862,"predicted_n":36,"predicted_ms":2119.612,"predicted_per_token_ms":58.87811111111111,"predicted_per_second":16.98424051194275}}

real 0m21.808s
user 0m0.016s
sys 0m0.031s

About 21 seconds, apparently.

predicted_ms is 2119 ms, so there seems to be about 19 s of overhead.
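Apply the same wall-clock-minus-timings arithmetic to the gpt-oss request, and also subtract its 1588.651 ms of `prompt_ms`. A little less time remains unexplained, but still roughly 18 s:

$$
21.808\ \text{s} - \frac{1588.651 + 2119.612}{1000}\ \text{s} = 21.808 - 3.708 \approx 18.1\ \text{s}
$$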

(For some reason, no details are shown for GPT-OSS, though...)

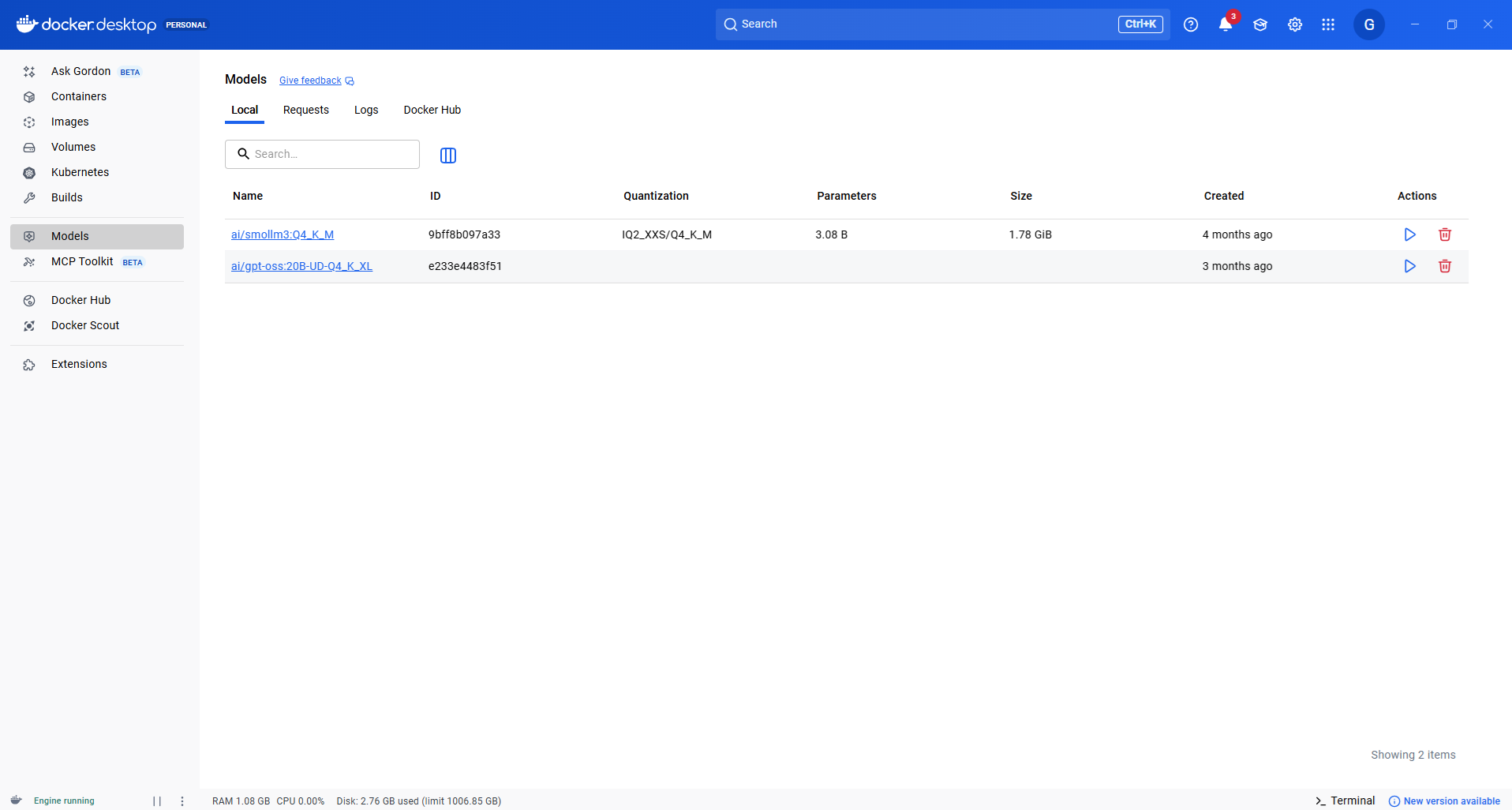

SmolLM3 is 1.78 GB in size, whereas GPT-OSS is 11.1 GB, so maybe getting the model loaded into memory is where it struggles.
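Here is a back-of-the-envelope check of that guess, using the numbers from this post: 32 GB of RAM, with about 20 GB already in use before running anything, as noted in the Memory section. It assumes the same baseline during the gpt-oss run. It also ignores the KV cache, runtime buffers, and anything the OS can reclaim, so it is only indicative:

$$
\begin{aligned}
\text{free} &\approx 32 - 20 = 12\ \text{GB} \\
\text{SmolLM3:}\quad 12 - 1.78 &\approx 10.2\ \text{GB left} \\
\text{gpt-oss:}\quad 12 - 11.1 &\approx 0.9\ \text{GB left}
\end{aligned}
$$

If those figures hold, gpt-oss barely fits, which would be consistent with it taking much longer to get going.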