Connecting the Tello Toy Drone over WebRTC

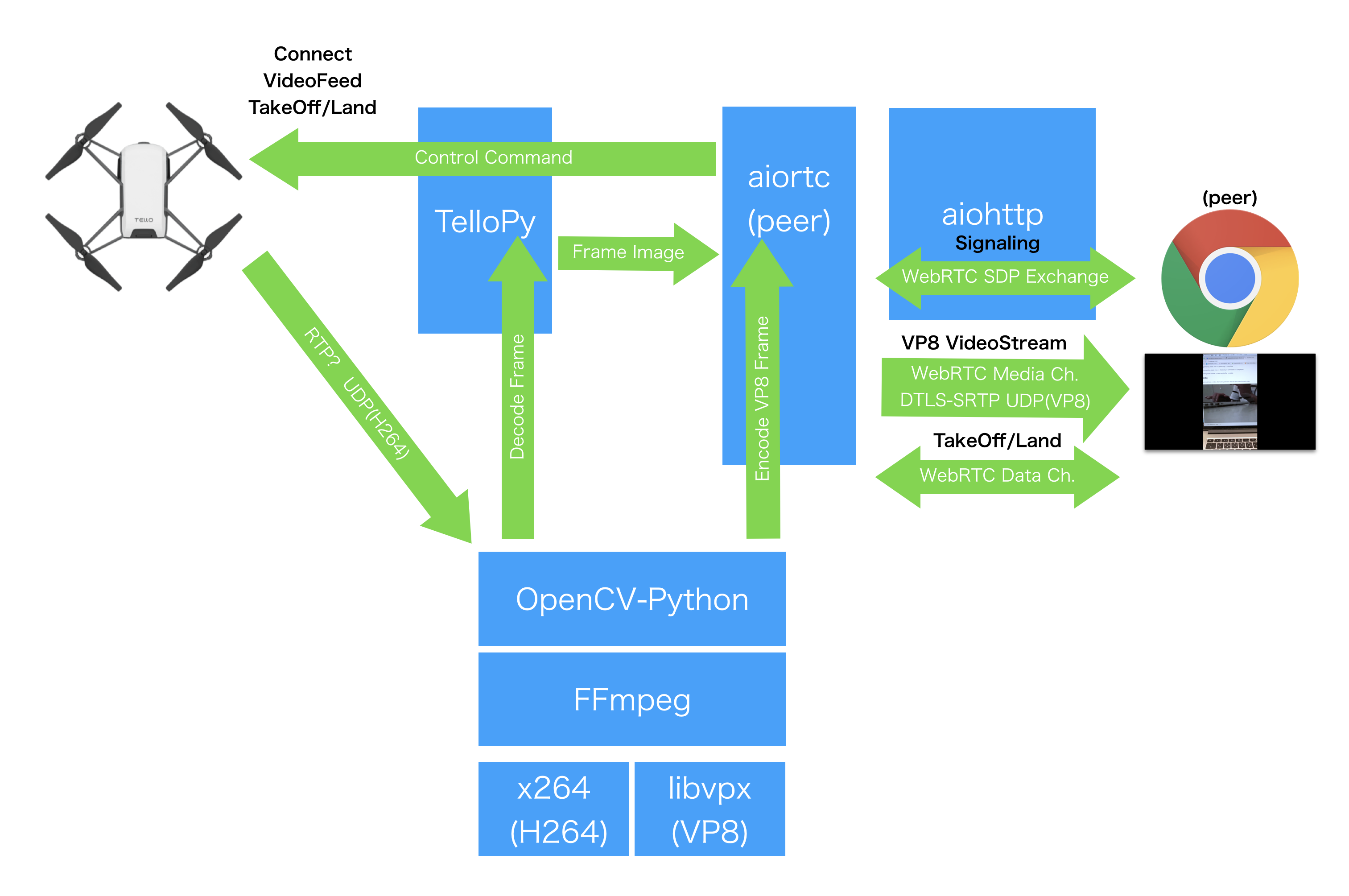

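I took the stream provided by tellopy, a Python library for the Tello, converted it into frames, and sent the video over WebRTC.

The stream obtained from tellopy is queued, so by the time the WebRTC connection came up there was a lag of about one minute. As a stopgap, the server clears that buffer once, at the moment the data channel connection is established.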
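
The buffer-clearing workaround can be sketched with a stand-in for the queued stream. `QueuedStream` below is a hypothetical class, not tellopy's actual implementation; it only assumes that incoming chunks pile up in a list and that a reader drains them. Unlike the listing in this post, the sketch resets the list under a lock so a reader never races with the reset.

```python
import threading


class QueuedStream:
    """Hypothetical stand-in for a video stream that queues incoming chunks."""

    def __init__(self):
        self._lock = threading.Lock()
        self._queue = []

    def write(self, chunk):
        # Called by the producer (for example a network receive thread).
        with self._lock:
            self._queue.append(chunk)

    def read(self):
        # Return everything queued so far as one bytes object.
        with self._lock:
            data = b"".join(self._queue)
            self._queue = []
        return data

    def clear(self):
        # Drop stale data and report how many chunks were discarded.
        with self._lock:
            dropped = len(self._queue)
            self._queue = []
        return dropped


stream = QueuedStream()
for _ in range(3):
    stream.write(b"old-frame")   # data queued before the viewer connected

dropped = stream.clear()         # what the "connect" message triggers
stream.write(b"fresh-frame")
latest = stream.read()
print(dropped, latest)
```

After `clear()`, the next read returns only data that arrived after the viewer connected, which is the effect the workaround is after.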

Caution

The connection occasionally drops and the drone can become uncontrollable, so please try this at your own risk.

Environment

macOS High Sierra 10.13.5

Python 3.6.1

FFmpeg 4.0.1

Architecture

Code

requirement.txt

aiohttp==3.3.2

aioice==0.6.2

aiortc==0.8.0

asn1crypto==0.24.0

async-timeout==3.0.0

attrs==18.1.0

av==0.4.1

cffi==1.11.5

chardet==3.0.4

crcmod==1.7

cryptography==2.2.2

Django==2.0.7

idna==2.7

idna-ssl==1.0.1

image==1.5.24

multidict==4.3.1

netifaces==0.10.7

numpy==1.14.5

opencv-python==3.4.1.15

Pillow==5.2.0

pycparser==2.18

pyee==5.0.0

pygame==1.9.3

pylibsrtp==0.5.5

pyOpenSSL==18.0.0

pytz==2018.5

six==1.11.0

tellopy==0.4.0

websockets==5.0.1

yarl==1.2.6

server.py

import argparse
import asyncio
import json
import logging
import math
import os
import time
import wave

import cv2
import numpy
from aiohttp import web

import sys
import traceback
import tellopy
import av

from aiortc import RTCPeerConnection, RTCSessionDescription
from aiortc.mediastreams import (AudioFrame, AudioStreamTrack, VideoFrame,
                                 VideoStreamTrack)

ROOT = os.path.dirname(__file__)

def frame_from_bgr(data_bgr):
    # Convert a BGR image (height x width x 3) into a planar YUV 4:2:0 VideoFrame.
    data_yuv = cv2.cvtColor(data_bgr, cv2.COLOR_BGR2YUV_YV12)
    return VideoFrame(width=data_bgr.shape[1], height=data_bgr.shape[0], data=data_yuv.tobytes())


def frame_from_gray(data_gray):
    # Expand a grayscale image to BGR first, then convert as above.
    data_bgr = cv2.cvtColor(data_gray, cv2.COLOR_GRAY2BGR)
    data_yuv = cv2.cvtColor(data_bgr, cv2.COLOR_BGR2YUV_YV12)
    return VideoFrame(width=data_bgr.shape[1], height=data_bgr.shape[0], data=data_yuv.tobytes())


def frame_to_bgr(frame):
    # A YUV 4:2:0 buffer holds 12 bits per pixel, hence height * 12 / 8 rows.
    data_flat = numpy.frombuffer(frame.data, numpy.uint8)
    data_yuv = data_flat.reshape((math.ceil(frame.height * 12 / 8), frame.width))
    return cv2.cvtColor(data_yuv, cv2.COLOR_YUV2BGR_YV12)

class VideoTransformTrack(VideoStreamTrack):
    def __init__(self, container, video_stream, width, height):
        super().__init__()
        self.container = container
        self.video_stream = video_stream
        # Skip the first 300 decoded frames before sending anything.
        self.frame_skip = 300
        self.total_frame = 0
        # Placeholder frame returned until a real frame has been decoded.
        self.frame = VideoFrame(width=width, height=height)

    async def recv(self):
        for packet in self.container.demux(self.video_stream):
            try:
                frames = packet.decode()
                self.total_frame = self.total_frame + len(frames)
                for frm in frames:
                    if 0 < self.frame_skip:
                        self.frame_skip = self.frame_skip - 1
                        continue
                    start_time = time.time()
                    self.frame = frame_from_bgr(numpy.array(frm.to_image()))
                    # Skip one frame after every frame that is sent.
                    self.frame_skip = 1
                    #self.frame_skip = int((time.time() - start_time)/frm.time_base)
                    cv2.waitKey(1)
                    return self.frame
            except av.AVError as err:
                # On a decode error, resend the last good frame.
                print(err)
                return self.frame
        return self.frame

async def index(request):
    # Serve the demo page; close the file once it has been read.
    with open(os.path.join(ROOT, 'index.html'), 'r') as f:
        content = f.read()
    return web.Response(content_type='text/html', text=content)


async def javascript(request):
    with open(os.path.join(ROOT, 'client.js'), 'r') as f:
        content = f.read()
    return web.Response(content_type='application/javascript', text=content)

async def offer(request):
    params = await request.json()
    offer = RTCSessionDescription(
        sdp=params['sdp'],
        type=params['type'])

    pc = RTCPeerConnection()
    pc._consumers = []
    pcs.append(pc)

    width = 1920
    height = 1080
    local_video = VideoTransformTrack(container=container, video_stream=video_stream, width=width, height=height)
    pc.addTrack(local_video)

    @pc.on('datachannel')
    def on_datachannel(channel):
        @channel.on('message')
        def on_message(message):
            if message == "connect":
                vs.queue = []  # discard the video data queued so far
            elif message == "ping":
                channel.send('pong')
            elif message == "takeoff":
                drone.takeoff()
            elif message == "down":
                drone.down(50)
            elif message == "land":
                drone.land()

    await pc.setRemoteDescription(offer)
    answer = await pc.createAnswer()
    await pc.setLocalDescription(answer)

    return web.Response(
        content_type='application/json',
        text=json.dumps({
            'sdp': pc.localDescription.sdp,
            'type': pc.localDescription.type
        }))

pcs = []

# Placeholders; the real objects are assigned in the __main__ block below.
drone = {}
container = {}
video_stream = {}
vs = {}


async def on_shutdown(app):
    # close peer connections
    coros = [pc.close() for pc in pcs]
    await asyncio.gather(*coros)

if __name__ == '__main__':
    parser = argparse.ArgumentParser(description='WebRTC audio / video / data-channels demo')
    parser.add_argument('--port', type=int, default=8080,
                        help='Port for HTTP server (default: 8080)')
    parser.add_argument('--verbose', '-v', action='count')
    args = parser.parse_args()

    if args.verbose:
        logging.basicConfig(level=logging.DEBUG)

    drone = tellopy.Tello()
    drone.connect()
    drone.wait_for_connection(60.0)
    drone.start_video()

    vs = drone.get_video_stream()
    container = av.open(vs)
    video_stream = next(s for s in container.streams if s.type == 'video')

    app = web.Application()
    app.on_shutdown.append(on_shutdown)
    app.router.add_get('/', index)
    app.router.add_get('/client.js', javascript)
    app.router.add_post('/offer', offer)
    web.run_app(app, port=args.port)
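
The frame-dropping counter in `VideoTransformTrack` can be checked in isolation, without PyAV or aiortc. `select_frames` below is a hypothetical helper, not part of server.py; it is a minimal sketch that assumes the counter works as it reads in the listing: skip the first 300 frames, then skip one frame after every frame that is sent. It ignores the detail that `recv()` returns partway through a packet's frame list.

```python
def select_frames(frames, initial_skip=300, steady_skip=1):
    """Yield the frames that would be sent, dropping the rest.

    Hypothetical helper: skips `initial_skip` frames up front, then
    `steady_skip` frames after each frame that is yielded.
    """
    skip = initial_skip
    for frame in frames:
        if skip > 0:
            skip -= 1
            continue
        yield frame
        skip = steady_skip


# Integers stand in for decoded video frames.
sent = list(select_frames(range(310)))
print(sent)
```

With these defaults roughly half of the decoded frames are sent once the initial backlog has been skipped, which trades frame rate for lower latency.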

index.html

<html>
<head>
    <meta charset="UTF-8"/>
    <meta name="viewport" content="width=device-width, initial-scale=1.0" />
    <title>WebRTC demo</title>
    <style>
    button {
        padding: 8px 16px;
    }

    pre {
        overflow-x: hidden;
        overflow-y: auto;
    }

    video {
        width: 100%;
    }

    .option {
        margin-bottom: 8px;
    }

    #media {
        max-width: 1280px;
    }
    </style>
</head>
<body>

<h2>Options</h2>

<div class="option">
    <input id="use-datachannel" checked="checked" type="checkbox"/>
    <label for="use-datachannel">Use datachannel</label>
</div>

<button id="start" onclick="start()">Start</button>
<button id="stop" style="display: none" onclick="stop()">Stop</button>
<button onclick="command('takeoff')">takeoff</button>
<button onclick="command('down')">down</button>
<button onclick="command('land')">land</button>

<h2>State</h2>
<p>
    ICE gathering state: <span id="ice-gathering-state"></span>
</p>
<p>
    ICE connection state: <span id="ice-connection-state"></span>
</p>
<p>
    Signaling state: <span id="signaling-state"></span>
</p>

<div id="media" style="display: none">
    <h2>Media</h2>
    <p>You should see a video alternating between the raw and transformed video.</p>
    <audio id="audio" autoplay="true"></audio>
    <video id="video" autoplay="true"></video>
</div>

<h2>Data channel</h2>
<pre id="data-channel" style="height: 200px;"></pre>

<h2>SDP</h2>

<h3>Offer</h3>
<pre id="offer-sdp"></pre>

<h3>Answer</h3>
<pre id="answer-sdp"></pre>

<script src="client.js"></script>
</body>
</html>

client.js

var pc = new RTCPeerConnection();

// get DOM elements
var dataChannelLog = document.getElementById('data-channel'),
    iceConnectionLog = document.getElementById('ice-connection-state'),
    iceGatheringLog = document.getElementById('ice-gathering-state'),
    signalingLog = document.getElementById('signaling-state');

// register some listeners to help debugging
pc.addEventListener('icegatheringstatechange', function() {
    iceGatheringLog.textContent += ' -> ' + pc.iceGatheringState;
}, false);
iceGatheringLog.textContent = pc.iceGatheringState;

pc.addEventListener('iceconnectionstatechange', function() {
    iceConnectionLog.textContent += ' -> ' + pc.iceConnectionState;
}, false);
iceConnectionLog.textContent = pc.iceConnectionState;

pc.addEventListener('signalingstatechange', function() {
    signalingLog.textContent += ' -> ' + pc.signalingState;
}, false);
signalingLog.textContent = pc.signalingState;

// connect audio / video
pc.addEventListener('track', function(evt) {
    if (evt.track.kind == 'video')
        document.getElementById('video').srcObject = evt.streams[0];
    else
        document.getElementById('audio').srcObject = evt.streams[0];
});

// data channel
var dc = null, dcInterval = null;

function negotiate() {
    return pc.createOffer({offerToReceiveVideo : true, offerToReceiveAudio: false}).then(function(offer) {
        return pc.setLocalDescription(offer);
    }).then(function() {
        // wait for ICE gathering to complete
        return new Promise(function(resolve) {
            if (pc.iceGatheringState === 'complete') {
                resolve();
            } else {
                function checkState() {
                    if (pc.iceGatheringState === 'complete') {
                        pc.removeEventListener('icegatheringstatechange', checkState);
                        resolve();
                    }
                }
                pc.addEventListener('icegatheringstatechange', checkState);
            }
        });
    }).then(function() {
        var offer = pc.localDescription;
        document.getElementById('offer-sdp').textContent = offer.sdp;
        return fetch('/offer', {
            body: JSON.stringify({
                sdp: offer.sdp,
                type: offer.type
            }),
            headers: {
                'Content-Type': 'application/json'
            },
            method: 'POST'
        });
    }).then(function(response) {
        return response.json();
    }).then(function(answer) {
        document.getElementById('answer-sdp').textContent = answer.sdp;
        return pc.setRemoteDescription(answer);
    });
}

function start() {
    document.getElementById('start').style.display = 'none';

    if (document.getElementById('use-datachannel').checked) {
        dc = pc.createDataChannel('chat');
        dc.onclose = function() {
            clearInterval(dcInterval);
            dataChannelLog.textContent += '- close\n';
        };
        dc.onopen = function() {
            dataChannelLog.textContent += '- open\n';
            dc.send('connect');
            dcInterval = setInterval(function() {
                var message = 'ping';
                dataChannelLog.textContent += '> ' + message + '\n';
                dc.send(message);
            }, 10000);
        };
        dc.onmessage = function(evt) {
            dataChannelLog.textContent += '< ' + evt.data + '\n';
        };
    }

    document.getElementById('media').style.display = 'block';
    negotiate();

    document.getElementById('stop').style.display = 'inline-block';
}

function command(text) {
    // ignore commands until the data channel exists and is open
    if (dc && dc.readyState === 'open') {
        dc.send(text);
    }
}

function stop() {
    document.getElementById('stop').style.display = 'none';

    // close data channel
    if (dc) {
        dc.close();
    }

    // close audio / video
    pc.getSenders().forEach(function(sender) {
        if (sender.track) {
            sender.track.stop();
        }
    });

    // close peer connection
    setTimeout(function() {
        pc.close();
    }, 500);
}
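
The messages that client.js sends over the data channel (`connect`, `ping`, `takeoff`, `down`, `land`) are matched on the server by an if/elif chain. A table-driven variant is sketched below. `FakeDrone`, `build_handlers` and `dispatch` are hypothetical names used only so the example runs without a drone, and the choice to silently ignore unknown messages is an assumption of the sketch.

```python
class FakeDrone:
    """Hypothetical stand-in that records calls instead of flying."""

    def __init__(self):
        self.calls = []

    def takeoff(self):
        self.calls.append("takeoff")

    def down(self, val):
        self.calls.append(("down", val))

    def land(self):
        self.calls.append("land")


def build_handlers(drone, send, clear_buffer):
    # Map each message string to a zero-argument callable.
    return {
        "connect": clear_buffer,
        "ping": lambda: send("pong"),
        "takeoff": drone.takeoff,
        "down": lambda: drone.down(50),
        "land": drone.land,
    }


def dispatch(handlers, message):
    # Return True if the message was recognised and handled.
    handler = handlers.get(message)
    if handler is None:
        return False
    handler()
    return True


drone = FakeDrone()
replies = []
handlers = build_handlers(drone, replies.append, clear_buffer=lambda: None)

for msg in ["connect", "ping", "takeoff", "down", "land", "flip"]:
    dispatch(handlers, msg)

print(drone.calls, replies)
```

Adding a new button on the page then only needs one more entry in the table rather than another branch.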

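How to run

pip install -r requirement.txt

1. Turn on the Tello.
2. Connect the Mac to the Tello's Wi-Fi.
3. Run python server.py.
4. Open http://localhost:8080 in Chrome.
5. Click the Start button.
6. Signaling begins. (Wait a while; it takes longer than you might expect.)
7. Once the WebRTC connection is established, the Tello's video appears in Chrome.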
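
Before starting the server it can help to confirm a few prerequisites. The sketch below is an assumption about what is worth checking (the Python version, an `ffmpeg` executable on PATH as a rough proxy for an FFmpeg install, and a free local port); `preflight` and `port_is_free` are hypothetical helpers and are not part of the original code.

```python
import shutil
import socket
import sys


def port_is_free(port, host="127.0.0.1"):
    # Try to bind; failure means something is already using the port.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
    return True


def preflight(port=8080):
    """Hypothetical helper: report basic prerequisites as booleans."""
    return {
        "python_3_6_or_newer": sys.version_info >= (3, 6),
        "ffmpeg_on_path": shutil.which("ffmpeg") is not None,
        "port_free": port_is_free(port),
    }


if __name__ == "__main__":
    for name, ok in preflight().items():
        print("{:<22} {}".format(name, "ok" if ok else "MISSING"))
```

If the port is taken, server.py accepts a different one through its `--port` option.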

Libraries

https://github.com/hanyazou/TelloPy

https://github.com/jlaine/aiortc

Postscript: duplicate frames were causing lag, so I fixed that.