本記事の要約

-

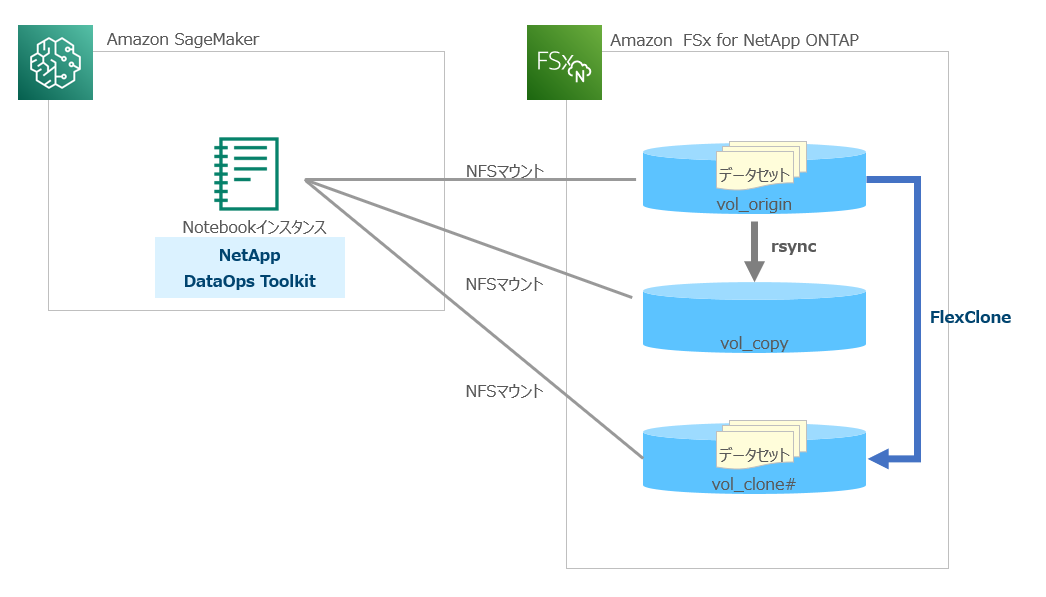

NetApp DataOps Toolkitを利用することで、Amazon FSx for NetApp ONTAP上のVolumeを簡単に複製可能ということをお伝えします。

-

Amazon SageMakerのJupyter NotebookインスタンスにDataOps Toolkitをインストールし、Python経由でデータセットが格納されたVolumeの複製からマウントまでを自動化してみます。

-

以前投稿した「Amazon SageMakerとAmazon FSx for NetApp ONTAPで実現する機械学習基盤」の派生版になります。

以前投稿した「Amazon SageMakerとAmazon FSx for NetApp ONTAPで実現する機械学習基盤」の記事はこちら

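What is the NetApp DataOps Toolkit?

As a quick recap, it is a Python library provided for storage systems that run NetApp ONTAP.

With the NetApp DataOps Toolkit, data scientists and data engineers can themselves easily perform operations on NetApp storage such as creating and cloning Volumes and taking Snapshots.

It is published on GitHub, so anyone can use it.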

https://github.com/NetApp/netapp-dataops-toolkit

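Trying it out

- Install the NetApp DataOps Toolkit on an Amazon SageMaker Jupyter Notebook instance

- Mount two NFS Volumes, vol_origin and vol_copy, on the Jupyter Notebook instance

- Store about 50 GB of dummy files in vol_origin (standing in for a dataset)

- Copy the data in vol_origin to vol_copy with rsync

- Clone vol_origin with the NetApp DataOps Toolkit and mount the clone automatically

The point of this walkthrough is that cloning the Volume that holds a dataset through the DataOps Toolkit is far faster than copying or backing up the dataset with a command such as rsync.

A second point is storage efficiency: an ONTAP clone shares its data blocks with the source Volume, so simply cloning a Volume consumes almost no additional capacity until changed data is written.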

Installing the NetApp DataOps Toolkit

Install it from the terminal of the Jupyter Notebook instance.

- First, add a route to Amazon FSx for NetApp ONTAP and install nfs-utils.

sh-4.2$ sudo route add -net 172.29.0.0/16 dev eth2

sh-4.2$ sudo yum install -y nfs-utils

Loaded plugins: dkms-build-requires, extras_suggestions, langpacks, priorities, update-motd, versionlock

amzn2-core | 3.7 kB 00:00:00

amzn2extra-docker | 3.0 kB 00:00:00

amzn2extra-kernel-5.10 | 3.0 kB 00:00:00

amzn2extra-python3.8 | 3.0 kB 00:00:00

centos-extras | 2.9 kB 00:00:00

copr:copr.fedorainfracloud.org:vbatts:shadow-utils-newxidmap | 3.3 kB 00:00:00

https://download.docker.com/linux/centos/2/x86_64/stable/repodata/repomd.xml: [Errno 14] HTTPS Error 404 - Not Found

Trying other mirror.

libnvidia-container/x86_64/signature | 833 B 00:00:00

libnvidia-container/x86_64/signature | 2.1 kB 00:00:00 !!!

neuron | 2.9 kB 00:00:00

(1/2): libnvidia-container/x86_64/primary | 28 kB 00:00:00

(2/2): neuron/primary_db | 121 kB 00:00:00

libnvidia-container 176/176

61 packages excluded due to repository priority protections

Package 1:nfs-utils-1.3.0-0.54.amzn2.0.2.x86_64 already installed and latest version

Nothing to do

- Create the mount-point directories and mount the Amazon FSx for NetApp ONTAP Volumes.

sh-4.2$ sudo mkdir /mnt/vol_origin

sh-4.2$ sudo mkdir /mnt/vol_copy

sh-4.2$ sudo mount -t nfs 172.29.12.139:/vol_origin /mnt/vol_origin

sh-4.2$ sudo mount -t nfs 172.29.12.139:/vol_copy /mnt/vol_copy

- Install the NetApp DataOps Toolkit.

sh-4.2$ python3 -m pip install netapp-dataops-traditional

Looking in indexes: https://pypi.org/simple, https://pip.repos.neuron.amazonaws.com

Collecting netapp-dataops-traditional

Downloading netapp_dataops_traditional-2.1.1-py3-none-any.whl (22 kB)

Requirement already satisfied: boto3 in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from netapp-dataops-traditional) (1.26.71)

Collecting netapp-ontap

Downloading netapp_ontap-9.12.1.0-py3-none-any.whl (25.0 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 25.0/25.0 MB 12.1 MB/s eta 0:00:00

Requirement already satisfied: pyyaml in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from netapp-dataops-traditional) (5.4.1)

Collecting tabulate

Downloading tabulate-0.9.0-py3-none-any.whl (35 kB)

Requirement already satisfied: pandas in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from netapp-dataops-traditional) (1.3.5)

Requirement already satisfied: requests in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from netapp-dataops-traditional) (2.28.2)

Requirement already satisfied: botocore<1.30.0,>=1.29.71 in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from boto3->netapp-dataops-traditional) (1.29.71)

Requirement already satisfied: jmespath<2.0.0,>=0.7.1 in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from boto3->netapp-dataops-traditional) (1.0.1)

Requirement already satisfied: s3transfer<0.7.0,>=0.6.0 in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from boto3->netapp-dataops-traditional) (0.6.0)

Collecting requests-toolbelt>=0.9.1

Downloading requests_toolbelt-0.10.1-py2.py3-none-any.whl (54 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 54.5/54.5 kB 16.4 MB/s eta 0:00:00

Requirement already satisfied: urllib3>=1.26.7 in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from netapp-ontap->netapp-dataops-traditional) (1.26.8)

Collecting marshmallow>=3.2.1

Downloading marshmallow-3.19.0-py3-none-any.whl (49 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 49.1/49.1 kB 10.4 MB/s eta 0:00:00

Requirement already satisfied: charset-normalizer<4,>=2 in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from requests->netapp-dataops-traditional) (2.1.1)

Requirement already satisfied: idna<4,>=2.5 in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from requests->netapp-dataops-traditional) (3.4)

Requirement already satisfied: certifi>=2017.4.17 in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from requests->netapp-dataops-traditional) (2022.12.7)

Requirement already satisfied: python-dateutil>=2.7.3 in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from pandas->netapp-dataops-traditional) (2.8.2)

Requirement already satisfied: pytz>=2017.3 in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from pandas->netapp-dataops-traditional) (2022.7.1)

Requirement already satisfied: numpy>=1.17.3 in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from pandas->netapp-dataops-traditional) (1.21.6)

Requirement already satisfied: packaging>=17.0 in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from marshmallow>=3.2.1->netapp-ontap->netapp-dataops-traditional) (23.0)

Requirement already satisfied: six>=1.5 in ./anaconda3/envs/JupyterSystemEnv/lib/python3.7/site-packages (from python-dateutil>=2.7.3->pandas->netapp-dataops-traditional) (1.16.0)

Installing collected packages: tabulate, marshmallow, requests-toolbelt, netapp-ontap, netapp-dataops-traditional

Successfully installed marshmallow-3.19.0 netapp-dataops-traditional-2.1.1 netapp-ontap-9.12.1.0 requests-toolbelt-0.10.1 tabulate-0.9.0

- Run the NetApp DataOps Toolkit help command. If it runs, the installation itself is complete.

sh-4.2$ netapp_dataops_cli.py help

The NetApp DataOps Toolkit is a Python library that makes it simple for data scientists and data engineers to perform various data management tasks, such as provisioning a new data volume, near-instantaneously cloning a data volume, and near-instantaneously snapshotting a data volume for traceability/baselining.

Basic Commands:

config Create a new config file (a config file is required to perform other commands).

help Print help text.

version Print version details.

Data Volume Management Commands:

Note: To view details regarding options/arguments for a specific command, run the command with the '-h' or '--help' option.

clone volume Create a new data volume that is an exact copy of an existing volume.

create volume Create a new data volume.

delete volume Delete an existing data volume.

list volumes List all data volumes.

mount volume Mount an existing data volume locally. Note: on Linux hosts - must be run as root.

unmount volume Unmount an existing data volume. Note: on Linux hosts - must be run as root.

Snapshot Management Commands:

Note: To view details regarding options/arguments for a specific command, run the command with the '-h' or '--help' option.

create snapshot Create a new snapshot for a data volume.

delete snapshot Delete an existing snapshot for a data volume.

list snapshots List all snapshots for a data volume.

restore snapshot Restore a snapshot for a data volume (restore the volume to its exact state at the time that the snapshot was created).

Data Fabric Commands:

Note: To view details regarding options/arguments for a specific command, run the command with the '-h' or '--help' option.

list cloud-sync-relationships List all existing Cloud Sync relationships.

sync cloud-sync-relationship Trigger a sync operation for an existing Cloud Sync relationship.

pull-from-s3 bucket Pull the contents of a bucket from S3.

pull-from-s3 object Pull an object from S3.

push-to-s3 directory Push the contents of a directory to S3 (multithreaded).

push-to-s3 file Push a file to S3.

Advanced Data Fabric Commands:

Note: To view details regarding options/arguments for a specific command, run the command with the '-h' or '--help' option.

prepopulate flexcache Prepopulate specific files/directories on a FlexCache volume (ONTAP 9.8 and above ONLY).

list snapmirror-relationships List all existing SnapMirror relationships.

sync snapmirror-relationship Trigger a sync operation for an existing SnapMirror relationship.

- Configure the NetApp DataOps Toolkit.

The settings entered here describe the NetApp storage to connect to (in this case, Amazon FSx for NetApp ONTAP).

sh-4.2$ netapp_dataops_cli.py config

Enter ONTAP management LIF hostname or IP address (Recommendation: Use SVM management interface): 172.29.12.180

Enter SVM (Storage VM) name: svm2

Enter SVM NFS data LIF hostname or IP address: 172.29.12.139

Enter default volume type to use when creating new volumes (flexgroup/flexvol) [flexgroup]: flexvol

Enter export policy to use by default when creating new volumes [default]:

Enter snapshot policy to use by default when creating new volumes [none]:

Enter unix filesystem user id (uid) to apply by default when creating new volumes (ex. '0' for root user) [0]:

Enter unix filesystem group id (gid) to apply by default when creating new volumes (ex. '0' for root group) [0]:

Enter unix filesystem permissions to apply by default when creating new volumes (ex. '0777' for full read/write permissions for all users and groups) [0777]: 0777

Enter aggregate to use by default when creating new FlexVol volumes:

Enter ONTAP API username (Recommendation: Use SVM account): vsadmin

Enter ONTAP API password (Recommendation: Use SVM account):

Verify SSL certificate when calling ONTAP API (true/false): false

Do you intend to use this toolkit to trigger Cloud Sync operations? (yes/no): no

Do you intend to use this toolkit to push/pull from S3? (yes/no): no

Created config file: '/home/ec2-user/.netapp_dataops/config.json'.
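Before running other commands, it can help to confirm that the config file was actually written. The following is a minimal sketch and not part of the toolkit: `describe_config` is a hypothetical helper that loads a JSON file and returns only its top-level key names, so that any stored credentials are not echoed to the screen. The default path is the one printed by the `config` command above; that the file holds a JSON object is an assumption based on its `.json` extension.

```python
import json
from pathlib import Path

# Path reported by `netapp_dataops_cli.py config` in the log above.
DEFAULT_CONFIG = Path("/home/ec2-user/.netapp_dataops/config.json")


def describe_config(path=DEFAULT_CONFIG):
    """Return the sorted top-level keys of a JSON config file.

    Hypothetical helper: only key names are returned, never values,
    so secrets stored in the file are not displayed.
    Returns None when the file does not exist.
    """
    path = Path(path)
    if not path.is_file():
        return None
    with path.open(encoding="utf-8") as f:
        data = json.load(f)
    # Assumption: the file holds a JSON object (a dict at the top level).
    return sorted(data.keys())


if __name__ == "__main__":
    keys = describe_config()
    if keys is None:
        print("Config file not found; run 'netapp_dataops_cli.py config' first.")
    else:
        print("Config file found with keys:", ", ".join(keys))
```

Returning key names only is a deliberate choice here, since the config step above asks for an ONTAP API password.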

- As a test, list the Volumes on Amazon FSx for NetApp ONTAP.

The output shows that vol_origin and vol_copy, the two Volumes used in this article, exist.

sh-4.2$ netapp_dataops_cli.py list volumes

Volume Name       Size     Type     NFS Mount Target                 Local Mountpoint    FlexCache    Clone    Source Volume    Source Snapshot
----------------  -------  -------  -------------------------------  ------------------  -----------  -------  ---------------  ------------------------------------
vol_copy          500.0GB  flexvol  172.29.12.139:/vol_copy          /mnt/vol_copy       no           no
vol_origin        500.0GB  flexvol  172.29.12.139:/vol_origin        /mnt/vol_origin     no           no

Copying the dataset with rsync

- Check the dataset used in this article.

vol_origin contains about 50 GB of dummy files.

sh-4.2$ cd /mnt/vol_origin/

sh-4.2$ ls

DataSet

sh-4.2$ cd DataSet/

sh-4.2$ ls -lh

total 51G

-rw-r--r-- 1 root root 1.0G Apr 11 04:45 test_10.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:47 test_11.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:50 test_12.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:52 test_13.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:54 test_14.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:56 test_15.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:59 test_16.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:01 test_17.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:03 test_18.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:06 test_19.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:35 test_1.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:08 test_20.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:10 test_21.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:12 test_22.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:15 test_23.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:17 test_24.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:19 test_25.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:22 test_26.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:24 test_27.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:26 test_28.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:28 test_29.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:35 test_2.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:31 test_30.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:33 test_31.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:35 test_32.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:37 test_33.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:40 test_34.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:42 test_35.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:44 test_36.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:47 test_37.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:49 test_38.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:51 test_39.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:35 test_3.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:53 test_40.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:56 test_41.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:58 test_42.file

-rw-r--r-- 1 root root 1.0G Apr 11 06:00 test_43.file

-rw-r--r-- 1 root root 1.0G Apr 11 06:03 test_44.file

-rw-r--r-- 1 root root 1.0G Apr 11 06:05 test_45.file

-rw-r--r-- 1 root root 1.0G Apr 11 06:07 test_46.file

-rw-r--r-- 1 root root 1.0G Apr 11 06:09 test_47.file

-rw-r--r-- 1 root root 1.0G Apr 11 06:12 test_48.file

-rw-r--r-- 1 root root 1.0G Apr 11 06:14 test_49.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:35 test_4.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:35 test_5.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:36 test_6.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:38 test_7.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:40 test_8.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:43 test_9.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:34 test.file

- The destination, vol_copy, is empty.

sh-4.2$ cd /mnt/vol_copy/DataSet

sh-4.2$ ls -lh

total 0

- Copy the dataset with the rsync command.

The scenario is, for example, that the dataset in vol_origin is about to be modified, so a copy is kept in vol_copy beforehand.

sh-4.2$ sudo rsync -av --progress /mnt/vol_origin/DataSet/ /mnt/vol_copy/

sending incremental file list

./

test.file

1,073,741,824 100% 162.46MB/s 0:00:06 (xfr#1, to-chk=49/51)

test_1.file

1,073,741,824 100% 110.24MB/s 0:00:09 (xfr#2, to-chk=48/51)

test_10.file

1,038,712,832 96% 150.01MB/s 0:00:00

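The dataset is copied without problems, but a plain file copy simply takes time.

In other words, the copy time grows with the size of the dataset, and analysis work has to wait for it.

In the environment prepared for this article, copying the roughly 50 GB dataset took 7 minutes 43 seconds.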
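The article does not say how the copy time was measured. One way to take such a measurement from Python is sketched below, under the assumption that wall-clock time around the command is what matters; `time_command` and `format_elapsed` are hypothetical helper names, and the demo runs a harmless stand-in command rather than rsync.

```python
import subprocess
import sys
import time


def time_command(argv):
    """Run a command and return (return_code, elapsed_seconds)."""
    start = time.perf_counter()
    completed = subprocess.run(argv)
    elapsed = time.perf_counter() - start
    return completed.returncode, elapsed


def format_elapsed(seconds):
    """Format a duration in seconds as 'Xm YYs'."""
    minutes, secs = divmod(int(round(seconds)), 60)
    return f"{minutes}m {secs:02d}s"


if __name__ == "__main__":
    # Stand-in command so the sketch runs anywhere. To time the copy from
    # this article, pass the rsync argument list instead, for example:
    # ['sudo', 'rsync', '-av', '/mnt/vol_origin/DataSet/', '/mnt/vol_copy/']
    code, elapsed = time_command([sys.executable, "-c", "pass"])
    print(f"exit code {code}, elapsed {format_elapsed(elapsed)}")
```

`time.perf_counter()` is used rather than `time.time()` because it is monotonic and intended for measuring intervals.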

Cloning a Volume with the NetApp DataOps Toolkit

After that long preamble, it is finally time to clone a Volume with the NetApp DataOps Toolkit.

This can be done from a Notebook on the Jupyter Notebook instance.

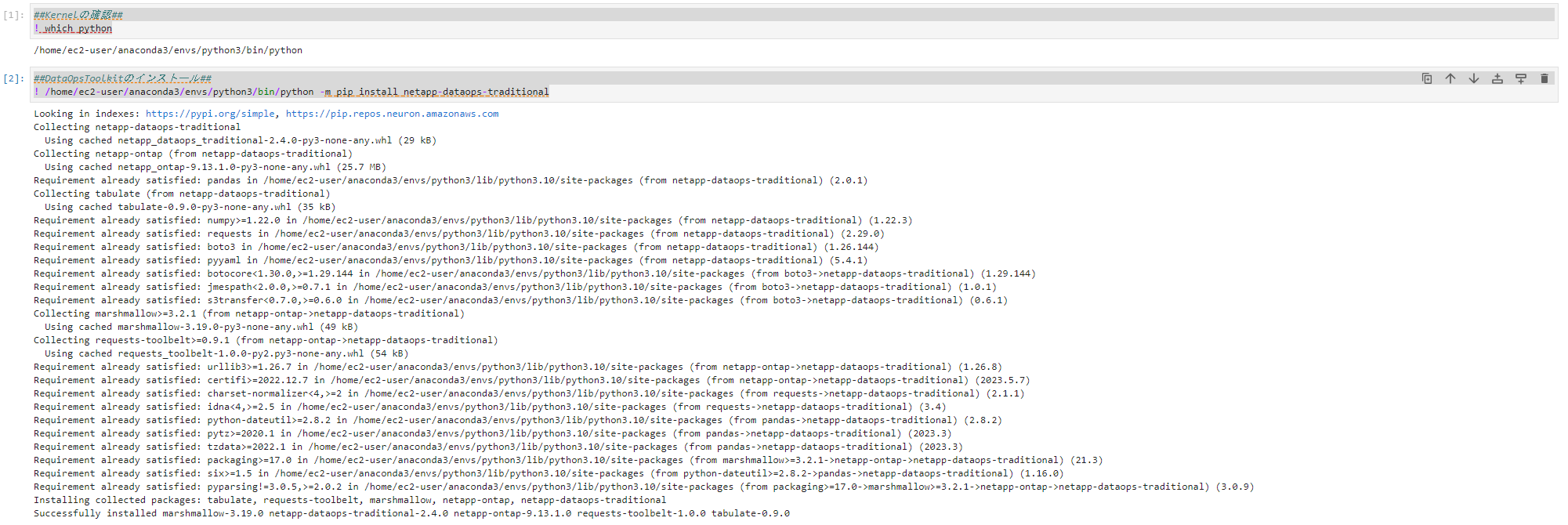

- Prepare the Python environment to use the NetApp DataOps Toolkit.

## Check the kernel ##

! which python

/home/ec2-user/anaconda3/envs/python3/bin/python

## Install the DataOps Toolkit ##

! /home/ec2-user/anaconda3/envs/python3/bin/python -m pip install netapp-dataops-traditional

Looking in indexes: https://pypi.org/simple, https://pip.repos.neuron.amazonaws.com

Collecting netapp-dataops-traditional

Using cached netapp_dataops_traditional-2.4.0-py3-none-any.whl (29 kB)

Collecting netapp-ontap (from netapp-dataops-traditional)

Using cached netapp_ontap-9.13.1.0-py3-none-any.whl (25.7 MB)

Requirement already satisfied: pandas in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from netapp-dataops-traditional) (2.0.1)

Collecting tabulate (from netapp-dataops-traditional)

Using cached tabulate-0.9.0-py3-none-any.whl (35 kB)

Requirement already satisfied: numpy>=1.22.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from netapp-dataops-traditional) (1.22.3)

Requirement already satisfied: requests in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from netapp-dataops-traditional) (2.29.0)

Requirement already satisfied: boto3 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from netapp-dataops-traditional) (1.26.144)

Requirement already satisfied: pyyaml in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from netapp-dataops-traditional) (5.4.1)

Requirement already satisfied: botocore<1.30.0,>=1.29.144 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from boto3->netapp-dataops-traditional) (1.29.144)

Requirement already satisfied: jmespath<2.0.0,>=0.7.1 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from boto3->netapp-dataops-traditional) (1.0.1)

Requirement already satisfied: s3transfer<0.7.0,>=0.6.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from boto3->netapp-dataops-traditional) (0.6.1)

Collecting marshmallow>=3.2.1 (from netapp-ontap->netapp-dataops-traditional)

Using cached marshmallow-3.19.0-py3-none-any.whl (49 kB)

Collecting requests-toolbelt>=0.9.1 (from netapp-ontap->netapp-dataops-traditional)

Using cached requests_toolbelt-1.0.0-py2.py3-none-any.whl (54 kB)

Requirement already satisfied: urllib3>=1.26.7 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from netapp-ontap->netapp-dataops-traditional) (1.26.8)

Requirement already satisfied: certifi>=2022.12.7 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from netapp-ontap->netapp-dataops-traditional) (2023.5.7)

Requirement already satisfied: charset-normalizer<4,>=2 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from requests->netapp-dataops-traditional) (2.1.1)

Requirement already satisfied: idna<4,>=2.5 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from requests->netapp-dataops-traditional) (3.4)

Requirement already satisfied: python-dateutil>=2.8.2 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from pandas->netapp-dataops-traditional) (2.8.2)

Requirement already satisfied: pytz>=2020.1 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from pandas->netapp-dataops-traditional) (2023.3)

Requirement already satisfied: tzdata>=2022.1 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from pandas->netapp-dataops-traditional) (2023.3)

Requirement already satisfied: packaging>=17.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from marshmallow>=3.2.1->netapp-ontap->netapp-dataops-traditional) (21.3)

Requirement already satisfied: six>=1.5 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from python-dateutil>=2.8.2->pandas->netapp-dataops-traditional) (1.16.0)

Requirement already satisfied: pyparsing!=3.0.5,>=2.0.2 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from packaging>=17.0->marshmallow>=3.2.1->netapp-ontap->netapp-dataops-traditional) (3.0.9)

Installing collected packages: tabulate, requests-toolbelt, marshmallow, netapp-ontap, netapp-dataops-traditional

Successfully installed marshmallow-3.19.0 netapp-dataops-traditional-2.4.0 netapp-ontap-9.13.1.0 requests-toolbelt-1.0.0 tabulate-0.9.0

The Jupyter Notebook console looks like the following.

- As a test, list the Volumes on Amazon FSx for NetApp ONTAP.

The output shows that vol_origin and vol_copy, the two Volumes used in this article, exist.

## List the Volumes on FSxN ##
from netapp_dataops.traditional import list_volumes

volumesList = list_volumes(
    check_local_mounts=True,
    include_space_usage_details=False,
    print_output=True,
)

## Output

Volume Name       Size     Type     NFS Mount Target                 Local Mountpoint    FlexCache    Clone    Source SVM    Source Volume    Source Snapshot
----------------  -------  -------  -------------------------------  ------------------  -----------  -------  ------------  ---------------  -----------------
vol_copy          500.0GB  flexvol  172.29.12.139:/vol_copy          /mnt/vol_copy       no           no
vol_origin        500.0GB  flexvol  172.29.12.139:/vol_origin        /mnt/vol_origin     no           no

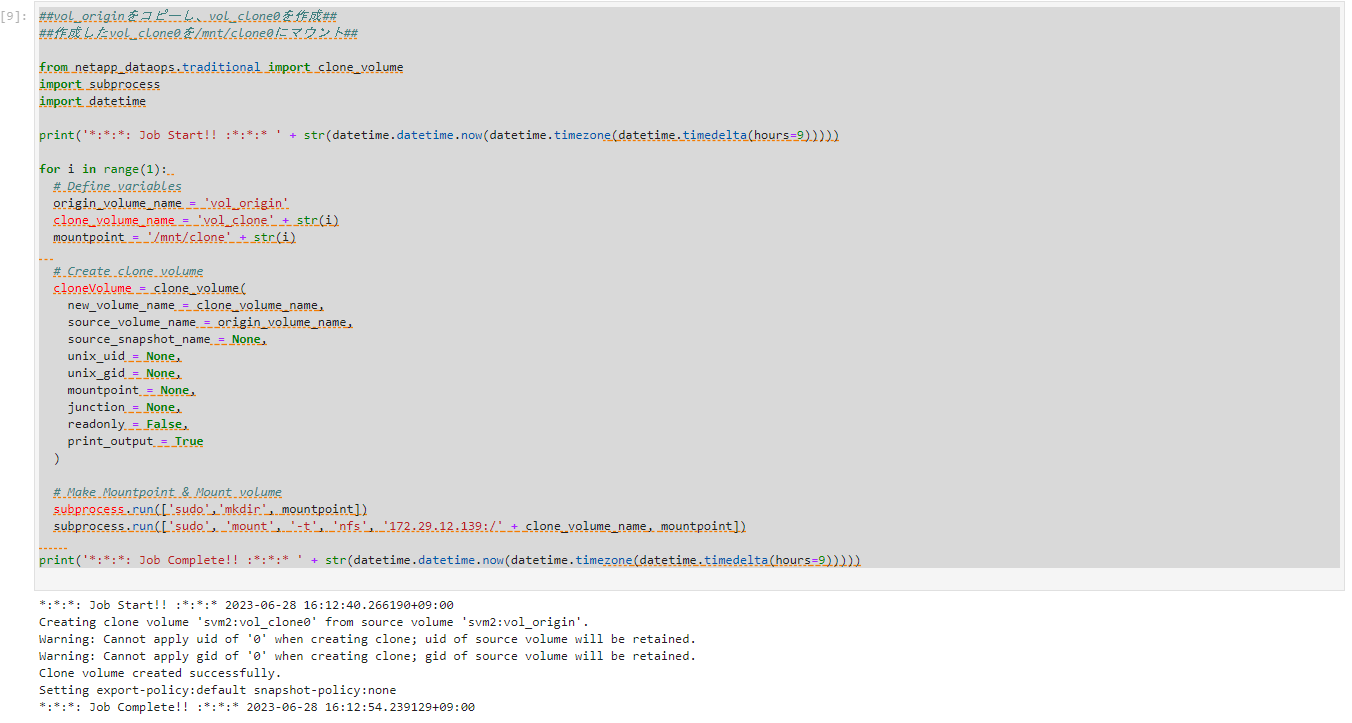
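The notebook cell keeps the return value of `list_volumes` in `volumesList`, so it can also be inspected in code rather than only read from the printed table. The sketch below assumes, without confirmation from this article, that the return value is a list of dictionaries keyed by the column headers (for example `"Volume Name"`); `find_volume` is a hypothetical helper, and the demo uses hand-written sample data so that it runs without a storage connection.

```python
def find_volume(volumes, name, key="Volume Name"):
    """Return the first entry whose `key` equals `name`, or None.

    Assumption: `volumes` is a list of dicts keyed by the column
    headers of the printed table.
    """
    for volume in volumes:
        if volume.get(key) == name:
            return volume
    return None


if __name__ == "__main__":
    # Sample data mirroring the table above. In the notebook this would be
    # the value returned by list_volumes(...).
    sample = [
        {"Volume Name": "vol_copy", "Size": "500.0GB", "Type": "flexvol"},
        {"Volume Name": "vol_origin", "Size": "500.0GB", "Type": "flexvol"},
    ]
    print(find_volume(sample, "vol_origin"))
    print(find_volume(sample, "vol_clone0"))  # not created yet at this point
```

A check like this could be used to skip cloning when a Volume with the intended clone name already exists.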

- Clone vol_origin under the name vol_clone0 and mount it at /mnt/clone0.

## Clone vol_origin to create vol_clone0 ##
## Mount the new vol_clone0 at /mnt/clone0 ##
from netapp_dataops.traditional import clone_volume
import subprocess
import datetime

# Timestamps are printed in JST (UTC+9)
print('*:*:*: Job Start!! :*:*:* ' + str(datetime.datetime.now(datetime.timezone(datetime.timedelta(hours=9)))))

## The argument to range() sets the number of clone Volumes to create
## Here it is 1, so only clone0 is created
for i in range(1):
    # Define variables
    origin_volume_name = 'vol_origin'
    clone_volume_name = 'vol_clone' + str(i)
    mountpoint = '/mnt/clone' + str(i)

    # Create clone volume
    cloneVolume = clone_volume(
        new_volume_name=clone_volume_name,
        source_volume_name=origin_volume_name,
        source_snapshot_name=None,
        unix_uid=None,
        unix_gid=None,
        mountpoint=None,
        junction=None,
        readonly=False,
        print_output=True,
    )

    # Create the mountpoint and mount the volume over NFS
    subprocess.run(['sudo', 'mkdir', mountpoint])
    subprocess.run(['sudo', 'mount', '-t', 'nfs', '172.29.12.139:/' + clone_volume_name, mountpoint])

print('*:*:*: Job Complete!! :*:*:* ' + str(datetime.datetime.now(datetime.timezone(datetime.timedelta(hours=9)))))

- The script completes within seconds.

When the script completes successfully, the following messages are printed.

*:*:*: Job Start!! :*:*:* 2023-06-28 16:12:40.266190+09:00

Creating clone volume 'svm2:vol_clone0' from source volume 'svm2:vol_origin'.

Warning: Cannot apply uid of '0' when creating clone; uid of source volume will be retained.

Warning: Cannot apply gid of '0' when creating clone; gid of source volume will be retained.

Clone volume created successfully.

Setting export-policy:default snapshot-policy:none

*:*:*: Job Complete!! :*:*:* 2023-06-28 16:12:54.239129+09:00
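The script derives each clone name and mountpoint from the loop index, which is what makes it easy to create several clones by changing `range(1)`. As a rough sketch of that naming scheme only, the hypothetical helper `plan_clones` below builds the names and the NFS mount argument lists without calling the toolkit or running any command, so the plan can be reviewed before anything is created. The prefixes and the data LIF address are the ones used in this article.

```python
def plan_clones(count, data_lif, clone_prefix="vol_clone", mount_prefix="/mnt/clone"):
    """Return one dict per clone with its name, mountpoint and mount command.

    Nothing is executed; this only mirrors the naming used in the script.
    """
    plans = []
    for i in range(count):
        name = f"{clone_prefix}{i}"
        mountpoint = f"{mount_prefix}{i}"
        plans.append({
            "clone_volume_name": name,
            "mountpoint": mountpoint,
            # Same argument list the script passes to subprocess.run
            "mount_command": ["sudo", "mount", "-t", "nfs", f"{data_lif}:/{name}", mountpoint],
        })
    return plans


if __name__ == "__main__":
    for plan in plan_clones(3, "172.29.12.139"):
        print(plan["clone_volume_name"], "->", plan["mountpoint"])
```

Separating the plan from its execution is one possible design; the original script does both in a single loop.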

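The Jupyter Notebook console looks like the following.

In the environment prepared for this article, everything from cloning the Volume to mounting it completed in 14 seconds.

The rsync copy took 7 minutes 43 seconds, so this saved more than 7 minutes.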
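The comparison can be reproduced from the numbers already shown. The short calculation below takes the two timestamps from the Job Start and Job Complete log lines and the rsync duration reported in this article; it reflects this single measurement only and is not a general benchmark.

```python
from datetime import datetime, timedelta

# Timestamps copied from the "Job Start" / "Job Complete" log lines.
start = datetime.fromisoformat("2023-06-28 16:12:40.266190+09:00")
end = datetime.fromisoformat("2023-06-28 16:12:54.239129+09:00")
clone_elapsed = end - start

# rsync duration reported in this article.
rsync_elapsed = timedelta(minutes=7, seconds=43)

saved = rsync_elapsed - clone_elapsed
ratio = rsync_elapsed / clone_elapsed  # timedelta / timedelta yields a float

print(f"clone + mount: {clone_elapsed.total_seconds():.1f} s")
print(f"rsync:         {rsync_elapsed.total_seconds():.0f} s")
print(f"time saved:    {saved.total_seconds():.0f} s")
print(f"ratio:         about {ratio:.0f}x")
```

With these inputs the clone-and-mount path comes out roughly 33 times faster than the rsync copy.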

- Confirm that the new vol_clone0 has been mounted automatically at /mnt/clone0.

## Confirm that vol_clone0 is mounted at /mnt/clone0 ##

! df

## Output

Filesystem 1K-blocks Used Available Use% Mounted on

devtmpfs 1961076 0 1961076 0% /dev

tmpfs 1971688 0 1971688 0% /dev/shm

tmpfs 1971688 624 1971064 1% /run

tmpfs 1971688 0 1971688 0% /sys/fs/cgroup

/dev/nvme0n1p1 188731372 140194380 48536992 75% /

/dev/nvme1n1 5009056 60 4730468 1% /home/ec2-user/SageMaker

tmpfs 394340 0 394340 0% /run/user/1001

tmpfs 394340 0 394340 0% /run/user/1002

tmpfs 394340 0 394340 0% /run/user/1000

172.29.12.139:/vol_origin 498073600 6920256 491153344 2% /mnt/vol_origin

172.29.12.139:/vol_copy 498073600 16331904 481741696 4% /mnt/vol_copy

172.29.12.139:/vol_clone0 498073600 6920256 491153344 2% /mnt/clone0
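Reading the `df` table by eye works for one clone, but a script that creates several clones may want to check each mount programmatically. The sketch below is one hedged way to do that: `mounted_at` is a hypothetical helper that scans text in the `df` layout shown above and returns the filesystem mounted at a given path. It assumes mountpoints contain no spaces.

```python
def mounted_at(df_output, mountpoint):
    """Return the filesystem mounted at `mountpoint`, or None.

    Expects text in the layout printed by `df`: a header line followed by
    rows whose first column is the filesystem and last column the mountpoint.
    Assumption: mountpoints contain no whitespace.
    """
    for line in df_output.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) >= 6 and fields[-1] == mountpoint:
            return fields[0]
    return None


if __name__ == "__main__":
    # Two rows copied from the df output above.
    sample = (
        "Filesystem 1K-blocks Used Available Use% Mounted on\n"
        "172.29.12.139:/vol_origin 498073600 6920256 491153344 2% /mnt/vol_origin\n"
        "172.29.12.139:/vol_clone0 498073600 6920256 491153344 2% /mnt/clone0\n"
    )
    print(mounted_at(sample, "/mnt/clone0"))
```

In the notebook, the input text could come from `subprocess.run(['df'], capture_output=True, text=True).stdout`.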

- Confirm that the dataset has been cloned.

Here, vol_origin was cloned under the name vol_clone0 and mounted at /mnt/clone0.

## Confirm that the dataset has been cloned ##

! ls -lh /mnt/clone0/DataSet

## Output

total 51G

-rw-r--r-- 1 root root 1.0G Apr 11 04:45 test_10.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:47 test_11.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:50 test_12.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:52 test_13.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:54 test_14.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:56 test_15.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:59 test_16.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:01 test_17.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:03 test_18.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:06 test_19.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:35 test_1.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:08 test_20.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:10 test_21.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:12 test_22.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:15 test_23.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:17 test_24.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:19 test_25.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:22 test_26.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:24 test_27.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:26 test_28.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:28 test_29.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:35 test_2.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:31 test_30.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:33 test_31.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:35 test_32.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:37 test_33.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:40 test_34.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:42 test_35.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:44 test_36.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:47 test_37.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:49 test_38.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:51 test_39.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:35 test_3.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:53 test_40.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:56 test_41.file

-rw-r--r-- 1 root root 1.0G Apr 11 05:58 test_42.file

-rw-r--r-- 1 root root 1.0G Apr 11 06:00 test_43.file

-rw-r--r-- 1 root root 1.0G Apr 11 06:03 test_44.file

-rw-r--r-- 1 root root 1.0G Apr 11 06:05 test_45.file

-rw-r--r-- 1 root root 1.0G Apr 11 06:07 test_46.file

-rw-r--r-- 1 root root 1.0G Apr 11 06:09 test_47.file

-rw-r--r-- 1 root root 1.0G Apr 11 06:12 test_48.file

-rw-r--r-- 1 root root 1.0G Apr 11 06:14 test_49.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:35 test_4.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:35 test_5.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:36 test_6.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:38 test_7.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:40 test_8.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:43 test_9.file

-rw-r--r-- 1 root root 1.0G Apr 11 04:34 test.file
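Comparing two long `ls` listings by eye is error-prone, so the same check can be sketched in Python. The hypothetical helper `compare_dirs` below compares file names and sizes between two directories; it does not compare file contents, which would require reading all the data again. The paths in the demo are the ones used in this article, and the demo does nothing if they do not exist.

```python
import os


def listing(directory):
    """Map each regular file name in `directory` to its size in bytes."""
    return {
        entry.name: entry.stat().st_size
        for entry in os.scandir(directory)
        if entry.is_file()
    }


def compare_dirs(source, clone):
    """Return (missing, extra, size_mismatch) file-name lists.

    missing: in source but not in clone
    extra: in clone but not in source
    size_mismatch: in both, but with different sizes
    """
    src, dst = listing(source), listing(clone)
    missing = sorted(set(src) - set(dst))
    extra = sorted(set(dst) - set(src))
    mismatch = sorted(n for n in set(src) & set(dst) if src[n] != dst[n])
    return missing, extra, mismatch


if __name__ == "__main__":
    # Paths from this article; adjust to your environment.
    source, clone = "/mnt/vol_origin/DataSet", "/mnt/clone0/DataSet"
    if os.path.isdir(source) and os.path.isdir(clone):
        print(compare_dirs(source, clone))
    else:
        print("Directories not found; nothing to compare.")
```

Three empty lists mean that both directories hold the same file names with the same sizes.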

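- With the NetApp DataOps Toolkit, a Volume can be cloned with a short script.

- Because the cloning is done by the storage system, it completes within seconds and greatly shortens the time needed to duplicate a dataset.

- In addition, thanks to ONTAP's storage efficiency, a clone shares its data blocks with the source Volume, so simply cloning a Volume consumes almost no additional capacity until changed data is written. (Repeated here because it is an important point.)

That is how we cloned a Volume holding a dataset quickly and easily, using an Amazon SageMaker Jupyter Notebook instance and Python.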

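Conclusion

This article showed that combining Amazon SageMaker, Amazon FSx for NetApp ONTAP, and the NetApp DataOps Toolkit makes it possible to clone datasets for machine learning and analysis work at high speed.

If you are a data scientist or engineer who regularly loses time preparing or duplicating datasets, please give it a try.

We hope this article is of some help.

For Python sample code and usage details for the NetApp DataOps Toolkit, see GitHub.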

https://github.com/NetApp/netapp-dataops-toolkit/tree/main/netapp_dataops_traditional