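※ Note: I started working with k8s, AWS, Fargate, and Terraform only a month ago, so please treat this as a beginner's notes and use it at your own risk. (I'd be very happy if you pointed out mistakes in the comments.)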

What I want to do

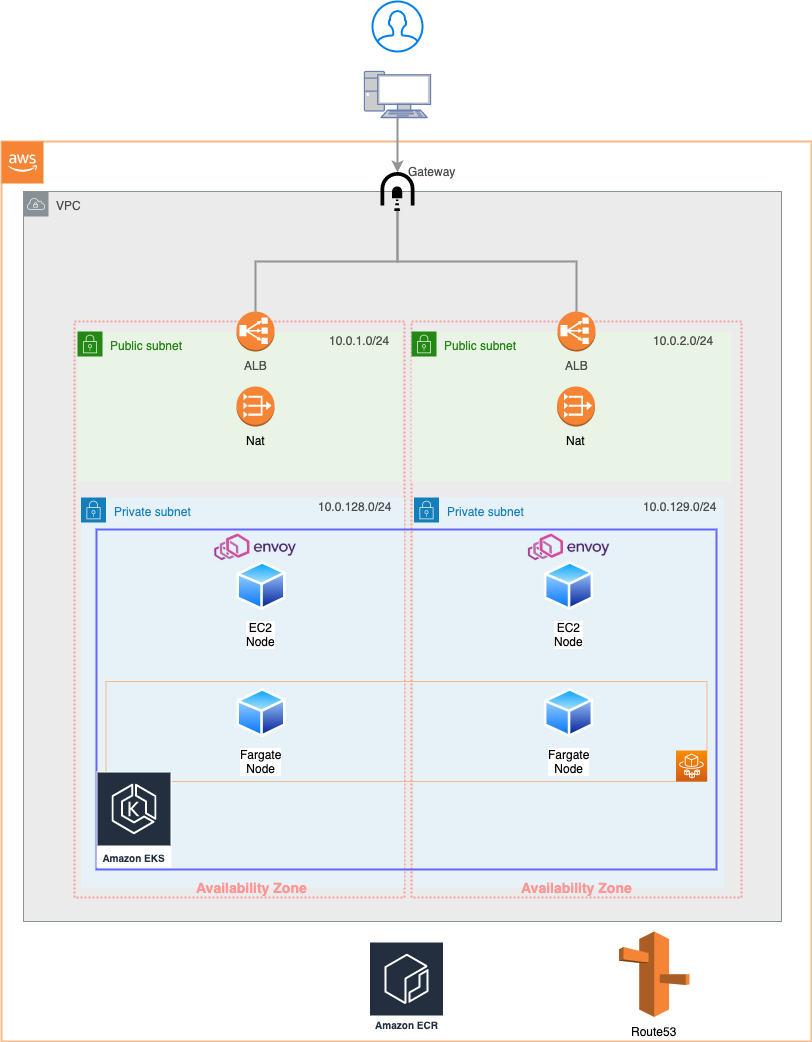

I'll create an EKS cluster on AWS and try running nodes on Fargate.

Many articles on this topic leave out the network configuration, so I include it here as well.

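Architecture

This is the configuration I'll go with this time.

Why EC2 nodes and Fargate nodes are separated

The IP of a node created by Fargate is not known when the infrastructure is built, so I think the usual approach is to let an Ingress controller create the ALB. I chose this configuration anyway out of my own selfish preference: I want Terraform to own the network rather than have it managed from the Kubernetes side.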

Network

```hcl
# =========================================
# Inputs this configuration relies on
# =========================================

# Prefix for resource names (e.g. "sample-dev")
variable "application_name" {
  type = string
}

# Region the provider is configured for; used to build AZ names
data "aws_region" "current" {}

# =========================================
# VPC
# =========================================

resource "aws_vpc" "sample" {
  cidr_block           = "10.0.0.0/16"
  enable_dns_hostnames = true
  enable_dns_support   = true

  tags = {
    Name = "${var.application_name}-vpc"
  }
}

# =========================================
# Gateway
# =========================================

resource "aws_internet_gateway" "sample" {
  vpc_id = aws_vpc.sample.id

  tags = {
    Name = "${var.application_name}-gateway"
  }
}

# =========================================
# Elastic IP
# =========================================

# On AWS provider v5+, `vpc = true` is deprecated in favor of `domain = "vpc"`
resource "aws_eip" "sample_nat_a" {
  vpc        = true
  depends_on = [aws_internet_gateway.sample]

  tags = {
    Name = "${var.application_name}-eip-a"
  }
}

resource "aws_eip" "sample_nat_c" {
  vpc        = true
  depends_on = [aws_internet_gateway.sample]

  tags = {
    Name = "${var.application_name}-eip-c"
  }
}

# =========================================
# NAT Gateway
# =========================================

resource "aws_nat_gateway" "sample_nat_a" {
  allocation_id = aws_eip.sample_nat_a.id
  subnet_id     = aws_subnet.sample_public_a.id
  depends_on    = [aws_internet_gateway.sample]

  tags = {
    Name = "${var.application_name}-nat-a"
  }
}

resource "aws_nat_gateway" "sample_nat_c" {
  allocation_id = aws_eip.sample_nat_c.id
  subnet_id     = aws_subnet.sample_public_c.id
  depends_on    = [aws_internet_gateway.sample]

  tags = {
    Name = "${var.application_name}-nat-c"
  }
}

# =========================================
# Route
# =========================================

# -----------------------------------------
# Route Table
# -----------------------------------------

# Public
resource "aws_route_table" "sample_public_a" {
  vpc_id = aws_vpc.sample.id

  tags = {
    Name = "${var.application_name}-table-pub-a"
  }
}

resource "aws_route_table" "sample_public_c" {
  vpc_id = aws_vpc.sample.id

  tags = {
    Name = "${var.application_name}-table-pub-c"
  }
}

# Private
resource "aws_route_table" "sample_private_a" {
  vpc_id = aws_vpc.sample.id

  tags = {
    Name = "${var.application_name}-table-a"
  }
}

resource "aws_route_table" "sample_private_c" {
  vpc_id = aws_vpc.sample.id

  tags = {
    Name = "${var.application_name}-table-c"
  }
}

# -----------------------------------------
# Route Association
# -----------------------------------------

# Public
resource "aws_route_table_association" "sample_public_a" {
  route_table_id = aws_route_table.sample_public_a.id
  subnet_id      = aws_subnet.sample_public_a.id
}

resource "aws_route_table_association" "sample_public_c" {
  route_table_id = aws_route_table.sample_public_c.id
  subnet_id      = aws_subnet.sample_public_c.id
}

# Private
resource "aws_route_table_association" "sample_private_a" {
  route_table_id = aws_route_table.sample_private_a.id
  subnet_id      = aws_subnet.sample_private_a.id
}

resource "aws_route_table_association" "sample_private_c" {
  route_table_id = aws_route_table.sample_private_c.id
  subnet_id      = aws_subnet.sample_private_c.id
}

# -----------------------------------------
# Route
# -----------------------------------------

# Public: default route to the Internet Gateway
resource "aws_route" "sample_public_a" {
  route_table_id         = aws_route_table.sample_public_a.id
  gateway_id             = aws_internet_gateway.sample.id
  destination_cidr_block = "0.0.0.0/0"
}

resource "aws_route" "sample_public_c" {
  route_table_id         = aws_route_table.sample_public_c.id
  gateway_id             = aws_internet_gateway.sample.id
  destination_cidr_block = "0.0.0.0/0"
}

# Private: default route through the NAT Gateway in the same AZ
resource "aws_route" "sample_private_a" {
  route_table_id         = aws_route_table.sample_private_a.id
  nat_gateway_id         = aws_nat_gateway.sample_nat_a.id
  destination_cidr_block = "0.0.0.0/0"
}

resource "aws_route" "sample_private_c" {
  route_table_id         = aws_route_table.sample_private_c.id
  nat_gateway_id         = aws_nat_gateway.sample_nat_c.id
  destination_cidr_block = "0.0.0.0/0"
}

# =========================================
# Subnet
# =========================================

# Public (the ALB lives here)
resource "aws_subnet" "sample_public_a" {
  availability_zone = "${data.aws_region.current.name}a"
  cidr_block        = "10.0.1.0/24"
  vpc_id            = aws_vpc.sample.id

  tags = {
    Name                     = "${var.application_name}-subnet-pub-a"
    "kubernetes.io/role/elb" = ""
  }
}

resource "aws_subnet" "sample_public_c" {
  availability_zone = "${data.aws_region.current.name}c"
  cidr_block        = "10.0.2.0/24"
  vpc_id            = aws_vpc.sample.id

  tags = {
    Name                     = "${var.application_name}-subnet-pub-c"
    "kubernetes.io/role/elb" = ""
  }
}

# Private (EKS nodes and Fargate pods live here)
resource "aws_subnet" "sample_private_a" {
  availability_zone = "${data.aws_region.current.name}a"
  cidr_block        = "10.0.128.0/24"
  vpc_id            = aws_vpc.sample.id

  tags = {
    Name = "${var.application_name}-subnet-pri-a"
    # The cluster tag must match the EKS cluster name below
    "kubernetes.io/cluster/${var.application_name}_eks" = "shared"
    "kubernetes.io/role/internal-elb"                    = ""
  }
}

resource "aws_subnet" "sample_private_c" {
  availability_zone = "${data.aws_region.current.name}c"
  cidr_block        = "10.0.129.0/24"
  vpc_id            = aws_vpc.sample.id

  tags = {
    Name                                                 = "${var.application_name}-subnet-pri-c"
    "kubernetes.io/cluster/${var.application_name}_eks" = "shared"
    "kubernetes.io/role/internal-elb"                    = ""
  }
}
```

IAM

Here I create a single IAM role with every permission attached. Split it up as appropriate for your use case.

```hcl
# =========================================
# IAM Role
# =========================================

# One role shared by the EKS control plane, Fargate pods and EC2 nodes
resource "aws_iam_role" "sample_eks" {
  name = "sample"

  assume_role_policy = <<POLICY
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": ["eks.amazonaws.com", "eks-fargate-pods.amazonaws.com", "ec2.amazonaws.com"]
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
POLICY
}

# =========================================
# IAM Role Policy Attachment
# =========================================

# EKS control plane
resource "aws_iam_role_policy_attachment" "cluster-AmazonEKSClusterPolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"
  role       = aws_iam_role.sample_eks.name
}

resource "aws_iam_role_policy_attachment" "cluster-AmazonEKSServicePolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSServicePolicy"
  role       = aws_iam_role.sample_eks.name
}

# Fargate pod execution
resource "aws_iam_role_policy_attachment" "example-AmazonEKSFargatePodExecutionRolePolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSFargatePodExecutionRolePolicy"
  role       = aws_iam_role.sample_eks.name
}

# EC2 worker nodes (worker node, CNI, and ECR read-only policies)
resource "aws_iam_role_policy_attachment" "cluster-AmazonEKSClusterPolicy1" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"
  role       = aws_iam_role.sample_eks.name
}

resource "aws_iam_role_policy_attachment" "cluster-AmazonEKSClusterPolicy2" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"
  role       = aws_iam_role.sample_eks.name
}

resource "aws_iam_role_policy_attachment" "cluster-AmazonEKSClusterPolicy3" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"
  role       = aws_iam_role.sample_eks.name
}
```
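If you do split the role as suggested at the start of this section, one possible layout is a cluster role trusted only by `eks.amazonaws.com` and a separate pod execution role trusted only by `eks-fargate-pods.amazonaws.com`, each with just the managed policy it needs. This is a minimal sketch, not a drop-in replacement. The resource names `sample_cluster` and `sample_fargate_pod` and the role names are hypothetical, and a node role for `ec2.amazonaws.com` (with the worker node, CNI and ECR read-only policies) would be split out the same way.

```hcl
# Hypothetical split of the all-in-one role (names are illustrative only)

# Assumed only by the EKS control plane
resource "aws_iam_role" "sample_cluster" {
  name = "sample-cluster"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Principal = { Service = "eks.amazonaws.com" }
      Action    = "sts:AssumeRole"
    }]
  })
}

resource "aws_iam_role_policy_attachment" "sample_cluster_policy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"
  role       = aws_iam_role.sample_cluster.name
}

# Assumed only by Fargate, e.g. to pull container images from ECR for pods
resource "aws_iam_role" "sample_fargate_pod" {
  name = "sample-fargate-pod"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Principal = { Service = "eks-fargate-pods.amazonaws.com" }
      Action    = "sts:AssumeRole"
    }]
  })
}

resource "aws_iam_role_policy_attachment" "sample_fargate_pod_policy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSFargatePodExecutionRolePolicy"
  role       = aws_iam_role.sample_fargate_pod.name
}
```

With this split you would point `role_arn` of `aws_eks_cluster` at the cluster role and `pod_execution_role_arn` of each Fargate profile at the pod execution role.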

ALB

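The ALB accepts connections only from specific IPs.

In the sample below, the ingress rule lists the IPs of the author's company offices and VPN. Setting it to 0.0.0.0/0 would let anyone reach the ALB, so replace the list with the IPs you actually connect from.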
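To make swapping in your own IPs a one-line change, one option is to pull the list out into a variable. This is a minimal sketch: the variable name `allowed_cidr_blocks` is hypothetical, and `203.0.113.10/32` is a placeholder from the documentation address range, not a real source IP.

```hcl
# Hypothetical variable: source IPs allowed to reach the ALB
variable "allowed_cidr_blocks" {
  type        = list(string)
  description = "CIDR blocks allowed to reach the ALB (e.g. your global IP as /32)"
  default     = ["203.0.113.10/32"] # documentation-range placeholder; replace it
}

# The ingress block of the ALB security group would then read:
#
#   ingress {
#     from_port   = 0
#     to_port     = 0
#     protocol    = "-1"
#     cidr_blocks = var.allowed_cidr_blocks
#   }
```

The actual values can then live in `terraform.tfvars` per environment instead of in the security group definition.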

This setup uses HTTPS, with TLS terminated at the ALB. Register a certificate in AWS Certificate Manager (ACM) and fill in its ARN.

I don't manage the certificate with Terraform here.
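If the certificate already exists in ACM, a data source can look up its ARN instead of pasting it in by hand, while Terraform still does not manage the certificate itself. A hedged sketch: `example.com` is a placeholder for your own domain, and the certificate has to be in the same region as the ALB.

```hcl
# Look up an already-issued ACM certificate by domain (placeholder domain)
data "aws_acm_certificate" "sample" {
  domain      = "example.com"
  statuses    = ["ISSUED"]
  most_recent = true
}

# The HTTPS listener would then use:
#   certificate_arn = data.aws_acm_certificate.sample.arn
```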

```hcl
# =========================================
# ALB
# =========================================

resource "aws_lb" "sample" {
  name                       = var.application_name
  load_balancer_type         = "application"
  internal                   = false
  idle_timeout               = 60
  enable_deletion_protection = true # must be set to false before `terraform destroy`

  subnets = [
    aws_subnet.sample_public_a.id,
    aws_subnet.sample_public_c.id,
  ]

  security_groups = [
    aws_security_group.sample_lb.id,
  ]
}

# =========================================
# ALB Security Group
# =========================================

resource "aws_security_group" "sample_lb" {
  name   = "${var.application_name}_lb"
  vpc_id = aws_vpc.sample.id

  # Reachable only from inside the company (replace with your own IPs)
  ingress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["39.110.229.9/32", "39.110.229.13/32", "106.133.179.141/32"]
    description = "optim-tokyo, optim-vpn, optim-kobe"
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "${var.application_name}_lb"
  }
}

# =========================================
# ALB Listener
# =========================================

# HTTP (80): redirect to HTTPS
resource "aws_lb_listener" "sample_eks_a" {
  load_balancer_arn = aws_lb.sample.arn
  port              = "80"
  protocol          = "HTTP"

  default_action {
    type = "redirect"

    redirect {
      port        = "443"
      protocol    = "HTTPS"
      status_code = "HTTP_301"
    }
  }
}

# HTTPS (443): TLS terminates here, then traffic is forwarded to the target group
resource "aws_lb_listener" "sample_eks_b" {
  load_balancer_arn = aws_lb.sample.arn
  port              = "443"
  protocol          = "HTTPS"
  # Replace with the ARN of your own ACM certificate
  certificate_arn = "arn:aws:acm:ap-northeast-1:855131673743:certificate/4fef69e3-9135-4a29-9248-177b3a7ab9d0"
  ssl_policy      = "ELBSecurityPolicy-2016-08"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.sample_https.arn
  }
}

# =========================================
# ALB Target Group
# =========================================

# Forwards to port 30080 (NodePort) on the EC2 nodes registered through the node group's Auto Scaling group
resource "aws_lb_target_group" "sample_https" {
  name                 = "${var.application_name}-https"
  port                 = 30080
  protocol             = "HTTP"
  vpc_id               = aws_vpc.sample.id
  deregistration_delay = 10
}
```

EKS

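Now for the main topic: EKS.

The comments say that only Envoy is placed on EC2, but which pods run where is actually decided by labels: a Fargate profile picks up pods whose labels match its selector, and a pod can be pinned to the EC2 nodes with a nodeSelector on the node label engine=ec2. This configuration only prepares the places for the pods to run.

I'm quite unsure whether this part works correctly, so please let me know if you spot any mistakes.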
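To make the label mechanism concrete, here is a sketch of the Envoy side. It assumes the Terraform kubernetes provider (hashicorp/kubernetes) is already configured against this cluster; plain YAML manifests applied with kubectl would work just as well. A hypothetical Envoy Deployment is pinned to the EC2 node group through `node_selector`, and a NodePort Service exposes it on port 30080, the port the ALB target group forwards to. The image tag and container port 10000 are assumptions; match them to your own Envoy configuration.

```hcl
# Hypothetical Envoy Deployment pinned to the EC2 managed node group
resource "kubernetes_deployment" "envoy" {
  metadata {
    name      = "envoy"
    namespace = "default"
  }

  spec {
    replicas = 2

    selector {
      match_labels = {
        app = "envoy"
      }
    }

    template {
      metadata {
        labels = {
          app = "envoy" # no engine = "fargate" label, so the Fargate profile ignores these pods
        }
      }

      spec {
        # Matches the node label set by aws_eks_node_group.sample (labels = { engine = "ec2" })
        node_selector = {
          engine = "ec2"
        }

        container {
          name  = "envoy"
          image = "envoyproxy/envoy:v1.28-latest" # assumed tag

          port {
            container_port = 10000 # assumed Envoy listener port
          }
        }
      }
    }
  }
}

# Opens port 30080 on the nodes, which the ALB target group forwards to
resource "kubernetes_service" "envoy" {
  metadata {
    name      = "envoy"
    namespace = "default"
  }

  spec {
    type = "NodePort"

    selector = {
      app = "envoy"
    }

    port {
      port        = 80
      target_port = 10000
      node_port   = 30080
    }
  }
}
```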

```hcl
# =========================================
# EKS Cluster
# =========================================

resource "aws_eks_cluster" "sample" {
  name     = "${var.application_name}_eks"
  role_arn = aws_iam_role.sample_eks.arn

  vpc_config {
    security_group_ids = [aws_security_group.sample_eks.id]
    subnet_ids         = [aws_subnet.sample_private_a.id, aws_subnet.sample_private_c.id]
  }

  enabled_cluster_log_types = ["api", "audit", "authenticator", "controllerManager", "scheduler"]

  depends_on = [
    aws_iam_role_policy_attachment.cluster-AmazonEKSClusterPolicy,
    aws_iam_role_policy_attachment.cluster-AmazonEKSServicePolicy,
    # Create the log group first so that its retention setting applies
    aws_cloudwatch_log_group.sample_eks,
  ]
}

# Logger: the name must be /aws/eks/<cluster name>/cluster
resource "aws_cloudwatch_log_group" "sample_eks" {
  name              = "/aws/eks/${var.application_name}_eks/cluster"
  retention_in_days = 5
}

# =========================================
# EKS Security Group
# =========================================

resource "aws_security_group" "sample_eks" {
  name        = "${var.application_name}_eks"
  description = "Cluster communication with worker nodes"
  vpc_id      = aws_vpc.sample.id

  # Wide open as written; narrow this down as needed
  ingress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "${var.application_name}_eks"
  }
}

# =========================================
# EKS Node Group
# =========================================

# Only Envoy Proxy, the entry point into EKS, is deployed on the EC2 nodes,
# which have IPs the ALB can reach
resource "aws_eks_node_group" "sample" {
  cluster_name    = aws_eks_cluster.sample.name
  node_group_name = "${var.application_name}_node_group"
  node_role_arn   = aws_iam_role.sample_eks.arn
  subnet_ids      = [aws_subnet.sample_private_a.id, aws_subnet.sample_private_c.id]

  scaling_config {
    desired_size = 2
    max_size     = 2
    min_size     = 1
  }

  # Kubernetes node label; pods target these nodes with nodeSelector engine=ec2
  labels = {
    engine = "ec2"
  }

  # Keep the worker node policies attached until the node group has been deleted
  depends_on = [
    aws_iam_role_policy_attachment.cluster-AmazonEKSClusterPolicy1,
    aws_iam_role_policy_attachment.cluster-AmazonEKSClusterPolicy2,
    aws_iam_role_policy_attachment.cluster-AmazonEKSClusterPolicy3,
  ]
}

# =========================================
# EKS Node Group AutoScaling <=> ALB Target Group
# =========================================

resource "aws_autoscaling_attachment" "asg_attachment_bar" {
  autoscaling_group_name = aws_eks_node_group.sample.resources[0].autoscaling_groups[0].name
  # Newer AWS provider versions deprecate this argument in favor of lb_target_group_arn
  alb_target_group_arn = aws_lb_target_group.sample_https.arn
}

# =========================================
# EKS Fargate Profile
# =========================================

# Applications other than Envoy Proxy are launched on Fargate
resource "aws_eks_fargate_profile" "sample" {
  cluster_name           = aws_eks_cluster.sample.name
  fargate_profile_name   = "sample_fargate"
  pod_execution_role_arn = aws_iam_role.sample_eks.arn
  subnet_ids             = [aws_subnet.sample_private_a.id, aws_subnet.sample_private_c.id]

  # Pods in "default" that carry the label engine=fargate run on Fargate
  selector {
    namespace = "default"

    labels = {
      engine = "fargate"
    }
  }

  depends_on = [aws_iam_role_policy_attachment.example-AmazonEKSFargatePodExecutionRolePolicy]
}

resource "aws_eks_fargate_profile" "sample_kubesystem" {
  cluster_name           = aws_eks_cluster.sample.name
  fargate_profile_name   = "sample_fargate_kube_system"
  pod_execution_role_arn = aws_iam_role.sample_eks.arn
  subnet_ids             = [aws_subnet.sample_private_a.id, aws_subnet.sample_private_c.id]

  selector {
    namespace = "kube-system"
  }

  # EKS processes Fargate profile creation one at a time, so wait for the first profile
  depends_on = [aws_eks_fargate_profile.sample]
}
```
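One thing that may be missing on the ALB to EC2 path: managed node groups created without a launch template use the cluster security group that EKS creates, and by default that group accepts traffic only from itself, not from the ALB's security group. The sketch below opens NodePort 30080 from the ALB. It assumes that default setup. The resource name `alb_to_nodeport` is hypothetical, and the ALB security group is written as `aws_security_group.sample_lb`, so adjust that reference to whatever name your ALB security group has.

```hcl
# Allow the ALB to reach the Envoy NodePort on the managed node group's instances
resource "aws_security_group_rule" "alb_to_nodeport" {
  type        = "ingress"
  description = "ALB to Envoy NodePort"
  from_port   = 30080
  to_port     = 30080
  protocol    = "tcp"

  # Cluster security group created by EKS (used by managed nodes without a launch template)
  security_group_id = aws_eks_cluster.sample.vpc_config[0].cluster_security_group_id

  # ALB security group; rename to match your configuration
  source_security_group_id = aws_security_group.sample_lb.id
}
```

Because the target group's health check uses the traffic port by default, this rule would also cover health checks.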

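Closing thoughts

I pasted this together somewhat roughly, but I'm leaving it here as a memo for myself.

If you give a pod the label engine=fargate (in the default namespace), a Fargate node is launched for it automatically, but its IP changes every time. So I implement it this way: the node running Envoy, whose IP is fixed, serves as the entry point and distributes traffic to the Fargate pods.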
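As an illustration of the Fargate side, a Deployment in the default namespace whose pod template carries `engine = "fargate"` matches the selector of `aws_eks_fargate_profile.sample`, so each of its pods runs on Fargate. A ClusterIP Service in front of it gives Envoy a stable in-cluster name (`sample-app.default.svc.cluster.local`) to route to, even as the pod IPs change. This assumes the Terraform kubernetes provider is configured for the cluster, and the app name `sample-app` and the nginx image are hypothetical stand-ins for your application.

```hcl
# Hypothetical application that should run on Fargate
resource "kubernetes_deployment" "sample_app" {
  metadata {
    name      = "sample-app"
    namespace = "default"
  }

  spec {
    replicas = 2

    selector {
      match_labels = {
        app = "sample-app"
      }
    }

    template {
      metadata {
        labels = {
          app    = "sample-app"
          engine = "fargate" # matches the Fargate profile selector, so these pods run on Fargate
        }
      }

      spec {
        container {
          name  = "app"
          image = "nginx:1.25" # placeholder image

          port {
            container_port = 80
          }
        }
      }
    }
  }
}

# Stable name for Envoy to forward to; the pod IPs behind it may change
resource "kubernetes_service" "sample_app" {
  metadata {
    name      = "sample-app"
    namespace = "default"
  }

  spec {
    type = "ClusterIP"

    selector = {
      app = "sample-app"
    }

    port {
      port        = 80
      target_port = 80
    }
  }
}
```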