Before we start

Brief introduction of rclone

Rclone ("rsync for cloud storage") is a command line program to sync files and directories to and from different cloud storage providers.

There is a long list of supported cloud storage providers, which you can check on the rclone home page.

Why we have this post

Oracle has published a white paper that describes how to use rclone to transfer data from other cloud providers or a local file system to Oracle Cloud Infrastructure Object Storage. The cloud providers covered in that white paper include:

- Oracle Cloud Infrastructure Object Storage Classic

- Amazon S3

- Microsoft Azure Blob Storage

Google Cloud Storage is not described in detail, but it is mentioned in the note.

Note: Although not addressed specifically, the same methodology can be used to transfer data from cloud providers that are not discussed here, including Google Cloud Storage, IBM Cloud Object Storage, Ceph, DigitalOcean Spaces, Rackspace Cloud Files, or any object storage that provides support for OpenStack Swift.

As a supplement, this post shows how to use rclone to transfer data from Google Cloud Storage to Oracle Cloud Infrastructure Object Storage.

This post assumes you already have the following:

- A Google Cloud Platform project with a storage bucket as the source

- A Google Cloud Service Account and the corresponding key file

- An Oracle Cloud Infrastructure Object Storage bucket as the destination

- An Oracle Cloud Infrastructure Compute Instance as the intermediate server

System preparation

Install rclone

The first step is to install rclone.

On Oracle Linux, installation is simple. Running the following command should be enough.

sudo yum install -y rclone

If you are not using Oracle Linux, or you don't want a system-wide installation, check the white paper from Oracle or the [installation page of rclone](https://rclone.org/install/).

Configure the connection to Oracle Cloud Infrastructure Object Storage

This part is described clearly in the Configure Object Storage section of the white paper from Oracle.

One point deserves attention. At the time of writing, rclone does not yet support the native Oracle Cloud Infrastructure Object Storage API, so we need to use the Amazon S3 Compatibility API to make the connection. The information needed to build the connection is listed below.

Secret key and access key

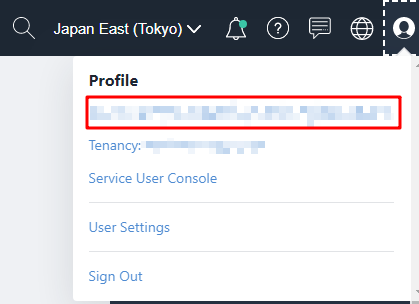

Click the [Profile] icon at the top right of the OCI web console page, then click your [username] under Profile.

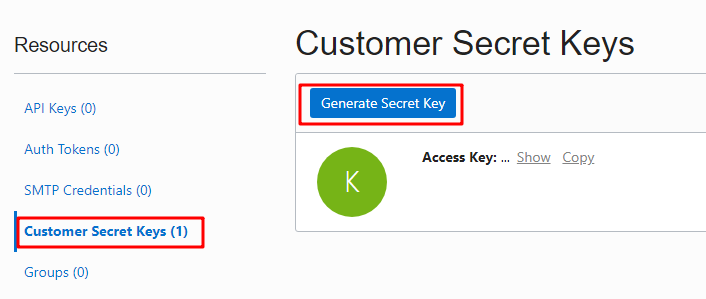

Click [Customer Secret Keys] and generate a Secret Key and Access Key pair. Save them carefully, because we will use them to configure the connection later.

Namespace

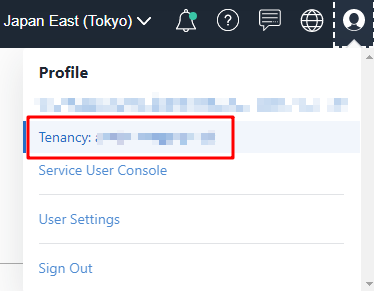

Click the [Profile] icon at the top right of the OCI web console page, then click [Tenancy] under Profile.

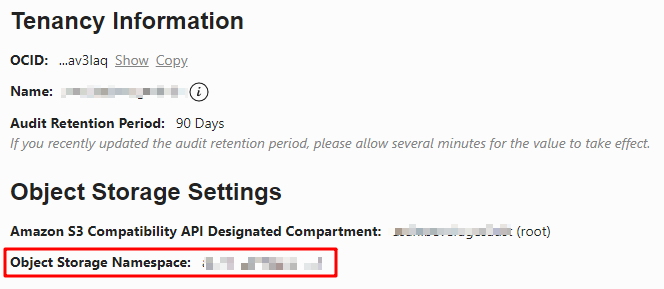

You will find the [Object Storage Namespace] on the tenancy page.

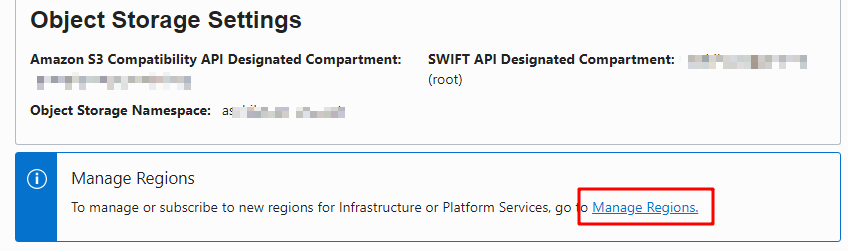

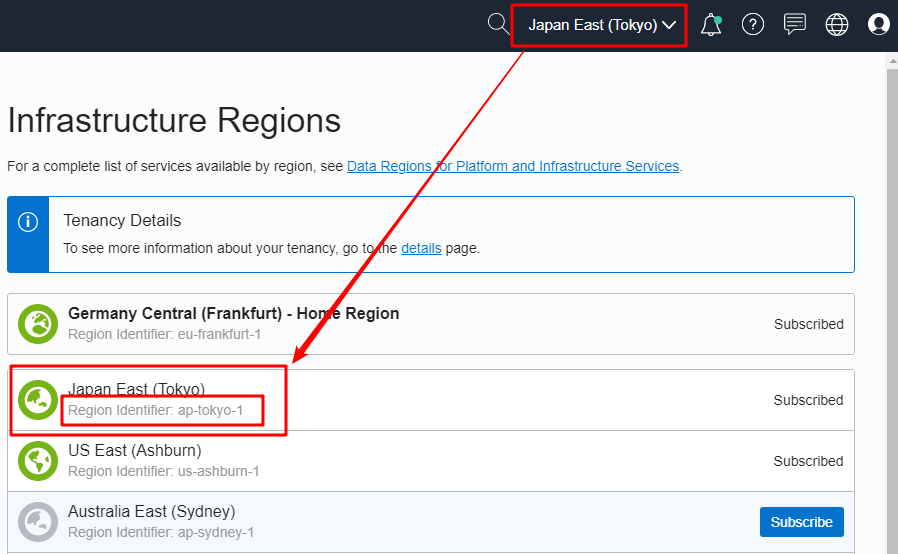

Region identifier

Note that you may be subscribed to Oracle Cloud Infrastructure services in multiple regions. The region identifier you need here is that of your current region, not your home region. You can find your current region on the [Manage Regions] page: click the [Manage Regions] link on the Tenancy page you just visited.

On this page, you can see that my home region is Germany Central (Frankfurt), with the region identifier eu-frankfurt-1, and my current region is Japan East (Tokyo), with the region identifier ap-tokyo-1.

API endpoint

You now have the namespace and the region identifier. Use the following form to construct the API endpoint.

https://<your_namespace>.compat.objectstorage.<your_region_identifier>.oraclecloud.com
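As a small illustration, the endpoint can be assembled in the shell from those two values. This is only a sketch: the namespace below is a made-up placeholder (an assumption, not a real tenancy), and the region is the ap-tokyo-1 example from this post.

```shell
# Hypothetical namespace; replace it with the Object Storage Namespace
# shown on your own tenancy page.
namespace="mytenancynamespace"
# Region identifier of the current region (ap-tokyo-1 in this post).
region="ap-tokyo-1"

# Assemble the S3 Compatibility API endpoint from the two values.
endpoint="https://${namespace}.compat.objectstorage.${region}.oraclecloud.com"
echo "$endpoint"
```

Keeping the two values in variables makes it harder to mistype the long host name when it is pasted into the rclone prompt later.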

Make an rclone configuration for Oracle Cloud Infrastructure Object Storage

In the white paper, the configuration is set through environment variables. In this post, we will use the rclone config command to write a configuration file instead, because we want the configuration to be persistent and we will use the same command to write the configuration for GCS as well.

Only the key inputs are listed below. For the other prompts, pressing ENTER should be fine.

$ rclone config # start the configuration

...

e/n/d/r/c/s/q> n # config a new remote

name> ocios # input a name for this configuration, ocios in this case

Type of storage to configure.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / 1Fichier

\ "fichier"

...

4 / Amazon S3 Compliant Storage Provider (AWS, Alibaba, Ceph, Digital Ocean, Dreamhost, IBM COS, Minio, etc)

\ "s3"

...

34 / premiumize.me

\ "premiumizeme"

Storage> 4 # 4 -> we will config an Amazon S3 Compliant Storage

** See help for s3 backend at: https://rclone.org/s3/ **

Choose your S3 provider.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Amazon Web Services (AWS) S3

\ "AWS"

...

10 / Any other S3 compatible provider

\ "Other"

provider> 10 # 10 -> S3 compatible provider

Get AWS credentials from runtime (environment variables or EC2/ECS meta data if no env vars).

Only applies if access_key_id and secret_access_key is blank.

Enter a boolean value (true or false). Press Enter for the default ("false").

...

env_auth>

AWS Access Key ID.

Leave blank for anonymous access or runtime credentials.

Enter a string value. Press Enter for the default ("").

access_key_id> <your_access_key> # input your access key and enter

AWS Secret Access Key (password)

Leave blank for anonymous access or runtime credentials.

Enter a string value. Press Enter for the default ("").

secret_access_key> <your_secret_access_key> # input your secret access key and enter

Region to connect to.

Leave blank if you are using an S3 clone and you don't have a region.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Use this if unsure. Will use v4 signatures and an empty region.

\ ""

2 / Use this only if v4 signatures don't work, eg pre Jewel/v10 CEPH.

\ "other-v2-signature"

region> <your_current_region_identifier> # input your current region identifier, ap-tokyo-1 in my case

Endpoint for S3 API.

Required when using an S3 clone.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

endpoint> <your_API_endpoint> # input the API endpoint here

Location constraint - must be set to match the Region.

...

Edit advanced config? (y/n)

y) Yes

n) No

y/n> n # we don't need the advanced config

Remote config

--------------------

[ocios]

type = s3

provider = Other

access_key_id =

secret_access_key =

region = ap-tokyo-1

endpoint =

--------------------

y) Yes this is OK

e) Edit this remote

d) Delete this remote

y/e/d> y # save this config

Current remotes:

Name Type

==== ====

ocios s3 # here it is

gcs google cloud storage
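For comparison, here is a rough sketch of the environment-variable approach mentioned earlier. It relies on rclone's convention of reading remote settings from variables named RCLONE_CONFIG_<REMOTE>_<KEY>. The values mirror the ocios remote configured above, with the same placeholders left unfilled; this is an illustration, not the exact text of the white paper.

```shell
# Define the "ocios" remote through environment variables instead of rclone.conf.
# The remote name is upper-cased in the variable names.
export RCLONE_CONFIG_OCIOS_TYPE="s3"
export RCLONE_CONFIG_OCIOS_PROVIDER="Other"
# Placeholders: substitute your own Customer Secret Key pair.
export RCLONE_CONFIG_OCIOS_ACCESS_KEY_ID="<your_access_key>"
export RCLONE_CONFIG_OCIOS_SECRET_ACCESS_KEY="<your_secret_access_key>"
# Current region identifier (ap-tokyo-1 in this post).
export RCLONE_CONFIG_OCIOS_REGION="ap-tokyo-1"
# Placeholder: substitute the API endpoint constructed earlier.
export RCLONE_CONFIG_OCIOS_ENDPOINT="<your_API_endpoint>"
```

These settings last only for the current shell session, which is why this post writes them to the configuration file instead.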

Verify the connection

List all the remote configurations

rclone listremotes

List all the buckets of the OCI Object Storage remote we just configured. Don't forget the colon (:) at the end.

rclone lsd ocios:

Configure the connection to Google Cloud Storage

Service Account & key file

If you don't have a service account yet, refer to Google's documentation on creating a service account, and generate the key file accordingly.

Once you have the service account and its key file, store the key file somewhere safe.
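One simple way to keep the key file safe on the intermediate server is to restrict its permissions to the owner. The helper below is a minimal sketch; the function name and the example path are hypothetical, not part of rclone or of the original setup.

```shell
# Restrict a service account key file so that only its owner can read or write it.
# Argument: path to the key file.
secure_key_file() {
  key_file="$1"
  # Fail early if the path does not point to a regular file.
  [ -f "$key_file" ] || { echo "key file not found: $key_file" >&2; return 1; }
  chmod 600 "$key_file"
}

# Example (hypothetical path):
#   secure_key_file "$HOME/keys/gcs-sa-key.json"
```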

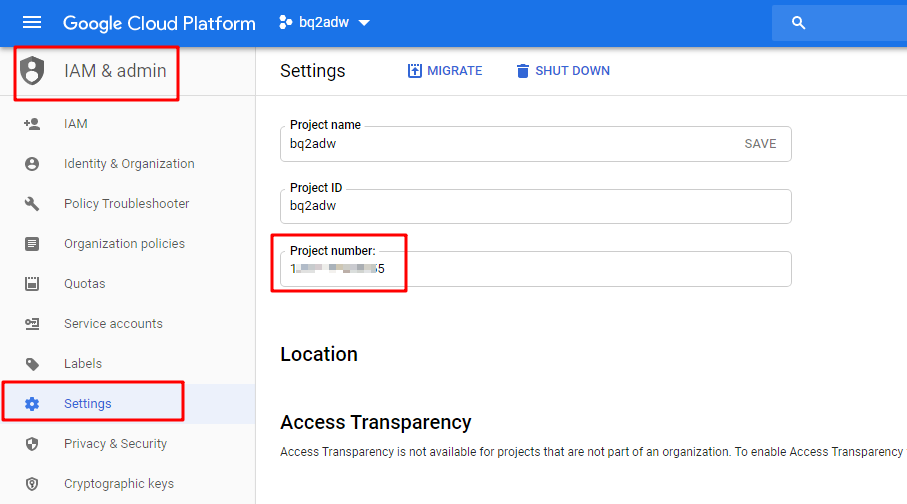

Project number

In GCP, you can have multiple projects, but a single rclone configuration can only interact with one project, because the service account is scoped to a project. We therefore need the project number to list the buckets in GCS.

Visit the "IAM & Admin" page in Google Cloud Platform web console, then click [Settings].

On the settings page, you will find the project number.

Make an rclone configuration for Google Cloud Storage

[opc@cibq2adw rclone]$ rclone config

...

No remotes found - make a new one

n) New remote

s) Set configuration password

q) Quit config

n/s/q> n # config a new remote

name> gcs # input a name for this configuration, gcs in this case

Type of storage to configure.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / 1Fichier

\ "fichier"

...

12 / Google Cloud Storage (this is not Google Drive)

\ "google cloud storage"

...

34 / premiumize.me

\ "premiumizeme"

Storage> 12 # 12 -> Google Cloud Storage

...

Project number.

Optional - needed only for list/create/delete buckets - see your developer console.

Enter a string value. Press Enter for the default ("").

project_number> <your_project_number> # input your project number

Service Account Credentials JSON file path

Leave blank normally.

Needed only if you want use SA instead of interactive login.

Enter a string value. Press Enter for the default ("").

service_account_file> <your_service_account_key_file_path> # input the key file path

Access Control List for new objects.

Enter a string value. Press Enter for the default ("")

...

Location for the newly created buckets.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Empty for default location (US).

\ ""

2 / Multi-regional location for Asia.

\ "asia"

3 / Multi-regional location for Europe.

\ "eu"

4 / Multi-regional location for United States.

\ "us"

...

20 / California.

\ "us-west2"

location> 4 # input the number of your location.

# 4 (Multi-regional location for United States) in this case

The storage class to use when storing objects in Google Cloud Storage.

...

Remote config

--------------------

[gcs]

...

Current remotes:

Name Type

==== ====

gcs google cloud storage

Verify the connection

List all the remote configurations

rclone listremotes

List all the buckets of the GCP project you specified. Don't forget the colon (:) at the end.

rclone lsd gcs:

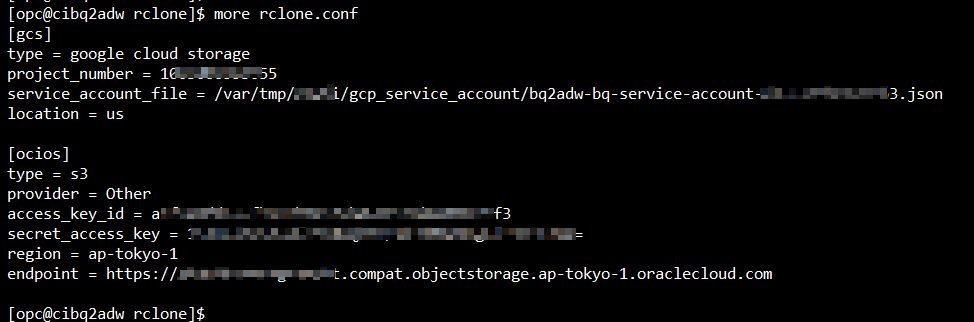
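If you prefer to script this check, a small helper can test whether a given remote appears in the output of rclone listremotes, which prints one remote per line with a trailing colon. The function name has_remote is hypothetical, and the sketch reads the listing from standard input so that it can be tried without rclone.

```shell
# Succeed if the remote named $1 appears in the listing read from stdin.
# The listing is expected in `rclone listremotes` format: one "name:" per line.
has_remote() {
  # -x matches whole lines only, -F treats the name as a fixed string.
  grep -qxF -- "$1:"
}

# Usage (requires rclone to be installed and configured):
#   rclone listremotes | has_remote ocios && echo "ocios is configured"
#   rclone listremotes | has_remote gcs && echo "gcs is configured"
```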

Check the rclone config file

By default, the rclone configuration file is stored in the /home/<user_name>/.config/rclone directory.

You can review the configuration we just created there.

more /home/<user_name>/.config/rclone/rclone.conf

Transfer the data

The connections to Google Cloud Storage and Oracle Cloud Infrastructure Object Storage are now configured. A single command is enough to transfer the data from GCS to OCI Object Storage.

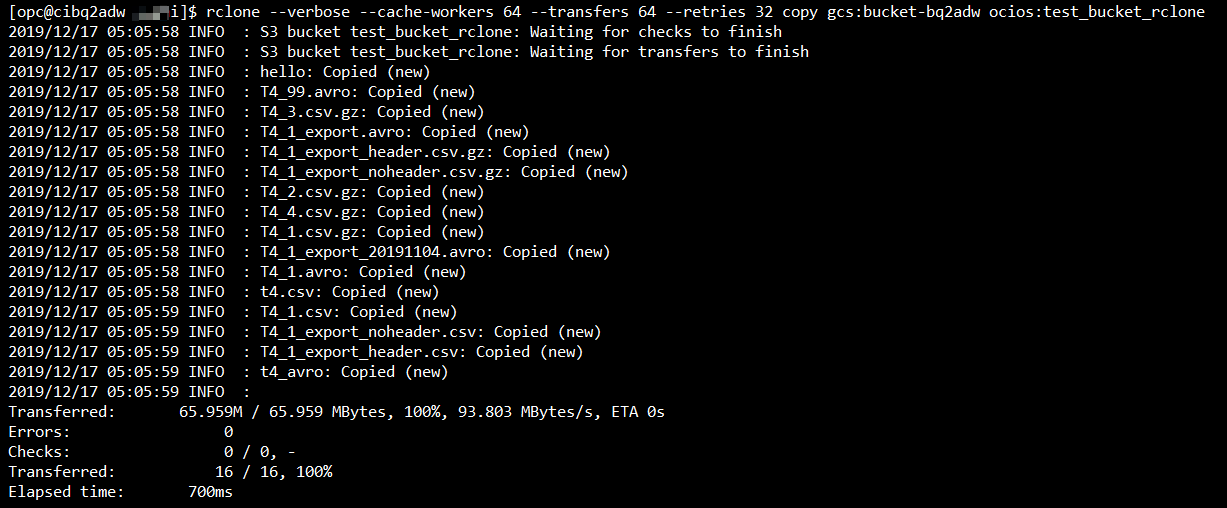

rclone --verbose --cache-workers 64 --transfers 64 --retries 32 copy gcs:bucket-bq2adw ocios:test_bucket_rclone

# +-- SOURCE -----+ +--- DESTINATION ------+

For more details on the options of the rclone command, please check the official documentation.
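For a more cautious run, the copy can be wrapped with a preview before it and a comparison after it. The sketch below uses rclone's --dry-run flag and its check subcommand; the function name copy_and_verify is hypothetical, and the transfer options simply reuse the values from the command above.

```shell
# Copy one bucket to another, previewing first and verifying afterwards.
# Arguments: <source remote:bucket> <destination remote:bucket>
copy_and_verify() {
  src="$1"
  dst="$2"
  # Preview: report what would be copied without changing anything.
  rclone --dry-run copy "$src" "$dst" || return 1
  # Real transfer, with the parallelism and retry settings used in this post.
  rclone --verbose --transfers 64 --retries 32 copy "$src" "$dst" || return 1
  # Compare source and destination (sizes, and hashes where both sides provide them).
  rclone check "$src" "$dst"
}

# Usage with the buckets from this post:
#   copy_and_verify gcs:bucket-bq2adw ocios:test_bucket_rclone
```

The function stops at the first failing step, so the comparison only runs after a copy that rclone reported as successful.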

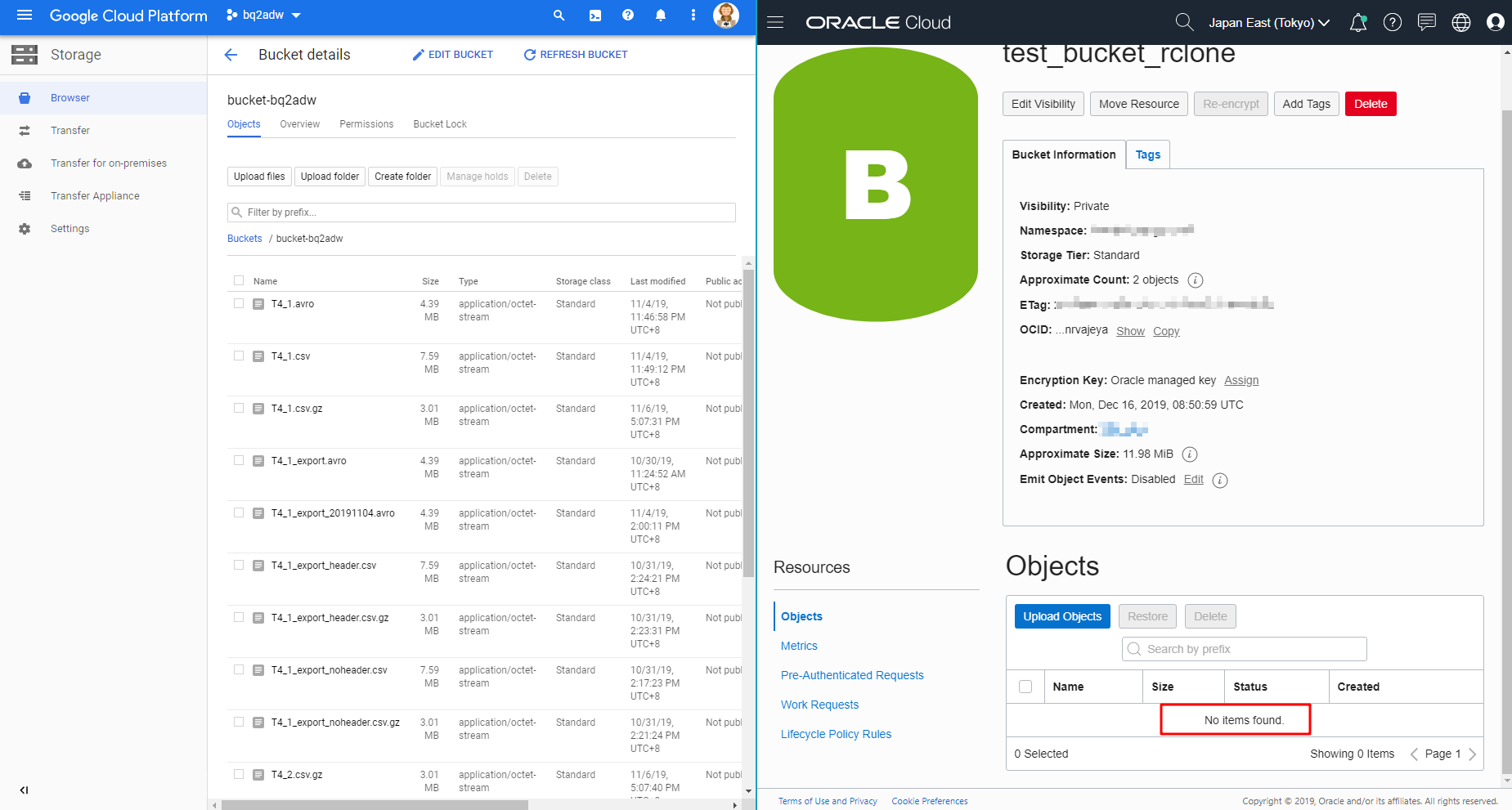

Before transferring

Perform the rclone copy command

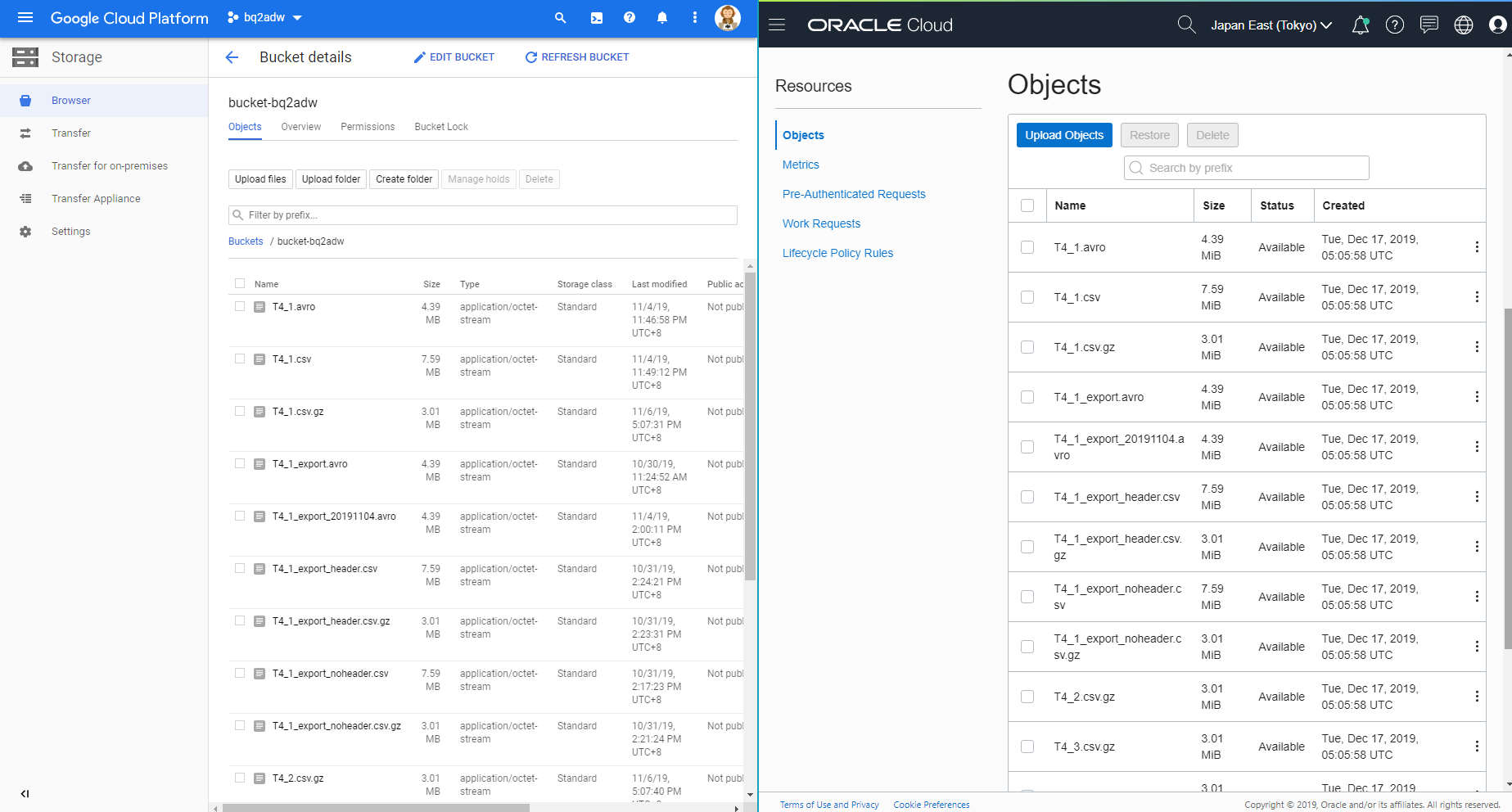

The data transfer is complete!

As you can see, everything in the GCS bucket bucket-bq2adw has been transferred to OCI Object Storage bucket test_bucket_rclone.