# 1.Introduction

This article is not about speed tuning. It records what I found while investigating how to offload models, or individual OPs, that OpenVINO does not support to TensorFlow running on the CPU. If you build the environment exactly as described in Intel's official tutorial, Offloading Sub-Graph Inference to TensorFlow, it will not work.

This article shows how to build TensorFlow with the CPU extended instruction sets enabled and how to run the OPs that OpenVINO does not support on that optimized TensorFlow. Note that OPs offloaded to TensorFlow can be executed only on the CPU.

As an example, I use the MobileNetV3-SSD models published on the official TensorFlow website. The only goal is to verify that the offload process runs without errors, so the accuracy of the inference results is not evaluated. In the MobileNetV3-SSD models, the FusedBatchNormV3 operation is the OP that OpenVINO does not support.

# 2.Environment

- Ubuntu 18.04 x86_64 (Core i7, 8th generation)

- OpenVINO 2019 R3.1

- Model Optimizer 2019.3.0-408-gac8584cb7

- TensorFlow v1.15.0 (self-built with CPU optimizations: SSE4.1, SSE4.2, AVX, AVX2, FMA)

- Bazel 0.26.1

- Python 3.6.8

- MobileNetV3-SSD

# 3.Procedure

```console:Install_OpenVINO
$ cd ~
$ mkdir test
$ sudo apt-get install -y build-essential zip unzip openjdk-8-jdk cmake make git wget \
curl libhdf5-dev libc-ares-dev libeigen3-dev libatlas-base-dev \
libopenblas-dev openmpi-bin libopenmpi-dev
$ sudo pip3 install keras_applications==1.0.8 --no-deps
$ sudo pip3 install keras_preprocessing==1.1.0 --no-deps
$ sudo pip3 install h5py==2.9.0
$ sudo -H pip3 install -U --user six numpy wheel mock
$ sudo pip3 uninstall tensorflow tensorflow-gpu

$ cd ~
$ git clone https://github.com/PINTO0309/OpenVINO-bin.git
$ cd OpenVINO-bin
$ Linux/download_2019R3.1.sh
$ cd l_openvino_toolkit_p_2019.3.376
$ sudo ./install_GUI.sh
# or
$ sudo ./install.sh

$ cd /opt/intel/openvino/install_dependencies
$ sudo -E ./install_openvino_dependencies.sh
$ source /opt/intel/openvino/bin/setupvars.sh
$ nano ~/.bashrc
### Add the following line to the last line
source /opt/intel/openvino/bin/setupvars.sh

$ source ~/.bashrc
$ cd /opt/intel/openvino/deployment_tools/model_optimizer/install_prerequisites
$ sudo ./install_prerequisites.sh

$ cd /opt/intel/openvino/install_dependencies/
$ sudo -E su
$ ./install_NEO_OCL_driver.sh
$ exit #<--- Leave the root shell so that whoami below returns your own user
$ sudo usermod -a -G users "$(whoami)"
$ sudo cp /opt/intel/openvino/inference_engine/external/97-myriad-usbboot.rules /etc/udev/rules.d/
$ sudo udevadm control --reload-rules
$ sudo udevadm trigger
$ sudo ldconfig
```

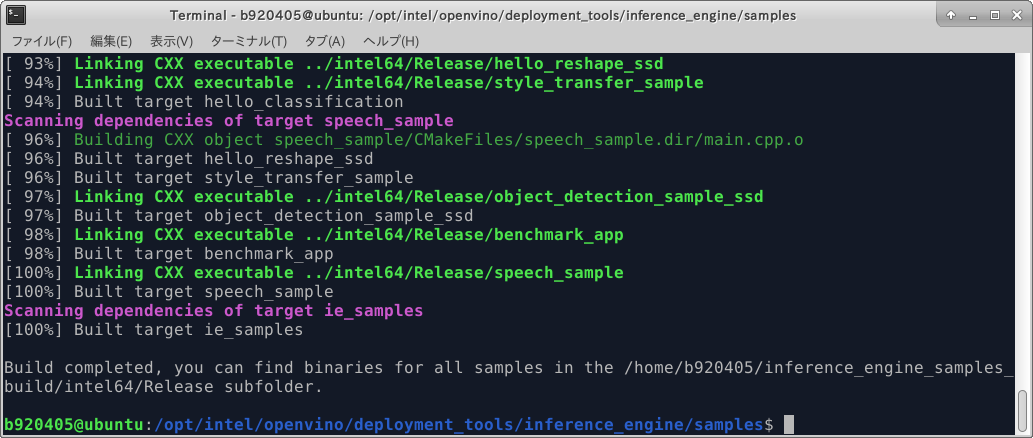

```console:Build_samples_and_convert_models
$ cd /opt/intel/openvino/deployment_tools/inference_engine/samples/

$ sudo ./build_samples.sh

$ cd ~/test

$ sudo cp ~/inference_engine_samples_build/intel64/Release/lib/* .

$ wget http://download.tensorflow.org/models/object_detection/ssd_mobilenet_v3_small_coco_2019_08_14.tar.gz

$ wget http://download.tensorflow.org/models/object_detection/ssd_mobilenet_v3_large_coco_2019_08_14.tar.gz

$ tar -zxvf ssd_mobilenet_v3_small_coco_2019_08_14.tar.gz

$ tar -zxvf ssd_mobilenet_v3_large_coco_2019_08_14.tar.gz

$ cd ssd_mobilenet_v3_small_coco_2019_08_14

$ mkdir FP32

$ sudo python3 /opt/intel/openvino/deployment_tools/model_optimizer/mo_tf.py \
--input_model frozen_inference_graph.pb \
--output_dir FP32 \
--input normalized_input_image_tensor \
--output raw_outputs/class_predictions,raw_outputs/box_encodings \
--data_type FP32 \
--batch 1 \
--tensorflow_subgraph_patterns "FeatureExtractor/MobilenetV3/Conv/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_1/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_1/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_1/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_2/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_2/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_2/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_3/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_3/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_3/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_4/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_4/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_4/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_5/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_5/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_5/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_6/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_6/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_6/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_7/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_7/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_7/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_8/expand/BatchNorm/.*,BoxPredictor_0/BoxEncodingPredictor_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_8/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_8/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_9/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_9/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_9/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_10/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_10/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_10/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/Conv_1/BatchNorm/.*,BoxPredictor_1/BoxEncodingPredictor_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_13_1_Conv2d_2_1x1_256/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_13_2_Conv2d_2_3x3_s2_512_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_13_2_Conv2d_2_3x3_s2_512/BatchNorm/.*,BoxPredictor_2/BoxEncodingPredictor_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_13_1_Conv2d_3_1x1_128/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_13_2_Conv2d_3_3x3_s2_256_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_13_2_Conv2d_3_3x3_s2_256/BatchNorm/.*,BoxPredictor_3/BoxEncodingPredictor_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_13_1_Conv2d_4_1x1_128/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_13_2_Conv2d_4_3x3_s2_256_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_13_2_Conv2d_4_3x3_s2_256/BatchNorm/.*,BoxPredictor_4/BoxEncodingPredictor_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_13_1_Conv2d_5_1x1_64/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_13_2_Conv2d_5_3x3_s2_128_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_13_2_Conv2d_5_3x3_s2_128/BatchNorm/.*,BoxPredictor_5/BoxEncodingPredictor_depthwise/BatchNorm/.*,BoxPredictor_0/ClassPredictor_depthwise/BatchNorm/.*,BoxPredictor_1/ClassPredictor_depthwise/BatchNorm/.*,BoxPredictor_2/ClassPredictor_depthwise/BatchNorm/.*,BoxPredictor_3/ClassPredictor_depthwise/BatchNorm/.*,BoxPredictor_4/ClassPredictor_depthwise/BatchNorm/.*,BoxPredictor_5/ClassPredictor_depthwise/BatchNorm/.*"

$ cd ..

$ cd ssd_mobilenet_v3_large_coco_2019_08_14

$ mkdir FP32

$ sudo python3 /opt/intel/openvino/deployment_tools/model_optimizer/mo_tf.py \
--input_model frozen_inference_graph.pb \
--output_dir FP32 \
--input normalized_input_image_tensor \
--output raw_outputs/class_predictions,raw_outputs/box_encodings \
--data_type FP32 \
--batch 1 \
--tensorflow_subgraph_patterns "FeatureExtractor/MobilenetV3/Conv/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_1/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_1/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_1/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_2/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_2/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_2/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_3/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_3/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_3/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_4/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_4/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_4/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_5/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_5/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_5/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_6/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_6/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_6/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_7/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_7/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_7/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_8/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_8/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_8/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_9/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_9/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_9/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_10/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_10/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_10/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_11/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_11/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_11/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_12/expand/BatchNorm/.*,BoxPredictor_0/BoxEncodingPredictor_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_12/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_12/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_13/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_13/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_13/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_14/expand/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_14/depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/expanded_conv_14/project/BatchNorm/.*,FeatureExtractor/MobilenetV3/Conv_1/BatchNorm/.*,BoxPredictor_1/BoxEncodingPredictor_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_17_1_Conv2d_2_1x1_256/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_17_2_Conv2d_2_3x3_s2_512_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_17_2_Conv2d_2_3x3_s2_512/BatchNorm/.*,BoxPredictor_2/BoxEncodingPredictor_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_17_1_Conv2d_3_1x1_128/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_17_2_Conv2d_3_3x3_s2_256_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_17_2_Conv2d_3_3x3_s2_256/BatchNorm/.*,BoxPredictor_3/BoxEncodingPredictor_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_17_1_Conv2d_4_1x1_128/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_17_2_Conv2d_4_3x3_s2_256_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_17_2_Conv2d_4_3x3_s2_256/BatchNorm/.*,BoxPredictor_4/BoxEncodingPredictor_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_17_1_Conv2d_5_1x1_64/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_17_2_Conv2d_5_3x3_s2_128_depthwise/BatchNorm/.*,FeatureExtractor/MobilenetV3/layer_17_2_Conv2d_5_3x3_s2_128/BatchNorm/.*,BoxPredictor_5/BoxEncodingPredictor_depthwise/BatchNorm/.*,BoxPredictor_0/ClassPredictor_depthwise/BatchNorm/.*,BoxPredictor_1/ClassPredictor_depthwise/BatchNorm/.*,BoxPredictor_2/ClassPredictor_depthwise/BatchNorm/.*,BoxPredictor_3/ClassPredictor_depthwise/BatchNorm/.*,BoxPredictor_4/ClassPredictor_depthwise/BatchNorm/.*,BoxPredictor_5/ClassPredictor_depthwise/BatchNorm/.*"

$ cd ..

```
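The value passed to `--tensorflow_subgraph_patterns` in the two conversions is a comma-separated list with one regular expression per BatchNorm scope. As a minimal sketch, such a list could be generated instead of typed by hand. The helper name `build_subgraph_patterns` and the short node list are hypothetical; in practice the (name, op) pairs would have to be read from `frozen_inference_graph.pb`, which is not shown here.

```python
def build_subgraph_patterns(nodes, op_type="FusedBatchNormV3"):
    """Return a comma-separated pattern string, one 'scope/.*' per matching node.

    nodes: iterable of (node_name, op_type) pairs taken from the frozen graph.
    """
    patterns = []
    for name, op in nodes:
        if op != op_type:
            continue
        # "a/b/BatchNorm/FusedBatchNormV3" -> scope "a/b/BatchNorm"
        scope = name.rsplit("/", 1)[0]
        pattern = scope + "/.*"
        if pattern not in patterns:  # keep graph order, drop duplicates
            patterns.append(pattern)
    return ",".join(patterns)


# Hypothetical excerpt of (name, op) pairs; the real list comes from the .pb file.
example_nodes = [
    ("FeatureExtractor/MobilenetV3/Conv/Conv2D", "Conv2D"),
    ("FeatureExtractor/MobilenetV3/Conv/BatchNorm/FusedBatchNormV3", "FusedBatchNormV3"),
    ("BoxPredictor_0/ClassPredictor_depthwise/BatchNorm/FusedBatchNormV3", "FusedBatchNormV3"),
]

print(build_subgraph_patterns(example_nodes))
```

The printed string can be pasted after `--tensorflow_subgraph_patterns`, inside double quotes so that the shell does not expand `.*`.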

**Model structure of MobileNet V3-SSD before conversion (.pb)**

**Model structure of MobileNet V3-SSD after conversion (.bin / .xml)**

```protobuf:OP_structure_of_TFCustomSubgraphCall

node {
  name: "IE_NHWC_TO_NCHW"
  op: "Const"
  attr {
    key: "dtype"
    value {
      type: DT_INT32
    }
  }
  attr {
    key: "value"
    value {
      tensor {
        dtype: DT_INT32
        tensor_shape {
          dim {
            size: 4
          }
        }
        tensor_content: "\000\000\000\000\003\000\000\000\001\000\000\000\002\000\000\000"
      }
    }
  }
}
node {
  name: "IE_NCHW_TO_NHWC"
  op: "Const"
  attr {
    key: "dtype"
    value {
      type: DT_INT32
    }
  }
  attr {
    key: "value"
    value {
      tensor {
        dtype: DT_INT32
        tensor_shape {
          dim {
            size: 4
          }
        }
        tensor_content: "\000\000\000\000\002\000\000\000\003\000\000\000\001\000\000\000"
      }
    }
  }
}
node {
  name: "FeatureExtractor/MobilenetV3/Conv/Conv2D_port_0_ie_placeholder"
  op: "Placeholder"
  attr {
    key: "dtype"
    value {
      type: DT_FLOAT
    }
  }
  attr {
    key: "shape"
    value {
      shape {
        dim {
          size: 1
        }
        dim {
          size: 16
        }
        dim {
          size: 160
        }
        dim {
          size: 160
        }
      }
    }
  }
}
node {
  name: "FeatureExtractor/MobilenetV3/Conv/Conv2D_port_0_ie_placeholder_transpose"
  op: "Transpose"
  input: "FeatureExtractor/MobilenetV3/Conv/Conv2D_port_0_ie_placeholder"
  input: "IE_NCHW_TO_NHWC"
  attr {
    key: "T"
    value {
      type: DT_FLOAT
    }
  }
  attr {
    key: "Tperm"
    value {
      type: DT_INT32
    }
  }
}
node {
  name: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/beta"
  op: "Const"
  attr {
    key: "dtype"
    value {
      type: DT_FLOAT
    }
  }
  attr {
    key: "value"
    value {
      tensor {
        dtype: DT_FLOAT
        tensor_shape {
          dim {
            size: 16
          }
        }
        tensor_content: "\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000"
      }
    }
  }
}
node {
  name: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/beta/read"
  op: "Identity"
  input: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/beta"
  attr {
    key: "T"
    value {
      type: DT_FLOAT
    }
  }
  attr {
    key: "_class"
    value {
      list {
      }
    }
  }
}
node {
  name: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/gamma"
  op: "Const"
  attr {
    key: "dtype"
    value {
      type: DT_FLOAT
    }
  }
  attr {
    key: "value"
    value {
      tensor {
        dtype: DT_FLOAT
        tensor_shape {
          dim {
            size: 16
          }
        }
        tensor_content: "\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?"
      }
    }
  }
}
node {
  name: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/gamma/read"
  op: "Identity"
  input: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/gamma"
  attr {
    key: "T"
    value {
      type: DT_FLOAT
    }
  }
  attr {
    key: "_class"
    value {
      list {
      }
    }
  }
}
node {
  name: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/moving_mean"
  op: "Const"
  attr {
    key: "dtype"
    value {
      type: DT_FLOAT
    }
  }
  attr {
    key: "value"
    value {
      tensor {
        dtype: DT_FLOAT
        tensor_shape {
          dim {
            size: 16
          }
        }
        tensor_content: "\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000"
      }
    }
  }
}
node {
  name: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/moving_mean/read"
  op: "Identity"
  input: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/moving_mean"
  attr {
    key: "T"
    value {
      type: DT_FLOAT
    }
  }
  attr {
    key: "_class"
    value {
      list {
      }
    }
  }
}
node {
  name: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/moving_variance"
  op: "Const"
  attr {
    key: "dtype"
    value {
      type: DT_FLOAT
    }
  }
  attr {
    key: "value"
    value {
      tensor {
        dtype: DT_FLOAT
        tensor_shape {
          dim {
            size: 16
          }
        }
        tensor_content: "\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?\000\000\200?"
      }
    }
  }
}
node {
  name: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/moving_variance/read"
  op: "Identity"
  input: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/moving_variance"
  attr {
    key: "T"
    value {
      type: DT_FLOAT
    }
  }
  attr {
    key: "_class"
    value {
      list {
      }
    }
  }
}
node {
  name: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/FusedBatchNormV3"
  op: "FusedBatchNormV3"
  input: "FeatureExtractor/MobilenetV3/Conv/Conv2D_port_0_ie_placeholder_transpose"
  input: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/gamma/read"
  input: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/beta/read"
  input: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/moving_mean/read"
  input: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/moving_variance/read"
  attr {
    key: "T"
    value {
      type: DT_FLOAT
    }
  }
  attr {
    key: "U"
    value {
      type: DT_FLOAT
    }
  }
  attr {
    key: "data_format"
    value {
      s: "NHWC"
    }
  }
  attr {
    key: "epsilon"
    value {
      f: 0.0010000000474974513
    }
  }
  attr {
    key: "is_training"
    value {
      b: false
    }
  }
}
node {
  name: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/FusedBatchNormV3_port_0_transpose"
  op: "Transpose"
  input: "FeatureExtractor/MobilenetV3/Conv/BatchNorm/FusedBatchNormV3:0"
  input: "IE_NHWC_TO_NCHW"
  attr {
    key: "T"
    value {
      type: DT_FLOAT
    }
  }
  attr {
    key: "Tperm"
    value {
      type: DT_INT32
    }
  }
}

```
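The `tensor_content` fields in the dump above are raw bytes written with octal escapes, so the two layout constants and the BatchNorm parameters are hard to read at a glance. The sketch below decodes them with Python's `struct` module. It assumes the bytes are little-endian, which holds for a graph produced on x86_64; the helper names `decode_int32` and `decode_float32` are only for illustration.

```python
import struct

# tensor_content values copied from the dump above. Protobuf text format and
# Python bytes literals use the same octal escapes, so they can be pasted as-is.
NHWC_TO_NCHW = b"\000\000\000\000\003\000\000\000\001\000\000\000\002\000\000\000"
NCHW_TO_NHWC = b"\000\000\000\000\002\000\000\000\003\000\000\000\001\000\000\000"
GAMMA_FIRST = b"\000\000\200?"  # first 4 bytes of the BatchNorm gamma tensor


def decode_int32(raw):
    """Decode a DT_INT32 tensor_content (little-endian assumed)."""
    return list(struct.unpack("<%di" % (len(raw) // 4), raw))


def decode_float32(raw):
    """Decode a DT_FLOAT tensor_content (little-endian assumed)."""
    return list(struct.unpack("<%df" % (len(raw) // 4), raw))


print(decode_int32(NHWC_TO_NCHW))   # [0, 3, 1, 2]
print(decode_int32(NCHW_TO_NHWC))   # [0, 2, 3, 1]
print(decode_float32(GAMMA_FIRST))  # [1.0]
```

The two integer tensors are the permutations fed to the `Transpose` nodes that wrap the offloaded `FusedBatchNormV3`: the Inference Engine passes NCHW data in, TensorFlow computes in NHWC, and the result is transposed back to NCHW.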

```console:Build_and_Install_Bazel

$ cd ~
$ mkdir bazel;cd bazel
$ wget https://github.com/bazelbuild/bazel/releases/download/0.26.1/bazel-0.26.1-dist.zip
$ unzip bazel-0.26.1-dist.zip
$ nano compile.sh

#################################################################################
bazel_build "src:bazel_nojdk${EXE_EXT}" \
--action_env=PATH \
--host_platform=@bazel_tools//platforms:host_platform \
--platforms=@bazel_tools//platforms:target_platform \
|| fail "Could not build Bazel"
#################################################################################
↓
#################################################################################
bazel_build "src:bazel_nojdk${EXE_EXT}" \
--host_javabase=@local_jdk//:jdk \
--action_env=PATH \
--host_platform=@bazel_tools//platforms:host_platform \
--platforms=@bazel_tools//platforms:target_platform \
|| fail "Could not build Bazel"
#################################################################################

```

<kbd>Ctrl</kbd> + <kbd>O</kbd>

<kbd>Ctrl</kbd> + <kbd>X</kbd>

```console:Build_and_Install_Bazel

$ sudo bash ./compile.sh #<--- Execute it directly under the bazel folder

$ sudo cp output/bazel /usr/local/bin #<--- Always execute after completion of build

```

```console:Building_a_Tensorflow_offload_library

$ cd ~

$ git clone -b v1.15.0 https://github.com/tensorflow/tensorflow.git

$ export TF_ROOT_DIR=${HOME}/tensorflow

$ source /opt/intel/openvino/bin/setupvars.sh

$ cd /opt/intel/openvino/deployment_tools/model_optimizer

$ sudo nano tf_call_ie_layer/build.sh

```

```console:Before_editing

bazel build --config=monolithic //tensorflow/cc/inference_engine_layer:libtensorflow_call_layer.so

```

↓

Tune the library build to use the CPU extended instruction sets.

```console:After_editing

bazel build \
--config=monolithic \
--config=noaws \
--config=nogcp \
--config=nohdfs \
--config=noignite \
--config=nokafka \
--config=nonccl \
--copt=-mavx \
--copt=-mavx2 \
--copt=-mfma \
--copt=-msse4.1 \
--copt=-msse4.2 \
//tensorflow/cc/inference_engine_layer:libtensorflow_call_layer.so

```

<kbd>Ctrl</kbd> + <kbd>O</kbd>

<kbd>Ctrl</kbd> + <kbd>X</kbd>
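A library built with `--copt` values for instruction sets that the CPU lacks will typically crash with an illegal instruction error at run time, so it may be worth checking the CPU first. The sketch below is one possible check, assuming a Linux machine where `/proc/cpuinfo` has a `flags` line; the function names and the sample text are hypothetical.

```python
# Mapping from /proc/cpuinfo flag names to the --copt values used in build.sh.
COPT_BY_CPU_FLAG = {
    "sse4_1": "-msse4.1",
    "sse4_2": "-msse4.2",
    "avx": "-mavx",
    "avx2": "-mavx2",
    "fma": "-mfma",
}


def parse_cpu_flags(cpuinfo_text):
    """Return the set of flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def unsupported_copts(cpuinfo_text):
    """Return the --copt values whose CPU flag is missing from cpuinfo_text."""
    flags = parse_cpu_flags(cpuinfo_text)
    return [copt for flag, copt in COPT_BY_CPU_FLAG.items() if flag not in flags]


# Made-up sample of a CPU without FMA; on the build machine, pass
# open("/proc/cpuinfo").read() instead.
sample = "processor\t: 0\nflags\t\t: fpu sse4_1 sse4_2 avx avx2\n"
print(unsupported_copts(sample))  # ['-mfma']
```

Any value the function reports should be removed from `build.sh` before building.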

```console:Building_a_Tensorflow_offload_library

$ sudo -E bash ./tf_call_ie_layer/build.sh

```

```console:Copy_the_generated_library_to_the_work_folder

$ cd ~/test

$ sudo cp ${TF_ROOT_DIR}/bazel-bin/tensorflow/cc/inference_engine_layer/libtensorflow_call_layer.so .

```
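At this point the work folder should hold both libraries that the inference script loads by name: `libcpu_extension.so` from the samples build and `libtensorflow_call_layer.so` from the Bazel build. A small pre-flight check can catch a missing copy before the script fails inside the Inference Engine. This is only a sketch; `missing_libs` is a hypothetical helper, and it assumes the script is run from `~/test`.

```python
import os

# File names the inference script passes to add_cpu_extension().
REQUIRED_LIBS = ("libcpu_extension.so", "libtensorflow_call_layer.so")


def missing_libs(work_dir, required=REQUIRED_LIBS):
    """Return the required library file names that are absent from work_dir."""
    return [name for name in required
            if not os.path.isfile(os.path.join(work_dir, name))]


# An empty list means both libraries are in place.
print(missing_libs(os.path.expanduser("~/test")))
```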

The following program measures the inference time of each frame and confirms that inference runs without errors.

```python:mobilenetv3ssd_usbcam_cpu.py

import sys
import os
import cv2
import time
import ctypes
import numpy as np
from argparse import ArgumentParser

try:
    from armv7l.openvino.inference_engine import IENetwork, IEPlugin
except ImportError:
    from openvino.inference_engine import IENetwork, IEPlugin

# classes = 80
# LABELS = ("person", "bicycle", "car", "motorbike", "aeroplane",
#           "bus", "train", "truck", "boat", "traffic light",
#           "fire hydrant", "stop sign", "parking meter", "bench", "bird",
#           "cat", "dog", "horse", "sheep", "cow",
#           "elephant", "bear", "zebra", "giraffe", "backpack",
#           "umbrella", "handbag", "tie", "suitcase", "frisbee",
#           "skis", "snowboard", "sports ball", "kite", "baseball bat",
#           "baseball glove", "skateboard", "surfboard","tennis racket", "bottle",
#           "wine glass", "cup", "fork", "knife", "spoon",
#           "bowl", "banana", "apple", "sandwich", "orange",
#           "broccoli", "carrot", "hot dog", "pizza", "donut",
#           "cake", "chair", "sofa", "pottedplant", "bed",
#           "diningtable", "toilet", "tvmonitor", "laptop", "mouse",
#           "remote", "keyboard", "cell phone", "microwave", "oven",
#           "toaster", "sink", "refrigerator", "book", "clock",
#           "vase", "scissors", "teddy bear", "hair drier", "toothbrush")
# label_text_color = (255, 255, 255)
# label_background_color = (125, 175, 75)
# box_color = (255, 128, 0)
# box_thickness = 1


def build_argparser():
    parser = ArgumentParser()
    parser.add_argument('-mp','--pathofmodel',dest='path_of_model',type=str,default='ssd_mobilenet_v3_small_coco_2019_08_14/FP32/frozen_inference_graph.xml',help='Relative path of IR format XML file.')
    parser.add_argument('-cn','--numberofcamera',dest='number_of_camera',type=int,default=0,help='USB camera number. (Default=0)')
    parser.add_argument('-fp','--FPSofcamera',dest='FPS_of_camera',type=int,default=30,help='USB camera shooting speed(FPS). (Default=30)')
    parser.add_argument('-wd','--width',dest='camera_width',type=int,default=320,help='Width of the frames in the video stream. (Default=320)')
    parser.add_argument('-ht','--height',dest='camera_height',type=int,default=240,help='Height of the frames in the video stream. (Default=240)')
    return parser


def main_IE_infer():
    model_input_size = 320
    fps = ""
    elapsedTime = 0

    args = build_argparser().parse_args()
    model_path = args.path_of_model
    camera_number = args.number_of_camera
    camera_width = args.camera_width
    camera_height = args.camera_height
    camera_FPS = args.FPS_of_camera
    model_xml = args.path_of_model
    model_bin = os.path.splitext(model_xml)[0] + ".bin"

    # Size of the camera frame after scaling it to fit the square model input
    new_w = int(camera_width * min(model_input_size/camera_width, model_input_size/camera_height))
    new_h = int(camera_height * min(model_input_size/camera_width, model_input_size/camera_height))

    cap = cv2.VideoCapture(camera_number)
    cap.set(cv2.CAP_PROP_FPS, camera_FPS)
    cap.set(cv2.CAP_PROP_FRAME_WIDTH, camera_width)
    cap.set(cv2.CAP_PROP_FRAME_HEIGHT, camera_height)
    time.sleep(1)

    plugin = IEPlugin(device="CPU")
    plugin.add_cpu_extension("libcpu_extension.so")
    # Load libinference_engine.so with RTLD_GLOBAL first so that its symbols can be
    # resolved when libtensorflow_call_layer.so is loaded (see reference article 3).
    ctypes.CDLL("libinference_engine.so", ctypes.RTLD_GLOBAL)
    plugin.add_cpu_extension("libtensorflow_call_layer.so")
    net = IENetwork(model=model_xml, weights=model_bin)
    input_blob = next(iter(net.inputs))
    exec_net = plugin.load(network=net, num_requests=12)

    while cap.isOpened():
        t1 = time.time()

        ret, image = cap.read()
        if not ret:
            break

        # Paste the resized frame onto the center of a gray square canvas
        resized_image = cv2.resize(image, (new_w, new_h), interpolation = cv2.INTER_CUBIC)
        canvas = np.full((model_input_size, model_input_size, 3), 127.5)
        canvas[(model_input_size-new_h)//2:(model_input_size-new_h)//2 + new_h,(model_input_size-new_w)//2:(model_input_size-new_w)//2 + new_w, :] = resized_image
        prepimg = canvas
        prepimg = prepimg - 127.5
        prepimg = prepimg * 0.007843
        prepimg = prepimg[np.newaxis, :, :, :]     # Batch size axis add (NHWC)
        prepimg = prepimg.transpose((0, 3, 1, 2))  # NHWC to NCHW

        s = time.time()
        outputs = exec_net.infer(inputs={input_blob: prepimg})
        print("elapsedTime =", time.time() - s)

        #np.set_printoptions(threshold=np.inf)
        #Squeeze, convert_scores
        #print(outputs["Squeeze"])
        #sys.exit(0)

        if cv2.waitKey(1)&0xFF == ord('q'):
            break
        elapsedTime = time.time() - t1
        fps = "(Playback) {:.1f} FPS".format(1/elapsedTime)

    cap.release()
    cv2.destroyAllWindows()
    del net
    del exec_net
    del plugin


if __name__ == '__main__':
    sys.exit(main_IE_infer() or 0)

```
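The preprocessing in the script letterboxes each camera frame into the 320x320 model input: the frame is scaled by the smaller of the two width and height ratios, centered on a gray canvas, and then normalized with `(pixel - 127.5) * 0.007843`. The sketch below isolates that arithmetic in plain Python so the numbers can be checked without a camera. The function names `letterbox_geometry` and `normalize` are introduced here for illustration and do not appear in the script.

```python
def letterbox_geometry(cam_w, cam_h, model_size):
    """Return (new_w, new_h, x_offset, y_offset) for an aspect-preserving resize."""
    scale = min(model_size / cam_w, model_size / cam_h)
    new_w = int(cam_w * scale)
    new_h = int(cam_h * scale)
    return new_w, new_h, (model_size - new_w) // 2, (model_size - new_h) // 2


def normalize(pixel):
    """Map a 0..255 pixel value to roughly -1..1, as the script does."""
    return (pixel - 127.5) * 0.007843


print(letterbox_geometry(320, 240, 320))   # (320, 240, 0, 40)
print(letterbox_geometry(1280, 720, 320))  # (320, 180, 0, 70)
print(normalize(127.5))                    # 0.0
```

With the default 320x240 camera setting the frame is not scaled at all; it is only padded with 40 gray rows above and below. The gray value 127.5 normalizes to exactly 0, so the padding is neutral input for the network.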

# 4.Reference articles

1. **[Install Intel® Distribution of OpenVINO™ toolkit for Linux](https://docs.openvinotoolkit.org/latest/_docs_install_guides_installing_openvino_linux.html)**

2. **[Offloading Sub-Graph Inference to TensorFlow](https://docs.openvinotoolkit.org/latest/_docs_MO_DG_prepare_model_customize_model_optimizer_Offloading_Sub_Graph_Inference.html)**

3. **[How to offload OpenVINO non-compliant layer to Tensorflow (undefined symbol: _ZN15InferenceEngine10TensorDescC1Ev)](https://software.intel.com/en-us/forums/computer-vision/topic/802345)**

4. **[MobileNetV3-SSD FusedBatchNormV3 layer not supported (OpenVINO R3.1)](https://software.intel.com/en-us/forums/computer-vision/topic/831459)**