PyTorch has had a reputation as the best framework for a while now, so I tried classifying MNIST and Cifar10 with it, following the references below.

What I did

・Installing PyTorch

・Running MNIST

・Running Cifar10

・Running VGG16

・Installing PyTorch

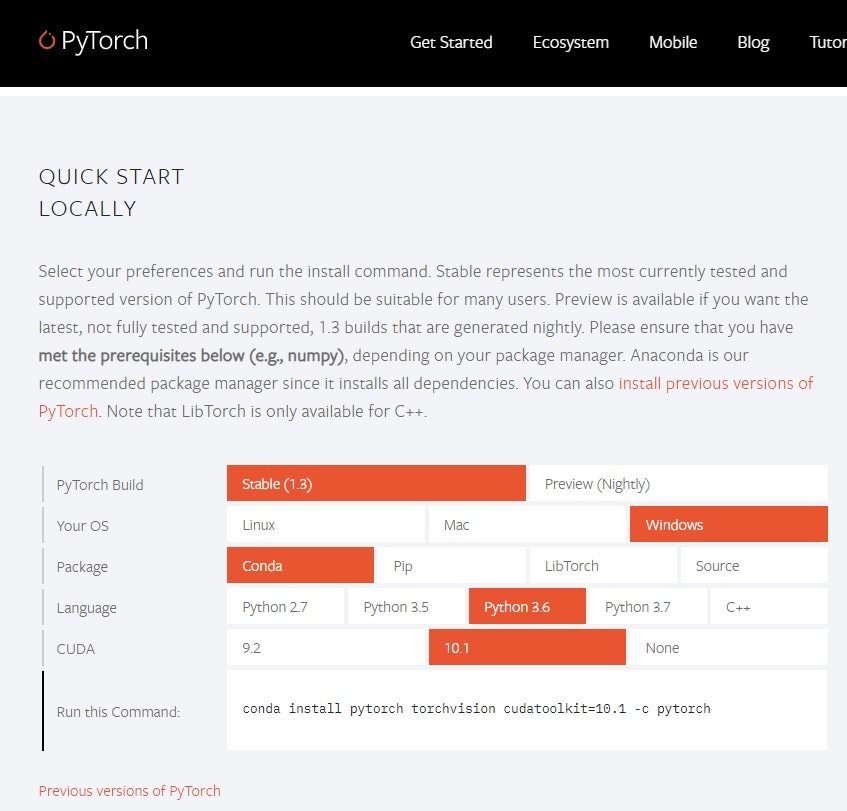

Enter your environment on the page in reference ⓪ below and it automatically generates the install command.

[References]

⓪https://pytorch.org/

In my (Uwan's) environment, the following command installed it.

(keras-gpu) C:\Users\user\pytorch>conda install pytorch torchvision cudatoolkit=10.1 -c pytorch

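This is where I ran into a bit of trouble.

Because various tools were already installed there, I installed PyTorch into the (keras-gpu) environment.

The installation itself succeeded, but three things happened:

1. keras was removed from the environment

2. Several libraries were downgraded

3. Some libraries were updated

In other words, the keras-gpu environment appears to have been broken.

I therefore strongly recommend installing into a plain (ordinary) conda environment.

I cannot be more specific because, once the installation finished, the console output was cleared and replaced with just "done".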
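After installing, a quick check from Python shows whether the active environment actually has PyTorch and whether CUDA is visible. This is a minimal sketch; the helper name `describe_torch_install` is my own and not part of PyTorch.

```python
import importlib.util


def describe_torch_install():
    """Return a short status string for the PyTorch install in this environment."""
    if importlib.util.find_spec("torch") is None:
        return "torch is not installed in this environment"
    import torch
    return "torch {} (CUDA available: {})".format(
        torch.__version__, torch.cuda.is_available())


print(describe_torch_install())
```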

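・Running MNIST

This should work if you follow reference ① below, so I will skip the details.

[References]

①MNIST with PyTorch

However, I sometimes got errors, for example while loading the data.

According to reference ②:

"2. Error related to the DataLoader settings.

BrokenPipeError: [Errno 32] Broken pipe

-> This was avoided by setting num_workers = 0, based on reference URL ②."

So changing the code in question to num_workers = 0 made the error go away.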
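As a sketch of that workaround: with `num_workers=0` the DataLoader loads batches in the main process, so no worker processes are started. On Windows, a commonly reported trigger for this error is starting workers from a script that lacks an `if __name__ == '__main__':` guard; I have not verified that this was the cause here. The random dataset and the name `make_loader` are placeholders for illustration only.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def make_loader(num_workers=0, batch_size=4):
    # Placeholder data standing in for MNIST: 16 fake 1x28x28 images, labels 0-9.
    images = torch.randn(16, 1, 28, 28)
    labels = torch.randint(0, 10, (16,))
    return DataLoader(TensorDataset(images, labels), batch_size=batch_size,
                      shuffle=True, num_workers=num_workers)


if __name__ == '__main__':
    # num_workers=0: batches are loaded in the main process.
    for batch_images, batch_labels in make_loader(num_workers=0):
        print(batch_images.shape, batch_labels.shape)
```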

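・Running Cifar10

A Cifar10 code example is presented in detail in the reference below.

However, for reasons I do not understand, that code mostly did not run for me.

[References]

③TRAINING A CLASSIFIER

So I extended the MNIST code above to Cifar10 while consulting the code in reference ③.

The result is as follows.

First, the libraries used are: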

'''

PyTorch Cifar10 sample

'''

import argparse

import time

import numpy as np

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.utils.data import DataLoader

import torchvision

import torchvision.transforms as transforms

from torchvision.datasets import CIFAR10 #MNIST

import torch.optim as optim

from torchsummary import summary

# from Net_cifar10 import Net_cifar10

from Net_vgg16 import VGG16

import matplotlib.pyplot as plt


The following function draws an image.

# functions to show an image
def imshow(img):
    img = img / 2 + 0.5  # unnormalize
    npimg = img.numpy()
    plt.imshow(np.transpose(npimg, (1, 2, 0)))
    plt.pause(1)
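The `img / 2 + 0.5` line undoes the `Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))` transform applied when the data is loaded, which maps each pixel value x in [0, 1] to (x - 0.5) / 0.5 in [-1, 1]. A per-pixel sketch of that arithmetic in plain Python (the function names are mine):

```python
def normalize(x, mean=0.5, std=0.5):
    # What transforms.Normalize does to each channel value.
    return (x - mean) / std


def unnormalize(y):
    # The inverse used in imshow: y * 0.5 + 0.5.
    return y / 2 + 0.5


for pixel in (0.0, 0.25, 1.0):
    print(pixel, normalize(pixel), unnormalize(normalize(pixel)))
```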

The following function handles the command-line arguments and sets their default values.

def parser():
    '''
    argument
    '''
    parser = argparse.ArgumentParser(description='PyTorch Cifar10')
    parser.add_argument('--epochs', '-e', type=int, default=20,
                        help='number of epochs to train (default: 20)')
    parser.add_argument('--lr', '-l', type=float, default=0.01,
                        help='learning rate (default: 0.01)')
    args = parser.parse_args()
    return args
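For illustration, the same options can be exercised without a command line by passing an argument list to `parse_args`. This sketch rebuilds the parser in a hypothetical `build_parser` helper. Note that in the script as shown, the active optimizer line hard-codes `lr=0.001`, so `--lr` only takes effect if the commented-out optimizer that reads `args.lr` is used instead.

```python
import argparse


def build_parser():
    # Same options as the article's parser(), but returns the parser itself.
    parser = argparse.ArgumentParser(description='PyTorch Cifar10')
    parser.add_argument('--epochs', '-e', type=int, default=20,
                        help='number of epochs to train (default: 20)')
    parser.add_argument('--lr', '-l', type=float, default=0.01,
                        help='learning rate (default: 0.01)')
    return parser


args = build_parser().parse_args(['--epochs', '5', '-l', '0.001'])
defaults = build_parser().parse_args([])
print(args.epochs, args.lr)          # → 5 0.001
print(defaults.epochs, defaults.lr)  # → 20 0.01
```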

Next is the main() function.

It starts with the data loading.

You can see that the classes differ between MNIST and Cifar10.

Training used batch_size=32.

def main():
    '''
    main
    '''
    args = parser()

    transform = transforms.Compose(
        [transforms.ToTensor(),
         transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

    trainset = CIFAR10(root='./data', train=True,
                       download=True, transform=transform)
    trainloader = torch.utils.data.DataLoader(trainset, batch_size=32, #batch_size=4
                                              shuffle=True, num_workers=0) #num_workers=2

    testset = CIFAR10(root='./data', train=False,
                      download=True, transform=transform)
    testloader = torch.utils.data.DataLoader(testset, batch_size=32, #batch_size=4
                                             shuffle=False, num_workers=0) #num_workers=2

    #classes = tuple(np.linspace(0, 9, 10, dtype=np.uint8))
    classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')
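With batch_size=32, the 50,000 CIFAR-10 training images give 1,563 mini-batches per epoch (the last one partial). That is why a progress line every 200 mini-batches yields seven lines per epoch, the last at 1400. A quick check of that arithmetic:

```python
import math

train_size = 50000   # CIFAR-10 training images
test_size = 10000    # CIFAR-10 test images
batch_size = 32
report_every = 200   # mini-batches between progress lines

batches_per_epoch = math.ceil(train_size / batch_size)
reports_per_epoch = batches_per_epoch // report_every
last_report = reports_per_epoch * report_every
test_batches = math.ceil(test_size / batch_size)

print(batches_per_epoch, reports_per_epoch, last_report, test_batches)  # → 1563 7 1400 313
```

Each progress line also involves a full pass over the 313 test batches, so evaluation likely accounts for a noticeable share of the elapsed time.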

Next, it displays a batch of training images and prints their labels.

    # get some random training images
    dataiter = iter(trainloader)
    images, labels = next(dataiter)  # dataiter.next() is not available in recent PyTorch

    # show images
    imshow(torchvision.utils.make_grid(images))

    # print labels (only the first 4 of the batch)
    print(' '.join('%5s' % classes[labels[j]] for j in range(4)))

The following defines device in preparation for computing on the GPU.

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu") #for gpu

    # Assuming that we are on a CUDA machine, this should print a CUDA device:
    print(device)

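Next, define the model.

I tried several definitions here to see how the results changed.

summary(model,(3,32,32))

gives, as reference ④ describes, information similar to Keras's model.summary().

[References]

④Visdom and torchsummary, tools that help with building and evaluating PyTorch models

Visdom appears to be a graphing tool similar to tensorboard, but I did not use it this time.

    # model
    #net = Net_cifar10()
    #net = VGG13()
    net = VGG16()
    model = net.to(device) #for gpu
    summary(model,(3,32,32))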
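The Param # column that torchsummary prints can be reproduced by hand. Below is a sketch of the counting rules for the three parameterized layer types in these models (the helper names are mine); the printed values match the corresponding rows of the VGG summary in the appendix.

```python
def conv2d_params(in_ch, out_ch, kernel_size):
    # One kernel_size x kernel_size filter per (in, out) channel pair,
    # plus one bias per output channel.
    return in_ch * out_ch * kernel_size * kernel_size + out_ch


def batchnorm2d_params(channels):
    # Learnable scale (weight) and shift (bias) per channel.
    return 2 * channels


def linear_params(in_features, out_features):
    # Weight matrix plus one bias per output feature.
    return in_features * out_features + out_features


print(conv2d_params(3, 64, 3))    # → 1792
print(conv2d_params(64, 64, 3))   # → 36928
print(batchnorm2d_params(64))     # → 128
print(linear_params(512, 512))    # → 262656
print(linear_params(32, 10))      # → 330
```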

The criterion and optimizer are defined below.

The parameters apparently need to differ between MNIST and Cifar10.

※When I actually tried the MNIST values, training did not converge at all.

    # define loss function and optimizer
    criterion = nn.CrossEntropyLoss()
    #optimizer = optim.SGD(net.parameters(),lr=args.lr, momentum=0.99, nesterov=True)
    optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

Training happens below.

I left the CPU version of the code in place, just commented out.

For MNIST, accuracy on the test data was evaluated only once at the end. Here, as in Keras, I evaluate it repeatedly: once every 200 mini-batches, at the same point where the training loss is printed.

    # train
    for epoch in range(args.epochs):
        running_loss = 0.0
        for i, data in enumerate(trainloader, 0):
            # get the inputs; data is a list of [inputs, labels]
            #inputs, labels = data #for cpu
            inputs, labels = data[0].to(device), data[1].to(device) #for gpu

            # zero the parameter gradients
            optimizer.zero_grad()

            # forward + backward + optimize
            outputs = net(inputs)
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()

            # print statistics
            running_loss += loss.item()
            if i % 200 == 199: # print every 200 mini-batches
                # test
                correct = 0
                total = 0
                with torch.no_grad():
                    for (images, labels) in testloader:
                        outputs = net(images.to(device)) #for gpu
                        _, predicted = torch.max(outputs.data, 1)
                        total += labels.size(0)
                        correct += (predicted == labels.to(device)).sum().item()
                #print('Accuracy: {:.2f} %'.format(100 * float(correct/total)))
                print('[%d, %5d] loss: %.3f '% (epoch + 1, i + 1, running_loss / 200), 'Accuracy: {:.2f} %'.format(100 * float(correct/total)))
                running_loss = 0.0

Once training finishes, the resulting net.state_dict() is saved.

    print('Finished Training')
    PATH = './cifar_net.pth'
    torch.save(net.state_dict(), PATH)
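To reuse saved weights later, build the same architecture and load the state dict into it. This is a minimal sketch that uses a small stand-in model (`nn.Linear`) and a temporary file rather than the article's network and `./cifar_net.pth`; the helper name `roundtrip` is mine.

```python
import os
import tempfile

import torch
import torch.nn as nn


def roundtrip(model_factory):
    """Save one model's state_dict and load it into a second instance."""
    source = model_factory()
    target = model_factory()  # same architecture, different random weights
    path = os.path.join(tempfile.mkdtemp(), 'net.pth')
    torch.save(source.state_dict(), path)
    target.load_state_dict(torch.load(path))
    return source, target


source, target = roundtrip(lambda: nn.Linear(4, 3))
print(torch.equal(source.weight, target.weight))  # → True
```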

Below, the test accuracy is computed once more and printed.

    # test
    correct = 0
    total = 0
    with torch.no_grad():
        for (images, labels) in testloader:
            outputs = net(images.to(device)) #for gpu
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels.to(device)).sum().item()
    print('Accuracy: {:.2f} %'.format(100 * float(correct/total)))
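The same evaluation loop appears twice in this script, so it could be factored into a helper. The sketch below is one way to do that, and it is not the code that produced the logs in the appendix: it additionally switches the model to eval mode while evaluating, so that Dropout is disabled and BatchNorm uses its running statistics, then restores the previous mode. The name `evaluate` and the toy model and data in the usage lines are placeholders.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate(net, loader, device):
    """Return the accuracy in percent of `net` over all batches in `loader`."""
    was_training = net.training
    net.eval()  # disable Dropout, use BatchNorm running statistics
    correct = 0
    total = 0
    with torch.no_grad():
        for images, labels in loader:
            outputs = net(images.to(device))
            _, predicted = torch.max(outputs, 1)
            total += labels.size(0)
            correct += (predicted == labels.to(device)).sum().item()
    net.train(was_training)  # restore the previous mode
    return 100.0 * correct / total


# Toy usage: a linear "model" on random data, just to exercise the helper.
device = torch.device('cpu')
toy_net = nn.Linear(8, 10).to(device)
toy_data = TensorDataset(torch.randn(20, 8), torch.randint(0, 10, (20,)))
toy_loader = DataLoader(toy_data, batch_size=4)
accuracy = evaluate(toy_net, toy_loader, device)
print('Accuracy: {:.2f} %'.format(accuracy))
```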

Below, some test data is displayed, predictions are made, and the results are printed.

    dataiter = iter(testloader)
    images, labels = next(dataiter)  # dataiter.next() is not available in recent PyTorch

    # print images
    imshow(torchvision.utils.make_grid(images))
    print('GroundTruth: ', ' '.join('%5s' % classes[labels[j]] for j in range(4)))

    outputs = net(images.to(device))
    _, predicted = torch.max(outputs, 1)
    print('Predicted: ', ' '.join('%5s' % classes[predicted[j]] for j in range(4)))

Finally, the prediction accuracy is computed for each class.

    class_correct = list(0. for i in range(10))
    class_total = list(0. for i in range(10))
    with torch.no_grad():
        for data in testloader:
            images, labels = data #for cpu
            #inputs, labels = data[0].to(device), data[1].to(device) #for gpu
            outputs = net(images.to(device))
            _, predicted = torch.max(outputs, 1)
            c = (predicted == labels.to(device)).squeeze()
            # count every sample in the batch (range(4) only covered the first 4 of 32)
            for i in range(labels.size(0)):
                label = labels[i]
                class_correct[label] += c[i].item()
                class_total[label] += 1

    for i in range(10):
        print('Accuracy of %5s : %2d %%' % (
            classes[i], 100 * class_correct[i] / class_total[i]))
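The per-class bookkeeping itself does not depend on PyTorch. Here is a plain-Python sketch of the same counting over every sample, using made-up labels and predictions (the function name is mine):

```python
def per_class_accuracy(labels, predictions, num_classes):
    """Return per-class accuracies in percent (None for classes with no samples)."""
    correct = [0] * num_classes
    total = [0] * num_classes
    for label, pred in zip(labels, predictions):
        total[label] += 1
        if label == pred:
            correct[label] += 1
    return [100.0 * c / t if t else None for c, t in zip(correct, total)]


labels = [0, 0, 1, 1, 1, 2]
predictions = [0, 1, 1, 1, 0, 2]
result = per_class_accuracy(labels, predictions, 4)
print(result)
```

Class 3 has no samples in this toy data, so its entry is None instead of raising a division-by-zero error.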

When main() finishes, the time the computation took is printed.

if __name__ == '__main__':
    start_time = time.time()
    main()
    print('elapsed time: {:.3f} [sec]'.format(time.time() - start_time))

The model used on the PyTorch page is the following simple one.

import torch.nn as nn
import torch.nn.functional as F

class Net_cifar10(nn.Module):
    def __init__(self):
        super(Net_cifar10, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = x.view(-1, 16 * 5 * 5)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x
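The `16 * 5 * 5` input size of `fc1` follows from how each layer shrinks the 32x32 input: a 5x5 convolution without padding shortens each side by 4 pixels, and each 2x2 max-pool halves it. A sketch of that arithmetic (the helper names are mine):

```python
def conv_out(size, kernel, padding=0, stride=1):
    # Output side length of a square convolution.
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    # Output side length of a square max-pool.
    return (size - kernel) // stride + 1


size = 32
size = pool_out(conv_out(size, 5))  # conv1 + pool: 32 -> 28 -> 14
size = pool_out(conv_out(size, 5))  # conv2 + pool: 14 -> 10 -> 5
print(size, 16 * size * size)  # → 5 400
```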

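・Running VGG16

A search turns up many PyTorch models of the VGG family, but the one in the reference below is easy to follow.

[References]

⑤PyTorch 0.4.1 examples (code walkthrough): image classification – Oxford 17 flower categories (VGG)

However, it only gives examples up to VGG13.

So, drawing on an earlier article of mine (Uwan), I extended it to VGG16 as follows.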

import torch.nn as nn
import torch.nn.functional as F

class VGG16(nn.Module):
    def __init__(self): # , num_classes):
        super(VGG16, self).__init__()
        num_classes=10

        self.block1_output = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.Conv2d(64, 64, kernel_size=3, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )

        self.block2_output = nn.Sequential(
            nn.Conv2d(64, 128, kernel_size=3, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            nn.Conv2d(128, 128, kernel_size=3, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )

        self.block3_output = nn.Sequential(
            nn.Conv2d(128, 256, kernel_size=3, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )

        self.block4_output = nn.Sequential(
            nn.Conv2d(256, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )

        self.block5_output = nn.Sequential(
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )

        self.classifier = nn.Sequential(
            nn.Linear(512, 512), #512 * 7 * 7, 4096),
            nn.ReLU(True),
            nn.Dropout(),
            nn.Linear(512, 32 ), #4096, 4096),
            nn.ReLU(True),
            nn.Dropout(),
            nn.Linear(32, num_classes), #4096
        )

    def forward(self, x):
        x = self.block1_output(x)
        x = self.block2_output(x)
        x = self.block3_output(x)
        x = self.block4_output(x)
        x = self.block5_output(x)
        #print(x.size())
        x = x.view(x.size(0), -1)
        x = self.classifier(x)
        return x
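The first Linear layer takes 512 inputs rather than the 512 * 7 * 7 noted in its trailing comment because CIFAR-10 images are 32x32, not the 224x224 that VGG was designed for: the 3x3 convolutions with padding=1 keep the spatial size, and each of the five 2x2 max-pools halves it. A quick check of that arithmetic (the function name is mine):

```python
def flattened_features(input_size, num_pools=5, channels=512):
    """Number of features entering the classifier after `num_pools` 2x2 max-pools."""
    size = input_size
    for _ in range(num_pools):
        size //= 2  # each 2x2, stride-2 pool halves the side length
    return channels * size * size


print(flattened_features(32))   # → 512
print(flattened_features(224))  # → 25088
```

The second value equals 512 * 7 * 7, the size in the commented-out original.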

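Example Cifar10 runs for the various models are included in the appendix.

Summary

・Tried classifying Cifar10 with PyTorch

・There were several errors at first, but the computation now runs stably

・Next I would like to run examples that are distinctive to PyTorch

Appendix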

(keras-gpu) C:\Users\user\pytorch\cifar10>python pytorch_cifar10_.py

Files already downloaded and verified

Files already downloaded and verified

cuda:0

[1, 200] loss: 2.303 Accuracy: 13.34 %

[1, 400] loss: 2.299 Accuracy: 14.55 %

[1, 600] loss: 2.296 Accuracy: 14.71 %

[1, 800] loss: 2.284 Accuracy: 16.72 %

[1, 1000] loss: 2.248 Accuracy: 17.70 %

[1, 1200] loss: 2.144 Accuracy: 24.59 %

[1, 1400] loss: 2.039 Accuracy: 27.71 %

[2, 200] loss: 1.943 Accuracy: 30.32 %

[2, 400] loss: 1.900 Accuracy: 31.92 %

[2, 600] loss: 1.883 Accuracy: 32.70 %

[2, 800] loss: 1.831 Accuracy: 34.42 %

[2, 1000] loss: 1.802 Accuracy: 34.84 %

[2, 1200] loss: 1.776 Accuracy: 35.06 %

[2, 1400] loss: 1.733 Accuracy: 37.69 %

[3, 200] loss: 1.688 Accuracy: 37.61 %

[3, 400] loss: 1.657 Accuracy: 38.20 %

[3, 600] loss: 1.627 Accuracy: 41.01 %

[3, 800] loss: 1.636 Accuracy: 41.60 %

[3, 1000] loss: 1.596 Accuracy: 41.73 %

[3, 1200] loss: 1.582 Accuracy: 41.52 %

[3, 1400] loss: 1.543 Accuracy: 43.17 %

[4, 200] loss: 1.517 Accuracy: 44.28 %

[4, 400] loss: 1.508 Accuracy: 45.50 %

[4, 600] loss: 1.503 Accuracy: 45.83 %

[4, 800] loss: 1.493 Accuracy: 46.98 %

[4, 1000] loss: 1.480 Accuracy: 45.65 %

[4, 1200] loss: 1.472 Accuracy: 47.23 %

[4, 1400] loss: 1.465 Accuracy: 47.72 %

[5, 200] loss: 1.440 Accuracy: 47.90 %

[5, 400] loss: 1.406 Accuracy: 50.01 %

[5, 600] loss: 1.419 Accuracy: 49.09 %

[5, 800] loss: 1.393 Accuracy: 50.10 %

[5, 1000] loss: 1.362 Accuracy: 49.50 %

[5, 1200] loss: 1.367 Accuracy: 49.13 %

[5, 1400] loss: 1.392 Accuracy: 51.04 %

[6, 200] loss: 1.336 Accuracy: 52.19 %

[6, 400] loss: 1.329 Accuracy: 52.20 %

[6, 600] loss: 1.312 Accuracy: 51.44 %

[6, 800] loss: 1.315 Accuracy: 51.34 %

[6, 1000] loss: 1.323 Accuracy: 52.54 %

[6, 1200] loss: 1.323 Accuracy: 53.76 %

[6, 1400] loss: 1.302 Accuracy: 53.15 %

[7, 200] loss: 1.257 Accuracy: 53.11 %

[7, 400] loss: 1.258 Accuracy: 53.91 %

[7, 600] loss: 1.262 Accuracy: 54.56 %

[7, 800] loss: 1.280 Accuracy: 55.07 %

[7, 1000] loss: 1.249 Accuracy: 54.81 %

[7, 1200] loss: 1.255 Accuracy: 54.41 %

[7, 1400] loss: 1.234 Accuracy: 55.69 %

[8, 200] loss: 1.213 Accuracy: 56.52 %

[8, 400] loss: 1.214 Accuracy: 56.52 %

[8, 600] loss: 1.213 Accuracy: 56.60 %

[8, 800] loss: 1.202 Accuracy: 55.38 %

[8, 1000] loss: 1.200 Accuracy: 57.14 %

[8, 1200] loss: 1.190 Accuracy: 56.84 %

[8, 1400] loss: 1.173 Accuracy: 57.08 %

[9, 200] loss: 1.144 Accuracy: 57.51 %

[9, 400] loss: 1.170 Accuracy: 57.25 %

[9, 600] loss: 1.136 Accuracy: 56.35 %

[9, 800] loss: 1.169 Accuracy: 58.69 %

[9, 1000] loss: 1.141 Accuracy: 57.84 %

[9, 1200] loss: 1.146 Accuracy: 56.51 %

[9, 1400] loss: 1.150 Accuracy: 57.88 %

[10, 200] loss: 1.128 Accuracy: 58.77 %

[10, 400] loss: 1.123 Accuracy: 58.69 %

[10, 600] loss: 1.120 Accuracy: 59.92 %

[10, 800] loss: 1.102 Accuracy: 58.37 %

[10, 1000] loss: 1.104 Accuracy: 59.26 %

[10, 1200] loss: 1.101 Accuracy: 59.45 %

[10, 1400] loss: 1.106 Accuracy: 59.75 %

[11, 200] loss: 1.081 Accuracy: 58.35 %

[11, 400] loss: 1.098 Accuracy: 59.52 %

[11, 600] loss: 1.040 Accuracy: 60.00 %

[11, 800] loss: 1.083 Accuracy: 60.39 %

[11, 1000] loss: 1.073 Accuracy: 60.55 %

[11, 1200] loss: 1.074 Accuracy: 61.02 %

[11, 1400] loss: 1.075 Accuracy: 60.78 %

[12, 200] loss: 1.027 Accuracy: 59.02 %

[12, 400] loss: 1.052 Accuracy: 60.14 %

[12, 600] loss: 1.025 Accuracy: 61.39 %

[12, 800] loss: 1.047 Accuracy: 59.45 %

[12, 1000] loss: 1.047 Accuracy: 61.99 %

[12, 1200] loss: 1.055 Accuracy: 60.82 %

[12, 1400] loss: 1.023 Accuracy: 62.17 %

[13, 200] loss: 0.994 Accuracy: 61.23 %

[13, 400] loss: 1.008 Accuracy: 61.94 %

[13, 600] loss: 1.014 Accuracy: 61.18 %

[13, 800] loss: 1.013 Accuracy: 62.04 %

[13, 1000] loss: 1.018 Accuracy: 61.59 %

[13, 1200] loss: 1.010 Accuracy: 61.81 %

[13, 1400] loss: 0.998 Accuracy: 61.81 %

[14, 200] loss: 0.961 Accuracy: 61.17 %

[14, 400] loss: 0.985 Accuracy: 61.63 %

[14, 600] loss: 0.977 Accuracy: 62.18 %

[14, 800] loss: 0.996 Accuracy: 61.84 %

[14, 1000] loss: 0.978 Accuracy: 61.70 %

[14, 1200] loss: 0.974 Accuracy: 61.63 %

[14, 1400] loss: 0.980 Accuracy: 62.09 %

[15, 200] loss: 0.935 Accuracy: 61.29 %

[15, 400] loss: 0.944 Accuracy: 63.11 %

[15, 600] loss: 0.936 Accuracy: 62.98 %

[15, 800] loss: 0.961 Accuracy: 62.76 %

[15, 1000] loss: 0.961 Accuracy: 62.42 %

[15, 1200] loss: 0.956 Accuracy: 61.82 %

[15, 1400] loss: 0.975 Accuracy: 62.35 %

[16, 200] loss: 0.901 Accuracy: 63.24 %

[16, 400] loss: 0.906 Accuracy: 62.88 %

[16, 600] loss: 0.924 Accuracy: 63.13 %

[16, 800] loss: 0.905 Accuracy: 62.71 %

[16, 1000] loss: 0.930 Accuracy: 62.22 %

[16, 1200] loss: 0.950 Accuracy: 62.95 %

[16, 1400] loss: 0.953 Accuracy: 63.11 %

[17, 200] loss: 0.894 Accuracy: 63.93 %

[17, 400] loss: 0.896 Accuracy: 63.65 %

[17, 600] loss: 0.880 Accuracy: 62.02 %

[17, 800] loss: 0.889 Accuracy: 63.14 %

[17, 1000] loss: 0.897 Accuracy: 63.36 %

[17, 1200] loss: 0.918 Accuracy: 63.98 %

[17, 1400] loss: 0.925 Accuracy: 63.66 %

[18, 200] loss: 0.853 Accuracy: 63.52 %

[18, 400] loss: 0.852 Accuracy: 62.60 %

[18, 600] loss: 0.877 Accuracy: 64.43 %

[18, 800] loss: 0.872 Accuracy: 63.48 %

[18, 1000] loss: 0.879 Accuracy: 63.45 %

[18, 1200] loss: 0.905 Accuracy: 63.76 %

[18, 1400] loss: 0.897 Accuracy: 63.30 %

[19, 200] loss: 0.823 Accuracy: 63.08 %

[19, 400] loss: 0.833 Accuracy: 63.93 %

[19, 600] loss: 0.855 Accuracy: 62.89 %

[19, 800] loss: 0.845 Accuracy: 63.44 %

[19, 1000] loss: 0.872 Accuracy: 63.94 %

[19, 1200] loss: 0.861 Accuracy: 64.28 %

[19, 1400] loss: 0.853 Accuracy: 64.58 %

[20, 200] loss: 0.817 Accuracy: 63.54 %

[20, 400] loss: 0.809 Accuracy: 63.82 %

[20, 600] loss: 0.813 Accuracy: 63.07 %

[20, 800] loss: 0.815 Accuracy: 64.33 %

[20, 1000] loss: 0.852 Accuracy: 64.66 %

[20, 1200] loss: 0.850 Accuracy: 63.97 %

[20, 1400] loss: 0.844 Accuracy: 64.47 %

Finished Training

Accuracy: 64.12 %

GroundTruth: cat ship ship plane

Predicted: cat ship ship ship

Accuracy of plane : 61 %

Accuracy of car : 80 %

Accuracy of bird : 50 %

Accuracy of cat : 53 %

Accuracy of deer : 50 %

Accuracy of dog : 52 %

Accuracy of frog : 66 %

Accuracy of horse : 67 %

Accuracy of ship : 82 %

Accuracy of truck : 75 %

elapsed time: 602.200 [sec]

import torch.nn as nn
import torch.nn.functional as F

class VGG13(nn.Module):
    def __init__(self): # , num_classes):
        super(VGG13, self).__init__()
        num_classes=10

        self.block1_output = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.Conv2d(64, 64, kernel_size=3, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )

        self.block2_output = nn.Sequential(
            nn.Conv2d(64, 128, kernel_size=3, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            nn.Conv2d(128, 128, kernel_size=3, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )

        self.block3_output = nn.Sequential(
            nn.Conv2d(128, 256, kernel_size=3, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )

        self.block4_output = nn.Sequential(
            nn.Conv2d(256, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )

        self.block5_output = nn.Sequential(
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )

        self.classifier = nn.Sequential(
            nn.Linear(512, 512), #512 * 7 * 7, 4096),
            nn.ReLU(True),
            nn.Dropout(),
            nn.Linear(512, 32 ), #4096, 4096),
            nn.ReLU(True),
            nn.Dropout(),
            nn.Linear(32, num_classes), #4096
        )

    def forward(self, x):
        x = self.block1_output(x)
        x = self.block2_output(x)
        x = self.block3_output(x)
        x = self.block4_output(x)
        x = self.block5_output(x)
        #print(x.size())
        x = x.view(x.size(0), -1)
        x = self.classifier(x)
        return x

(keras-gpu) C:\Users\user\pytorch\cifar10>python pytorch_cifar10_.py

Files already downloaded and verified

Files already downloaded and verified

cuda:0

[1, 200] loss: 2.156 Accuracy: 24.39 %

[1, 400] loss: 1.869 Accuracy: 33.88 %

[1, 600] loss: 1.728 Accuracy: 39.04 %

[1, 800] loss: 1.578 Accuracy: 43.44 %

[1, 1000] loss: 1.496 Accuracy: 47.75 %

[1, 1200] loss: 1.436 Accuracy: 52.35 %

[1, 1400] loss: 1.363 Accuracy: 54.04 %

[2, 200] loss: 1.231 Accuracy: 57.80 %

[2, 400] loss: 1.209 Accuracy: 58.82 %

[2, 600] loss: 1.163 Accuracy: 61.31 %

[2, 800] loss: 1.131 Accuracy: 61.99 %

[2, 1000] loss: 1.115 Accuracy: 62.97 %

[2, 1200] loss: 1.084 Accuracy: 63.12 %

[2, 1400] loss: 1.028 Accuracy: 65.87 %

[3, 200] loss: 0.925 Accuracy: 65.56 %

[3, 400] loss: 0.928 Accuracy: 66.94 %

[3, 600] loss: 0.910 Accuracy: 68.22 %

[3, 800] loss: 0.916 Accuracy: 67.86 %

[3, 1000] loss: 0.902 Accuracy: 69.14 %

[3, 1200] loss: 0.848 Accuracy: 69.07 %

[3, 1400] loss: 0.883 Accuracy: 70.32 %

[4, 200] loss: 0.752 Accuracy: 71.35 %

[4, 400] loss: 0.782 Accuracy: 71.42 %

[4, 600] loss: 0.757 Accuracy: 71.67 %

[4, 800] loss: 0.767 Accuracy: 72.89 %

[4, 1000] loss: 0.767 Accuracy: 73.36 %

[4, 1200] loss: 0.746 Accuracy: 73.61 %

[4, 1400] loss: 0.764 Accuracy: 73.88 %

[5, 200] loss: 0.647 Accuracy: 74.12 %

[5, 400] loss: 0.627 Accuracy: 74.62 %

[5, 600] loss: 0.618 Accuracy: 74.07 %

[5, 800] loss: 0.663 Accuracy: 75.19 %

[5, 1000] loss: 0.661 Accuracy: 74.28 %

[5, 1200] loss: 0.649 Accuracy: 76.79 %

[5, 1400] loss: 0.650 Accuracy: 74.59 %

[6, 200] loss: 0.556 Accuracy: 77.10 %

[6, 400] loss: 0.543 Accuracy: 75.73 %

[6, 600] loss: 0.528 Accuracy: 76.50 %

[6, 800] loss: 0.552 Accuracy: 76.03 %

[6, 1000] loss: 0.568 Accuracy: 77.13 %

[6, 1200] loss: 0.580 Accuracy: 76.73 %

[6, 1400] loss: 0.563 Accuracy: 76.20 %

[7, 200] loss: 0.475 Accuracy: 77.29 %

[7, 400] loss: 0.470 Accuracy: 77.17 %

[7, 600] loss: 0.503 Accuracy: 77.16 %

[7, 800] loss: 0.484 Accuracy: 77.60 %

[7, 1000] loss: 0.485 Accuracy: 78.23 %

[7, 1200] loss: 0.491 Accuracy: 78.32 %

[7, 1400] loss: 0.480 Accuracy: 78.08 %

[8, 200] loss: 0.386 Accuracy: 78.60 %

[8, 400] loss: 0.413 Accuracy: 78.82 %

[8, 600] loss: 0.401 Accuracy: 78.03 %

[8, 800] loss: 0.421 Accuracy: 78.75 %

[8, 1000] loss: 0.450 Accuracy: 77.68 %

[8, 1200] loss: 0.439 Accuracy: 78.55 %

[8, 1400] loss: 0.420 Accuracy: 79.05 %

[9, 200] loss: 0.315 Accuracy: 79.21 %

[9, 400] loss: 0.366 Accuracy: 78.72 %

[9, 600] loss: 0.374 Accuracy: 79.63 %

[9, 800] loss: 0.378 Accuracy: 79.75 %

[9, 1000] loss: 0.371 Accuracy: 78.52 %

[9, 1200] loss: 0.377 Accuracy: 79.65 %

[9, 1400] loss: 0.396 Accuracy: 79.51 %

[10, 200] loss: 0.306 Accuracy: 79.25 %

[10, 400] loss: 0.320 Accuracy: 79.06 %

[10, 600] loss: 0.341 Accuracy: 79.20 %

[10, 800] loss: 0.340 Accuracy: 79.21 %

[10, 1000] loss: 0.327 Accuracy: 78.73 %

[10, 1200] loss: 0.334 Accuracy: 79.49 %

[10, 1400] loss: 0.335 Accuracy: 79.33 %

[11, 200] loss: 0.253 Accuracy: 78.67 %

[11, 400] loss: 0.267 Accuracy: 79.47 %

[11, 600] loss: 0.278 Accuracy: 79.17 %

[11, 800] loss: 0.294 Accuracy: 80.12 %

[11, 1000] loss: 0.311 Accuracy: 79.86 %

[11, 1200] loss: 0.299 Accuracy: 80.65 %

[11, 1400] loss: 0.297 Accuracy: 80.39 %

[12, 200] loss: 0.226 Accuracy: 80.51 %

[12, 400] loss: 0.237 Accuracy: 80.22 %

[12, 600] loss: 0.253 Accuracy: 79.49 %

[12, 800] loss: 0.261 Accuracy: 79.71 %

[12, 1000] loss: 0.252 Accuracy: 80.68 %

[12, 1200] loss: 0.272 Accuracy: 80.75 %

[12, 1400] loss: 0.281 Accuracy: 80.64 %

[13, 200] loss: 0.201 Accuracy: 80.44 %

[13, 400] loss: 0.234 Accuracy: 80.49 %

[13, 600] loss: 0.220 Accuracy: 79.90 %

[13, 800] loss: 0.221 Accuracy: 80.00 %

[13, 1000] loss: 0.236 Accuracy: 80.46 %

[13, 1200] loss: 0.216 Accuracy: 80.66 %

[13, 1400] loss: 0.239 Accuracy: 80.45 %

[14, 200] loss: 0.168 Accuracy: 80.75 %

[14, 400] loss: 0.203 Accuracy: 77.86 %

[14, 600] loss: 0.231 Accuracy: 80.50 %

[14, 800] loss: 0.192 Accuracy: 80.81 %

[14, 1000] loss: 0.195 Accuracy: 80.73 %

[14, 1200] loss: 0.209 Accuracy: 81.04 %

[14, 1400] loss: 0.207 Accuracy: 80.03 %

[15, 200] loss: 0.142 Accuracy: 81.15 %

[15, 400] loss: 0.169 Accuracy: 80.88 %

[15, 600] loss: 0.174 Accuracy: 80.52 %

[15, 800] loss: 0.167 Accuracy: 80.88 %

[15, 1000] loss: 0.208 Accuracy: 80.02 %

[15, 1200] loss: 0.181 Accuracy: 81.65 %

[15, 1400] loss: 0.198 Accuracy: 81.14 %

[16, 200] loss: 0.125 Accuracy: 81.02 %

[16, 400] loss: 0.142 Accuracy: 81.41 %

[16, 600] loss: 0.172 Accuracy: 80.92 %

[16, 800] loss: 0.157 Accuracy: 82.58 %

[16, 1000] loss: 0.140 Accuracy: 81.21 %

[16, 1200] loss: 0.179 Accuracy: 80.29 %

[16, 1400] loss: 0.185 Accuracy: 81.94 %

[17, 200] loss: 0.125 Accuracy: 80.94 %

[17, 400] loss: 0.155 Accuracy: 80.92 %

[17, 600] loss: 0.140 Accuracy: 81.45 %

[17, 800] loss: 0.169 Accuracy: 81.80 %

[17, 1000] loss: 0.162 Accuracy: 81.31 %

[17, 1200] loss: 0.141 Accuracy: 81.42 %

[17, 1400] loss: 0.185 Accuracy: 80.21 %

[18, 200] loss: 0.140 Accuracy: 81.76 %

[18, 400] loss: 0.129 Accuracy: 80.78 %

[18, 600] loss: 0.135 Accuracy: 81.52 %

[18, 800] loss: 0.139 Accuracy: 82.01 %

[18, 1000] loss: 0.149 Accuracy: 81.43 %

[18, 1200] loss: 0.134 Accuracy: 81.39 %

[18, 1400] loss: 0.162 Accuracy: 80.56 %

[19, 200] loss: 0.102 Accuracy: 82.01 %

[19, 400] loss: 0.100 Accuracy: 80.91 %

[19, 600] loss: 0.148 Accuracy: 80.74 %

[19, 800] loss: 0.115 Accuracy: 82.43 %

[19, 1000] loss: 0.110 Accuracy: 81.74 %

[19, 1200] loss: 0.115 Accuracy: 80.78 %

[19, 1400] loss: 0.142 Accuracy: 81.88 %

[20, 200] loss: 0.109 Accuracy: 82.20 %

[20, 400] loss: 0.112 Accuracy: 81.65 %

[20, 600] loss: 0.139 Accuracy: 81.70 %

[20, 800] loss: 0.109 Accuracy: 82.88 %

[20, 1000] loss: 0.116 Accuracy: 82.73 %

[20, 1200] loss: 0.112 Accuracy: 82.07 %

[20, 1400] loss: 0.123 Accuracy: 82.28 %

Finished Training

Accuracy: 82.00 %

GroundTruth: cat ship ship plane

Predicted: cat ship ship plane

Accuracy of plane : 88 %

Accuracy of car : 91 %

Accuracy of bird : 75 %

Accuracy of cat : 55 %

Accuracy of deer : 84 %

Accuracy of dog : 70 %

Accuracy of frog : 84 %

Accuracy of horse : 81 %

Accuracy of ship : 92 %

Accuracy of truck : 87 %

elapsed time: 6227.035 [sec]

(keras-gpu) C:\Users\user\pytorch\cifar10>pip install torchsummary

Collecting torchsummary

Downloading https://files.pythonhosted.org/packages/7d/18/1474d06f721b86e6a9b9d7392ad68bed711a02f3b61ac43f13c719db50a6/torchsummary-1.5.1-py3-none-any.whl

Installing collected packages: torchsummary

Successfully installed torchsummary-1.5.1

(keras-gpu) C:\Users\user\pytorch\cifar10>python pytorch_cifar10_.py

Files already downloaded and verified

Files already downloaded and verified

cuda:0

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 64, 32, 32] 1,792

BatchNorm2d-2 [-1, 64, 32, 32] 128

ReLU-3 [-1, 64, 32, 32] 0

Conv2d-4 [-1, 64, 32, 32] 36,928

BatchNorm2d-5 [-1, 64, 32, 32] 128

ReLU-6 [-1, 64, 32, 32] 0

MaxPool2d-7 [-1, 64, 16, 16] 0

Conv2d-8 [-1, 128, 16, 16] 73,856

BatchNorm2d-9 [-1, 128, 16, 16] 256

ReLU-10 [-1, 128, 16, 16] 0

Conv2d-11 [-1, 128, 16, 16] 147,584

BatchNorm2d-12 [-1, 128, 16, 16] 256

ReLU-13 [-1, 128, 16, 16] 0

MaxPool2d-14 [-1, 128, 8, 8] 0

Conv2d-15 [-1, 256, 8, 8] 295,168

BatchNorm2d-16 [-1, 256, 8, 8] 512

ReLU-17 [-1, 256, 8, 8] 0

Conv2d-18 [-1, 256, 8, 8] 590,080

BatchNorm2d-19 [-1, 256, 8, 8] 512

ReLU-20 [-1, 256, 8, 8] 0

MaxPool2d-21 [-1, 256, 4, 4] 0

Conv2d-22 [-1, 512, 4, 4] 1,180,160

BatchNorm2d-23 [-1, 512, 4, 4] 1,024

ReLU-24 [-1, 512, 4, 4] 0

Conv2d-25 [-1, 512, 4, 4] 2,359,808

BatchNorm2d-26 [-1, 512, 4, 4] 1,024

ReLU-27 [-1, 512, 4, 4] 0

MaxPool2d-28 [-1, 512, 2, 2] 0

Conv2d-29 [-1, 512, 2, 2] 2,359,808

BatchNorm2d-30 [-1, 512, 2, 2] 1,024

ReLU-31 [-1, 512, 2, 2] 0

Conv2d-32 [-1, 512, 2, 2] 2,359,808

BatchNorm2d-33 [-1, 512, 2, 2] 1,024

ReLU-34 [-1, 512, 2, 2] 0

MaxPool2d-35 [-1, 512, 1, 1] 0

Linear-36 [-1, 512] 262,656

ReLU-37 [-1, 512] 0

Dropout-38 [-1, 512] 0

Linear-39 [-1, 32] 16,416

ReLU-40 [-1, 32] 0

Dropout-41 [-1, 32] 0

Linear-42 [-1, 10] 330

================================================================

Total params: 9,690,282

Trainable params: 9,690,282

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.01

Forward/backward pass size (MB): 5.97

Params size (MB): 36.97

Estimated Total Size (MB): 42.95

----------------------------------------------------------------

(keras-gpu) C:\Users\user\pytorch\cifar10>python pytorch_cifar10_.py

Files already downloaded and verified

Files already downloaded and verified

cuda:0

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 64, 32, 32] 1,792

BatchNorm2d-2 [-1, 64, 32, 32] 128

ReLU-3 [-1, 64, 32, 32] 0

Conv2d-4 [-1, 64, 32, 32] 36,928

BatchNorm2d-5 [-1, 64, 32, 32] 128

ReLU-6 [-1, 64, 32, 32] 0

MaxPool2d-7 [-1, 64, 16, 16] 0

Conv2d-8 [-1, 128, 16, 16] 73,856

BatchNorm2d-9 [-1, 128, 16, 16] 256

ReLU-10 [-1, 128, 16, 16] 0

Conv2d-11 [-1, 128, 16, 16] 147,584

BatchNorm2d-12 [-1, 128, 16, 16] 256

ReLU-13 [-1, 128, 16, 16] 0

MaxPool2d-14 [-1, 128, 8, 8] 0

Conv2d-15 [-1, 256, 8, 8] 295,168

BatchNorm2d-16 [-1, 256, 8, 8] 512

ReLU-17 [-1, 256, 8, 8] 0

Conv2d-18 [-1, 256, 8, 8] 590,080

BatchNorm2d-19 [-1, 256, 8, 8] 512

ReLU-20 [-1, 256, 8, 8] 0

MaxPool2d-21 [-1, 256, 4, 4] 0

Conv2d-22 [-1, 512, 4, 4] 1,180,160

BatchNorm2d-23 [-1, 512, 4, 4] 1,024

ReLU-24 [-1, 512, 4, 4] 0

Conv2d-25 [-1, 512, 4, 4] 2,359,808

BatchNorm2d-26 [-1, 512, 4, 4] 1,024

ReLU-27 [-1, 512, 4, 4] 0

MaxPool2d-28 [-1, 512, 2, 2] 0

Conv2d-29 [-1, 512, 2, 2] 2,359,808

BatchNorm2d-30 [-1, 512, 2, 2] 1,024

ReLU-31 [-1, 512, 2, 2] 0

Conv2d-32 [-1, 512, 2, 2] 2,359,808

BatchNorm2d-33 [-1, 512, 2, 2] 1,024

ReLU-34 [-1, 512, 2, 2] 0

MaxPool2d-35 [-1, 512, 1, 1] 0

Linear-36 [-1, 4096] 2,101,248

ReLU-37 [-1, 4096] 0

Dropout-38 [-1, 4096] 0

Linear-39 [-1, 4096] 16,781,312

ReLU-40 [-1, 4096] 0

Dropout-41 [-1, 4096] 0

Linear-42 [-1, 10] 40,970

================================================================

Total params: 28,334,410

Trainable params: 28,334,410

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.01

Forward/backward pass size (MB): 6.14

Params size (MB): 108.09

Estimated Total Size (MB): 114.24

----------------------------------------------------------------

[1, 200] loss: 1.935 Accuracy: 37.73 %

[1, 400] loss: 1.564 Accuracy: 46.54 %

[1, 600] loss: 1.355 Accuracy: 51.21 %

[1, 800] loss: 1.243 Accuracy: 57.66 %

[1, 1000] loss: 1.149 Accuracy: 61.24 %

[1, 1200] loss: 1.081 Accuracy: 64.30 %

[1, 1400] loss: 1.037 Accuracy: 65.43 %

[2, 200] loss: 0.876 Accuracy: 68.62 %

[2, 400] loss: 0.840 Accuracy: 68.47 %

[2, 600] loss: 0.819 Accuracy: 70.76 %

[2, 800] loss: 0.812 Accuracy: 70.56 %

[2, 1000] loss: 0.776 Accuracy: 72.58 %

[2, 1200] loss: 0.772 Accuracy: 72.98 %

[2, 1400] loss: 0.737 Accuracy: 73.90 %

[3, 200] loss: 0.590 Accuracy: 74.99 %

[3, 400] loss: 0.589 Accuracy: 74.98 %

[3, 600] loss: 0.575 Accuracy: 76.83 %

[3, 800] loss: 0.603 Accuracy: 76.16 %

[3, 1000] loss: 0.586 Accuracy: 75.61 %

[3, 1200] loss: 0.594 Accuracy: 77.48 %

[3, 1400] loss: 0.575 Accuracy: 77.80 %

[4, 200] loss: 0.421 Accuracy: 76.95 %

[4, 400] loss: 0.474 Accuracy: 79.14 %

[4, 600] loss: 0.450 Accuracy: 78.46 %

[4, 800] loss: 0.458 Accuracy: 78.70 %

[4, 1000] loss: 0.436 Accuracy: 78.99 %

[4, 1200] loss: 0.460 Accuracy: 78.49 %

[4, 1400] loss: 0.439 Accuracy: 79.29 %

[5, 200] loss: 0.324 Accuracy: 80.00 %

[5, 400] loss: 0.326 Accuracy: 79.82 %

[5, 600] loss: 0.340 Accuracy: 79.58 %

[5, 800] loss: 0.355 Accuracy: 79.85 %

[5, 1000] loss: 0.353 Accuracy: 78.64 %

[5, 1200] loss: 0.358 Accuracy: 79.53 %

[5, 1400] loss: 0.375 Accuracy: 80.18 %

[6, 200] loss: 0.197 Accuracy: 80.41 %

[6, 400] loss: 0.240 Accuracy: 79.51 %

[6, 600] loss: 0.253 Accuracy: 80.12 %

[6, 800] loss: 0.257 Accuracy: 79.99 %

[6, 1000] loss: 0.280 Accuracy: 80.19 %

[6, 1200] loss: 0.290 Accuracy: 80.65 %

[6, 1400] loss: 0.279 Accuracy: 80.54 %

[7, 200] loss: 0.163 Accuracy: 80.61 %

[7, 400] loss: 0.159 Accuracy: 80.54 %

[7, 600] loss: 0.214 Accuracy: 80.71 %

[7, 800] loss: 0.207 Accuracy: 80.06 %

[7, 1000] loss: 0.230 Accuracy: 80.94 %

[7, 1200] loss: 0.202 Accuracy: 80.87 %

[7, 1400] loss: 0.229 Accuracy: 80.88 %

[8, 200] loss: 0.111 Accuracy: 81.43 %

[8, 400] loss: 0.117 Accuracy: 80.23 %

[8, 600] loss: 0.141 Accuracy: 81.27 %

[8, 800] loss: 0.144 Accuracy: 80.94 %

[8, 1000] loss: 0.162 Accuracy: 81.23 %

[8, 1200] loss: 0.186 Accuracy: 80.36 %

[8, 1400] loss: 0.172 Accuracy: 81.31 %

[9, 200] loss: 0.115 Accuracy: 82.08 %

[9, 400] loss: 0.093 Accuracy: 81.80 %

[9, 600] loss: 0.110 Accuracy: 80.76 %

[9, 800] loss: 0.124 Accuracy: 80.36 %

[9, 1000] loss: 0.121 Accuracy: 81.47 %

[9, 1200] loss: 0.127 Accuracy: 82.10 %

[9, 1400] loss: 0.126 Accuracy: 82.00 %

[10, 200] loss: 0.069 Accuracy: 81.54 %

[10, 400] loss: 0.076 Accuracy: 81.65 %

[10, 600] loss: 0.086 Accuracy: 81.65 %

[10, 800] loss: 0.096 Accuracy: 81.21 %

[10, 1000] loss: 0.097 Accuracy: 81.36 %

[10, 1200] loss: 0.125 Accuracy: 81.14 %

[10, 1400] loss: 0.115 Accuracy: 81.67 %

[11, 200] loss: 0.065 Accuracy: 82.97 %

[11, 400] loss: 0.072 Accuracy: 82.64 %

[11, 600] loss: 0.068 Accuracy: 81.99 %

[11, 800] loss: 0.078 Accuracy: 82.35 %

[11, 1000] loss: 0.092 Accuracy: 80.93 %

[11, 1200] loss: 0.097 Accuracy: 82.51 %

[11, 1400] loss: 0.089 Accuracy: 82.36 %

[12, 200] loss: 0.052 Accuracy: 82.49 %

[12, 400] loss: 0.044 Accuracy: 82.01 %

[12, 600] loss: 0.059 Accuracy: 82.71 %

[12, 800] loss: 0.060 Accuracy: 82.39 %

[12, 1000] loss: 0.073 Accuracy: 82.73 %

[12, 1200] loss: 0.057 Accuracy: 82.53 %

[12, 1400] loss: 0.067 Accuracy: 82.27 %

[13, 200] loss: 0.050 Accuracy: 82.59 %

[13, 400] loss: 0.051 Accuracy: 82.51 %

[13, 600] loss: 0.046 Accuracy: 83.08 %

[13, 800] loss: 0.041 Accuracy: 82.59 %

[13, 1000] loss: 0.057 Accuracy: 82.74 %

[13, 1200] loss: 0.072 Accuracy: 82.47 %

[13, 1400] loss: 0.055 Accuracy: 82.31 %

[14, 200] loss: 0.046 Accuracy: 82.98 %

[14, 400] loss: 0.048 Accuracy: 82.69 %

[14, 600] loss: 0.036 Accuracy: 82.45 %

[14, 800] loss: 0.066 Accuracy: 82.31 %

[14, 1000] loss: 0.047 Accuracy: 82.56 %

[14, 1200] loss: 0.057 Accuracy: 82.21 %

[14, 1400] loss: 0.052 Accuracy: 81.95 %

[15, 200] loss: 0.045 Accuracy: 82.63 %

[15, 400] loss: 0.042 Accuracy: 82.32 %

[15, 600] loss: 0.033 Accuracy: 82.95 %

[15, 800] loss: 0.045 Accuracy: 82.65 %

[15, 1000] loss: 0.050 Accuracy: 82.56 %

[15, 1200] loss: 0.051 Accuracy: 81.83 %

[15, 1400] loss: 0.056 Accuracy: 82.11 %

[16, 200] loss: 0.029 Accuracy: 82.95 %

[16, 400] loss: 0.024 Accuracy: 82.57 %

[16, 600] loss: 0.036 Accuracy: 81.98 %

[16, 800] loss: 0.036 Accuracy: 82.66 %

[16, 1000] loss: 0.042 Accuracy: 82.54 %

[16, 1200] loss: 0.032 Accuracy: 82.41 %

[16, 1400] loss: 0.041 Accuracy: 82.57 %

[17, 200] loss: 0.028 Accuracy: 82.20 %

[17, 400] loss: 0.027 Accuracy: 83.26 %

[17, 600] loss: 0.025 Accuracy: 83.30 %

[17, 800] loss: 0.027 Accuracy: 82.94 %

[17, 1000] loss: 0.037 Accuracy: 81.51 %

[17, 1200] loss: 0.031 Accuracy: 82.83 %

[17, 1400] loss: 0.034 Accuracy: 82.57 %

[18, 200] loss: 0.030 Accuracy: 82.78 %

[18, 400] loss: 0.024 Accuracy: 83.46 %

[18, 600] loss: 0.020 Accuracy: 83.02 %

[18, 800] loss: 0.016 Accuracy: 83.47 %

[18, 1000] loss: 0.030 Accuracy: 82.85 %

[18, 1200] loss: 0.031 Accuracy: 82.56 %

[18, 1400] loss: 0.040 Accuracy: 82.16 %

[19, 200] loss: 0.023 Accuracy: 82.91 %

[19, 400] loss: 0.015 Accuracy: 82.99 %

[19, 600] loss: 0.017 Accuracy: 83.53 %

[19, 800] loss: 0.025 Accuracy: 82.35 %

[19, 1000] loss: 0.033 Accuracy: 82.55 %

[19, 1200] loss: 0.040 Accuracy: 82.92 %

[19, 1400] loss: 0.029 Accuracy: 82.75 %

[20, 200] loss: 0.020 Accuracy: 82.80 %

[20, 400] loss: 0.016 Accuracy: 83.21 %

[20, 600] loss: 0.017 Accuracy: 82.76 %

[20, 800] loss: 0.017 Accuracy: 82.93 %

[20, 1000] loss: 0.018 Accuracy: 83.16 %

[20, 1200] loss: 0.024 Accuracy: 83.23 %

[20, 1400] loss: 0.023 Accuracy: 82.91 %

Finished Training

Accuracy: 82.15 %

GroundTruth: cat ship ship plane

Predicted: cat ship ship plane

Accuracy of plane : 84 %

Accuracy of car : 91 %

Accuracy of bird : 69 %

Accuracy of cat : 59 %

Accuracy of deer : 81 %

Accuracy of dog : 76 %

Accuracy of frog : 90 %

Accuracy of horse : 86 %

Accuracy of ship : 94 %

Accuracy of truck : 88 %

elapsed time: 2177.621 [sec]
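The "Accuracy of ..." lines above report accuracy per class. As a rough illustration of how such a tally can be made, here is a minimal sketch in plain Python. The function name `per_class_accuracy` and the toy labels are made up for illustration; this is not necessarily how the script that produced this log counts its samples.

```python
# Class names in the order they appear in the log above.
CLASSES = ("plane", "car", "bird", "cat", "deer",
           "dog", "frog", "horse", "ship", "truck")


def per_class_accuracy(labels, predictions, num_classes=len(CLASSES)):
    """Return accuracy in percent for each class, or None if the class never occurs."""
    correct = [0] * num_classes
    total = [0] * num_classes
    for label, pred in zip(labels, predictions):
        total[label] += 1          # one more sample of this true class
        if label == pred:
            correct[label] += 1    # the prediction matched the true class
    return [100.0 * c / t if t else None for c, t in zip(correct, total)]


# Toy data, made up for illustration (not taken from the runs above).
labels = [3, 8, 8, 0, 3, 3]
predictions = [3, 8, 8, 0, 5, 3]

for name, acc in zip(CLASSES, per_class_accuracy(labels, predictions)):
    if acc is not None:
        print(f"Accuracy of {name:>5s} : {acc:.0f} %")
```

Classes that do not occur in the input return `None` instead of raising a division-by-zero error, which matters when the tally runs over a small sample.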

(keras-gpu) C:\Users\user\pytorch\cifar10>python pytorch_cifar10_.py

Files already downloaded and verified

Files already downloaded and verified

cuda:0

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 64, 32, 32] 1,792

BatchNorm2d-2 [-1, 64, 32, 32] 128

ReLU-3 [-1, 64, 32, 32] 0

Conv2d-4 [-1, 64, 32, 32] 36,928

BatchNorm2d-5 [-1, 64, 32, 32] 128

ReLU-6 [-1, 64, 32, 32] 0

MaxPool2d-7 [-1, 64, 16, 16] 0

Conv2d-8 [-1, 128, 16, 16] 73,856

BatchNorm2d-9 [-1, 128, 16, 16] 256

ReLU-10 [-1, 128, 16, 16] 0

Conv2d-11 [-1, 128, 16, 16] 147,584

BatchNorm2d-12 [-1, 128, 16, 16] 256

ReLU-13 [-1, 128, 16, 16] 0

MaxPool2d-14 [-1, 128, 8, 8] 0

Conv2d-15 [-1, 256, 8, 8] 295,168

BatchNorm2d-16 [-1, 256, 8, 8] 512

ReLU-17 [-1, 256, 8, 8] 0

Conv2d-18 [-1, 256, 8, 8] 590,080

BatchNorm2d-19 [-1, 256, 8, 8] 512

ReLU-20 [-1, 256, 8, 8] 0

MaxPool2d-21 [-1, 256, 4, 4] 0

Conv2d-22 [-1, 512, 4, 4] 1,180,160

BatchNorm2d-23 [-1, 512, 4, 4] 1,024

ReLU-24 [-1, 512, 4, 4] 0

Conv2d-25 [-1, 512, 4, 4] 2,359,808

BatchNorm2d-26 [-1, 512, 4, 4] 1,024

ReLU-27 [-1, 512, 4, 4] 0

MaxPool2d-28 [-1, 512, 2, 2] 0

Conv2d-29 [-1, 512, 2, 2] 2,359,808

BatchNorm2d-30 [-1, 512, 2, 2] 1,024

ReLU-31 [-1, 512, 2, 2] 0

Conv2d-32 [-1, 512, 2, 2] 2,359,808

BatchNorm2d-33 [-1, 512, 2, 2] 1,024

ReLU-34 [-1, 512, 2, 2] 0

MaxPool2d-35 [-1, 512, 1, 1] 0

Linear-36 [-1, 10] 5,130

================================================================

Total params: 9,416,010

Trainable params: 9,416,010

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.01

Forward/backward pass size (MB): 5.96

Params size (MB): 35.92

Estimated Total Size (MB): 41.89

----------------------------------------------------------------

[1, 200] loss: 1.694 Accuracy: 45.04 %

[1, 400] loss: 1.393 Accuracy: 52.05 %

[1, 600] loss: 1.245 Accuracy: 59.09 %

[1, 800] loss: 1.119 Accuracy: 63.34 %

[1, 1000] loss: 1.034 Accuracy: 67.15 %

[1, 1200] loss: 0.987 Accuracy: 64.93 %

[1, 1400] loss: 0.922 Accuracy: 69.80 %

[2, 200] loss: 0.732 Accuracy: 71.40 %

[2, 400] loss: 0.765 Accuracy: 70.54 %

[2, 600] loss: 0.730 Accuracy: 72.81 %

[2, 800] loss: 0.703 Accuracy: 74.63 %

[2, 1000] loss: 0.726 Accuracy: 74.41 %

[2, 1200] loss: 0.695 Accuracy: 75.12 %

[2, 1400] loss: 0.676 Accuracy: 76.17 %

[3, 200] loss: 0.484 Accuracy: 76.41 %

[3, 400] loss: 0.496 Accuracy: 76.92 %

[3, 600] loss: 0.519 Accuracy: 76.57 %

[3, 800] loss: 0.521 Accuracy: 76.75 %

[3, 1000] loss: 0.523 Accuracy: 77.10 %

[3, 1200] loss: 0.499 Accuracy: 77.52 %

[3, 1400] loss: 0.506 Accuracy: 78.88 %

[4, 200] loss: 0.320 Accuracy: 79.10 %

[4, 400] loss: 0.348 Accuracy: 78.58 %

[4, 600] loss: 0.368 Accuracy: 78.86 %

[4, 800] loss: 0.398 Accuracy: 79.05 %

[4, 1000] loss: 0.387 Accuracy: 79.22 %

[4, 1200] loss: 0.409 Accuracy: 79.54 %

[4, 1400] loss: 0.416 Accuracy: 78.79 %

[5, 200] loss: 0.212 Accuracy: 79.96 %

[5, 400] loss: 0.243 Accuracy: 80.23 %

[5, 600] loss: 0.257 Accuracy: 79.61 %

[5, 800] loss: 0.270 Accuracy: 79.62 %

[5, 1000] loss: 0.297 Accuracy: 79.50 %

[5, 1200] loss: 0.282 Accuracy: 79.86 %

[5, 1400] loss: 0.307 Accuracy: 79.68 %

[6, 200] loss: 0.159 Accuracy: 80.35 %

[6, 400] loss: 0.168 Accuracy: 78.92 %

[6, 600] loss: 0.176 Accuracy: 80.20 %

[6, 800] loss: 0.198 Accuracy: 79.92 %

[6, 1000] loss: 0.203 Accuracy: 79.62 %

[6, 1200] loss: 0.196 Accuracy: 80.84 %

[6, 1400] loss: 0.223 Accuracy: 80.23 %

[7, 200] loss: 0.117 Accuracy: 80.72 %

[7, 400] loss: 0.112 Accuracy: 80.82 %

[7, 600] loss: 0.111 Accuracy: 80.64 %

[7, 800] loss: 0.134 Accuracy: 80.78 %

[7, 1000] loss: 0.137 Accuracy: 79.52 %

[7, 1200] loss: 0.160 Accuracy: 80.54 %

[7, 1400] loss: 0.149 Accuracy: 80.22 %

[8, 200] loss: 0.080 Accuracy: 80.49 %

[8, 400] loss: 0.080 Accuracy: 79.94 %

[8, 600] loss: 0.081 Accuracy: 81.20 %

[8, 800] loss: 0.087 Accuracy: 79.86 %

[8, 1000] loss: 0.107 Accuracy: 79.85 %

[8, 1200] loss: 0.128 Accuracy: 81.13 %

[8, 1400] loss: 0.124 Accuracy: 80.82 %

[9, 200] loss: 0.064 Accuracy: 81.60 %

[9, 400] loss: 0.070 Accuracy: 81.56 %

[9, 600] loss: 0.076 Accuracy: 80.87 %

[9, 800] loss: 0.079 Accuracy: 81.40 %

[9, 1000] loss: 0.109 Accuracy: 79.99 %

[9, 1200] loss: 0.112 Accuracy: 80.14 %

[9, 1400] loss: 0.092 Accuracy: 80.49 %

[10, 200] loss: 0.075 Accuracy: 81.39 %

[10, 400] loss: 0.052 Accuracy: 80.67 %

[10, 600] loss: 0.055 Accuracy: 80.81 %

[10, 800] loss: 0.048 Accuracy: 81.62 %

[10, 1000] loss: 0.050 Accuracy: 81.03 %

[10, 1200] loss: 0.072 Accuracy: 80.54 %

[10, 1400] loss: 0.092 Accuracy: 80.93 %

[11, 200] loss: 0.051 Accuracy: 81.15 %

[11, 400] loss: 0.042 Accuracy: 81.66 %

[11, 600] loss: 0.052 Accuracy: 81.73 %

[11, 800] loss: 0.044 Accuracy: 81.80 %

[11, 1000] loss: 0.045 Accuracy: 81.38 %

[11, 1200] loss: 0.041 Accuracy: 81.75 %

[11, 1400] loss: 0.051 Accuracy: 81.69 %

[12, 200] loss: 0.043 Accuracy: 82.13 %

[12, 400] loss: 0.026 Accuracy: 82.22 %

[12, 600] loss: 0.038 Accuracy: 81.66 %

[12, 800] loss: 0.030 Accuracy: 82.17 %

[12, 1000] loss: 0.040 Accuracy: 81.41 %

[12, 1200] loss: 0.036 Accuracy: 82.57 %

[12, 1400] loss: 0.040 Accuracy: 81.92 %

[13, 200] loss: 0.028 Accuracy: 82.66 %

[13, 400] loss: 0.028 Accuracy: 83.11 %

[13, 600] loss: 0.028 Accuracy: 81.71 %

[13, 800] loss: 0.023 Accuracy: 83.15 %

[13, 1000] loss: 0.018 Accuracy: 82.23 %

[13, 1200] loss: 0.025 Accuracy: 82.45 %

[13, 1400] loss: 0.030 Accuracy: 82.09 %

[14, 200] loss: 0.019 Accuracy: 82.08 %

[14, 400] loss: 0.029 Accuracy: 81.89 %

[14, 600] loss: 0.029 Accuracy: 82.36 %

[14, 800] loss: 0.019 Accuracy: 82.19 %

[14, 1000] loss: 0.020 Accuracy: 81.79 %

[14, 1200] loss: 0.028 Accuracy: 81.67 %

[14, 1400] loss: 0.037 Accuracy: 81.56 %

[15, 200] loss: 0.029 Accuracy: 82.03 %

[15, 400] loss: 0.024 Accuracy: 82.66 %

[15, 600] loss: 0.024 Accuracy: 82.21 %

[15, 800] loss: 0.022 Accuracy: 81.62 %

[15, 1000] loss: 0.024 Accuracy: 82.61 %

[15, 1200] loss: 0.028 Accuracy: 82.36 %

[15, 1400] loss: 0.032 Accuracy: 82.21 %

[16, 200] loss: 0.018 Accuracy: 82.14 %

[16, 400] loss: 0.013 Accuracy: 82.07 %

[16, 600] loss: 0.016 Accuracy: 82.62 %

[16, 800] loss: 0.014 Accuracy: 82.77 %

[16, 1000] loss: 0.017 Accuracy: 82.30 %

[16, 1200] loss: 0.031 Accuracy: 82.07 %

[16, 1400] loss: 0.021 Accuracy: 82.14 %

[17, 200] loss: 0.021 Accuracy: 82.37 %

[17, 400] loss: 0.019 Accuracy: 81.47 %

[17, 600] loss: 0.016 Accuracy: 82.76 %

[17, 800] loss: 0.014 Accuracy: 82.85 %

[17, 1000] loss: 0.012 Accuracy: 82.11 %

[17, 1200] loss: 0.021 Accuracy: 82.27 %

[17, 1400] loss: 0.025 Accuracy: 81.77 %

[18, 200] loss: 0.017 Accuracy: 82.24 %

[18, 400] loss: 0.015 Accuracy: 82.22 %

[18, 600] loss: 0.010 Accuracy: 82.42 %

[18, 800] loss: 0.011 Accuracy: 83.26 %

[18, 1000] loss: 0.014 Accuracy: 82.56 %

[18, 1200] loss: 0.020 Accuracy: 82.53 %

[18, 1400] loss: 0.025 Accuracy: 82.08 %

[19, 200] loss: 0.017 Accuracy: 82.10 %

[19, 400] loss: 0.014 Accuracy: 82.57 %

[19, 600] loss: 0.012 Accuracy: 82.03 %

[19, 800] loss: 0.014 Accuracy: 82.27 %

[19, 1000] loss: 0.010 Accuracy: 82.89 %

[19, 1200] loss: 0.006 Accuracy: 82.79 %

[19, 1400] loss: 0.010 Accuracy: 82.54 %

[20, 200] loss: 0.006 Accuracy: 83.22 %

[20, 400] loss: 0.005 Accuracy: 83.32 %

[20, 600] loss: 0.010 Accuracy: 82.79 %

[20, 800] loss: 0.008 Accuracy: 82.95 %

[20, 1000] loss: 0.007 Accuracy: 83.04 %

[20, 1200] loss: 0.017 Accuracy: 82.34 %

[20, 1400] loss: 0.022 Accuracy: 81.85 %

Finished Training

Accuracy: 82.37 %

GroundTruth: cat ship ship plane

Predicted: cat ship ship plane

Accuracy of plane : 79 %

Accuracy of car : 88 %

Accuracy of bird : 75 %

Accuracy of cat : 65 %

Accuracy of deer : 79 %

Accuracy of dog : 79 %

Accuracy of frog : 81 %

Accuracy of horse : 84 %

Accuracy of ship : 88 %

Accuracy of truck : 91 %

elapsed time: ...
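The "Total params: 9,416,010" figure in the summary of this run can be checked by hand. Each 3x3 Conv2d has `9 * in_channels * out_channels` weights plus `out_channels` biases, each BatchNorm2d has a scale and a shift per channel, and the final Linear maps the 512 x 1 x 1 feature map to 10 classes. The sketch below redoes that arithmetic in plain Python. The names `CFG_10_CONV` and `count_params` are hypothetical; the layer list is read off the summary table, not taken from the model code.

```python
# Conv output channels as listed in the summary table; "M" marks a MaxPool2d.
CFG_10_CONV = [64, 64, "M", 128, 128, "M", 256, 256, "M",
               512, 512, "M", 512, 512, "M"]


def count_params(cfg, in_channels=3, kernel=3, num_classes=10):
    """Count the parameters of a Conv2d + BatchNorm2d + ReLU stack with a Linear head."""
    total = 0
    channels = in_channels
    for item in cfg:
        if item == "M":
            continue  # pooling layers have no parameters
        total += kernel * kernel * channels * item + item  # conv weights + biases
        total += 2 * item                                  # BatchNorm2d scale + shift
        channels = item
    # The feature map is channels x 1 x 1 after the last pooling layer.
    total += channels * num_classes + num_classes          # Linear weights + biases
    return total


print(f"Total params: {count_params(CFG_10_CONV):,}")
```

The first layer alone gives `9 * 3 * 64 + 64 = 1,792`, which matches the Conv2d-1 row, and the Linear layer gives `512 * 10 + 10 = 5,130`.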

(keras-gpu) C:\Users\user\pytorch\cifar10>python pytorch_cifar10_.py

Files already downloaded and verified

Files already downloaded and verified

cuda:0

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 64, 32, 32] 1,792

BatchNorm2d-2 [-1, 64, 32, 32] 128

ReLU-3 [-1, 64, 32, 32] 0

Conv2d-4 [-1, 64, 32, 32] 36,928

BatchNorm2d-5 [-1, 64, 32, 32] 128

ReLU-6 [-1, 64, 32, 32] 0

MaxPool2d-7 [-1, 64, 16, 16] 0

Conv2d-8 [-1, 128, 16, 16] 73,856

BatchNorm2d-9 [-1, 128, 16, 16] 256

ReLU-10 [-1, 128, 16, 16] 0

Conv2d-11 [-1, 128, 16, 16] 147,584

BatchNorm2d-12 [-1, 128, 16, 16] 256

ReLU-13 [-1, 128, 16, 16] 0

MaxPool2d-14 [-1, 128, 8, 8] 0

Conv2d-15 [-1, 256, 8, 8] 295,168

BatchNorm2d-16 [-1, 256, 8, 8] 512

ReLU-17 [-1, 256, 8, 8] 0

Conv2d-18 [-1, 256, 8, 8] 590,080

BatchNorm2d-19 [-1, 256, 8, 8] 512

ReLU-20 [-1, 256, 8, 8] 0

Conv2d-21 [-1, 256, 8, 8] 590,080

BatchNorm2d-22 [-1, 256, 8, 8] 512

ReLU-23 [-1, 256, 8, 8] 0

MaxPool2d-24 [-1, 256, 4, 4] 0

Conv2d-25 [-1, 512, 4, 4] 1,180,160

BatchNorm2d-26 [-1, 512, 4, 4] 1,024

ReLU-27 [-1, 512, 4, 4] 0

Conv2d-28 [-1, 512, 4, 4] 2,359,808

BatchNorm2d-29 [-1, 512, 4, 4] 1,024

ReLU-30 [-1, 512, 4, 4] 0

Conv2d-31 [-1, 512, 4, 4] 2,359,808

BatchNorm2d-32 [-1, 512, 4, 4] 1,024

ReLU-33 [-1, 512, 4, 4] 0

MaxPool2d-34 [-1, 512, 2, 2] 0

Conv2d-35 [-1, 512, 2, 2] 2,359,808

BatchNorm2d-36 [-1, 512, 2, 2] 1,024

ReLU-37 [-1, 512, 2, 2] 0

Conv2d-38 [-1, 512, 2, 2] 2,359,808

BatchNorm2d-39 [-1, 512, 2, 2] 1,024

ReLU-40 [-1, 512, 2, 2] 0

Conv2d-41 [-1, 512, 2, 2] 2,359,808

BatchNorm2d-42 [-1, 512, 2, 2] 1,024

ReLU-43 [-1, 512, 2, 2] 0

MaxPool2d-44 [-1, 512, 1, 1] 0

Linear-45 [-1, 10] 5,130

================================================================

Total params: 14,728,266

Trainable params: 14,728,266

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.01

Forward/backward pass size (MB): 6.57

Params size (MB): 56.18

Estimated Total Size (MB): 62.76

----------------------------------------------------------------

[1, 200] loss: 1.799 Accuracy: 40.17 %

[1, 400] loss: 1.469 Accuracy: 48.53 %

[1, 600] loss: 1.295 Accuracy: 58.68 %

[1, 800] loss: 1.183 Accuracy: 59.18 %

[1, 1000] loss: 1.091 Accuracy: 63.12 %

[1, 1200] loss: 1.016 Accuracy: 67.31 %

[1, 1400] loss: 0.943 Accuracy: 67.08 %

[2, 200] loss: 0.774 Accuracy: 69.65 %

[2, 400] loss: 0.773 Accuracy: 72.26 %

[2, 600] loss: 0.739 Accuracy: 72.27 %

[2, 800] loss: 0.742 Accuracy: 73.00 %

[2, 1000] loss: 0.716 Accuracy: 73.47 %

[2, 1200] loss: 0.730 Accuracy: 75.37 %

[2, 1400] loss: 0.686 Accuracy: 75.08 %

[3, 200] loss: 0.530 Accuracy: 75.96 %

[3, 400] loss: 0.532 Accuracy: 76.04 %

[3, 600] loss: 0.557 Accuracy: 76.72 %

[3, 800] loss: 0.540 Accuracy: 77.04 %

[3, 1000] loss: 0.560 Accuracy: 76.86 %

[3, 1200] loss: 0.541 Accuracy: 78.71 %

[3, 1400] loss: 0.534 Accuracy: 77.87 %

[4, 200] loss: 0.367 Accuracy: 78.03 %

[4, 400] loss: 0.385 Accuracy: 78.14 %

[4, 600] loss: 0.399 Accuracy: 77.48 %

[4, 800] loss: 0.421 Accuracy: 80.07 %

[4, 1000] loss: 0.423 Accuracy: 79.78 %

[4, 1200] loss: 0.419 Accuracy: 77.99 %

[4, 1400] loss: 0.435 Accuracy: 77.94 %

[5, 200] loss: 0.251 Accuracy: 79.96 %

[5, 400] loss: 0.263 Accuracy: 80.21 %

[5, 600] loss: 0.305 Accuracy: 79.52 %

[5, 800] loss: 0.325 Accuracy: 79.28 %

[5, 1000] loss: 0.328 Accuracy: 79.60 %

[5, 1200] loss: 0.310 Accuracy: 80.36 %

[5, 1400] loss: 0.321 Accuracy: 79.35 %

[6, 200] loss: 0.197 Accuracy: 80.52 %

[6, 400] loss: 0.175 Accuracy: 81.41 %

[6, 600] loss: 0.205 Accuracy: 79.99 %

[6, 800] loss: 0.225 Accuracy: 80.46 %

[6, 1000] loss: 0.226 Accuracy: 81.30 %

[6, 1200] loss: 0.268 Accuracy: 80.72 %

[6, 1400] loss: 0.260 Accuracy: 80.55 %

[7, 200] loss: 0.137 Accuracy: 81.70 %

[7, 400] loss: 0.154 Accuracy: 80.79 %

[7, 600] loss: 0.159 Accuracy: 81.09 %

[7, 800] loss: 0.163 Accuracy: 80.51 %

[7, 1000] loss: 0.181 Accuracy: 81.27 %

[7, 1200] loss: 0.188 Accuracy: 81.19 %

[7, 1400] loss: 0.175 Accuracy: 81.94 %

[8, 200] loss: 0.097 Accuracy: 81.12 %

[8, 400] loss: 0.127 Accuracy: 80.91 %

[8, 600] loss: 0.122 Accuracy: 81.28 %

[8, 800] loss: 0.136 Accuracy: 81.21 %

[8, 1000] loss: 0.128 Accuracy: 81.71 %

[8, 1200] loss: 0.144 Accuracy: 81.51 %

[8, 1400] loss: 0.152 Accuracy: 81.56 %

[9, 200] loss: 0.079 Accuracy: 82.23 %

[9, 400] loss: 0.082 Accuracy: 81.96 %

[9, 600] loss: 0.082 Accuracy: 81.99 %

[9, 800] loss: 0.088 Accuracy: 81.79 %

[9, 1000] loss: 0.095 Accuracy: 81.77 %

[9, 1200] loss: 0.105 Accuracy: 82.10 %

[9, 1400] loss: 0.119 Accuracy: 82.12 %

[10, 200] loss: 0.068 Accuracy: 82.85 %

[10, 400] loss: 0.054 Accuracy: 82.08 %

[10, 600] loss: 0.075 Accuracy: 81.81 %

[10, 800] loss: 0.077 Accuracy: 81.26 %

[10, 1000] loss: 0.088 Accuracy: 81.52 %

[10, 1200] loss: 0.092 Accuracy: 82.67 %

[10, 1400] loss: 0.086 Accuracy: 81.33 %

[11, 200] loss: 0.058 Accuracy: 82.81 %

[11, 400] loss: 0.054 Accuracy: 82.56 %

[11, 600] loss: 0.061 Accuracy: 82.24 %

[11, 800] loss: 0.076 Accuracy: 82.50 %

[11, 1000] loss: 0.073 Accuracy: 82.36 %

[11, 1200] loss: 0.058 Accuracy: 82.78 %

[11, 1400] loss: 0.081 Accuracy: 81.89 %

[12, 200] loss: 0.052 Accuracy: 82.33 %

[12, 400] loss: 0.034 Accuracy: 82.74 %

[12, 600] loss: 0.039 Accuracy: 82.18 %

[12, 800] loss: 0.049 Accuracy: 82.51 %

[12, 1000] loss: 0.054 Accuracy: 82.29 %

[12, 1200] loss: 0.051 Accuracy: 83.02 %

[12, 1400] loss: 0.058 Accuracy: 82.70 %

[13, 200] loss: 0.053 Accuracy: 82.71 %

[13, 400] loss: 0.060 Accuracy: 82.67 %

[13, 600] loss: 0.043 Accuracy: 82.62 %

[13, 800] loss: 0.049 Accuracy: 82.43 %

[13, 1000] loss: 0.051 Accuracy: 82.64 %

[13, 1200] loss: 0.064 Accuracy: 82.29 %

[13, 1400] loss: 0.060 Accuracy: 82.71 %

[14, 200] loss: 0.039 Accuracy: 82.99 %

[14, 400] loss: 0.031 Accuracy: 82.65 %

[14, 600] loss: 0.029 Accuracy: 83.03 %

[14, 800] loss: 0.029 Accuracy: 83.56 %

[14, 1000] loss: 0.036 Accuracy: 83.31 %

[14, 1200] loss: 0.035 Accuracy: 83.16 %

[14, 1400] loss: 0.050 Accuracy: 81.60 %

[15, 200] loss: 0.029 Accuracy: 83.00 %

[15, 400] loss: 0.020 Accuracy: 83.58 %

[15, 600] loss: 0.021 Accuracy: 83.13 %

[15, 800] loss: 0.030 Accuracy: 82.34 %

[15, 1000] loss: 0.030 Accuracy: 82.31 %

[15, 1200] loss: 0.028 Accuracy: 82.54 %

[15, 1400] loss: 0.038 Accuracy: 82.27 %

[16, 200] loss: 0.027 Accuracy: 82.22 %

[16, 400] loss: 0.027 Accuracy: 82.48 %

[16, 600] loss: 0.029 Accuracy: 82.61 %

[16, 800] loss: 0.034 Accuracy: 82.41 %

[16, 1000] loss: 0.043 Accuracy: 82.86 %

[16, 1200] loss: 0.034 Accuracy: 83.38 %

[16, 1400] loss: 0.035 Accuracy: 83.11 %

[17, 200] loss: 0.022 Accuracy: 83.67 %

[17, 400] loss: 0.024 Accuracy: 82.72 %

[17, 600] loss: 0.023 Accuracy: 82.82 %

[17, 800] loss: 0.016 Accuracy: 83.68 %

[17, 1000] loss: 0.019 Accuracy: 83.34 %

[17, 1200] loss: 0.025 Accuracy: 82.77 %

[17, 1400] loss: 0.034 Accuracy: 83.47 %

[18, 200] loss: 0.021 Accuracy: 83.69 %

[18, 400] loss: 0.020 Accuracy: 83.29 %

[18, 600] loss: 0.014 Accuracy: 83.81 %

[18, 800] loss: 0.020 Accuracy: 83.58 %

[18, 1000] loss: 0.028 Accuracy: 82.57 %

[18, 1200] loss: 0.029 Accuracy: 82.51 %

[18, 1400] loss: 0.030 Accuracy: 82.37 %

[19, 200] loss: 0.022 Accuracy: 83.79 %

[19, 400] loss: 0.012 Accuracy: 83.80 %

[19, 600] loss: 0.012 Accuracy: 83.77 %

[19, 800] loss: 0.017 Accuracy: 83.51 %

[19, 1000] loss: 0.016 Accuracy: 83.54 %

[19, 1200] loss: 0.011 Accuracy: 83.88 %

[19, 1400] loss: 0.011 Accuracy: 83.56 %

[20, 200] loss: 0.018 Accuracy: 82.86 %

[20, 400] loss: 0.023 Accuracy: 83.04 %

[20, 600] loss: 0.026 Accuracy: 83.26 %

[20, 800] loss: 0.020 Accuracy: 82.70 %

[20, 1000] loss: 0.016 Accuracy: 83.13 %

[20, 1200] loss: 0.021 Accuracy: 82.92 %

[20, 1400] loss: 0.029 Accuracy: 82.57 %

Finished Training

Accuracy: 83.03 %

GroundTruth: cat ship ship plane

Predicted: cat ship ship plane

Accuracy of plane : 87 %

Accuracy of car : 93 %

Accuracy of bird : 76 %

Accuracy of cat : 59 %

Accuracy of deer : 80 %

Accuracy of dog : 77 %

Accuracy of frog : 85 %

Accuracy of horse : 85 %

Accuracy of ship : 92 %

Accuracy of truck : 93 %

elapsed time: 2412.977 [sec]
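The size estimates in the summary of this last run (0.01, 6.57, 56.18 and 62.76 MB) can be reproduced from the layer shapes. The sketch below assumes 4-byte float32 values, 1 MB = 1024^2 bytes, and a factor of two on the layer outputs to cover the backward pass. These assumptions reproduce the reported numbers, but the code is a re-derivation, not torchsummary's own implementation. The names `CFG_13_CONV` and `summary_sizes_mb` are hypothetical.

```python
# Conv output channels as listed in the summary table; "M" marks a MaxPool2d.
CFG_13_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
               512, 512, 512, "M", 512, 512, 512, "M"]
TOTAL_PARAMS = 14_728_266  # "Total params" from the summary above


def summary_sizes_mb(cfg, total_params, in_shape=(3, 32, 32), num_classes=10):
    """Estimate input, forward/backward, and parameter sizes in MB."""
    c, h, w = in_shape
    input_elems = c * h * w
    out_elems = 0
    for item in cfg:
        if item == "M":
            h, w = h // 2, w // 2        # 2x2 pooling halves height and width
            out_elems += c * h * w
        else:
            c = item
            out_elems += 3 * c * h * w   # outputs of Conv2d, BatchNorm2d and ReLU
    out_elems += num_classes             # output of the final Linear layer
    bytes_per_value = 4                  # float32 (assumption)
    mb = 1024 ** 2
    input_mb = input_elems * bytes_per_value / mb
    fwd_bwd_mb = 2 * out_elems * bytes_per_value / mb  # x2 for gradients (assumption)
    params_mb = total_params * bytes_per_value / mb
    return input_mb, fwd_bwd_mb, params_mb


input_mb, fwd_bwd_mb, params_mb = summary_sizes_mb(CFG_13_CONV, TOTAL_PARAMS)
print(f"Input size (MB): {input_mb:.2f}")
print(f"Forward/backward pass size (MB): {fwd_bwd_mb:.2f}")
print(f"Params size (MB): {params_mb:.2f}")
print(f"Estimated Total Size (MB): {input_mb + fwd_bwd_mb + params_mb:.2f}")
```

Most of the estimated memory goes to the parameters, because the 32x32 input keeps the activation maps small.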