In the previous article, we analyzed the retrieved recordset, flattened the hierarchical data, and determined what columns (and values) existed.

This time, let's create the part that writes data to the database table.

Preparing the database

Since I already know the required columns, I create the table with the SQL CREATE TABLE statement.

Launch SQL Server Management Studio and create an empty database. Create a table in that database like this:

CREATE TABLE [dbo].[Transaction_Info](

[paypal_account_id] [nvarchar](256) NULL,

[transaction_id] [nvarchar](256) NOT NULL,

[transaction_event_code] [nvarchar](256) NULL,

[transaction_initiation_date] [datetime] NULL,

[transaction_updated_date] [datetime] NULL,

[transaction_amount_currency_code] [nvarchar](20) NULL,

[transaction_amount_value] [decimal](36, 6) NULL,

[transaction_status] [nvarchar](10) NULL,

[ending_balance_currency_code] [nvarchar](20) NULL,

[ending_balance_value] [decimal](36, 6) NULL,

[available_balance_currency_code] [nvarchar](20) NULL,

[available_balance_value] [decimal](36, 6) NULL,

[protection_eligibility] [nvarchar](10) NULL,

CONSTRAINT [PK_Transaction_Info_1] PRIMARY KEY CLUSTERED

(

[transaction_id] ASC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

) ON [PRIMARY]

Creating the data model

Import Schema

Now that I have the database and tables ready, let's create a data model using Sharperlight Studio.

Launch Studio from the Sharperlight application menu.

Start creating with the New icon.

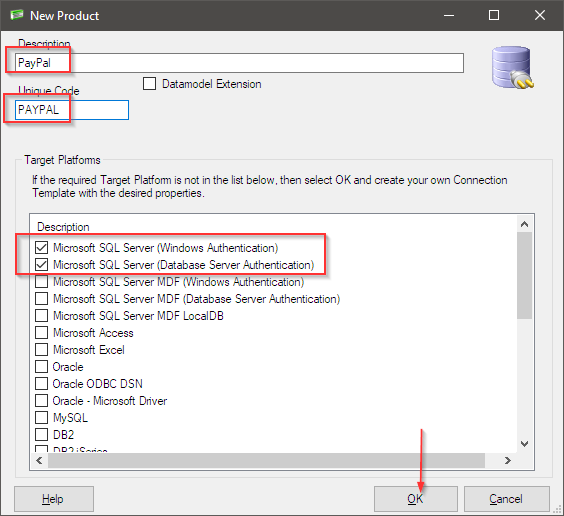

Set the Description Unique Code Target Platforms and click the OK button.

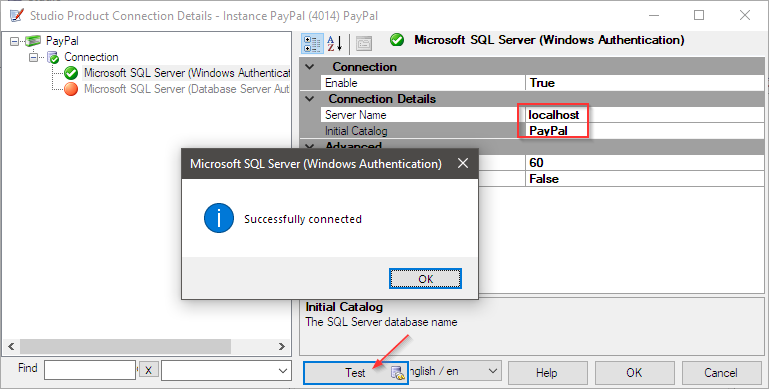

Select a connection type and enter the required connection information. Check the connection with the Test button. This is a connection setting for incorporating database schema information into the data model.

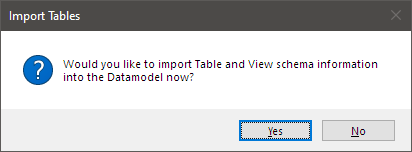

If you press the OK button, a message will appear asking if you want to import the schema information, so press the Yes button to proceed.

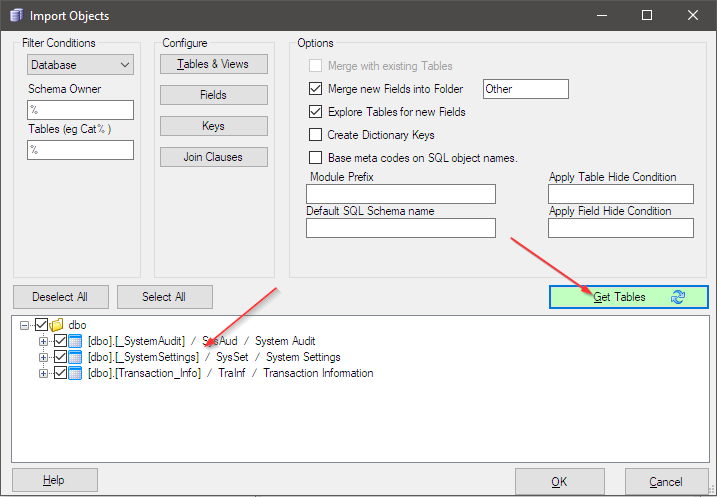

In the Import Objects dialog, the Get Tables button initiates schema import.

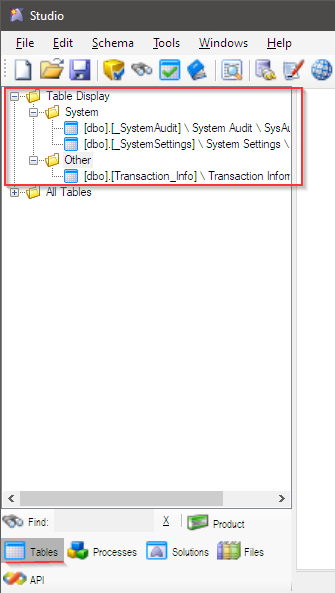

Table and field information is retrieved from the connected database. I found two tables for the system (not used this time).

With all selected, press the OK button to start importing to the data model.

The engine will analyze and classify the table names like this. Table codes and field codes that are used as recognition codes in all Sharperlight applications are also automatically assigned.

You are free to change these codes and descriptions in the data model to whatever values you like during the data model development stage.

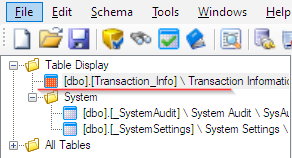

This is what it will look like in the end.

Field Code

Here I am talking about changing field codes.

This data model can be used as a mapping between the acquired dataset and the database table in the process of acquiring the dataset from the API endpoint and writing it to the database like this time. Use field codes for that mapping.

In other words, set the column name of the dataset obtained from the API to the field code. This will result in a mapping like this:

Through the data model, the data set from the API and the database table are connected.

The data model is now ready.

Create scheduler task for writing

Now that the database and data model have been prepared, let's write a JavaScript task that writes the contents of the dataset to the database.

As for the logic, I would like to read the data set from the API saved as a JSON file one record at a time, dynamically create SQL statements using the data model mapping settings, and write the records to the database.

Refer to Get for Dataset from API saved as JSON file.

See Analysis for the part of reading one record at a time.

This time, I will use the task created in the Analysis section. I have already flattened the records by reading each record from the JSON file using the query combination action, so we will use the definition information of the data model to create and execute the SQL statement. I used some of Sharperlight JavaScript functions to create the process. Please excuse the messy code below![]()

Function to get data model definition

function metaDetail_Get(xObjectName){

try{

var filters = { 'TabCod': xObjectName };

var rs = lib_app.query.Execute(_gMetaQueryTemplate_XML, filters);

lib_sys.io.file.WriteAllText(_gWorkFolder + "\\metaDetail.json", rs);

var rsObj = JSON.parse(rs);

if( rsObj.status.errorMessage != '' ) {

throw 'metaDetail_Get(): ' + rsObj.status.errorMessage;

}

return rsObj;

}catch (err){

throw 'metaDetail_Get(): ' + err;

}

}

Function that retrieves the field code from the data model table definition

function get_KeyFields(metaObject){

try{

var keys = [];

for (var wbcol in metaObject.rows) {

if (metaObject.rows[wbcol].IsUni == true){

keys.push(metaObject.rows[wbcol].FieCod.slice(1));

}

}

return keys;

}catch (err){

throw 'get_KeyFields(): ' + err;

}

}

Function that retrieves values from a dataset

function build_SQLValue(value, code, metaObject){

for (var wbcol in metaObject.rows) {

if (metaObject.rows[wbcol].FieCod.toLowerCase().slice(1) == code.toLowerCase()){

switch(metaObject.rows[wbcol].DatTyp){

case 'String' : return "N'" + lib_data.QuoteProtect(value) + "'" ; break;

case 'DateTimeOffset' : return "N'" + value + "'" ; break;

case 'Guid' : return "N'" + lib_data.QuoteProtect(value) + "'" ; break;

case 'Boolean' : return value == false ? 0 : 1; break;

case 'Decimal' : return value == '' ? (metaObject.rows[wbcol].IsNul == true ? " null " : 0) : value; break;

case 'Currency' : return value == '' ? (metaObject.rows[wbcol].IsNul == true ? " null " : 0) : value; break;

case 'Integer' : return value == '' ? (metaObject.rows[wbcol].IsNul == true ? " null " : 0) : value; break;

case 'Int32' : return value == '' ? (metaObject.rows[wbcol].IsNul == true ? " null " : 0) : value; break;

case 'Date':

if (value == ''){

var xDefault = new Date('1900-01-01 00:00:00');

return (metaObject.rows[wbcol].IsNul == true ? " null " : "N'" + xDefault.toISOString() + "'"); break;

}else{

var xDate = new Date(value);

myDateFormat = xDate.toISOString();

if (myDateFormat == '0001-01-01')

return " null ";

else

return "N'" + myDateFormat + "'";

break;

}

case 'Stream': return "N'" + lib_data.QuoteProtect(value) + "'" ; break;

default : return value; break;

}

}

}

return null;

}

Function that build and execute SQL statements

function generate_run_SQLStatement(colObjects, metaObject){

var sqlStatement = '';

var fieldValuePairs = [];

var sqlfields = [];

var sqlvalues = [];

var sqlwhere = "";

var keyFields = get_KeyFields(metaObject);

for (let col=0; col < colObjects.length; col++) {

let sqlValue = build_SQLValue(colObjects[col].value, colObjects[col].code, metaObject);

if (sqlValue != null){

fieldValuePairs.push( "[" + colObjects[col].code + "] = " + sqlValue);

sqlfields.push("[" + colObjects[col].code + "]");

sqlvalues.push(sqlValue);

for (key in keyFields){

if(keyFields[key].toLowerCase() == colObjects[col].code.toLowerCase()){

if(sqlwhere == "")

sqlwhere += " WHERE [" + colObjects[col].code + "] = " + sqlValue;

else

sqlwhere += " and [" + colObjects[col].code + "] = " + sqlValue;

}

}

}

}

console.log("WHERE clause: " + sqlwhere);

var updateStatement = "UPDATE [dbo].[" + 'Transaction_Info' + "] set " + fieldValuePairs.join(" , ") + sqlwhere;

sqlStatement = updateStatement + "\r\n IF @@ROWCOUNT=0 \r\n \

BEGIN \r\n \

insert into [dbo].[" + 'Transaction_Info' + "] ( " + sqlfields.join(",") + " ) values ( " + sqlvalues.join(",") + " ); \r\n \

END ";

console.log("SQL clause: " + sqlStatement);

//exec sql

try{

lib_app.sql.Execute(lib_task.UserSessionIdGet(),'PAYPAL', sqlStatement);

}catch (err){

console.log("Error: " + err);

}

}

The Main Processe

// Get DB Schema Info via Datamodel using Sharperlight query.

// データモデルから対象テーブルの定義情報をSharperlightクエリを利用して取得。

var metaObject = metaDetail_Get('TransInfo');

// Get a record from Query Combination

// クエリの組み合わせアクションから渡される1レコードを取得

var output = lib_task.QueryCombinationValueGet('trans_info');

var rec = JSON.parse(output);

// Get columns for the record

var colObjects = [];

var cols = Object.keys(rec); // 列の取得, Get columns from a record

for (var c in cols){

// 子情報の有無をチェック, Check the existence of child information

if (typeof rec[cols[c]] !== 'object'){ // 通常の列、Normal Column

console.log(cols[c] + " / " + rec[cols[c]]);

let obj = {

code: cols[c],

value: rec[cols[c]]

};

colObjects.push(obj);

}else{ // 子情報、Child Information

var childRec = rec[cols[c]];

var childRecCols = Object.keys(childRec);

for (var cc in childRecCols){

console.log(cols[c] + ': ' + childRecCols[cc] + " / " + childRec[childRecCols[cc]]);

let obj = {

code: cols[c] + '_' + childRecCols[cc],

value: childRec[childRecCols[cc]]

};

colObjects.push(obj);

}

}

}

// Create SQL Statement and Writeback data to database

// SQL文を作成して、データベースに書き込む

generate_run_SQLStatement(colObjects, metaObject);

Paste the code here to complete.

Save it with the OK button, and select Execute from the scheduler's right-click menu to run the process.

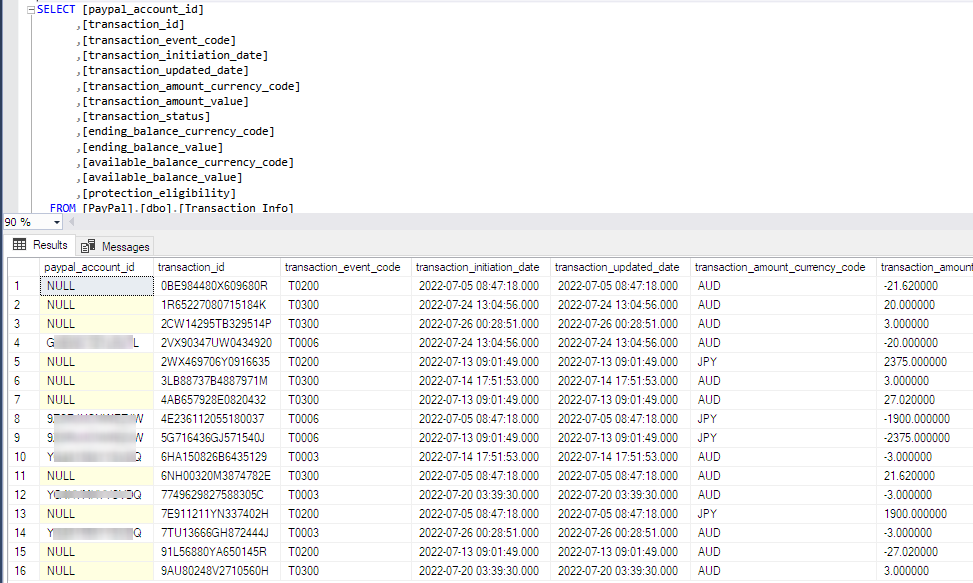

If there are no errors, here you go! This is how the data was written to the database.

Afterword

I've written three articles about automating dataset acquisition from the API, and this time I've finally written data to the database.

As the next step, I will edit the task that retrieves the data from the API a bit to include a delta update feature so that each time the task is run it only retrieves the updates since the last time. It actually looks for the latest update date from the data already stored in the database and passes that date to the start_date parameter when querying the API.

Up to here for this time.

Thank you very much.