Continuing from yesterday's article, I built a prototype, roberta-base-basque-ud-goeswith, from BERnaT-base and UD_Basque-BDT. Let's run it on Google Colaboratory.

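```python
!pip install transformers deplacy
from transformers import pipeline
# "universal-dependencies" is a custom pipeline shipped with the model repository,
# which is why trust_remote_code=True is needed
nlp=pipeline("universal-dependencies","KoichiYasuoka/roberta-base-basque-ud-goeswith",trust_remote_code=True,aggregation_strategy="simple")
doc=nlp("Gaur ama hil zen, gaur goizean ama hil nuen.")
import deplacy
# port=None renders the dependency tree inline in the notebook
deplacy.serve(doc,port=None)
```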

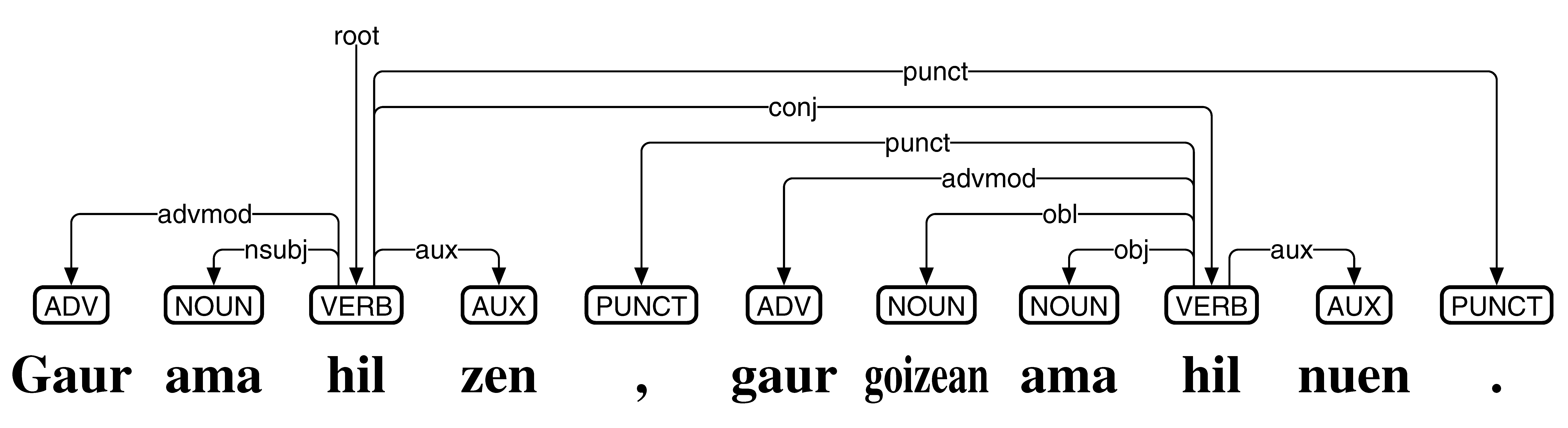

When I (Koichi Yasuoka) ran dependency parsing on "Gaur ama hil zen, gaur goizean ama hil nuen.", I got the following output on my machine:

```
# text = Gaur ama hil zen, gaur goizean ama hil nuen.
1 Gaur _ ADV _ _ 3 advmod _ _
2 ama _ NOUN _ Case=Abs|Definite=Def|Number=Sing 3 nsubj _ _
3 hil _ VERB _ Aspect=Perf|VerbForm=Part 0 root _ _
4 zen _ AUX _ Mood=Ind|Number[abs]=Sing|Person[abs]=3|VerbForm=Fin 3 aux _ SpaceAfter=No
5 , _ PUNCT _ _ 9 punct _ _
6 gaur _ ADV _ _ 9 advmod _ _
7 goizean _ NOUN _ Animacy=Inan|Case=Ine|Definite=Def|Number=Sing 9 obl _ _
8 ama _ NOUN _ Case=Abs|Definite=Def|Number=Sing 9 obj _ _
9 hil _ VERB _ Aspect=Perf|VerbForm=Part 3 conj _ _
10 nuen _ AUX _ Mood=Ind|Number[abs]=Sing|Number[erg]=Sing|Person[abs]=3|Person[erg]=1|VerbForm=Fin 9 aux _ SpaceAfter=No
11 . _ PUNCT _ _ 3 punct _ SpaceAfter=No
```
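The output above is CoNLL-U: one word per line with ten tab-separated fields (ID, FORM, LEMMA, UPOS, XPOS, FEATS, HEAD, DEPREL, DEPS, MISC). The evaluation script below writes `nlp(t)` straight to a file, so the pipeline evidently returns this as a string. As a minimal sketch, the plain-Python reader below pulls out each word's head and relation; `Token` and `parse_conllu` are hypothetical names, not part of transformers or deplacy, and the parse above is typed in as space-separated rows and joined with tabs.

```python
from typing import NamedTuple


# Hypothetical minimal reader for one CoNLL-U sentence (not a library API).
class Token(NamedTuple):
    id: int
    form: str
    upos: str
    feats: dict[str, str]
    head: int
    deprel: str


def parse_conllu(text: str) -> list[Token]:
    """Return the word lines of one CoNLL-U sentence as Token tuples."""
    tokens = []
    for line in text.split("\n"):
        if not line.strip() or line.startswith("#"):
            continue  # skip blank lines and comments such as "# text = ..."
        cols = line.split("\t")
        if not cols[0].isdigit():
            continue  # skip multiword ranges ("3-4") and empty nodes ("5.1")
        feats = {}
        if cols[5] != "_":
            for pair in cols[5].split("|"):
                key, value = pair.split("=", 1)
                feats[key] = value
        tokens.append(Token(int(cols[0]), cols[1], cols[3], feats, int(cols[6]), cols[7]))
    return tokens


# The parse shown above, typed in with spaces and converted to tab-separated lines.
ROWS = """\
1 Gaur _ ADV _ _ 3 advmod _ _
2 ama _ NOUN _ Case=Abs|Definite=Def|Number=Sing 3 nsubj _ _
3 hil _ VERB _ Aspect=Perf|VerbForm=Part 0 root _ _
4 zen _ AUX _ Mood=Ind|Number[abs]=Sing|Person[abs]=3|VerbForm=Fin 3 aux _ SpaceAfter=No
5 , _ PUNCT _ _ 9 punct _ _
6 gaur _ ADV _ _ 9 advmod _ _
7 goizean _ NOUN _ Animacy=Inan|Case=Ine|Definite=Def|Number=Sing 9 obl _ _
8 ama _ NOUN _ Case=Abs|Definite=Def|Number=Sing 9 obj _ _
9 hil _ VERB _ Aspect=Perf|VerbForm=Part 3 conj _ _
10 nuen _ AUX _ Mood=Ind|Number[abs]=Sing|Number[erg]=Sing|Person[abs]=3|Person[erg]=1|VerbForm=Fin 9 aux _ SpaceAfter=No
11 . _ PUNCT _ _ 3 punct _ SpaceAfter=No"""
sample = "# text = Gaur ama hil zen, gaur goizean ama hil nuen.\n" + "\n".join(
    "\t".join(row.split()) for row in ROWS.split("\n")
)

tokens = parse_conllu(sample)
forms = {t.id: t.form for t in tokens}
for t in tokens:
    # HEAD 0 means the word attaches to the virtual root
    print(f"{t.id}\t{t.form}\t--{t.deprel}-->\t{forms.get(t.head, 'ROOT')}")

# Person agreement features of the two auxiliaries
for t in tokens:
    if t.upos == "AUX":
        print(t.form, {k: v for k, v in t.feats.items() if k.startswith("Person")})
```

The last loop makes the zen/nuen contrast visible in the FEATS column: zen carries only absolutive agreement (Person[abs]=3), while nuen also carries Person[erg]=1.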

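Personally I have some reservations about parts of this analysis, but it does seem to read the sentence properly. I also built prototypes of roberta-small-basque-ud-goeswith and roberta-large-basque-ud-goeswith, so let's compare the accuracy of all three on Google Colaboratory.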

```python
!pip install transformers
models=[
    "KoichiYasuoka/roberta-small-basque-ud-goeswith",
    "KoichiYasuoka/roberta-base-basque-ud-goeswith",
    "KoichiYasuoka/roberta-large-basque-ud-goeswith"
]
import os,sys,subprocess
# fetch UD_Basque-BDT and copy each split to train/dev/test.conllu,
# removing the first U+0092 control character on each line
url="https://github.com/UniversalDependencies/UD_Basque-BDT"
d=os.path.basename(url)
!test -d {d} || git clone --depth=1 {url}
os.system("for F in train dev test ; do sed 's/\u0092//' "+d+"/*-$F.conllu > $F.conllu ; done")
# fetch the CoNLL 2018 Shared Task evaluation script
url="https://universaldependencies.org/conll18/conll18_ud_eval.py"
c=os.path.basename(url)
!test -f {c} || curl -LO {url}
from transformers import pipeline
for mdl in models:
    nlp=pipeline("universal-dependencies",mdl,trust_remote_code=True,aggregation_strategy="simple")
    for f in ["dev.conllu","test.conllu"]:
        # re-parse the raw sentence of every "# text =" line with the model
        with open(f,"r",encoding="utf-8") as r:
            s=[t[8:].strip() for t in r if t.startswith("# text =")]
        with open("result-"+f,"w",encoding="utf-8") as w:
            for t in s:
                w.write(nlp(t))
    # score the model output against the gold treebank and save the report
    os.system(f"mkdir -p result/{mdl}")
    with open(f"result/{mdl}/result.txt","w",encoding="utf-8") as w:
        for f in ["dev.conllu","test.conllu"]:
            p=subprocess.run([sys.executable,c,"-v",f,"result-"+f],encoding="utf-8",stdout=subprocess.PIPE,stderr=subprocess.STDOUT)
            print(f"\n*** {mdl} ({f})",p.stdout,sep="\n",file=w)
!( cd result && cat `find {" ".join(models)} -name result.txt` )
```
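The preprocessing in the script above shells out to sed. As an alternative (not what the script itself does), the two text steps, removing U+0092 and collecting the "# text =" sentences, can be sketched in pure Python. sed's `s` command without the `g` flag removes only the first match on each line, and the sketch mirrors that. `strip_u0092`, `extract_texts` and `prepare_split` are hypothetical helper names.

```python
from pathlib import Path


def strip_u0092(text: str) -> str:
    """Remove the first U+0092 on each line, like the sed command (no g flag)."""
    return "\n".join(line.replace("\u0092", "", 1) for line in text.split("\n"))


def extract_texts(conllu: str) -> list[str]:
    """Collect the raw sentences from '# text =' comment lines, as the script does."""
    return [line[8:].strip() for line in conllu.split("\n") if line.startswith("# text =")]


def prepare_split(src_dir: str, split: str) -> list[str]:
    """Write <split>.conllu from <src_dir>/*-<split>.conllu and return its sentences."""
    parts = [p.read_text(encoding="utf-8") for p in sorted(Path(src_dir).glob(f"*-{split}.conllu"))]
    cleaned = "".join(strip_u0092(t) for t in parts)  # sed over a glob concatenates its outputs
    Path(f"{split}.conllu").write_text(cleaned, encoding="utf-8")
    return extract_texts(cleaned)


sample = "# sent_id = 1\n# text = Gaur\u0092 ama hil zen.\n1\tGaur\t_\tADV\n"
print(extract_texts(strip_u0092(sample)))
```

In Colab, `prepare_split("UD_Basque-BDT", "dev")` would play the role of the sed loop for the dev split; the string functions are kept separate so they can be checked without touching the file system.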

Evaluating on both eu_bdt-ud-dev.conllu and eu_bdt-ud-test.conllu, I got the following results on my machine:

```
*** KoichiYasuoka/roberta-small-basque-ud-goeswith (dev.conllu)
Metric     | Precision |    Recall |  F1 Score | AligndAcc
-----------+-----------+-----------+-----------+-----------
Tokens     |     99.45 |     98.96 |     99.20 |
Sentences  |    100.00 |    100.00 |    100.00 |
Words      |     99.45 |     98.96 |     99.20 |
UPOS       |     93.49 |     93.03 |     93.26 |     94.01
XPOS       |     99.45 |     98.96 |     99.20 |    100.00
UFeats     |     84.19 |     83.78 |     83.98 |     84.66
AllTags    |     82.15 |     81.75 |     81.95 |     82.61
Lemmas     |      0.12 |      0.12 |      0.12 |      0.12
UAS        |     87.69 |     87.26 |     87.47 |     88.18
LAS        |     83.20 |     82.79 |     83.00 |     83.66
CLAS       |     81.45 |     81.20 |     81.33 |     81.24
MLAS       |     64.38 |     64.18 |     64.28 |     64.21
BLEX       |      0.00 |      0.00 |      0.00 |      0.00
```

```
*** KoichiYasuoka/roberta-small-basque-ud-goeswith (test.conllu)
Metric     | Precision |    Recall |  F1 Score | AligndAcc
-----------+-----------+-----------+-----------+-----------
Tokens     |     99.47 |     98.98 |     99.23 |
Sentences  |    100.00 |    100.00 |    100.00 |
Words      |     99.47 |     98.98 |     99.23 |
UPOS       |     93.52 |     93.06 |     93.29 |     94.02
XPOS       |     99.47 |     98.98 |     99.23 |    100.00
UFeats     |     84.46 |     84.04 |     84.25 |     84.91
AllTags    |     82.42 |     82.02 |     82.22 |     82.86
Lemmas     |      0.10 |      0.10 |      0.10 |      0.10
UAS        |     88.54 |     88.11 |     88.32 |     89.01
LAS        |     84.04 |     83.63 |     83.83 |     84.49
CLAS       |     82.33 |     81.98 |     82.16 |     82.03
MLAS       |     65.20 |     64.93 |     65.07 |     64.97
BLEX       |      0.00 |      0.00 |      0.00 |      0.00
```

```
*** KoichiYasuoka/roberta-base-basque-ud-goeswith (dev.conllu)
Metric     | Precision |    Recall |  F1 Score | AligndAcc
-----------+-----------+-----------+-----------+-----------
Tokens     |     99.38 |     98.95 |     99.16 |
Sentences  |    100.00 |    100.00 |    100.00 |
Words      |     99.38 |     98.95 |     99.16 |
UPOS       |     95.73 |     95.31 |     95.52 |     96.33
XPOS       |     99.38 |     98.95 |     99.16 |    100.00
UFeats     |     90.65 |     90.26 |     90.45 |     91.22
AllTags    |     89.08 |     88.69 |     88.88 |     89.64
Lemmas     |      0.12 |      0.12 |      0.12 |      0.12
UAS        |     86.97 |     86.59 |     86.78 |     87.52
LAS        |     83.51 |     83.15 |     83.33 |     84.03
CLAS       |     84.01 |     84.05 |     84.03 |     84.11
MLAS       |     73.61 |     73.65 |     73.63 |     73.70
BLEX       |      0.00 |      0.00 |      0.00 |      0.00
```

```
*** KoichiYasuoka/roberta-base-basque-ud-goeswith (test.conllu)
Metric     | Precision |    Recall |  F1 Score | AligndAcc
-----------+-----------+-----------+-----------+-----------
Tokens     |     99.25 |     98.92 |     99.08 |
Sentences  |    100.00 |    100.00 |    100.00 |
Words      |     99.25 |     98.92 |     99.08 |
UPOS       |     95.37 |     95.06 |     95.21 |     96.10
XPOS       |     99.25 |     98.92 |     99.08 |    100.00
UFeats     |     90.33 |     90.03 |     90.18 |     91.01
AllTags    |     88.58 |     88.28 |     88.43 |     89.25
Lemmas     |      0.10 |      0.10 |      0.10 |      0.10
UAS        |     87.70 |     87.41 |     87.55 |     88.37
LAS        |     84.56 |     84.28 |     84.42 |     85.21
CLAS       |     85.16 |     85.26 |     85.21 |     85.40
MLAS       |     74.16 |     74.25 |     74.20 |     74.37
BLEX       |      0.01 |      0.01 |      0.01 |      0.01
```

```
*** KoichiYasuoka/roberta-large-basque-ud-goeswith (dev.conllu)
Metric     | Precision |    Recall |  F1 Score | AligndAcc
-----------+-----------+-----------+-----------+-----------
Tokens     |     97.87 |     96.48 |     97.17 |
Sentences  |    100.00 |    100.00 |    100.00 |
Words      |     97.87 |     96.48 |     97.17 |
UPOS       |     89.88 |     88.60 |     89.23 |     91.83
XPOS       |     97.87 |     96.48 |     97.17 |    100.00
UFeats     |     85.31 |     84.10 |     84.70 |     87.16
AllTags    |     83.65 |     82.46 |     83.05 |     85.46
Lemmas     |      0.12 |      0.12 |      0.12 |      0.12
UAS        |     82.60 |     81.42 |     82.00 |     84.39
LAS        |     76.19 |     75.10 |     75.64 |     77.84
CLAS       |     80.62 |     81.11 |     80.86 |     82.81
MLAS       |     65.37 |     65.76 |     65.56 |     67.14
BLEX       |      0.00 |      0.00 |      0.00 |      0.00
```

```
*** KoichiYasuoka/roberta-large-basque-ud-goeswith (test.conllu)
Metric     | Precision |    Recall |  F1 Score | AligndAcc
-----------+-----------+-----------+-----------+-----------
Tokens     |     97.92 |     96.79 |     97.35 |
Sentences  |    100.00 |    100.00 |    100.00 |
Words      |     97.92 |     96.79 |     97.35 |
UPOS       |     89.85 |     88.82 |     89.33 |     91.76
XPOS       |     97.92 |     96.79 |     97.35 |    100.00
UFeats     |     85.45 |     84.47 |     84.96 |     87.27
AllTags    |     83.64 |     82.67 |     83.15 |     85.42
Lemmas     |      0.10 |      0.10 |      0.10 |      0.11
UAS        |     83.43 |     82.47 |     82.95 |     85.21
LAS        |     77.32 |     76.43 |     76.87 |     78.97
CLAS       |     81.37 |     82.09 |     81.73 |     83.67
MLAS       |     66.14 |     66.73 |     66.43 |     68.01
BLEX       |      0.01 |      0.01 |      0.01 |      0.01
```
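The columns follow the metric definitions of conll18_ud_eval.py, as far as I understand them: precision = correct / system words, recall = correct / gold words, F1 = 2 × correct / (system + gold), and AligndAcc = correct / aligned words. The sketch below restates those definitions with a hypothetical `Score` class and hypothetical counts (not taken from UD_Basque-BDT). For UPOS-type rows it follows that AligndAcc equals that row's precision divided by Words precision; CLAS, MLAS and BLEX count only content words, so the ratio does not carry over to those rows.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Score:
    """Counts behind one row of the evaluation table (sketch of the definitions)."""
    gold_total: int                      # words in the gold treebank
    system_total: int                    # words produced by the parser
    correct: int                         # words counted as correct for this metric
    aligned_total: Optional[int] = None  # gold/system words that were aligned

    @property
    def precision(self) -> float:
        return self.correct / self.system_total if self.system_total else 0.0

    @property
    def recall(self) -> float:
        return self.correct / self.gold_total if self.gold_total else 0.0

    @property
    def f1(self) -> float:
        # algebraically the same as 2PR / (P + R)
        total = self.system_total + self.gold_total
        return 2 * self.correct / total if total else 0.0

    @property
    def aligned_accuracy(self) -> Optional[float]:
        if self.aligned_total is None:
            return None  # e.g. the Words row, whose AligndAcc column is empty
        return self.correct / self.aligned_total if self.aligned_total else 0.0


# Hypothetical counts: 985 of 990 system words align with the 1000 gold words,
# and 930 of the aligned words get the right UPOS.
words = Score(gold_total=1000, system_total=990, correct=985)
upos = Score(gold_total=1000, system_total=990, correct=930, aligned_total=985)
for name, s in [("Words", words), ("UPOS", upos)]:
    print(name, f"{100 * s.precision:.2f}", f"{100 * s.recall:.2f}", f"{100 * s.f1:.2f}", s.aligned_accuracy)

# Recovering a reported AligndAcc: UPOS precision / Words precision
# for roberta-small-basque-ud-goeswith (dev.conllu), reported as 94.01
print(round(100 * 93.49 / 99.45, 2))
```

This also accounts for the gap between F1 and AligndAcc for roberta-large-basque-ud-goeswith (UPOS 89.23 vs. 91.83 on dev): its Words F1 is only 97.17, and words that fail to align lower precision and recall but are left out of AligndAcc.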

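Since these models leave the LEMMA column as "_" (as in the parse above), Lemmas and BLEX are essentially zero and tell us nothing here. Let's tabulate UPOS/LAS/MLAS instead.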

| Model | eu_bdt-ud-dev.conllu | eu_bdt-ud-test.conllu |
|---|---|---|
| roberta-small-basque-ud-goeswith | 93.26/83.00/64.28 | 93.29/83.83/65.07 |
| roberta-base-basque-ud-goeswith | 95.52/83.33/73.63 | 95.21/84.42/74.20 |
| roberta-large-basque-ud-goeswith | 89.23/75.64/65.56 | 89.33/76.87/66.43 |
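The table above is filled in from the F1 Score column of the results. A sketch of how that step could be automated, assuming the result.txt layout shown above (a "*** model (file)" header followed by the conll18_ud_eval.py -v table); `parse_eval` and `markdown_table` are hypothetical helpers, and the sample input contains only the roberta-base-basque-ud-goeswith rows.

```python
import re

HEADER = re.compile(r"\*\*\* (\S+) \((\S+)\)")


def parse_eval(text: str) -> dict:
    """Map (model, file) to {metric: F1} from concatenated conll18_ud_eval.py -v output."""
    results = {}
    key = None
    for line in text.split("\n"):
        m = HEADER.match(line)
        if m:
            key = (m.group(1), m.group(2))
            results[key] = {}
            continue
        cols = [c.strip() for c in line.split("|")]
        if key is None or len(cols) < 4:
            continue  # text before the first header, separator rows, blank lines
        try:
            results[key][cols[0]] = float(cols[3])  # 4th column is the F1 score
        except ValueError:
            pass  # the "Metric | Precision | ..." header row
    return results


def markdown_table(results: dict, metrics=("UPOS", "LAS", "MLAS"),
                   files=("dev.conllu", "test.conllu")) -> str:
    """One row per model, one "UPOS/LAS/MLAS" cell per evaluation file."""
    models = list(dict.fromkeys(model for model, _ in results))  # first-seen order
    lines = ["| Model | " + " | ".join(files) + " |", "|---" * (len(files) + 1) + "|"]
    for model in models:
        cells = ["/".join(f"{results[(model, f)][m]:.2f}" for m in metrics) for f in files]
        lines.append(f"| {model.split('/')[-1]} | " + " | ".join(cells) + " |")
    return "\n".join(lines)


# Excerpt of the results above (roberta-base-basque-ud-goeswith only)
sample = """
*** KoichiYasuoka/roberta-base-basque-ud-goeswith (dev.conllu)
Metric     | Precision |    Recall |  F1 Score | AligndAcc
-----------+-----------+-----------+-----------+-----------
UPOS       |     95.73 |     95.31 |     95.52 |     96.33
LAS        |     83.51 |     83.15 |     83.33 |     84.03
MLAS       |     73.61 |     73.65 |     73.63 |     73.70

*** KoichiYasuoka/roberta-base-basque-ud-goeswith (test.conllu)
Metric     | Precision |    Recall |  F1 Score | AligndAcc
-----------+-----------+-----------+-----------+-----------
UPOS       |     95.37 |     95.06 |     95.21 |     96.10
LAS        |     84.56 |     84.28 |     84.42 |     85.21
MLAS       |     74.16 |     74.25 |     74.20 |     74.37
"""
print(markdown_table(parse_eval(sample)))
```

In Colab, the input would be the concatenated contents of the result.txt files written by the evaluation script. The file columns are labeled dev.conllu/test.conllu because the script copies the treebank splits under those names.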

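roberta-base-basque-ud-goeswith holds up well, but the other two fall short: roberta-small-basque-ud-goeswith trails it by about 9 points in MLAS, and roberta-large-basque-ud-goeswith by about 7.5 points in LAS. That said, Basque Universal Dependencies does not put much emphasis on Basque's character as an ergative language, so I need to think a bit more about how to build dependency parsing models for it.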