"Rabbit Challenge" Submission Report

1. Nonlinear regression models

Phenomena with a complex nonlinear structure call for nonlinear regression modeling.

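■ Basis expansion method

● The regression function is a linear combination of known nonlinear functions, called basis functions, weighted by a parameter vector.

● As in the linear regression model, the unknown parameters are estimated by least squares or maximum likelihood.

● Commonly used basis functions: polynomial functions, Gaussian basis functions, and spline functions.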
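The basis expansion idea can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the noisy sine data, the helper names (`polynomial_basis`, `gaussian_basis`, `fit_least_squares`), and the choice of centers and width are hypothetical and do not come from the exercise.

```python
import numpy as np

def polynomial_basis(x, degree):
    """Design matrix whose columns are 1, x, x^2, ..., x^degree."""
    return np.vander(x, degree + 1, increasing=True)

def gaussian_basis(x, centers, width):
    """Design matrix with a bias column and one Gaussian bump per center."""
    bumps = np.exp(-((x[:, None] - centers[None, :]) ** 2) / (2.0 * width ** 2))
    return np.hstack([np.ones((x.shape[0], 1)), bumps])

def fit_least_squares(Phi, y):
    """Weights w minimizing ||y - Phi @ w||^2."""
    w, *_ = np.linalg.lstsq(Phi, y, rcond=None)
    return w

# Hypothetical data: a noisy sine curve, chosen only for illustration.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 50)
y = np.sin(2.0 * np.pi * x) + 0.1 * rng.standard_normal(x.shape)

designs = {
    "line": polynomial_basis(x, degree=1),
    "cubic": polynomial_basis(x, degree=3),
    "gaussian": gaussian_basis(x, centers=np.linspace(0.0, 1.0, 8), width=0.15),
}
mse = {}
for name, Phi in designs.items():
    w = fit_least_squares(Phi, y)
    mse[name] = float(np.mean((Phi @ w - y) ** 2))
    print(f"{name:8s} training MSE = {mse[name]:.4f}")
```

The model stays linear in the parameters, so the same least-squares solver works for every basis; only the design matrix changes.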

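■ Underfitting

● A model that fails to reach a sufficiently small error even on the training data is said to be underfitted.

● Countermeasure: use a more expressive model.

■ Overfitting

● The model attains a small error on the training data but a large error on the validation data.

● Countermeasure: increase the amount of training data or apply regularization.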
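Both failure modes can be observed by fitting polynomials of increasing degree and comparing training and validation error. The sketch below assumes a hypothetical true function and noise level; the degrees 1, 3, and 12 are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def true_function(x):
    # Hypothetical ground truth, chosen for illustration only.
    return np.sin(np.pi * x)

x_train = np.sort(rng.uniform(-1.0, 1.0, 15))
y_train = true_function(x_train) + 0.2 * rng.standard_normal(15)
x_val = np.sort(rng.uniform(-1.0, 1.0, 100))
y_val = true_function(x_val) + 0.2 * rng.standard_normal(100)

def fit_and_score(degree):
    """Fit a polynomial by least squares; return (training MSE, validation MSE)."""
    Phi_train = np.vander(x_train, degree + 1, increasing=True)
    Phi_val = np.vander(x_val, degree + 1, increasing=True)
    w, *_ = np.linalg.lstsq(Phi_train, y_train, rcond=None)
    train_mse = float(np.mean((Phi_train @ w - y_train) ** 2))
    val_mse = float(np.mean((Phi_val @ w - y_val) ** 2))
    return train_mse, val_mse

results = {degree: fit_and_score(degree) for degree in (1, 3, 12)}
for degree, (train_mse, val_mse) in results.items():
    print(f"degree {degree:2d}: train MSE = {train_mse:.4f}, validation MSE = {val_mse:.4f}")
```

The training error can only go down as the degree grows, because each lower-degree model is a special case of the higher-degree one. A low-degree fit with a large training error suggests underfitting, while a high-degree fit whose validation error is much larger than its training error suggests overfitting.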

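2. Regularization

In machine learning, regularization is often used to prevent overfitting. $L1$ regularization and $L2$ regularization are the most commonly used forms.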

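■ $L1$ regularization

● The penalty is the sum of the absolute values of the model parameters (the $L1$ norm).

● Because it drives the weights of some features to exactly 0 and thereby removes unneeded features, it is used for dimensionality reduction (feature selection).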

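■ $L2$ regularization

● The penalty is the sum of the squares of the model parameters (the squared $L2$ norm).

● It shrinks the weights toward 0 in proportion to their magnitude, which yields a smoother model; it is used to improve accuracy by preventing overfitting.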
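The different effect of the two penalties on the weights can be seen on a small synthetic problem. The sketch below is an assumption-laden illustration, not the exercise code: the data are hypothetical (only the first two of eight features matter), ridge is solved in closed form, and the lasso is solved with a simple proximal gradient loop (ISTA) chosen here for brevity.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples, n_features = 100, 8
X = rng.standard_normal((n_samples, n_features))
true_w = np.zeros(n_features)
true_w[:2] = [3.0, -2.0]  # hypothetical setup: only the first two features matter
y = X @ true_w + 0.1 * rng.standard_normal(n_samples)

def ridge(X, y, lam):
    """Closed-form minimizer of ||y - Xw||^2 + lam * ||w||_2^2."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1; entries within [-t, t] become exactly 0."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def lasso_ista(X, y, lam, n_iter=500):
    """Proximal gradient (ISTA) for 0.5 * ||y - Xw||^2 + lam * ||w||_1."""
    step = 1.0 / np.linalg.norm(X, 2) ** 2  # 1 / Lipschitz constant of the gradient
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        grad = X.T @ (X @ w - y)
        w = soft_threshold(w - step * grad, step * lam)
    return w

w_ridge = ridge(X, y, lam=10.0)
w_lasso = lasso_ista(X, y, lam=10.0)
print("ridge weights:", np.round(w_ridge, 3))
print("lasso weights:", np.round(w_lasso, 3))
print("nonzero weights (ridge, lasso):",
      np.count_nonzero(w_ridge), np.count_nonzero(w_lasso))
```

Ridge shrinks every weight but leaves them all nonzero, whereas the soft-thresholding step in the lasso loop sets small weights to exactly 0, which is what makes $L1$ regularization usable for feature selection.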

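3. Model selection

Building an appropriate predictive model requires evaluating its generalization performance. **The holdout method and cross-validation** are two techniques for evaluating model performance.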

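■ Holdout method

● The data set is split into training data and test data. A model is built from the training data, and its predictive accuracy is verified by comparing its outputs on the test inputs against the known answers.

● Drawback: the performance estimate can depend on how the data happen to be split.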
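A minimal holdout sketch in NumPy makes the drawback concrete: the same model evaluated on different random splits gives different test errors. The data, the 80/20 ratio, and the helper names (`holdout_split`, `fit_line`, `mse`) are assumptions made for this illustration.

```python
import numpy as np

def holdout_split(n_samples, test_ratio, seed):
    """Return disjoint (train_idx, test_idx) index arrays after one random shuffle."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_ratio))
    return order[n_test:], order[:n_test]

def fit_line(x, y):
    """Least-squares fit of y ~ w0 + w1 * x."""
    Phi = np.column_stack([np.ones_like(x), x])
    w, *_ = np.linalg.lstsq(Phi, y, rcond=None)
    return w

def mse(w, x, y):
    """Mean squared error of the fitted line on (x, y)."""
    return float(np.mean((w[0] + w[1] * x - y) ** 2))

# Hypothetical data set: 30 noisy points on a line.
data_rng = np.random.default_rng(42)
x = data_rng.uniform(0.0, 1.0, 30)
y = 2.0 * x + 1.0 + 0.3 * data_rng.standard_normal(30)

test_errors = []
for seed in range(5):
    train_idx, test_idx = holdout_split(len(x), test_ratio=0.2, seed=seed)
    w = fit_line(x[train_idx], y[train_idx])
    test_errors.append(mse(w, x[test_idx], y[test_idx]))
    print(f"split seed {seed}: test MSE = {test_errors[-1]:.4f}")
```

With only six test points per split, the spread between the printed errors reflects the split rather than the model, which is the weakness noted above.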

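■ Cross-validation

● The data split is repeated several times and a model is trained for each split; the final performance is generally taken as the average of the scores of those models.

● Compared with the holdout method, the evaluation result is more reliable.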
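The procedure can be sketched as k-fold cross-validation in NumPy. The data, the fold count of 5, and the helper names (`k_fold_indices`, `cross_val_mse`) are hypothetical choices for this illustration.

```python
import numpy as np

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) pairs; each sample is used for validation exactly once."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, folds[i]

def cross_val_mse(x, y, degree, k=5):
    """Mean and per-fold validation MSE of a polynomial least-squares fit."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(x), k):
        Phi_train = np.vander(x[train_idx], degree + 1, increasing=True)
        Phi_val = np.vander(x[val_idx], degree + 1, increasing=True)
        w, *_ = np.linalg.lstsq(Phi_train, y[train_idx], rcond=None)
        scores.append(float(np.mean((Phi_val @ w - y[val_idx]) ** 2)))
    return float(np.mean(scores)), scores

# Hypothetical data: a noisy sine curve.
rng = np.random.default_rng(1)
x = rng.uniform(-1.0, 1.0, 40)
y = np.sin(np.pi * x) + 0.2 * rng.standard_normal(40)

for degree in (1, 3, 9):
    mean_mse, _ = cross_val_mse(x, y, degree)
    print(f"degree {degree}: 5-fold mean validation MSE = {mean_mse:.4f}")
```

Because every sample serves as validation data exactly once, the averaged score depends less on any single split than a holdout estimate does, and it can be used to compare candidate models such as the polynomial degrees above.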

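4. Implementation exercise results

【Implementation exercise】

■ Import the required modules and define the data functions

■ Generate noisy data from the true function

■ Prediction with a linear regression model

**【Discussion】** According to the results above, the LinearRegression class from the sklearn.linear_model module gives a low score on this data. (For a regressor, the score method in scikit-learn returns the coefficient of determination $R^2$ rather than a classification accuracy.)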
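A minimal sketch of these steps, not the code that produced the results above: the true function `true_func`, the noise level, and the sample count are hypothetical stand-ins, chosen so that a straight line visibly fails to capture the data.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

def true_func(x):
    # Hypothetical stand-in for the true function of the exercise.
    return np.cos(4.0 * np.pi * x)

rng = np.random.default_rng(0)
n_samples = 100
x = np.linspace(0.0, 1.0, n_samples)
y = true_func(x) + 0.2 * rng.standard_normal(n_samples)  # noisy observations

X = x.reshape(-1, 1)  # scikit-learn expects a 2-D feature matrix
model = LinearRegression().fit(X, y)
r2_linear = model.score(X, y)  # coefficient of determination R^2 on the training data
print(f"LinearRegression R^2 = {r2_linear:.3f}")
```

A low $R^2$ on the training data itself is a sign of underfitting: a single straight line has too little expressive power for a curve of this shape.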

■ KernelRidge

Kernel Ridge Regression is least-squares learning with an $L2$ constraint.
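As a rough sketch of how this might look with scikit-learn, assuming hypothetical data and illustrative hyperparameters (`alpha` is the $L2$ penalty strength and `gamma` controls the RBF kernel width; neither value is taken from the exercise):

```python
import numpy as np
from sklearn.kernel_ridge import KernelRidge

# Hypothetical noisy data; the exercise's own data are not reproduced here.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 100)
y = np.cos(4.0 * np.pi * x) + 0.2 * rng.standard_normal(100)
X = x.reshape(-1, 1)

# alpha and gamma are illustrative guesses, not tuned values.
model = KernelRidge(kernel="rbf", alpha=1e-2, gamma=30.0).fit(X, y)
r2_kernel = model.score(X, y)  # R^2 on the training data
print(f"KernelRidge (RBF) R^2 = {r2_kernel:.3f}")
```

In practice `alpha` and `gamma` would be chosen by cross-validation rather than fixed by hand.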

■ Least-squares learning with an RBF kernel

#Ridge

**【Discussion】** Using the Ridge class from the sklearn.linear_model module requires setting the parameter alpha. The score was 0.83, an improvement over the linear model.
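One way to combine an RBF kernel with the Ridge class is to use the kernel matrix as the feature matrix. The sketch below assumes that approach along with hypothetical data and `gamma`; it does not reproduce the 0.83 score reported above, and the two `alpha` values are arbitrary.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.metrics.pairwise import rbf_kernel

# Hypothetical noisy data, for illustration only.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 100)
y = np.cos(4.0 * np.pi * x) + 0.2 * rng.standard_normal(100)
X = x.reshape(-1, 1)

# Each column of K is an RBF bump centered on one training point.
K = rbf_kernel(X, X, gamma=30.0)

scores = {}
for alpha in (1e-2, 1e2):
    model = Ridge(alpha=alpha).fit(K, y)
    scores[alpha] = model.score(K, y)  # R^2 on the training data
    print(f"alpha = {alpha:g}: R^2 = {scores[alpha]:.3f}")
```

A larger `alpha` penalizes the weights more strongly, so the training score cannot improve as `alpha` grows; the value has to be balanced against validation performance.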

#Lasso

■ Support Vector Regression

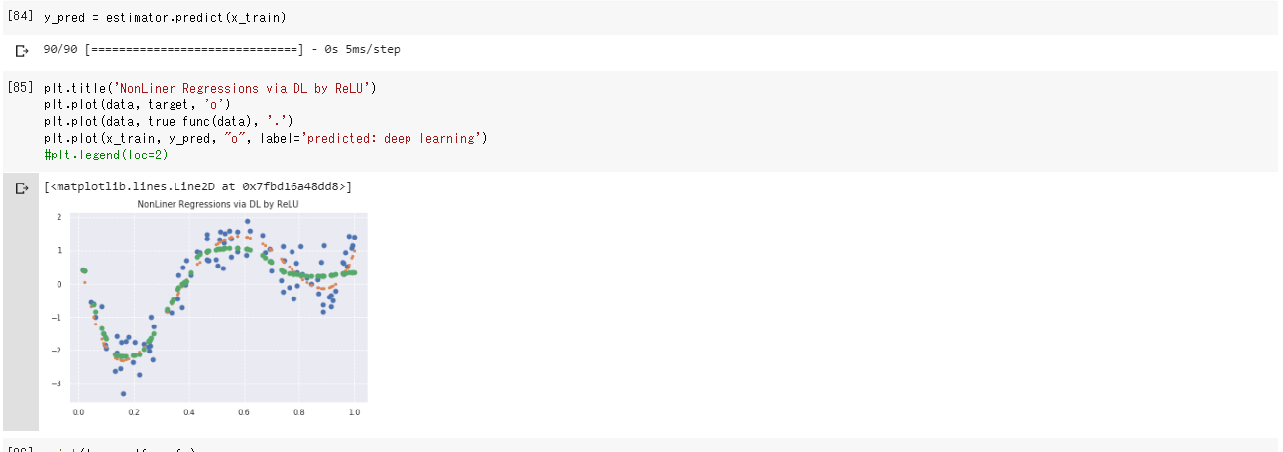
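A support vector regression fit might be sketched as follows. The data and the hyperparameters `C`, `gamma`, and `epsilon` are assumptions for illustration and are not the settings behind the figure above.

```python
import numpy as np
from sklearn.svm import SVR

# Hypothetical noisy data, for illustration only.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 100)
y = np.cos(4.0 * np.pi * x) + 0.2 * rng.standard_normal(100)
X = x.reshape(-1, 1)

# epsilon sets the width of the tube inside which errors are ignored;
# C trades off flatness of the function against violations of that tube.
model = SVR(kernel="rbf", C=10.0, gamma=30.0, epsilon=0.1).fit(X, y)
r2_svr = model.score(X, y)  # R^2 on the training data
n_support = len(model.support_)  # indices of the support vectors
print(f"SVR R^2 = {r2_svr:.3f}, support vectors = {n_support} of {len(x)}")
```

Only the points on or outside the epsilon tube become support vectors, so the fitted function depends on a subset of the training data.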

■ Nonlinear Regression via DL by ReLU

Train on 90 samples, validate on 10 samples

Epoch 1/100

90/90 [==============================] - 1s 9ms/step - loss: 1.5399 - val_loss: 1.0177

Epoch 00001: saving model to ./weights.01-1.02.hdf5

Epoch 2/100

90/90 [==============================] - 0s 2ms/step - loss: 1.3654 - val_loss: 0.7789

Epoch 00002: saving model to ./weights.02-0.78.hdf5

Epoch 3/100

90/90 [==============================] - 0s 2ms/step - loss: 0.8682 - val_loss: 0.8151

Epoch 00003: saving model to ./weights.03-0.82.hdf5

Epoch 4/100

90/90 [==============================] - 0s 2ms/step - loss: 0.9923 - val_loss: 0.6966

Epoch 00004: saving model to ./weights.04-0.70.hdf5

Epoch 5/100

90/90 [==============================] - 0s 3ms/step - loss: 0.8529 - val_loss: 0.5245

Epoch 00005: saving model to ./weights.05-0.52.hdf5

Epoch 6/100

90/90 [==============================] - 0s 2ms/step - loss: 0.7379 - val_loss: 0.4245

Epoch 00006: saving model to ./weights.06-0.42.hdf5

Epoch 7/100

90/90 [==============================] - 0s 2ms/step - loss: 0.6347 - val_loss: 0.4024

Epoch 00007: saving model to ./weights.07-0.40.hdf5

Epoch 8/100

90/90 [==============================] - 0s 2ms/step - loss: 0.7075 - val_loss: 0.4524

Epoch 00008: saving model to ./weights.08-0.45.hdf5

Epoch 9/100

90/90 [==============================] - 0s 2ms/step - loss: 0.6787 - val_loss: 0.3682

Epoch 00009: saving model to ./weights.09-0.37.hdf5

Epoch 10/100

90/90 [==============================] - 0s 2ms/step - loss: 0.5505 - val_loss: 0.4591

Epoch 00010: saving model to ./weights.10-0.46.hdf5

Epoch 11/100

90/90 [==============================] - 0s 3ms/step - loss: 1.0630 - val_loss: 0.5899

Epoch 00011: saving model to ./weights.11-0.59.hdf5

Epoch 12/100

90/90 [==============================] - 0s 2ms/step - loss: 0.6482 - val_loss: 0.3552

Epoch 00012: saving model to ./weights.12-0.36.hdf5

Epoch 13/100

90/90 [==============================] - 0s 3ms/step - loss: 0.9105 - val_loss: 0.4476

Epoch 00013: saving model to ./weights.13-0.45.hdf5

Epoch 14/100

90/90 [==============================] - 0s 2ms/step - loss: 0.6084 - val_loss: 0.5306

Epoch 00014: saving model to ./weights.14-0.53.hdf5

Epoch 15/100

90/90 [==============================] - 0s 2ms/step - loss: 0.7093 - val_loss: 0.5703

Epoch 00015: saving model to ./weights.15-0.57.hdf5

Epoch 16/100

90/90 [==============================] - 0s 3ms/step - loss: 0.5859 - val_loss: 0.2589

Epoch 00016: saving model to ./weights.16-0.26.hdf5

Epoch 17/100

90/90 [==============================] - 0s 2ms/step - loss: 0.5765 - val_loss: 0.3469

Epoch 00017: saving model to ./weights.17-0.35.hdf5

Epoch 18/100

90/90 [==============================] - 0s 2ms/step - loss: 0.5619 - val_loss: 0.4871

Epoch 00018: saving model to ./weights.18-0.49.hdf5

Epoch 19/100

90/90 [==============================] - 0s 2ms/step - loss: 0.6217 - val_loss: 0.7794

Epoch 00019: saving model to ./weights.19-0.78.hdf5

Epoch 20/100

90/90 [==============================] - 0s 2ms/step - loss: 0.8317 - val_loss: 0.3086

Epoch 00020: saving model to ./weights.20-0.31.hdf5

Epoch 21/100

90/90 [==============================] - 0s 2ms/step - loss: 0.6066 - val_loss: 0.3045

Epoch 00021: saving model to ./weights.21-0.30.hdf5

Epoch 22/100

90/90 [==============================] - 0s 2ms/step - loss: 0.5178 - val_loss: 0.3959

Epoch 00022: saving model to ./weights.22-0.40.hdf5

Epoch 23/100

90/90 [==============================] - 0s 2ms/step - loss: 0.5563 - val_loss: 0.3050

Epoch 00023: saving model to ./weights.23-0.30.hdf5

Epoch 24/100

90/90 [==============================] - 0s 3ms/step - loss: 0.4811 - val_loss: 0.3663

Epoch 00024: saving model to ./weights.24-0.37.hdf5

Epoch 25/100

90/90 [==============================] - 0s 3ms/step - loss: 0.4220 - val_loss: 0.2775

Epoch 00025: saving model to ./weights.25-0.28.hdf5

Epoch 26/100

90/90 [==============================] - 0s 3ms/step - loss: 0.4845 - val_loss: 0.3477

Epoch 00026: saving model to ./weights.26-0.35.hdf5

Epoch 27/100

90/90 [==============================] - 0s 3ms/step - loss: 0.4824 - val_loss: 0.4453

Epoch 00027: saving model to ./weights.27-0.45.hdf5

Epoch 28/100

90/90 [==============================] - 0s 2ms/step - loss: 0.4690 - val_loss: 0.2465

Epoch 00028: saving model to ./weights.28-0.25.hdf5

Epoch 29/100

90/90 [==============================] - 0s 2ms/step - loss: 0.4113 - val_loss: 0.2765

Epoch 00029: saving model to ./weights.29-0.28.hdf5

Epoch 30/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3957 - val_loss: 0.2737

Epoch 00030: saving model to ./weights.30-0.27.hdf5

Epoch 31/100

90/90 [==============================] - 0s 3ms/step - loss: 0.3699 - val_loss: 0.2247

Epoch 00031: saving model to ./weights.31-0.22.hdf5

Epoch 32/100

90/90 [==============================] - 0s 3ms/step - loss: 0.3853 - val_loss: 0.3053

Epoch 00032: saving model to ./weights.32-0.31.hdf5

Epoch 33/100

90/90 [==============================] - 0s 2ms/step - loss: 0.5317 - val_loss: 0.9632

Epoch 00033: saving model to ./weights.33-0.96.hdf5

Epoch 34/100

90/90 [==============================] - 0s 2ms/step - loss: 0.5188 - val_loss: 0.4550

Epoch 00034: saving model to ./weights.34-0.46.hdf5

Epoch 35/100

90/90 [==============================] - 0s 2ms/step - loss: 0.4946 - val_loss: 0.3305

Epoch 00035: saving model to ./weights.35-0.33.hdf5

Epoch 36/100

90/90 [==============================] - 0s 3ms/step - loss: 0.4418 - val_loss: 0.2938

Epoch 00036: saving model to ./weights.36-0.29.hdf5

Epoch 37/100

90/90 [==============================] - 0s 3ms/step - loss: 0.4241 - val_loss: 0.3461

Epoch 00037: saving model to ./weights.37-0.35.hdf5

Epoch 38/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3522 - val_loss: 0.2416

Epoch 00038: saving model to ./weights.38-0.24.hdf5

Epoch 39/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3410 - val_loss: 0.2154

Epoch 00039: saving model to ./weights.39-0.22.hdf5

Epoch 40/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3501 - val_loss: 0.4063

Epoch 00040: saving model to ./weights.40-0.41.hdf5

Epoch 41/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3530 - val_loss: 0.2538

Epoch 00041: saving model to ./weights.41-0.25.hdf5

Epoch 42/100

90/90 [==============================] - 0s 3ms/step - loss: 0.3726 - val_loss: 0.1919

Epoch 00042: saving model to ./weights.42-0.19.hdf5

Epoch 43/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3455 - val_loss: 0.1800

Epoch 00043: saving model to ./weights.43-0.18.hdf5

Epoch 44/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3373 - val_loss: 0.2227

Epoch 00044: saving model to ./weights.44-0.22.hdf5

Epoch 45/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3095 - val_loss: 0.2392

Epoch 00045: saving model to ./weights.45-0.24.hdf5

Epoch 46/100

90/90 [==============================] - 0s 3ms/step - loss: 0.3631 - val_loss: 0.1921

Epoch 00046: saving model to ./weights.46-0.19.hdf5

Epoch 47/100

90/90 [==============================] - 0s 3ms/step - loss: 0.3119 - val_loss: 0.2159

Epoch 00047: saving model to ./weights.47-0.22.hdf5

Epoch 48/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3129 - val_loss: 0.1837

Epoch 00048: saving model to ./weights.48-0.18.hdf5

Epoch 49/100

90/90 [==============================] - 0s 2ms/step - loss: 0.2856 - val_loss: 0.2100

Epoch 00049: saving model to ./weights.49-0.21.hdf5

Epoch 50/100

90/90 [==============================] - 0s 2ms/step - loss: 0.2907 - val_loss: 0.1866

Epoch 00050: saving model to ./weights.50-0.19.hdf5

Epoch 51/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3109 - val_loss: 0.2164

Epoch 00051: saving model to ./weights.51-0.22.hdf5

Epoch 52/100

90/90 [==============================] - 0s 3ms/step - loss: 0.3558 - val_loss: 0.2273

Epoch 00052: saving model to ./weights.52-0.23.hdf5

Epoch 53/100

90/90 [==============================] - 0s 3ms/step - loss: 0.3120 - val_loss: 0.2116

Epoch 00053: saving model to ./weights.53-0.21.hdf5

Epoch 54/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3400 - val_loss: 0.2549

Epoch 00054: saving model to ./weights.54-0.25.hdf5

Epoch 55/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3027 - val_loss: 0.1980

Epoch 00055: saving model to ./weights.55-0.20.hdf5

Epoch 56/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3048 - val_loss: 0.1839

Epoch 00056: saving model to ./weights.56-0.18.hdf5

Epoch 57/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3008 - val_loss: 0.2261

Epoch 00057: saving model to ./weights.57-0.23.hdf5

Epoch 58/100

90/90 [==============================] - 0s 2ms/step - loss: 0.2925 - val_loss: 0.1979

Epoch 00058: saving model to ./weights.58-0.20.hdf5

Epoch 59/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3516 - val_loss: 0.2349

Epoch 00059: saving model to ./weights.59-0.23.hdf5

Epoch 60/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3265 - val_loss: 0.2879

Epoch 00060: saving model to ./weights.60-0.29.hdf5

Epoch 61/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3820 - val_loss: 0.2063

Epoch 00061: saving model to ./weights.61-0.21.hdf5

Epoch 62/100

90/90 [==============================] - 0s 2ms/step - loss: 0.4572 - val_loss: 0.2729

Epoch 00062: saving model to ./weights.62-0.27.hdf5

Epoch 63/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3665 - val_loss: 0.1768

Epoch 00063: saving model to ./weights.63-0.18.hdf5

Epoch 64/100

90/90 [==============================] - 0s 2ms/step - loss: 0.2842 - val_loss: 0.2659

Epoch 00064: saving model to ./weights.64-0.27.hdf5

Epoch 65/100

90/90 [==============================] - 0s 3ms/step - loss: 0.2819 - val_loss: 0.3310

Epoch 00065: saving model to ./weights.65-0.33.hdf5

Epoch 66/100

90/90 [==============================] - 0s 3ms/step - loss: 0.4116 - val_loss: 0.1841

Epoch 00066: saving model to ./weights.66-0.18.hdf5

Epoch 67/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3487 - val_loss: 0.3098

Epoch 00067: saving model to ./weights.67-0.31.hdf5

Epoch 68/100

90/90 [==============================] - 0s 3ms/step - loss: 0.3119 - val_loss: 0.2996

Epoch 00068: saving model to ./weights.68-0.30.hdf5

Epoch 69/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3584 - val_loss: 0.2107

Epoch 00069: saving model to ./weights.69-0.21.hdf5

Epoch 70/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3264 - val_loss: 0.2875

Epoch 00070: saving model to ./weights.70-0.29.hdf5

Epoch 71/100

90/90 [==============================] - 0s 3ms/step - loss: 0.3813 - val_loss: 0.2908

Epoch 00071: saving model to ./weights.71-0.29.hdf5

Epoch 72/100

90/90 [==============================] - 0s 2ms/step - loss: 0.2980 - val_loss: 0.3795

Epoch 00072: saving model to ./weights.72-0.38.hdf5

Epoch 73/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3139 - val_loss: 0.1953

Epoch 00073: saving model to ./weights.73-0.20.hdf5

Epoch 74/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3133 - val_loss: 0.2336

Epoch 00074: saving model to ./weights.74-0.23.hdf5

Epoch 75/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3332 - val_loss: 0.2375

Epoch 00075: saving model to ./weights.75-0.24.hdf5

Epoch 76/100

90/90 [==============================] - 0s 3ms/step - loss: 0.3013 - val_loss: 0.2362

Epoch 00076: saving model to ./weights.76-0.24.hdf5

Epoch 77/100

90/90 [==============================] - 0s 2ms/step - loss: 0.2725 - val_loss: 0.1961

Epoch 00077: saving model to ./weights.77-0.20.hdf5

Epoch 78/100

90/90 [==============================] - 0s 3ms/step - loss: 0.3080 - val_loss: 0.2879

Epoch 00078: saving model to ./weights.78-0.29.hdf5

Epoch 79/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3290 - val_loss: 0.2109

Epoch 00079: saving model to ./weights.79-0.21.hdf5

Epoch 80/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3404 - val_loss: 0.3119

Epoch 00080: saving model to ./weights.80-0.31.hdf5

Epoch 81/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3082 - val_loss: 0.2819

Epoch 00081: saving model to ./weights.81-0.28.hdf5

Epoch 82/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3588 - val_loss: 0.2166

Epoch 00082: saving model to ./weights.82-0.22.hdf5

Epoch 83/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3540 - val_loss: 0.3222

Epoch 00083: saving model to ./weights.83-0.32.hdf5

Epoch 84/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3680 - val_loss: 0.2149

Epoch 00084: saving model to ./weights.84-0.21.hdf5

Epoch 85/100

90/90 [==============================] - 0s 2ms/step - loss: 0.2883 - val_loss: 0.1962

Epoch 00085: saving model to ./weights.85-0.20.hdf5

Epoch 86/100

90/90 [==============================] - 0s 2ms/step - loss: 0.2764 - val_loss: 0.2149

Epoch 00086: saving model to ./weights.86-0.21.hdf5

Epoch 87/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3137 - val_loss: 0.2827

Epoch 00087: saving model to ./weights.87-0.28.hdf5

Epoch 88/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3411 - val_loss: 0.2367

Epoch 00088: saving model to ./weights.88-0.24.hdf5

Epoch 89/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3253 - val_loss: 0.3265

Epoch 00089: saving model to ./weights.89-0.33.hdf5

Epoch 90/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3212 - val_loss: 0.2589

Epoch 00090: saving model to ./weights.90-0.26.hdf5

Epoch 91/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3255 - val_loss: 0.2383

Epoch 00091: saving model to ./weights.91-0.24.hdf5

Epoch 92/100

90/90 [==============================] - 0s 2ms/step - loss: 0.2798 - val_loss: 0.3637

Epoch 00092: saving model to ./weights.92-0.36.hdf5

Epoch 93/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3192 - val_loss: 0.2963

Epoch 00093: saving model to ./weights.93-0.30.hdf5

Epoch 94/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3099 - val_loss: 0.2598

Epoch 00094: saving model to ./weights.94-0.26.hdf5

Epoch 95/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3259 - val_loss: 0.2751

Epoch 00095: saving model to ./weights.95-0.28.hdf5

Epoch 96/100

90/90 [==============================] - 0s 3ms/step - loss: 0.2871 - val_loss: 0.2547

Epoch 00096: saving model to ./weights.96-0.25.hdf5

Epoch 97/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3404 - val_loss: 0.2437

Epoch 00097: saving model to ./weights.97-0.24.hdf5

Epoch 98/100

90/90 [==============================] - 0s 2ms/step - loss: 0.2997 - val_loss: 0.2109

Epoch 00098: saving model to ./weights.98-0.21.hdf5

Epoch 99/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3539 - val_loss: 0.2640

Epoch 00099: saving model to ./weights.99-0.26.hdf5

Epoch 100/100

90/90 [==============================] - 0s 2ms/step - loss: 0.3389 - val_loss: 0.1863

Epoch 00100: saving model to ./weights.100-0.19.hdf5

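【Machine Learning】 Report list

【Rabbit Challenge】【Machine Learning】 Linear regression model

【Rabbit Challenge】【Machine Learning】 Logistic regression model

【Rabbit Challenge】【Machine Learning】 Principal component analysis

【Rabbit Challenge】【Machine Learning】 Algorithms

【Rabbit Challenge】【Machine Learning】 Support vector machine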