In Azure AI Search, I used Azure Document Intelligence to convert files into Markdown, then extracted the images, verbalized them (turned them into text descriptions), and indexed everything.

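The supported elements are listed below. Note that tables come out as HTML tags, and bulleted lists are not converted to Markdown. Also note that headings in the PDF do not seem to be used as-is as Markdown headings.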
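Tables arrive as HTML rather than Markdown, so you may want to post-process them. Below is a minimal sketch that uses only Python's standard `html.parser` to turn a simple HTML table into a Markdown pipe table. The sample HTML is hypothetical. The sketch also ignores `rowspan`/`colspan`, so merged cells in real Layout output would need extra handling.

```python
from html.parser import HTMLParser


class TableRowsParser(HTMLParser):
    """Collects the text of each <td>/<th> cell, grouped by <tr>."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the <tr> currently open
        self._cell = None  # text fragments of the cell currently open

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._row is not None and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._cell is not None:  # tolerate a missing </td>
                self._row.append("".join(self._cell).strip())
                self._cell = None
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def html_table_to_rows(html: str) -> list[list[str]]:
    parser = TableRowsParser()
    parser.feed(html)
    parser.close()
    return parser.rows


def rows_to_markdown(rows: list[list[str]]) -> str:
    """Render rows as a Markdown pipe table; the first row becomes the header."""
    if not rows:
        return ""
    width = max(len(r) for r in rows)
    # Pad short rows so every line has the same number of columns
    padded = [r + [""] * (width - len(r)) for r in rows]

    def line(cells):
        # Escape pipes inside cells so they do not split columns
        return "| " + " | ".join(c.replace("|", "\\|") for c in cells) + " |"

    out = [line(padded[0]), line(["---"] * width)]
    out += [line(r) for r in padded[1:]]
    return "\n".join(out)


html = "<table><tr><th>Item</th><th>Value</th></tr><tr><td>A</td><td>1</td></tr></table>"
print(rows_to_markdown(html_table_to_rows(html)))
```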

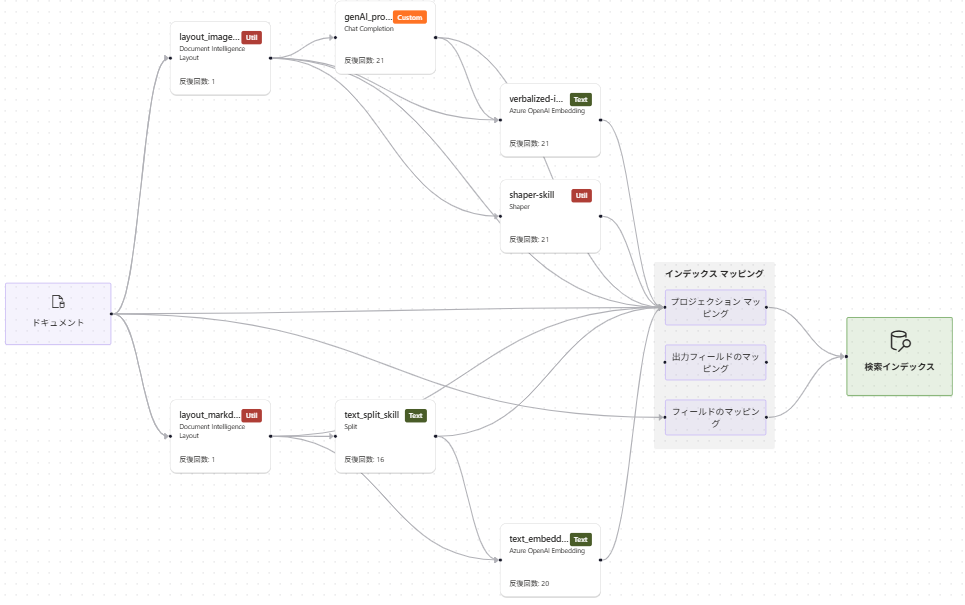

The finished pipeline

With a debug session, you can visualize the pipeline like this.

Prerequisites

I run the requests with REST Client 0.25.1 in VS Code.

If you turn on Decode Escaped Unicode Characters in the REST Client settings, Japanese text in the HTTP response body is decoded.

Steps 1 through 5 of the following article are also prerequisites.
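The Decode Escaped Unicode Characters setting only changes how the body is displayed. JSON can represent non-ASCII characters as `\uXXXX` escapes, and decoding restores the original text. Here is a small Python illustration of the same round trip, using a string taken from the search results later in this article:

```python
import json

# Text as it appears in the search results
text = "保険モニタリングレポート"

# json.dumps escapes non-ASCII by default (ensure_ascii=True), producing \uXXXX sequences
escaped = json.dumps(text)
print(escaped)

# Parsing the JSON string turns the escapes back into the original characters
decoded = json.loads(escaped)
print(decoded)
```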

Steps

1. Build the index

I built this by trial and error, so each step also ends with a delete request.

1.0. Define constants

First, define the fixed values.

```http
## Azure AI Search endpoint
@endpoint = https://<ai search resource name>.search.windows.net
## Index name
@index_name=test-index-2
## Skillset name
@skillset_name=test-skillset-2
## Data source name
@datasource_name=test-datasource-2
## Indexer name
@indexer_name=test-indexer-2
## Azure AI Search API key
@admin_key=<ai search key>
## Azure AI Search API version
@api_version=2025-09-01
## AOAI resource URI
@aoai_resourceUri=https://<AOAI resource name>.openai.azure.com
## AOAI API key
@aoai_apiKey=<key>
## Embedding model deployment name
@aoai_embedding_deploymentId=text-embedding-3-small
## Embedding model name
@aoai_embedding_modelName=text-embedding-3-small
## Embedding model dimensions
@aoai_embedding_dimension=1536
## Blob Storage container name
@blob_container_name=rag-doc-test
## Blob Storage connection string
@blob_connectionString=<connection string>
# Chat Completion settings (image verbalization)
@chatCompletionResourceUri = https://<aoai resource name>.openai.azure.com/openai/deployments/gpt-4o-mini/chat/completions?api-version=2025-01-01-preview
@chatCompletionKey = <key>
## Blob Storage container name (image storage)
@imageProjectionContainer=images
```
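REST Client replaces each `{{name}}` in the requests that follow with the matching `@name` file variable. The sketch below is only a rough mental model of that substitution. It is a simplified, hypothetical re-implementation, not REST Client's actual code, and it ignores system variables such as `{{$guid}}` as well as variables that reference other variables. The endpoint `example.search.windows.net` is a placeholder.

```python
import re

VARIABLE_LINE = re.compile(r"^@([A-Za-z_]\w*)\s*=\s*(.*?)\s*$")
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def parse_file_variables(http_text: str) -> dict[str, str]:
    """Collect `@name = value` lines (simplified; comments and requests are ignored)."""
    variables = {}
    for line in http_text.splitlines():
        match = VARIABLE_LINE.match(line)
        if match:
            variables[match.group(1)] = match.group(2)
    return variables


def substitute(template: str, variables: dict[str, str]) -> str:
    """Replace each {{name}} with its value; an unknown name raises KeyError."""
    def replace(match):
        return variables[match.group(1)]
    return PLACEHOLDER.sub(replace, template)


http_text = """
## Azure AI Search endpoint
@endpoint = https://example.search.windows.net
@index_name=test-index-2
@api_version=2025-09-01
"""
variables = parse_file_variables(http_text)
print(substitute("{{endpoint}}/indexes('{{index_name}}')?api-version={{api_version}}", variables))
```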

1.1. Create the data source

I use ADLS Gen2.

```http
### Create or update the data source
PUT {{endpoint}}/datasources('{{datasource_name}}')?api-version={{api_version}}
Content-Type: application/json
api-key: {{admin_key}}

{
  "name": "{{datasource_name}}",
  "description": null,
  "type": "adlsgen2",
  "subtype": null,
  "credentials": {
    "connectionString": "{{blob_connectionString}};"
  },
  "container": {
    "name": "{{blob_container_name}}",
    "query": null
  },
  "dataChangeDetectionPolicy": null,
  "dataDeletionDetectionPolicy": null,
  "encryptionKey": null
}

### Delete the data source
DELETE {{endpoint}}/datasources/{{datasource_name}}?api-version={{api_version}}
Content-Type: application/json
api-key: {{admin_key}}
```
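You may prefer to send the same request from a script instead of from VS Code. A sketch with Python's standard `urllib.request` follows. The endpoint, key, and connection string are placeholders, and `build_search_request` is a hypothetical helper. The sketch uses the `/datasources/{name}` path form, which the DELETE request above also uses. It only builds the request; the line that sends it is left commented out.

```python
import json
import urllib.request


def build_search_request(endpoint, path, api_version, admin_key, body=None, method="PUT"):
    """Hypothetical helper: build (but do not send) an Azure AI Search REST request."""
    url = f"{endpoint.rstrip('/')}/{path.lstrip('/')}?api-version={api_version}"
    data = json.dumps(body).encode("utf-8") if body is not None else None
    headers = {"Content-Type": "application/json", "api-key": admin_key}
    return urllib.request.Request(url, data=data, headers=headers, method=method)


# Placeholder values; replace with your own
datasource = {
    "name": "test-datasource-2",
    "type": "adlsgen2",
    "credentials": {"connectionString": "<connection string>"},
    "container": {"name": "rag-doc-test"},
}
req = build_search_request(
    "https://example.search.windows.net",
    "datasources/test-datasource-2",
    "2025-09-01",
    "<ai search key>",
    body=datasource,
)
print(req.get_method(), req.full_url)

# To actually send it:
# with urllib.request.urlopen(req) as resp:
#     print(resp.status)
```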

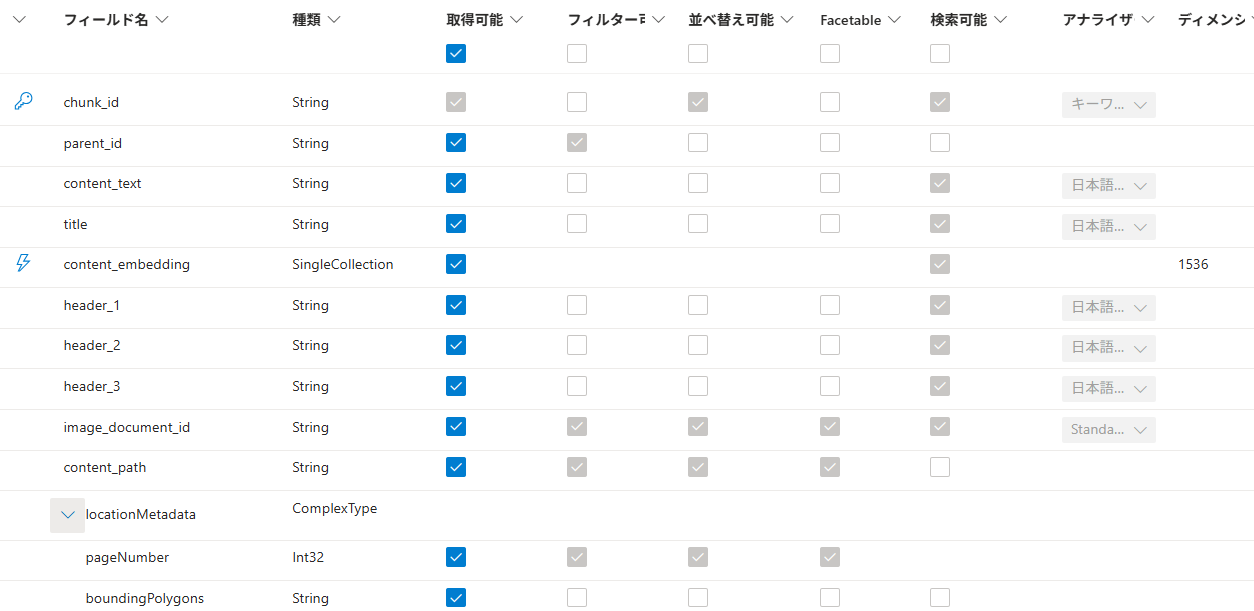

1.2. Create the index

The index has fields for the headings, down to h3.
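The Layout skill's Markdown output is an array of pieces. Each piece has a `content` and a `sections` object holding its h1 to h3 headings, and the index projection later maps those headings to `header_1` through `header_3`. The sketch below is a simplified, hypothetical model of that grouping, not the skill's real implementation. For example, whether heading lines remain in `content` is an assumption. The sample headings come from the search result shown later.

```python
import re

# Only h1 to h3 count as section boundaries; "####" lines stay in the content
HEADING = re.compile(r"^(#{1,3})\s+(.*\S)\s*$")


def split_markdown_sections(markdown: str) -> list[dict]:
    """Group Markdown text under its current h1/h2/h3 headings (simplified model)."""
    sections = []
    current = {"h1": None, "h2": None, "h3": None}
    buffer = []

    def flush():
        text = "\n".join(buffer).strip()
        if text:
            sections.append({"content": text, "sections": dict(current)})
        buffer.clear()

    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            flush()
            level = len(match.group(1))
            current[f"h{level}"] = match.group(2)
            # A new heading resets every deeper level
            for deeper in range(level + 1, 4):
                current[f"h{deeper}"] = None
        else:
            buffer.append(line)
    flush()
    return sections


md = "# 保険モニタリングレポート【概要】\n## 環境変化と諸課題\n### 環境変化\n本文A\n## 次章\n本文B"
for section in split_markdown_sections(md):
    print(section)
```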

```http
### Create the index
PUT {{endpoint}}/indexes('{{index_name}}')?api-version={{api_version}}
Content-Type: application/json
api-key: {{admin_key}}

{
  "name": "{{index_name}}",
  "fields": [
    {
      "name": "chunk_id",
      "type": "Edm.String",
      "key": true,
      "retrievable": true,
      "stored": true,
      "searchable": true,
      "filterable": false,
      "sortable": true,
      "facetable": false,
      "analyzer": "keyword",
      "synonymMaps": []
    },
    {
      "name": "parent_id",
      "type": "Edm.String",
      "key": false,
      "retrievable": true,
      "stored": true,
      "searchable": false,
      "filterable": true,
      "sortable": false,
      "facetable": false,
      "synonymMaps": []
    },
    {
      "name": "content_text",
      "type": "Edm.String",
      "key": false,
      "retrievable": true,
      "stored": true,
      "searchable": true,
      "analyzer": "ja.lucene",
      "filterable": false,
      "sortable": false,
      "facetable": false,
      "synonymMaps": []
    },
    {
      "name": "title",
      "type": "Edm.String",
      "key": false,
      "retrievable": true,
      "stored": true,
      "searchable": true,
      "analyzer": "ja.lucene",
      "filterable": false,
      "sortable": false,
      "facetable": false,
      "synonymMaps": []
    },
    {
      "name": "content_embedding",
      "type": "Collection(Edm.Single)",
      "key": false,
      "retrievable": true,
      "stored": true,
      "searchable": true,
      "filterable": false,
      "sortable": false,
      "facetable": false,
      "synonymMaps": [],
      "dimensions": {{aoai_embedding_dimension}},
      "vectorSearchProfile": "azureOpenAi-text-profile"
    },
    {
      "name": "header_1",
      "type": "Edm.String",
      "searchable": true,
      "analyzer": "ja.lucene",
      "filterable": false,
      "retrievable": true,
      "stored": true,
      "sortable": false,
      "facetable": false,
      "key": false
    },
    {
      "name": "header_2",
      "type": "Edm.String",
      "searchable": true,
      "analyzer": "ja.lucene",
      "filterable": false,
      "retrievable": true,
      "stored": true,
      "sortable": false,
      "facetable": false,
      "key": false
    },
    {
      "name": "header_3",
      "type": "Edm.String",
      "searchable": true,
      "analyzer": "ja.lucene",
      "filterable": false,
      "retrievable": true,
      "stored": true,
      "sortable": false,
      "facetable": false,
      "key": false
    },
    {
      "name": "image_document_id",
      "type": "Edm.String",
      "filterable": true,
      "retrievable": true
    },
    {
      "name": "content_path",
      "type": "Edm.String",
      "searchable": false,
      "retrievable": true
    },
    {
      "name": "locationMetadata",
      "type": "Edm.ComplexType",
      "fields": [
        {
          "name": "pageNumber",
          "type": "Edm.Int32",
          "searchable": false,
          "retrievable": true
        },
        {
          "name": "boundingPolygons",
          "type": "Edm.String",
          "searchable": false,
          "retrievable": true,
          "filterable": false,
          "sortable": false,
          "facetable": false
        }
      ]
    }
  ],
  "scoringProfiles": [],
  "suggesters": [],
  "analyzers": [],
  "tokenizers": [],
  "tokenFilters": [],
  "charFilters": [],
  "normalizers": [],
  "similarity": {
    "@odata.type": "#Microsoft.Azure.Search.BM25Similarity"
  },
  "semantic": {
    "defaultConfiguration": "semantic-configuration",
    "configurations": [
      {
        "name": "semantic-configuration",
        "prioritizedFields": {
          "titleField": {
            "fieldName": "title"
          },
          "prioritizedContentFields": [
            {
              "fieldName": "content_text"
            }
          ],
          "prioritizedKeywordsFields": []
        }
      }
    ]
  },
  "vectorSearch": {
    "algorithms": [
      {
        "name": "vector-algorithm",
        "kind": "hnsw",
        "hnswParameters": {
          "m": 4,
          "efConstruction": 400,
          "efSearch": 500,
          "metric": "cosine"
        }
      }
    ],
    "profiles": [
      {
        "name": "azureOpenAi-text-profile",
        "algorithm": "vector-algorithm",
        "vectorizer": "azureOpenAi-text-vectorizer"
      }
    ],
    "vectorizers": [
      {
        "name": "azureOpenAi-text-vectorizer",
        "kind": "azureOpenAI",
        "azureOpenAIParameters": {
          "resourceUri": "{{aoai_resourceUri}}",
          "deploymentId": "{{aoai_embedding_deploymentId}}",
          "apiKey": "{{aoai_apiKey}}",
          "modelName": "{{aoai_embedding_modelName}}"
        }
      }
    ],
    "compressions": []
  }
}
```
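The vector profile uses HNSW with `metric: cosine`, so nearest neighbors are ranked by cosine similarity between the query embedding and `content_embedding`. Here is a plain illustration of that quantity. The service may report a rescaled `@search.score` rather than this raw value, and the toy vectors are made up.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Toy 3-dimensional vectors; the real content_embedding vectors have 1536 dimensions here
print(cosine_similarity([1.0, 0.0, 0.0], [1.0, 1.0, 0.0]))
```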

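1.3. Create the skillset

The non-preview api-version returned an error, so this request uses a preview version (2025-08-01-preview).

I could not get Markdown conversion and image extraction out of a single skill, so I split them into two Document Intelligence Layout skills. The image-extraction skill does not receive the Markdown text as input, so image chunks do not get the h1 to h3 headings.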
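On the image side, the GenAI Prompt skill sends each normalized image to the gpt-4o-mini deployment along with the system and user messages. If you want to try a prompt by hand before wiring it into the skillset, the sketch below builds a chat completions body with an inline image. It uses the standard `image_url` data-URL message format. I cannot confirm that the skill builds exactly this payload internally. `build_image_chat_payload` is a hypothetical helper, and the system message and image bytes are shortened placeholders. You would POST the result to `{{chatCompletionResourceUri}}` with an `api-key` header.

```python
import base64


def build_image_chat_payload(system_message: str, user_message: str,
                             image_bytes: bytes, mime_type: str = "image/jpeg") -> dict:
    """Hypothetical helper: a chat completions body with one inline (data URL) image."""
    data_url = f"data:{mime_type};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "messages": [
            {"role": "system", "content": system_message},
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": user_message},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            },
        ]
    }


payload = build_image_chat_payload(
    "You are tasked with generating concise, accurate descriptions of images.",  # shortened
    "Please describe this image.",
    b"\xff\xd8",  # placeholder bytes standing in for a real JPEG
)
print(payload["messages"][1]["content"][1]["image_url"]["url"])
```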

```http
### Create the skillset
PUT {{endpoint}}/skillsets/{{skillset_name}}?api-version=2025-08-01-preview
content-type: application/json
api-key: {{admin_key}}

{
  "name": "{{skillset_name}}",
  "description": "Skillset to chunk documents and generate embeddings",
  "skills": [
    {
      "@odata.type": "#Microsoft.Skills.Util.DocumentIntelligenceLayoutSkill",
      "name": "layout_markdown_h3",
      "description": "Extract Markdown up to h3 (text only)",
      "context": "/document",
      "outputMode": "oneToMany",
      "outputFormat": "markdown",
      "markdownHeaderDepth": "h3",
      "inputs": [
        { "name": "file_data", "source": "/document/file_data" }
      ],
      "outputs": [
        { "name": "markdown_document", "targetName": "markdownDocument" }
      ]
    },
    {
      "@odata.type": "#Microsoft.Skills.Util.DocumentIntelligenceLayoutSkill",
      "name": "layout_images_with_location",
      "description": "Extract normalized images (includes figures/charts) and location metadata",
      "context": "/document",
      "outputMode": "oneToMany",
      "outputFormat": "text",
      "extractionOptions": [ "images", "locationMetadata" ],
      "inputs": [
        { "name": "file_data", "source": "/document/file_data" }
      ],
      "outputs": [
        { "name": "normalized_images", "targetName": "normalized_images" }
      ]
    },
    {
      "@odata.type": "#Microsoft.Skills.Text.SplitSkill",
      "name": "text_split_skill",
      "description": "Split skill to chunk documents",
      "context": "/document/markdownDocument/*",
      "inputs": [
        {
          "name": "text",
          "source": "/document/markdownDocument/*/content",
          "inputs": []
        }
      ],
      "outputs": [
        {
          "name": "textItems",
          "targetName": "pages"
        }
      ],
      "defaultLanguageCode": "ja",
      "textSplitMode": "pages",
      "maximumPageLength": 2000,
      "pageOverlapLength": 500
    },
    {
      "@odata.type": "#Microsoft.Skills.Text.AzureOpenAIEmbeddingSkill",
      "name": "text_embedding_skill",
      "context": "/document/markdownDocument/*/pages/*",
      "inputs": [
        {
          "name": "text",
          "source": "/document/markdownDocument/*/pages/*",
          "inputs": []
        }
      ],
      "outputs": [
        {
          "name": "embedding",
          "targetName": "text_vector"
        }
      ],
      "resourceUri": "{{aoai_resourceUri}}",
      "deploymentId": "{{aoai_embedding_deploymentId}}",
      "apiKey": "{{aoai_apiKey}}",
      "modelName": "{{aoai_embedding_modelName}}",
      "dimensions": {{aoai_embedding_dimension}}
    },
    {
      "@odata.type": "#Microsoft.Skills.Custom.ChatCompletionSkill",
      "name": "genAI_prompt_skill",
      "description": "GenAI Prompt skill for image verbalization",
      "uri": "{{chatCompletionResourceUri}}",
      "timeout": "PT1M",
      "apiKey": "{{chatCompletionKey}}",
      "context": "/document/normalized_images/*",
      "inputs": [
        {
          "name": "systemMessage",
          "source": "='You are tasked with generating concise, accurate descriptions of images, figures, diagrams, or charts in documents. The goal is to capture the key information and meaning conveyed by the image without including extraneous details like style, colors, visual aesthetics, or size.\n\nInstructions:\nContent Focus: Describe the core content and relationships depicted in the image.\n\nFor diagrams, specify the main elements and how they are connected or interact.\nFor charts, highlight key data points, trends, comparisons, or conclusions.\nFor figures or technical illustrations, identify the components and their significance.\nClarity & Precision: Use concise language to ensure clarity and technical accuracy. Avoid subjective or interpretive statements.\n\nAvoid Visual Descriptors: Exclude details about:\n\nColors, shading, and visual styles.\nImage size, layout, or decorative elements.\nFonts, borders, and stylistic embellishments.\nContext: If relevant, relate the image to the broader content of the technical document or the topic it supports.\n\nExample Descriptions:\nDiagram: \"A flowchart showing the four stages of a machine learning pipeline: data collection, preprocessing, model training, and evaluation, with arrows indicating the sequential flow of tasks.\"\n\nChart: \"A bar chart comparing the performance of four algorithms on three datasets, showing that Algorithm A consistently outperforms the others on Dataset 1.\"\n\nFigure: \"A labeled diagram illustrating the components of a transformer model, including the encoder, decoder, self-attention mechanism, and feedforward layers.\"'"
        },
        {
          "name": "userMessage",
          "source": "='Please describe this image.'"
        },
        {
          "name": "image",
          "source": "/document/normalized_images/*/data"
        }
      ],
      "outputs": [
        {
          "name": "response",
          "targetName": "verbalizedImage"
        }
      ]
    },
    {
      "@odata.type": "#Microsoft.Skills.Text.AzureOpenAIEmbeddingSkill",
      "name": "verbalized-image-embedding-skill",
      "description": "Embedding skill for verbalized images",
      "context": "/document/normalized_images/*",
      "inputs": [
        {
          "name": "text",
          "source": "/document/normalized_images/*/verbalizedImage",
          "inputs": []
        }
      ],
      "outputs": [
        {
          "name": "embedding",
          "targetName": "verbalizedImage_vector"
        }
      ],
      "resourceUri": "{{aoai_resourceUri}}",
      "deploymentId": "{{aoai_embedding_deploymentId}}",
      "apiKey": "{{aoai_apiKey}}",
      "dimensions": {{aoai_embedding_dimension}},
      "modelName": "{{aoai_embedding_modelName}}"
    },
    {
      "@odata.type": "#Microsoft.Skills.Util.ShaperSkill",
      "name": "shaper-skill",
      "description": "Shaper skill to reshape the data to fit the index schema",
      "context": "/document/normalized_images/*",
      "inputs": [
        {
          "name": "imagePath",
          "source": "='{{imageProjectionContainer}}/'+$(/document/normalized_images/*/imagePath)",
          "inputs": []
        }
      ],
      "outputs": [
        {
          "name": "output",
          "targetName": "new_normalized_images"
        }
      ]
    }
  ],
  "indexProjections": {
    "selectors": [
      {
        "targetIndexName": "{{index_name}}",
        "parentKeyFieldName": "parent_id",
        "sourceContext": "/document/markdownDocument/*/pages/*",
        "mappings": [
          {
            "name": "content_embedding",
            "source": "/document/markdownDocument/*/pages/*/text_vector"
          },
          {
            "name": "content_text",
            "source": "/document/markdownDocument/*/pages/*"
          },
          {
            "name": "title",
            "source": "/document/title"
          },
          {
            "name": "header_1",
            "source": "/document/markdownDocument/*/sections/h1"
          },
          {
            "name": "header_2",
            "source": "/document/markdownDocument/*/sections/h2"
          },
          {
            "name": "header_3",
            "source": "/document/markdownDocument/*/sections/h3"
          }
        ]
      },
      {
        "targetIndexName": "{{index_name}}",
        "parentKeyFieldName": "image_document_id",
        "sourceContext": "/document/normalized_images/*",
        "mappings": [
          {
            "name": "content_text",
            "source": "/document/normalized_images/*/verbalizedImage"
          },
          {
            "name": "content_embedding",
            "source": "/document/normalized_images/*/verbalizedImage_vector"
          },
          {
            "name": "content_path",
            "source": "/document/normalized_images/*/new_normalized_images/imagePath"
          },
          {
            "name": "title",
            "source": "/document/title"
          },
          {
            "name": "locationMetadata",
            "source": "/document/normalized_images/*/locationMetadata"
          }
        ]
      }
    ],
    "parameters": {
      "projectionMode": "skipIndexingParentDocuments"
    }
  }
}

### Delete the skillset
DELETE {{endpoint}}/skillsets/{{skillset_name}}?api-version={{api_version}}
content-type: application/json
api-key: {{admin_key}}
```
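The Split Skill above uses `textSplitMode: pages` with `maximumPageLength: 2000` and `pageOverlapLength: 500`. To get a feel for what the overlap means, here is a simplified, character-based fixed-window sketch. The real skill tries to break at sentence boundaries, so its chunk edges will differ. Treat this as an approximation of chunk sizes and overlap, not of the exact splits. `split_pages` is a hypothetical name.

```python
def split_pages(text: str, max_len: int = 2000, overlap: int = 500) -> list[str]:
    """Fixed-window chunking: each chunk repeats the last `overlap` chars of the previous one."""
    if overlap >= max_len:
        raise ValueError("overlap must be smaller than max_len")
    if not text:
        return []
    step = max_len - overlap  # how far the window advances each time
    chunks = []
    start = 0
    while True:
        chunks.append(text[start:start + max_len])
        if start + max_len >= len(text):
            break  # this window already reaches the end of the text
        start += step
    return chunks


sample = "".join(str(i % 10) for i in range(4500))
print([len(c) for c in split_pages(sample)])
```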

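I had AI draw the field flow in Mermaid notation. It leaves out a few details, but what it shows is correct.

It renders small on Qiita, though, so try viewing it with a tool such as the one below.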

1.4. Register the indexer

Nothing unusual here.

```http
### Create the indexer
PUT {{endpoint}}/indexers/{{indexer_name}}?api-version={{api_version}}
Content-Type: application/json
api-key: {{admin_key}}

{
  "name": "{{indexer_name}}",
  "description": null,
  "dataSourceName": "{{datasource_name}}",
  "skillsetName": "{{skillset_name}}",
  "targetIndexName": "{{index_name}}",
  "disabled": null,
  "schedule": null,
  "parameters": {
    "batchSize": null,
    "maxFailedItems": null,
    "maxFailedItemsPerBatch": null,
    "configuration": {
      "dataToExtract": "contentAndMetadata",
      "parsingMode": "default",
      "allowSkillsetToReadFileData": true
    }
  },
  "fieldMappings": [
    {
      "sourceFieldName": "metadata_storage_name",
      "targetFieldName": "title",
      "mappingFunction": null
    }
  ],
  "outputFieldMappings": [],
  "encryptionKey": null
}

### Delete the indexer
DELETE {{endpoint}}/indexers/{{indexer_name}}?api-version={{api_version}}
Content-Type: application/json
api-key: {{admin_key}}
```
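With no schedule set, the indexer runs once when it is created. You can check progress with `GET {{endpoint}}/indexers/{{indexer_name}}/status?api-version={{api_version}}`. The sketch below pulls the headline numbers out of that response. The field names (`lastResult`, `itemsProcessed`, `itemsFailed`, `errors`, `warnings`, `errorMessage`) are my assumption of the response shape, `summarize_indexer_status` is a hypothetical helper, and the sample data is made up.

```python
from typing import Any


def summarize_indexer_status(status: dict[str, Any]) -> dict[str, Any]:
    """Pull the headline numbers out of an indexer status response (assumed shape)."""
    last = status.get("lastResult") or {}  # absent until the first run has started
    return {
        "indexer_status": status.get("status"),
        "last_status": last.get("status"),
        "items_processed": last.get("itemsProcessed", 0),
        "items_failed": last.get("itemsFailed", 0),
        "error_messages": [e.get("errorMessage", "") for e in last.get("errors") or []],
        "warning_count": len(last.get("warnings") or []),
    }


# Made-up sample response for illustration
sample_status = {
    "status": "running",
    "lastResult": {
        "status": "transientFailure",
        "itemsProcessed": 2,
        "itemsFailed": 1,
        "errors": [{"errorMessage": "sample error"}],
        "warnings": [],
    },
}
print(summarize_indexer_status(sample_status))
```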

2. Search

2.1. Full search

A full (`*`) search that also returns locationMetadata.

```http
### Query the index
POST {{endpoint}}/indexes/{{index_name}}/docs/search?api-version={{api_version}}
Content-Type: application/json
api-key: {{admin_key}}

{
  "search": "*",
  "count": true,
  "select": "chunk_id, content_text, title, header_1, header_2, header_3, content_path, image_document_id, locationMetadata"
}
```

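Here is part of the result, including an image chunk whose locationMetadata holds the page number and boundingPolygons. Text chunks can produce boundingPolygons in a similar way. In this setup, though, the text chunk below has `locationMetadata: null`, because the text-side index projection does not map that field.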

```json
{
  "@search.score": 1.0,
  "chunk_id": "c7b0f04fdd0b_aHR0cHM6Ly9zdG9yYWdlcmFnanBlLmJsb2IuY29yZS53aW5kb3dzLm5ldC9yYWctZG9jLXRlc3QvMDEucGRm0_markdownDocument_3_pages_0",
  "content_text": "■ 少子高齢化や自然災害の激甚化、自動車保険市場の縮小等の中長期的な事業環境の変化 など",
  "title": "01.pdf",
  "header_1": "保険モニタリングレポート【概要】",
  "header_2": "環境変化と諸課題",
  "header_3": "環境変化",
  "image_document_id": null,
  "content_path": null,
  "locationMetadata": null
},
{
  "@search.score": 1.0,
  "chunk_id": "6f495a8c939e_aHR0cHM6Ly9zdG9yYWdlcmFnanBlLmJsb2IuY29yZS53aW5kb3dzLm5ldC9yYWctZG9jLXRlc3QvMDEucGRm0_normalized_images_0",
  "content_text": "The image features a logo consisting of stylized letters that represent the acronym \"S.\" The design includes overlapping shapes that suggest movement or flow, indicating dynamism and innovation. The composition emphasizes a streamlined form, suggesting efficiency.",
  "title": "01.pdf",
  "header_1": null,
  "header_2": null,
  "header_3": null,
  "image_document_id": "aHR0cHM6Ly9zdG9yYWdlcmFnanBlLmJsb2IuY29yZS53aW5kb3dzLm5ldC9yYWctZG9jLXRlc3QvMDEucGRm0",
  "content_path": "images/aHR0cHM6Ly9zdG9yYWdlcmFnanBlLmJsb2IuY29yZS53aW5kb3dzLm5ldC9yYWctZG9jLXRlc3QvMDEucGRm0/normalized_images_0.jpg",
  "locationMetadata": {
    "pageNumber": 2,
    "boundingPolygons": "[[{\"x\":0.0474,\"y\":0.0402},{\"x\":0.5967,\"y\":0.0403},{\"x\":0.5968,\"y\":0.5351},{\"x\":0.0475,\"y\":0.535}]]"
  }
},
```
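A few helpers may be handy for working with these results in code. `boundingPolygons` is stored as a JSON string (the index declares it `Edm.String`), so it has to be parsed before use. `image_document_id` appears to be the URL-token base64 encoding of the blob URL, where the trailing digit gives the number of `=` padding characters. That reading of the value is my assumption, not something stated above. The helper names are hypothetical, and the sketch does not assume any particular coordinate unit for the polygons.

```python
import base64
import json


def parse_bounding_polygons(raw: str) -> list[list[tuple[float, float]]]:
    """boundingPolygons is a JSON string: a list of polygons, each a list of {x, y} points."""
    return [[(point["x"], point["y"]) for point in polygon] for polygon in json.loads(raw)]


def bounding_box(polygon: list[tuple[float, float]]) -> tuple[float, float, float, float]:
    """Axis-aligned box (min_x, min_y, max_x, max_y), in whatever unit the polygon uses."""
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    return min(xs), min(ys), max(xs), max(ys)


def decode_parent_key(key: str) -> str:
    """Assumes URL-token base64: the last digit is the number of '=' padding characters."""
    body, padding = key[:-1], int(key[-1])
    return base64.urlsafe_b64decode(body + "=" * padding).decode("utf-8")


def split_text_and_images(results: list[dict]) -> tuple[list[dict], list[dict]]:
    """In this index, only image chunks have image_document_id set."""
    text_chunks = [r for r in results if r.get("image_document_id") is None]
    image_chunks = [r for r in results if r.get("image_document_id") is not None]
    return text_chunks, image_chunks


raw = '[[{"x":0.0474,"y":0.0402},{"x":0.5967,"y":0.0403},{"x":0.5968,"y":0.5351},{"x":0.0475,"y":0.535}]]'
print(bounding_box(parse_bounding_polygons(raw)[0]))
print(decode_parent_key("aHR0cHM6Ly9zdG9yYWdlcmFnanBlLmJsb2IuY29yZS53aW5kb3dzLm5ldC9yYWctZG9jLXRlc3QvMDEucGRm0"))
```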