Summary

On HDP 3.1.x, you can create Hive tables from Spark through the Hive Warehouse Connector (HWC) and have their metadata reflected in Atlas automatically.

Steps:

1) Integrate Atlas with Hive

2) Prepare HWC

Prerequisite: both the Hive Warehouse Connector (HWC) and low-latency analytical processing (LLAP) must be in use.

https://docs.cloudera.com/HDPDocuments/HDP3/HDP-3.1.4/integrating-hive/content/hive_configure_a_spark_hive_connection.html

3) Connect Spark to Hive

Add the following Spark settings:

Set the values of these properties as follows:

spark.sql.hive.hiveserver2.jdbc.url

In Ambari, copy the value from Services > Hive > Summary > HIVESERVER2 INTERACTIVE JDBC URL.

spark.datasource.hive.warehouse.metastoreUri

Copy the value from hive.metastore.uris. In Hive, at the hive> prompt, enter set hive.metastore.uris and copy the output. For example, thrift://mycluster-1.com:9083.

spark.hadoop.hive.llap.daemon.service.hosts

Copy the value from Advanced hive-interactive-site > hive.llap.daemon.service.hosts.

spark.hadoop.hive.zookeeper.quorum

Copy the value from Advanced hive-site > hive.zookeeper.quorum.

Example:
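As a rough illustration, the four properties above might look like the fragment below once filled in, for instance under Custom spark2-defaults in Ambari or passed as `--conf` options to `spark-shell`. Only the metastore URI comes from the text above. The ZooKeeper host names, the `hiveserver2-interactive` namespace, and the `@llap0` value are assumptions that show the typical shape of each value, so copy the real ones from Ambari.

```properties
# Placeholder values: replace every host name with the one shown in Ambari.
spark.sql.hive.hiveserver2.jdbc.url            jdbc:hive2://zk1.example.com:2181,zk2.example.com:2181,zk3.example.com:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2-interactive
spark.datasource.hive.warehouse.metastoreUri   thrift://mycluster-1.com:9083
spark.hadoop.hive.llap.daemon.service.hosts    @llap0
spark.hadoop.hive.zookeeper.quorum             zk1.example.com:2181,zk2.example.com:2181,zk3.example.com:2181
```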

4) Verify the setup

https://docs.cloudera.com/HDPDocuments/HDP3/HDP-3.1.4/integrating-hive/content/hive-hwc-catalog-operations.html

https://docs.cloudera.com/HDPDocuments/HDP3/HDP-3.1.4/integrating-hive/content/hive_hivewarehousesession_api_operations.html

[centos@zzeng-hdp-1 ~/git/ops/hwx-field-cloud/hdp]$ spark-shell --jars /usr/hdp/current/hive_warehouse_connector/hive-warehouse-connector-assembly-1.0.0.3.1.4.0-315.jar

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

19/11/30 04:21:12 WARN Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

Spark context Web UI available at http://zzeng-hdp-1.field.hortonworks.com:4041

Spark context available as 'sc' (master = yarn, app id = application_1575083036450_0018).

Spark session available as 'spark'.

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.3.2.3.1.4.0-315
      /_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_112)

Type in expressions to have them evaluated.

Type :help for more information.

scala> import com.hortonworks.hwc.HiveWarehouseSession

import com.hortonworks.hwc.HiveWarehouseSession

scala> import com.hortonworks.hwc.HiveWarehouseSession._

import com.hortonworks.hwc.HiveWarehouseSession._

scala> val hive = HiveWarehouseSession.session(spark).build()

hive: com.hortonworks.spark.sql.hive.llap.HiveWarehouseSessionImpl = com.hortonworks.spark.sql.hive.llap.HiveWarehouseSessionImpl@7b88dd58

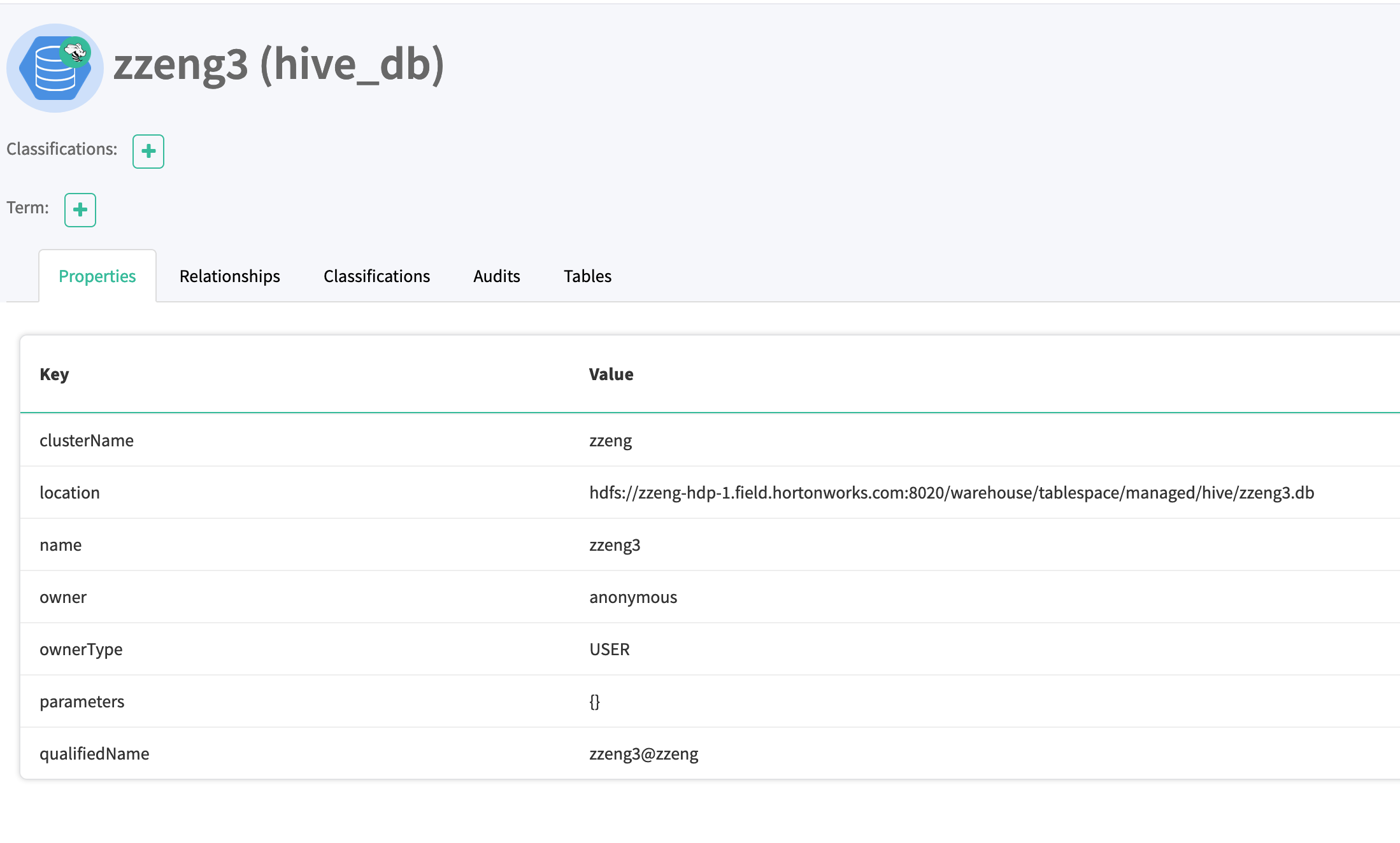

scala> hive.createDatabase("zzeng3", false);

scala> hive.setDatabase("zzeng3")

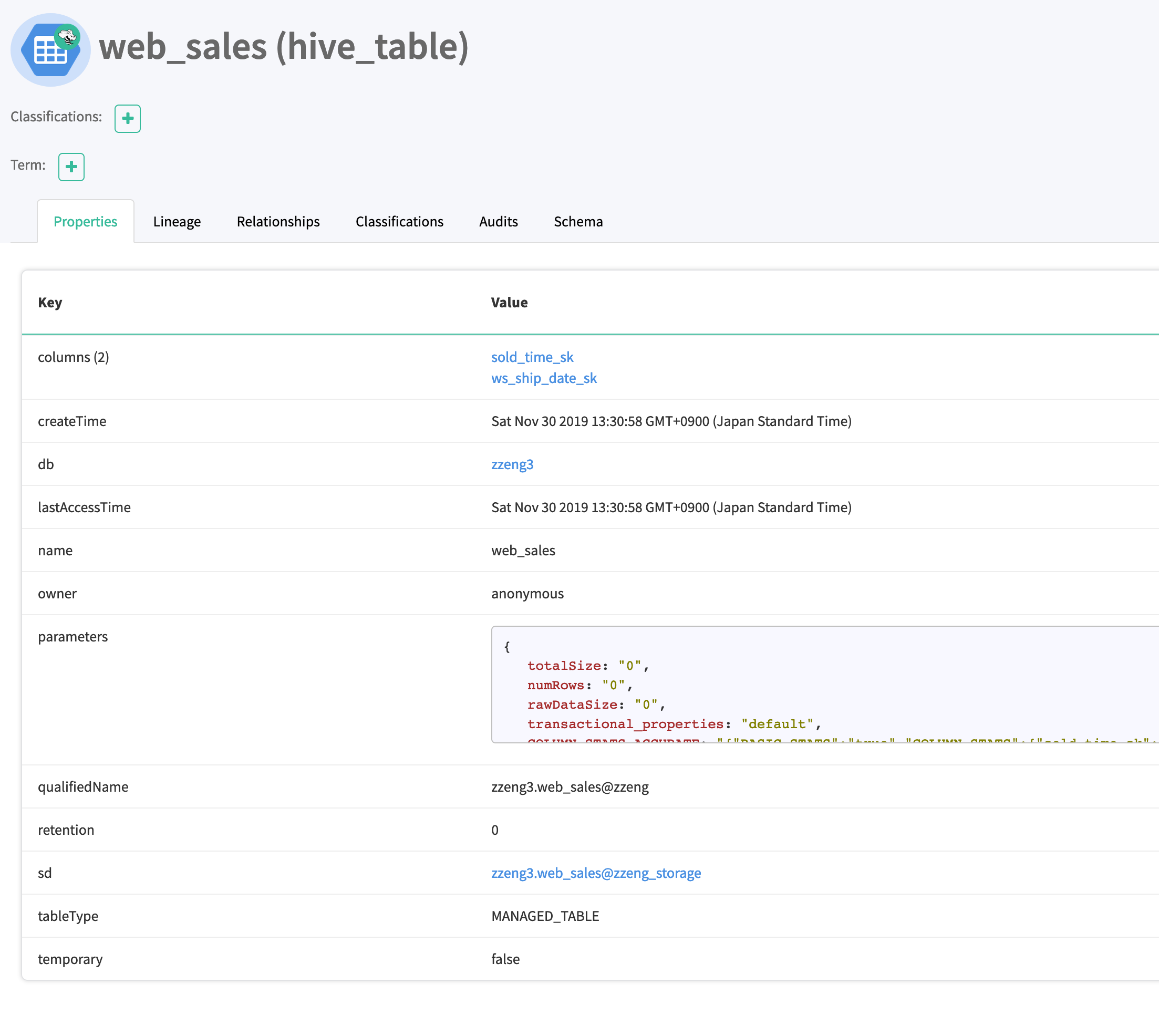

scala> hive.createTable("web_sales").ifNotExists().column("sold_time_sk", "bigint").column("ws_ship_date_sk", "bigint").create()
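As a follow-up in the same session, the new table can be inspected and exercised through HWC. The sketch below assumes the `hive` session built above is still open and that the current database is `zzeng3`. The two sample rows are made up for illustration, and `.mode("append")` is included on the assumption that the installed HWC build accepts a save mode.

```scala
// Continues the spark-shell session above: `spark` and `hive` already exist.
import com.hortonworks.hwc.HiveWarehouseSession._ // brings HIVE_WAREHOUSE_CONNECTOR into scope
import spark.implicits._

// List the tables in the current database and inspect the new table's columns.
hive.showTables().show()
hive.describeTable("web_sales").show()

// Write two illustrative rows through HWC (the values are invented).
val rows = Seq((1L, 20191130L), (2L, 20191201L))
  .toDF("sold_time_sk", "ws_ship_date_sk")

rows.write
  .format(HIVE_WAREHOUSE_CONNECTOR)
  .option("table", "web_sales")
  .mode("append")
  .save()

// Read the rows back through HiveServer2 Interactive (LLAP).
hive.executeQuery("SELECT sold_time_sk, ws_ship_date_sk FROM web_sales").show()
```

If the write succeeds, the final query should list the two rows, which confirms that both the JDBC URL and the LLAP settings from step 3 are working.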

scala>
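To confirm that the table metadata reached Atlas without opening the UI, one option is the Atlas v2 basic-search REST endpoint. The helpers below are a rough sketch and not part of HWC: `atlasSearchUrl` and `atlasSearch` are hypothetical names, and the base URL, user, and password are placeholders. The sketch assumes Atlas accepts HTTP basic authentication; a Kerberized cluster would need SPNEGO instead.

```scala
import java.net.{HttpURLConnection, URL, URLEncoder}
import java.nio.charset.StandardCharsets
import java.util.Base64
import scala.io.Source

// Build the Atlas v2 basic-search URL for an entity type and a search text.
def atlasSearchUrl(baseUrl: String, typeName: String, query: String): String = {
  val base = baseUrl.stripSuffix("/")
  val encodedType = URLEncoder.encode(typeName, "UTF-8")
  val encodedQuery = URLEncoder.encode(query, "UTF-8")
  s"$base/api/atlas/v2/search/basic?typeName=$encodedType&query=$encodedQuery"
}

// Issue the GET with HTTP basic auth and return the raw JSON response body.
def atlasSearch(baseUrl: String, user: String, password: String,
                typeName: String, query: String): String = {
  val conn = new URL(atlasSearchUrl(baseUrl, typeName, query))
    .openConnection().asInstanceOf[HttpURLConnection]
  val credentials = s"$user:$password".getBytes(StandardCharsets.UTF_8)
  val token = Base64.getEncoder.encodeToString(credentials)
  conn.setRequestMethod("GET")
  conn.setRequestProperty("Authorization", s"Basic $token")
  conn.setRequestProperty("Accept", "application/json")
  try Source.fromInputStream(conn.getInputStream, "UTF-8").mkString
  finally conn.disconnect()
}
```

For example, `println(atlasSearch("http://atlas-host.example.com:21000", "admin", "<password>", "hive_table", "web_sales"))` should print a JSON document whose `entities` array includes `web_sales` once the Hive hook has propagated the table.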

Limitations:

https://docs.cloudera.com/HDPDocuments/HDP3/HDP-3.1.4/integrating-hive/content/hive_hivewarehouseconnector_for_handling_apache_spark_data.html

1) Only ORC tables are supported.

2) The Spark Thrift Server is not supported.