Overview

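Captivated by Kafka, I thought, "Let's just try running it on my own machine for now..." As an SE who only knew infrastructure, I bought a new MacBook Pro and, drawing on articles by experienced engineers on Qiita and elsewhere, documented in seven earlier posts the steps up to verifying Kafka in a local Docker environment. Please see here for those seven posts.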

This time, I looked at how to redefine the docker-compose.yml of the Kafka verification system that ran in that Docker environment as Kubernetes manifests (YAML format).

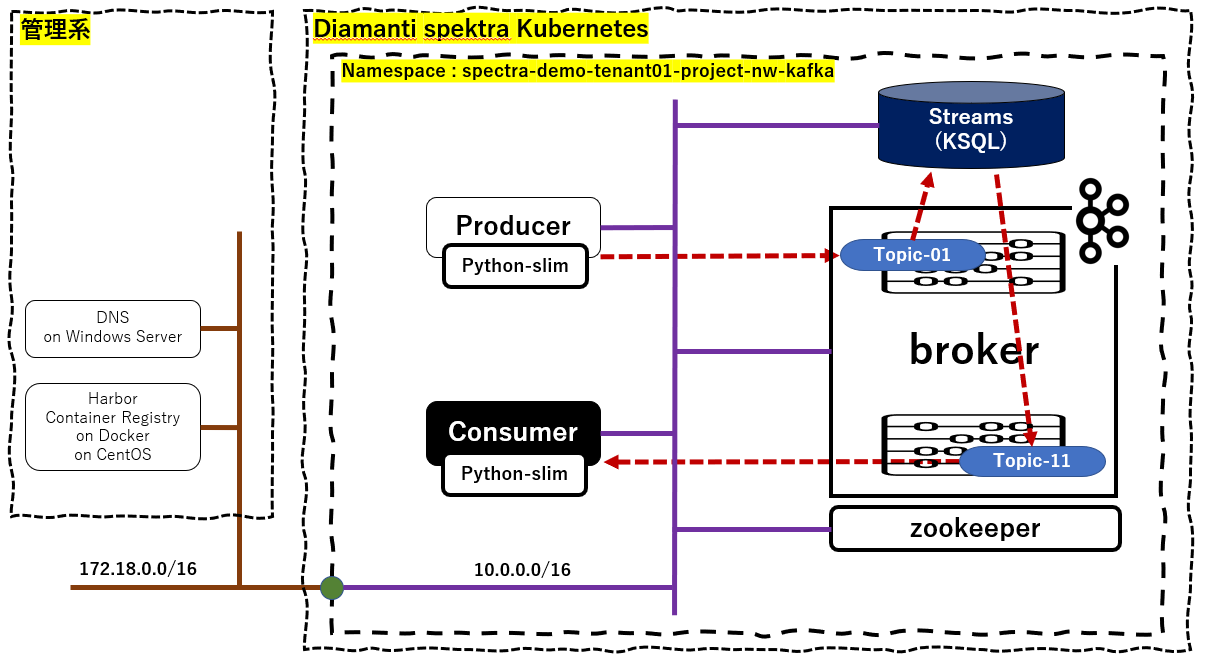

The overall configuration of the Kafka verification system in the Kubernetes environment is shown in the figure below.

I defined the manifests according to the figure above.

| Pod | Manifest |
|---|---|
| Producer/Consumer | python-iot-dev.yaml |
| zookeeper | zookeeper-v1.yaml |
| broker | broker-v1.yaml |
| ksql-server/ksql-cli | ksql-v1.yaml |

For the Producer/Consumer, I also wrote a Dockerfile to build its Docker image.

Execution environment

macOS Big Sur 11.1

Docker version 20.10.2, build 2291f61

Python 3.8.3

Kubernetes environment

Diamanti spektra kubernetes

Harbor

Windows DNS

Working directory structure

The working directory is laid out as follows.

```
$ tree
.
├── Dockerfile
├── broker-v1.yaml
├── ksql-v1.yaml
├── opt
│   ├── Consumer-v1.py
│   └── Producer-v1.py
├── python-iot-dev.yaml
├── requirements.txt
└── zookeeper-v1.yaml
```

Dockerfile for the Producer/Consumer

The Producer and the Consumer share a single Docker image.

The programs that run as the Producer and the Consumer are copied into the image.

```dockerfile
FROM python:3.8.3-slim
USER root

# Japanese locale, timezone, and terminal settings
RUN apt-get update
RUN apt-get -y install locales && localedef -f UTF-8 -i ja_JP ja_JP.UTF-8
ENV LANG ja_JP.UTF-8
ENV LANGUAGE ja_JP:ja
ENV LC_ALL ja_JP.UTF-8
ENV TZ JST-9
ENV TERM xterm

# Editor/pager and network troubleshooting tools for working inside the container
RUN apt-get install -y vim less
RUN apt-get install -y dnsutils iputils-ping net-tools procps netcat

# Python dependencies
COPY requirements.txt .
RUN pip install --upgrade pip
RUN pip install --upgrade setuptools
RUN pip install -r requirements.txt

# Copy the Producer/Consumer programs into the working directory
WORKDIR /opt/app
COPY ./opt/*.py /opt/app/.
```

Manifests

I split the definitions into four parts: zookeeper, broker, ksql-server/ksql-cli, and Producer/Consumer.

```yaml
### Producer/Consumer ###
apiVersion: apps/v1
kind: Deployment
metadata:
  name: iot
  namespace: spektra-demo-tenant01-project-nw-kafka
  labels:
    app: iot
spec:
  replicas: 2
  selector:
    matchLabels:
      app: iot
  template:
    metadata:
      namespace: spektra-demo-tenant01-project-nw-kafka
      labels:
        app: iot
    spec:
      containers:
      - name: iot-dev
        resources:
          limits:
            cpu: 1000m
            memory: 1Gi
        image: harbor.nwdiamanti.local/nw-kafka/python-iot:1.0.0
        tty: true
      dnsConfig:
        options:
        - name: ndots
          value: "1"
      imagePullSecrets:
      - name: harbor
```
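Each manifest sets `ndots: 1` under `dnsConfig`. The following is a simplified sketch, not the resolver's actual code. It models the order in which a glibc-style resolver (the Debian-based `python:3.8.3-slim` image uses glibc) tries candidate names. If the name contains at least `ndots` dots, it is tried as-is first and the search domains are tried afterwards. Otherwise, the search domains are tried first and the bare name last. The search list assumes the usual Kubernetes pattern with the cluster domain `cluster.local`. That domain is an assumption and may differ on Diamanti Spektra. Any host-level search domains are also omitted.

```python
from typing import List, Sequence


def candidate_queries(name: str, ndots: int, search: Sequence[str]) -> List[str]:
    """Simplified model of glibc resolver search order (resolv.conf semantics)."""
    if name.endswith("."):
        # A trailing dot marks a fully qualified name: no search list is applied.
        return [name]
    expanded = [f"{name}.{domain}" for domain in search]
    if name.count(".") >= ndots:
        # Enough dots: try the name as-is first, then fall back to the search list.
        return [name] + expanded
    # Too few dots: try the search domains first, and the bare name last.
    return expanded + [name]


# Assumed search list for a Pod in this namespace ("cluster.local" is an assumption).
NAMESPACE = "spektra-demo-tenant01-project-nw-kafka"
SEARCH = [f"{NAMESPACE}.svc.cluster.local", "svc.cluster.local", "cluster.local"]

if __name__ == "__main__":
    for host in ("kafka-broker", "harbor.nwdiamanti.local"):
        print(host, "->", candidate_queries(host, ndots=1, search=SEARCH))
```

With `ndots: 1`, a bare Service name such as `kafka-broker` (no dots) is still expanded through the namespace search domain. A dotted name such as `harbor.nwdiamanti.local` is queried as-is first. With the Kubernetes default of `ndots: 5`, that dotted name would be tried against every cluster search domain before being queried as-is.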

```yaml
### zookeeper ###
apiVersion: v1
kind: Service
metadata:
  name: kafka-zookeeper
  namespace: spektra-demo-tenant01-project-nw-kafka
spec:
  ports:
  - port: 32181
    protocol: TCP
    targetPort: 32181
  selector:
    app: kafka-zookeeper
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kafka-zookeeper
  namespace: spektra-demo-tenant01-project-nw-kafka
  labels:
    app: kafka-zookeeper
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kafka-zookeeper
  template:
    metadata:
      namespace: spektra-demo-tenant01-project-nw-kafka
      labels:
        app: kafka-zookeeper
    spec:
      containers:
      - name: zookeeper
        image: confluentinc/cp-zookeeper:5.5.1
        imagePullPolicy: Always
        resources:
          limits:
            cpu: 1000m
            memory: 1Gi
        env:
        - name: ZOOKEEPER_CLIENT_PORT
          value: "32181"
        - name: ZOOKEEPER_TICK_TIME
          value: "2000"
        ports:
        - containerPort: 32181
          protocol: TCP
      dnsConfig:
        options:
        - name: ndots
          value: "1"
```
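`ZOOKEEPER_TICK_TIME` sets ZooKeeper's `tickTime` (in milliseconds). By default, ZooKeeper derives its allowed session-timeout range from it: the minimum is 2 × `tickTime` and the maximum is 20 × `tickTime`. The server clamps each client's requested timeout into that range. The small worked example below assumes `minSessionTimeout` and `maxSessionTimeout` are not overridden, which is the case in this manifest.

```python
def negotiated_session_timeout(requested_ms: int, tick_time_ms: int = 2000) -> int:
    """Clamp a client's requested session timeout into ZooKeeper's default bounds."""
    min_timeout = 2 * tick_time_ms   # default minSessionTimeout
    max_timeout = 20 * tick_time_ms  # default maxSessionTimeout
    return max(min_timeout, min(requested_ms, max_timeout))


if __name__ == "__main__":
    # tickTime = 2000 ms (ZOOKEEPER_TICK_TIME above) gives a 4000..40000 ms window.
    for requested in (1000, 18000, 60000):
        print(requested, "->", negotiated_session_timeout(requested))
```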

```yaml
### broker ###
apiVersion: v1
kind: Service
metadata:
  name: kafka-broker
  namespace: spektra-demo-tenant01-project-nw-kafka
spec:
  ports:
  - port: 29092
    protocol: TCP
    targetPort: 29092
  selector:
    app: kafka-broker
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kafka-broker
  namespace: spektra-demo-tenant01-project-nw-kafka
  labels:
    app: kafka-broker
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kafka-broker
  template:
    metadata:
      namespace: spektra-demo-tenant01-project-nw-kafka
      labels:
        app: kafka-broker
    spec:
      containers:
      - name: broker
        image: confluentinc/cp-kafka:5.5.1
        imagePullPolicy: Always
        resources:
          limits:
            cpu: 1000m
            memory: 2Gi
        env:
        - name: KAFKA_BROKER_ID
          value: "1"
        - name: KAFKA_ZOOKEEPER_CONNECT
          value: kafka-zookeeper:32181
        - name: KAFKA_LISTENER_SECURITY_PROTOCOL_MAP
          value: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
        - name: KAFKA_ADVERTISED_LISTENERS
          value: PLAINTEXT://broker:29092,PLAINTEXT_HOST://localhost:9092
        - name: KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR
          value: "1"
        - name: CONFLUENT_SUPPORT_METRICS_ENABLE
          value: "false"
        ports:
        - containerPort: 9092
          protocol: TCP
        - containerPort: 29092
          protocol: TCP
      dnsConfig:
        options:
        - name: ndots
          value: "1"
```
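A Kafka client first connects to a bootstrap address (here `kafka-broker:29092` via the Service). After that, it reconnects to the `host:port` that the broker advertises for the listener the request arrived on. The sketch below parses the two broker environment values to show what is advertised. The helper names are hypothetical and only illustrate the string format. Note that the `PLAINTEXT` listener advertises the host `broker`, not the Service name `kafka-broker`. For connections after bootstrap to succeed, `broker` has to be resolvable from the client Pods. `localhost:9092` is reachable only from inside the broker Pod itself.

```python
from typing import Dict, List, Tuple


def parse_protocol_map(value: str) -> Dict[str, str]:
    """Parse KAFKA_LISTENER_SECURITY_PROTOCOL_MAP ("NAME:PROTOCOL,...")."""
    result: Dict[str, str] = {}
    for entry in value.split(","):
        name, protocol = entry.split(":", 1)
        result[name.strip()] = protocol.strip()
    return result


def parse_listeners(value: str) -> List[Tuple[str, str, int]]:
    """Parse KAFKA_ADVERTISED_LISTENERS ("NAME://host:port,...") into (name, host, port)."""
    listeners: List[Tuple[str, str, int]] = []
    for entry in value.split(","):
        name, address = entry.split("://", 1)
        host, port = address.rsplit(":", 1)
        listeners.append((name.strip(), host, int(port)))
    return listeners


# Values copied from the broker manifest above.
PROTOCOL_MAP = "PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT"
ADVERTISED = "PLAINTEXT://broker:29092,PLAINTEXT_HOST://localhost:9092"

if __name__ == "__main__":
    protocols = parse_protocol_map(PROTOCOL_MAP)
    for name, host, port in parse_listeners(ADVERTISED):
        print(f"{name}: clients are told to use {host}:{port} ({protocols[name]})")
```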

```yaml
### ksql-server ###
apiVersion: v1
kind: Service
metadata:
  name: kafka-ksql-server
  namespace: spektra-demo-tenant01-project-nw-kafka
spec:
  ports:
  - port: 8088
    protocol: TCP
    targetPort: 8088
  selector:
    app: kafka-ksql-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kafka-ksql-server
  namespace: spektra-demo-tenant01-project-nw-kafka
  labels:
    app: kafka-ksql-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kafka-ksql-server
  template:
    metadata:
      namespace: spektra-demo-tenant01-project-nw-kafka
      labels:
        app: kafka-ksql-server
    spec:
      containers:
      - name: ksql-server
        image: confluentinc/cp-ksql-server:5.4.3
        imagePullPolicy: Always
        resources:
          limits:
            cpu: 2000m
            memory: 2Gi
        env:
        - name: KSQL_CONFIG_DIR
          value: /etc/ksql
        - name: KSQL_LOG4J_OPTS
          value: -Dlog4j.configuration=file:/etc/ksql/log4j-rolling.properties
        - name: KSQL_BOOTSTRAP_SERVERS
          value: kafka-broker:29092
        - name: KSQL_HOST_NAME
          value: ksql-server
        - name: KSQL_APPLICATION_ID
          value: IoT-K8S-1
        - name: KSQL_LISTENERS
          value: http://0.0.0.0:8088
        - name: KSQL_CACHE_MAX_BYTES_BUFFERING
          value: "0"
        - name: KSQL_AUTO_OFFSET_RESET
          value: earliest
        ports:
        - containerPort: 8088
          protocol: TCP
      dnsConfig:
        options:
        - name: ndots
          value: "1"
---
### ksql-cli ###
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kafka-ksql-cli
  namespace: spektra-demo-tenant01-project-nw-kafka
  labels:
    app: kafka-ksql-cli
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kafka-ksql-cli
  template:
    metadata:
      namespace: spektra-demo-tenant01-project-nw-kafka
      labels:
        app: kafka-ksql-cli
    spec:
      containers:
      - name: ksql-cli
        image: confluentinc/cp-ksql-cli:5.4.3
        imagePullPolicy: Always
        resources:
          limits:
            cpu: 1000m
            memory: 512Mi
        tty: true
      dnsConfig:
        options:
        - name: ndots
          value: "1"
```
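Confluent's images turn prefixed environment variables into configuration properties. For example, `KSQL_BOOTSTRAP_SERVERS` becomes `bootstrap.servers` and `KAFKA_ZOOKEEPER_CONNECT` becomes `zookeeper.connect`. The sketch below models only the basic rule: strip the prefix, lowercase the rest, and turn `_` into `.`. The images have extra escaping rules for property names that contain `-` or `_`, and this sketch does not model them. Some variables, such as `KSQL_LOG4J_OPTS`, are consumed by startup scripts rather than written as properties. The `EXCLUDED` set below is a hypothetical stand-in for the image's real exclusion list.

```python
from typing import Dict, Iterable, Mapping


def env_to_property(env_name: str, prefix: str) -> str:
    """Basic mapping only: KSQL_BOOTSTRAP_SERVERS -> bootstrap.servers."""
    return env_name[len(prefix):].lower().replace("_", ".")


def env_to_properties(env: Mapping[str, str], prefix: str,
                      excluded: Iterable[str] = ()) -> Dict[str, str]:
    """Collect every prefixed, non-excluded variable as a property."""
    skip = set(excluded)
    return {
        env_to_property(name, prefix): value
        for name, value in env.items()
        if name.startswith(prefix) and name not in skip
    }


# Hypothetical exclusion list (assumption): variables read by startup scripts instead.
EXCLUDED = {"KSQL_CONFIG_DIR", "KSQL_LOG4J_OPTS"}

# A subset of the ksql-server environment from the manifest above.
KSQL_ENV = {
    "KSQL_CONFIG_DIR": "/etc/ksql",
    "KSQL_BOOTSTRAP_SERVERS": "kafka-broker:29092",
    "KSQL_LISTENERS": "http://0.0.0.0:8088",
    "KSQL_CACHE_MAX_BYTES_BUFFERING": "0",
    "KSQL_AUTO_OFFSET_RESET": "earliest",
}

if __name__ == "__main__":
    for key, value in sorted(env_to_properties(KSQL_ENV, "KSQL_", EXCLUDED).items()):
        print(f"{key}={value}")
```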

Earlier posts: Dockerfile and docker-compose.yml in the Docker environment

For the Producer/Consumer Dockerfile and docker-compose.yaml, see here.

For the zookeeper, broker, and ksql-server/ksql-cli docker-compose.yaml, see here.

Pushing the Producer/Consumer Docker image to Harbor

Build the Docker image and push it to the container registry (Harbor).

```
$ docker image build -t python-iot:3.8.3-slim .
$ docker image ls
REPOSITORY   TAG          IMAGE ID       CREATED          SIZE
python-iot   3.8.3-slim   5966a0f42ed5   29 minutes ago   331MB
$ docker tag python-iot:3.8.3-slim harbor.nwdiamanti.local/nw-kafka/python-iot:1.0.0
$ docker login harbor.nwdiamanti.local
$ docker push harbor.nwdiamanti.local/nw-kafka/python-iot:1.0.0
```
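The tag pushed to Harbor must match the `image:` field in python-iot-dev.yaml. The `harbor` entry under `imagePullSecrets` refers to a Secret holding the registry credentials; its creation is not shown here. The sketch below splits an image reference into registry, repository, and tag. It follows Docker's convention that the first path component is a registry host only if it contains `.` or `:`, or is `localhost`. It is simplified: digests (`@sha256:...`) and Docker Hub's implicit `library/` prefix are not handled.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str, default_registry: str = "docker.io") -> ImageRef:
    """Split 'registry/project/repo:tag' into its parts (simplified)."""
    first, _, rest = ref.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = default_registry, ref
    # The tag follows the last ':' that appears after the last '/'.
    slash, colon = remainder.rfind("/"), remainder.rfind(":")
    if colon > slash:
        return ImageRef(registry, remainder[:colon], remainder[colon + 1:])
    return ImageRef(registry, remainder, "latest")


if __name__ == "__main__":
    for ref in ("python-iot:3.8.3-slim",
                "harbor.nwdiamanti.local/nw-kafka/python-iot:1.0.0",
                "confluentinc/cp-kafka:5.5.1"):
        print(parse_image_ref(ref))
```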

Creating the Pods

Starting the Pods from the four manifests and checking them

```
$ kubectl apply -f python-iot-dev.yaml
deployment.apps/iot created
$ kubectl apply -f zookeeper-v1.yaml
service/kafka-zookeeper created
deployment.apps/kafka-zookeeper created
$ kubectl apply -f broker-v1.yaml
service/kafka-broker created
deployment.apps/kafka-broker created
$ kubectl apply -f ksql-v1.yaml
service/kafka-ksql-server created
deployment.apps/kafka-ksql-server created
deployment.apps/kafka-ksql-cli created
$ kubectl get pod -n spektra-demo-tenant01-project-nw-kafka -o wide
NAME                                READY   STATUS    RESTARTS   AGE
iot-b948b544-82nhj                  1/1     Running   0          12h   <--- used as the Producer
iot-b948b544-whkbn                  1/1     Running   0          12h   <--- used as the Consumer
kafka-broker-f79866747-vp6tc        1/1     Running   0          12h
kafka-ksql-cli-64565d78db-fx6xz     1/1     Running   0          12h
kafka-ksql-server-f8766c6f5-mtgqz   1/1     Running   0          12h
kafka-zookeeper-6bf5c4c6bf-88zrz    1/1     Running   0          12h
```
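To check the listing above mechanically, the sketch below parses `kubectl get pod` text output and reports Pods whose READY count is incomplete or whose STATUS is not `Running`. It is illustrative only and works on plain text. Column positions are taken from the header, and the sample is the output shown above without the annotations. In practice, `kubectl wait --for=condition=Ready pod --all -n <namespace>` does a similar check without parsing text.

```python
from typing import List


def pods_not_ready(kubectl_output: str) -> List[str]:
    """Return names of Pods that are not fully ready or not Running."""
    lines = [line for line in kubectl_output.strip().splitlines() if line.strip()]
    header = lines[0].split()
    ready_col, status_col = header.index("READY"), header.index("STATUS")
    problems: List[str] = []
    for line in lines[1:]:
        fields = line.split()
        ready, total = fields[ready_col].split("/")
        if ready != total or fields[status_col] != "Running":
            problems.append(fields[0])
    return problems


SAMPLE = """\
NAME                                READY   STATUS    RESTARTS   AGE
iot-b948b544-82nhj                  1/1     Running   0          12h
iot-b948b544-whkbn                  1/1     Running   0          12h
kafka-broker-f79866747-vp6tc        1/1     Running   0          12h
kafka-ksql-cli-64565d78db-fx6xz     1/1     Running   0          12h
kafka-ksql-server-f8766c6f5-mtgqz   1/1     Running   0          12h
kafka-zookeeper-6bf5c4c6bf-88zrz    1/1     Running   0          12h
"""

if __name__ == "__main__":
    print(pods_not_ready(SAMPLE) or "all Pods ready")
```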

Results

As expected, the results were the same as this.

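Closing thoughts

Playing the role of an application developer, I ran kubectl and other commands myself and got a hands-on feel for how container programs behave in a Kubernetes environment. Even so, I keenly felt that application developers need a CI/CD pipeline in order to concentrate on their core work.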

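References

I referred to the following information. Many thanks to the authors.

Kubernetes summary

A summary of Kubernetes Services

Verifying the container repository Harbor in Kubernetes

Kubernetes: list of kubectl commands

Causes and fixes when Kubernetes Pods fail to start

Checking in-cluster DNS behavior in Kubernetes 1.10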