Key points

- Implemented Zoneout on top of an LSTM and evaluated its performance on the MNIST handwritten digit dataset.

References

1. Zoneout: Regularizing RNNs by Randomly Preserving Hidden Activations

Method

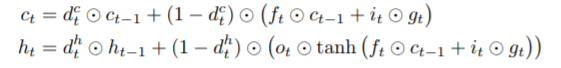

(Figure quoted from the referenced paper)
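For reference, the LSTM form of the Zoneout update can be written roughly as follows. This is a sketch: the gate names $i_t, f_t, o_t, g_t$ follow the sample code in this post, while $\tilde{c}_t$ and the masks $d_t^c, d_t^h$ are symbols chosen here for illustration. Each mask entry is assumed to be drawn independently as 1 with probability `zoneout_prob` (keep the previous value) and 0 otherwise (take the new value).

```latex
\begin{aligned}
\tilde{c}_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
c_t &= d_t^c \odot c_{t-1} + (1 - d_t^c) \odot \tilde{c}_t \\
h_t &= d_t^h \odot h_{t-1} + (1 - d_t^h) \odot \bigl( o_t \odot \tanh(\tilde{c}_t) \bigr)
\end{aligned}
```

With both masks fixed at 0 this reduces to the ordinary LSTM update, and with both fixed at 1 the state never changes.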

Data

MNIST handwritten digits

    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    # Load MNIST with one-hot encoded labels.
    mnist = input_data.read_data_sets('***/mnist', one_hot=True)

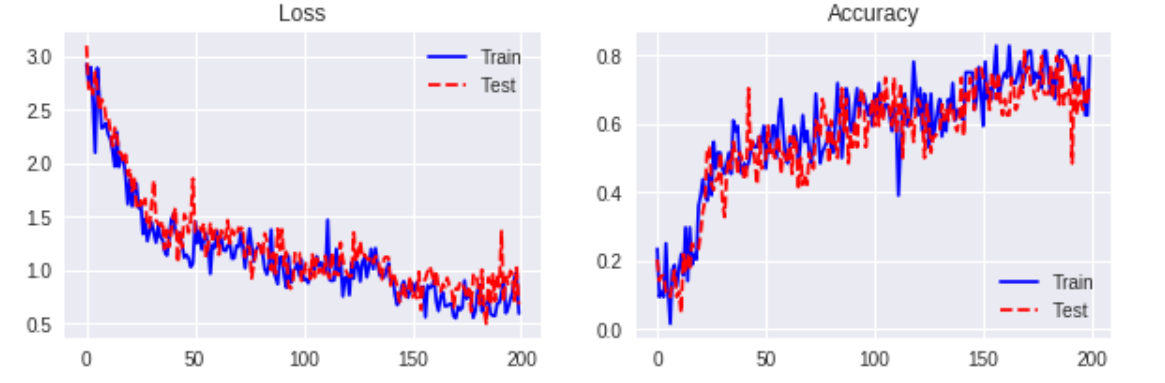

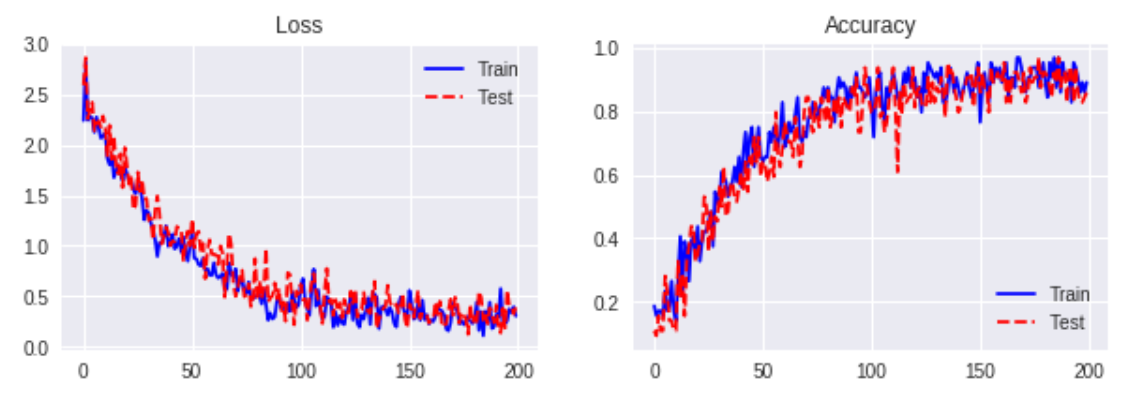

Results

Numerical example:

- n_units = 100

- learning_rate = 0.1

- batch_size = 64

- zoneout_prob = 0.2

Zoneout (no gradient clipping)

Zoneout (gradients clipped by norm, 0.5)
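The second setting clips gradients by norm with a threshold of 0.5. As a rough illustration of what that operation does, here is a minimal pure-Python sketch. The helper name `clip_by_norm` and the sample vector are hypothetical; the training loop is not shown in this post, so this is not necessarily how the clipping was applied there.

```python
import math

def clip_by_norm(grad, clip_norm):
    """Rescale grad so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= clip_norm:
        return list(grad)          # already within the limit, leave unchanged
    scale = clip_norm / norm       # shrink factor that preserves the direction
    return [g * scale for g in grad]

grad = [3.0, 4.0]                  # hypothetical gradient with L2 norm 5.0
clipped = clip_by_norm(grad, 0.5)  # same direction, norm scaled down to 0.5
```

Clipping by norm keeps the direction of the gradient and only limits its length, which is why it is a common remedy for occasional exploding gradients in recurrent networks.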

Sample code

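    def get_zoneout_mask(self, zoneout_prob, shape):
        # Returns a 0/1 tensor: 1 (keep the previous state) with probability
        # zoneout_prob, 0 (take the new state) otherwise.
        zoneout_prob = tf.convert_to_tensor(zoneout_prob)
        # uniform in [0, 1) + zoneout_prob reaches 1 with probability zoneout_prob
        random_tensor = zoneout_prob + tf.random_uniform(shape)
        zoneout_mask = tf.floor(random_tensor)
        return zoneout_mask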
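The `floor` trick in `get_zoneout_mask` can be checked outside TensorFlow. The NumPy sketch below is an illustration under assumptions: the function name `zoneout_mask`, the seed, and the sample size are made up for this example. Adding a uniform sample from [0, 1) to `zoneout_prob` and taking the floor gives 1 with probability `zoneout_prob` and 0 otherwise.

```python
import numpy as np

def zoneout_mask(zoneout_prob, shape, rng):
    """Return a 0/1 mask whose entries are 1 with probability zoneout_prob."""
    # zoneout_prob + u >= 1 exactly when u >= 1 - zoneout_prob
    return np.floor(zoneout_prob + rng.uniform(size=shape))

rng = np.random.default_rng(0)            # fixed seed, only for repeatability
mask = zoneout_mask(0.2, (100000,), rng)  # hypothetical sample size
frac_kept = mask.mean()                   # fraction of ones, close to 0.2
```

Note that the value passed in is the probability of keeping the previous state, which is the opposite sense of the `keep_prob` argument used by dropout.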

    # Zoneout
    def inference(self, x, length, n_in, n_units, n_out,
                  batch_size, forget_bias, zoneout_prob):
        # Treat each input as a sequence of `length` steps with n_in features.
        x = tf.reshape(x, [-1, length, n_in])

        # Initial hidden and cell states.
        h = tf.zeros(shape=[batch_size, n_units], dtype=tf.float32)
        c = tf.zeros(shape=[batch_size, n_units], dtype=tf.float32)

        list_h = []
        list_c = []

        with tf.variable_scope('lstm'):
            init_norm = tf.truncated_normal_initializer(
                mean=0.0, stddev=0.05, dtype=tf.float32)
            init_constant1 = tf.constant_initializer(
                value=0.0, dtype=tf.float32)
            init_constant2 = tf.constant_initializer(
                value=0.1, dtype=tf.float32)

            # Weights and bias for the four gates (i, f, o, g), stored side by side.
            w_x = tf.get_variable('w_x', shape=[n_in, n_units * 4],
                                  initializer=init_norm)
            w_h = tf.get_variable('w_h', shape=[n_units, n_units * 4],
                                  initializer=init_norm)
            b = tf.get_variable('b', shape=[n_units * 4],
                                initializer=init_constant1)

        for t in range(length):
            t_x = tf.matmul(x[:, t, :], w_x)
            t_h = tf.matmul(h, w_h)

            i, f, o, g = tf.split(tf.add(tf.add(t_x, t_h), b), 4, axis=1)

            i = tf.nn.sigmoid(i)
            f = tf.nn.sigmoid(f + forget_bias)
            o = tf.nn.sigmoid(o)
            g = tf.nn.tanh(g)

            # Candidate states of the ordinary LSTM update.
            # h_temp is computed from the new cell candidate c_temp, not the old c.
            c_temp = tf.add(tf.multiply(f, c), tf.multiply(i, g))
            h_temp = tf.multiply(o, tf.nn.tanh(c_temp))

            # zoneout: sample fresh masks at every time step
            # (1 = keep the previous value, 0 = take the candidate).
            # Shape [n_units] is broadcast over the batch.
            zoneout_mask_c = self.get_zoneout_mask(zoneout_prob, [n_units])
            zoneout_mask_complement_c = 1.0 - zoneout_mask_c
            zoneout_mask_h = self.get_zoneout_mask(zoneout_prob, [n_units])
            zoneout_mask_complement_h = 1.0 - zoneout_mask_h

            c = zoneout_mask_c * c + zoneout_mask_complement_c * c_temp
            h = zoneout_mask_h * h + zoneout_mask_complement_h * h_temp

            list_h.append(h)
            list_c.append(c)

        with tf.variable_scope('pred'):
            w = self.weight_variable('w', [n_units, n_out])
            b = self.bias_variable('b', [n_out])

            # Classify from the hidden state at the last time step.
            y = tf.add(tf.matmul(list_h[-1], w), b)
            y = tf.nn.softmax(y, axis=1)

        return y
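To make the data flow of `inference` easier to follow, here is a small NumPy sketch of a single Zoneout LSTM step. It is an illustration under assumptions, not the author's code: the function `zoneout_lstm_step`, the `training` flag, the default `forget_bias`, the toy sizes, and the random weights are all hypothetical. The `training=False` branch replaces the sampled masks with their expected value, which is how the Zoneout paper describes test-time behaviour; the TensorFlow code above samples masks on every run and has no such switch.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def zoneout_lstm_step(x_t, h, c, w_x, w_h, b, zoneout_prob, rng,
                      forget_bias=1.0, training=True):
    """One LSTM step with Zoneout on both the cell and the hidden state."""
    # Gate pre-activations, split in the same i, f, o, g order as the code above.
    i, f, o, g = np.split(x_t @ w_x + h @ w_h + b, 4, axis=1)
    i, f, o, g = sigmoid(i), sigmoid(f + forget_bias), sigmoid(o), np.tanh(g)

    c_new = f * c + i * g        # ordinary LSTM cell update
    h_new = o * np.tanh(c_new)   # ordinary LSTM hidden update

    if training:
        # 1 = keep the previous value, drawn with probability zoneout_prob
        d_c = np.floor(zoneout_prob + rng.uniform(size=c.shape))
        d_h = np.floor(zoneout_prob + rng.uniform(size=h.shape))
    else:
        # Expected value of the mask, used in place of sampling
        d_c = d_h = zoneout_prob

    c = d_c * c + (1.0 - d_c) * c_new
    h = d_h * h + (1.0 - d_h) * h_new
    return h, c

rng = np.random.default_rng(0)
batch_size, n_in, n_units = 4, 28, 8   # toy sizes, chosen only for this example
w_x = rng.normal(scale=0.05, size=(n_in, 4 * n_units))
w_h = rng.normal(scale=0.05, size=(n_units, 4 * n_units))
b = np.zeros(4 * n_units)
h = np.zeros((batch_size, n_units))
c = np.zeros((batch_size, n_units))

for t in range(28):                    # 28 steps of random stand-in input
    x_t = rng.uniform(size=(batch_size, n_in))
    h, c = zoneout_lstm_step(x_t, h, c, w_x, w_h, b, 0.2, rng)
```

Setting `zoneout_prob` to 0 in this sketch recovers a plain LSTM step, and setting it to 1 freezes the state, which is a quick way to sanity-check a Zoneout implementation.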