Key points

- Implement a Variational Autoencoder (Gaussian) and check its behavior with concrete numbers.

Sample code

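# Gaussian
import numpy as np
import tensorflow as tf  # written against the TensorFlow 1.x graph API
import matplotlib.pyplot as plt

class VariationalAutoencoder():

    def __init__(self):
        pass

    def weight_variable(self, name, shape):
        # Weights start from a narrow truncated normal distribution.
        initializer = tf.truncated_normal_initializer(mean = 0.0, stddev = 0.01, dtype = tf.float32)
        return tf.get_variable(name, shape, initializer = initializer)

    def bias_variable(self, name, shape):
        initializer = tf.constant_initializer(value = 0.0, dtype = tf.float32)
        return tf.get_variable(name, shape, initializer = initializer)

    def const_zero(self, name, shape):
        # Despite the name, this is a trainable variable initialized to 0
        # (used as the batch-normalization offset beta).
        initializer = tf.constant_initializer(value = 0.0, dtype = tf.float32)
        return tf.get_variable(name, shape, initializer = initializer)

    def const_one(self, name, shape):
        # Despite the name, this is a trainable variable initialized to 1
        # (used as the batch-normalization scale gamma).
        initializer = tf.constant_initializer(value = 1.0, dtype = tf.float32)
        return tf.get_variable(name, shape, initializer = initializer)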

    def inference(self, x, n_in, n_units_1, dim_z, batch_size):
        with tf.variable_scope('enc_z_dec'):
            # Encoder: input -> hidden layer
            w_1 = self.weight_variable('w_1', [n_in, n_units_1])
            b_1 = self.bias_variable('b_1', [n_units_1])
            h_1 = tf.add(tf.matmul(x, w_1), b_1)

            # batch normalization (uses the statistics of the current batch)
            beta_1 = self.const_zero('beta_1', [n_units_1])
            gamma_1 = self.const_one('gamma_1', [n_units_1])
            mean_1, var_1 = tf.nn.moments(h_1, [0])
            h_1 = gamma_1 * (h_1 - mean_1) / tf.sqrt(var_1 + 1e-5) + beta_1
            bn_1 = tf.nn.softplus(h_1)

            # Encoder output: mean and log-variance of q(z|x)
            w_2 = self.weight_variable('w_2', [n_units_1, dim_z * 2])
            b_2 = self.bias_variable('b_2', [dim_z * 2])
            h_2 = tf.add(tf.matmul(bn_1, w_2), b_2)
            mu = h_2[:, : dim_z]
            logvar = h_2[:, dim_z :]

            # Reparameterization: z = mu + eps * sigma, with eps ~ N(0, I)
            z = mu + tf.random_normal(shape = [batch_size, dim_z], mean = 0.0, stddev = 1.0) * tf.exp(0.5 * logvar)

            # Decoder: code -> hidden layer
            w_3 = self.weight_variable('w_3', [dim_z, n_units_1])
            b_3 = self.bias_variable('b_3', [n_units_1])
            h_3 = tf.add(tf.matmul(z, w_3), b_3)

            # batch normalization
            beta_2 = self.const_zero('beta_2', [n_units_1])
            gamma_2 = self.const_one('gamma_2', [n_units_1])
            mean_2, var_2 = tf.nn.moments(h_3, [0])
            h_3 = gamma_2 * (h_3 - mean_2) / tf.sqrt(var_2 + 1e-5) + beta_2
            bn_2 = tf.nn.softplus(h_3)

            # Output layer: the weights are tied to the encoder's w_1
            w_4 = tf.transpose(w_1)
            b_4 = self.bias_variable('b_4', [n_in])
            y = tf.nn.sigmoid(tf.add(tf.matmul(bn_2, w_4), b_4))

        return y, mu, logvar

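    def loss(self, y, t, mu, logvar):
        # Bernoulli log-likelihood of the target t under the reconstruction y
        likelihood = tf.reduce_sum(t * tf.log(tf.clip_by_value(y, 1e-10, 1.0)) +
                                   (1 - t) * tf.log(tf.clip_by_value(1 - y, 1e-10, 1.0)), axis = 1)
        # KL divergence between q(z|x) = N(mu, exp(logvar)) and the prior N(0, I)
        kl = -0.5 * tf.reduce_sum(1.0 + logvar - mu * mu - tf.exp(logvar), axis = 1)
        elbo = tf.reduce_mean(likelihood - kl)
        # Minimizing the negative ELBO maximizes the ELBO
        return -elbo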

    def training(self, loss, learning_rate):
        optimizer = tf.train.AdamOptimizer(learning_rate = learning_rate)
        train_step = optimizer.minimize(loss)
        return train_step

    def training_clipped(self, loss, learning_rate, clip_norm):
        optimizer = tf.train.AdamOptimizer(learning_rate = learning_rate)
        grads_and_vars = optimizer.compute_gradients(loss)
        clipped_grads_and_vars = [(tf.clip_by_norm(grad, clip_norm = clip_norm), var)
                                  for grad, var in grads_and_vars]
        train_step = optimizer.apply_gradients(clipped_grads_and_vars)
        return train_step

    def fit(self, images_train, labels_train, images_test, labels_test,
            n_in, n_units_1, dim_z,
            learning_rate, n_iter, batch_size, show_step, is_saving, model_path):

        tf.reset_default_graph()

        # The input width follows n_in (5 * 5 = 25 in this example)
        x = tf.placeholder(shape = [None, n_in], dtype = tf.float32)
        y, mu, logvar = self.inference(x, n_in, n_units_1, dim_z, batch_size)
        loss = self.loss(y, x, mu, logvar)
        train_step = self.training(loss, learning_rate)

        init = tf.global_variables_initializer()
        saver = tf.train.Saver()

        with tf.Session() as sess:
            sess.run(init)

            history_loss_train = []
            history_loss_test = []

            for i in range(n_iter):
                # Train
                rand_index = np.random.choice(len(images_train), size = batch_size)
                x_batch = images_train[rand_index]
                feed_dict = {x: x_batch}
                sess.run(train_step, feed_dict = feed_dict)
                temp_loss = sess.run(loss, feed_dict = feed_dict)
                history_loss_train.append(temp_loss)

                if (i + 1) % show_step == 0:
                    print('--------------------')
                    print('Iteration: ' + str(i + 1) + ' Loss: ' + str(temp_loss))

                # Test (recorded every iteration so both histories have n_iter entries)
                rand_index = np.random.choice(len(images_test), size = batch_size)
                x_batch = images_test[rand_index]
                feed_dict = {x: x_batch}
                temp_loss = sess.run(loss, feed_dict = feed_dict)
                history_loss_test.append(temp_loss)

            if is_saving:
                model_path = saver.save(sess, model_path)
                print('--------------------')
                print('done saving at ', model_path)

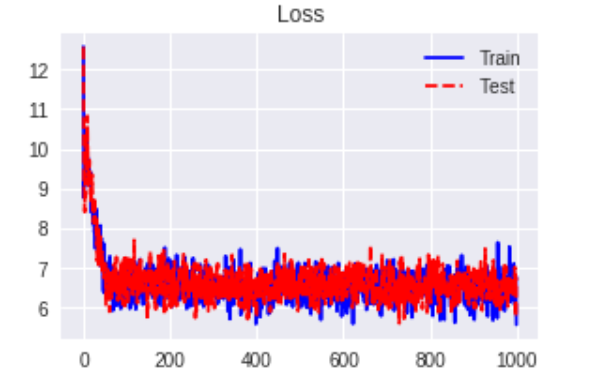

        # Plot the training and test loss per iteration (still inside fit)
        fig = plt.figure(figsize = (10, 3))
        ax1 = fig.add_subplot(1, 2, 1)
        ax1.plot(range(n_iter), history_loss_train, 'b-', label = 'Train')
        ax1.plot(range(n_iter), history_loss_test, 'r--', label = 'Test')
        ax1.set_title('Loss')
        ax1.legend(loc = 'upper right')
        plt.show()

    def reconstruct_image(self, x, n_in, n_units_1, dim_z, model_path):
        # Rebuild the network on the variables created by fit() (reuse = True).
        # The fourth argument is the code size; the noise shape is taken from mu,
        # so no global batch_size is needed.
        with tf.variable_scope('enc_z_dec', reuse = True):
            w_1 = self.weight_variable('w_1', [n_in, n_units_1])
            b_1 = self.bias_variable('b_1', [n_units_1])
            h_1 = tf.add(tf.matmul(x, w_1), b_1)

            beta_1 = self.const_zero('beta_1', [n_units_1])
            gamma_1 = self.const_one('gamma_1', [n_units_1])
            mean_1, var_1 = tf.nn.moments(h_1, [0])
            h_1 = gamma_1 * (h_1 - mean_1) / tf.sqrt(var_1 + 1e-5) + beta_1
            bn_1 = tf.nn.softplus(h_1)

            w_2 = self.weight_variable('w_2', [n_units_1, dim_z * 2])
            b_2 = self.bias_variable('b_2', [dim_z * 2])
            h_2 = tf.add(tf.matmul(bn_1, w_2), b_2)
            mu = h_2[:, : dim_z]
            logvar = h_2[:, dim_z :]
            z = mu + tf.random_normal(shape = tf.shape(mu), mean = 0.0, stddev = 1.0) * tf.exp(0.5 * logvar)

            w_3 = self.weight_variable('w_3', [dim_z, n_units_1])
            b_3 = self.bias_variable('b_3', [n_units_1])
            h_3 = tf.add(tf.matmul(z, w_3), b_3)

            beta_2 = self.const_zero('beta_2', [n_units_1])
            gamma_2 = self.const_one('gamma_2', [n_units_1])
            mean_2, var_2 = tf.nn.moments(h_3, [0])
            h_3 = gamma_2 * (h_3 - mean_2) / tf.sqrt(var_2 + 1e-5) + beta_2
            bn_2 = tf.nn.softplus(h_3)

            w_4 = tf.transpose(w_1)
            b_4 = self.bias_variable('b_4', [n_in])
            y = tf.nn.sigmoid(tf.add(tf.matmul(bn_2, w_4), b_4))

        saver = tf.train.Saver()

        with tf.Session() as sess:
            # Load the trained parameters saved by fit()
            saver.restore(sess, model_path)
            return sess.run(y)

    def generate_code(self, x, n_in, n_units_1, dim_z, model_path):
        # Encoder only: returns one sampled code z per input row.
        # The fourth argument is the code size; the noise shape is taken from mu.
        with tf.variable_scope('enc_z_dec', reuse = True):
            w_1 = self.weight_variable('w_1', [n_in, n_units_1])
            b_1 = self.bias_variable('b_1', [n_units_1])
            h_1 = tf.add(tf.matmul(x, w_1), b_1)

            beta_1 = self.const_zero('beta_1', [n_units_1])
            gamma_1 = self.const_one('gamma_1', [n_units_1])
            mean_1, var_1 = tf.nn.moments(h_1, [0])
            h_1 = gamma_1 * (h_1 - mean_1) / tf.sqrt(var_1 + 1e-5) + beta_1
            bn_1 = tf.nn.softplus(h_1)

            w_2 = self.weight_variable('w_2', [n_units_1, dim_z * 2])
            b_2 = self.bias_variable('b_2', [dim_z * 2])
            h_2 = tf.add(tf.matmul(bn_1, w_2), b_2)
            mu = h_2[:, : dim_z]
            logvar = h_2[:, dim_z :]
            z = mu + tf.random_normal(shape = tf.shape(mu), mean = 0.0, stddev = 1.0) * tf.exp(0.5 * logvar)

        saver = tf.train.Saver()

        with tf.Session() as sess:
            # Load the trained parameters saved by fit()
            saver.restore(sess, model_path)
            return sess.run(z)
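
The sampling step `z = mu + eps * exp(0.5 * logvar)` used in the class above can be checked with plain numbers. The following is a minimal sketch in standard-library Python, not the TensorFlow code itself; the helper name `sample_z`, the seed, and the example values of `mu` and `logvar` are assumptions made for illustration.

```python
import math
import random


def sample_z(mu, logvar, rng):
    """Draw z = mu + eps * sigma with eps ~ N(0, 1), one value per latent dimension."""
    z = []
    for m, lv in zip(mu, logvar):
        sigma = math.exp(0.5 * lv)   # standard deviation from the log-variance
        eps = rng.gauss(0.0, 1.0)    # noise that does not depend on mu or logvar
        z.append(m + eps * sigma)
    return z


rng = random.Random(0)               # fixed seed so the check is repeatable
mu = [1.0, -2.0]
logvar = [0.0, math.log(0.25)]       # variances 1.0 and 0.25

samples = [sample_z(mu, logvar, rng) for _ in range(20000)]

# Empirical mean and variance per latent dimension
stats = []
for d in range(len(mu)):
    values = [s[d] for s in samples]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    stats.append((mean, var))
    print('dim', d, 'mean', round(mean, 2), 'var', round(var, 2))
```

The sample means should come out close to `mu` and the sample variances close to `exp(logvar)`. Because the noise `eps` is drawn independently of `mu` and `logvar`, gradients can flow through both encoder outputs during training.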

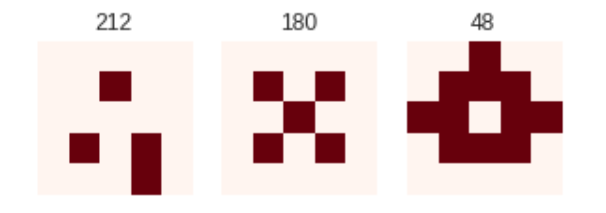
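
To see what the `loss` method computes, the two terms of the negative ELBO can be worked out by hand for a single example. This is a hedged sketch in standard-library Python under assumed inputs: the function names `bernoulli_log_likelihood` and `kl_to_standard_normal` and the four-pixel example are hypothetical and do not appear in the original code.

```python
import math


def bernoulli_log_likelihood(t, y, eps=1e-10):
    """Sum over pixels of t*log(y) + (1-t)*log(1-y), clipped like loss()."""
    total = 0.0
    for t_i, y_i in zip(t, y):
        total += t_i * math.log(min(max(y_i, eps), 1.0))
        total += (1 - t_i) * math.log(min(max(1 - y_i, eps), 1.0))
    return total


def kl_to_standard_normal(mu, logvar):
    """KL( N(mu, exp(logvar)) || N(0, I) ) for a diagonal Gaussian."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


# One hypothetical example: 4 pixels and a 2-dimensional code.
t = [1.0, 0.0, 1.0, 0.0]      # target pixels
y = [0.9, 0.1, 0.8, 0.3]      # reconstructed probabilities
mu = [0.5, -0.5]
logvar = [0.0, 0.0]           # unit variance in both dimensions

likelihood = bernoulli_log_likelihood(t, y)
kl = kl_to_standard_normal(mu, logvar)
negative_elbo = -(likelihood - kl)

print('log-likelihood:', likelihood)
print('KL:', kl)
print('negative ELBO:', negative_elbo)
```

With unit variance the KL term reduces to `0.5 * sum(mu^2)`, which is 0.25 here, and it is zero when `mu` is zero and `logvar` is zero. The class averages the same quantity over the batch with `tf.reduce_mean`.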

Data

# create data
def create_data(n_data_1, n_data_2, n_data_3, n_classes, p_h, p_l):
    # Each class is a 5 x 5 grid of Bernoulli probabilities (flattened row by row):
    # p_h where the pattern is "on", p_l elsewhere.

    # Class 0: square ring
    probs_1 = np.array([p_l, p_l, p_l, p_l, p_l,
                        p_l, p_h, p_h, p_h, p_l,
                        p_l, p_h, p_l, p_h, p_l,
                        p_l, p_h, p_h, p_h, p_l,
                        p_l, p_l, p_l, p_l, p_l])

    # Class 1: X shape
    probs_2 = np.array([p_l, p_l, p_l, p_l, p_l,
                        p_l, p_h, p_l, p_h, p_l,
                        p_l, p_l, p_h, p_l, p_l,
                        p_l, p_h, p_l, p_h, p_l,
                        p_l, p_l, p_l, p_l, p_l])

    # Class 2: inverted T
    probs_3 = np.array([p_l, p_l, p_l, p_l, p_l,
                        p_l, p_l, p_h, p_l, p_l,
                        p_l, p_l, p_h, p_l, p_l,
                        p_l, p_h, p_h, p_h, p_l,
                        p_l, p_l, p_l, p_l, p_l])

    p_x_1 = tf.contrib.distributions.Bernoulli(probs = probs_1)
    p_x_2 = tf.contrib.distributions.Bernoulli(probs = probs_2)
    p_x_3 = tf.contrib.distributions.Bernoulli(probs = probs_3)

    # Sample binary images for each class and stack them
    x_1 = p_x_1.sample(n_data_1)
    x_2 = p_x_2.sample(n_data_2)
    x_3 = p_x_3.sample(n_data_3)
    x = tf.concat([x_1, x_2, x_3], axis = 0)

    # One-hot labels in the same order as the images
    y_1 = tf.one_hot([0] * n_data_1, depth = n_classes)
    y_2 = tf.one_hot([1] * n_data_2, depth = n_classes)
    y_3 = tf.one_hot([2] * n_data_3, depth = n_classes)
    y = tf.concat([y_1, y_2, y_3], axis = 0)

    with tf.Session() as sess:
        x = sess.run(tf.cast(x, tf.float32))
        y = sess.run(y)

    return x, y

n_classes = 3
p_h = 0.95
p_l = 0.05

images_train, labels_train = create_data(300, 300, 300, n_classes, p_h, p_l)
images_test, labels_test = create_data(100, 100, 100, n_classes, p_h, p_l)
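
The data generation above draws every pixel independently from a Bernoulli distribution. As a rough illustration of that idea without TensorFlow, here is a standard-library sketch; the helper name `sample_images`, the seed, and the sample count are assumptions for illustration, and only the pattern of `probs_3` is reproduced.

```python
import random


def sample_images(probs, n_samples, rng):
    """Draw n_samples binary images; pixel j is 1 with probability probs[j]."""
    return [[1.0 if rng.random() < p else 0.0 for p in probs]
            for _ in range(n_samples)]


p_h, p_l = 0.95, 0.05

# Same layout as probs_3 above, flattened row by row (1 = "on" pixel).
pattern = [0, 0, 0, 0, 0,
           0, 0, 1, 0, 0,
           0, 0, 1, 0, 0,
           0, 1, 1, 1, 0,
           0, 0, 0, 0, 0]
probs = [p_h if on else p_l for on in pattern]

rng = random.Random(0)               # fixed seed so the check is repeatable
images = sample_images(probs, 1000, rng)

# Empirical frequency of each pixel being 1, printed as a 5 x 5 grid.
freq = [sum(img[j] for img in images) / len(images) for j in range(25)]
for row in range(5):
    print(' '.join('{:.2f}'.format(f) for f in freq[row * 5:(row + 1) * 5]))
```

The printed grid should show values near `p_h` on the pattern and near `p_l` elsewhere, which is the noise level the autoencoder has to cope with when reconstructing an image.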

Parameters

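n_in = 5 * 5        # each image is 5 x 5 pixels, flattened
n_units_1 = 128     # hidden units in the encoder and the decoder
dim_z = 2           # dimension of the latent code
learning_rate = 0.01
batch_size = 100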

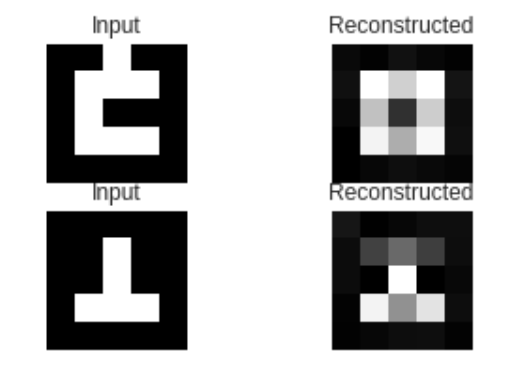

Output

# `ae` is the VariationalAutoencoder instance and `model_path` the checkpoint
# path from the training step, which is not shown in this section.
index = np.random.choice(len(images_test), batch_size)
x = images_test[index]
labels = np.argmax(labels_test[index], axis = 1)

# Pass dim_z as the fourth argument (n_units_2 is not defined anywhere)
reconstructed = ae.reconstruct_image(x, n_in, n_units_1, dim_z, model_path)

print(np.shape(x))
print(np.shape(reconstructed))

# Show two inputs next to their reconstructions
fig = plt.figure(figsize = (5, 3))

ax1 = fig.add_subplot(2, 2, 1)
ax1.imshow(np.reshape(x[0], [5, 5]), cmap = 'gray')
ax1.set_axis_off()
ax1.set_title('Input')

ax2 = fig.add_subplot(2, 2, 2)
ax2.imshow(np.reshape(reconstructed[0], [5, 5]), cmap = 'gray')
ax2.set_axis_off()
ax2.set_title('Reconstructed')

ax3 = fig.add_subplot(2, 2, 3)
ax3.imshow(np.reshape(x[1], [5, 5]), cmap = 'gray')
ax3.set_axis_off()
ax3.set_title('Input')

ax4 = fig.add_subplot(2, 2, 4)
ax4.imshow(np.reshape(reconstructed[1], [5, 5]), cmap = 'gray')
ax4.set_axis_off()
ax4.set_title('Reconstructed')

plt.show()

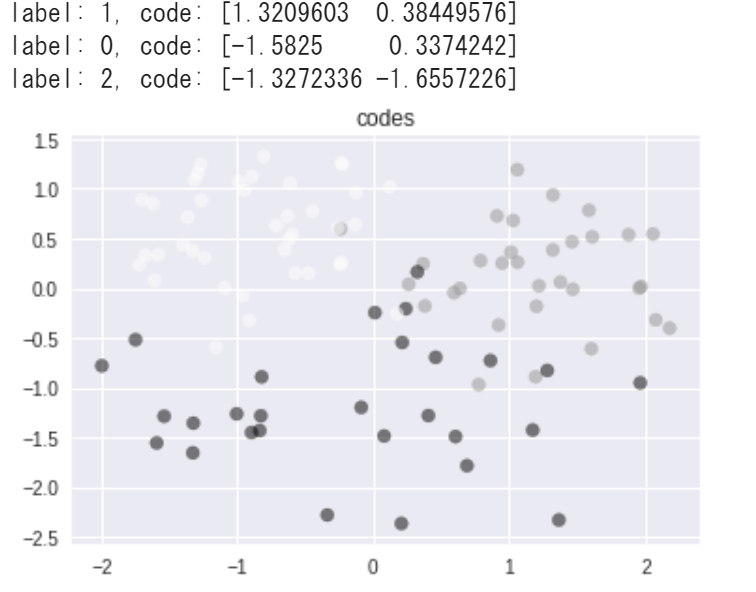

index = np.random.choice(len(images_test), batch_size)
x = images_test[index]
labels = np.argmax(labels_test[index], axis = 1)

# Pass dim_z as the fourth argument (n_units_2 is not defined anywhere)
codes = ae.generate_code(x, n_in, n_units_1, dim_z, model_path)

print(np.shape(x))
print(np.shape(codes))

print('label: {0}, code: {1}'.format(labels[0], codes[0]))
print('label: {0}, code: {1}'.format(labels[1], codes[1]))
print('label: {0}, code: {1}'.format(labels[2], codes[2]))

# Scatter plot of the 2-dimensional codes, colored by class label
fig = plt.figure(figsize = (6, 4))
ax = fig.add_subplot(1, 1, 1)
ax.scatter(codes[:, 0], codes[:, 1], c = labels, alpha = 0.5)
ax.set_title('codes')
plt.show()

dim_z = 2