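Oracle Clusterware is cluster software that lets multiple servers communicate with each other so that they appear to function as a single collective unit; applications and end users see the individual servers as one system.

Its purpose is to use Oracle Clusterware in the cloud to provide enterprise-class resiliency where it is needed, and dynamic online allocation of compute resources where and when they are needed.

So this time, for self-study, I will try installing Oracle Grid Infrastructure 19c on the VMware environment at home.

・The Oracle software and the OS are downloaded from Oracle's software download site.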

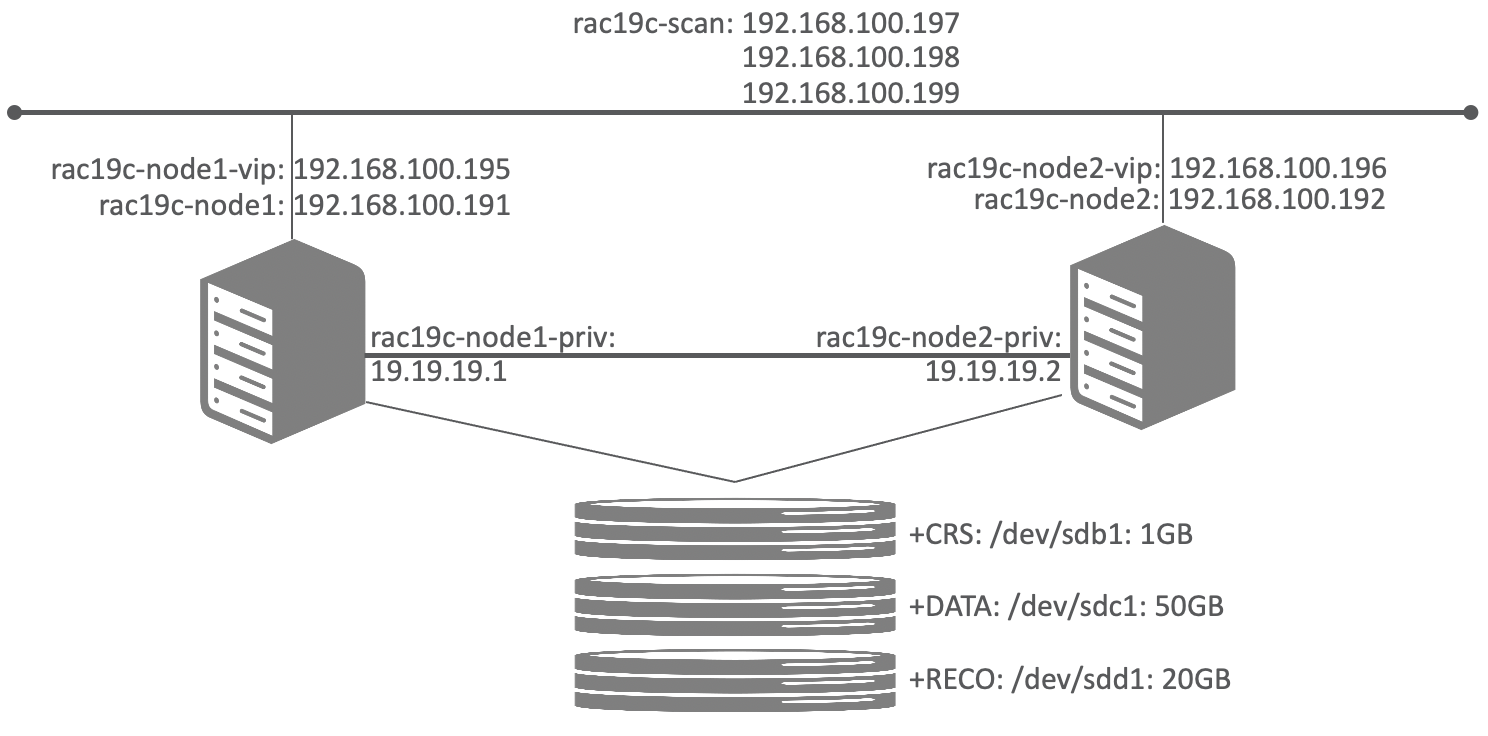

■ Configuration overview

The environment runs on VMware.

・Reference: Installing VMware vSphere Hypervisor 7.0 (ESXi)

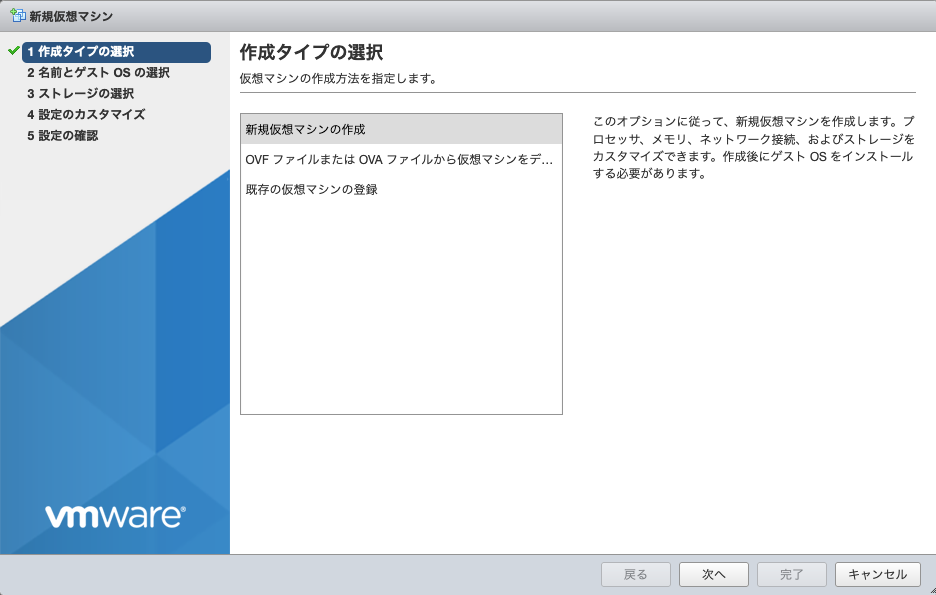

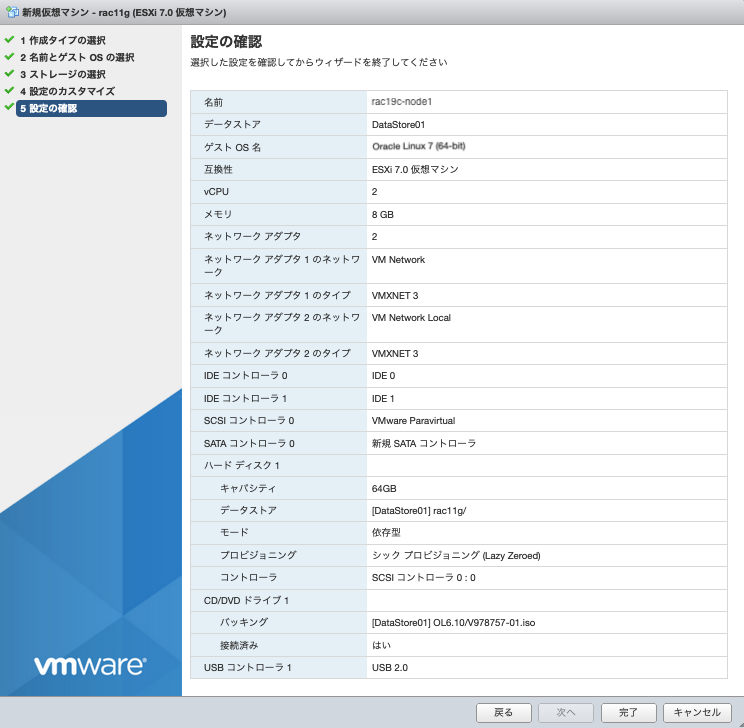

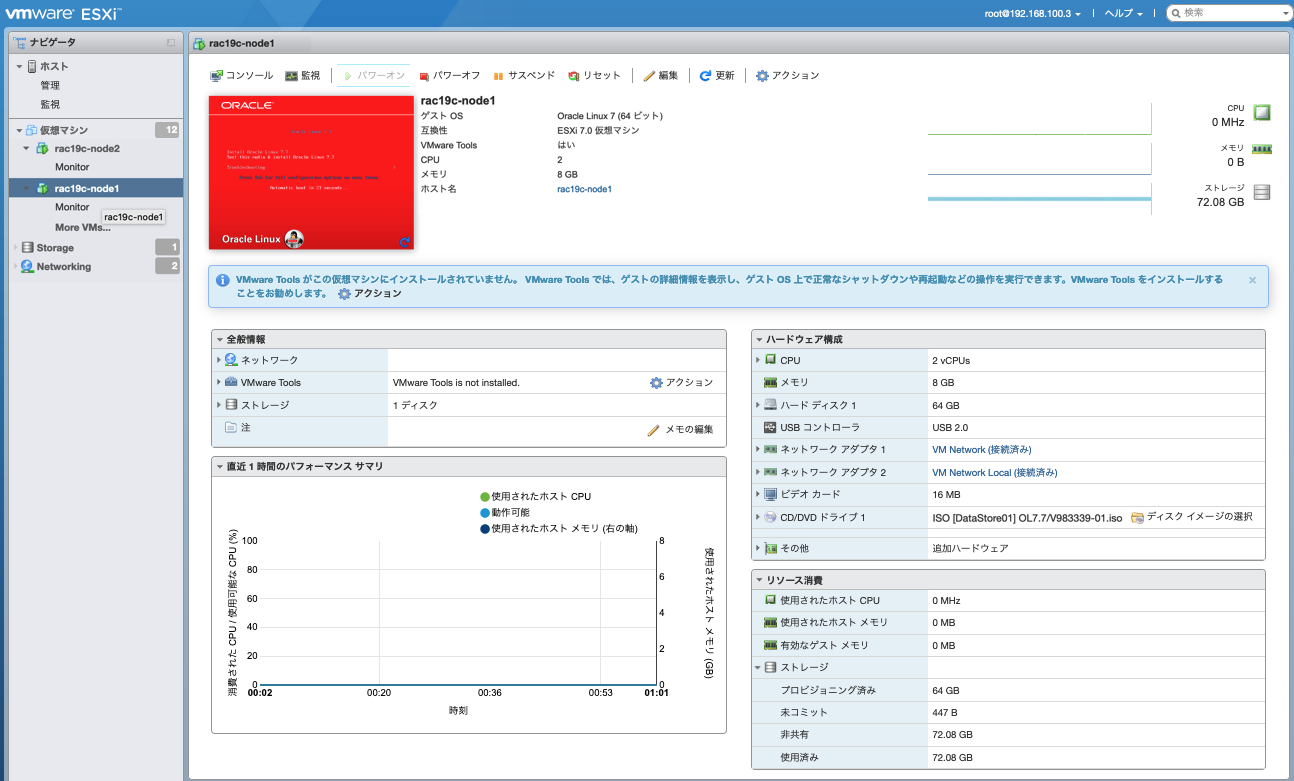

■ Oracle Linux 7 の仮想マシン作成

VMwareでOracle Linux 7の仮想マシンを作成

● RAC Node仮想マシン作成

RAC Node分の仮想マシンを以下のように作成

-

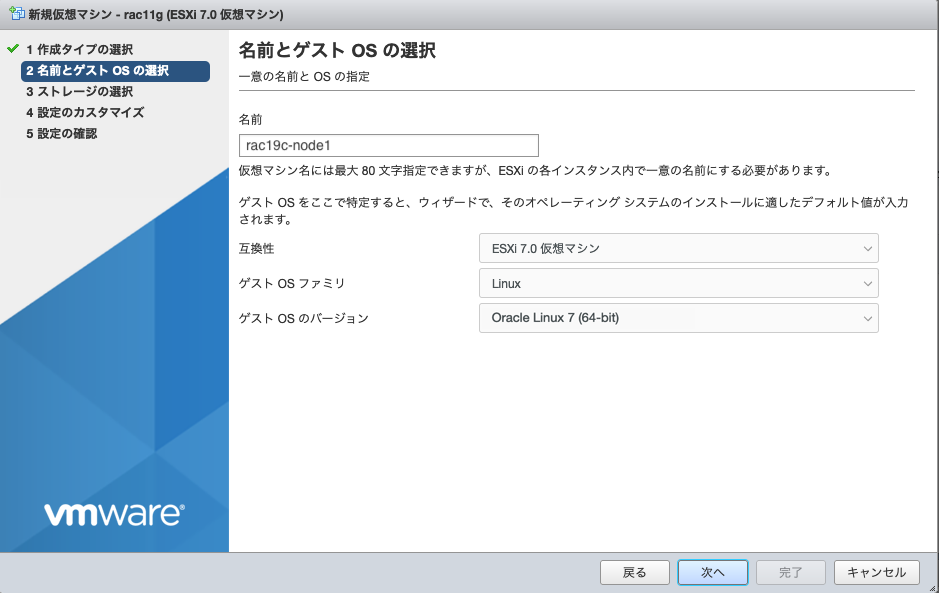

名前とゲストOSの選択

以下情報を入力し、[次へ]をクリック・名前: 仮想マシンの名前

・互換性: 互換対応したいESXiバージョン

・ゲストOSファミリ: Linuxを選択

・ゲストOSのバージョン: Oracle Linux 7(64bit)を選択

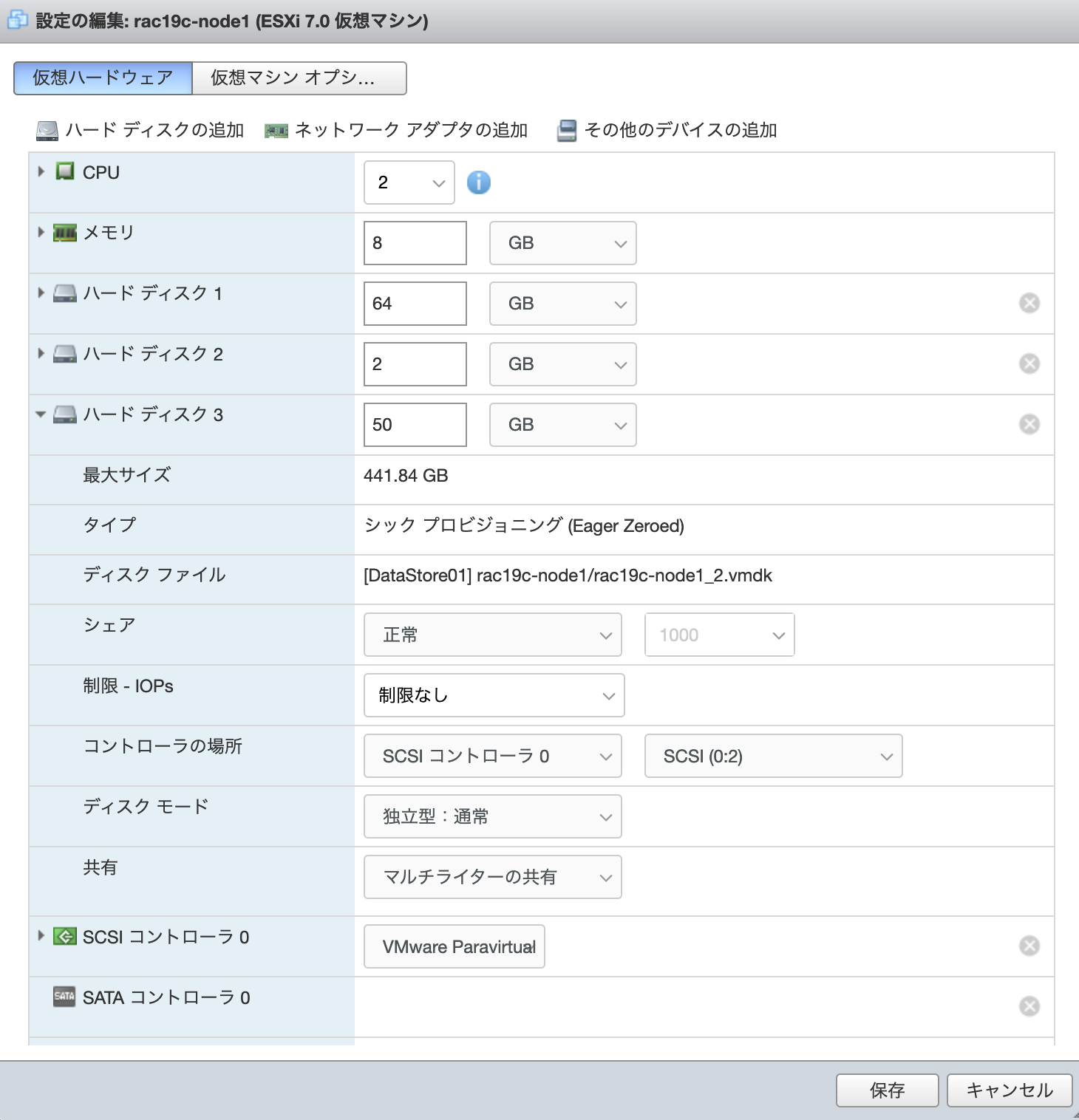

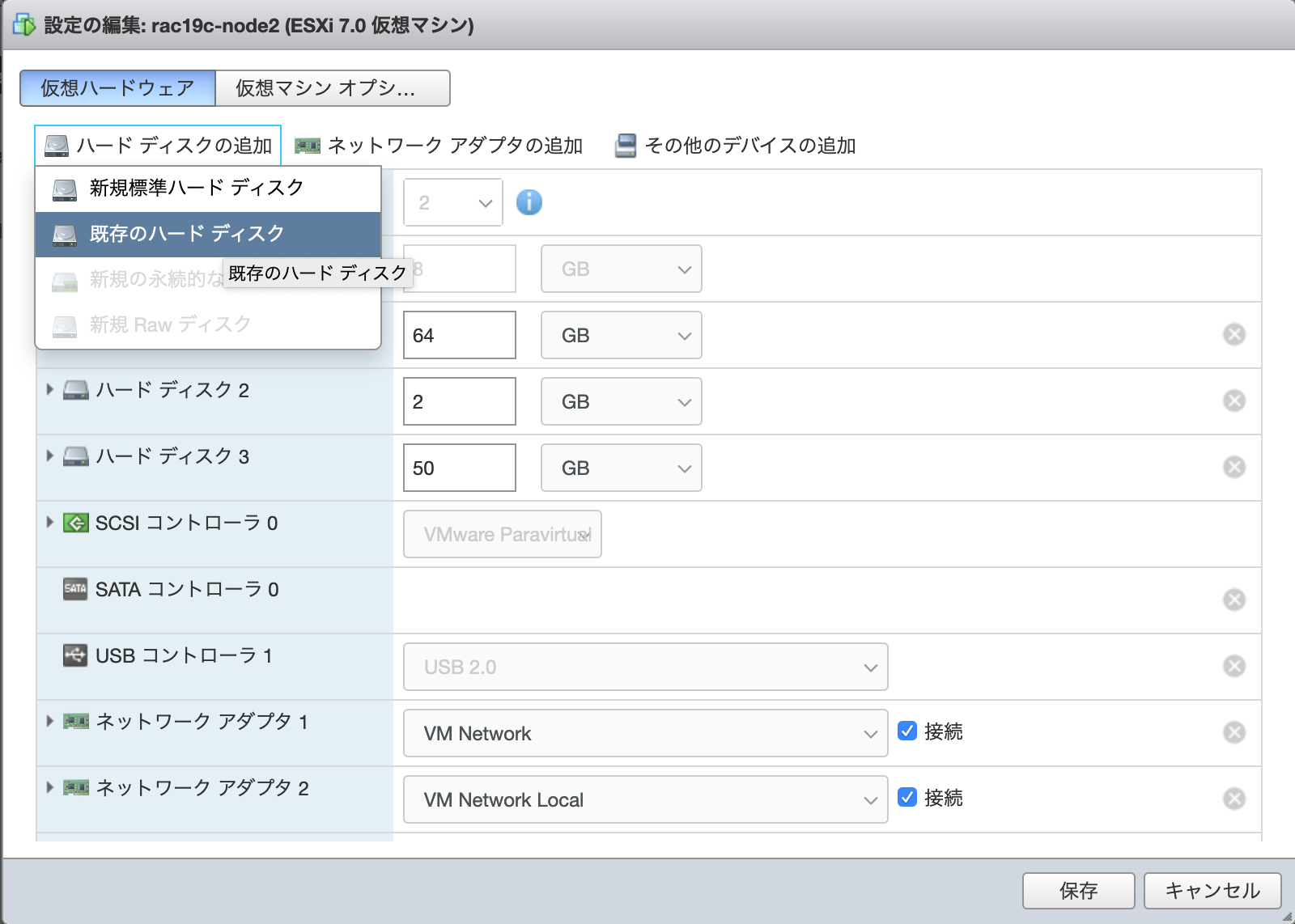

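● Add the shared disks for GI

Create the GI shared disks on the node 1 virtual machine, then attach those shared disks to the other nodes.

- On the node 1 virtual machine

Add four shared disks for GI.

Enter the following items as shown on the screen and click [Save]

・New hard disk: 1 GB for the OCR disk; enter the required size for the database disks

・Disk Provisioning: select [Thick Provision Eager Zeroed]

・Disk Mode: select [Independent - Persistent]

・Sharing: select [Multi-writer]

- On the virtual machines other than node 1

Attach the shared disks created on node 1.

Click [Add hard disk] > [Existing hard disk] and attach the shared disks created on node 1.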

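■ OS installation

Oracle Linux 7.7 is used.

To keep the OS installation to as few RPMs as possible, install it as follows.

・Packages: select Basic; since I want a GUI, add only KDM for X

・Network: configure the host name, eth0 and eth1 as shown in the diagram

・Disk: swap of 8 GB or more is required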

■ Oracle installation requirements

Install the following patches/packages from the Oracle Linux 7 distribution supported on x86-64.

● Patches/Packages

Install the latest release version of each of the following packages.

bc

binutils

compat-libcap1

compat-libstdc++

elfutils-libelf

elfutils-libelf-devel

fontconfig-devel

glibc

glibc-devel

ksh

libaio

libaio-devel

libXrender

libXrender-devel

libX11

libXau

libXi

libXtst

libgcc

libstdc++

libstdc++-devel

libxcb

make

net-tools (for Oracle RAC and Oracle Clusterware)

nfs-utils (for Oracle ACFS)

python (for Oracle ACFS Remote)

python-configshell (for Oracle ACFS Remote)

python-rtslib (for Oracle ACFS Remote)

python-six (for Oracle ACFS Remote)

targetcli (for Oracle ACFS Remote)

smartmontools

sysstat

gcc

gcc-c++

gcc-info

gcc-locale

gcc48

gcc48-info

gcc48-locale

gcc48-c++

・Example RPM installation commands

Install the required packages with the following commands, or with the rpm command.

yum install -y bc*

yum install -y binutils*

yum install -y compat-libcap1*

yum install -y compat-libstdc++*

yum install -y elfutils-libelf*

yum install -y elfutils-libelf-devel*

yum install -y fontconfig-devel*

yum install -y glibc*

yum install -y glibc-devel*

yum install -y ksh*

yum install -y libaio*

yum install -y libaio-devel*

yum install -y libXrender*

yum install -y libXrender-devel*

yum install -y libX11*

yum install -y libXau*

yum install -y libXi*

yum install -y libXtst*

yum install -y libgcc*

yum install -y libstdc++*

yum install -y libstdc++-devel*

yum install -y libxcb*

yum install -y make*

yum install -y net-tools*

yum install -y nfs-utils*

yum install -y python*

yum install -y python-configshell*

yum install -y python-rtslib*

yum install -y python-six*

yum install -y targetcli*

yum install -y smartmontools*

yum install -y sysstat*

yum install -y gcc*

yum install -y gcc-c++*

yum install -y gcc-info*

yum install -y gcc-locale*
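The per-package commands above work, but each trailing * is a glob, so for example python* also installs every package whose name begins with python, which works against the minimal-install goal. As an alternative, here is a minimal sketch of a helper (missing_pkgs is a hypothetical name) that compares the required names with a list of installed package names and prints only the missing ones, so they can be passed to a single yum install. It assumes the OL7 names from the list above, with compat-libstdc++ written as its OL7 package name compat-libstdc++-33, and leaves out the optional ACFS Remote packages.

```shell
# Required OL7 package names, taken from the list above.
# Assumption: compat-libstdc++ is provided on OL7 by compat-libstdc++-33.
REQUIRED_PKGS=(
  bc binutils compat-libcap1 compat-libstdc++-33 elfutils-libelf
  elfutils-libelf-devel fontconfig-devel glibc glibc-devel ksh libaio
  libaio-devel libXrender libXrender-devel libX11 libXau libXi libXtst
  libgcc libstdc++ libstdc++-devel libxcb make net-tools nfs-utils
  smartmontools sysstat gcc gcc-c++
)

# missing_pkgs FILE
#   FILE: installed package names, one per line
#   Prints every required package name that does not appear in FILE.
missing_pkgs() {
  local installed=$1 pkg
  for pkg in "${REQUIRED_PKGS[@]}"; do
    # -x: whole line, -F: fixed string (package names contain "+")
    grep -qxF -- "$pkg" "$installed" || printf '%s\n' "$pkg"
  done
}

# Usage (as root):
#   rpm -qa --qf '%{NAME}\n' | sort -u > /tmp/installed.txt
#   missing=$(missing_pkgs /tmp/installed.txt)
#   [ -n "$missing" ] && yum install -y $missing
```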

● Kernel settings

・Kernel requirement settings

- sysctl.conf settings

Create a new file, 97-oracle-database-sysctl.conf, for Oracle.

[root@rac19c-node1 ~]# vi /etc/sysctl.d/97-oracle-database-sysctl.conf

#Oracle 19c

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

net.ipv4.ip_local_port_range = 9000 65500

kernel.sem = 250 32000 100 128

#Half the size of physical memory in bytes

kernel.shmmax = 4294967295

#kernel.shmall = 2097152 #Greater than or equal to the value of shmmax, in pages.

kernel.shmmni = 4096

fs.file-max = 6815744

fs.aio-max-nr = 1048576

kernel.panic_on_oops = 1
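The comments in this file give the sizing rules: shmmax is half of physical memory in bytes, and shmall (in pages) must cover at least shmmax. The 4294967295 above corresponds to roughly half of 8 GiB. A minimal sketch for deriving both values on a host of a different size, assuming MemTotal from /proc/meminfo (in kB) and the page size from getconf PAGE_SIZE; calc_shm_params is a hypothetical helper, and rounding shmall up (so that shmall * page size >= shmmax) is a choice made here.

```shell
# calc_shm_params MEM_KB [PAGE_SIZE]
#   MEM_KB:    physical memory in kB (MemTotal in /proc/meminfo)
#   PAGE_SIZE: page size in bytes (default 4096)
#   Prints sysctl lines for kernel.shmmax and kernel.shmall.
calc_shm_params() {
  local mem_kb=$1 page=${2:-4096}
  # Half of physical memory, in bytes.
  local shmmax=$(( mem_kb * 1024 / 2 ))
  # shmall is counted in pages; round up so it covers shmmax.
  local shmall=$(( (shmmax + page - 1) / page ))
  echo "kernel.shmmax = $shmmax"
  echo "kernel.shmall = $shmall"
}

# Usage:
#   calc_shm_params "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)" "$(getconf PAGE_SIZE)"
```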

- Apply the settings

[root@rac19c-node1 ~]# /sbin/sysctl --system

* Applying /usr/lib/sysctl.d/00-system.conf ...

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

kernel.yama.ptrace_scope = 0

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.default.promote_secondaries = 1

net.ipv4.conf.all.promote_secondaries = 1

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /usr/lib/sysctl.d/60-libvirtd.conf ...

fs.aio-max-nr = 1048576

* Applying /etc/sysctl.d/97-oracle-database-sysctl.conf ...

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

net.ipv4.ip_local_port_range = 9000 65500

kernel.sem = 250 32000 100 128

kernel.shmmax = 4294967295 #Half the size of physical memory in bytes

kernel.shmmni = 4096

fs.file-max = 6815744

fs.aio-max-nr = 1048576

kernel.panic_on_oops = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.conf ...

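● Applying the kernel settings

Note that sysctl -p with no file argument reads only /etc/sysctl.conf, so the values below come from that file; for example, kernel.shmmax and the rp_filter entries differ from 97-oracle-database-sysctl.conf. Because sysctl --system also applies /etc/sysctl.conf last (see the output above), these are the values that end up in effect.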

[root@rac19c-node1 etc]# sysctl -p

fs.file-max = 6815744

kernel.sem = 250 32000 100 128

kernel.shmmni = 4096

kernel.shmall = 1073741824

kernel.shmmax = 4398046511104

kernel.panic_on_oops = 1

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

net.ipv4.conf.all.rp_filter = 2

net.ipv4.conf.default.rp_filter = 2

fs.aio-max-nr = 1048576

net.ipv4.ip_local_port_range = 9000 65500
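Because /etc/sysctl.conf is applied last, a value there can silently override the Oracle file, as happens with kernel.shmmax here. A minimal sketch for spotting such differences: check_sysctl_conf (a hypothetical helper) reads each key = value line of a sysctl config file, maps the key to its path under /proc/sys (dots become slashes), and compares it with the running value after collapsing whitespace. The optional second argument replaces /proc/sys and exists only so the function can be tried against a test directory.

```shell
# check_sysctl_conf CONF [PROC_ROOT]
#   Compares each "key = value" line in CONF with the value currently
#   in effect under PROC_ROOT (default: /proc/sys).
#   Prints OK/DIFF per key and returns 1 if any key differs.
check_sysctl_conf() {
  local conf=$1 root=${2:-/proc/sys}
  local key val actual path rc=0
  while IFS='=' read -r key val; do
    key=$(printf '%s' "$key" | tr -d '[:space:]')
    # Skip blank lines and comment lines ("#" or ";").
    [[ -z $key || $key == \#* || $key == \;* ]] && continue
    val=${val%%#*}                     # drop a trailing "# comment", if any
    val=$(echo $val)                   # unquoted on purpose: collapse spaces/tabs
    path="$root/$(printf '%s' "$key" | tr . /)"
    actual=$(echo $(cat "$path" 2>/dev/null))
    if [[ $actual == "$val" ]]; then
      echo "OK   $key = $val"
    else
      echo "DIFF $key: file='$val' running='$actual'"
      rc=1
    fi
  done < "$conf"
  return "$rc"
}

# Usage:
#   check_sysctl_conf /etc/sysctl.d/97-oracle-database-sysctl.conf
```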

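■ Configuring Network Time Protocol (NTP) for cluster time synchronization

Oracle Cluster Time Synchronization Service is designed for organizations whose cluster servers are unable to access NTP services. If you use NTP, the Oracle Cluster Time Synchronization daemon (ctssd) starts in observer mode. If there is no NTP daemon, ctssd starts in active mode and synchronizes time among cluster members without contacting an external time server.

● Deactivating the NTP service

To deactivate the Network Time Protocol (NTP) service, you must stop the ntpd and chronyd services, disable them, and rename their configuration files.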

- Stop the ntpd service

[root@rac19c-node1 ~]# systemctl stop ntpd

[root@rac19c-node1 ~]# systemctl disable ntpd

[root@rac19c-node1 ~]# mv /etc/ntp.conf /etc/ntp.conf.org

- Stop the chronyd service

[root@rac19c-node1 ~]# systemctl stop chronyd

[root@rac19c-node1 ~]# systemctl disable chronyd

[root@rac19c-node1 ~]# mv /etc/chrony.conf /etc/chrony.conf.org
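On a minimal OL7 install only chronyd may be present, in which case the ntpd commands above fail with a unit-not-found error. A minimal sketch that performs the same three steps for either service and tolerates a missing unit; disable_time_service and backup_conf are hypothetical helper names, and backup_conf refuses to overwrite an existing .org copy so the sequence can be rerun safely.

```shell
# backup_conf FILE
#   Renames FILE to FILE.org, but only if FILE exists and FILE.org
#   does not, so a second run never overwrites the first backup.
backup_conf() {
  local conf=$1
  if [[ -e $conf && ! -e $conf.org ]]; then
    mv -- "$conf" "$conf.org"
  fi
}

# disable_time_service SERVICE CONF
#   Stops and disables SERVICE, ignoring errors such as a unit that
#   is not installed, then moves CONF aside.
disable_time_service() {
  local svc=$1 conf=$2
  systemctl stop "$svc" 2>/dev/null || true
  systemctl disable "$svc" 2>/dev/null || true
  backup_conf "$conf"
}

# Usage (as root, on every node):
#   disable_time_service ntpd    /etc/ntp.conf
#   disable_time_service chronyd /etc/chrony.conf
```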

■ Configuring users, groups and environments for Oracle Grid Infrastructure and Oracle Database

● Create the OS groups

Create the groups for oracle and grid with the following commands.

groupadd -g 54321 oinstall

groupadd -g 54329 asmadmin

groupadd -g 54327 asmdba

groupadd -g 54328 asmoper

groupadd -g 54322 dba

groupadd -g 54323 oper

groupadd -g 54324 backupdba

groupadd -g 54325 dgdba

groupadd -g 54326 kmdba

groupadd -g 54330 racdba

groupadd -g 54331 osasm

groupadd -g 54332 osdba
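The UIDs and GIDs must be identical on every cluster node, so these commands are repeated on node 2. A minimal sketch that drives the same groupadd calls from a single name:GID list and skips groups that already exist (checked with getent group); create_oracle_groups and the DRY_RUN variable are hypothetical names introduced here.

```shell
# name:GID pairs, as used in the groupadd commands above.
ORACLE_GROUPS=(
  oinstall:54321 dba:54322 oper:54323 backupdba:54324 dgdba:54325
  kmdba:54326 asmdba:54327 asmoper:54328 asmadmin:54329 racdba:54330
  osasm:54331 osdba:54332
)

# create_oracle_groups
#   Creates each group with its fixed GID, skipping existing groups.
#   If DRY_RUN is non-empty, only prints the groupadd commands.
create_oracle_groups() {
  local entry name gid
  for entry in "${ORACLE_GROUPS[@]}"; do
    name=${entry%%:*}
    gid=${entry##*:}
    if getent group "$name" >/dev/null; then
      echo "skip: group $name already exists"
      continue
    fi
    ${DRY_RUN:+echo} groupadd -g "$gid" "$name"
  done
}

# Usage (as root, on every node):
#   DRY_RUN=1 create_oracle_groups   # preview only
#   create_oracle_groups
```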

● Create the Grid Infrastructure user

- Create the grid user

[root@rac19c-node1 ~]# /usr/sbin/useradd -u 54331 -g oinstall -G dba,asmdba,asmoper,backupdba,dgdba,kmdba,racdba,asmadmin grid

- Verify the user

[root@rac19c-node1 ~]# id -a grid

uid=54331(grid) gid=54321(oinstall) groups=54321(oinstall),54322(dba),54324(backupdba),54325(dgdba),54326(kmdba),54327(asmdba),54328(asmoper),54329(asmadmin),54330(racdba) context=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023

- Set the user password

[root@rac19c-node1 ~]# passwd grid

Changing password for user grid.

New password:

Retype new password:

passwd: all authentication tokens updated successfully.

- Environment variables (.bash_profile)

[grid@rac19c-node1 ~]$ cat .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

umask 022

export LANG=C

export NLS_LANG=American_America.UTF8

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/19.0.0/grid

export ORACLE_SID=+ASM1

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:$LD_LIBRARY_PATH
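The profile above hard-codes ORACLE_SID=+ASM1, which fits node 1 only; on node 2 the ASM instance is usually +ASM2. A minimal sketch that derives the SID from the trailing digits of the host name, assuming the rac19c-nodeN naming used here and that ASM instance numbers follow node numbers, which is typical for a two-node setup like this one but not guaranteed. asm_sid_for_host is a hypothetical helper.

```shell
# asm_sid_for_host HOSTNAME
#   Prints +ASM<N>, where N is the run of digits at the end of the
#   short host name (assumed naming: rac19c-node1, rac19c-node2, ...).
asm_sid_for_host() {
  local host=${1%%.*}             # strip any domain part
  local num=${host##*[!0-9]}      # keep only the trailing digits
  if [[ -z $num ]]; then
    echo "cannot derive a node number from '$1'" >&2
    return 1
  fi
  echo "+ASM$num"
}

# In .bash_profile, instead of a fixed ORACLE_SID=+ASM1:
#   export ORACLE_SID=$(asm_sid_for_host "$(hostname -s)")
```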

● Create the Oracle Database user

- Create the oracle user

[root@rac19c-node1 ~]# /usr/sbin/useradd -u 54321 -g oinstall -G dba,oper,asmdba,backupdba,dgdba,kmdba,racdba oracle

- Verify the user

[root@rac19c-node1 ~]# id -a oracle

uid=54321(oracle) gid=54321(oinstall) groups=54321(oinstall),54322(dba),54323(oper),54324(backupdba),54325(dgdba),54326(kmdba),54330(racdba),54327(asmdba)

- Set the user password

[root@rac19c-node1 ~]# passwd oracle

Changing password for user oracle.

New password:

Retype new password:

passwd: all authentication tokens updated successfully.

● ulimit settings

- limits.conf settings

[root@rac19c1 ~]# vi /etc/security/limits.conf

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft stack 10240

oracle hard stack 32768

oracle soft memlock 3145728

oracle hard memlock 3145728

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536

grid soft stack 10240

grid hard stack 32768

grid soft memlock 3145728

grid hard memlock 3145728

- Check the soft limits

[root@rac19c-node1 ~]# su - grid

[grid@rac19c-node1 ~]$ ulimit -aS

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 31709

max locked memory (kbytes, -l) 134217728

max memory size (kbytes, -m) unlimited

open files (-n) 1024

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 10240

cpu time (seconds, -t) unlimited

max user processes (-u) 16384

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

- Check the hard limits

[grid@rac19c-node1 ~]$ ulimit -aH

core file size (blocks, -c) unlimited

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 31709

max locked memory (kbytes, -l) 134217728

max memory size (kbytes, -m) unlimited

open files (-n) 65536

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 32768

cpu time (seconds, -t) unlimited

max user processes (-u) 16384

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited
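To check these values without reading the whole ulimit -a listing, a minimal sketch: check_limit compares one value with a minimum (and an optional maximum), and run_limit_checks applies it to the current shell, so it is meant to be run after su - grid or su - oracle. Both names are hypothetical, and the thresholds (nofile 1024/65536, nproc 2047/16384, stack soft 10240 KB and hard between 10240 and 32768 KB) are assumed from Oracle's published resource-limit recommendations.

```shell
# check_limit NAME ACTUAL MIN [MAX]
#   Prints OK/NG and returns 1 if ACTUAL is below MIN or above MAX.
#   "unlimited" satisfies any minimum but fails a maximum.
check_limit() {
  local name=$1 actual=$2 min=$3 max=${4:-}
  if [[ $actual == unlimited ]]; then
    if [[ -z $max ]]; then
      echo "OK   $name=unlimited"; return 0
    fi
    echo "NG   $name=unlimited (max $max)"; return 1
  fi
  if (( actual < min )); then
    echo "NG   $name=$actual (min $min)"; return 1
  fi
  if [[ -n $max ]] && (( actual > max )); then
    echo "NG   $name=$actual (max $max)"; return 1
  fi
  echo "OK   $name=$actual"
}

# run_limit_checks: checks the limits of the current shell.
run_limit_checks() {
  local rc=0
  check_limit nofile_soft "$(ulimit -Sn)" 1024        || rc=1
  check_limit nofile_hard "$(ulimit -Hn)" 65536       || rc=1
  check_limit nproc_soft  "$(ulimit -Su)" 2047        || rc=1
  check_limit nproc_hard  "$(ulimit -Hu)" 16384       || rc=1
  check_limit stack_soft  "$(ulimit -Ss)" 10240       || rc=1
  check_limit stack_hard  "$(ulimit -Hs)" 10240 32768 || rc=1
  return "$rc"
}

# Usage:
#   su - grid -c "$(declare -f check_limit run_limit_checks); run_limit_checks"
```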

■ Configuring the network for Oracle Grid Infrastructure and Oracle RAC

● hosts settings

[root@rac19c-node1 ~]# vi /etc/hosts

### Oracle Public IP

192.168.100.191 rac19c-node1.oracle.com rac19c-node1

192.168.100.192 rac19c-node2.oracle.com rac19c-node2

### Oracle VIP

192.168.100.195 rac19c-node1-vip.oracle.com rac19c-node1-vip

192.168.100.196 rac19c-node2-vip.oracle.com rac19c-node2-vip

### Oracle SCAN

192.168.100.197 rac19c-scan.oracle.com rac19c-scan

192.168.100.198 rac19c-scan.oracle.com rac19c-scan

192.168.100.199 rac19c-scan.oracle.com rac19c-scan

### Oracle Private IP(Interconnect)

19.19.19.1 rac19c-node1-priv1.oracle.com rac19c-node1-priv1

19.19.19.2 rac19c-node2-priv1.oracle.com rac19c-node2-priv1
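Here the three SCAN addresses are listed under one name in /etc/hosts instead of in DNS, which the installer's prerequisite checks flag later. A minimal sketch for listing every address that a hosts-format file assigns to a name (as canonical name or alias), useful for confirming that each node carries the same entries; hosts_lookup is a hypothetical helper built on awk.

```shell
# hosts_lookup FILE NAME
#   Prints the address of every line in a hosts-format FILE that
#   lists NAME as its canonical name or as an alias.
hosts_lookup() {
  awk -v name="$2" '
    /^[ \t]*#/ { next }                 # skip comment lines
    {
      sub(/#.*/, "")                    # drop trailing comments
      for (i = 2; i <= NF; i++)
        if ($i == name) { print $1; break }
    }' "$1"
}

# Usage (on each node):
#   hosts_lookup /etc/hosts rac19c-scan     # expect three addresses
#   hosts_lookup /etc/hosts rac19c-node2-vip
```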

● Disable the virbr interface used by KVM (libvirtd)

[root@rac19c-node1 ~]# systemctl stop libvirtd

[root@rac19c-node1 ~]# systemctl disable libvirtd

Removed symlink /etc/systemd/system/multi-user.target.wants/libvirtd.service.

Removed symlink /etc/systemd/system/sockets.target.wants/virtlogd.socket.

Removed symlink /etc/systemd/system/sockets.target.wants/virtlockd.socket.

■ Configuring storage for Oracle Grid Infrastructure

● Configuring storage for Automatic Storage Management (ASM)

The shared disks used for ASM in this setup are as follows.

| Device File | ASM Disk | Description |
|---|---|---|
| /dev/sdb | CRS | CRS storage (1GB) |
| /dev/sdc | DATA | DB storage |
| /dev/sdd | RECO | DB FRA storage |

### 1) Check the ASM shared disks beforehand

fdisk -l

Disk /dev/sdb: 2147 MB, 2147483648 bytes, 4194304 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc: 53.7 GB, 53687091200 bytes, 104857600 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdd: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

### 2) Create the partitions

Because the disks are shared, do this on one node only.

Create a partition on every shared disk with fdisk, as follows.

### 3) Run fdisk

[root@rac19c-node1 ~]# fdisk /dev/sdb

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0x8ac29fcb.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1): 1

First sector (2048-4194303, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-4194303, default 4194303):

Using default value 4194303

Partition 1 of type Linux and of size 2 GiB is set

Command (m for help): p

Disk /dev/sdb: 2147 MB, 2147483648 bytes, 4194304 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x8ac29fcb

Device Boot Start End Blocks Id System

/dev/sdb1 2048 4194303 2096128 83 Linux

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

### 4) Verify the partitions

[root@rac19c-node1 ~]# fdisk -l

・・・

Disk /dev/sdb: 2147 MB, 2147483648 bytes, 4194304 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x8ac29fcb

Device Boot Start End Blocks Id System

/dev/sdb1 2048 4194303 2096128 83 Linux

Disk /dev/sdc: 53.7 GB, 53687091200 bytes, 104857600 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0xf9a6dcd9

Device Boot Start End Blocks Id System

/dev/sdc1 2048 104857599 52427776 83 Linux

Disk /dev/sdd: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0xb8639712

Device Boot Start End Blocks Id System

/dev/sdd1 2048 41943039 20970496 83 Linux
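Because the partitions are created from node 1 only, the kernel on node 2 may still hold the old (empty) partition table until it is re-read, for example with partprobe from the parted package or by rebooting. A minimal sketch for checking which partitions the kernel currently knows about, by looking for the name in the fourth column of /proc/partitions; has_partition is a hypothetical helper, and the optional file argument exists only for testing.

```shell
# has_partition NAME [PARTITIONS_FILE]
#   Returns 0 if NAME (e.g. sdb1) appears in the name column of
#   /proc/partitions (or of the given file).
has_partition() {
  local name=$1 file=${2:-/proc/partitions}
  awk -v n="$name" '$4 == n { found = 1 } END { exit (found ? 0 : 1) }' "$file"
}

# Usage on node 2 (as root), after partitioning on node 1:
#   for p in sdb1 sdc1 sdd1; do
#     has_partition "$p" || partprobe "/dev/${p%1}"
#     has_partition "$p" && echo "$p visible" || echo "$p missing"
#   done
```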

● Configuring persistent device paths for Oracle ASM storage

Set permissions on the ASM shared disks created above.

- udev settings

[root@rac19c-node1 ~]# vi /etc/udev/rules.d/99-oracle.rules

KERNEL=="sdb1", ACTION=="add|change",OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sdc1", ACTION=="add|change",OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sdd1", ACTION=="add|change",OWNER="grid", GROUP="asmadmin", MODE="0660"
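The three rules differ only in the kernel device name, so they can also be generated. A minimal sketch, with gen_udev_rules as a hypothetical helper that prints rules in the same form as above. Note that KERNEL=="sdX1" depends on device discovery order; matching a stable identifier of the disk is generally more robust, but this sketch keeps the KERNEL match used in this article.

```shell
# gen_udev_rules OWNER GROUP MODE PARTITION...
#   Prints one KERNEL-match udev rule per partition name.
gen_udev_rules() {
  local owner=$1 group=$2 mode=$3 part
  shift 3
  for part in "$@"; do
    printf 'KERNEL=="%s", ACTION=="add|change", OWNER="%s", GROUP="%s", MODE="%s"\n' \
      "$part" "$owner" "$group" "$mode"
  done
}

# Usage (as root, on every node):
#   gen_udev_rules grid asmadmin 0660 sdb1 sdc1 sdd1 > /etc/udev/rules.d/99-oracle.rules
```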

- Test the udev rules

[root@rac19c-node1 ~]# udevadm test /sys/block/sdb/sdb1

[root@rac19c-node1 ~]# udevadm test /sys/block/sdc/sdc1

[root@rac19c-node1 ~]# udevadm test /sys/block/sdd/sdd1

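- Apply the settings

Reload the udev rules and re-apply them to the existing devices.

[root@rac19c-node1 ~]# udevadm control --reload-rules

[root@rac19c-node1 ~]# udevadm trigger --type=devices --action=change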

- Verify the permissions

[root@rac19c-node1 ~]# ls -la /dev | grep asm

drwxr-xr-x. 4 root root 0 Nov 26 04:53 oracleasm

brw-rw----. 1 grid asmadmin 8, 17 Nov 26 04:53 sdb1

brw-rw----. 1 grid asmadmin 8, 33 Nov 26 04:53 sdc1

brw-rw----. 1 grid asmadmin 8, 49 Nov 26 04:53 sdd1
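Instead of reading the ls listing by eye, a minimal sketch that compares each device's owner, group and octal mode with what the udev rules set (grid:asmadmin 660), using GNU stat; check_dev_perm is a hypothetical helper and should be run on each node.

```shell
# check_dev_perm WANT DEVICE...
#   WANT is "owner:group mode", e.g. "grid:asmadmin 660".
#   Prints OK/NG per device and returns 1 if any device differs.
check_dev_perm() {
  local want=$1 dev got rc=0
  shift
  for dev in "$@"; do
    if ! got=$(stat -c '%U:%G %a' -- "$dev" 2>/dev/null); then
      echo "NG   $dev: not found"; rc=1; continue
    fi
    if [[ $got == "$want" ]]; then
      echo "OK   $dev ($got)"
    else
      echo "NG   $dev: $got (expected $want)"; rc=1
    fi
  done
  return "$rc"
}

# Usage (on each node):
#   check_dev_perm "grid:asmadmin 660" /dev/sdb1 /dev/sdc1 /dev/sdd1
```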

- Initialize the disks and check write access

Check write access as the grid user.

[root@rac19c-node1 ~]# su - grid

[grid@rac19c-node1 ~]$ dd if=/dev/zero of=/dev/sdb1 bs=1024k count=100

[grid@rac19c-node1 ~]$ dd if=/dev/zero of=/dev/sdc1 bs=1024k count=100

[grid@rac19c-node1 ~]$ dd if=/dev/zero of=/dev/sdd1 bs=1024k count=100

● Configuring persistent storage device paths with Oracle ASMLIB

Configure the Oracle ASM devices with Oracle ASMLIB.

- Install the ASMLib packages

[root@rac19c-node1 ~]# yum install -y oracleasm

[root@rac19c-node1 ~]# yum install -y oracleasm-support

[root@rac19c-node1 ~]# yum install oracleasmlib

- Configure ASMLib

[root@rac19c-node1 ~]# /usr/sbin/oracleasm configure -i

Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library

driver. The following questions will determine whether the driver is

loaded on boot and what permissions it will have. The current values

will be shown in brackets ('[]'). Hitting <ENTER> without typing an

answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: grid

Default group to own the driver interface []: asmadmin

Start Oracle ASM library driver on boot (y/n) [n]: y

Scan for Oracle ASM disks on boot (y/n) [n]: y

Writing Oracle ASM library driver configuration: done

- Load the oracleasm kernel module

[root@rac19c-node1 ~]# /usr/sbin/oracleasm init

Creating /dev/oracleasm mount point: /dev/oracleasm

Loading module "oracleasm": oracleasm

Configuring "oracleasm" to use device physical block size

Mounting ASMlib driver filesystem: /dev/oracleasm

- Verify

[root@rac19c-node1 ~]# oracleasm status

Checking if ASM is loaded: yes

Checking if /dev/oracleasm is mounted: yes

- Create the ASM disks

[root@rac19c-node1 ~]# oracleasm createdisk CRS /dev/sdb1

[root@rac19c-node1 ~]# oracleasm createdisk DATA /dev/sdc1

[root@rac19c-node1 ~]# oracleasm createdisk RECO /dev/sdd1

Writing disk header: done

Instantiating disk: done

- Apply the settings

[root@rac19c-node1 ~]# oracleasm scandisks

Reloading disk partitions: done

Cleaning any stale ASM disks...

Scanning system for ASM disks...

- Verify the settings

・listdisks

[root@rac19c-node1 ~]# oracleasm listdisks

CRS

DATA

RECO

・ls

[root@rac19c-node1 ~]# ls -l /dev/oracleasm/disks

total 0

brw-rw----. 1 grid asmadmin 8, 17 Dec 3 08:21 CRS

brw-rw----. 1 grid asmadmin 8, 33 Dec 3 08:21 DATA

brw-rw----. 1 grid asmadmin 8, 49 Dec 3 08:20 RECO

- Load the disk markings on the other cluster nodes

Run the following on node 2 and check the result.

[root@rac19c-node2 ~]# oracleasm scandisks

[root@rac19c-node2 ~]# oracleasm listdisks

[root@rac19c-node2 ~]# ls -l /dev/oracleasm/disks/

total 0

brw-rw----. 1 grid asmadmin 8, 17 Dec 3 08:22 CRS

brw-rw----. 1 grid asmadmin 8, 33 Dec 3 08:22 DATA

brw-rw----. 1 grid asmadmin 8, 49 Dec 3 08:21 RECO
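Node 2 should see exactly the disks labelled on node 1. A minimal sketch that reads the oracleasm listdisks output on stdin and reports any expected label that is missing; missing_asm_disks is a hypothetical helper, and the labels CRS, DATA and RECO are the ones created above.

```shell
# missing_asm_disks EXPECTED...
#   Reads disk labels (one per line) from stdin and prints every
#   EXPECTED label that is absent. Returns 1 if anything is missing.
missing_asm_disks() {
  local listed label rc=0
  listed=$(cat)
  for label in "$@"; do
    if ! grep -qxF -- "$label" <<< "$listed"; then
      echo "$label"
      rc=1
    fi
  done
  return "$rc"
}

# Usage (as root, on each node):
#   oracleasm scandisks
#   oracleasm listdisks | missing_asm_disks CRS DATA RECO && echo "all ASM disks visible"
```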

■ Performing the pre-installation tasks manually

● Disable SELinux

Disable SELinux before the installation.

- Check SELinux

[root@rac19c-node1 ~]# getenforce

Enforcing

- Stop SELinux enforcement for the current boot

[root@rac19c-node1 ~]# setenforce 0

- Keep SELinux disabled at boot

Set SELINUX=disabled in the config file.

[root@rac19c-node1 ~]# vi /etc/selinux/config

#SELINUX=enforcing

SELINUX=disabled

- Verify the boot-time setting

[root@rac19c-node1 ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

#SELINUX=enforcing

SELINUX=disabled

# SELINUXTYPE= can take one of three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

- If you mistakenly change the parameter "SELINUXTYPE" instead of "SELINUX", the OS will no longer boot. In that case, boot the OS in single-user mode and fix the config file to recover.

- Confirm that the SELinux setting took effect

Reboot the OS and confirm that the setting has been applied.

[root@rac19c-node1 ~]# getenforce

Disabled
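To avoid the SELINUXTYPE mistake described above, the edit can be limited to the line that starts with SELINUX= exactly. A minimal sketch using GNU sed -i: the anchored pattern ^SELINUX= cannot match SELINUXTYPE=, commented lines are left alone, and the requested mode is validated first. set_selinux_mode is a hypothetical helper, and the .bak copy is an extra safeguard added here.

```shell
# set_selinux_mode MODE [CONFIG]
#   Rewrites only the line beginning with "SELINUX=" in CONFIG
#   (default /etc/selinux/config). "SELINUXTYPE=" is never matched
#   because the character after "SELINUX" there is "T", not "=".
#   The original file is kept as CONFIG.bak.
set_selinux_mode() {
  local mode=$1 conf=${2:-/etc/selinux/config}
  case $mode in
    enforcing|permissive|disabled) ;;
    *) echo "invalid SELinux mode: $mode" >&2; return 2 ;;
  esac
  cp -p -- "$conf" "$conf.bak" || return 1
  sed -i "s/^SELINUX=.*/SELINUX=$mode/" "$conf"
}

# Usage (as root), then reboot and check with getenforce:
#   set_selinux_mode disabled
```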

● Stop the firewall

- Stop firewalld

# systemctl stop firewalld

- Disable firewalld at boot

# systemctl disable firewalld

● Stop avahi-daemon

- Stop avahi-daemon

[root@rac19c-node1 ~]# systemctl stop avahi-daemon

- Disable avahi-daemon at boot

[root@rac19c-node1 ~]# systemctl disable avahi-daemon
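The same stop-and-disable pair is repeated for each service on every node. A minimal sketch that takes the unit names as arguments; disable_services and DRY_RUN are hypothetical names. On OL7 the avahi package may also provide an avahi-daemon.socket unit that can start the daemon again on demand, so including it is an assumption worth confirming with systemctl status avahi-daemon.socket.

```shell
# disable_services UNIT...
#   Stops and then disables each systemd unit, in the order given.
#   If DRY_RUN is non-empty, only prints the systemctl commands.
disable_services() {
  local unit
  for unit in "$@"; do
    ${DRY_RUN:+echo} systemctl stop "$unit"
    ${DRY_RUN:+echo} systemctl disable "$unit"
  done
}

# Usage (as root, on every node):
#   disable_services firewalld avahi-daemon.socket avahi-daemon
#   systemctl is-enabled firewalld avahi-daemon   # expect "disabled"
```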

■ Installing Grid Infrastructure

● Create the software installation directories

[root@rac19c-node1 ~]# mkdir -p /u01/app/19.0.0/grid

[root@rac19c-node1 ~]# mkdir -p /u01/app/grid

[root@rac19c-node1 ~]# mkdir -p /u01/app/oracle

[root@rac19c-node1 ~]# chown -R grid:oinstall /u01

[root@rac19c-node1 ~]# chown oracle:oinstall /u01/app/oracle

[root@rac19c-node1 ~]# chmod -R 775 /u01/

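● Stage the installation media

On node 1, extract the downloaded zip file into ORACLE_HOME (the Grid home).

The software is distributed to the other nodes by the installer.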

[root@rac19c-node1 ~]# su - grid

[grid@rac19c-node1 ~]$ cd /u01/app/19.0.0/grid

[grid@rac19c-node1 ~]$ unzip -q <download_location>/grid.zip

● Install the cvuqdisk RPM for Linux

Install it as root on every cluster node; node 1 is shown below.

[root@rac19c-node1 rpm]# cd /u01/app/19.0.0/grid/cv/rpm

[root@rac19c-node1 rpm]# ls -l

total 12

-rw-r--r--. 1 grid oinstall 11412 Mar 13 2019 cvuqdisk-1.0.10-1.rpm

[root@rac19c-node1 rpm]# rpm -ivh cvuqdisk-1.0.10-1.rpm

Preparing... ################################# [100%]

Using default group oinstall to install package

Updating / installing...

1:cvuqdisk-1.0.10-1 ################################# [100%]

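● Pre-installation check with CVU (runcluvfy.sh)

The Cluster Verification Utility (CVU) performs system checks in preparation for installation, patch updates, or other system changes.

Using CVU ensures that you have completed the required system configuration and pre-installation steps, so that your Oracle Grid Infrastructure or Oracle Real Application Clusters (Oracle RAC) installation, update, or patch operation completes successfully.

・Run runcluvfy.sh

Verify that all cluster nodes meet the installation requirements.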

[grid@rac19c-node1 grid]$ cd /u01/app/19.0.0/grid

[grid@rac19c-node1 grid]$ ./runcluvfy.sh stage -pre crsinst -n rac19c-node1,rac19c-node2

Verifying Physical Memory ...PASSED

Verifying Available Physical Memory ...PASSED

Verifying Swap Size ...PASSED

Verifying Free Space: rac19c-node1:/usr,rac19c-node1:/var,rac19c-node1:/etc,rac19c-node1:/sbin,rac19c-node1:/tmp ...PASSED

Verifying Free Space: rac19c-node2:/usr,rac19c-node2:/var,rac19c-node2:/etc,rac19c-node2:/sbin,rac19c-node2:/tmp ...PASSED

Verifying User Existence: grid ...

Verifying Users With Same UID: 54331 ...PASSED

Verifying User Existence: grid ...PASSED

Verifying Group Existence: asmadmin ...PASSED

Verifying Group Existence: asmdba ...PASSED

Verifying Group Existence: oinstall ...PASSED

Verifying Group Membership: asmdba ...PASSED

Verifying Group Membership: asmadmin ...PASSED

Verifying Group Membership: oinstall(Primary) ...PASSED

Verifying Run Level ...PASSED

Verifying Hard Limit: maximum open file descriptors ...PASSED

Verifying Soft Limit: maximum open file descriptors ...PASSED

Verifying Hard Limit: maximum user processes ...PASSED

Verifying Soft Limit: maximum user processes ...PASSED

Verifying Soft Limit: maximum stack size ...PASSED

Verifying Architecture ...PASSED

Verifying OS Kernel Version ...PASSED

Verifying OS Kernel Parameter: semmsl ...PASSED

Verifying OS Kernel Parameter: semmns ...PASSED

Verifying OS Kernel Parameter: semopm ...PASSED

Verifying OS Kernel Parameter: semmni ...PASSED

Verifying OS Kernel Parameter: shmmax ...PASSED

Verifying OS Kernel Parameter: shmmni ...PASSED

Verifying OS Kernel Parameter: shmall ...PASSED

Verifying OS Kernel Parameter: file-max ...PASSED

Verifying OS Kernel Parameter: ip_local_port_range ...PASSED

Verifying OS Kernel Parameter: rmem_default ...PASSED

Verifying OS Kernel Parameter: rmem_max ...PASSED

Verifying OS Kernel Parameter: wmem_default ...PASSED

Verifying OS Kernel Parameter: wmem_max ...PASSED

Verifying OS Kernel Parameter: aio-max-nr ...PASSED

Verifying OS Kernel Parameter: panic_on_oops ...PASSED

Verifying Package: kmod-20-21 (x86_64) ...PASSED

Verifying Package: kmod-libs-20-21 (x86_64) ...PASSED

Verifying Package: binutils-2.23.52.0.1 ...PASSED

Verifying Package: compat-libcap1-1.10 ...PASSED

Verifying Package: libgcc-4.8.2 (x86_64) ...PASSED

Verifying Package: libstdc++-4.8.2 (x86_64) ...PASSED

Verifying Package: libstdc++-devel-4.8.2 (x86_64) ...PASSED

Verifying Package: sysstat-10.1.5 ...PASSED

Verifying Package: ksh ...PASSED

Verifying Package: make-3.82 ...PASSED

Verifying Package: glibc-2.17 (x86_64) ...PASSED

Verifying Package: glibc-devel-2.17 (x86_64) ...PASSED

Verifying Package: libaio-0.3.109 (x86_64) ...PASSED

Verifying Package: libaio-devel-0.3.109 (x86_64) ...PASSED

Verifying Package: nfs-utils-1.2.3-15 ...PASSED

Verifying Package: smartmontools-6.2-4 ...PASSED

Verifying Package: net-tools-2.0-0.17 ...PASSED

Verifying Port Availability for component "Oracle Notification Service (ONS)" ...PASSED

Verifying Port Availability for component "Oracle Cluster Synchronization Services (CSSD)" ...PASSED

Verifying Users With Same UID: 0 ...PASSED

Verifying Current Group ID ...PASSED

Verifying Root user consistency ...PASSED

Verifying Package: cvuqdisk-1.0.10-1 ...PASSED

Verifying Host name ...PASSED

Verifying Node Connectivity ...

Verifying Hosts File ...PASSED

Verifying Check that maximum (MTU) size packet goes through subnet ...PASSED

Verifying subnet mask consistency for subnet "192.168.100.0" ...PASSED

Verifying subnet mask consistency for subnet "19.19.19.0" ...PASSED

Verifying Node Connectivity ...PASSED

Verifying Multicast or broadcast check ...PASSED

Verifying Network Time Protocol (NTP) ...PASSED

Verifying Same core file name pattern ...PASSED

Verifying User Mask ...PASSED

Verifying User Not In Group "root": grid ...PASSED

Verifying Time zone consistency ...PASSED

Verifying Time offset between nodes ...PASSED

Verifying resolv.conf Integrity ...PASSED

Verifying DNS/NIS name service ...PASSED

Verifying Domain Sockets ...PASSED

Verifying /boot mount ...PASSED

Verifying Daemon "avahi-daemon" not configured and running ...PASSED

Verifying Daemon "proxyt" not configured and running ...PASSED

Verifying User Equivalence ...PASSED

Verifying RPM Package Manager database ...INFORMATION (PRVG-11250)

Verifying /dev/shm mounted as temporary file system ...PASSED

Verifying File system mount options for path /var ...PASSED

Verifying DefaultTasksMax parameter ...PASSED

Verifying zeroconf check ...PASSED

Verifying ASM Filter Driver configuration ...PASSED

Verifying Systemd login manager IPC parameter ...PASSED

Pre-check for cluster services setup was successful.

Verifying RPM Package Manager database ...INFORMATION

PRVG-11250 : The check "RPM Package Manager database" was not performed because

it needs 'root' user privileges.

CVU operation performed: stage -pre crsinst

Date: Dec 2, 2020 5:48:48 AM

CVU home: /u01/app/19.0.0/grid/

User: grid
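The output is long, and the only non-PASSED result here is the PRVG-11250 INFORMATION line. A minimal sketch that filters saved runcluvfy.sh output down to the Verifying lines whose status is not PASSED (FAILED, WARNING, INFORMATION and so on); cluvfy_non_passed is a hypothetical helper, and the line format is assumed from the output above.

```shell
# cluvfy_non_passed [FILE]
#   Reads runcluvfy.sh output from FILE (or stdin) and prints only the
#   "Verifying ...STATUS" lines whose STATUS is not PASSED.
#   Lines without a status (group headers) are ignored.
cluvfy_non_passed() {
  grep -E '^Verifying .*\.\.\.[A-Z]+' "${1:--}" | grep -v '\.\.\.PASSED$'
}

# Usage:
#   ./runcluvfy.sh stage -pre crsinst -n rac19c-node1,rac19c-node2 | tee /tmp/cluvfy.log
#   cluvfy_non_passed /tmp/cluvfy.log
```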

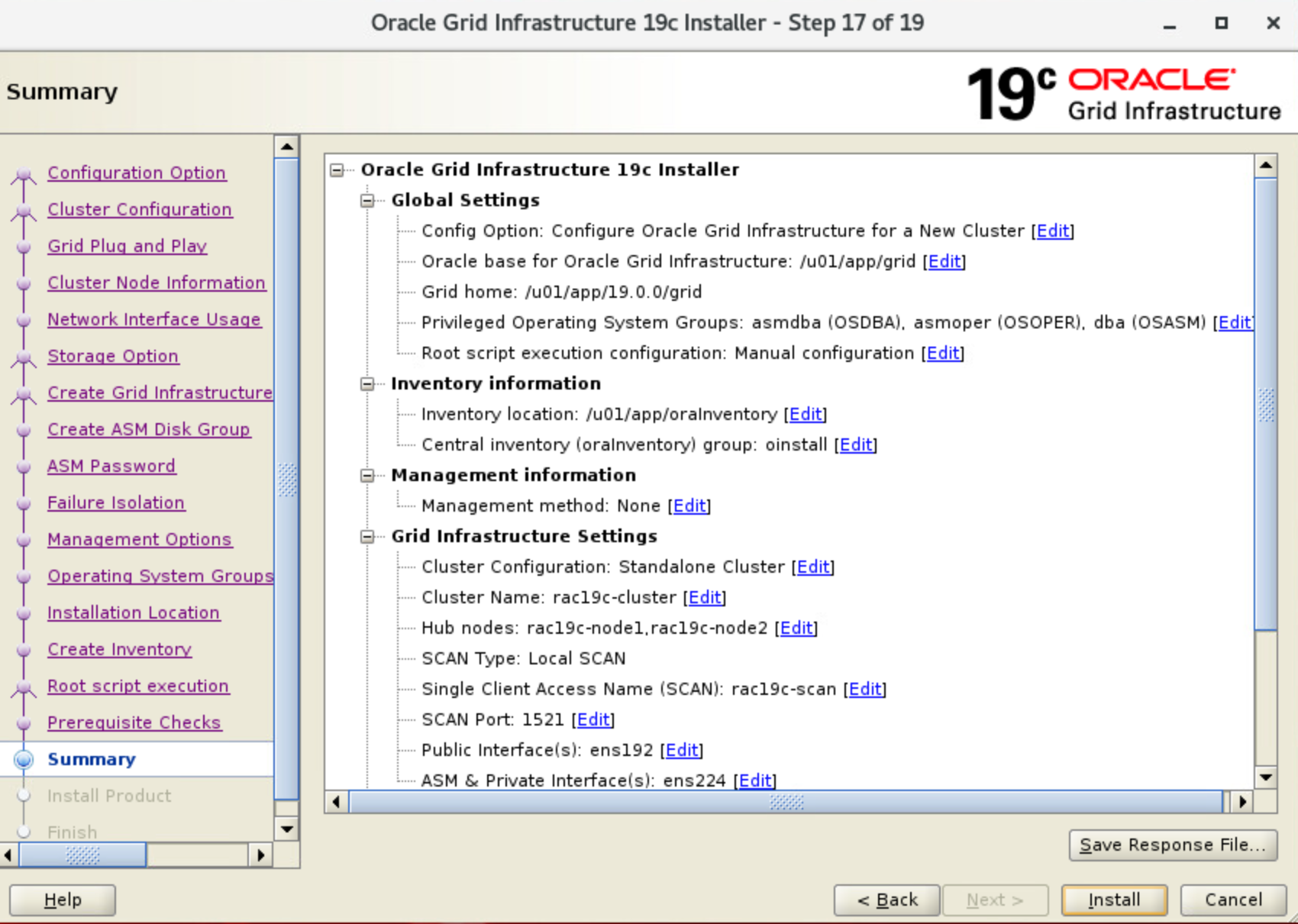

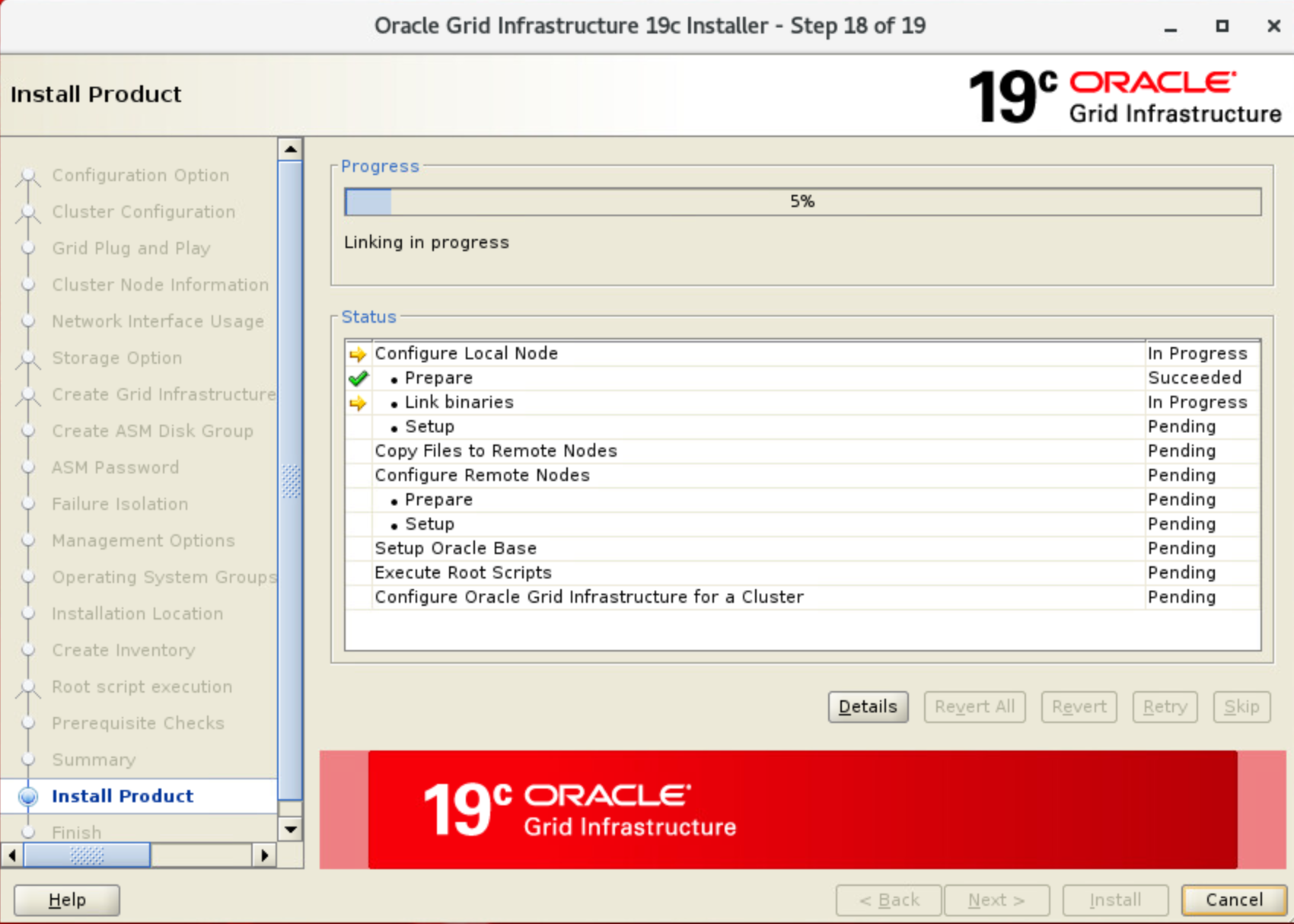

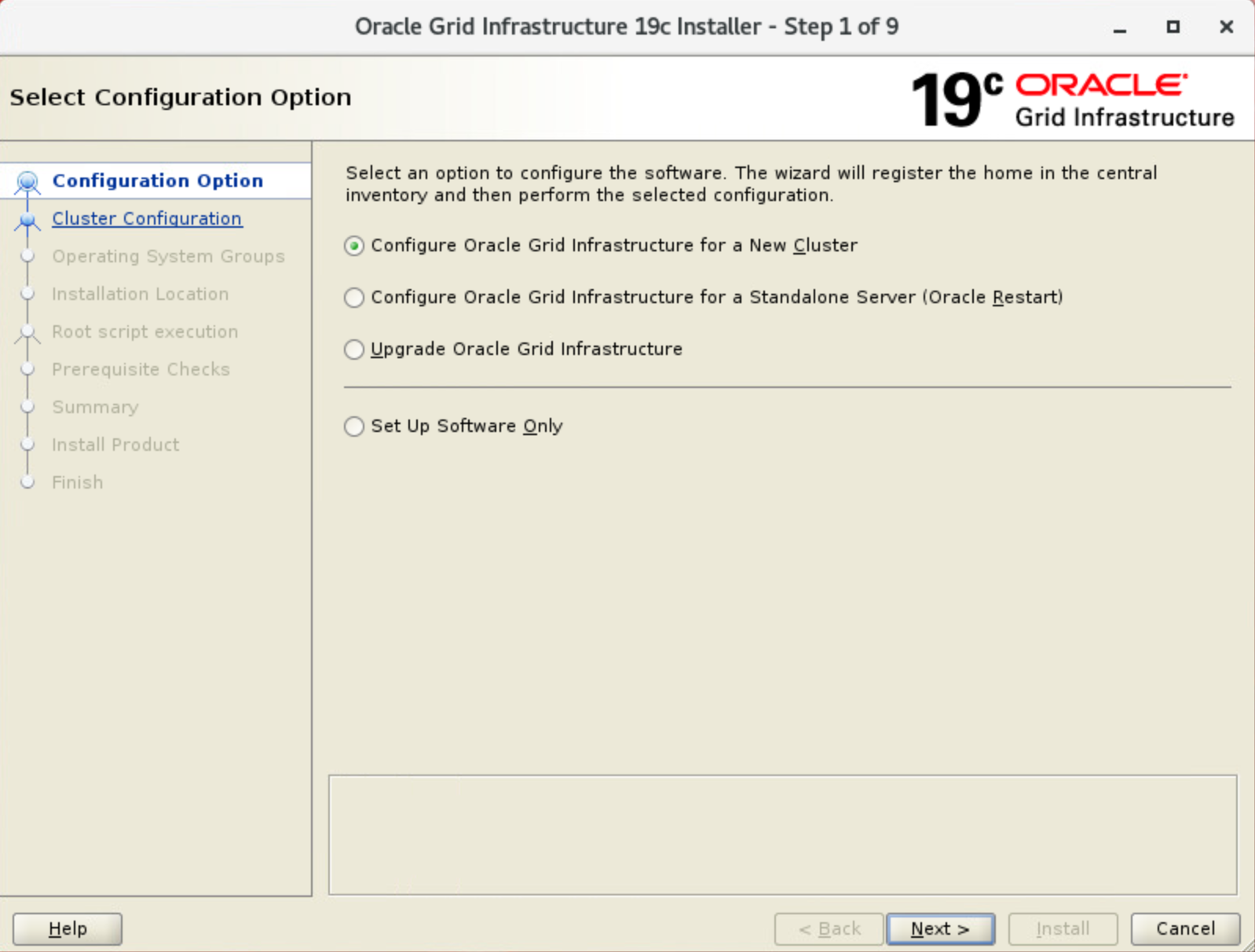

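● Launch the OUI installer

Start the Oracle Grid Infrastructure configuration wizard in GUI mode and configure the software binaries.

After the local node has been configured, OUI copies the Oracle Grid Infrastructure configuration files to the other cluster member nodes.

- Run gridSetup.sh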

[grid@rac19c-node1 ~]$ /u01/app/19.0.0/grid/gridSetup.sh

Select Configuration Option screen

Select [Configure Oracle Grid Infrastructure for a New Cluster] and click [Next]

-

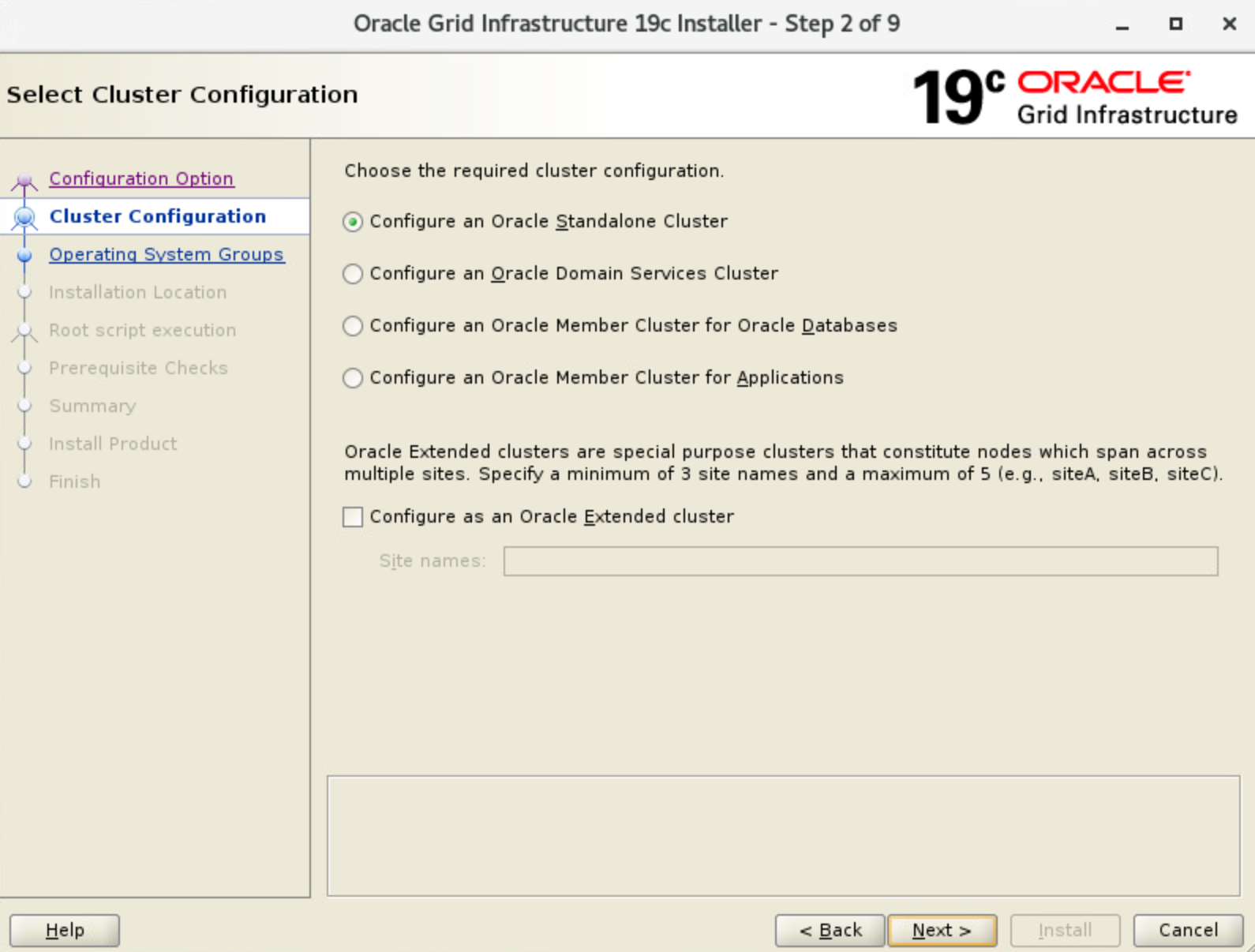

Select Cluster Configuration画面

[Configure Oracle Standalone Cluster] を選択し、[Next]をクリック

-

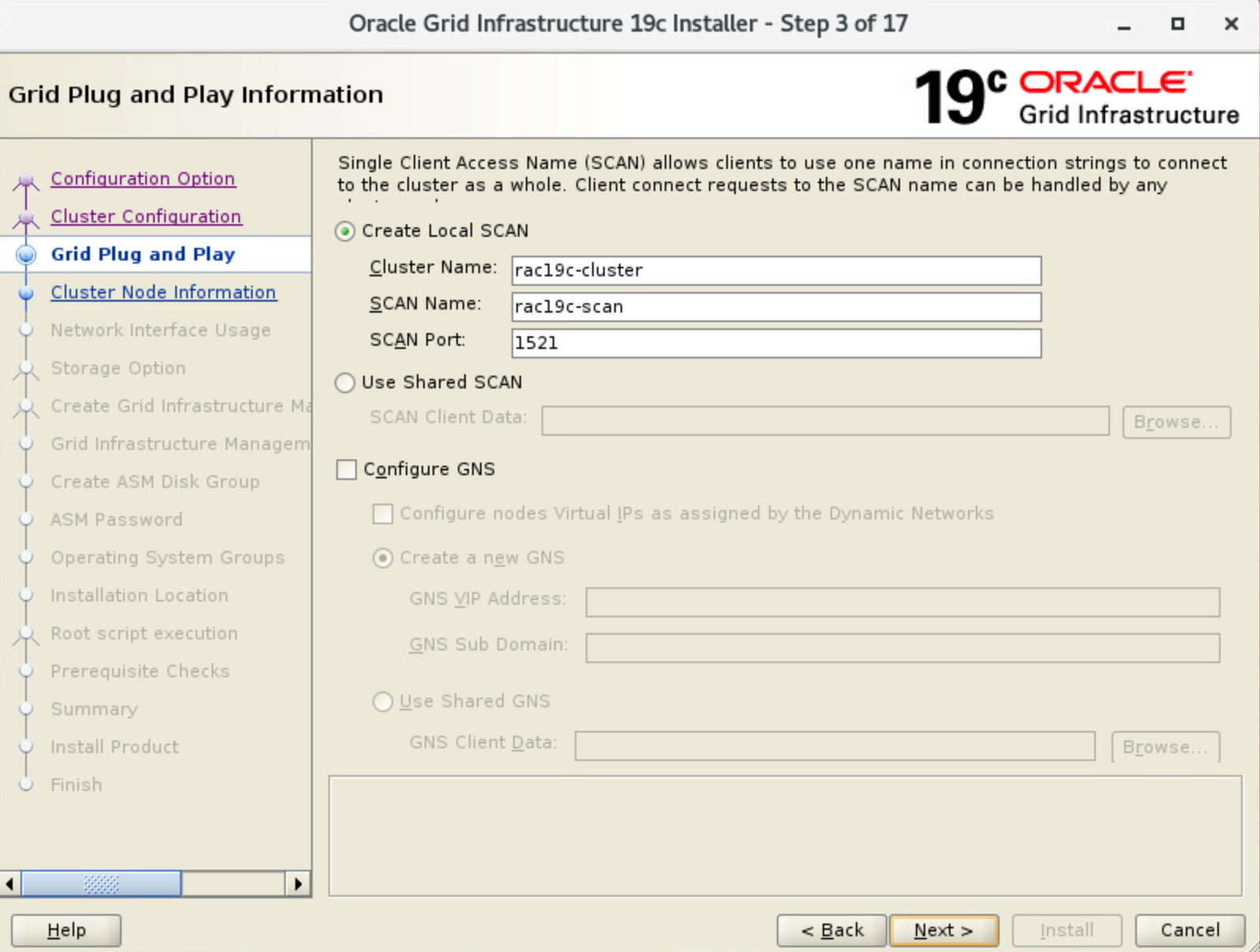

Grid Plug and Play Information画面

以下項目を選択し、[Next]をクリック・Cluster Name: Cluster名

・SCAN NAME: DNSで名前解決できるSCANホスト名

・SCAN Port: SCANリスーナーのPort番号

-

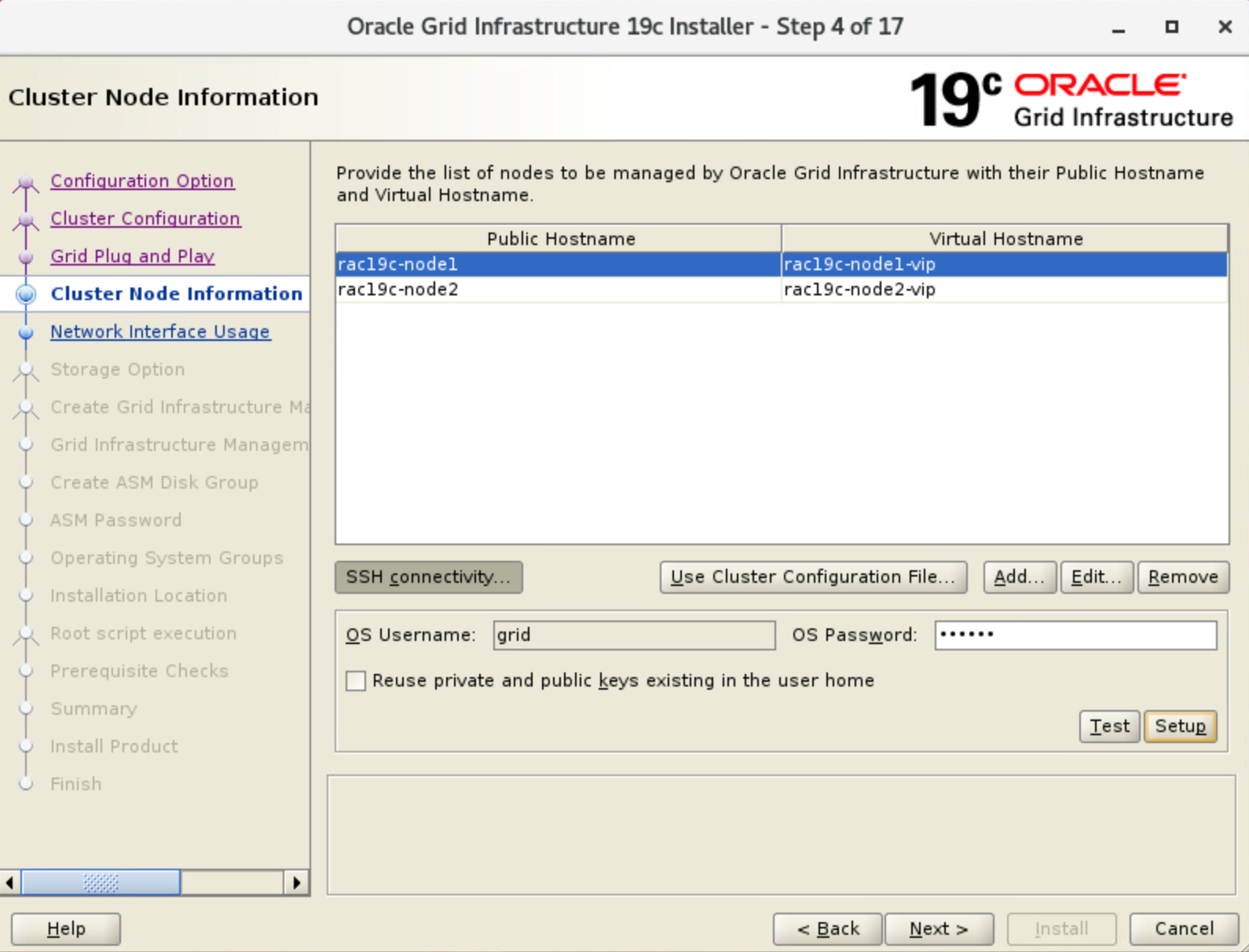

Cluster Node Information画面

[Add]をクリックしてインストールするノードを入力し、[SSH Connectivity]をクリックして、[Setup]を実行

gridユーザーでInstall全ノードどうしがパスワード無しssh鍵で接続できるよう自動設定してくれます

-

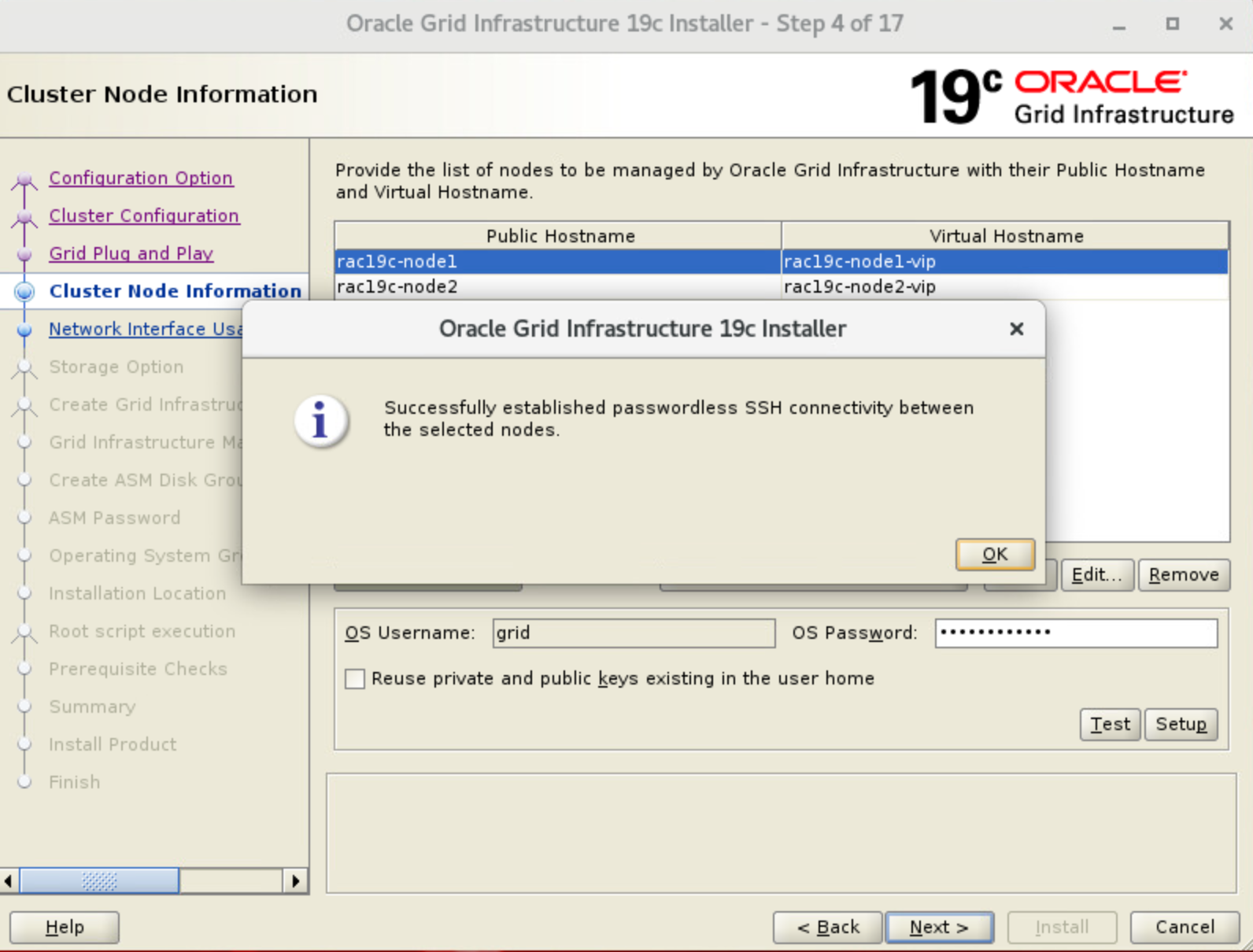

Successfullyプロンプト

[OK]をクリックし、[Next]をクリック

Successfullyしなかった場合は、sshで全ノードsshで接続できることを確認し、再実行します

Successfullyしたら、[Advanced Installation] を選択し、[Next]をクリック

-

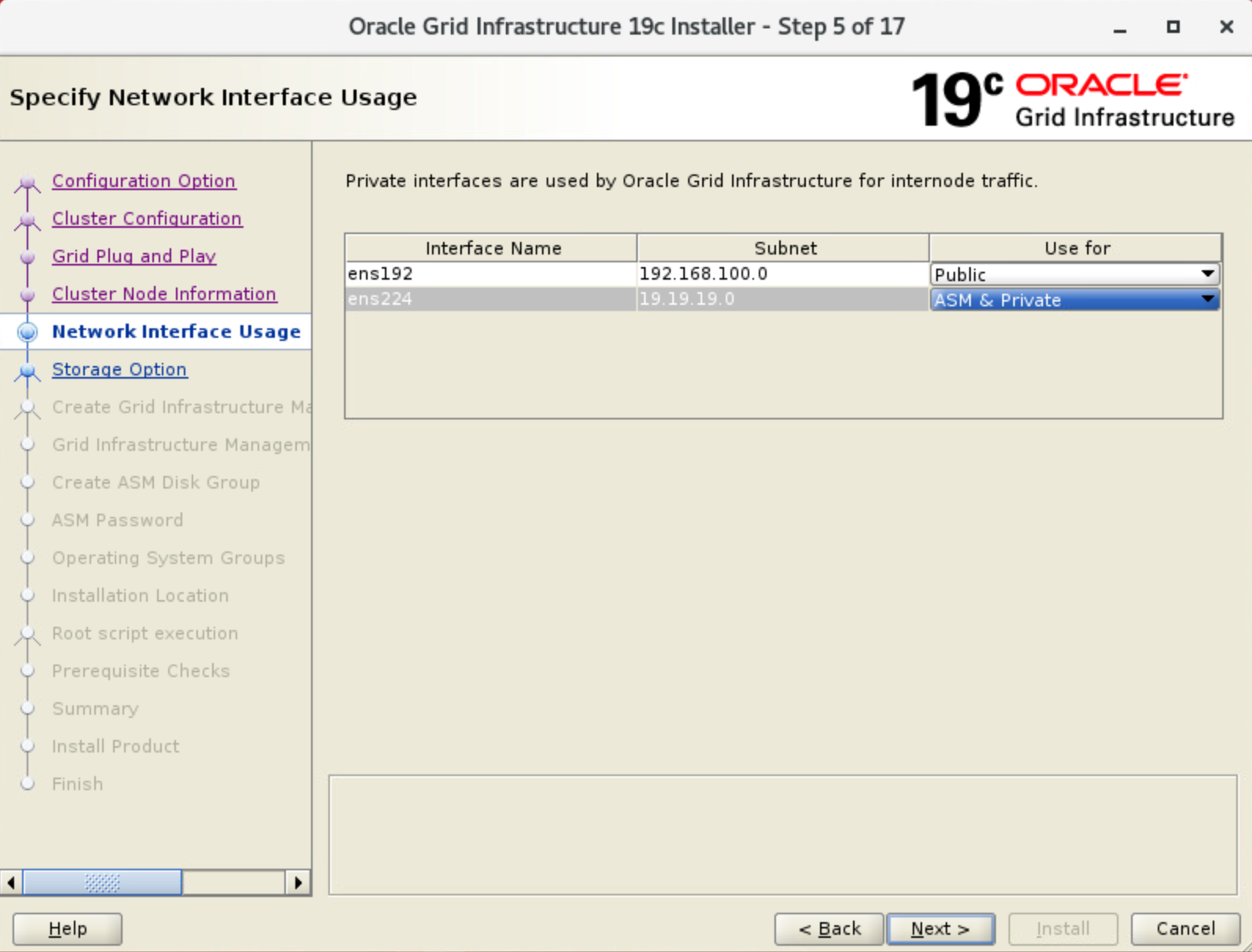

Specify Network Interface Usage画面

Interconnectは、ASMとPrivateを共有するので[ASM & Private]を選択

Interface NameとSubnet,Use for が正しいことを確認し、[Next]をクリックens192: Public

ens224: ASM & Private

-

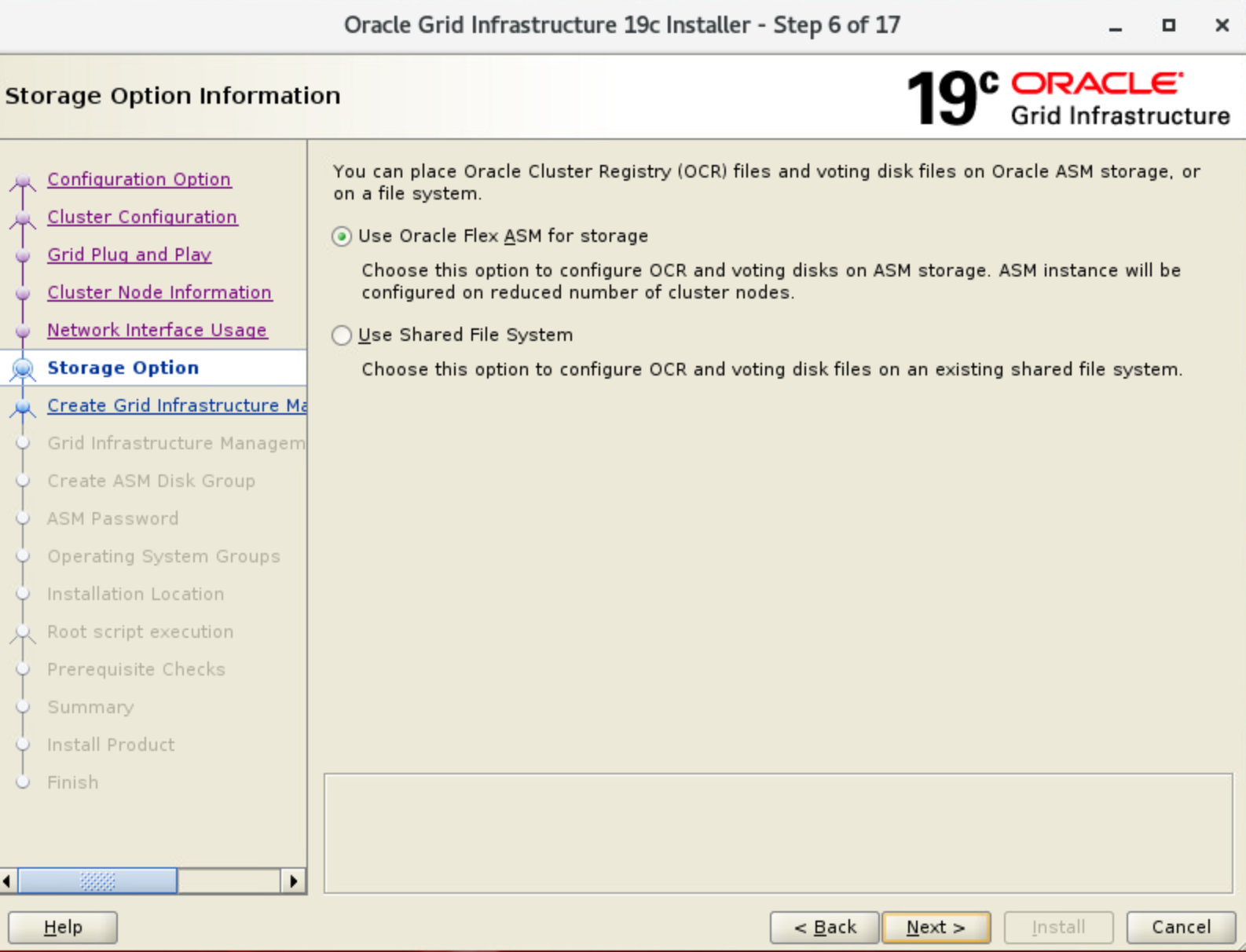

Storage OPtion Information画面

[Use Oracle Flex ASM for storage] を選択し、[Next]をクリック

-

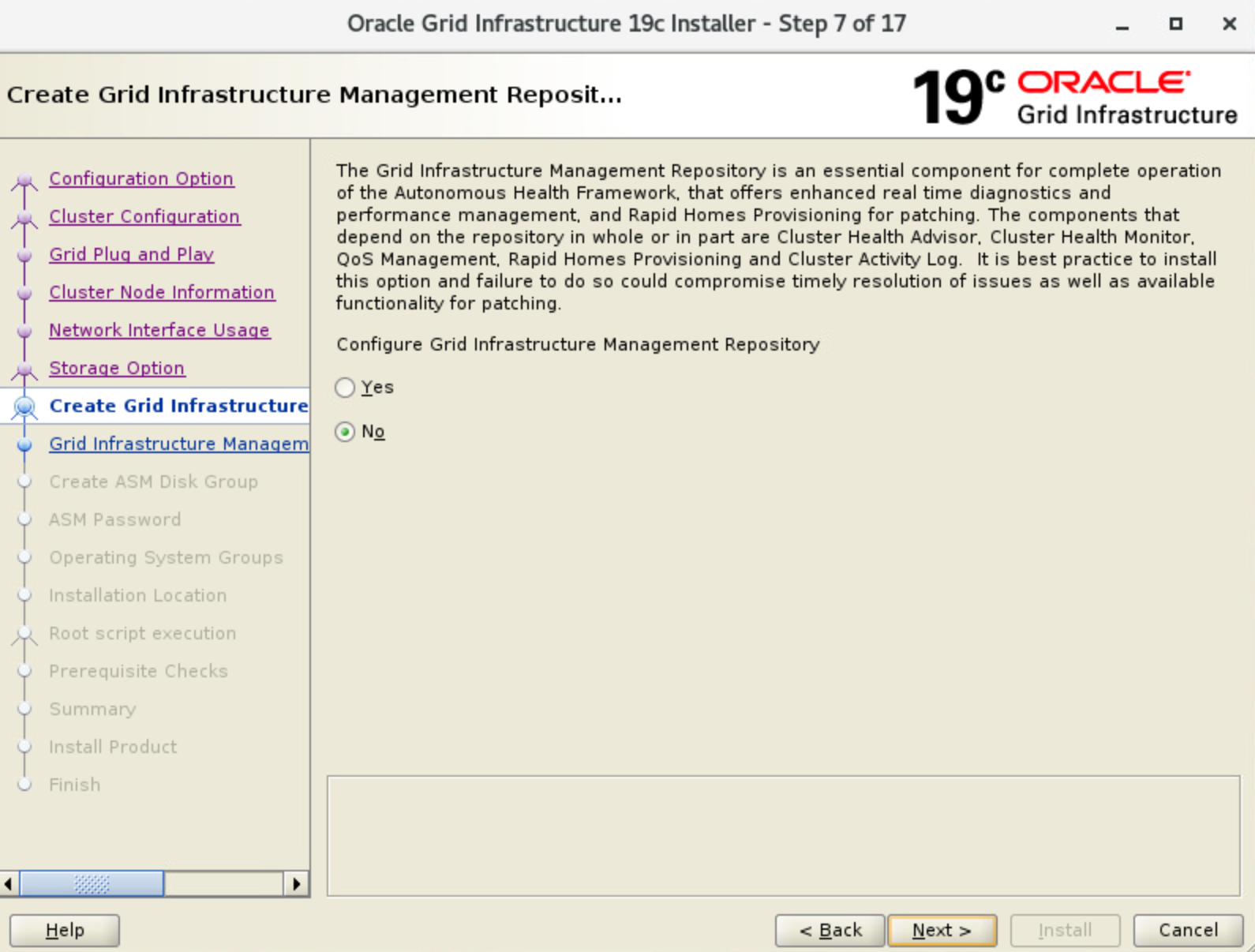

CreateCreate Grid Infrastructure Management Reposit...画面

今回、Grid Infrastructure Management Repository (GIMR)を使用しないため、[No] を選択し、[Next]をクリック

GIMRを使用する場合は、[Yes]を選択

-

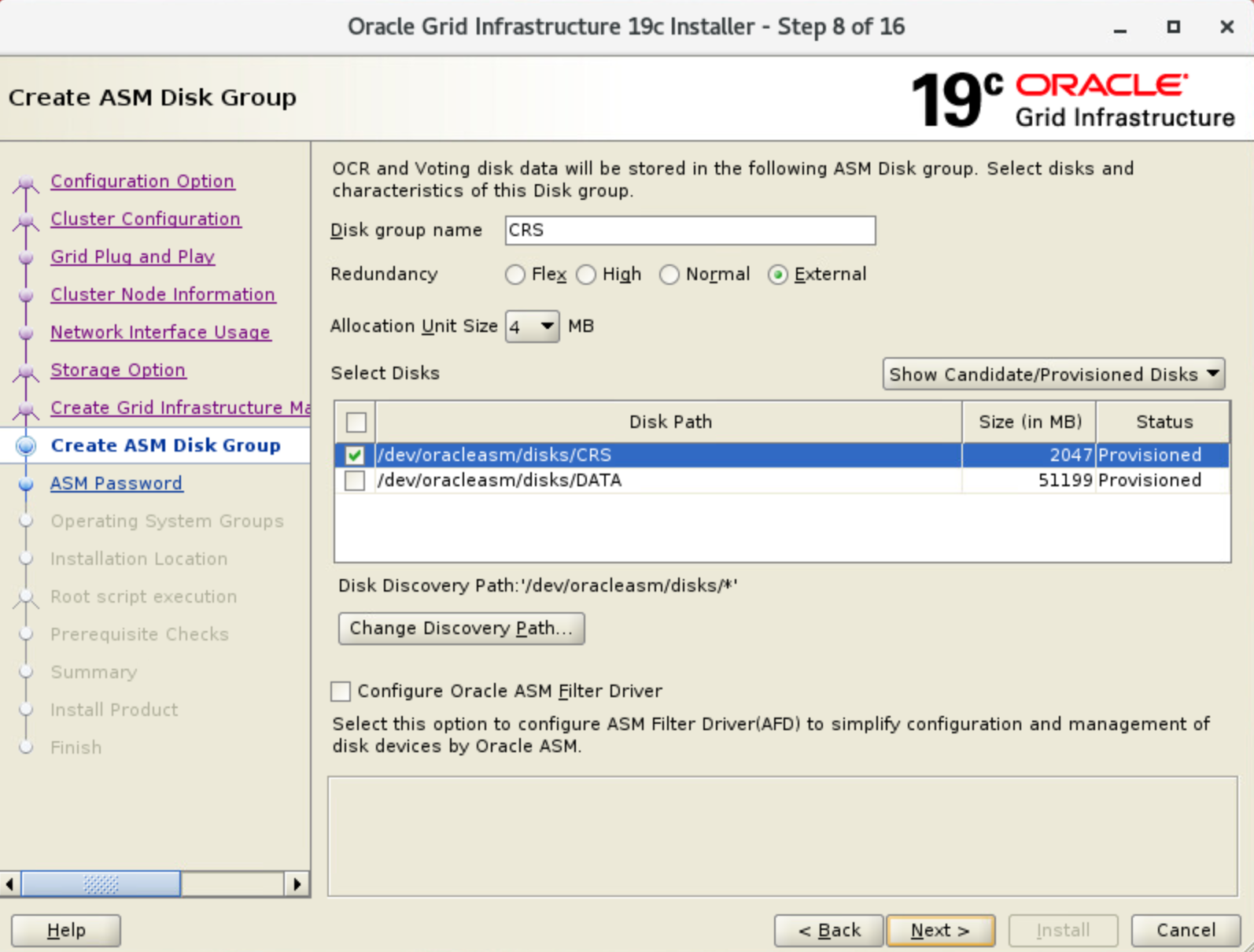

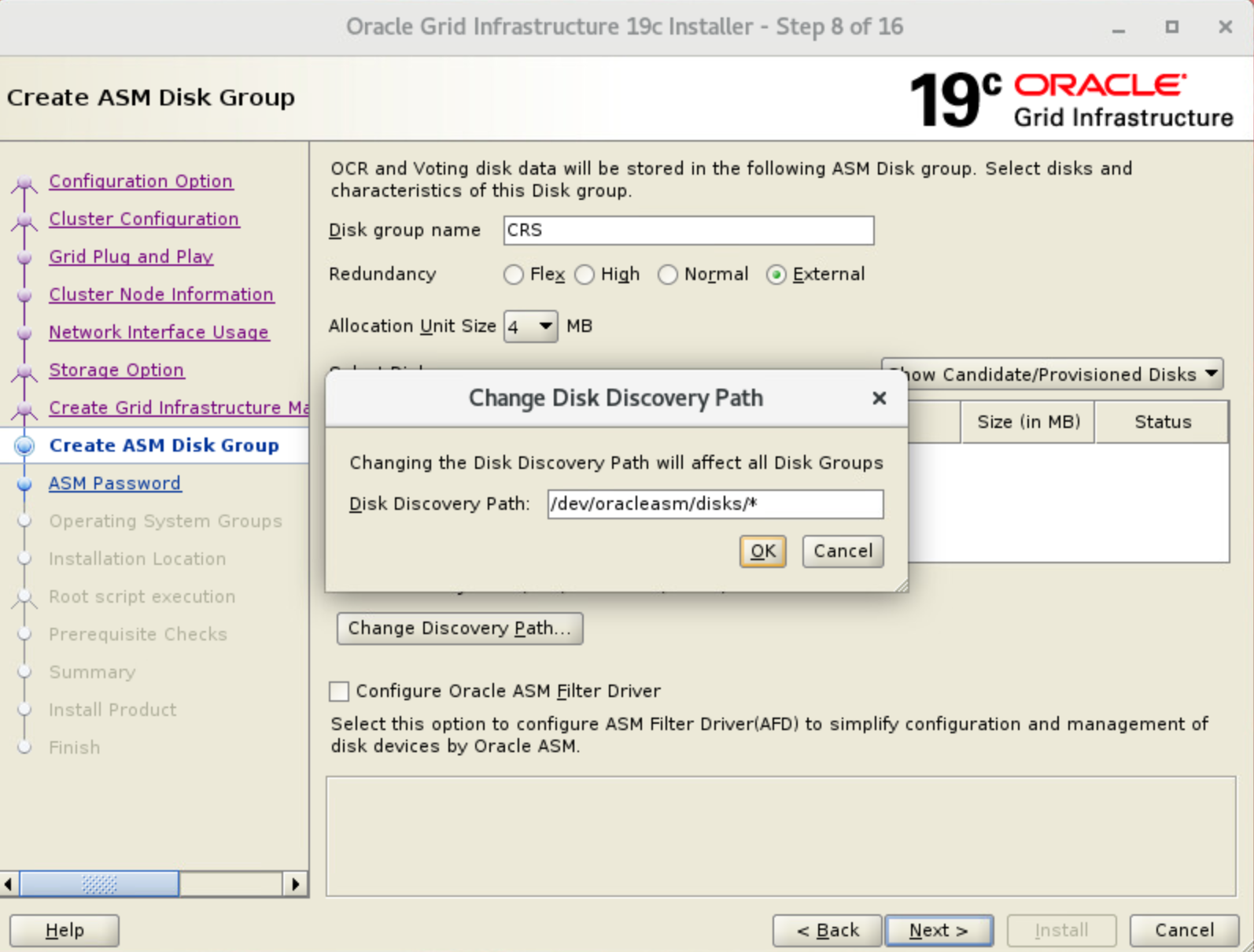

Create ASM DIsk Group画面

ASMLibを使用しているので、[Change Discovery Path]を選択し、

”/dev/oracleasm/disls/*”を入力し、[OK]をクリック

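Create ASM Disk Group screen

Enter the following items and click [Next]

・Disk Group Name: the CRS disk group name

・Redundancy: the redundancy level; here select External (no mirroring by ASM)

・AU Size: the allocation unit size

・Add Disks: check the disk to use for CRS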

-

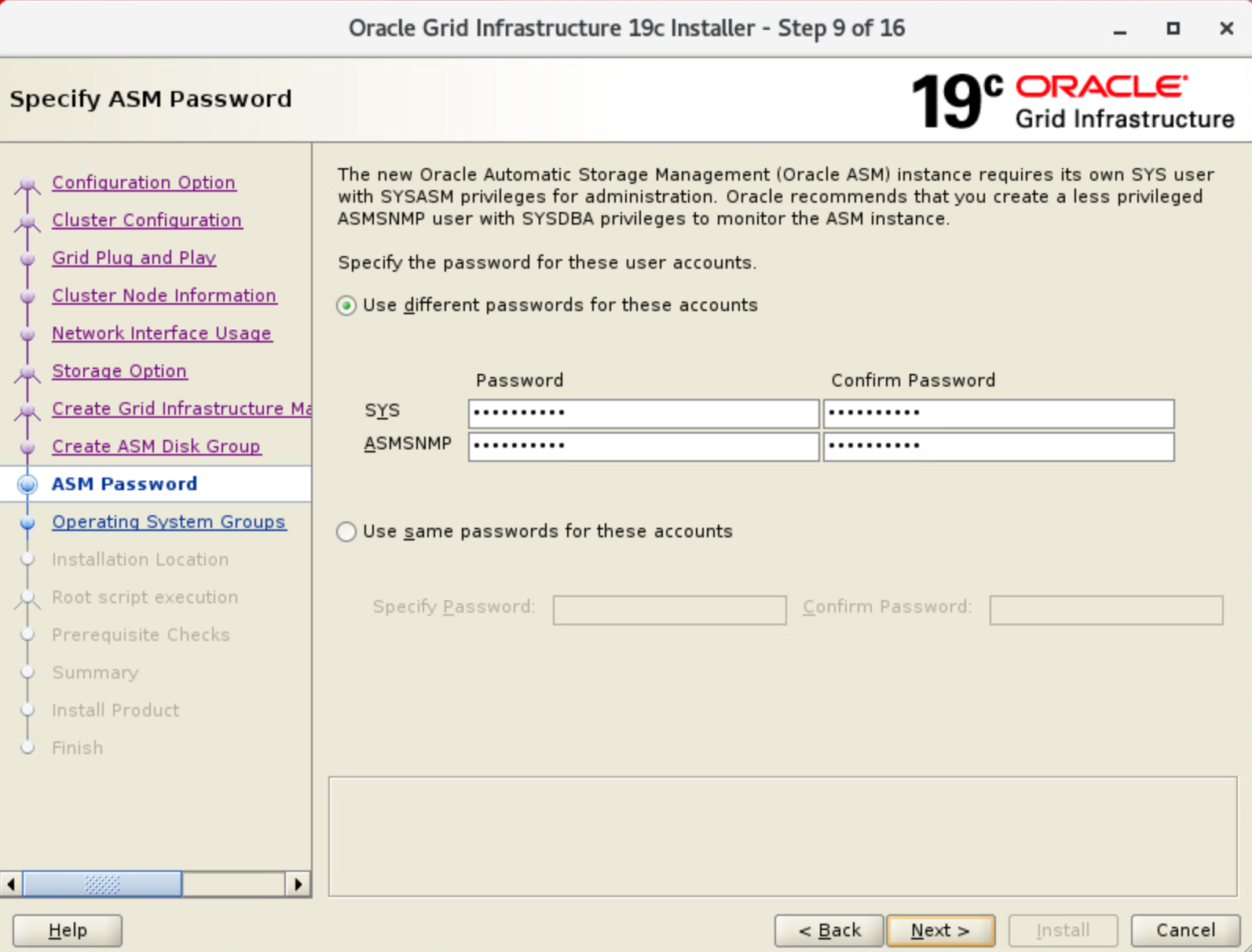

Specify ASM Password画面

SYS, ASMSNMPユーザーのパスワードを設定し、[Next]をクリック

-

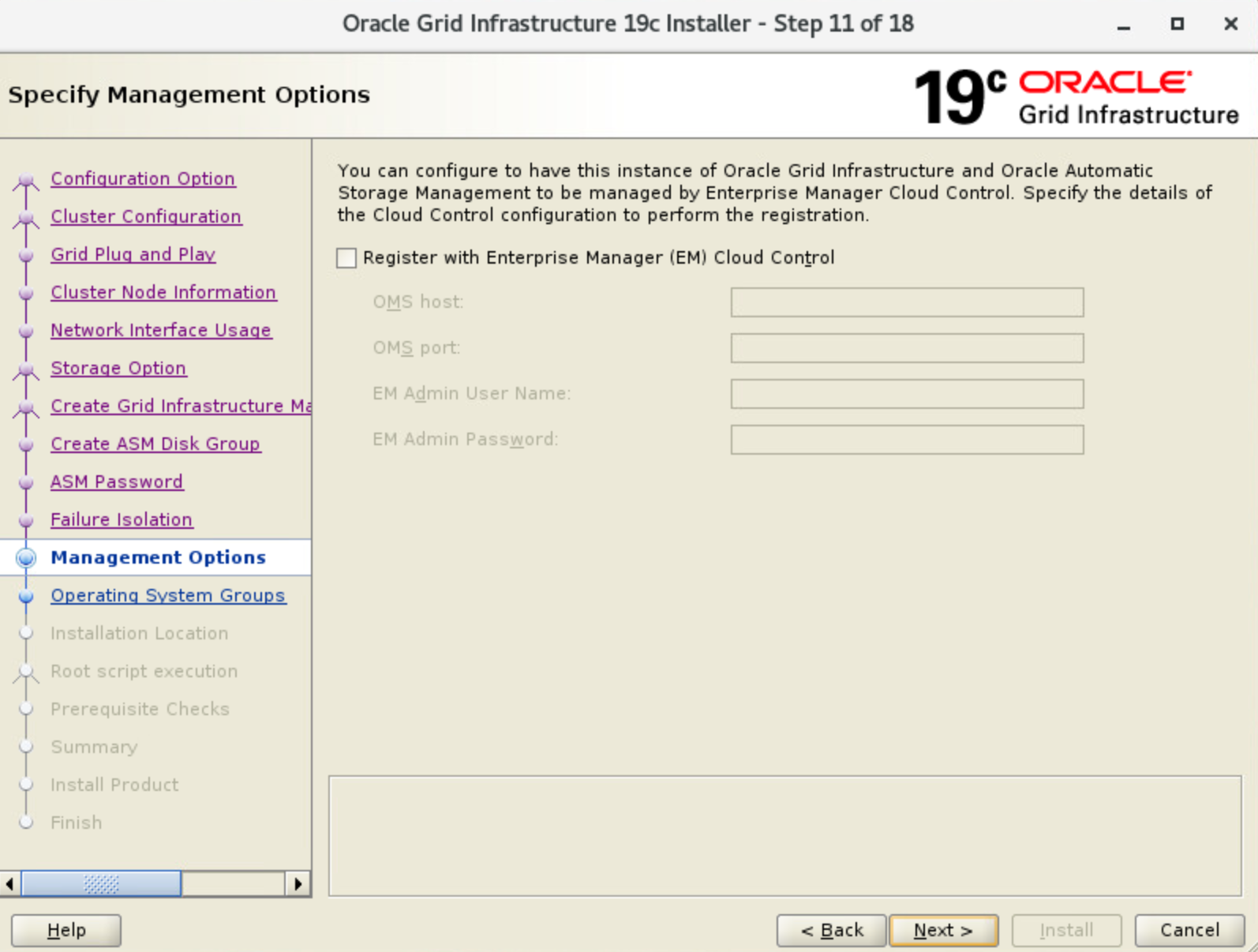

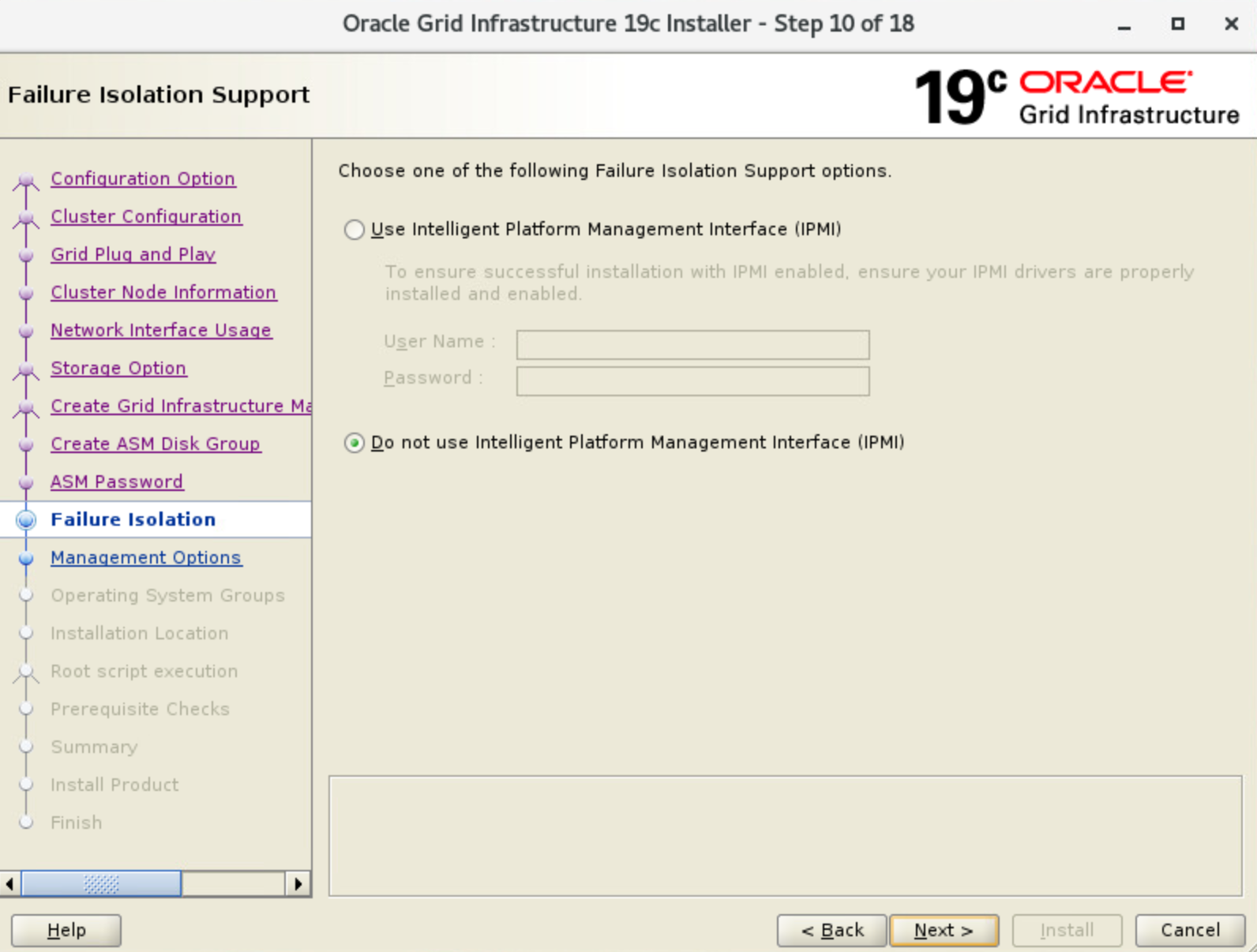

Failure Isolation Support画面

[Do not use IPMI] を選択し、[Next]をクリック

-

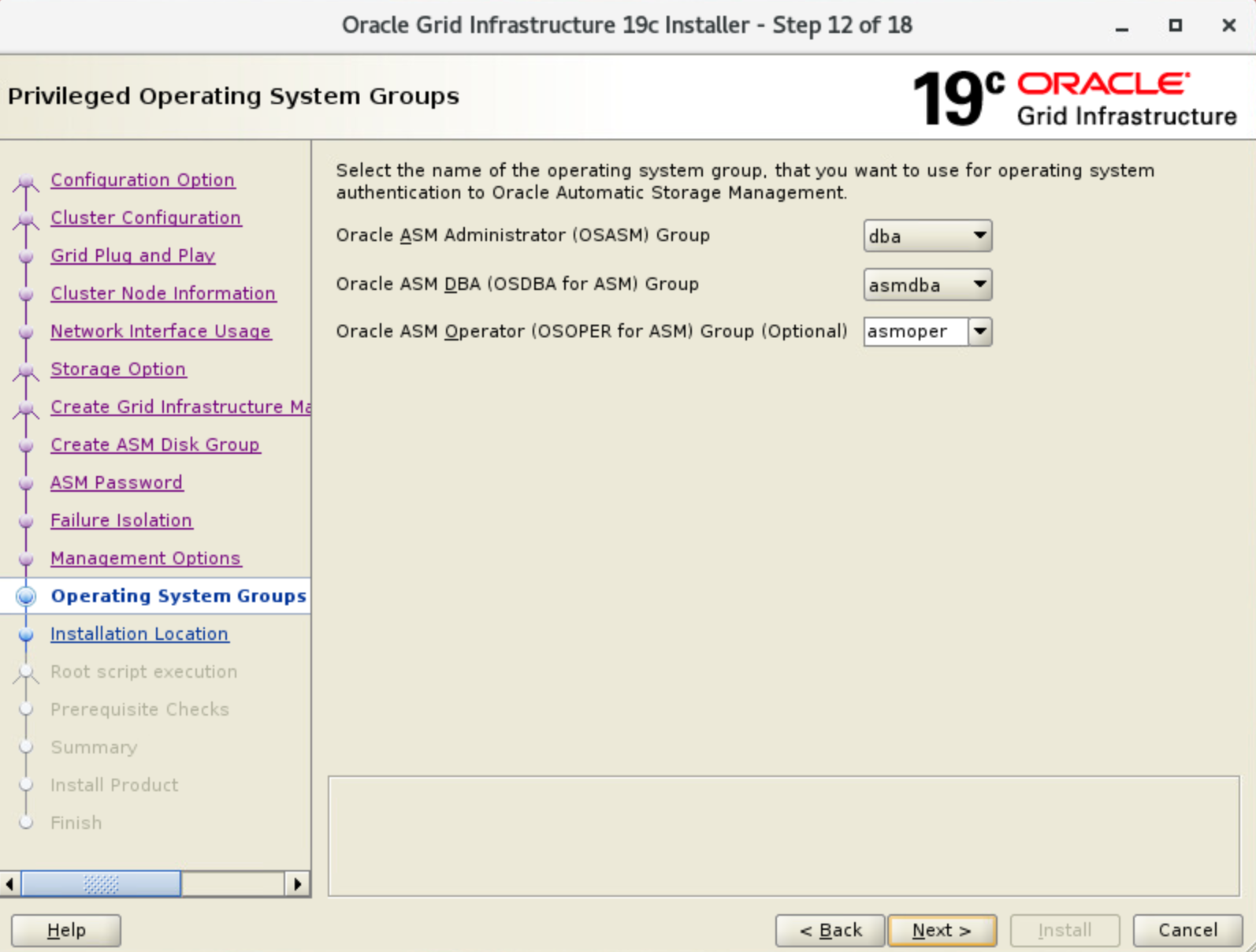

Privileged Operation System Groups画面

OSASM, OSDBA, OSPER各ASM用グループを設定し、[Next]をクリック

-

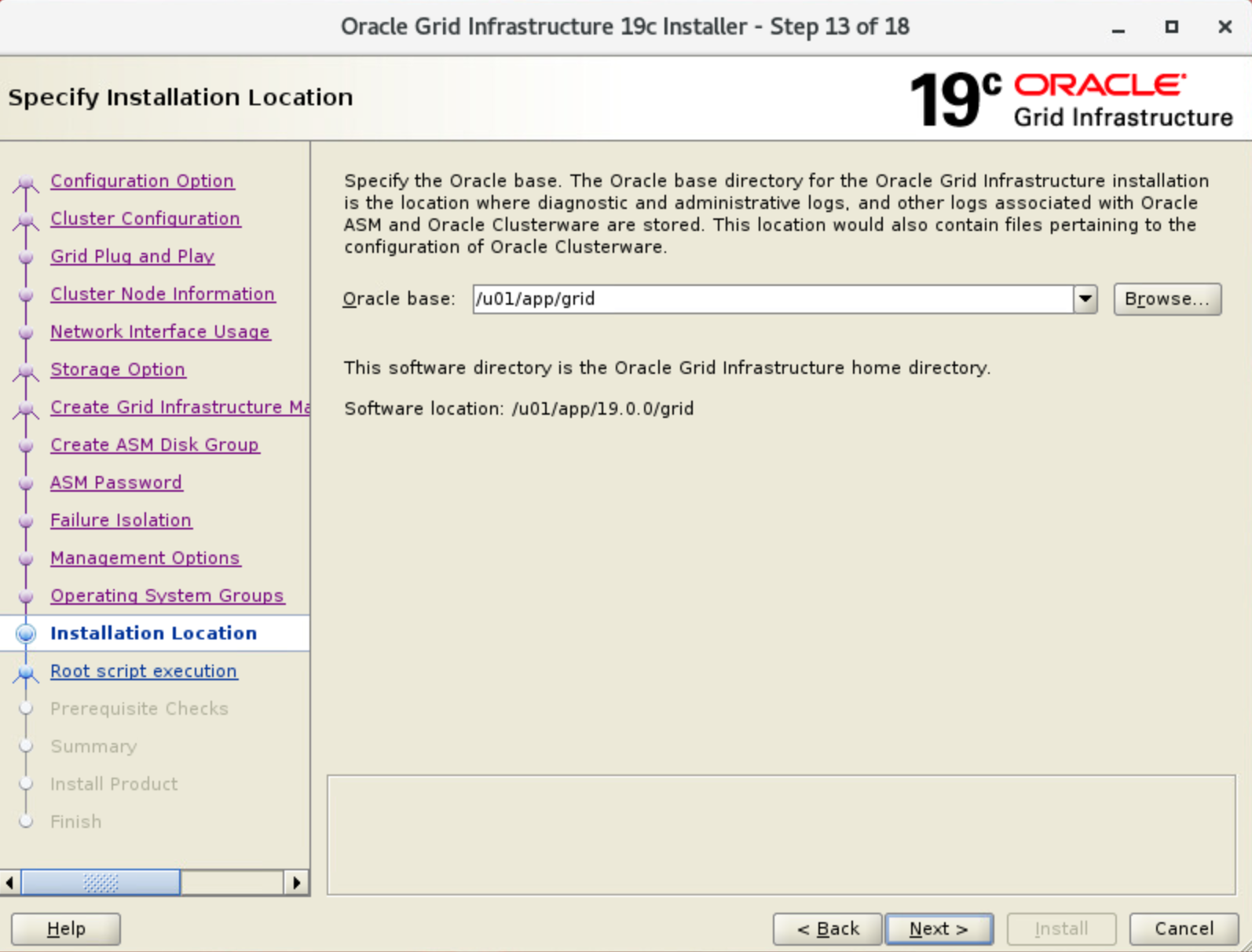

Specify Installation Location画面

以下項目Oracle Baseを確認し、[Next]をクリックOracle Base: ORACE_BASEパスが入力されていることを確認

-

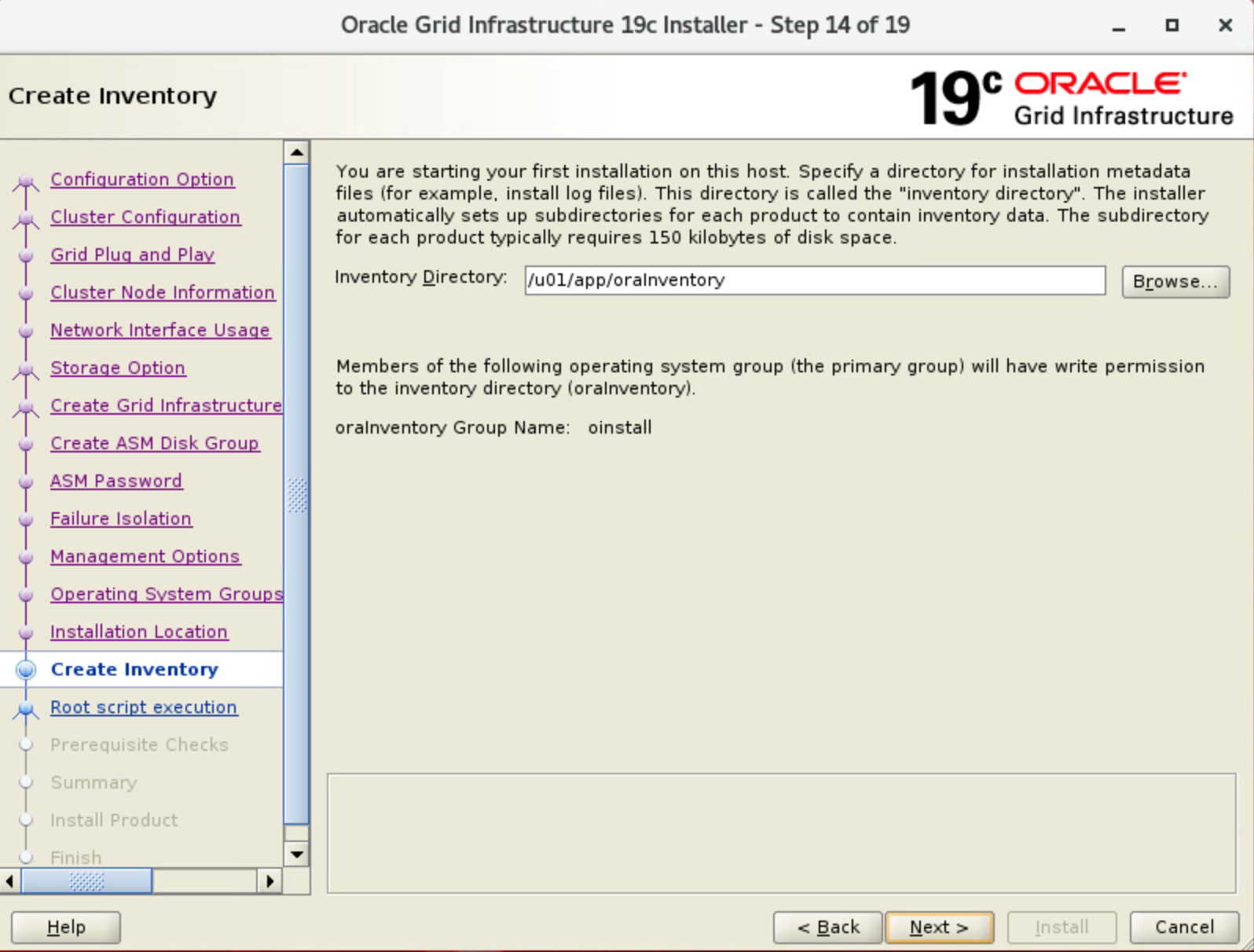

Create Inventory画面

[Inventory Directory] はデフォルト値のまま、[Next]をクリック

-

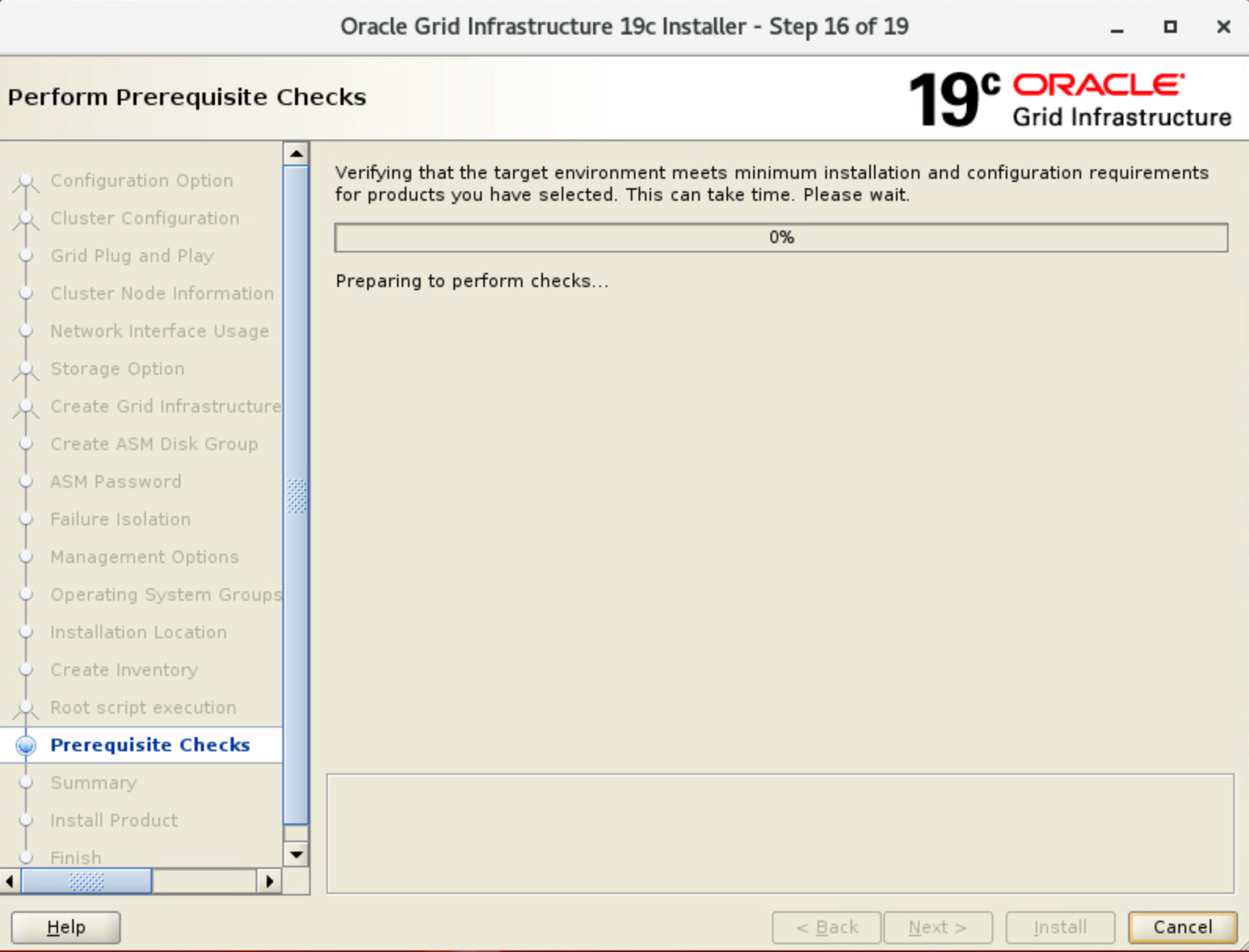

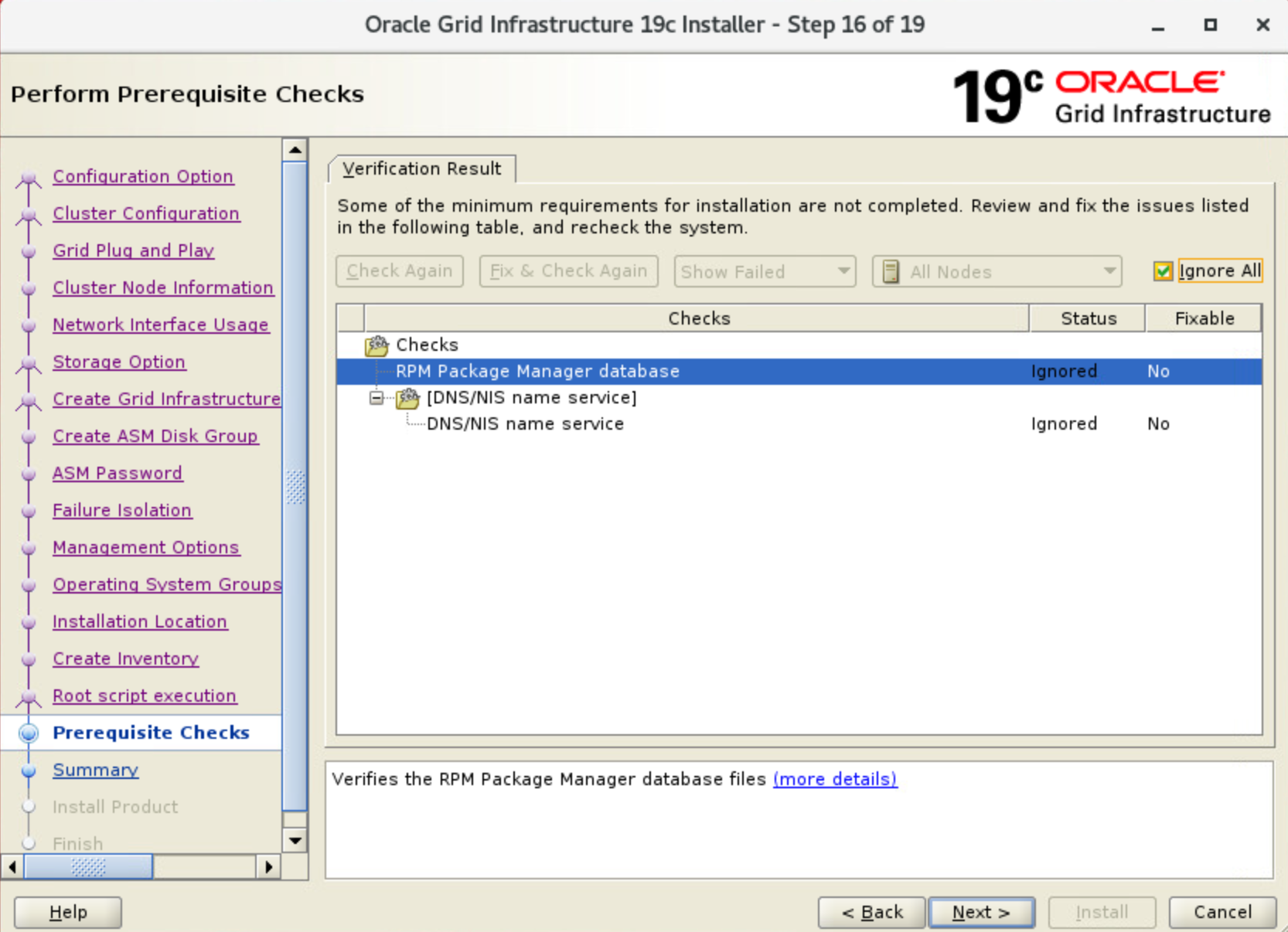

Perform Prerequisite CHecks画面

問題がないことを確認し、[Next]をクリック

今回は勉強用途で、SCAN用DNSサーバー未使用なので、[Ignore All]をチェック

-

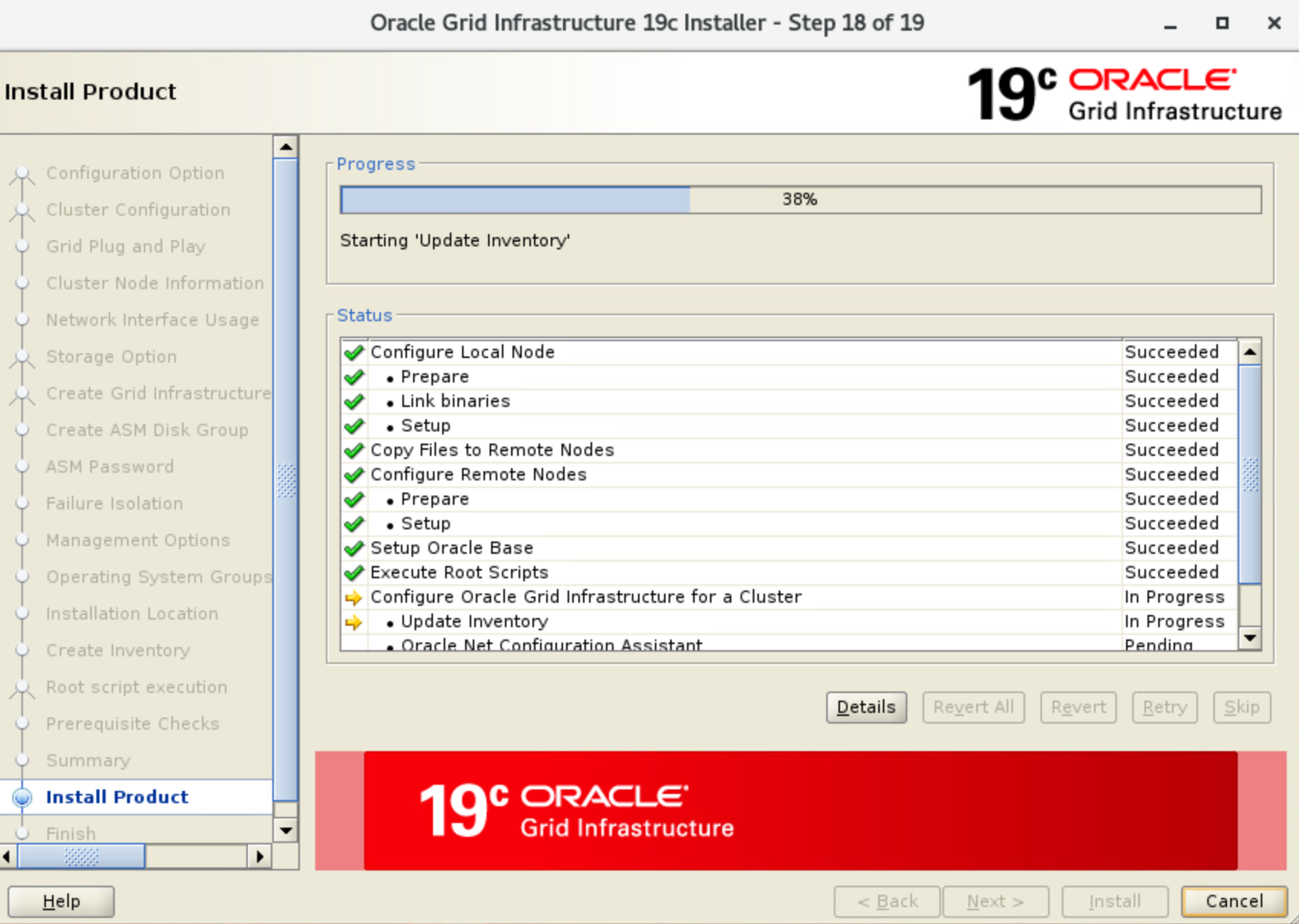

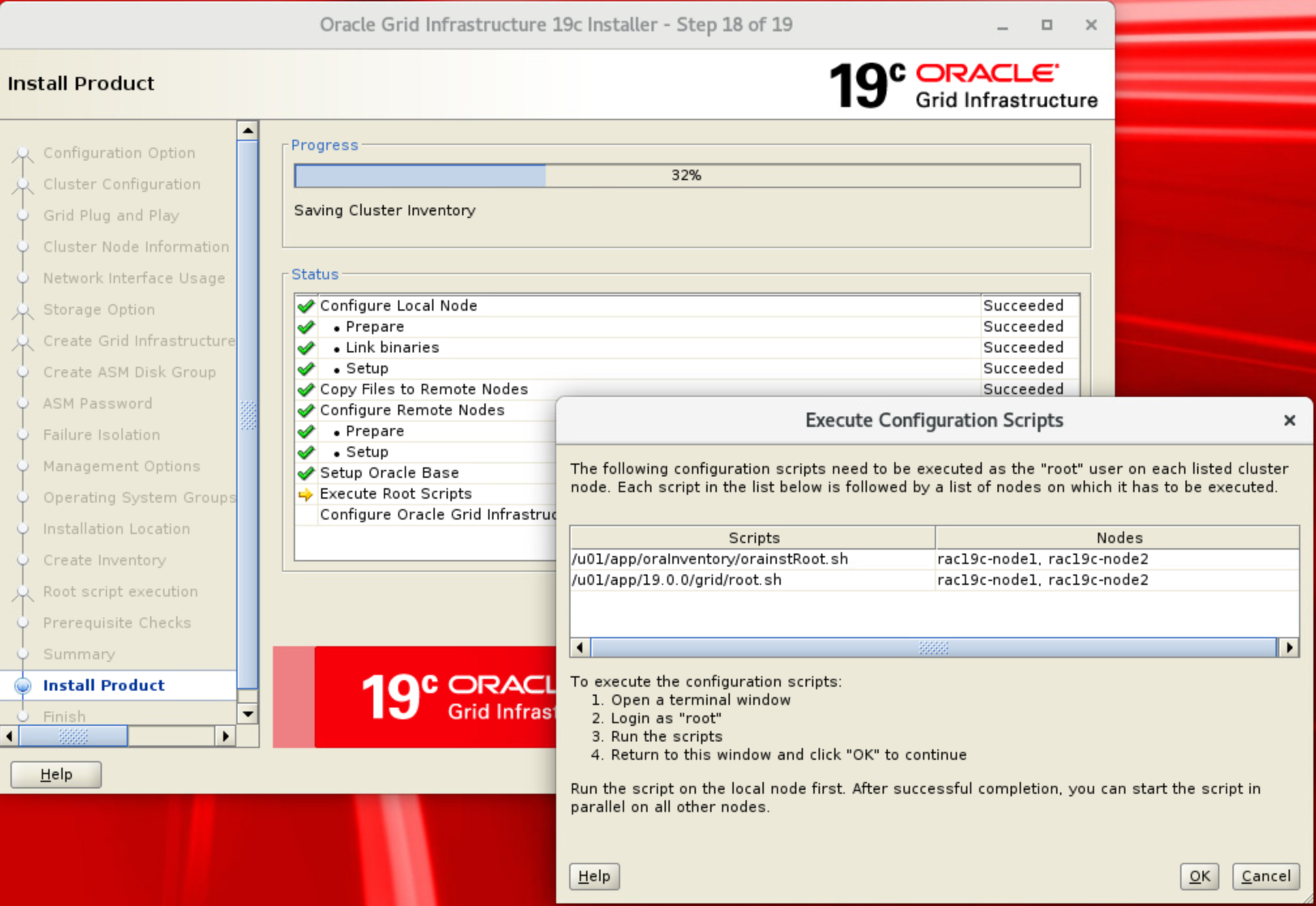

Execute Configuration scripts画面

orainstRoot.sh と root.shをまず、node1実行完了してから、node2実行

※同時実行禁止

● Run orainstRoot.sh

- Run on node 1

[root@rac19c-node1 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

- Run on node 2

[root@rac19c-node2 grid]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

● Run root.sh

- Run on node 1

[root@rac19c-node1 ~]# /u01/app/19.0.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/19.0.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/19.0.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/rac19c-node1/crsconfig/rootcrs_rac19c-node1_2020-11-26_01-39-36AM.log

```
2020/11/26 01:39:44 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.
2020/11/26 01:39:44 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.
2020/11/26 01:39:44 CLSRSC-363: User ignored prerequisites during installation
2020/11/26 01:39:45 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.
2020/11/26 01:39:46 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.
2020/11/26 01:39:46 CLSRSC-594: Executing installation step 5 of 19: 'SetupOSD'.
2020/11/26 01:39:46 CLSRSC-594: Executing installation step 6 of 19: 'CheckCRSConfig'.
2020/11/26 01:39:47 CLSRSC-594: Executing installation step 7 of 19: 'SetupLocalGPNP'.
2020/11/26 01:40:04 CLSRSC-594: Executing installation step 8 of 19: 'CreateRootCert'.
2020/11/26 01:40:10 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.
2020/11/26 01:40:13 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.
2020/11/26 01:40:24 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.
2020/11/26 01:40:24 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.
2020/11/26 01:40:28 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.
2020/11/26 01:40:28 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.service'
2020/11/26 01:40:47 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.
2020/11/26 01:40:51 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.
2020/11/26 01:40:55 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.
2020/11/26 01:40:59 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.
ASM has been created and started successfully.
[DBT-30001] Disk groups created successfully. Check /u01/app/grid/cfgtoollogs/asmca/asmca-201126AM014128.log for details.
2020/11/26 01:42:23 CLSRSC-482: Running command: '/u01/app/19.0.0/grid/bin/ocrconfig -upgrade grid oinstall'
CRS-4256: Updating the profile
Successful addition of voting disk b54055b7a6754f5cbf5118b761be11e2.
Successfully replaced voting disk group with +CRS.
CRS-4256: Updating the profile
CRS-4266: Voting file(s) successfully replaced
##  STATE    File Universal Id                File Name Disk group
--  -----    -----------------                --------- ---------
 1. ONLINE   b54055b7a6754f5cbf5118b761be11e2 (/dev/oracleasm/disks/CRS) [CRS]
Located 1 voting disk(s).
2020/11/26 01:43:36 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.
2020/11/26 01:44:37 CLSRSC-343: Successfully started Oracle Clusterware stack
2020/11/26 01:44:37 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.
2020/11/26 01:45:47 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.
2020/11/26 01:46:07 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded
```

- Run on Node2

```
[root@rac19c-node2 grid]# /u01/app/19.0.0/grid/root.sh
Performing root user operation.
The following environment variables are set as:
ORACLE_OWNER= grid
ORACLE_HOME= /u01/app/19.0.0/grid
Enter the full pathname of the local bin directory: [/usr/local/bin]:
Copying dbhome to /usr/local/bin ...
Copying oraenv to /usr/local/bin ...
Copying coraenv to /usr/local/bin ...
Creating /etc/oratab file...
Entries will be added to the /etc/oratab file as needed by
Database Configuration Assistant when a database is created
Finished running generic part of root script.
Now product-specific root actions will be performed.
Relinking oracle with rac_on option
Using configuration parameter file: /u01/app/19.0.0/grid/crs/install/crsconfig_params
The log of current session can be found at:
/u01/app/grid/crsdata/rac19c-node2/crsconfig/rootcrs_rac19c-node2_2020-11-26_01-51-08AM.log
2020/11/26 01:51:11 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.
2020/11/26 01:51:11 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.
2020/11/26 01:51:11 CLSRSC-363: User ignored prerequisites during installation
2020/11/26 01:51:11 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.
2020/11/26 01:51:12 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.
2020/11/26 01:51:12 CLSRSC-594: Executing installation step 5 of 19: 'SetupOSD'.
2020/11/26 01:51:12 CLSRSC-594: Executing installation step 6 of 19: 'CheckCRSConfig'.
2020/11/26 01:51:13 CLSRSC-594: Executing installation step 7 of 19: 'SetupLocalGPNP'.
2020/11/26 01:51:13 CLSRSC-594: Executing installation step 8 of 19: 'CreateRootCert'.
2020/11/26 01:51:14 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.
2020/11/26 01:51:21 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.
2020/11/26 01:51:21 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.
2020/11/26 01:51:22 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.
2020/11/26 01:51:22 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.service'
2020/11/26 01:51:34 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.
2020/11/26 01:51:48 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.
2020/11/26 01:51:48 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.
2020/11/26 01:51:49 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.
2020/11/26 01:51:50 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.
2020/11/26 01:51:59 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.
2020/11/26 01:52:42 CLSRSC-343: Successfully started Oracle Clusterware stack
2020/11/26 01:52:42 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.
2020/11/26 01:52:53 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.
2020/11/26 01:52:57 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded
```
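Both root.sh runs above end with step 19 of 19 followed by `CLSRSC-325 ... succeeded`. That check can be scripted so it is not missed in a long log. The function below is a minimal sketch, assuming text containing the CLSRSC-594 and CLSRSC-325 messages shown above is read on stdin; `rootsh_status` is a hypothetical helper, not an Oracle-supplied tool.

```shell
#!/bin/sh
# rootsh_status (hypothetical helper): read root.sh output on stdin and print
# the last "installation step N of M" that was started and whether the final
# CLSRSC-325 "... succeeded" message was seen.
rootsh_status() {
  awk '
    /CLSRSC-594: Executing installation step/ {
      # Fields look like: ... step 19 of 19: <name>.
      for (f = 1; f <= NF; f++) {
        if ($f == "step") {
          step = $(f + 1)
          total = $(f + 3)
          sub(/:$/, "", total)   # "19:" -> "19"
        }
      }
    }
    /CLSRSC-325: .*succeeded/ { ok = 1 }
    END {
      printf "last step: %s/%s, %s\n", step, total, (ok ? "succeeded" : "NOT confirmed")
    }'
}
```

For example, on node 2 one could run `rootsh_status < /u01/app/grid/crsdata/rac19c-node2/crsconfig/rootcrs_rac19c-node2_2020-11-26_01-51-08AM.log`, assuming that log records the same CLSRSC messages as the console output.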

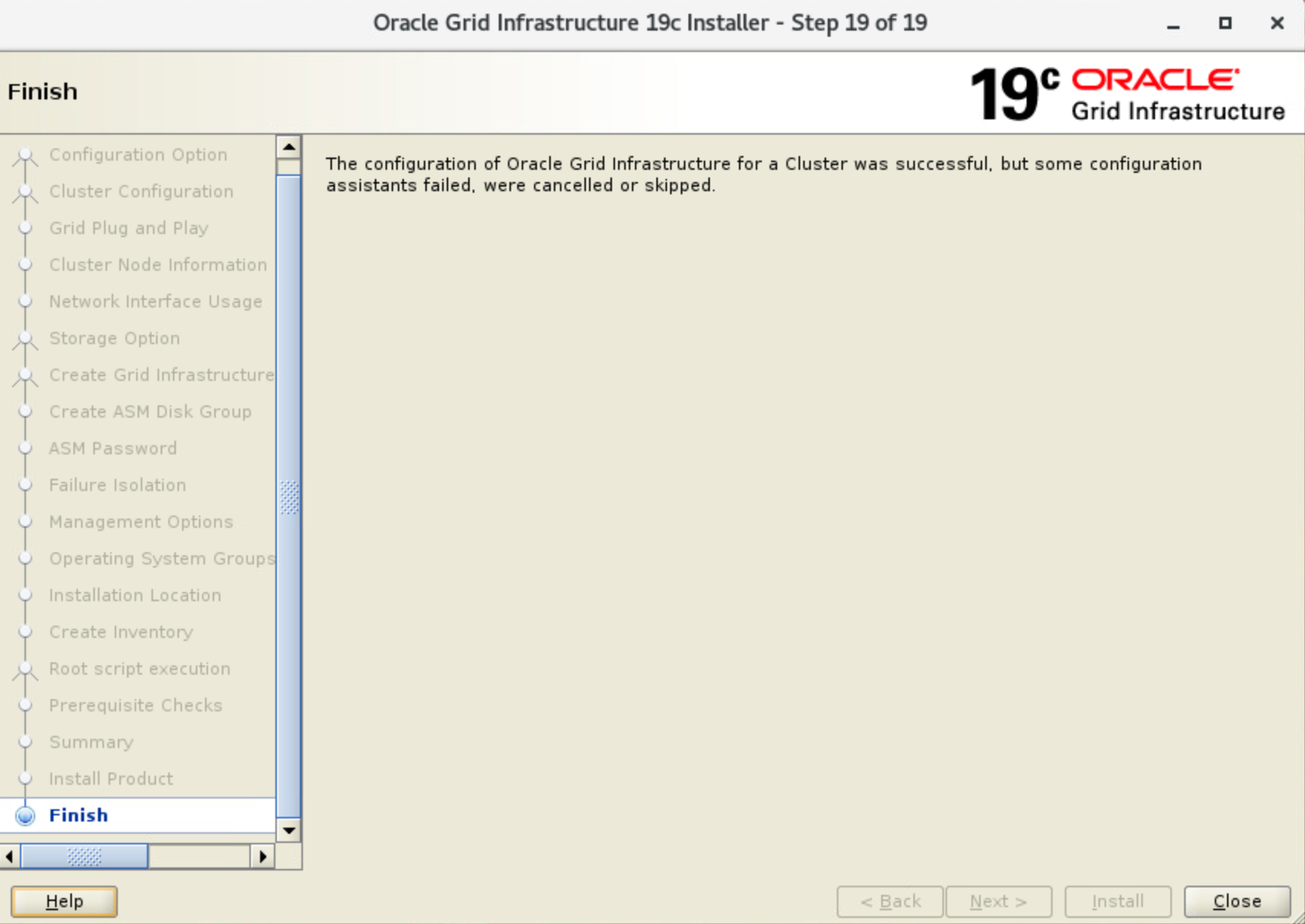

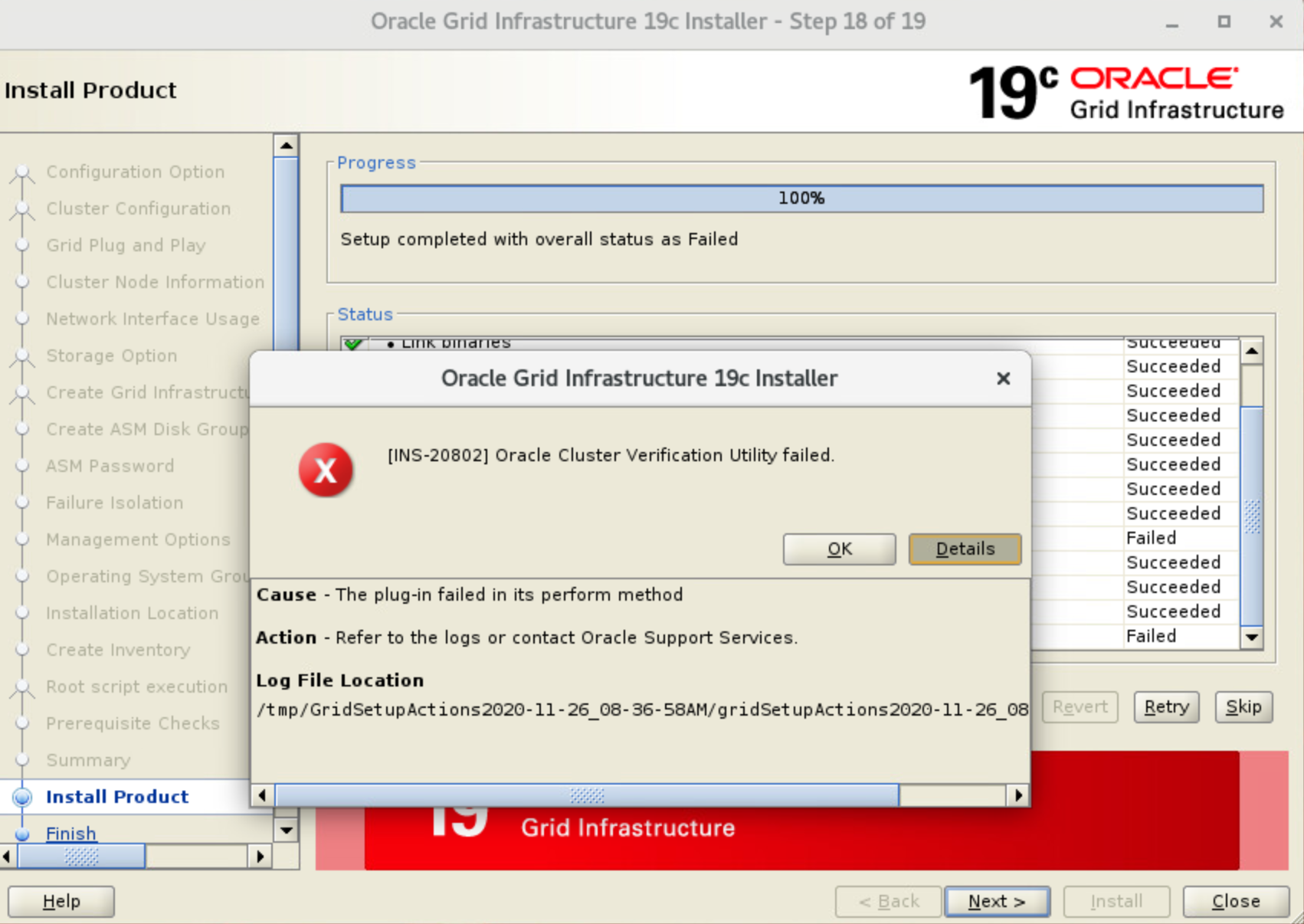

In this case, the log showed that the error was caused by the SCAN host name not being resolved through DNS, so I ignored it, clicked [OK], and continued.

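● Post-installation check with CVU (runcluvfy.sh)

The command below runs the pre-installation stage (`stage -pre crsinst`) against the cluster that has just been configured, so the two failures reported at the end are expected here: PRVG-11750 points at a socket file under `/var/tmp/.oracle` that the running Clusterware stack itself created, and PRVG-11931 reports that the `grid` user can no longer write to the Grid home, whose ownership root.sh changes to root. CVU also provides a post-installation stage, `stage -post crsinst`.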

```
[grid@rac19c-node1 ~]$ cd /u01/app/19.0.0/grid
[grid@rac19c-node1 grid]$ ./runcluvfy.sh stage -pre crsinst -n rac19c-node1,rac19c-node2 -method root
Enter "ROOT" password:

Verifying Physical Memory ...PASSED
Verifying Available Physical Memory ...PASSED
Verifying Swap Size ...PASSED
Verifying Free Space: rac19c-node1:/usr,rac19c-node1:/var,rac19c-node1:/etc,rac19c-node1:/u01/app/19.0.0/grid,rac19c-node1:/sbin,rac19c-node1:/tmp ...PASSED
Verifying Free Space: rac19c-node2:/usr,rac19c-node2:/var,rac19c-node2:/etc,rac19c-node2:/u01/app/19.0.0/grid,rac19c-node2:/sbin,rac19c-node2:/tmp ...PASSED
Verifying User Existence: grid ...
  Verifying Users With Same UID: 54331 ...PASSED
Verifying User Existence: grid ...PASSED
Verifying Group Existence: asmadmin ...PASSED
Verifying Group Existence: asmdba ...PASSED
Verifying Group Existence: oinstall ...PASSED
Verifying Group Membership: asmdba ...PASSED
Verifying Group Membership: asmadmin ...PASSED
Verifying Group Membership: oinstall(Primary) ...PASSED
Verifying Run Level ...PASSED
Verifying Hard Limit: maximum open file descriptors ...PASSED
Verifying Soft Limit: maximum open file descriptors ...PASSED
Verifying Hard Limit: maximum user processes ...PASSED
Verifying Soft Limit: maximum user processes ...PASSED
Verifying Soft Limit: maximum stack size ...PASSED
Verifying Architecture ...PASSED
Verifying OS Kernel Version ...PASSED
Verifying OS Kernel Parameter: semmsl ...PASSED
Verifying OS Kernel Parameter: semmns ...PASSED
Verifying OS Kernel Parameter: semopm ...PASSED
Verifying OS Kernel Parameter: semmni ...PASSED
Verifying OS Kernel Parameter: shmmax ...PASSED
Verifying OS Kernel Parameter: shmmni ...PASSED
Verifying OS Kernel Parameter: shmall ...PASSED
Verifying OS Kernel Parameter: file-max ...PASSED
Verifying OS Kernel Parameter: ip_local_port_range ...PASSED
Verifying OS Kernel Parameter: rmem_default ...PASSED
Verifying OS Kernel Parameter: rmem_max ...PASSED
Verifying OS Kernel Parameter: wmem_default ...PASSED
Verifying OS Kernel Parameter: wmem_max ...PASSED
Verifying OS Kernel Parameter: aio-max-nr ...PASSED
Verifying OS Kernel Parameter: panic_on_oops ...PASSED
Verifying Package: kmod-20-21 (x86_64) ...PASSED
Verifying Package: kmod-libs-20-21 (x86_64) ...PASSED
Verifying Package: binutils-2.23.52.0.1 ...PASSED
Verifying Package: compat-libcap1-1.10 ...PASSED
Verifying Package: libgcc-4.8.2 (x86_64) ...PASSED
Verifying Package: libstdc++-4.8.2 (x86_64) ...PASSED
Verifying Package: libstdc++-devel-4.8.2 (x86_64) ...PASSED
Verifying Package: sysstat-10.1.5 ...PASSED
Verifying Package: ksh ...PASSED
Verifying Package: make-3.82 ...PASSED
Verifying Package: glibc-2.17 (x86_64) ...PASSED
Verifying Package: glibc-devel-2.17 (x86_64) ...PASSED
Verifying Package: libaio-0.3.109 (x86_64) ...PASSED
Verifying Package: libaio-devel-0.3.109 (x86_64) ...PASSED
Verifying Package: nfs-utils-1.2.3-15 ...PASSED
Verifying Package: smartmontools-6.2-4 ...PASSED
Verifying Package: net-tools-2.0-0.17 ...PASSED
Verifying Users With Same UID: 0 ...PASSED
Verifying Current Group ID ...PASSED
Verifying Root user consistency ...PASSED
Verifying Package: cvuqdisk-1.0.10-1 ...PASSED
Verifying Host name ...PASSED
Verifying Node Connectivity ...
  Verifying Hosts File ...PASSED
  Verifying Check that maximum (MTU) size packet goes through subnet ...PASSED
  Verifying subnet mask consistency for subnet "192.168.100.0" ...PASSED
  Verifying subnet mask consistency for subnet "19.19.19.0" ...PASSED
Verifying Node Connectivity ...PASSED
Verifying Multicast or broadcast check ...PASSED
Verifying ASM Integrity ...PASSED
Verifying Network Time Protocol (NTP) ...PASSED
Verifying Same core file name pattern ...PASSED
Verifying User Mask ...PASSED
Verifying User Not In Group "root": grid ...PASSED
Verifying Time zone consistency ...PASSED
Verifying Time offset between nodes ...PASSED
Verifying resolv.conf Integrity ...PASSED
Verifying DNS/NIS name service ...PASSED
Verifying Domain Sockets ...FAILED (PRVG-11750)
Verifying /boot mount ...PASSED
Verifying Daemon "avahi-daemon" not configured and running ...PASSED
Verifying Daemon "proxyt" not configured and running ...PASSED
Verifying loopback network interface address ...PASSED
Verifying Grid Infrastructure home path: /u01/app/19.0.0/grid ...
  Verifying '/u01/app/19.0.0/grid' ...FAILED (PRVG-11931)
Verifying Grid Infrastructure home path: /u01/app/19.0.0/grid ...FAILED (PRVG-11931)
Verifying User Equivalence ...PASSED
Verifying RPM Package Manager database ...PASSED
Verifying Network interface bonding status of private interconnect network interfaces ...PASSED
Verifying /dev/shm mounted as temporary file system ...PASSED
Verifying File system mount options for path /var ...PASSED
Verifying DefaultTasksMax parameter ...PASSED
Verifying zeroconf check ...PASSED
Verifying ASM Filter Driver configuration ...PASSED
Verifying Systemd login manager IPC parameter ...PASSED

Pre-check for cluster services setup was unsuccessful on all the nodes.

Failures were encountered during execution of CVU verification request "stage -pre crsinst".

Verifying Domain Sockets ...FAILED
rac19c-node1: PRVG-11750 : File "/var/tmp/.oracle/ora_gipc_rac19c-node1_CTSSD"
              exists on node "rac19c-node1".
...

Verifying Grid Infrastructure home path: /u01/app/19.0.0/grid ...FAILED
  Verifying '/u01/app/19.0.0/grid' ...FAILED
  rac19c-node1: PRVG-11931 : Path "/u01/app/19.0.0/grid" is not writeable on
                node "rac19c-node1".
  rac19c-node2: PRVG-11931 : Path "/u01/app/19.0.0/grid" is not writeable on
                node "rac19c-node2".

CVU operation performed:      stage -pre crsinst
Date:                         Dec 2, 2020 7:11:57 AM
CVU home:                     /u01/app/19.0.0/grid/
User:                         grid
```
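The CVU run above prints a long list of results, of which only a couple failed. A minimal sketch that lists just the failed checks with their PRVG/PRVF codes, assuming the `Verifying <check> ...FAILED (<code>)` line format shown above; `cvu_failures` is a hypothetical helper name.

```shell
#!/bin/sh
# cvu_failures (hypothetical helper): read cluvfy/runcluvfy.sh output on stdin
# and print each check that ended in "...FAILED (<code>)" as "<check> <code>",
# sorted (C locale, for a stable order) and without duplicates.
# Summary lines that say "...FAILED" without a code are skipped.
cvu_failures() {
  sed -n 's/^ *Verifying \(.*\) \.\.\.FAILED (\([A-Z]*-[0-9]*\)).*$/\1 \2/p' | LC_ALL=C sort -u
}
```

For example, save the CVU output to a file such as `cvu.log` and run `cvu_failures < cvu.log`; for the run above this would list the Domain Sockets check (PRVG-11750) and the two Grid home path checks (PRVG-11931).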

■ Verifying the installation

● Checking CRS status

```
[grid@rac19c-node1 ~]$ /u01/app/19.0.0/grid/bin/crsctl stat res -t
--------------------------------------------------------------------------------
Name           Target  State        Server                   State details
--------------------------------------------------------------------------------
Local Resources
--------------------------------------------------------------------------------
ora.LISTENER.lsnr
               ONLINE  ONLINE       rac19c-node1             STABLE
               ONLINE  ONLINE       rac19c-node2             STABLE
ora.chad
               ONLINE  ONLINE       rac19c-node1             STABLE
               ONLINE  ONLINE       rac19c-node2             STABLE
ora.net1.network
               ONLINE  ONLINE       rac19c-node1             STABLE
               ONLINE  ONLINE       rac19c-node2             STABLE
ora.ons
               ONLINE  ONLINE       rac19c-node1             STABLE
               ONLINE  ONLINE       rac19c-node2             STABLE
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)
      1        ONLINE  ONLINE       rac19c-node1             STABLE
      2        ONLINE  ONLINE       rac19c-node2             STABLE
      3        OFFLINE OFFLINE                               STABLE
ora.CRS.dg(ora.asmgroup)
      1        ONLINE  ONLINE       rac19c-node1             STABLE
      2        ONLINE  ONLINE       rac19c-node2             STABLE
      3        OFFLINE OFFLINE                               STABLE
ora.LISTENER_SCAN1.lsnr
      1        ONLINE  ONLINE       rac19c-node2             STABLE
ora.LISTENER_SCAN2.lsnr
      1        ONLINE  ONLINE       rac19c-node1             STABLE
ora.LISTENER_SCAN3.lsnr
      1        ONLINE  ONLINE       rac19c-node1             STABLE
ora.asm(ora.asmgroup)
      1        ONLINE  ONLINE       rac19c-node1             Started,STABLE
      2        ONLINE  ONLINE       rac19c-node2             Started,STABLE
      3        OFFLINE OFFLINE                               STABLE
ora.asmnet1.asmnetwork(ora.asmgroup)
      1        ONLINE  ONLINE       rac19c-node1             STABLE
      2        ONLINE  ONLINE       rac19c-node2             STABLE
      3        OFFLINE OFFLINE                               STABLE
ora.cvu
      1        ONLINE  ONLINE       rac19c-node1             STABLE
ora.qosmserver
      1        ONLINE  ONLINE       rac19c-node1             STABLE
ora.rac19c-node1.vip
      1        ONLINE  ONLINE       rac19c-node1             STABLE
ora.rac19c-node2.vip
      1        ONLINE  ONLINE       rac19c-node2             STABLE
ora.scan1.vip
      1        ONLINE  ONLINE       rac19c-node2             STABLE
ora.scan2.vip
      1        ONLINE  ONLINE       rac19c-node1             STABLE
ora.scan3.vip
      1        ONLINE  ONLINE       rac19c-node1             STABLE
--------------------------------------------------------------------------------
```
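In `crsctl stat res -t`, a resource is where it should be when its State column matches its Target column. Instance 3 of the `ora.asmgroup` resources is OFFLINE/OFFLINE, presumably because Flex ASM's default of three instances cannot be met on a two-node cluster; since Target and State agree, that is not an error. A minimal sketch that prints only mismatching lines, assuming the default `-t` layout shown above; `crs_not_on_target` is a hypothetical helper name.

```shell
#!/bin/sh
# crs_not_on_target (hypothetical helper): read `crsctl stat res -t` output on
# stdin and print every line whose State differs from its Target, prefixed
# with the resource name. Assumes the default -t layout: resource names start
# in column 1 and their state lines are indented.
crs_not_on_target() {
  awk '
    /^-+$/ { next }              # separator lines
    /^[^ ]/ { res = $1; next }   # resource name (header lines also land here, harmlessly)
    NF > 0 {
      # Cluster resources start each state line with an instance number.
      i = ($1 ~ /^[0-9]+$/) ? 2 : 1
      if ($i != $(i + 1)) {
        line = $0
        sub(/^ +/, "", line)
        print res ": " line
      }
    }'
}
```

Piping the output above through `crs_not_on_target` prints nothing, because every line has matching Target and State values.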

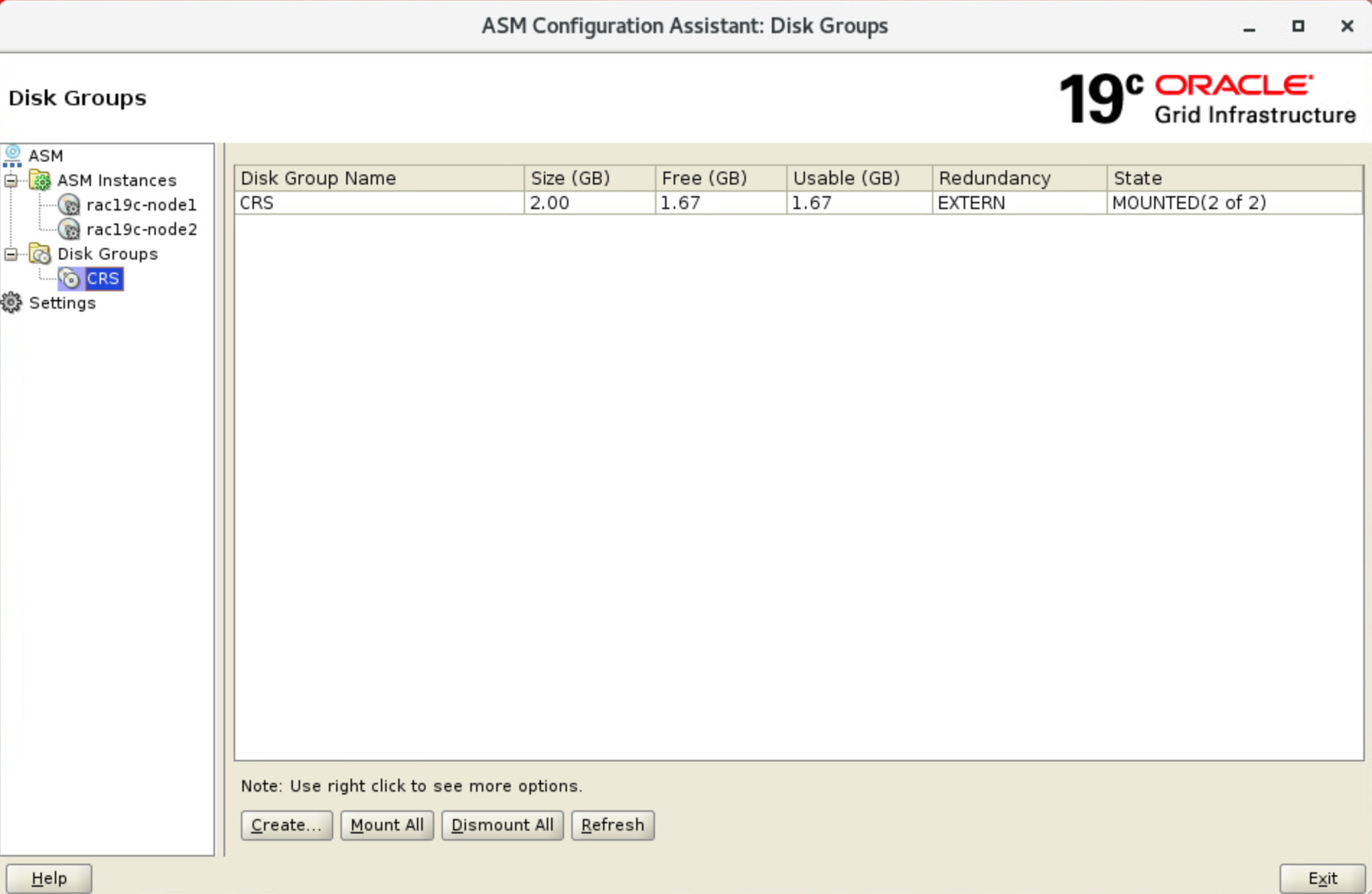

● Checking ASM disk groups

```
[grid@rac19c-node1 ~]$ asmcmd
ASMCMD> lsdg
State    Type    Rebal  Sector  Logical_Sector  Block       AU  Total_MB  Free_MB  Req_mir_free_MB  Usable_file_MB  Offline_disks  Voting_files  Name
MOUNTED  EXTERN  N         512             512   4096  4194304      2044     1684                0            1684              0             Y  CRS/
```

● Checking the connection to the ASM instance

```
[grid@rac19c-node1 ~]$ sqlplus / as sysasm

SQL*Plus: Release 19.0.0.0.0 - Production on Fri Nov 27 19:43:48 2020
Version 19.0.0.0.0

Copyright (c) 1982, 2019, Oracle. All rights reserved.

Connected to:
Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production
Version 19.0.0.0.0

SQL> select Instance_name from v$instance;

INSTANCE_NAME
------------------------------------------------
+ASM1
```

● Checking CTSS (Cluster Time Synchronization Service)

Confirm that the Cluster Time Synchronization Service (CTSS) is running.

```
[grid@rac19c-node2 ~]$ crsctl check ctss
CRS-4701: The Cluster Time Synchronization Service is in Active mode.
CRS-4702: Offset (in msec): 0
```
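CTSS runs in Active mode, adjusting the node clocks itself, when it does not detect a network time service such as NTP on the nodes; when one is configured, it runs in Observer mode and only monitors the offset. For scripted checks, the mode and offset can be pulled out of the `crsctl check ctss` output. A minimal sketch; `ctss_mode` and `ctss_offset_ms` are hypothetical helper names that assume the message wording shown above.

```shell
#!/bin/sh
# ctss_mode / ctss_offset_ms (hypothetical helpers): read `crsctl check ctss`
# output on stdin and print the CTSS mode (e.g. Active or Observer) or the
# reported offset in milliseconds.
ctss_mode() {
  sed -n 's/.*Time Synchronization Service is in \([A-Za-z]*\) mode.*/\1/p'
}
ctss_offset_ms() {
  sed -n 's/.*Offset (in msec): *\([-0-9][0-9]*\).*/\1/p'
}
```

For the output above, `crsctl check ctss | ctss_mode` prints `Active` and `crsctl check ctss | ctss_offset_ms` prints `0`.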

● Checking the GI configuration

- Nodes

```
[grid@rac19c-node1 ~]$ /u01/app/19.0.0/grid/bin/olsnodes -i -n
rac19c-node1    1       <none>
rac19c-node2    2       <none>
```

- Interconnect (private address of the local node)

```
[grid@rac19c-node1 ~]$ /u01/app/19.0.0/grid/bin/olsnodes -l -p
rac19c-node1    19.19.19.1
```

- Network interfaces

```
[grid@rac19c-node1 ~]$ /u01/app/19.0.0/grid/bin/oifcfg getif
ens192  192.168.100.0  global  public
ens224  19.19.19.0  global  cluster_interconnect,asm
```
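`oifcfg getif` lists each interface with its subnet, scope and roles; here ens224 carries both the private interconnect and ASM traffic (`cluster_interconnect,asm`). A minimal sketch that extracts the interconnect interfaces, assuming the four-column layout shown above; `interconnect_ifs` is a hypothetical helper name.

```shell
#!/bin/sh
# interconnect_ifs (hypothetical helper): read `oifcfg getif` output on stdin
# (interface, subnet, scope, roles) and print the interface and subnet of
# every entry whose role list includes cluster_interconnect.
interconnect_ifs() {
  awk '$NF ~ /(^|,)cluster_interconnect(,|$)/ { print $1, $2 }'
}
```

For the output above, `oifcfg getif | interconnect_ifs` prints `ens224 19.19.19.0`, matching the subnet of the 19.19.19.1 address reported by `olsnodes -l -p`.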

- Cluster name

```
[grid@rac19c-node1 ~]$ cat /u01/app/19.0.0/grid/install/cluster.ini
[cluster_info]
cluster_name=rac19c-cluster

[grid@rac19c-node1 ~]$ olsnodes -c
rac19c-cluster
```

- OCR location

```
[grid@rac19c-node1 ~]$ cat /etc/oracle/ocr.loc
#Device/file +CRS getting replaced by device +CRS/rac19c-cluster/OCRFILE/registry.255.1057455747
ocrconfig_loc=+CRS/rac19c-cluster/OCRFILE/registry.255.1057455747
local_only=false
```
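`/etc/oracle/ocr.loc` records where the OCR lives; the `#Device/file ...` line is only a comment left by the installer. A minimal sketch that reads the `ocrconfig_loc` value and derives the disk group from it, assuming the file layout shown above; `ocr_location` and `ocr_diskgroup` are hypothetical helper names. The `ocrcheck` utility (run as root) is the supported way to report the OCR location and check its integrity.

```shell
#!/bin/sh
# ocr_location (hypothetical helper): print the ocrconfig_loc value from an
# ocr.loc file (default: /etc/oracle/ocr.loc, as shown above). Comment lines
# such as "#Device/file ... getting replaced ..." are ignored.
ocr_location() {
  sed -n 's/^ocrconfig_loc=//p' "${1:-/etc/oracle/ocr.loc}"
}

# ocr_diskgroup: keep only the leading disk group part, e.g. "+CRS".
ocr_diskgroup() {
  ocr_location "$@" | sed 's|/.*||'
}
```

For the file above, `ocr_diskgroup` prints `+CRS`, the disk group that also holds the voting file.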

● Checking SCAN

- SCAN configuration

```
[grid@rac19c-node1 ~]$ /u01/app/19.0.0/grid/bin/srvctl config scan
SCAN name: rac19c-scan, Network: 1
Subnet IPv4: 192.168.100.0/255.255.255.0/ens192, static
Subnet IPv6:
SCAN 1 IPv4 VIP: 192.168.100.197
SCAN VIP is enabled.
SCAN 2 IPv4 VIP: 192.168.100.198
SCAN VIP is enabled.
SCAN 3 IPv4 VIP: 192.168.100.199
SCAN VIP is enabled.
```
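The SCAN name rac19c-scan is backed by three VIPs. Because the installer reported that the SCAN name could not be resolved through DNS, it can be useful to compare these addresses with what the name service returns. A minimal sketch that extracts the SCAN name and VIPs from `srvctl config scan`, assuming the output format shown above; `scan_name` and `scan_vips` are hypothetical helper names.

```shell
#!/bin/sh
# scan_name / scan_vips (hypothetical helpers): read `srvctl config scan`
# output on stdin and print the SCAN name or the SCAN VIP addresses.
scan_name() {
  sed -n 's/^SCAN name: \([^,]*\),.*/\1/p'
}
scan_vips() {
  sed -n 's/^SCAN [0-9][0-9]* IPv4 VIP: *//p'
}
```

For example, comparing `srvctl config scan | scan_vips | sort` with `getent ahosts rac19c-scan | awk '{print $1}' | sort -u` shows whether the name service returns all three SCAN VIPs; given the DNS error ignored during installation, the two lists may differ in this environment.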

- SCAN listeners

```
[grid@rac19c-node1 ~]$ /u01/app/19.0.0/grid/bin/srvctl config scan_listener
SCAN Listeners for network 1:
Registration invited nodes:
Registration invited subnets:
Endpoints: TCP:1521
SCAN Listener LISTENER_SCAN1 exists
SCAN Listener is enabled.
SCAN Listener LISTENER_SCAN2 exists
SCAN Listener is enabled.
SCAN Listener LISTENER_SCAN3 exists
SCAN Listener is enabled.
```

● Checking the inventory and installation location

- oraInventory directory

```
[grid@rac19c-node1 ~]$ cat /u01/app/oraInventory/oraInst.loc
inventory_loc=/u01/app/oraInventory
inst_group=oinstall
```

- Home name

```
[grid@rac19c-node1 ~]$ cat /u01/app/oraInventory/ContentsXML/inventory.xml | grep "HOME NAME"
<HOME NAME="OraGI19Home1" LOC="/u01/app/19.0.0/grid" TYPE="O" IDX="1" CRS="true"/>
```
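In `inventory.xml`, the Grid home is the `<HOME>` entry marked `CRS="true"`; once a database home is installed, it appears as a separate entry without that attribute. A minimal sketch that prints the name and location of the CRS home, assuming NAME and LOC appear in that order as in the line above; `crs_homes` is a hypothetical helper name.

```shell
#!/bin/sh
# crs_homes (hypothetical helper): print "NAME LOC" for every <HOME .../>
# entry in inventory.xml that is marked CRS="true" (the Grid home).
# Assumes NAME and LOC appear in that order, as in the line shown above.
crs_homes() {
  sed -n 's/.*<HOME NAME="\([^"]*\)" LOC="\([^"]*\)".* CRS="true".*/\1 \2/p' \
    "${1:-/u01/app/oraInventory/ContentsXML/inventory.xml}"
}
```

For the inventory above, `crs_homes` prints `OraGI19Home1 /u01/app/19.0.0/grid`.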

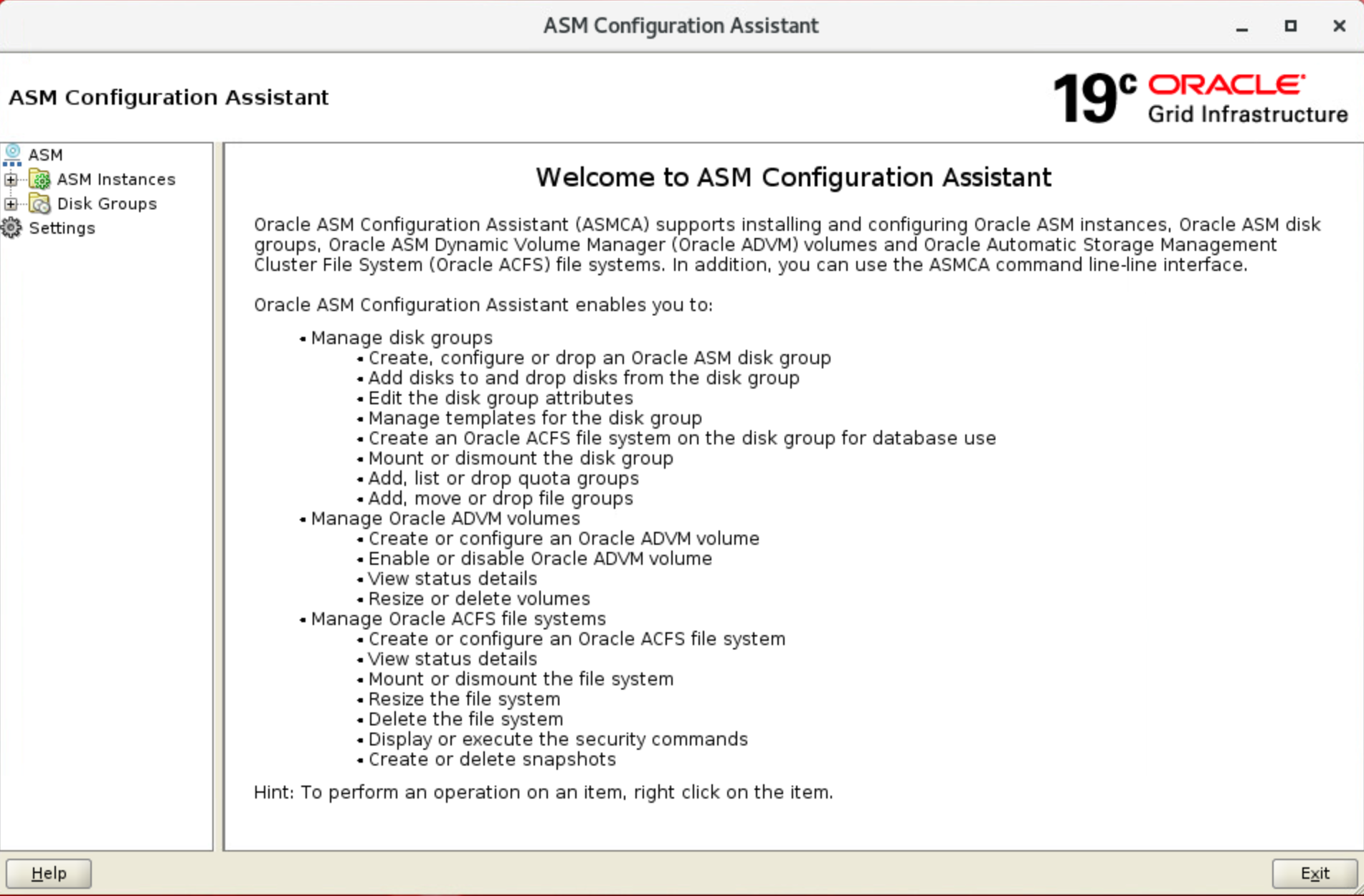

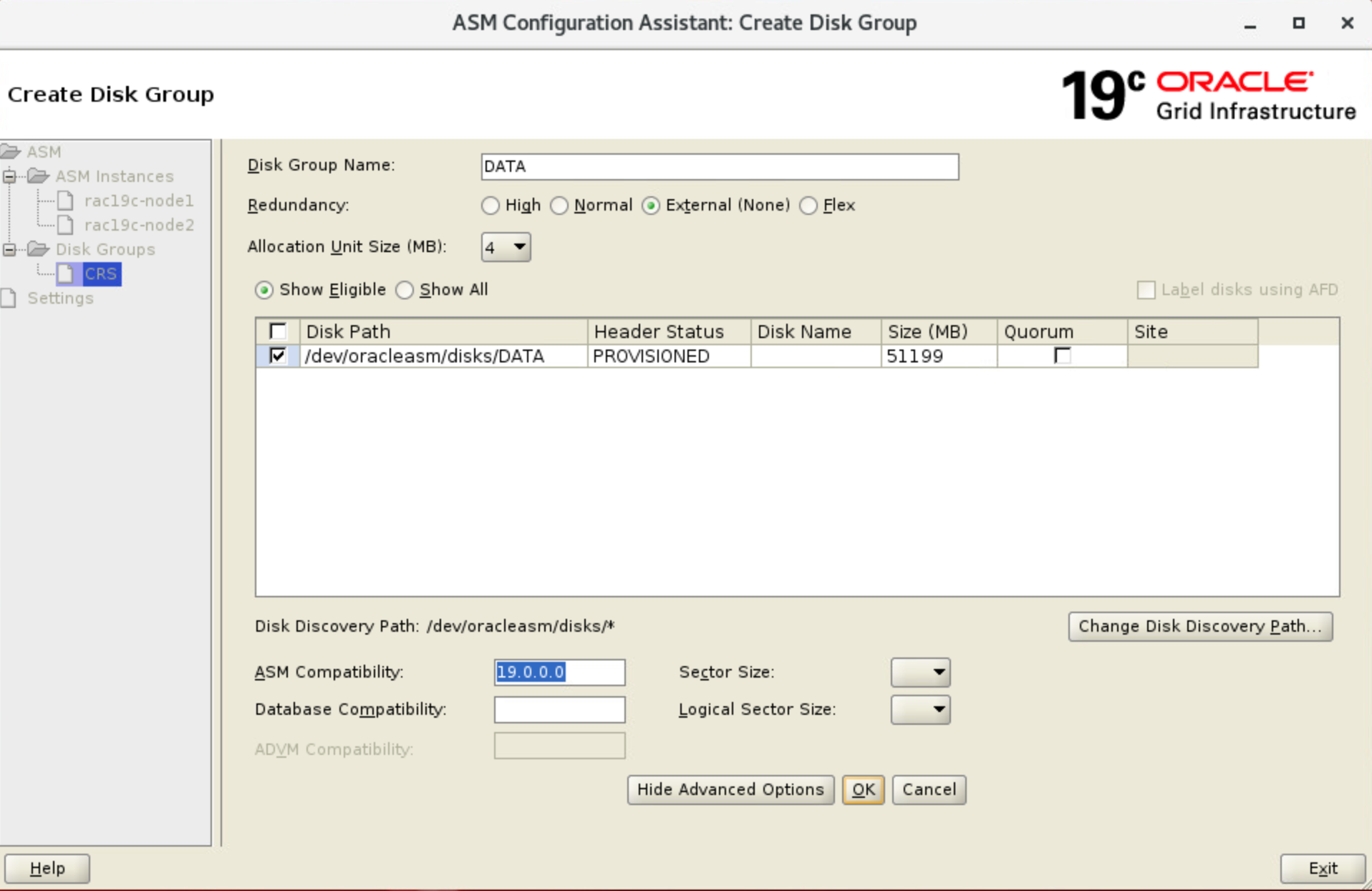

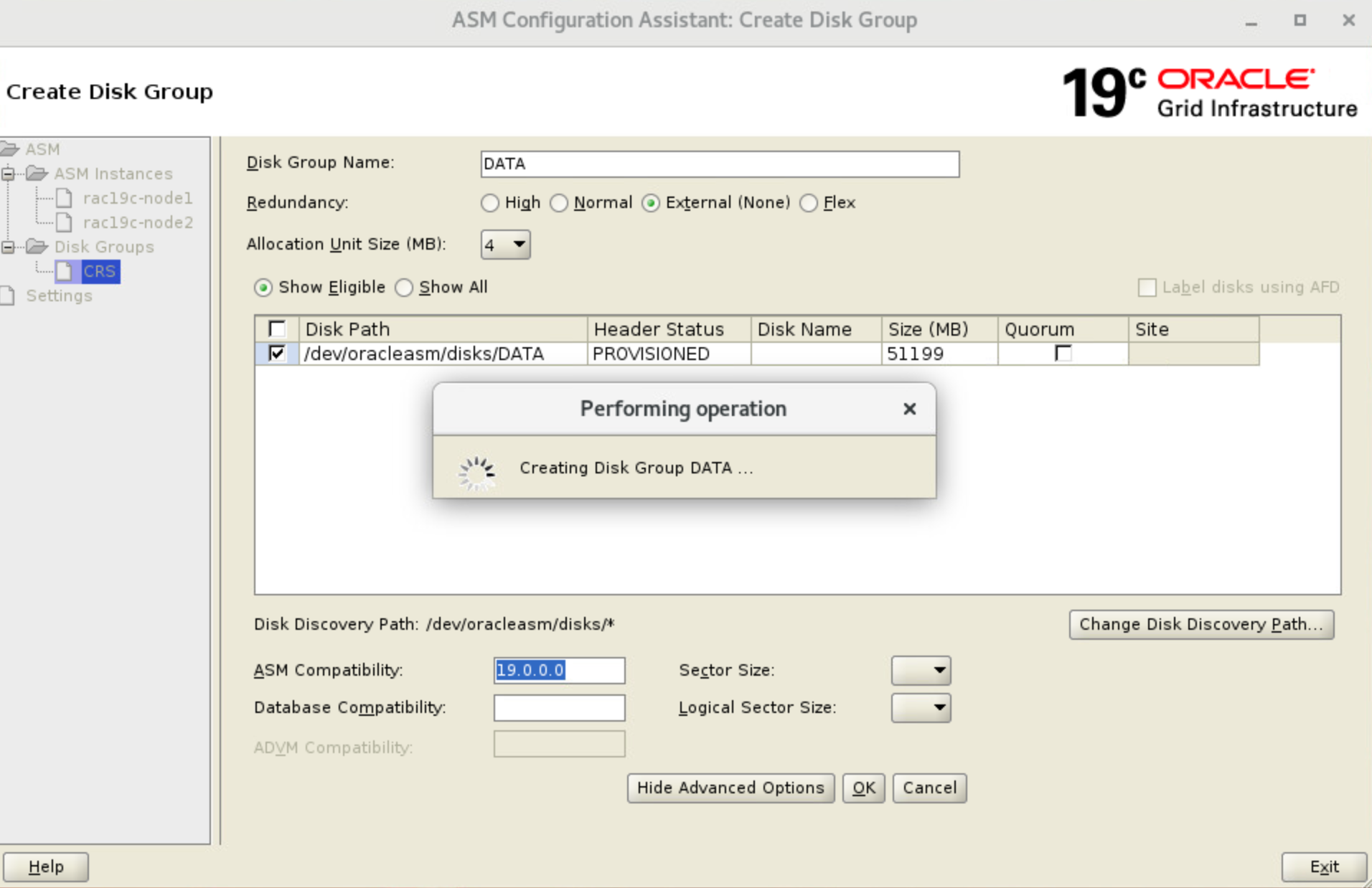

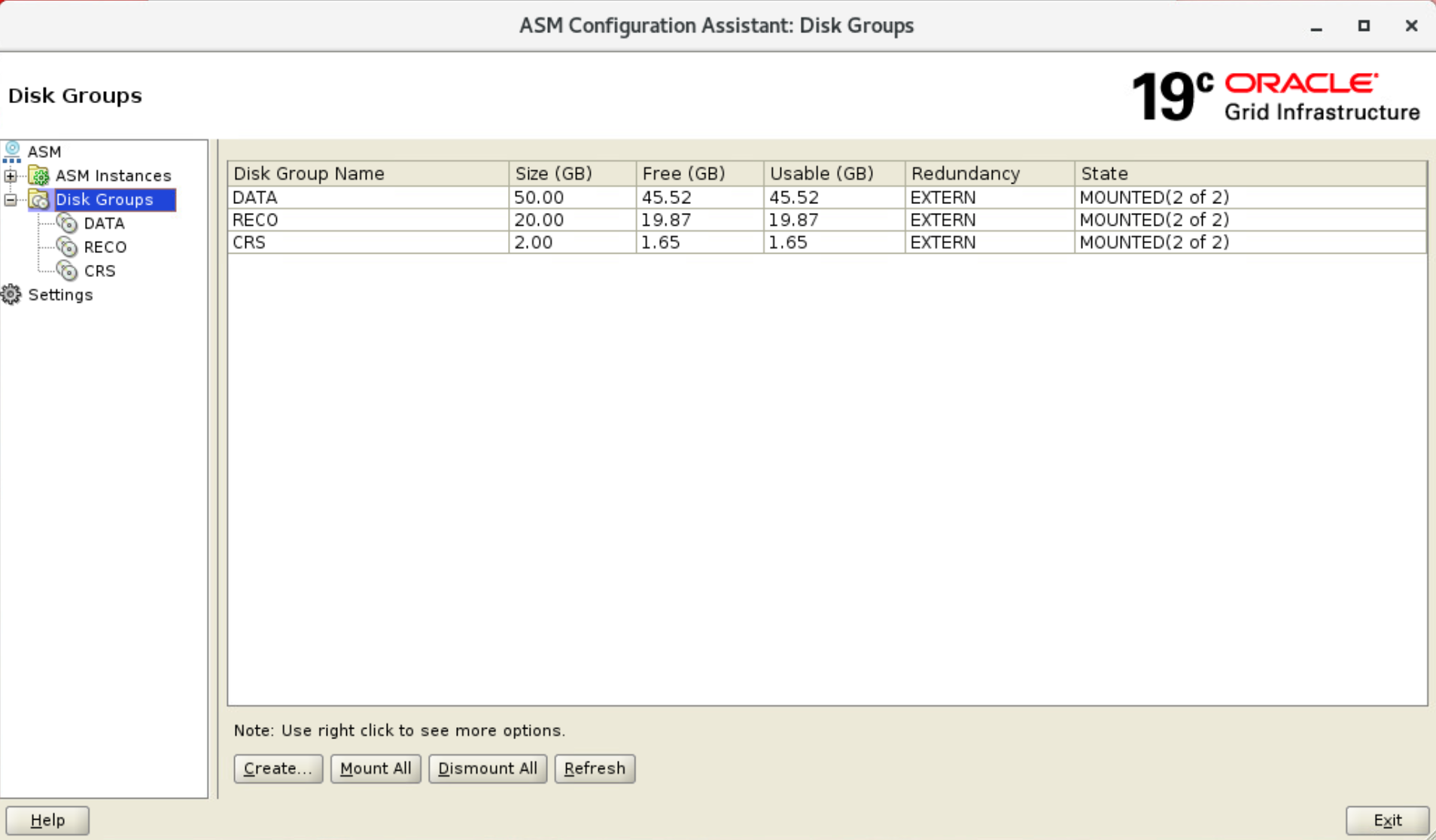

■ Creating ASM disk groups for the database

Create the database disk groups +DATA and +RECO with ASM Configuration Assistant (asmca).

● Running asmca

```
[root@rac19c-node1 ~]# su - grid
[grid@rac19c-node1 ~]$ asmca
```

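- Create Disk Group screen

Set the following items and click [OK] to create the disk group.

・Disk Group Name: name of the ASM disk group

・Redundancy: redundancy level; the number of disks required depends on the level chosen

・Allocation Unit Size (MB): AU size

Verify the result: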
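The Redundancy setting decides how many copies ASM keeps of each extent: a single copy for External (mirroring is left to the storage), two-way mirroring for Normal and three-way for High. That is why higher levels need more disks (failure groups): at least two for Normal and three for High, or three and five respectively when the disk group holds voting files. As a back-of-the-envelope aid, the sketch below divides raw capacity by the number of copies; `asm_copies` and `asm_rough_usable_mb` are hypothetical helper names, and the result is only a rough upper bound.

```shell
#!/bin/sh
# asm_copies REDUNDANCY (hypothetical helper): number of copies ASM keeps of
# each extent for EXTERNAL/NORMAL/HIGH (case-insensitive; "EXTERN" as printed
# by lsdg is accepted too).
asm_copies() {
  level=$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]')
  case "$level" in
    EXTERN|EXTERNAL) echo 1 ;;
    NORMAL) echo 2 ;;
    HIGH) echo 3 ;;
    *) echo "unsupported redundancy: $1" >&2; return 1 ;;
  esac
}

# asm_rough_usable_mb REDUNDANCY TOTAL_MB (hypothetical helper): raw capacity
# divided by the number of copies. Rough upper bound only: it ignores the space
# ASM keeps free to restore redundancy after a disk failure (Req_mir_free_MB).
asm_rough_usable_mb() {
  copies=$(asm_copies "$1") || return 1
  echo $(( $2 / copies ))
}
```

For example, `asm_rough_usable_mb NORMAL 51196` prints `25598`, so the 50 GB of disk used for +DATA here (with External redundancy) would yield only about half that if it were mirrored with Normal redundancy.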

```
[grid@rac19c-node1 ~]$ asmcmd
ASMCMD> lsdg
State    Type    Rebal  Sector  Logical_Sector  Block       AU  Total_MB  Free_MB  Req_mir_free_MB  Usable_file_MB  Offline_disks  Voting_files  Name
MOUNTED  EXTERN  N         512             512   4096  4194304      2044     1692                0            1692              0             Y  CRS/
MOUNTED  EXTERN  N         512             512   4096  4194304     51196    46608                0           46608              0             N  DATA/
MOUNTED  EXTERN  N         512             512   4096  4194304     20476    17092                0           17092              0             N  RECO/
```
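In `lsdg` output the sizes are in MB while the AU column is in bytes, so 4194304 corresponds to a 4 MB allocation unit. A minimal sketch that turns the listing into a free-space percentage per disk group, assuming the column order shown above (Total_MB in column 8, Free_MB in column 9, name last); `lsdg_free_pct` is a hypothetical helper name.

```shell
#!/bin/sh
# lsdg_free_pct (hypothetical helper): read `asmcmd lsdg` output on stdin and
# print "<disk group> <Free_MB as % of Total_MB>" for every mounted group.
lsdg_free_pct() {
  awk '$1 == "MOUNTED" && $8 > 0 {
    name = $NF
    sub(/\/$/, "", name)             # lsdg prints names as "CRS/"
    printf "%s %.1f%%\n", name, 100 * $9 / $8
  }'
}
```

For the values above, `asmcmd lsdg | lsdg_free_pct` would print `CRS 82.8%`, `DATA 91.0%` and `RECO 83.5%`.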

■ Backing up the GI configuration

Back up the CRS and ASM configuration so that it can be restored if needed.

● CRS (OCR) backup

- Take a backup

```
[root@rac19c-node1 ~]# /u01/app/19.0.0/grid/bin/ocrconfig -manualbackup
rac19c-node2     2020/12/03 01:22:10     +CRS:/rac19c-cluster/OCRBACKUP/backup_20201203_012210.ocr.258.1058145731     724960844
```

- Check the backup

```
[root@rac19c-node1 ~]# /u01/app/19.0.0/grid/bin/ocrconfig -showbackup
PROT-24: Auto backups for the Oracle Cluster Registry are not available

rac19c-node2     2020/12/03 01:22:10     +CRS:/rac19c-cluster/OCRBACKUP/backup_20201203_012210.ocr.258.1058145731     724960844
```
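PROT-24 only means that no automatic backup exists yet; Oracle Clusterware takes automatic OCR backups every four hours, so they appear later. In each backup line, the fourth field is the backup location, which is what would be passed to `ocrconfig -restore` (see the Grid Infrastructure documentation for the full restore procedure). A minimal sketch that lists those locations, assuming the `<node> <date> <time> <location> <...>` layout shown above; `ocr_backups` is a hypothetical helper name.

```shell
#!/bin/sh
# ocr_backups (hypothetical helper): read `ocrconfig -showbackup` output on
# stdin and print the location (4th field) of every backup line, i.e. lines
# of the form "<node> <YYYY/MM/DD> <HH:MM:SS> <location> <...>".
# Messages such as PROT-24 do not match and are skipped.
ocr_backups() {
  awk 'NF >= 4 && $2 ~ /^[0-9]+\/[0-9]+\/[0-9]+$/ { print $4 }'
}
```

For the output above, `ocrconfig -showbackup | ocr_backups` prints the single manual backup under `+CRS:/rac19c-cluster/OCRBACKUP/`.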

● ASM SPFILE backup

- Back up the ASM SPFILE (as a text PFILE)

```
[grid@rac19c-node1 ~]$ sqlplus / as sysasm

SQL*Plus: Release 19.0.0.0.0 - Production on Thu Dec 3 01:24:22 2020
Version 19.0.0.0.0

Copyright (c) 1982, 2019, Oracle. All rights reserved.

Connected to:
Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production
Version 19.0.0.0.0

SQL> create pfile='/tmp/initASM.ora' from spfile;

File created.

SQL> host cat /tmp/initASM.ora
+ASM1.__oracle_base='/u01/app/grid'#ORACLE_BASE set from in memory value
+ASM2.__oracle_base='/u01/app/grid'#ORACLE_BASE set from in memory value
*.asm_diskgroups='DATA'#Manual Mount
*.asm_diskstring='/dev/oracleasm/disks/*'
*.asm_power_limit=1
*.large_pool_size=12M
*.remote_login_passwordfile='EXCLUSIVE'
```
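Because this backup is a plain-text PFILE, individual settings can be checked with standard tools, for example to confirm `asm_diskstring` before restoring. A minimal sketch, assuming `name=value` entries with no spaces around `=` as in the file above; `pfile_param` is a hypothetical helper name, and it reads only the `*.` (all-instance) entries, not instance-specific ones such as `+ASM1.__oracle_base`.

```shell
#!/bin/sh
# pfile_param (hypothetical helper): print the value of a "*.<name>=" entry
# from a PFILE, dropping any trailing "#comment".
# Usage: pfile_param NAME FILE
pfile_param() {
  sed -n "s/^\*\.$1=\([^#]*\).*/\1/p" "$2"
}
```

For the file above, `pfile_param asm_diskstring /tmp/initASM.ora` prints `'/dev/oracleasm/disks/*'`.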

■ References

● Manuals

・Grid Infrastructure Installation and Upgrade Guide for Linux

・Real Application Clusters Installation Guide for Linux and UNIX