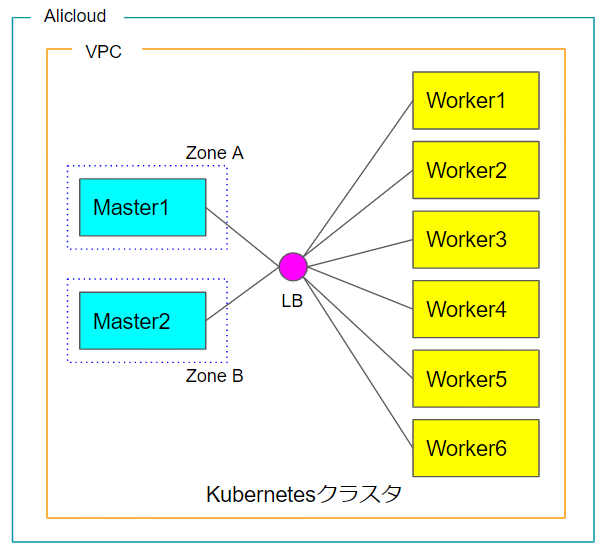

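Building a Kubernetes cluster on Alicloud with master nodes spread across multiple zones

This post uses Terraform to automate building a highly available Kubernetes cluster. The master nodes are spread across multiple zones and placed behind a load balancer.

The machine image for the Kubernetes nodes is created in advance. See [this article][link1] for how.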

[link1]:https://qiita.com/settembre21/items/58e20d27043bff339de5

Working environment

Terraform can be run from any machine, including your own Mac or Linux PC. Here I run it from an Ubuntu instance on AWS. The Terraform version is:

```
root@ip-172-31-27-178:~/terraform/alibaba# terraform version
Terraform v0.12.18
+ provider.alicloud v1.71.0

Your version of Terraform is out of date! The latest version
is 0.12.21. You can update by downloading from https://www.terraform.io/downloads.html
```

Ah, my version is out of date...

The .tf files

```hcl
# Alicloud provider settings
provider "alicloud" {
  region = var.alicloud_region
}

# Query the available zones; referenced via local.all_zones
data "alicloud_zones" "available" {
}

# Local values
locals {
  all_vswitchs = alicloud_vswitch.vsw.*.id
  all_zones    = data.alicloud_zones.available.ids
}

# Register the SSH key pair
resource "alicloud_key_pair" "deployer" {
  key_name   = "${var.cluster_name}-deployer-key"
  public_key = file(var.ssh_public_key_file)
}

# Security group (common)
resource "alicloud_security_group" "common" {
  name   = "${var.cluster_name}-common"
  vpc_id = alicloud_vpc.vpc.id
}

# Security group rule (common): allow SSH
resource "alicloud_security_group_rule" "common" {
  type              = "ingress"
  ip_protocol       = "tcp"
  nic_type          = "intranet"
  policy            = "accept"
  port_range        = "${var.ssh_port}/${var.ssh_port}"
  priority          = 1
  security_group_id = alicloud_security_group.common.id
  cidr_ip           = "0.0.0.0/0"
}

# Security group (master)
resource "alicloud_security_group" "master" {
  name   = "${var.cluster_name}-master"
  vpc_id = alicloud_vpc.vpc.id
}

# Security group rule (master): allow the Kubernetes API (6443)
resource "alicloud_security_group_rule" "master" {
  type              = "ingress"
  ip_protocol       = "tcp"
  nic_type          = "intranet"
  policy            = "accept"
  port_range        = "6443/6443"
  priority          = 1
  security_group_id = alicloud_security_group.master.id
  cidr_ip           = "0.0.0.0/0"
}

# VPC
resource "alicloud_vpc" "vpc" {
  name       = "${var.cluster_name}-vpc"
  cidr_block = var.vpc_cidr
}

# vswitches (subnets), one per zone
resource "alicloud_vswitch" "vsw" {
  count = length(local.all_zones)

  name              = "${var.cluster_name}-${local.all_zones[count.index]}"
  vpc_id            = alicloud_vpc.vpc.id
  cidr_block        = cidrsubnet(var.vpc_cidr, var.subnet_netmask_bits, var.subnet_offset + count.index)
  availability_zone = local.all_zones[count.index]
}

# Load balancer (SLB) in front of the API servers
resource "alicloud_slb" "master" {
  name         = "${var.cluster_name}-api-slb"
  address_type = "internet"
}

# Listener
resource "alicloud_slb_listener" "master_api" {
  load_balancer_id  = alicloud_slb.master.id
  frontend_port     = 6443
  backend_port      = 6443
  bandwidth         = 5
  protocol          = "tcp"
  health_check_type = "tcp"
}

# Attach the master instances
resource "alicloud_slb_attachment" "master_api" {
  load_balancer_id = alicloud_slb.master.id
  instance_ids     = alicloud_instance.master.*.id
}

# Kubernetes master nodes (one per zone)
resource "alicloud_instance" "master" {
  count = length(local.all_zones)

  instance_name              = "${var.cluster_name}-master-${count.index + 1}"
  host_name                  = "${var.cluster_name}-master-${count.index + 1}"
  availability_zone          = local.all_zones[count.index % length(local.all_zones)]
  image_id                   = var.node_image
  key_name                   = alicloud_key_pair.deployer.key_name
  instance_type              = "ecs.t5-lc1m2.large"
  system_disk_category       = "cloud_efficiency"
  security_groups            = [alicloud_security_group.common.id, alicloud_security_group.master.id]
  vswitch_id                 = local.all_vswitchs[count.index % length(local.all_zones)]
  internet_charge_type       = "PayByTraffic"
  internet_max_bandwidth_out = 5
}

# Look up instance details for the outputs
data "alicloud_instances" "masters" {
  ids = alicloud_instance.master.*.id
}

# Kubernetes worker nodes (6)
resource "alicloud_instance" "worker" {
  count = 6

  instance_name              = "${var.cluster_name}-worker-${count.index + 1}"
  host_name                  = "${var.cluster_name}-worker-${count.index + 1}"
  availability_zone          = local.all_zones[count.index % length(local.all_zones)]
  image_id                   = var.node_image
  key_name                   = alicloud_key_pair.deployer.key_name
  instance_type              = "ecs.t5-lc1m2.large"
  system_disk_category       = "cloud_efficiency"
  security_groups            = [alicloud_security_group.common.id]
  vswitch_id                 = local.all_vswitchs[count.index % length(local.all_zones)]
  internet_charge_type       = "PayByTraffic"
  internet_max_bandwidth_out = 5
}

# Look up instance details for the outputs
data "alicloud_instances" "workers" {
  ids = alicloud_instance.worker.*.id
}

# Bastion server
resource "alicloud_instance" "bastion" {
  instance_name              = "${var.cluster_name}-bastion"
  host_name                  = "${var.cluster_name}-bastion"
  availability_zone          = local.all_zones[0]
  image_id                   = var.bastion_image
  key_name                   = alicloud_key_pair.deployer.key_name
  instance_type              = "ecs.t5-lc2m1.nano"
  system_disk_category       = "cloud_efficiency"
  security_groups            = [alicloud_security_group.common.id, alicloud_security_group.master.id]
  vswitch_id                 = local.all_vswitchs[0]
  internet_charge_type       = "PayByTraffic"
  internet_max_bandwidth_out = 5
}

# Look up instance details for the outputs
data "alicloud_instances" "bastion" {
  ids = alicloud_instance.bastion.*.id
}
```
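The small Python sketch below shows which zone each instance lands in. It mimics the `count.index % length(local.all_zones)` expressions used for the masters and workers. The zone IDs `ap-northeast-1a` and `ap-northeast-1b` and the helper name `place` are assumptions for illustration. In the real setup the zone list comes from the `alicloud_zones` data source.

```python
from typing import Dict, List


def place(prefix: str, count: int, zones: List[str]) -> Dict[str, str]:
    """Map '<prefix>-<n>' (n starting at 1) to a zone, round-robin.

    Mirrors local.all_zones[count.index % length(local.all_zones)]
    in the alicloud_instance resources.
    """
    return {f"{prefix}-{i + 1}": zones[i % len(zones)] for i in range(count)}


# Assumed zone IDs; the real list comes from data.alicloud_zones.available.
zones = ["ap-northeast-1a", "ap-northeast-1b"]

masters = place("fabric-master", len(zones), zones)  # count = length(local.all_zones)
workers = place("fabric-worker", 6, zones)           # count = 6

for name, zone in {**masters, **workers}.items():
    print(f"{name:16} {zone}")
```

With two zones, fabric-master-1 and the odd-numbered workers go to the first zone and vswitch, and the rest go to the second. This matches the 10.10.0.x and 10.10.1.x addresses in the node listing further down.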

```hcl
# Common settings
variable "cluster_name" {
  default = "fabric"
}

variable "ssh_public_key_file" {
  default = "~/.ssh/id_rsa.pub"
}

variable "ssh_port" {
  default = 22
}

variable "vpc_cidr" {
  default = "10.10.0.0/16"
}

variable "subnet_offset" {
  default = 0
}

variable "subnet_netmask_bits" {
  default = 8
}

# Cloud-vendor-specific settings
variable "alicloud_region" {
  default = "ap-northeast-1"
}

variable "node_image" {
  default = "m-6we7k89y7wwevump3joc"
}

variable "bastion_image" {
  default = "ubuntu_18_04_x64_20G_alibase_20191225.vhd"
}
```
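The vswitch CIDRs come from `cidrsubnet(var.vpc_cidr, var.subnet_netmask_bits, var.subnet_offset + count.index)`. The rough Python equivalent below shows what the defaults above produce. `cidrsubnet` here is my own helper, not part of Terraform, and two zones are assumed. With a /16 VPC and 8 extra bits, each zone gets a /24.

```python
import ipaddress


def cidrsubnet(prefix: str, newbits: int, netnum: int) -> str:
    """Rough Python equivalent of Terraform's cidrsubnet(prefix, newbits, netnum).

    Extends the prefix length by `newbits` and returns the `netnum`-th
    subnet of that size inside `prefix`.
    """
    net = ipaddress.ip_network(prefix)
    new_prefix = net.prefixlen + newbits
    if new_prefix > net.max_prefixlen or not 0 <= netnum < 2 ** newbits:
        raise ValueError(f"cannot fit subnet {netnum} (+{newbits} bits) in {prefix}")
    size = 2 ** (net.max_prefixlen - new_prefix)  # addresses per subnet
    base = int(net.network_address) + netnum * size
    return str(type(net)((base, new_prefix)))


# Defaults from the variables: vpc_cidr, subnet_netmask_bits, subnet_offset.
vpc_cidr, subnet_netmask_bits, subnet_offset = "10.10.0.0/16", 8, 0
for index in range(2):  # one vswitch per zone; two zones assumed
    print(cidrsubnet(vpc_cidr, subnet_netmask_bits, subnet_offset + index))
# prints 10.10.0.0/24, then 10.10.1.0/24
```

That is where the 10.10.0.x and 10.10.1.x private IPs in the apply output come from. Changing `subnet_offset` or `subnet_netmask_bits` shifts or resizes all vswitches at once.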

```hcl
output "kubeadm_api" {
  value = {
    endpoint = alicloud_slb.master.address
  }
}

output "kubernetes_bastion" {
  value = {
    public_ip = data.alicloud_instances.bastion.instances.0.public_ip
  }
}

output "kubernetes_masters" {
  value = {
    private_ip = data.alicloud_instances.masters.instances.*.private_ip
  }
}

output "kubernetes_workers" {
  value = {
    private_ip = data.alicloud_instances.workers.instances.*.private_ip
  }
}
```

Run terraform apply:

```
Apply complete! Resources: 20 added, 0 changed, 0 destroyed.

Outputs:

kubeadm_api = {
  "endpoint" = "47.91.31.176"
}
kubernetes_bastion = {
  "public_ip" = "47.74.3.221"
}
kubernetes_masters = {
  "private_ip" = [
    "10.10.1.163",
    "10.10.0.133",
  ]
}
kubernetes_workers = {
  "private_ip" = [
    "10.10.1.164",
    "10.10.0.134",
    "10.10.0.132",
    "10.10.0.131",
    "10.10.1.162",
    "10.10.1.161",
  ]
}
```

In the Alicloud console, the instances show that the master nodes are indeed placed in separate zones.

The console also shows the two master nodes attached to the LB (SLB).
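The listener uses `health_check_type = "tcp"`, so the SLB only checks that port 6443 on each master accepts TCP connections. The same kind of check can be run from the client side, for example against the SLB address. Below is a minimal Python sketch; the function name `tcp_reachable` is hypothetical.

```python
import socket


def tcp_reachable(host: str, port: int = 6443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    Like a TCP-level health check, this only proves that something is
    listening on the port; it does not check kube-apiserver's health.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, name lookup failure
        return False
```

Before `kubeadm init`, nothing listens on 6443 on the masters, so `tcp_reachable("47.91.31.176")` should return False. Once the control plane is up, it should return True.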

Kubernetes setup

With the infrastructure in place, the next step is to set up Kubernetes. Basically, you just run the steps from "Master nodes:" onward on [this][link2] page.

[link2]:https://kruschecompany.com/kubernetes-1-15-whats-new/

Also, the following has already been done on every node. In fact, it is written in the machine image's .bashrc:

```bash
swapoff -a
export KUBECONFIG=/etc/kubernetes/admin.conf
```

Log in to one of the master nodes and write /etc/kubernetes/kubeadm/kubeadm-config.yaml as follows:

```yaml
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: stable
controlPlaneEndpoint: "47.91.31.176:6443"
```

For controlPlaneEndpoint, use kubeadm_api.endpoint from the Outputs: above (the SLB address), followed by port 6443.
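The SLB address is only known after `terraform apply`, so it can be handy to generate this file from Terraform's output instead of copying the IP by hand. Below is a minimal Python sketch. It assumes that `terraform output -json kubeadm_api` prints the object as JSON, such as `{"endpoint": "47.91.31.176"}`. The helper name is my own.

```python
import json


def render_kubeadm_config(kubeadm_api_json: str, port: int = 6443) -> str:
    """Render the kubeadm ClusterConfiguration from the kubeadm_api output.

    `kubeadm_api_json` is assumed to be what
    `terraform output -json kubeadm_api` prints.
    """
    endpoint = json.loads(kubeadm_api_json)["endpoint"]
    return (
        "apiVersion: kubeadm.k8s.io/v1beta1\n"
        "kind: ClusterConfiguration\n"
        "kubernetesVersion: stable\n"
        f'controlPlaneEndpoint: "{endpoint}:{port}"\n'
    )


print(render_kubeadm_config('{"endpoint": "47.91.31.176"}'), end="")
```

The printed text is the same four lines as the kubeadm-config.yaml above. It still has to be copied to /etc/kubernetes/kubeadm/ on the master node.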

Run kubeadm init:

```
root@fabric-master-2:~# kubeadm init --config=/etc/kubernetes/kubeadm/kubeadm-config.yaml --upload-certs

(intermediate kubeadm messages omitted)

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 47.91.31.176:6443 --token 35gsx8.3ea2u1u2dd4dttc7 \
    --discovery-token-ca-cert-hash sha256:7b3ca182885585ec33e31d92caff81586acaf60553df2dc9387b5d4590d4289d \
    --control-plane --certificate-key 83a57824d8a6eab1370a9d6c510a3a6dfd3c1c2154da713cf5cf73fdaf46e563

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 47.91.31.176:6443 --token 35gsx8.3ea2u1u2dd4dttc7 \
    --discovery-token-ca-cert-hash sha256:7b3ca182885585ec33e31d92caff81586acaf60553df2dc9387b5d4590d4289d
```

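On the other master node, run the control-plane join command from the kubeadm init output (the one with --control-plane and --certificate-key).

On each of the six worker nodes, simply run the worker join command, one after another. A pod network also has to be deployed, as the kubeadm output says. The pod listing below shows that this cluster uses Calico.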
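Running the worker join command six times by hand is tedious. The Python sketch below builds one ssh command per worker and goes through the bastion with OpenSSH's `-J` (ProxyJump) option. Several things are assumptions here. The login user is root, the deployer key works for both the bastion and the nodes, and the helper name `join_commands` is hypothetical. The token and hash are placeholders; replace them with the values printed by kubeadm init.

```python
import shlex
from typing import List


def join_commands(bastion_ip: str, node_ips: List[str], join_cmd: str,
                  user: str = "root") -> List[List[str]]:
    """Build one ssh argv per node that runs `join_cmd` via the bastion."""
    jump = f"{user}@{bastion_ip}"
    return [["ssh", "-J", jump, f"{user}@{ip}", join_cmd] for ip in node_ips]


# Worker private IPs and bastion IP from the Terraform outputs.
worker_ips = ["10.10.1.164", "10.10.0.134", "10.10.0.132",
              "10.10.0.131", "10.10.1.162", "10.10.1.161"]
join = ("kubeadm join 47.91.31.176:6443 --token <token> "
        "--discovery-token-ca-cert-hash sha256:<hash>")

for argv in join_commands("47.74.3.221", worker_ips, join):
    # Print a copy-pasteable command; subprocess.run(argv, check=True)
    # would execute it instead.
    print(" ".join(shlex.quote(a) for a in argv))
```

Returning argv lists rather than strings keeps the join command as a single ssh argument, so it can go straight to `subprocess.run` without extra quoting.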

Checking the resulting environment

On the AWS instance where Terraform was run, install the Kubernetes tools. kubectl alone is probably enough.

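Copy /etc/kubernetes/admin.conf to the same path on this instance. Take it from the master node where kubeadm init was first run (fabric-master-2 here).

kubectl is then usable. Don't forget to run `export KUBECONFIG=/etc/kubernetes/admin.conf`.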

```
root@ip-172-31-27-178:~/fabric-dev# kubectl get node -o wide
NAME              STATUS   ROLES    AGE     VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
fabric-master-1   Ready    master   20m     v1.17.0   10.10.0.133   <none>        Ubuntu 18.04.3 LTS   4.15.0-74-generic   docker://18.9.9
fabric-master-2   Ready    master   44m     v1.17.0   10.10.1.163   <none>        Ubuntu 18.04.3 LTS   4.15.0-74-generic   docker://18.9.9
fabric-worker-1   Ready    <none>   11m     v1.17.0   10.10.0.131   <none>        Ubuntu 18.04.3 LTS   4.15.0-74-generic   docker://18.9.9
fabric-worker-2   Ready    <none>   10m     v1.17.0   10.10.1.162   <none>        Ubuntu 18.04.3 LTS   4.15.0-74-generic   docker://18.9.9
fabric-worker-3   Ready    <none>   12m     v1.17.0   10.10.0.132   <none>        Ubuntu 18.04.3 LTS   4.15.0-74-generic   docker://18.9.9
fabric-worker-4   Ready    <none>   9m52s   v1.17.0   10.10.1.161   <none>        Ubuntu 18.04.3 LTS   4.15.0-74-generic   docker://18.9.9
fabric-worker-5   Ready    <none>   13m     v1.17.0   10.10.0.134   <none>        Ubuntu 18.04.3 LTS   4.15.0-74-generic   docker://18.9.9
fabric-worker-6   Ready    <none>   13m     v1.17.0   10.10.1.164   <none>        Ubuntu 18.04.3 LTS   4.15.0-74-generic   docker://18.9.9
```

The listing shows two master nodes and six worker nodes, all of them Ready.
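The same check can be scripted against `kubectl get nodes -o json`. The Python sketch below counts masters and workers and lists any node whose Ready condition is not `True`. It treats a node as a master if it has the `node-role.kubernetes.io/master` label, which is what kubeadm v1.17 sets. Newer versions use `node-role.kubernetes.io/control-plane`, so both labels are accepted. The function name is hypothetical.

```python
import json

ROLE_LABELS = ("node-role.kubernetes.io/master",         # kubeadm v1.17
               "node-role.kubernetes.io/control-plane")  # newer kubeadm


def summarize_nodes(nodes_json: str) -> dict:
    """Summarize `kubectl get nodes -o json` output.

    Returns the number of master (control-plane) and worker nodes and the
    names of nodes whose Ready condition is not "True".
    """
    summary = {"masters": 0, "workers": 0, "not_ready": []}
    for node in json.loads(nodes_json)["items"]:
        meta = node["metadata"]
        labels = meta.get("labels", {})
        if any(label in labels for label in ROLE_LABELS):
            summary["masters"] += 1
        else:
            summary["workers"] += 1
        conditions = node.get("status", {}).get("conditions", [])
        if not any(c["type"] == "Ready" and c["status"] == "True" for c in conditions):
            summary["not_ready"].append(meta["name"])
    return summary
```

For this cluster, the expected result is 2 masters, 6 workers and an empty `not_ready` list.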

```
root@ip-172-31-27-178:~/fabric-dev# kubectl get pod -A -o wide
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE     IP                NODE              NOMINATED NODE   READINESS GATES
kube-system   calico-kube-controllers-5c45f5bd9f-6xwsl   1/1     Running   0          2m33s   192.168.91.129    fabric-worker-4   <none>           <none>
kube-system   calico-node-4hfbj                          1/1     Running   0          2m33s   10.10.1.161       fabric-worker-4   <none>           <none>
kube-system   calico-node-52c69                          1/1     Running   0          2m33s   10.10.0.132       fabric-worker-3   <none>           <none>
kube-system   calico-node-7k2q6                          1/1     Running   0          2m33s   10.10.1.164       fabric-worker-6   <none>           <none>
kube-system   calico-node-fpmx6                          1/1     Running   0          2m33s   10.10.0.133       fabric-master-1   <none>           <none>
kube-system   calico-node-ncjw9                          1/1     Running   0          2m33s   10.10.0.131       fabric-worker-1   <none>           <none>
kube-system   calico-node-ptjhm                          1/1     Running   0          2m33s   10.10.0.134       fabric-worker-5   <none>           <none>
kube-system   calico-node-x9zsg                          1/1     Running   0          2m33s   10.10.1.163       fabric-master-2   <none>           <none>
kube-system   calico-node-ztfdn                          1/1     Running   0          2m33s   10.10.1.162       fabric-worker-2   <none>           <none>
kube-system   coredns-6955765f44-8qtjq                   1/1     Running   0          46m     192.168.124.129   fabric-worker-2   <none>           <none>
kube-system   coredns-6955765f44-jxkxc                   1/1     Running   0          46m     192.168.91.130    fabric-worker-4   <none>           <none>
kube-system   etcd-fabric-master-1                       1/1     Running   0          22m     10.10.0.133       fabric-master-1   <none>           <none>
kube-system   etcd-fabric-master-2                       1/1     Running   0          45m     10.10.1.163       fabric-master-2   <none>           <none>
kube-system   kube-apiserver-fabric-master-1             1/1     Running   0          22m     10.10.0.133       fabric-master-1   <none>           <none>
kube-system   kube-apiserver-fabric-master-2             1/1     Running   0          45m     10.10.1.163       fabric-master-2   <none>           <none>
kube-system   kube-controller-manager-fabric-master-1    1/1     Running   0          22m     10.10.0.133       fabric-master-1   <none>           <none>
kube-system   kube-controller-manager-fabric-master-2    1/1     Running   1          45m     10.10.1.163       fabric-master-2   <none>           <none>
kube-system   kube-proxy-4lndk                           1/1     Running   0          22m     10.10.0.133       fabric-master-1   <none>           <none>
kube-system   kube-proxy-bclfq                           1/1     Running   0          14m     10.10.0.132       fabric-worker-3   <none>           <none>
kube-system   kube-proxy-ghjf4                           1/1     Running   0          15m     10.10.1.164       fabric-worker-6   <none>           <none>
kube-system   kube-proxy-jlpvp                           1/1     Running   0          14m     10.10.0.134       fabric-worker-5   <none>           <none>
kube-system   kube-proxy-k8275                           1/1     Running   0          46m     10.10.1.163       fabric-master-2   <none>           <none>
kube-system   kube-proxy-phrzl                           1/1     Running   0          13m     10.10.0.131       fabric-worker-1   <none>           <none>
kube-system   kube-proxy-pw9xk                           1/1     Running   0          12m     10.10.1.162       fabric-worker-2   <none>           <none>
kube-system   kube-proxy-zt95z                           1/1     Running   0          11m     10.10.1.161       fabric-worker-4   <none>           <none>
kube-system   kube-scheduler-fabric-master-1             1/1     Running   0          22m     10.10.0.133       fabric-master-1   <none>           <none>
kube-system   kube-scheduler-fabric-master-2             1/1     Running   1          45m     10.10.1.163       fabric-master-2   <none>           <none>
```

The kube-system pods are all running without problems too.
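For the multi-master setup, the key point is that each master runs its own etcd, kube-apiserver, kube-controller-manager and kube-scheduler. kubeadm runs these as static pods, and their mirror pods are named `<component>-<node name>` (e.g. `etcd-fabric-master-1` above). Below is a Python sketch over `kubectl get pod -n kube-system -o json`; the function name is hypothetical.

```python
import json
from collections import defaultdict

COMPONENTS = ("etcd", "kube-apiserver", "kube-controller-manager", "kube-scheduler")


def control_plane_by_node(pods_json: str) -> dict:
    """Map each node to the control-plane components running on it.

    Expects `kubectl get pod -n kube-system -o json`. kubeadm's static pods
    show up as mirror pods named '<component>-<node name>'.
    """
    found = defaultdict(set)
    for pod in json.loads(pods_json)["items"]:
        if pod.get("status", {}).get("phase") != "Running":
            continue  # only count pods that are actually running
        name = pod["metadata"]["name"]
        node = pod.get("spec", {}).get("nodeName", "")
        for component in COMPONENTS:
            if name == f"{component}-{node}":
                found[node].add(component)
    return {node: sorted(comps) for node, comps in found.items()}
```

For the listing above, both fabric-master-1 and fabric-master-2 should map to all four components.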

Operating a Kubernetes cluster on Alicloud from an AWS instance feels a bit odd.

As the node names suggest, this cluster was built for Hyperledger Fabric. Next, I'll deploy a Fabric app and play around with it.