Introduction

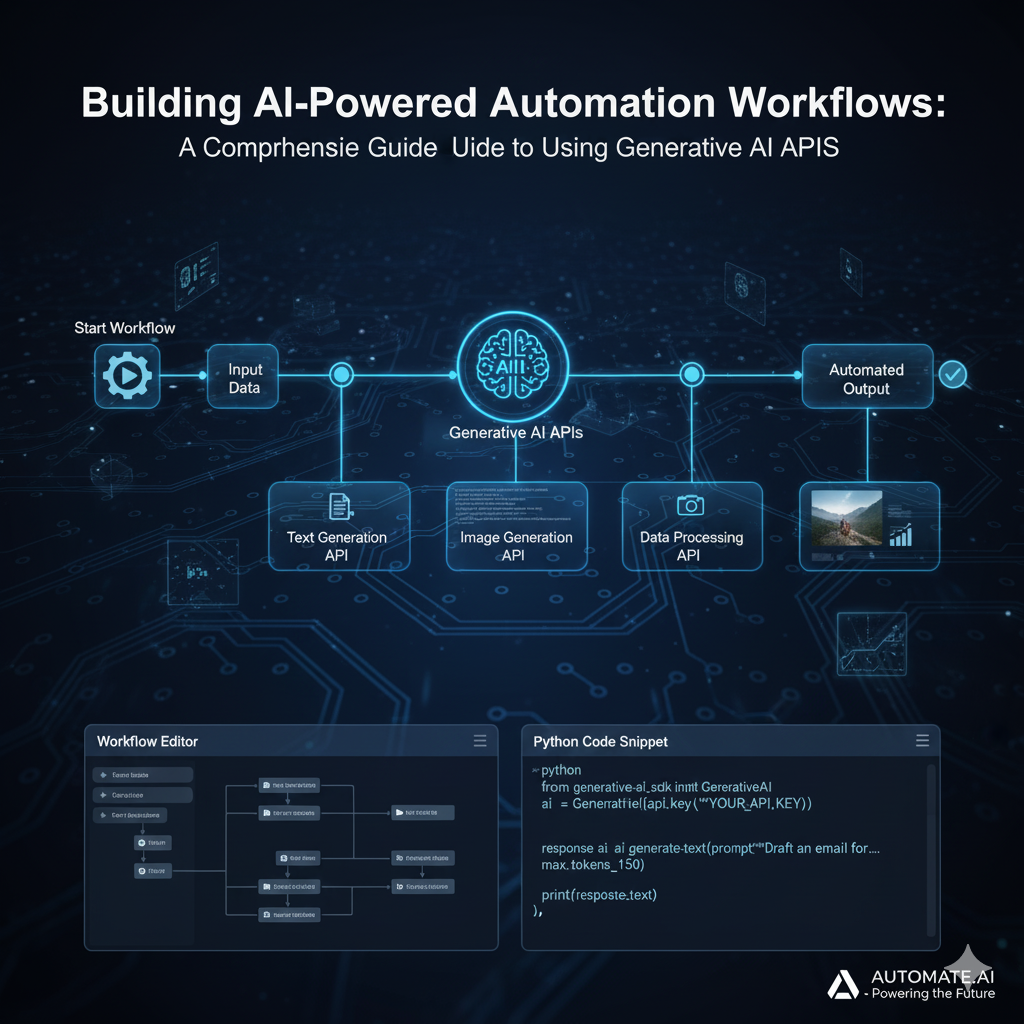

AI-powered automation workflows have become essential components in modern business and software development. This article provides a technical deep-dive into building robust automation systems using generative AI API keys, covering architecture patterns, security best practices, and real-world implementation examples.

Understanding Automation Workflows

An automation workflow is a systematic sequence of tasks executed without human intervention. By integrating generative AI APIs, we can enhance these workflows with advanced capabilities like natural language processing, image generation, data analysis, and intelligent decision-making.

Core Workflow Components

- Trigger: Initiating events (webhook, file upload, scheduled job)

- Processing: Data transformation and validation

- AI Integration: API calls to generative AI services

- Conditional Logic: Decision-making based on AI responses

- Actions: Executing downstream tasks

- Completion: Workflow termination and logging

Benefits of AI-Powered Automation

Efficiency Gains

- Task Automation: Reduce manual work by 70-90% for routine tasks

- Processing Speed: Handle thousands of requests per minute

- 24/7 Operations: Continuous processing without downtime

Advanced Capabilities

- Natural Language Understanding: Process unstructured text data

- Content Generation: Create human-like text, code, and creative content

- Multimodal Processing: Handle text, images, audio, and video

- Intelligent Reasoning: Make context-aware decisions

Scalability Benefits

# Example: Processing capacity comparison

manual_processing = 10 # items per hour

ai_automated_processing = 1000 # items per hour

efficiency_gain = (ai_automated_processing / manual_processing) * 100

print(f"Efficiency gain: {efficiency_gain}%") # 10,000% improvement

Technology Stack for AI Workflows

1. Programming Languages and Frameworks

Python remains the optimal choice for AI workflow development due to its rich ecosystem:

# Essential libraries for AI workflows

dependencies = {

"requests": "HTTP API communication",

"aiohttp": "Async HTTP requests for high throughput",

"pandas": "Data manipulation and analysis",

"pydantic": "Data validation and serialization",

"celery": "Distributed task queue",

"fastapi": "API framework for webhook endpoints",

"python-dotenv": "Environment variable management"

}

Alternative Stacks:

- Node.js: Excellent for real-time workflows and webhook handling

- Go: High-performance concurrent processing

- Java: Enterprise-grade workflows with Spring Boot

2. Generative AI Service Providers

OpenAI GPT Series

# OpenAI API configuration

OPENAI_CONFIG = {

"base_url": "https://api.openai.com/v1",

"models": {

"gpt-4": "Advanced reasoning and complex tasks",

"gpt-4-turbo": "Faster processing with large context",

"gpt-3.5-turbo": "Cost-effective for simple tasks"

},

"rate_limits": {

"requests_per_minute": 10000,

"tokens_per_minute": 300000

}

}

Google Gemini

# Gemini API configuration

GEMINI_CONFIG = {

"base_url": "https://generativelanguage.googleapis.com/v1beta",

"models": {

"gemini-1.5-pro": "Multimodal with 2M token context",

"gemini-1.5-flash": "Fast processing for simple tasks"

},

"multimodal_support": ["text", "image", "audio", "video"]

}

Anthropic Claude

# Claude API configuration

CLAUDE_CONFIG = {

"base_url": "https://api.anthropic.com/v1",

"models": {

"claude-3-opus": "Highest intelligence for complex reasoning",

"claude-3-sonnet": "Balanced performance and speed",

"claude-3-haiku": "Fast and cost-effective"

},

"max_tokens": 200000

}

3. Security and API Key Management

Environment-Based Configuration

import os

from dataclasses import dataclass

from typing import Optional

@dataclass

class AIServiceConfig:

api_key: str

base_url: str

model: str

max_tokens: int = 1000

temperature: float = 0.7

@classmethod

def from_env(cls, service: str) -> 'AIServiceConfig':

return cls(

api_key=os.getenv(f"{service.upper()}_API_KEY"),

base_url=os.getenv(f"{service.upper()}_BASE_URL"),

model=os.getenv(f"{service.upper()}_MODEL"),

max_tokens=int(os.getenv(f"{service.upper()}_MAX_TOKENS", "1000")),

temperature=float(os.getenv(f"{service.upper()}_TEMPERATURE", "0.7"))

)

# Usage

openai_config = AIServiceConfig.from_env("openai")

Secure Key Storage Solutions

# Docker Secrets

echo "your_api_key" | docker secret create openai_api_key -

# Kubernetes Secrets

kubectl create secret generic ai-api-keys \

--from-literal=openai-key=your_openai_key \

--from-literal=claude-key=your_claude_key

# AWS Secrets Manager

aws secretsmanager create-secret \

--name "ai-workflow-keys" \

--description "API keys for AI services" \

--secret-string '{"openai":"key1","claude":"key2"}'

Key Rotation Strategy

import time

from datetime import datetime, timedelta

class APIKeyRotator:

def __init__(self, keys: list[str], rotation_interval: int = 3600):

self.keys = keys

self.current_index = 0

self.rotation_interval = rotation_interval

self.last_rotation = time.time()

def get_current_key(self) -> str:

if time.time() - self.last_rotation > self.rotation_interval:

self.rotate_key()

return self.keys[self.current_index]

def rotate_key(self):

self.current_index = (self.current_index + 1) % len(self.keys)

self.last_rotation = time.time()

print(f"Rotated to key index: {self.current_index}")

Real-World Implementation: Email Processing Workflow

Let's build a comprehensive email processing system that summarizes, translates, and categorizes incoming emails.

Architecture Overview

from abc import ABC, abstractmethod

from typing import Dict, Any, Optional, List

from dataclasses import dataclass

from enum import Enum

import asyncio

import aiohttp

import logging

class ProcessingStatus(Enum):

PENDING = "pending"

PROCESSING = "processing"

COMPLETED = "completed"

FAILED = "failed"

@dataclass

class EmailTask:

id: str

content: str

sender: str

subject: str

target_language: str = "Japanese"

status: ProcessingStatus = ProcessingStatus.PENDING

result: Optional[Dict[str, Any]] = None

error_message: Optional[str] = None

AI Service Abstraction Layer

class AIService(ABC):

@abstractmethod

async def process_text(self, text: str, instructions: str) -> str:

pass

class OpenAIService(AIService):

def __init__(self, config: AIServiceConfig):

self.config = config

self.session: Optional[aiohttp.ClientSession] = None

async def __aenter__(self):

self.session = aiohttp.ClientSession()

return self

async def __aexit__(self, exc_type, exc_val, exc_tb):

if self.session:

await self.session.close()

async def process_text(self, text: str, instructions: str) -> str:

headers = {

"Content-Type": "application/json",

"Authorization": f"Bearer {self.config.api_key}"

}

payload = {

"model": self.config.model,

"messages": [

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": f"{instructions}\n\nText: {text}"}

],

"max_tokens": self.config.max_tokens,

"temperature": self.config.temperature

}

async with self.session.post(

f"{self.config.base_url}/chat/completions",

headers=headers,

json=payload

) as response:

if response.status == 200:

result = await response.json()

return result['choices'][0]['message']['content'].strip()

else:

raise Exception(f"API call failed: {response.status}")

class ClaudeService(AIService):

def __init__(self, config: AIServiceConfig):

self.config = config

self.session: Optional[aiohttp.ClientSession] = None

async def __aenter__(self):

self.session = aiohttp.ClientSession()

return self

async def __aexit__(self, exc_type, exc_val, exc_tb):

if self.session:

await self.session.close()

async def process_text(self, text: str, instructions: str) -> str:

headers = {

"Content-Type": "application/json",

"x-api-key": self.config.api_key,

"anthropic-version": "2023-06-01"

}

payload = {

"model": self.config.model,

"max_tokens": self.config.max_tokens,

"messages": [

{"role": "user", "content": f"{instructions}\n\nText: {text}"}

]

}

async with self.session.post(

f"{self.config.base_url}/messages",

headers=headers,

json=payload

) as response:

if response.status == 200:

result = await response.json()

return result['content'][0]['text']

else:

raise Exception(f"API call failed: {response.status}")

Workflow Engine Implementation

class EmailWorkflowEngine:

def __init__(self, ai_service: AIService):

self.ai_service = ai_service

self.logger = logging.getLogger(__name__)

async def process_email(self, task: EmailTask) -> EmailTask:

"""Process a single email through the complete workflow"""

try:

task.status = ProcessingStatus.PROCESSING

self.logger.info(f"Processing email task {task.id}")

# Step 1: Summarize and translate

summary_translation = await self._summarize_and_translate(

task.content, task.target_language

)

# Step 2: Categorize email

category = await self._categorize_email(task.content, task.subject)

# Step 3: Extract action items

action_items = await self._extract_action_items(task.content)

# Step 4: Generate response suggestions

response_suggestions = await self._generate_response_suggestions(

task.content, task.sender

)

task.result = {

"summary_translation": summary_translation,

"category": category,

"action_items": action_items,

"response_suggestions": response_suggestions,

"processed_at": datetime.utcnow().isoformat()

}

task.status = ProcessingStatus.COMPLETED

self.logger.info(f"Successfully processed email task {task.id}")

except Exception as e:

task.status = ProcessingStatus.FAILED

task.error_message = str(e)

self.logger.error(f"Failed to process email task {task.id}: {e}")

return task

async def _summarize_and_translate(self, text: str, target_language: str) -> str:

instructions = f"""

Please perform the following tasks:

1. Summarize the email content in 2-3 sentences

2. Translate the summary to {target_language}

Format your response as:

Summary: [original summary]

Translation: [translated summary]

"""

return await self.ai_service.process_text(text, instructions)

async def _categorize_email(self, content: str, subject: str) -> str:

instructions = """

Categorize this email into one of the following categories:

- URGENT: Requires immediate attention

- MEETING: Meeting requests or scheduling

- SUPPORT: Customer support or technical issues

- MARKETING: Promotional or marketing content

- PERSONAL: Personal communication

- OTHER: Doesn't fit other categories

Return only the category name.

"""

return await self.ai_service.process_text(f"Subject: {subject}\n\n{content}", instructions)

async def _extract_action_items(self, content: str) -> List[str]:

instructions = """

Extract any action items or tasks mentioned in this email.

Return them as a JSON array of strings.

If no action items are found, return an empty array.

"""

result = await self.ai_service.process_text(content, instructions)

try:

import json

return json.loads(result)

except json.JSONDecodeError:

return []

async def _generate_response_suggestions(self, content: str, sender: str) -> List[str]:

instructions = f"""

Generate 3 brief, professional response suggestions for this email from {sender}.

Return them as a JSON array of strings.

Each suggestion should be no more than 50 words.

"""

result = await self.ai_service.process_text(content, instructions)

try:

import json

return json.loads(result)

except json.JSONDecodeError:

return []

Async Batch Processing

class BatchProcessor:

def __init__(self, workflow_engine: EmailWorkflowEngine, max_concurrent: int = 10):

self.workflow_engine = workflow_engine

self.max_concurrent = max_concurrent

self.semaphore = asyncio.Semaphore(max_concurrent)

async def process_batch(self, tasks: List[EmailTask]) -> List[EmailTask]:

"""Process multiple email tasks concurrently"""

async def process_with_semaphore(task: EmailTask) -> EmailTask:

async with self.semaphore:

return await self.workflow_engine.process_email(task)

# Process all tasks concurrently with rate limiting

results = await asyncio.gather(

*[process_with_semaphore(task) for task in tasks],

return_exceptions=True

)

# Handle exceptions

processed_tasks = []

for i, result in enumerate(results):

if isinstance(result, Exception):

tasks[i].status = ProcessingStatus.FAILED

tasks[i].error_message = str(result)

processed_tasks.append(tasks[i])

else:

processed_tasks.append(result)

return processed_tasks

Usage Example

async def main():

# Configuration

openai_config = AIServiceConfig.from_env("openai")

# Sample email data

sample_emails = [

EmailTask(

id="email_001",

content="""

Hi team,

We've noticed a significant increase in user engagement on our new mobile app.

The latest update, which introduced the dark mode feature, has been particularly

well-received. We need to schedule a meeting this week to discuss the roadmap

for the next quarter and allocate resources for the upcoming features.

Please let me know your availability for Thursday or Friday.

Best regards,

John

""",

sender="john@company.com",

subject="Mobile App Performance Update",

target_language="Japanese"

),

EmailTask(

id="email_002",

content="""

Dear Support Team,

I'm experiencing issues with the payment gateway integration. Customers are

reporting failed transactions, and our conversion rate has dropped by 15%

since yesterday. This needs immediate attention as it's affecting our revenue.

Error logs are attached. Please prioritize this issue.

Thanks,

Sarah

""",

sender="sarah@company.com",

subject="URGENT: Payment Gateway Issues",

target_language="Spanish"

)

]

# Process emails

async with OpenAIService(openai_config) as ai_service:

workflow_engine = EmailWorkflowEngine(ai_service)

batch_processor = BatchProcessor(workflow_engine, max_concurrent=5)

processed_emails = await batch_processor.process_batch(sample_emails)

# Display results

for email in processed_emails:

print(f"\n--- Email {email.id} ---")

print(f"Status: {email.status.value}")

if email.status == ProcessingStatus.COMPLETED:

print(f"Category: {email.result['category']}")

print(f"Summary & Translation: {email.result['summary_translation']}")

print(f"Action Items: {email.result['action_items']}")

elif email.status == ProcessingStatus.FAILED:

print(f"Error: {email.error_message}")

if __name__ == "__main__":

asyncio.run(main())

Advanced Workflow Patterns

1. Retry Mechanism with Exponential Backoff

import asyncio

from typing import Callable, Any

class RetryHandler:

def __init__(self, max_retries: int = 3, base_delay: float = 1.0):

self.max_retries = max_retries

self.base_delay = base_delay

async def execute_with_retry(self, func: Callable, *args, **kwargs) -> Any:

for attempt in range(self.max_retries + 1):

try:

return await func(*args, **kwargs)

except Exception as e:

if attempt == self.max_retries:

raise e

delay = self.base_delay * (2 ** attempt)

print(f"Attempt {attempt + 1} failed: {e}. Retrying in {delay}s...")

await asyncio.sleep(delay)

2. Circuit Breaker Pattern

import time

from enum import Enum

class CircuitState(Enum):

CLOSED = "closed"

OPEN = "open"

HALF_OPEN = "half_open"

class CircuitBreaker:

def __init__(self, failure_threshold: int = 5, recovery_timeout: int = 60):

self.failure_threshold = failure_threshold

self.recovery_timeout = recovery_timeout

self.failure_count = 0

self.last_failure_time = None

self.state = CircuitState.CLOSED

async def call(self, func: Callable, *args, **kwargs) -> Any:

if self.state == CircuitState.OPEN:

if time.time() - self.last_failure_time < self.recovery_timeout:

raise Exception("Circuit breaker is OPEN")

else:

self.state = CircuitState.HALF_OPEN

try:

result = await func(*args, **kwargs)

self._on_success()

return result

except Exception as e:

self._on_failure()

raise e

def _on_success(self):

self.failure_count = 0

self.state = CircuitState.CLOSED

def _on_failure(self):

self.failure_count += 1

self.last_failure_time = time.time()

if self.failure_count >= self.failure_threshold:

self.state = CircuitState.OPEN

3. Workflow Monitoring and Metrics

import time

from dataclasses import dataclass, field

from typing import Dict, List

from collections import defaultdict

@dataclass

class WorkflowMetrics:

total_processed: int = 0

successful: int = 0

failed: int = 0

avg_processing_time: float = 0.0

processing_times: List[float] = field(default_factory=list)

error_counts: Dict[str, int] = field(default_factory=lambda: defaultdict(int))

def add_processing_time(self, duration: float):

self.processing_times.append(duration)

self.avg_processing_time = sum(self.processing_times) / len(self.processing_times)

def record_success(self, duration: float):

self.total_processed += 1

self.successful += 1

self.add_processing_time(duration)

def record_failure(self, error: str, duration: float):

self.total_processed += 1

self.failed += 1

self.error_counts[error] += 1

self.add_processing_time(duration)

def get_success_rate(self) -> float:

if self.total_processed == 0:

return 0.0

return (self.successful / self.total_processed) * 100

class MonitoredWorkflowEngine(EmailWorkflowEngine):

def __init__(self, ai_service: AIService):

super().__init__(ai_service)

self.metrics = WorkflowMetrics()

async def process_email(self, task: EmailTask) -> EmailTask:

start_time = time.time()

try:

result = await super().process_email(task)

duration = time.time() - start_time

if result.status == ProcessingStatus.COMPLETED:

self.metrics.record_success(duration)

else:

self.metrics.record_failure(result.error_message or "Unknown error", duration)

return result

except Exception as e:

duration = time.time() - start_time

self.metrics.record_failure(str(e), duration)

raise

def get_metrics_summary(self) -> Dict[str, Any]:

return {

"total_processed": self.metrics.total_processed,

"success_rate": f"{self.metrics.get_success_rate():.2f}%",

"avg_processing_time": f"{self.metrics.avg_processing_time:.2f}s",

"error_distribution": dict(self.metrics.error_counts)

}

Production Deployment Considerations

1. Containerized Deployment

# Dockerfile

FROM python:3.11-slim

WORKDIR /app

# Install dependencies

COPY requirements.txt .

RUN pip install --no-cache-dir -r requirements.txt

# Copy application code

COPY . .

# Set environment variables

ENV PYTHONPATH=/app

ENV WORKERS=4

# Expose port

EXPOSE 8000

# Run application

CMD ["gunicorn", "--workers", "$WORKERS", "--bind", "0.0.0.0:8000", "main:app"]

# docker-compose.yml

version: '3.8'

services:

ai-workflow:

build: .

ports:

- "8000:8000"

environment:

- OPENAI_API_KEY=${OPENAI_API_KEY}

- CLAUDE_API_KEY=${CLAUDE_API_KEY}

- REDIS_URL=redis://redis:6379/0

depends_on:

- redis

- postgres

volumes:

- ./logs:/app/logs

redis:

image: redis:7-alpine

ports:

- "6379:6379"

postgres:

image: postgres:15

environment:

- POSTGRES_DB=workflow_db

- POSTGRES_USER=user

- POSTGRES_PASSWORD=password

volumes:

- postgres_data:/var/lib/postgresql/data

celery-worker:

build: .

command: celery -A main.celery worker --loglevel=info

environment:

- OPENAI_API_KEY=${OPENAI_API_KEY}

- CLAUDE_API_KEY=${CLAUDE_API_KEY}

- REDIS_URL=redis://redis:6379/0

depends_on:

- redis

- postgres

volumes:

postgres_data:

2. Kubernetes Deployment

# k8s-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: ai-workflow

spec:

replicas: 3

selector:

matchLabels:

app: ai-workflow

template:

metadata:

labels:

app: ai-workflow

spec:

containers:

- name: ai-workflow

image: your-registry/ai-workflow:latest

ports:

- containerPort: 8000

env:

- name: OPENAI_API_KEY

valueFrom:

secretKeyRef:

name: ai-api-keys

key: openai-key

- name: CLAUDE_API_KEY

valueFrom:

secretKeyRef:

name: ai-api-keys

key: claude-key

resources:

requests:

memory: "512Mi"

cpu: "250m"

limits:

memory: "1Gi"

cpu: "500m"

livenessProbe:

httpGet:

path: /health

port: 8000

initialDelaySeconds: 30

periodSeconds: 10

readinessProbe:

httpGet:

path: /ready

port: 8000

initialDelaySeconds: 5

periodSeconds: 5

---

apiVersion: v1

kind: Service

metadata:

name: ai-workflow-service

spec:

selector:

app: ai-workflow

ports:

- protocol: TCP

port: 80

targetPort: 8000

type: LoadBalancer

3. Monitoring and Observability

from prometheus_client import Counter, Histogram, Gauge, start_http_server

# Metrics

processed_emails = Counter('emails_processed_total', 'Total processed emails', ['status'])

processing_time = Histogram('email_processing_duration_seconds', 'Email processing duration')

active_tasks = Gauge('active_email_tasks', 'Number of active email processing tasks')

api_calls = Counter('ai_api_calls_total', 'Total AI API calls', ['service', 'model'])

class MetricsMiddleware:

def __init__(self, workflow_engine: EmailWorkflowEngine):

self.workflow_engine = workflow_engine

async def process_email_with_metrics(self, task: EmailTask) -> EmailTask:

active_tasks.inc()

with processing_time.time():

try:

result = await self.workflow_engine.process_email(task)

processed_emails.labels(status=result.status.value).inc()

return result

finally:

active_tasks.dec()

# Start metrics server

start_http_server(8001)

Cost Optimization Strategies

1. Model Selection Based on Task Complexity

class SmartModelSelector:

def __init__(self):

self.model_costs = {

"gpt-4": 0.03, # per 1K tokens

"gpt-3.5-turbo": 0.002,

"claude-3-haiku": 0.00025,

"claude-3-sonnet": 0.003

}

self.complexity_thresholds = {

"simple": 100, # tokens

"medium": 500,

"complex": 1500

}

def select_model(self, task_complexity: str, content_length: int) -> str:

if task_complexity == "simple" and content_length < self.complexity_thresholds["simple"]:

return "claude-3-haiku" # Most cost-effective

elif task_complexity == "medium":

return "gpt-3.5-turbo"

else:

return "gpt-4" # Best performance for complex tasks

def estimate_cost(self, model: str, input_tokens: int, output_tokens: int) -> float:

total_tokens = input_tokens + output_tokens

cost_per_token = self.model_costs[model] / 1000

return total_tokens * cost_per_token

2. Caching Strategy

import hashlib

import json

from typing import Optional

class ResponseCache:

def __init__(self, redis_client):

self.redis = redis_client

self.cache_ttl = 3600 # 1 hour

def _generate_key(self, content: str, instructions: str, model: str) -> str:

"""Generate a unique cache key for the request"""

data = f"{content}:{instructions}:{model}"

return f"ai_cache:{hashlib.md5(data.encode()).hexdigest()}"

async def get_cached_response(self, content: str, instructions: str, model: str) -> Optional[str]:

"""Retrieve cached response if available"""

key = self._generate_key(content, instructions, model)

cached = await self.redis.get(key)

return cached.decode() if cached else None

async def cache_response(self, content: str, instructions: str, model: str, response: str):

"""Cache the AI response"""

key = self._generate_key(content, instructions, model)

await self.redis.setex(key, self.cache_ttl, response)

class CachedAIService(OpenAIService):

def __init__(self, config: AIServiceConfig, cache: ResponseCache):

super().__init__(config)

self.cache = cache

async def process_text(self, text: str, instructions: str) -> str:

# Check cache first

cached_response = await self.cache.get_cached_response(text, instructions, self.config.model)

if cached_response:

return cached_response

# If not cached, make API call

response = await super().process_text(text, instructions)

# Cache the response

await self.cache.cache_response(text, instructions, self.config.model, response)

return response

Error Handling and Resilience

1. Comprehensive Error Handling

from enum import Enum

from typing import Union

class ErrorType(Enum):

RATE_LIMIT = "rate_limit"

API_ERROR = "api_error"

TIMEOUT = "timeout"

VALIDATION_ERROR = "validation_error"

NETWORK_ERROR = "network_error"

class WorkflowError(Exception):

def __init__(self, error_type: ErrorType, message: str, details: dict = None):

self.error_type = error_type

self.message = message

self.details = details or {}

super().__init__(message)

class ResilientWorkflowEngine(EmailWorkflowEngine):

async def process_email(self, task: EmailTask) -> EmailTask:

try:

return await super().process_email(task)

except aiohttp.ClientResponseError as e:

if e.status == 429: # Rate limit

raise WorkflowError(

ErrorType.RATE_LIMIT,

"Rate limit exceeded",

{"retry_after": e.headers.get("retry-after")}

)

elif 400 <= e.status < 500:

raise WorkflowError(

ErrorType.API_ERROR,

f"API error: {e.status}",

{"status_code": e.status, "response": str(e)}

)

else:

raise WorkflowError(

ErrorType.NETWORK_ERROR,

"Network error occurred",

{"status_code": e.status}

)

except asyncio.TimeoutError:

raise WorkflowError(

ErrorType.TIMEOUT,

"Request timed out",

{"timeout_duration": 30}

)

except Exception as e:

raise WorkflowError(

ErrorType.API_ERROR,

f"Unexpected error: {str(e)}",

{"original_error": type(e).__name__}

)

Performance Optimization

1. Request Batching

class BatchedAIService:

def __init__(self, base_service: AIService, batch_size: int = 10):

self.base_service = base_service

self.batch_size = batch_size

self.pending_requests = []

async def add_request(self, text: str, instructions: str) -> str:

# Add to batch

request_future = asyncio.Future()

self.pending_requests.append((text, instructions, request_future))

# Process batch when full

if len(self.pending_requests) >= self.batch_size:

await self._process_batch()

return await request_future

async def _process_batch(self):

if not self.pending_requests:

return

batch = self.pending_requests[:self.batch_size]

self.pending_requests = self.pending_requests[self.batch_size:]

# Process all requests concurrently

tasks = [

self.base_service.process_text(text, instructions)

for text, instructions, _ in batch

]

results = await asyncio.gather(*tasks, return_exceptions=True)

# Set results for futures

for i, (_, _, future) in enumerate(batch):

result = results[i]

if isinstance(result, Exception):

future.set_exception(result)

else:

future.set_result(result)

Conclusion

Building robust AI-powered automation workflows requires careful consideration of architecture, security, performance, and cost optimization. Key takeaways:

Best Practices

- Modular Design: Create reusable, testable components

- Security First: Implement proper API key management and rotation

- Async Processing: Use async/await for high-throughput operations

- Error Resilience: Implement comprehensive error handling and retry mechanisms

- Monitoring: Track performance metrics and system health

- Cost Optimization: Select appropriate models and implement caching

Future Considerations

- Multi-Agent Systems: Coordinate multiple AI agents for complex workflows

- Real-time Processing: Stream processing for immediate response requirements

- Edge Computing: Deploy workflows closer to data sources

- MLOps Integration: Combine with traditional ML pipelines for hybrid solutions

The combination of generative AI APIs with robust automation frameworks opens unprecedented opportunities for business process optimization. Start with simple use cases, establish solid foundations, and gradually expand to more complex workflows.

Resources and Next Steps

- API Documentation: OpenAI, Anthropic, Google AI Platform docs

- Async Python: FastAPI, asyncio, aiohttp documentation

- Monitoring Tools: Prometheus, Grafana, Datadog

- Deployment Platforms: Docker, Kubernetes, AWS Lambda

- Message Queues: Redis, RabbitMQ, Apache Kafka

Begin by identifying repetitive tasks in your organization that could benefit from AI automation, then apply the patterns and practices outlined in this article to build production-ready solutions.