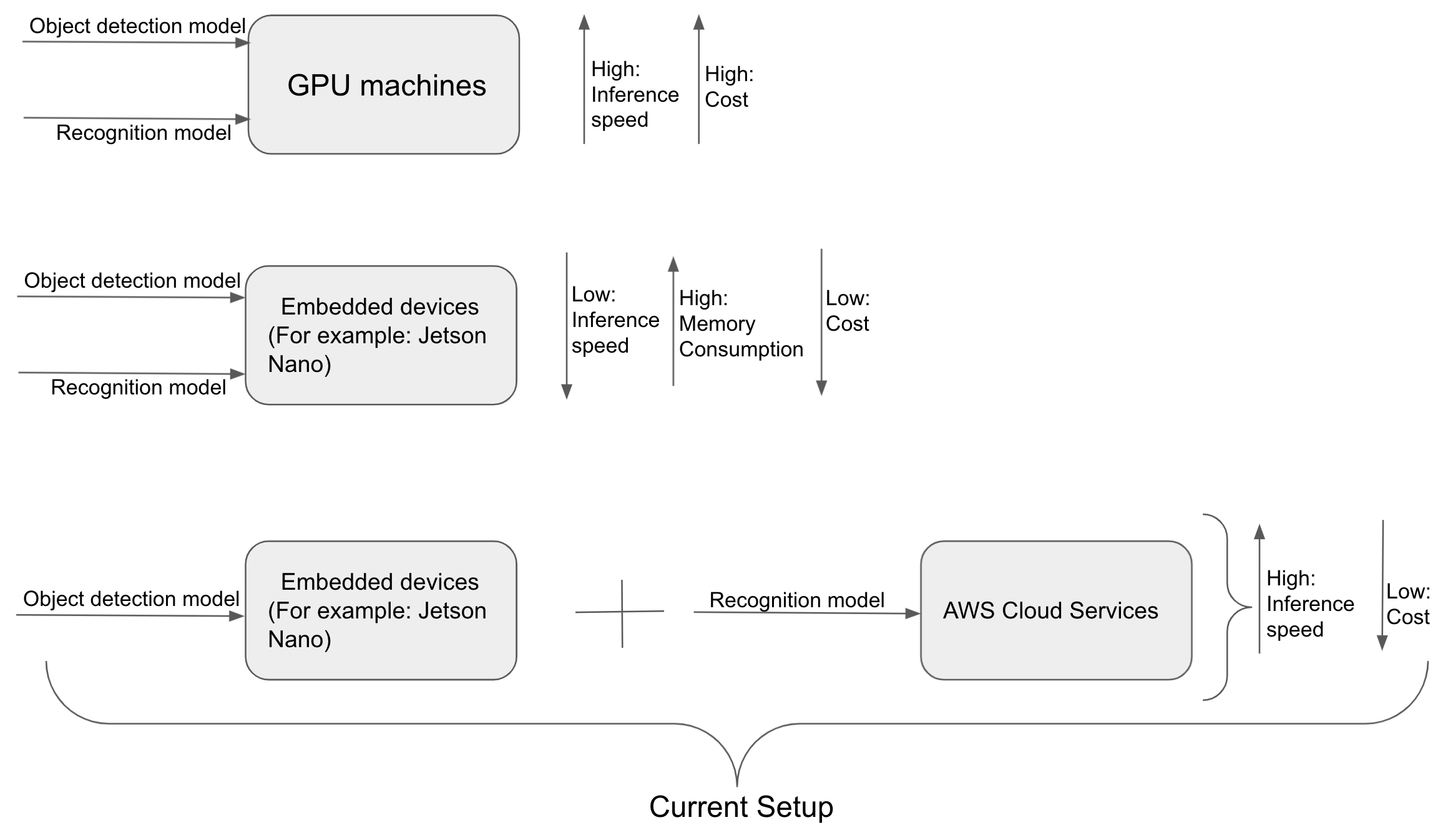

Running machine learning models on GPU devices or embedded devices is often the best option, but it is also very costly. The following figure illustrates model deployment on an embedded device and AWS:

Therefore, I used the NVIDIA Jetson Nano Developer Kit B01 to run an object detection model (PyTorch) and a recognition model (TensorFlow-Keras).

I deployed an optimized model on the Jetson Nano, but it is memory hungry, and it is very difficult to run both models on the same device.

Hence, I decided to use the AWS cloud to deploy at least one model. Running the detection model on the Jetson Nano and the recognition model on Amazon Web Services (AWS) helped me improve the performance and speed of the overall system.

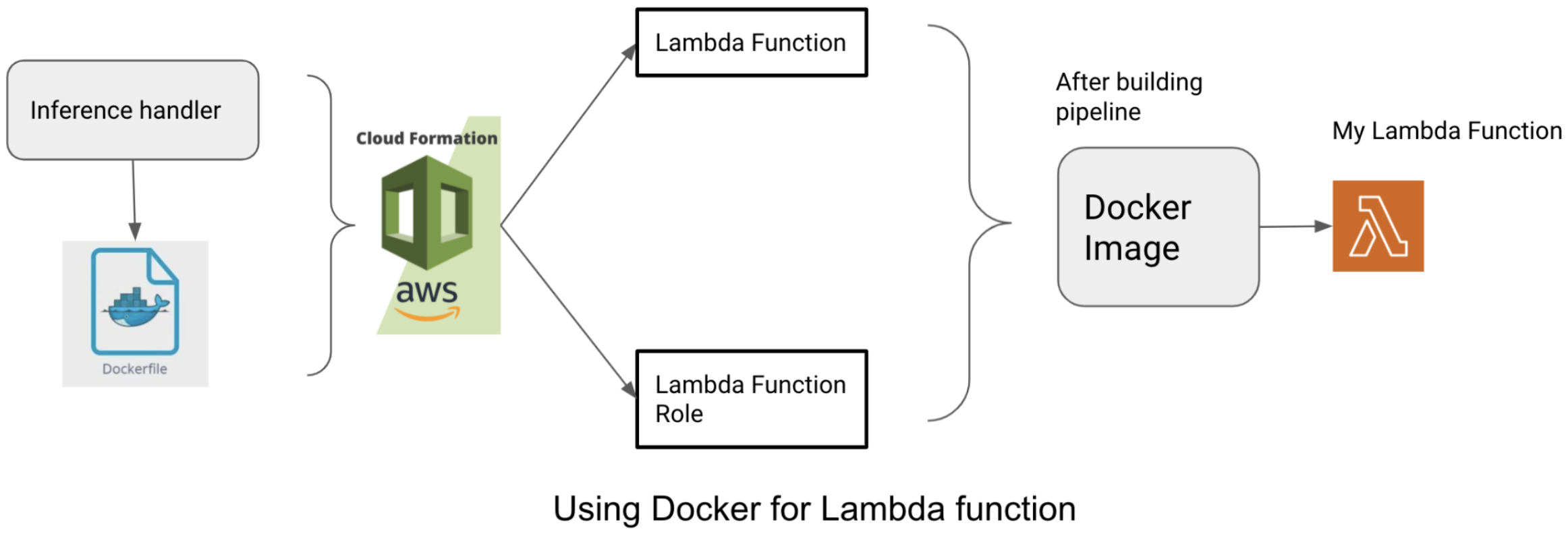
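To make the split concrete, here is a minimal sketch of how the Jetson Nano side could hand a cropped detection to the recognition model on AWS. It is an illustration under assumptions, not the code used in this project: the payload fields `detection_id` and `image_b64`, and the function names, are hypothetical. The client is passed in, so with boto3 it would be created as `boto3.client("lambda")`.

```python
import base64
import json


def build_payload(image_bytes: bytes, detection_id: str) -> bytes:
    """Encode one cropped detection as a JSON payload (hypothetical field names)."""
    body = {
        "detection_id": detection_id,
        # JSON cannot carry raw bytes, so the crop is base64-encoded.
        "image_b64": base64.b64encode(image_bytes).decode("ascii"),
    }
    return json.dumps(body).encode("utf-8")


def recognize(lambda_client, function_name: str, image_bytes: bytes, detection_id: str) -> dict:
    """Send one crop to the recognition Lambda and return its decoded JSON reply."""
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",  # synchronous call: wait for the result
        Payload=build_payload(image_bytes, detection_id),
    )
    # The reply body is a stream; read it and parse the JSON it contains.
    return json.loads(response["Payload"].read())
```

Keeping the payload builder separate from the network call makes it easy to check the encoding on the device without touching AWS.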

For this, I used an AWS Lambda function, a Docker repository, and CloudFormation. The Lambda function and the Docker repository are created automatically by an AWS CloudFormation pipeline, which is defined in a YAML file. JSON can be used as well, but YAML has several advantages over JSON when working with CloudFormation (for example, it supports comments).

Creating a Dockerfile:

This is just a sample Dockerfile.

```dockerfile
# Pull the base image with Python 3.8 as the runtime for your Lambda
FROM public.ecr.aws/lambda/python:3.8

# Use build arguments to store variables
ARG AWS_CONTAINER_CREDENTIALS_RELATIVE_URI
ARG AWS_DEFAULT_REGION

# app.py is the machine learning inference script
COPY app.py ./

# Copy the requirements file into the image, then install the Python requirements
COPY requirements.txt ./
RUN python3.8 -m pip install -r requirements.txt

# Set the CMD to your Lambda function handler
CMD ["app.handler"]
```

This Dockerfile will produce an image once we run the CloudFormation pipeline, which is explained in the next section.

If you need to know more about Docker, please check here.
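The `CMD ["app.handler"]` line means Lambda will look for a function named `handler` inside `app.py`. The sketch below shows roughly what that file could look like. The `predict` stub and the `image_b64` event field are assumptions made for illustration; a real script would load the recognition model and run inference instead.

```python
import base64
import json


def predict(image_bytes: bytes) -> dict:
    """Placeholder for the recognition model (hypothetical stub)."""
    # A real script would load the model once at import time and run
    # inference here; this stub only reports the size of the input.
    return {"label": "unknown", "num_bytes": len(image_bytes)}


def handler(event, context):
    """Entry point referenced by CMD ["app.handler"] in the Dockerfile."""
    try:
        # The image is assumed to arrive base64-encoded in the event.
        image_bytes = base64.b64decode(event["image_b64"])
    except (KeyError, TypeError, ValueError) as exc:
        # Missing field or malformed base64: report a client error.
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}
    result = predict(image_bytes)
    return {"statusCode": 200, "body": json.dumps(result)}
```

Because the handler is a plain function, it can be called locally with a dictionary before the image is ever built.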

Creating a Pipeline Template using Cloudformation:

AWS provides very good documentation for each service. Therefore, I will use the AWS::Lambda::Function documentation to create the Lambda function.

We can set up the Parameters section of the template as below:

Note: This way we can set a default name for each option, for example the application name, S3 bucket name, Docker repository name, Lambda function name, etc. If we don't set the names in the Parameters section, the pipeline will generate long, hard-to-read names when it runs.

```yaml
AWSTemplateFormatVersion: '2010-09-09'

Parameters:
  ApplicationName:
    Default: project_name_abc
    Type: String
    Description: Enter the name of your application
  CodeBuildImage:
    Default: 'aws/codebuild/standard:2.0'
    Type: String
    Description: Name of CodeBuild image.
  SourceBranch:
    Default: 'master'
    Type: String
    Description: Name of the branch.
  Environment:
    Default: 'development'  # or 'production', depending on the environment
    Type: String
    Description: The environment to build.
  # Named MyLambda because the function name uses ${MyLambda}, and a parameter
  # cannot share its logical name with the LambdaFunction resource.
  MyLambda:
    Default: 'lambda-function-name'
    Type: String
    Description: The predict lambda name.
```
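To preview the names these defaults lead to, the `${...}` substitution can be imitated in a few lines of Python. This is only a rough imitation of `!Sub` for plain parameter names, written for illustration; it does not handle pseudo parameters such as `${AWS::Region}` and is not part of CloudFormation.

```python
import re

# Default values taken from the Parameters section.
DEFAULTS = {
    "ApplicationName": "project_name_abc",
    "Environment": "development",
}


def sub(template: str, values: dict) -> str:
    """Replace each ${Name} placeholder with its value (simple names only)."""
    return re.sub(r"\$\{(\w+)\}", lambda m: values[m.group(1)], template)


print(sub("${ApplicationName}-${Environment}", DEFAULTS))
# prints: project_name_abc-development
```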

Once the basic snippet is in place, we can create the Resources for the pipeline.

Some important points to note before writing the Resources:

1. The ECR repository name should follow the naming convention.

2. The name must start with a letter and can only contain lowercase letters, numbers, hyphens, underscores, and forward slashes.

3. It is good practice to define an AWS::IAM::Role for every resource.

4. Amazon CloudWatch is a monitoring and management service that provides data and actionable insights for AWS, hybrid, and on-premises applications and infrastructure resources. CloudWatch enables you to monitor your complete stack (applications, infrastructure, and services) and leverage alarms, logs, and events data to take automated actions.

5. The AWS::CodeBuild::Project resource configures how AWS CodeBuild builds the source code.

6. Provide a buildspec.yaml file at the root level of the source repository.

7. The AWS::CodePipeline::Pipeline resource creates a pipeline that describes how the release process works when a piece of software changes.

8. The AWS::Lambda::Function resource creates a Lambda function. The maximum memory available to the Lambda function at runtime is 10 GB.
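The repository naming rule from the list above is easy to get wrong, so it can help to check a name before running the pipeline. The sketch below encodes only the rule as stated here (start with a letter; lowercase letters, numbers, hyphens, underscores, and forward slashes). The function name is hypothetical, and ECR enforces additional constraints, such as a length limit, that are not checked.

```python
import re

# First character: a lowercase letter. Remaining characters: lowercase
# letters, digits, underscores, hyphens, or forward slashes.
_REPO_NAME = re.compile(r"[a-z][a-z0-9_\-/]*")


def is_valid_repository_name(name: str) -> bool:
    """Return True if the name satisfies the rule stated in the list above."""
    return _REPO_NAME.fullmatch(name) is not None


print(is_valid_repository_name("project_name_abc-development/repository_name"))
# prints: True
```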

```yaml
Resources:
  SourceRepository:
    Type: 'AWS::CodeCommit::Repository'
    Properties:
      RepositoryName: !Sub '${ApplicationName}-${Environment}'
      RepositoryDescription: !Sub 'Source code for ${ApplicationName}'

  MyRepository:
    Type: AWS::ECR::Repository
    Properties:
      RepositoryName: !Sub '${ApplicationName}-${Environment}/repository_name'

  CodeBuildRole:
    Type: 'AWS::IAM::Role'
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Action:
              - 'sts:AssumeRole'
            Effect: Allow
            Principal:
              Service:
                - codebuild.amazonaws.com

  CodeBuildPolicy:
    Type: 'AWS::IAM::Policy'
    Properties:
      PolicyName: CodeBuildPolicy
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          # Pushing the image to ECR additionally requires ECR permissions (not shown).
          - Action:
              - 'logs:CreateLogGroup'
              - 'logs:CreateLogStream'
              - 'logs:PutLogEvents'
            Resource: '*'
            Effect: Allow
      Roles:
        - !Ref CodeBuildRole

  MyContainerBuild:
    Type: 'AWS::CodeBuild::Project'
    Properties:
      Artifacts:
        Type: CODEPIPELINE
      Environment:
        ComputeType: BUILD_GENERAL1_SMALL  # Use up to 3 GB memory and 2 vCPUs for builds.
        Image: !Ref CodeBuildImage
        Type: LINUX_CONTAINER
        PrivilegedMode: true
        EnvironmentVariables:
          - Name: REPOSITORY_URI  # It can be set in the environment variables (not covered in this article)
            Value: !Sub '${AWS::AccountId}.dkr.ecr.${AWS::Region}.amazonaws.com/${MyRepository}'
          - Name: ENVIRONMENT  # Not covered in this article
            Value: !Sub '${Environment}'
      Name: !Sub '${ApplicationName}-${Environment}-MyContainer-Build'
      ServiceRole: !GetAtt
        - CodeBuildRole
        - Arn
      Source:
        Type: CODEPIPELINE
        BuildSpec: 'buildspec.yaml'

  AppPipeline:
    Type: 'AWS::CodePipeline::Pipeline'
    Properties:
      Name: !Sub '${ApplicationName}-${Environment}-Pipeline'
      ArtifactStore:
        Type: S3
        # ArtifactBucketStore (an S3 bucket) is not defined in this excerpt.
        Location: !Ref ArtifactBucketStore
      RoleArn: !GetAtt
        - CodePipelineRole
        - Arn
      Stages:
        - Name: Source
          Actions:
            - ActionTypeId:
                Category: Source
                Owner: AWS
                Version: '1'
                Provider: CodeCommit
              Configuration:
                BranchName: !Ref SourceBranch
                RepositoryName: !GetAtt
                  - SourceRepository
                  - Name
              OutputArtifacts:
                - Name: SourceRepo
              RunOrder: 1
              Name: Source
        - Name: Build-Containers
          Actions:
            - InputArtifacts:
                - Name: SourceRepo
              Name: Build-My-Container
              ActionTypeId:
                Category: Build
                Owner: AWS
                Version: '1'
                Provider: CodeBuild
              Configuration:
                ProjectName: !Ref MyContainerBuild
              RunOrder: 1

  CodePipelineRole:
    Type: 'AWS::IAM::Role'
    Properties:
      Policies:
        - PolicyName: DefaultPolicy
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Action:
                  - 'codecommit:CancelUploadArchive'
                  - 'codecommit:GetBranch'
                  - 'codecommit:GetCommit'
                Resource: '*'
                Effect: Allow
              - Action:
                  - 'cloudwatch:*'
                  - 'iam:PassRole'
                Resource: '*'
                Effect: Allow
              - Action:
                  - 'lambda:InvokeFunction'
                  - 'lambda:ListFunctions'
                Resource: '*'
                Effect: Allow
              - Action:
                  - 'codebuild:BatchGetBuilds'
                  - 'codebuild:StartBuild'
                Resource: '*'
                Effect: Allow
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Action:
              - 'sts:AssumeRole'
            Effect: Allow
            Principal:
              Service:
                - codepipeline.amazonaws.com

  LambdaFunctionRole:
    Type: 'AWS::IAM::Role'
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - lambda.amazonaws.com
            Action:
              - 'sts:AssumeRole'
      Path: "/"
      Policies:
        - PolicyName: LambdaFunctionPolicy
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Action:
                  - 'logs:CreateLogGroup'
                  - 'logs:CreateLogStream'
                  - 'logs:PutLogEvents'
                Resource: '*'
                Effect: Allow
              - Action:
                  - 'lambda:InvokeFunction'
                  - 'lambda:InvokeAsync'
                Resource: '*'
                Effect: Allow

  LambdaFunction:
    Type: 'AWS::Lambda::Function'
    Properties:
      # MyLambda is expected to be a template parameter.
      FunctionName: !Sub '${ApplicationName}-${MyLambda}-${Environment}'
      MemorySize: 4096
      Timeout: 500
      Role: !GetAtt LambdaFunctionRole.Arn
      Code:
        ImageUri: !Sub '${AWS::AccountId}.dkr.ecr.${AWS::Region}.amazonaws.com/${MyRepository}:latest'
      PackageType: Image
      Environment:
        Variables:
          # BucketName is not defined in this excerpt.
          S3BUCKETNAME: !Sub '${BucketName}'
          S3BUCKETREGION: !Sub '${AWS::Region}'
```

Once the CloudFormation template is ready, it is time to create the stack.

We need to choose the "Upload a template file" option and select the pipeline.yml file.

Provide a stack name. A stack name can include letters (A-Z and a-z), numbers (0-9), and dashes (-).

We can also see all the parameters we set while creating the pipeline.

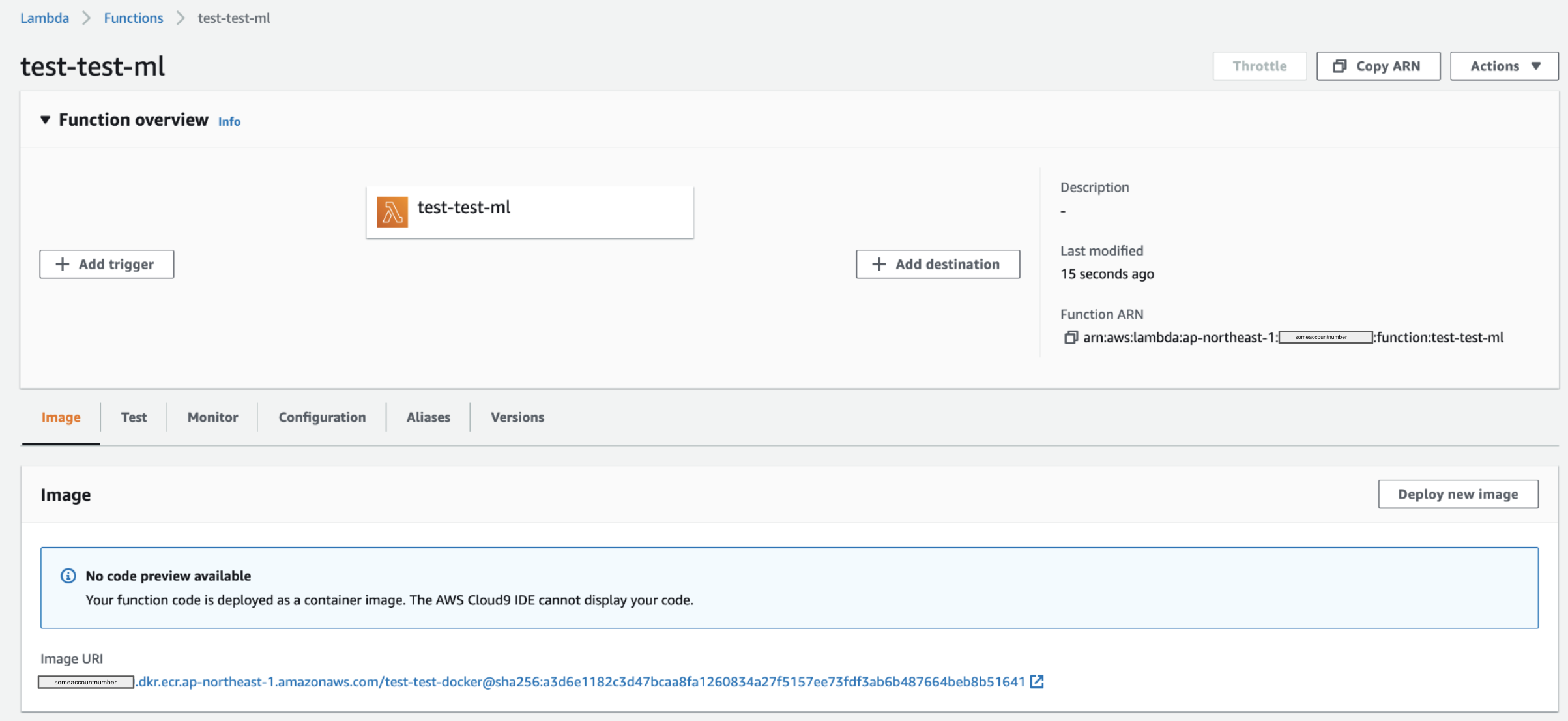

And finally, let's create the stack. If there are no errors, a Docker image will be created, which can be accessed in Elastic Container Registry (ECR).
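The stack can also be created from a script instead of the console. The following is a minimal sketch, assuming boto3 is installed and the client is created with `boto3.client("cloudformation")`; the helper names are hypothetical. `CAPABILITY_IAM` is included because the template creates IAM roles, which CloudFormation requires you to acknowledge explicitly.

```python
def build_create_stack_args(stack_name: str, template_body: str, parameters: dict) -> dict:
    """Build the keyword arguments for the CloudFormation create_stack call."""
    return {
        "StackName": stack_name,
        "TemplateBody": template_body,
        # CloudFormation expects parameters as a list of key/value records.
        "Parameters": [
            {"ParameterKey": key, "ParameterValue": value}
            for key, value in parameters.items()
        ],
        # Required because the template creates IAM roles.
        "Capabilities": ["CAPABILITY_IAM"],
    }


def create_stack(cfn_client, stack_name: str, template_path: str, parameters: dict) -> str:
    """Read the template file, create the stack, and return the new stack ID."""
    with open(template_path, encoding="utf-8") as handle:
        args = build_create_stack_args(stack_name, handle.read(), parameters)
    return cfn_client.create_stack(**args)["StackId"]
```

A call could look like `create_stack(client, "my-pipeline-stack", "pipeline.yml", {"Environment": "production"})`, where the stack name is only an example.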

Once the Lambda function has been created, we need to manually deploy the newest version of the Docker image from the AWS console by selecting the latest image. Currently, the CloudFormation pipeline build cannot deploy the image automatically.
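If clicking through the console becomes tedious, the same manual step can be scripted. This is a sketch rather than part of the pipeline above: it assumes boto3 with a client from `boto3.client("lambda")`, and the helper names are hypothetical. The image URI follows the same pattern as the `ImageUri` in the template.

```python
def image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    """Build the ECR image URI in the same form the template uses."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def deploy_image(lambda_client, function_name: str, uri: str) -> str:
    """Point the Lambda function at the given image and return its ARN."""
    response = lambda_client.update_function_code(
        FunctionName=function_name,
        ImageUri=uri,
    )
    return response["FunctionArn"]
```

Running this after each successful build would keep the function on the most recently pushed image.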

That's all for now!