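Introduction

Last time, I explained how to perform VTF (Vertex Texture Fetch).

Deforming an object with VTF (Vertex Texture Fetch) between Blender and Unity

Put simply, that article wrote the vertex data of a static object into a texture.

It follows that if we prepare the same data for every frame of a motion, we can store animation data as well.

Preparing many textures and switching between them is cumbersome, so the frames are usually packed into a single texture.

Such a texture is called a VAT (Vertex Animation Texture).

In this article we will create a VAT in Blender and read it back in Unity.

The procedure is almost identical to the VTF one, so it may help to read that article first.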

Blender

Creating the texture

The texture class is reused from the previous article.

The image format is EXR.

import bpy

import numpy as np

class TextureClass:

def __init__(self, texture_name, width, height):

self.image = bpy.data.images.get(texture_name)

if not self.image:

self.image = bpy.data.images.new(texture_name, width=width, height=height, alpha=True, float_buffer=True)

elif self.image.size[0] != width or self.image.size[1] != height:

self.image.scale(width, height)

self.image.file_format = 'OPEN_EXR'

self.point = np.array(self.image.pixels[:])

self.point.resize(height, width * 4)

self.point[:] = 0

self.point_R = self.point[::, 0::4]

self.point_G = self.point[::, 1::4]

self.point_B = self.point[::, 2::4]

self.point_A = self.point[::, 3::4]

def SetPixel(self, py, px, r, g, b, a):

self.point_R[py][px] = r

self.point_G[py][px] = g

self.point_B[py][px] = b

self.point_A[py][px] = a

def Export(self):

self.image.pixels = self.point.flatten()

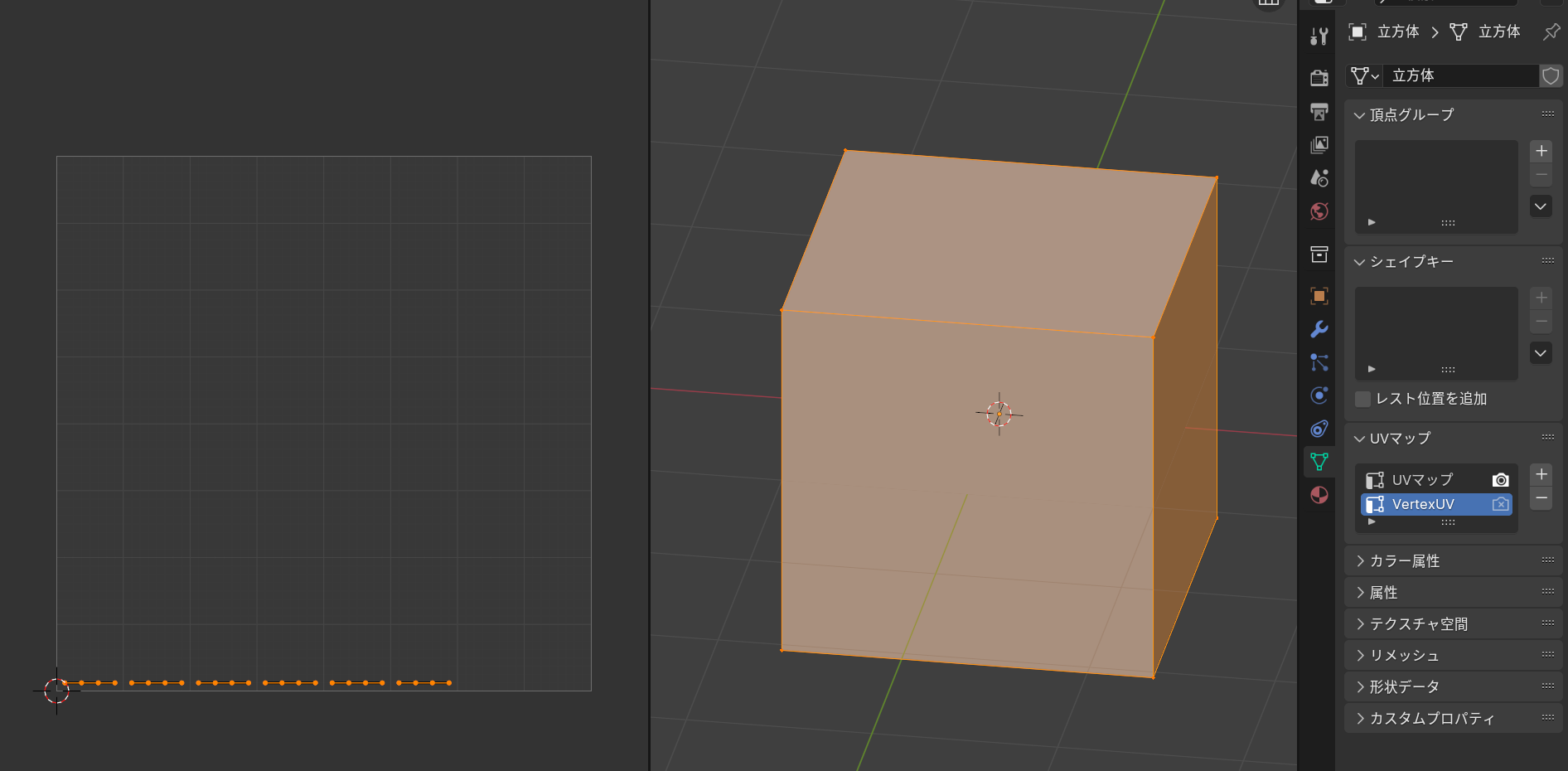

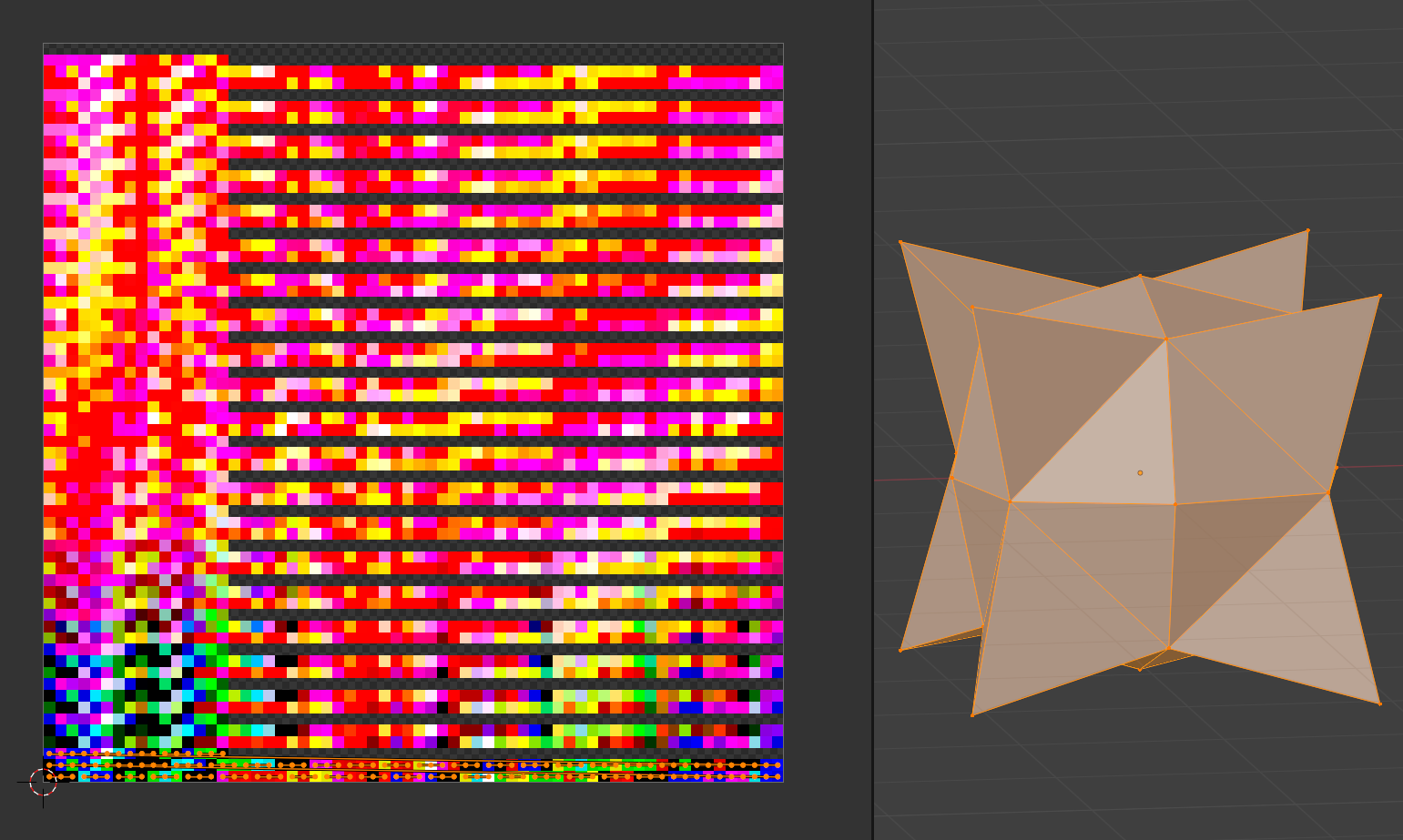

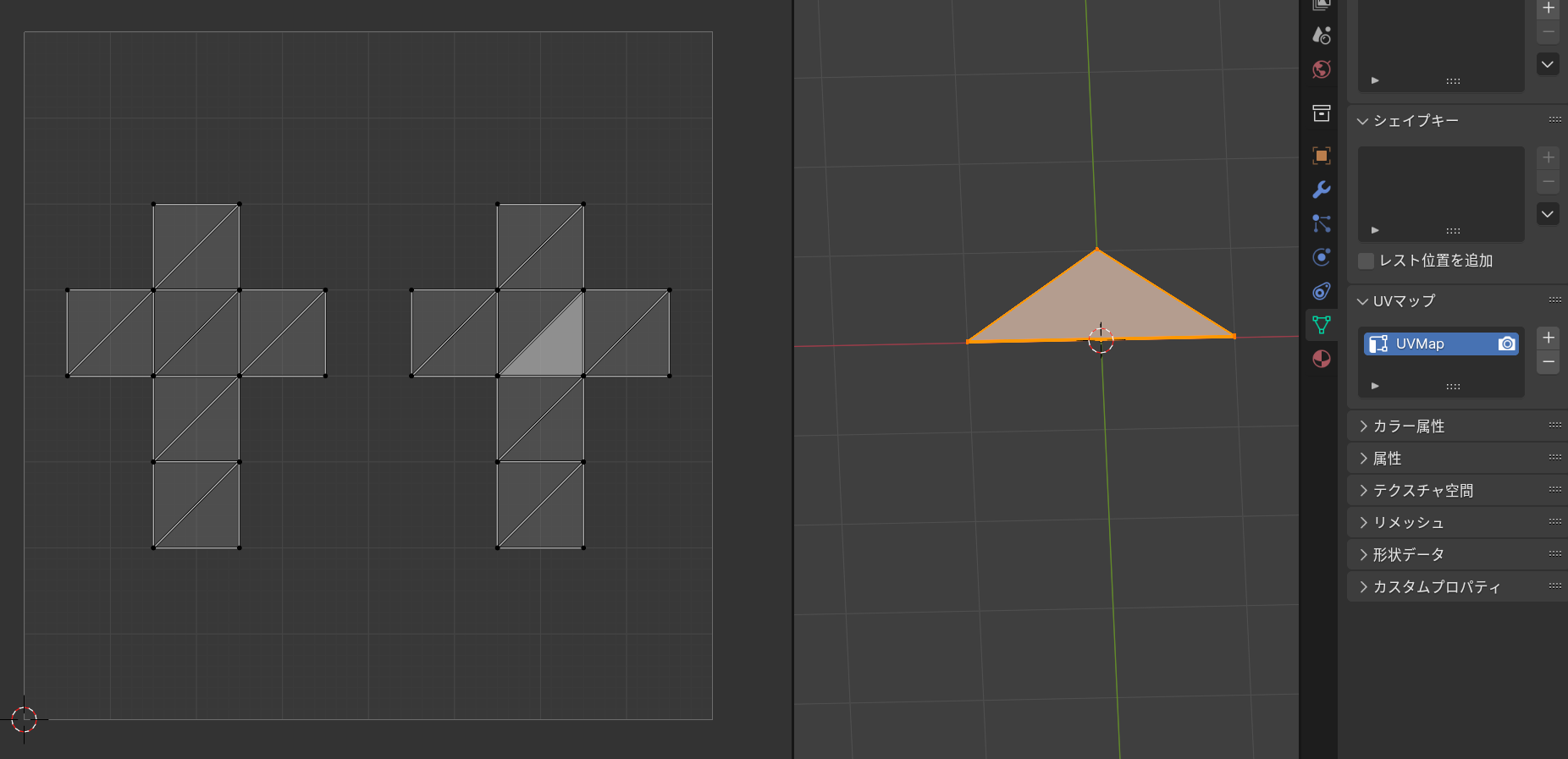

UV配置

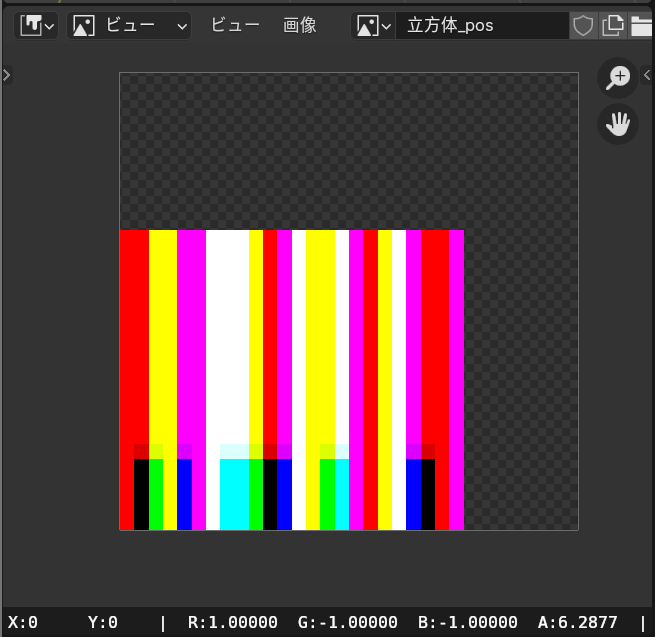

テクスチャにはX軸とY軸があるので、今回はX軸(横軸)に各頂点の情報を羅列し、Y軸(縦軸)を時間軸とします。

なのでUV配置は各頂点が横一列に並ぶように配置します。

import bpy

import numpy as np

class TextureClass:

def __init__(self, texture_name, width, height):

self.image = bpy.data.images.get(texture_name)

if not self.image:

self.image = bpy.data.images.new(texture_name, width=width, height=height, alpha=True, float_buffer=True)

elif self.image.size[0] != width or self.image.size[1] != height:

self.image.scale(width, height)

self.image.file_format = 'OPEN_EXR'

self.point = np.array(self.image.pixels[:])

self.point.resize(height, width * 4)

self.point[:] = 0

self.point_R = self.point[::, 0::4]

self.point_G = self.point[::, 1::4]

self.point_B = self.point[::, 2::4]

self.point_A = self.point[::, 3::4]

def SetPixel(self, py, px, r, g, b, a):

self.point_R[py][px] = r

self.point_G[py][px] = g

self.point_B[py][px] = b

self.point_A[py][px] = a

def Export(self):

self.image.pixels = self.point.flatten()

+ def FixUV(mesh, width, height):

+ uv_layer = mesh.uv_layers.get("VertexUV")

+ if not uv_layer:

+ uv_layer = mesh.uv_layers.new(name="VertexUV")

+

+ for face in mesh.polygons:

+ for i, loop_index in enumerate(face.loop_indices):

+ uv_layer.data[loop_index].uv = [

+ loop_index * (1 / width) + 1 / (width * 2),

+ 0 + 1 / (height * 2)

+ ]

# For testing

obj = bpy.context.active_object

FixUV(obj.data, 32, 32)

The details are almost the same as last time, so please refer to the previous article for a fuller explanation.
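The half-texel offset used in FixUV can be checked outside Blender. The following is a minimal sketch in plain Python; the helper names `texel_center_uv` and `uv_to_pixel` are made up for illustration and are not part of the article's script.

```python
def texel_center_uv(index, width, height, row=0):
    """Return the UV at the centre of texel (index, row) in a width x height texture."""
    u = index / width + 1 / (width * 2)   # same expression as in FixUV
    v = row / height + 1 / (height * 2)
    return u, v


def uv_to_pixel(u, v, width, height):
    """Convert a UV back to integer (row, column), the way the export loop does."""
    return int(v * height), int(u * width)


for index in range(3):
    u, v = texel_center_uv(index, 32, 32)
    print(index, (u, v), uv_to_pixel(u, v, 32, 32))
```

Placing each UV at the centre of a texel rather than on its edge keeps the integer conversion from landing on the neighbouring pixel.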

Getting the mesh data after animation

We define a function that returns the mesh data at a given animation frame.

import bpy

import numpy as np

class TextureClass:

def __init__(self, texture_name, width, height):

self.image = bpy.data.images.get(texture_name)

if not self.image:

self.image = bpy.data.images.new(texture_name, width=width, height=height, alpha=True, float_buffer=True)

elif self.image.size[0] != width or self.image.size[1] != height:

self.image.scale(width, height)

self.image.file_format = 'OPEN_EXR'

self.point = np.array(self.image.pixels[:])

self.point.resize(height, width * 4)

self.point[:] = 0

self.point_R = self.point[::, 0::4]

self.point_G = self.point[::, 1::4]

self.point_B = self.point[::, 2::4]

self.point_A = self.point[::, 3::4]

def SetPixel(self, py, px, r, g, b, a):

self.point_R[py][px] = r

self.point_G[py][px] = g

self.point_B[py][px] = b

self.point_A[py][px] = a

def Export(self):

self.image.pixels = self.point.flatten()

def FixUV(mesh, width, height):

uv_layer = mesh.uv_layers.get("VertexUV")

if not uv_layer:

uv_layer = mesh.uv_layers.new(name="VertexUV")

for face in mesh.polygons:

for i, loop_index in enumerate(face.loop_indices):

uv_layer.data[loop_index].uv = [

loop_index * (1 / width) + 1 / (width * 2),

0 + 1 / (height * 2)

]

+ def GetMesh(obj, frame):

+ bpy.context.scene.frame_set(frame)

+ bpy.context.view_layer.update()

+ depsgraph = bpy.context.evaluated_depsgraph_get()

+ eval_obj = obj.evaluated_get(depsgraph)

+ return eval_obj.to_mesh()

# For testing

obj = bpy.context.active_object

start_frame = bpy.context.scene.frame_start

end_frame = bpy.context.scene.frame_end

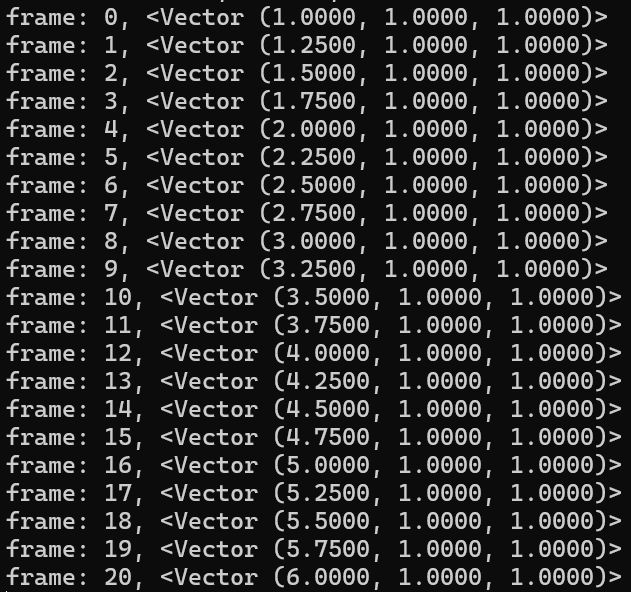

for frame in range(start_frame, end_frame + 1):

mesh = GetMesh(obj, frame)

# Print the position (world space) of vertex 0 of polygon 0

print(f"frame: {frame}, {obj.matrix_world @ mesh.vertices[mesh.polygons[0].vertices[0]].co}")

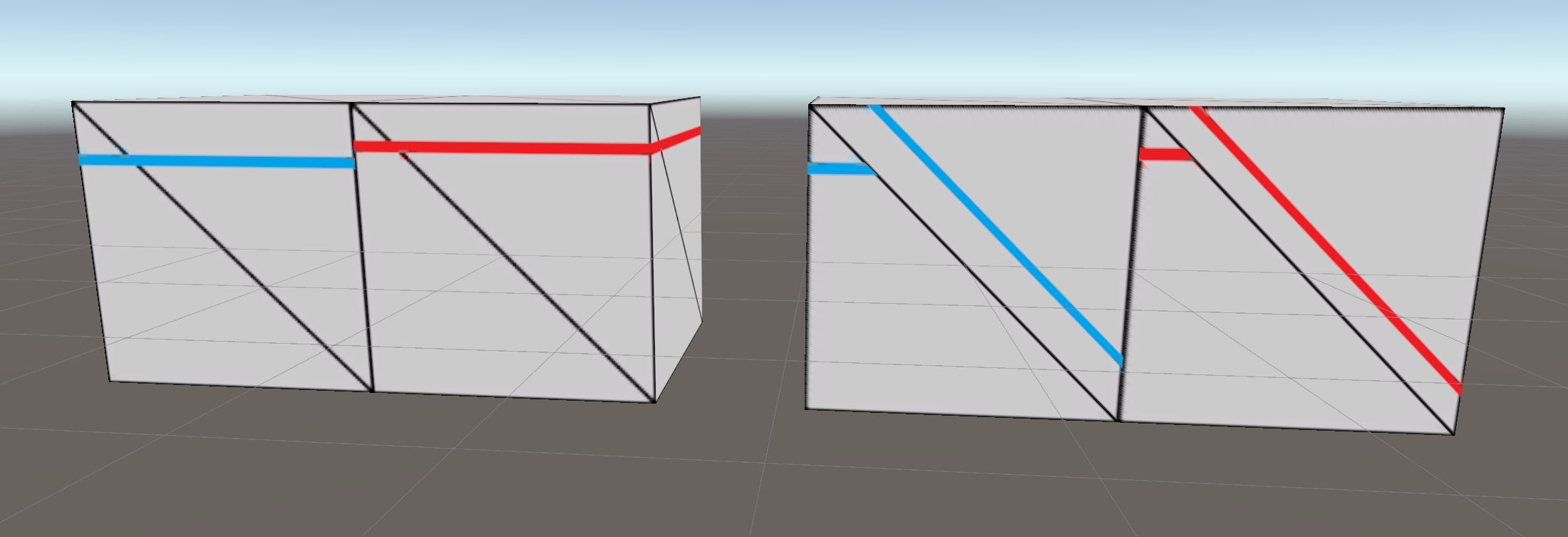

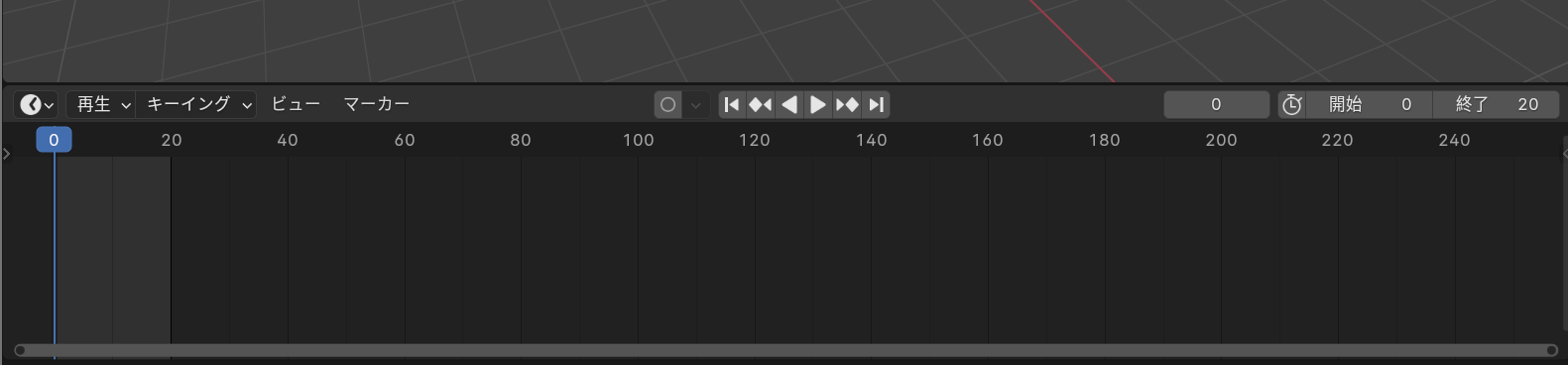

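To verify the function, I created an animation in which a cube moves 5 m along the X axis from frame 0 to frame 20.

The animation range is taken from the timeline values, so the start and end frames there must be set to match.

We can see that it moves as expected.

Getting the mesh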

def GetMesh(obj, frame):

bpy.context.scene.frame_set(frame)

bpy.context.view_layer.update()

depsgraph = bpy.context.evaluated_depsgraph_get()

eval_obj = obj.evaluated_get(depsgraph)

return eval_obj.to_mesh()

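Normally, calling frame_set alone is enough to move to a given animation frame, but the mesh data read directly from the object does not have modifiers applied.

As a result, you cannot obtain the results of things like physics simulations.

To get the mesh with modifiers applied, a few extra steps are needed: fetch the evaluated dependency graph, get the evaluated object from it, and convert that object to a mesh.

Creating a mesh with modifiers applied from a script

Also note that values such as coordinates need to be read in world space.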

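Recording the vertex data

As in the previous article, we store the vertex position and the normal in each pixel: the position goes into RGB and the packed normal into A.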

import bpy

import numpy as np

+ import struct

class TextureClass:

def __init__(self, texture_name, width, height):

self.image = bpy.data.images.get(texture_name)

if not self.image:

self.image = bpy.data.images.new(texture_name, width=width, height=height, alpha=True, float_buffer=True)

elif self.image.size[0] != width or self.image.size[1] != height:

self.image.scale(width, height)

self.image.file_format = 'OPEN_EXR'

self.point = np.array(self.image.pixels[:])

self.point.resize(height, width * 4)

self.point[:] = 0

self.point_R = self.point[::, 0::4]

self.point_G = self.point[::, 1::4]

self.point_B = self.point[::, 2::4]

self.point_A = self.point[::, 3::4]

def SetPixel(self, py, px, r, g, b, a):

self.point_R[py][px] = r

self.point_G[py][px] = g

self.point_B[py][px] = b

self.point_A[py][px] = a

def Export(self):

self.image.pixels = self.point.flatten()

def FixUV(mesh, width, height):

uv_layer = mesh.uv_layers.get("VertexUV")

if not uv_layer:

uv_layer = mesh.uv_layers.new(name="VertexUV")

for face in mesh.polygons:

for i, loop_index in enumerate(face.loop_indices):

uv_layer.data[loop_index].uv = [

loop_index * (1 / width) + 1 / (width * 2),

0 + 1 / (height * 2)

]

def GetMesh(obj, frame):

bpy.context.scene.frame_set(frame)

bpy.context.view_layer.update()

depsgraph = bpy.context.evaluated_depsgraph_get()

eval_obj = obj.evaluated_get(depsgraph)

return eval_obj.to_mesh()

+ def NormalToFloat(x, y, z):

+ x_8bit, y_8bit, z_8bit = map(lambda v: int((v / 2 + 0.5) * 255), (x, y, z))

+ packed_24bit = (x_8bit << 16) | (y_8bit << 8) | z_8bit

+ return struct.unpack('!f', struct.pack('!I', packed_24bit))[0]

+ def Main(obj, width, height):

+ start_frame = bpy.context.scene.frame_start

+ end_frame = bpy.context.scene.frame_end

+ texture = TextureClass(obj.name + '_pos', width, height)

+

+ FixUV(obj.data, width, height)

+ uv_layer = obj.data.uv_layers.get("VertexUV")

+

+ for frame in range(start_frame, end_frame + 1):

+ mesh = GetMesh(obj, frame)

+ for polygon in mesh.polygons:

+ for i, loop_index in enumerate(polygon.loop_indices):

+ uv = uv_layer.data[loop_index].uv

+ pixel = [int(uv.y * height), int(uv.x * width)]

+ pixel[0] += frame - start_frame

+

+ vertex_pos = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].co

+ normal = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].normal - obj.matrix_world.translation

+ normal = normal.normalized()

+

+ texture.SetPixel(*pixel, *vertex_pos, NormalToFloat(*normal))

+

+ texture.Export()

+ obj = bpy.context.active_object

+ Main(obj, 32, 32)

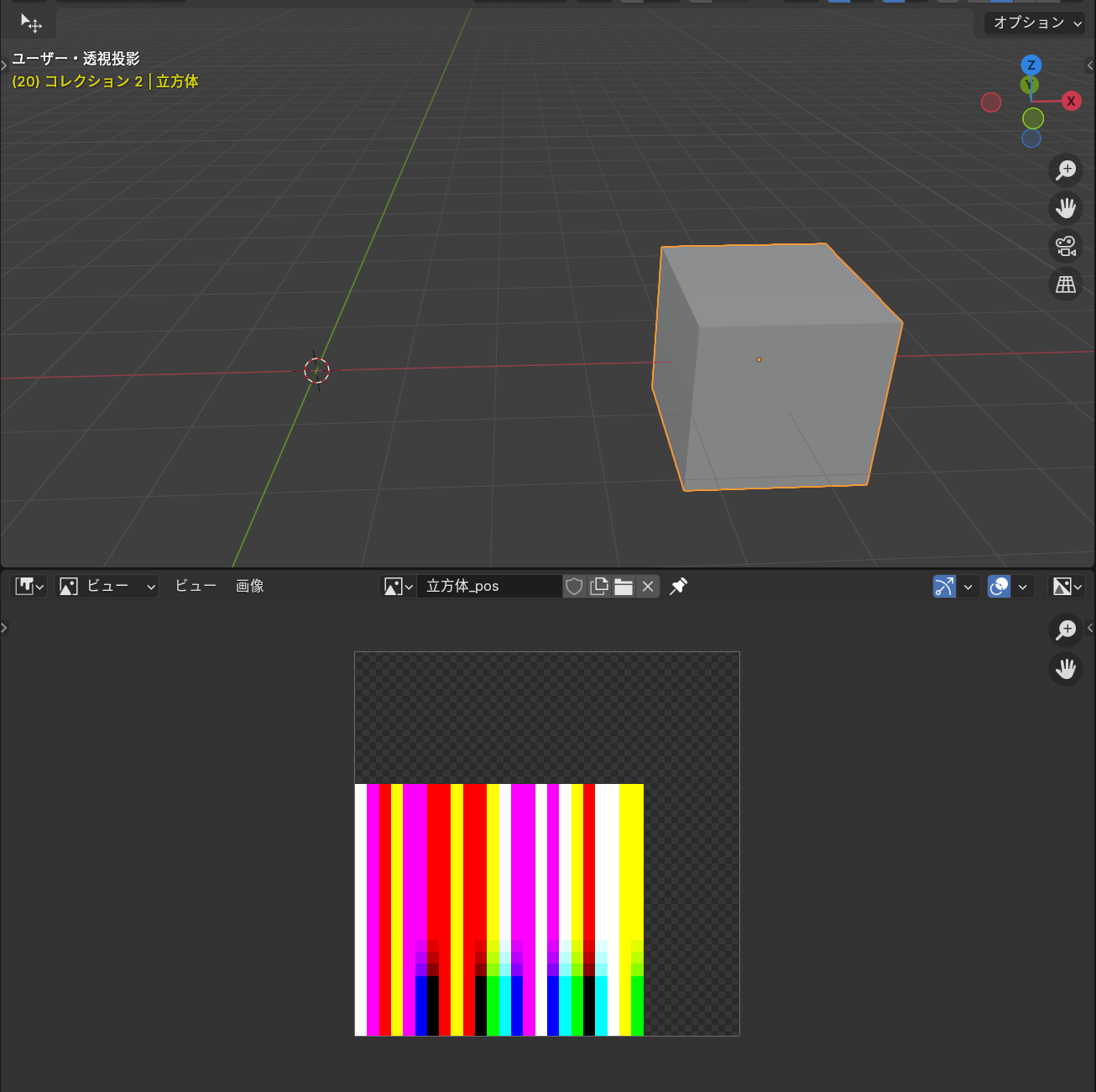

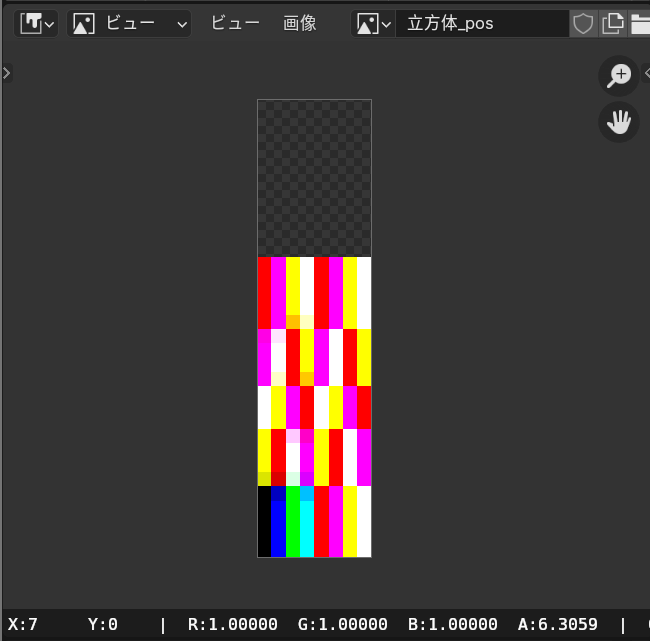

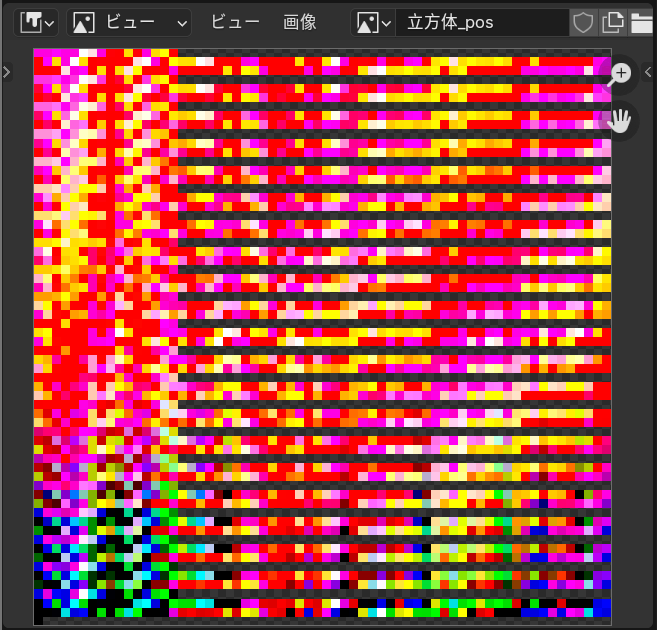

Running it on the animated cube created earlier produced the texture shown here.
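The alpha channel of each pixel carries the normal packed by NormalToFloat. As a rough check, the sketch below repeats that packing in plain Python and adds a hypothetical `float_to_normal` that mirrors the unpacking the Unity shader performs later in this article. The lower-case names are illustrative only.

```python
import struct


def normal_to_float(x, y, z):
    # Same quantisation as NormalToFloat: map [-1, 1] to 0..255 per axis.
    xb, yb, zb = (int((v / 2 + 0.5) * 255) for v in (x, y, z))
    packed = (xb << 16) | (yb << 8) | zb
    # Reinterpret the integer bit pattern as a 32-bit float.
    return struct.unpack('!f', struct.pack('!I', packed))[0]


def float_to_normal(value):
    # Reinterpret the float as an integer and split it into three bytes.
    bits = struct.unpack('!I', struct.pack('!f', value))[0]
    channels = ((bits >> 16) & 0xFF, (bits >> 8) & 0xFF, bits & 0xFF)
    return tuple((c / 255 - 0.5) * 2 for c in channels)


restored = float_to_normal(normal_to_float(0.0, 0.0, 1.0))
print(restored)
```

Because each axis is truncated to 8 bits, the restored normal is only approximately equal to the input, which is why the shader normalizes it again.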

Changing the time axis

for frame in range(start_frame, end_frame + 1):

#...

pixel = [int(uv.y * height), int(uv.x * width)]

pixel[0] += frame - start_frame

The Y coordinate is shifted by one row each time the frame advances.
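As a small illustration of this row shift, the sketch below computes the target pixel for a given UV and frame in plain Python. The function name `pixel_for` is hypothetical and is not taken from the script.

```python
def pixel_for(uv, width, height, frame, start_frame):
    """Return (row, column) for a vertex UV at the given animation frame."""
    row = int(uv[1] * height) + (frame - start_frame)  # one row per frame
    col = int(uv[0] * width)
    return row, col


# Vertex stored in column 3, sampled at frames 0, 1 and 2 of a 32 x 32 texture.
uv = (3 / 32 + 1 / 64, 1 / 64)
for frame in range(3):
    print(frame, pixel_for(uv, 32, 32, frame, 0))
```

With this layout the number of frames must not exceed the texture height, otherwise the row index falls outside the pixel array.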

Getting the vertex coordinates

vertex_pos = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].co

normal = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].normal - obj.matrix_world.translation

normal = normal.normalized()

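As mentioned above, coordinates and similar values should be taken in world space.

The normal calculation is a little more involved: multiplying by matrix_world treats the normal as a point and therefore adds the object's translation, which is subtracted again before normalizing. There is a lot of depth here, so try experimenting.

It took me several hours of struggling to get the normals I wanted pic.twitter.com/5kdzPy2LiC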

— nekoco (@nekoco_vrc) August 1, 2024
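To see why the translation is subtracted, the following sketch applies a 4x4 matrix to a normal in two ways using plain Python lists. The matrix values and helper names are assumptions chosen for illustration; the article's script uses Blender's own matrix type instead.

```python
def transform_point(m, v):
    """Apply a 4x4 row-major matrix to a 3D point (implicit w = 1)."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) + m[r][3] for r in range(3))


def transform_direction(m, v):
    """Apply only the upper-left 3x3 part (implicit w = 0)."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))


# 90-degree rotation about Z combined with a translation of (5, 0, 0).
matrix_world = [
    [0.0, -1.0, 0.0, 5.0],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
translation = (5.0, 0.0, 0.0)
normal = (1.0, 0.0, 0.0)

# Transform as a point, then remove the translation (the script's approach).
as_point = transform_point(matrix_world, normal)
via_subtraction = tuple(p - t for p, t in zip(as_point, translation))

# Transform as a direction, ignoring the translation column.
via_direction = transform_direction(matrix_world, normal)

print(via_subtraction, via_direction)
```

Both routes give the same rotated direction, so the subtraction is just a way of discarding the translation part of the matrix.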

Full script

import bpy

import numpy as np

import struct

class TextureClass:

def __init__(self, texture_name, width, height):

self.image = bpy.data.images.get(texture_name)

if not self.image:

self.image = bpy.data.images.new(texture_name, width=width, height=height, alpha=True, float_buffer=True)

elif self.image.size[0] != width or self.image.size[1] != height:

self.image.scale(width, height)

self.image.file_format = 'OPEN_EXR'

self.point = np.array(self.image.pixels[:])

self.point.resize(height, width * 4)

self.point[:] = 0

self.point_R = self.point[::, 0::4]

self.point_G = self.point[::, 1::4]

self.point_B = self.point[::, 2::4]

self.point_A = self.point[::, 3::4]

def SetPixel(self, py, px, r, g, b, a):

self.point_R[py][px] = r

self.point_G[py][px] = g

self.point_B[py][px] = b

self.point_A[py][px] = a

def Export(self):

self.image.pixels = self.point.flatten()

def FixUV(mesh, width, height):

uv_layer = mesh.uv_layers.get("VertexUV")

if not uv_layer:

uv_layer = mesh.uv_layers.new(name="VertexUV")

for face in mesh.polygons:

for i, loop_index in enumerate(face.loop_indices):

uv_layer.data[loop_index].uv = [

loop_index * (1 / width) + 1 / (width * 2),

0 + 1 / (height * 2)

]

def GetMesh(obj, frame):

bpy.context.scene.frame_set(frame)

bpy.context.view_layer.update()

depsgraph = bpy.context.evaluated_depsgraph_get()

eval_obj = obj.evaluated_get(depsgraph)

return eval_obj.to_mesh()

def NormalToFloat(x, y, z):

x_8bit, y_8bit, z_8bit = map(lambda v: int((v / 2 + 0.5) * 255), (x, y, z))

packed_24bit = (x_8bit << 16) | (y_8bit << 8) | z_8bit

return struct.unpack('!f', struct.pack('!I', packed_24bit))[0]

def Main(obj, width, height):

start_frame = bpy.context.scene.frame_start

end_frame = bpy.context.scene.frame_end

texture = TextureClass(obj.name + '_pos', width, height)

FixUV(obj.data, width, height)

uv_layer = obj.data.uv_layers.get("VertexUV")

for frame in range(start_frame, end_frame + 1):

mesh = GetMesh(obj, frame)

for polygon in mesh.polygons:

for i, loop_index in enumerate(polygon.loop_indices):

uv = uv_layer.data[loop_index].uv

pixel = [int(uv.y * height), int(uv.x * width)]

pixel[0] += frame - start_frame

vertex_pos = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].co

normal = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].normal - obj.matrix_world.translation

normal = normal.normalized()

texture.SetPixel(*pixel, *vertex_pos, NormalToFloat(*normal))

texture.Export()

obj = bpy.context.active_object

Main(obj, 32, 32)

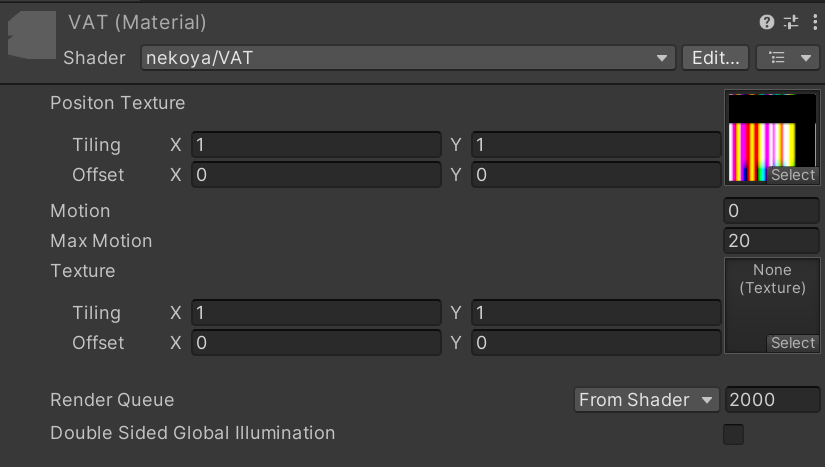

Unity

Base program

We will extend the following Lambert shader.

Shader "nekoya/VAT"

{

Properties

{

_MainTex ("Texture", 2D) = "white" {}

}

SubShader

{

Tags { "RenderType"="Opaque" "LightMode" = "ForwardBase" }

LOD 100

Pass

{

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

struct appdata

{

float4 vertex : POSITION;

float3 normal : NORMAL;

float2 uv : TEXCOORD0;

};

struct v2f

{

float4 vertex : SV_POSITION;

float2 uv : TEXCOORD0;

half3 normal : TEXCOORD1;

};

sampler2D _MainTex;

float4 _MainTex_ST;

fixed4 _LightColor0;

v2f vert (appdata v)

{

v2f o;

o.vertex = UnityObjectToClipPos(v.vertex);

o.normal = UnityObjectToWorldNormal(v.normal);

o.uv = TRANSFORM_TEX(v.uv, _MainTex);

return o;

}

fixed4 frag (v2f i) : SV_Target

{

fixed4 col = tex2D(_MainTex, i.uv);

col.rgb *= clamp(dot(i.normal, _WorldSpaceLightPos0), .2f, .98f) * _LightColor0;

return col;

}

ENDCG

}

}

}

Modified program

The shader with VTF support added is as follows.

Shader "nekoya/VAT"

{

Properties

{

+ _Texture("Positon Texture", 2D) = "white" {}

+ _Motion("Motion", Float) = 0.0

+ _MaxMotion("Max Motion", Float) = 0.0

_MainTex ("Texture", 2D) = "white" {}

}

SubShader

{

Tags { "RenderType"="Opaque" "LightMode" = "ForwardBase" }

LOD 100

Pass

{

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

struct appdata

{

float4 vertex : POSITION;

- float3 normal : NORMAL;

float2 uv : TEXCOORD0;

+ float2 VertexUV : TEXCOORD1;

};

struct v2f

{

float4 vertex : SV_POSITION;

float2 uv : TEXCOORD0;

half3 normal : TEXCOORD1;

};

- sampler2D _MainTex;

+ sampler2D _MainTex, _Texture;

- float4 _MainTex_ST;

+ float4 _MainTex_ST, _Texture_TexelSize;

fixed4 _LightColor0;

+ float _Motion, _MaxMotion;

+ half3 NormalUnpack(float v){

+ uint ix = asuint(v);

+ half3 normal = half3((ix & 0x00FF0000) >> 16, (ix & 0x0000FF00) >> 8, ix & 0x000000FF);

+ return ((normal / 255.0f) - 0.5f) * 2.0f;

+ }

v2f vert (appdata v)

{

+ float motion = _Motion % _MaxMotion;

+ float2 uv = v.VertexUV;

+ uv.y += floor(motion) * _Texture_TexelSize.y;

+

+ float4 tex = tex2Dlod(_Texture, float4(uv, 0, 0));

+ float4 pos = float4(tex.r, tex.b, tex.g, v.vertex.w);

+ half3 normal = NormalUnpack(tex.a);

+ normal = normalize(half3(normal.x, normal.z, normal.y));

v2f o;

- o.vertex = UnityObjectToClipPos(v.vertex);

+ o.vertex = UnityObjectToClipPos(pos);

- o.normal = UnityObjectToWorldNormal(v.normal);

+ o.normal = UnityObjectToWorldNormal(normal);

o.uv = TRANSFORM_TEX(v.uv, _MainTex);

return o;

}

fixed4 frag (v2f i) : SV_Target

{

fixed4 col = tex2D(_MainTex, i.uv);

col.rgb *= clamp(dot(i.normal, _WorldSpaceLightPos0), .2f, .98f) * _LightColor0;

return col;

}

ENDCG

}

}

}

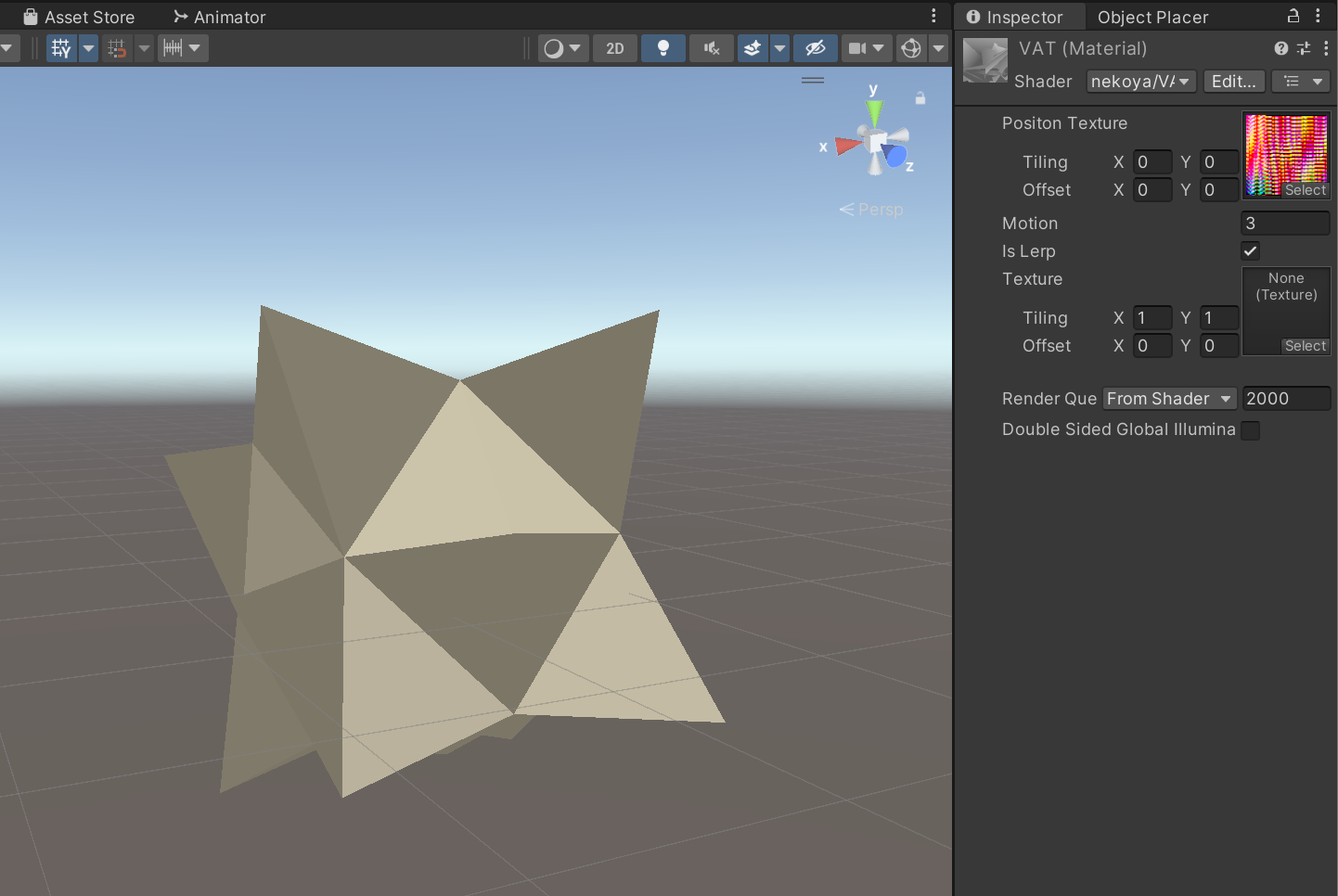

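After configuring the settings, changing the Motion parameter moves the object.

To check that the normals are stored correctly, I also added an animation that rotates 360 degrees about the X axis.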

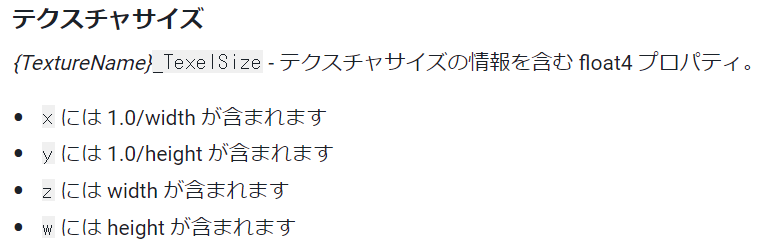

Image size

sampler2D ..., _Texture;

float4 ..., _Texture_TexelSize;

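Declaring {texture name}_TexelSize gives access to the texture size. It is a float4 holding (1 / width, 1 / height, width, height).

Accessing shader properties in Cg/HLSL

Time axis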

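float motion = _Motion % _MaxMotion;

float2 uv = v.VertexUV;

uv.y += floor(motion) * _Texture_TexelSize.y;

Changing the _Motion parameter changes which row of the texture is sampled.

floor(_Motion % _MaxMotion) gives the number of pixels to move along the vertical axis, and multiplying it by _Texture_TexelSize.y (1.0 / height) converts that into a UV offset.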
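The same arithmetic can be tried in plain Python. This is only a sketch of the row selection under the assumption that the Motion value is non-negative. The function name `sample_row_offset` is hypothetical, and `math.fmod` stands in for the shader's `%` on floats.

```python
import math


def sample_row_offset(motion_param, max_motion, texture_height):
    """Return the UV offset along Y for a given Motion value."""
    motion = math.fmod(motion_param, max_motion)   # wrap into [0, max_motion)
    texel_size_y = 1.0 / texture_height            # _Texture_TexelSize.y
    return math.floor(motion) * texel_size_y


for value in (0.0, 5.7, 23.2):
    print(value, sample_row_offset(value, 20, 32))
```

Fractional Motion values are rounded down, so the pose only changes when Motion crosses an integer.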

Full shader

Shader "nekoya/VAT"

{

Properties

{

_Texture("Positon Texture", 2D) = "white" {}

_Motion("Motion", Float) = 0.0

_MaxMotion("Max Motion", Float) = 0.0

_MainTex ("Texture", 2D) = "white" {}

}

SubShader

{

Tags { "RenderType"="Opaque" "LightMode" = "ForwardBase" }

LOD 100

Pass

{

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

struct appdata

{

float4 vertex : POSITION;

float2 uv : TEXCOORD0;

float2 VertexUV : TEXCOORD1;

};

struct v2f

{

float4 vertex : SV_POSITION;

float2 uv : TEXCOORD0;

half3 normal : TEXCOORD1;

};

sampler2D _MainTex, _Texture;

float4 _MainTex_ST, _Texture_TexelSize;

fixed4 _LightColor0;

float _Motion, _MaxMotion;

half3 NormalUnpack(float v){

uint ix = asuint(v);

half3 normal = half3((ix & 0x00FF0000) >> 16, (ix & 0x0000FF00) >> 8, ix & 0x000000FF);

return ((normal / 255.0f) - 0.5f) * 2.0f;

}

v2f vert (appdata v)

{

float motion = _Motion % _MaxMotion;

float2 uv = v.VertexUV;

uv.y += floor(motion) * _Texture_TexelSize.y;

float4 tex = tex2Dlod(_Texture, float4(uv, 0, 0));

float4 pos = float4(tex.r, tex.b, tex.g, v.vertex.w);

half3 normal = NormalUnpack(tex.a);

normal = normalize(half3(normal.x, normal.z, normal.y));

v2f o;

o.vertex = UnityObjectToClipPos(pos);

o.normal = UnityObjectToWorldNormal(normal);

o.uv = TRANSFORM_TEX(v.uv, _MainTex);

return o;

}

fixed4 frag (v2f i) : SV_Target

{

fixed4 col = tex2D(_MainTex, i.uv);

col.rgb *= clamp(dot(i.normal, _WorldSpaceLightPos0), .2f, .98f) * _LightColor0;

return col;

}

ENDCG

}

}

}

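Usage

1. Create the vertex texture

First, create an object in Blender and animate it.

Set the start and end values of the timeline as well.

Next, select the object and run the script.

Once the texture has been generated, save it.

The save settings are the same as described in the previous article.

| Item | Value |
|---|---|
| File format | OpenEXR |
| Color | RGBA |
| Color depth | Float (Full) |
| Codec | None |
| Color space | Non-Color |

2. Export the object

Export the selected object as FBX.

It may be a good idea to return the animation to the start frame before exporting.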

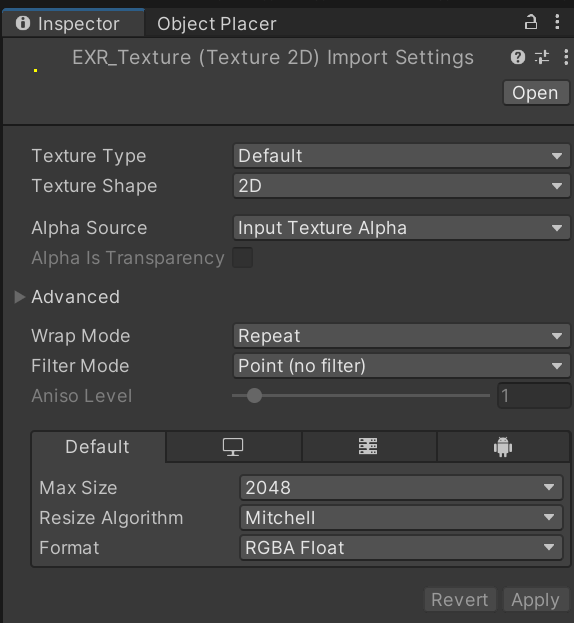

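3. Import into Unity

Import the texture and the FBX into Unity and configure them.

The texture import settings are as follows.

Filter Mode: Point (no filter)

Format: RGBA Float

Also, set the project's color space to Linear.

4. Material setup and assignment

Positon Texture: the imported texture containing the vertex data

Max Motion: the total number of frames of the animation you created (end frame - start frame)

Assign the material and you are done.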

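Linear interpolation of the motion

At present the pose changes each time the Motion parameter increases by 1.

Left as is, the motion looks choppy, so let's linearly interpolate between frames.

The shader is as follows.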

Shader "nekoya/VAT"

{

Properties

{

_Texture("Positon Texture", 2D) = "white" {}

_Motion("Motion", Float) = 0.0

_MaxMotion("Max Motion", Float) = 0.0

+ [Toggle]_IsLerp("Is Lerp", Float) = 0.0

_MainTex ("Texture", 2D) = "white" {}

}

SubShader

{

Tags { "RenderType"="Opaque" "LightMode" = "ForwardBase" }

LOD 100

Pass

{

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

struct appdata

{

float4 vertex : POSITION;

float2 uv : TEXCOORD0;

float2 VertexUV : TEXCOORD1;

};

struct v2f

{

float4 vertex : SV_POSITION;

float2 uv : TEXCOORD0;

half3 normal : TEXCOORD1;

};

sampler2D _MainTex, _Texture;

float4 _MainTex_ST, _Texture_TexelSize;

fixed4 _LightColor0;

float _Motion, _MaxMotion;

half3 NormalUnpack(float v){

uint ix = asuint(v);

half3 normal = half3((ix & 0x00FF0000) >> 16, (ix & 0x0000FF00) >> 8, ix & 0x000000FF);

return ((normal / 255.0f) - 0.5f) * 2.0f;

}

+ #pragma shader_feature _ISLERP_ON

v2f vert (appdata v)

{

float motion = _Motion % _MaxMotion;

float2 uv = v.VertexUV;

uv.y += floor(motion) * _Texture_TexelSize.y;

float4 tex = tex2Dlod(_Texture, float4(uv, 0, 0));

float4 pos = float4(tex.r, tex.b, tex.g, v.vertex.w);

half3 normal = NormalUnpack(tex.a);

normal = normalize(half3(normal.x, normal.z, normal.y));

+ # ifdef _ISLERP_ON

+ uv.y = (motion >= _MaxMotion - 1.0f)

+ ? v.VertexUV.y + _Texture_TexelSize.y

+ : v.VertexUV.y + ceil(motion) * _Texture_TexelSize.y;

+

+ float4 tex2 = tex2Dlod(_Texture, float4(uv, 0, 0));

+ float4 pos2 = float4(tex2.r, tex2.b, tex2.g, v.vertex.w);

+ half3 normal2 = NormalUnpack(tex2.a);

+ normal2 = normalize(half3(normal2.x, normal2.z, normal2.y));

+

+ pos = lerp(pos, pos2, frac(motion));

+ normal = lerp(normal, normal2, frac(motion));

+ #endif

v2f o;

o.vertex = UnityObjectToClipPos(pos);

o.normal = UnityObjectToWorldNormal(normal);

o.uv = TRANSFORM_TEX(v.uv, _MainTex);

return o;

}

fixed4 frag (v2f i) : SV_Target

{

fixed4 col = tex2D(_MainTex, i.uv);

col.rgb *= clamp(dot(i.normal, _WorldSpaceLightPos0), .2f, .98f) * _LightColor0;

return col;

}

ENDCG

}

}

}
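The idea behind the interpolation branch can be modelled in a few lines of Python. This is a simplified sketch, not a port of the shader: it wraps from the last frame back to frame 0, which is not exactly how the shader picks its wrap-around row, and it assumes a non-negative Motion value. All names are illustrative.

```python
import math


def lerp(a, b, t):
    """Linearly interpolate between two tuples component by component."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def sample_interpolated(frames, motion_param):
    """Blend the two stored frames that surround a fractional Motion value."""
    frame_count = len(frames)
    motion = math.fmod(motion_param, frame_count)
    current = int(math.floor(motion))
    following = (current + 1) % frame_count     # wrap to the first frame
    return lerp(frames[current], frames[following], motion - current)


# One vertex position stored for three frames.
frames = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
for value in (0.5, 1.25, 2.5):
    print(value, sample_interpolated(frames, value))
```

The fractional part of Motion acts as the blend weight, which corresponds to the `frac(motion)` argument passed to `lerp` in the shader.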

Hard-edged normals

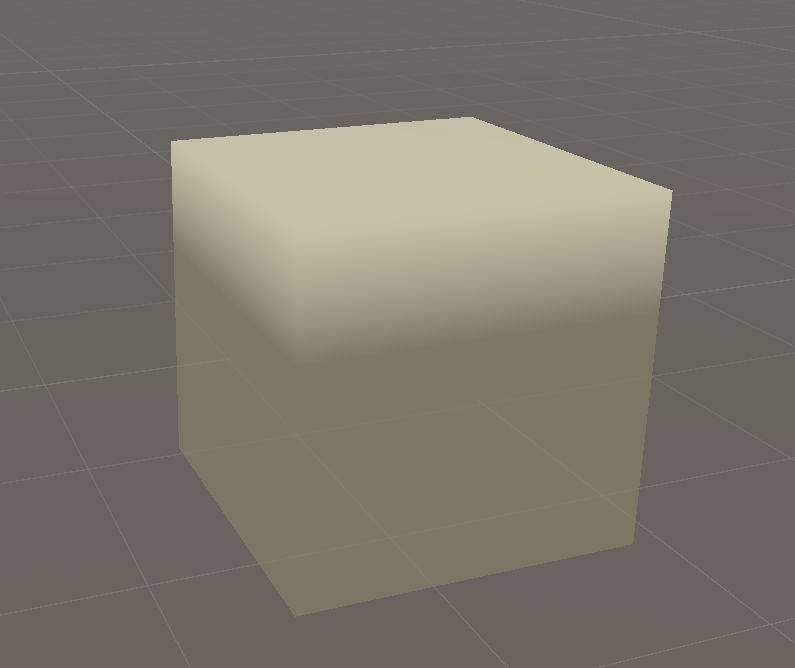

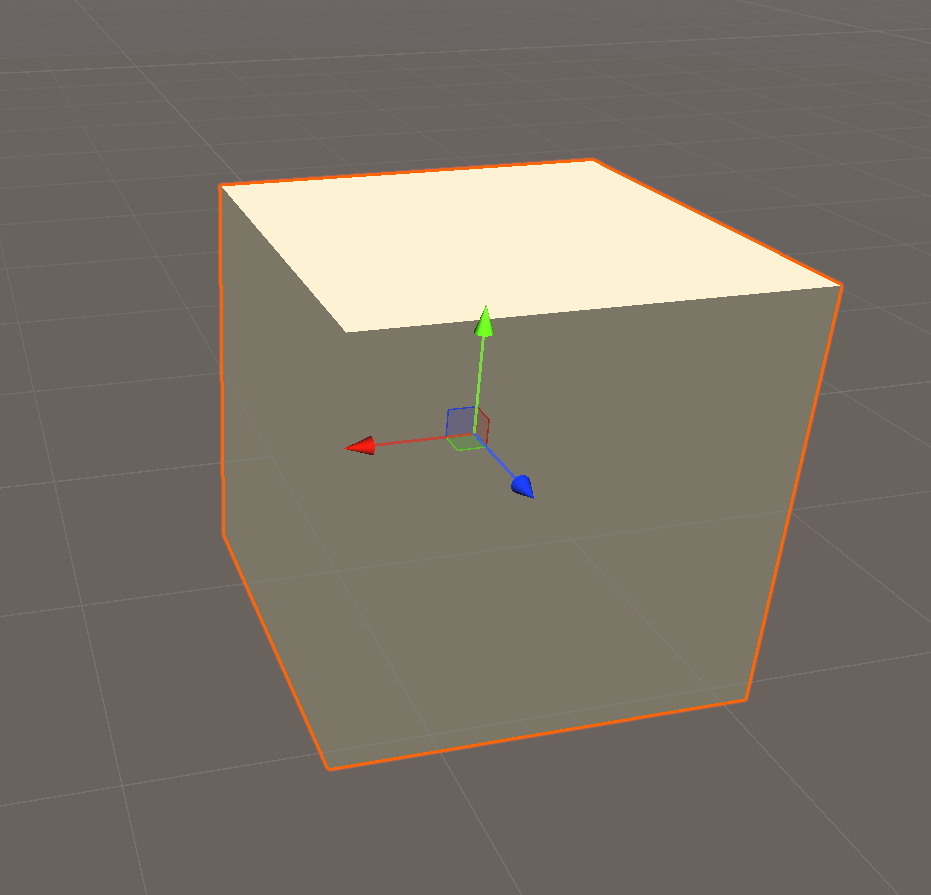

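Looking at the Unity view, the shading looks wrong.

This is because the result corresponds to what Blender calls smooth shading.

It ends up smooth shaded because each vertex carries only one normal.

Reading the normal from each vertex therefore yields smooth-shading normals.

To get flat shading, the normal must instead be read from each polygon and stored.

The script is as follows.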

import bpy

import numpy as np

import struct

class TextureClass:

def __init__(self, texture_name, width, height):

self.image = bpy.data.images.get(texture_name)

if not self.image:

self.image = bpy.data.images.new(texture_name, width=width, height=height, alpha=True, float_buffer=True)

elif self.image.size[0] != width or self.image.size[1] != height:

self.image.scale(width, height)

self.image.file_format = 'OPEN_EXR'

self.point = np.array(self.image.pixels[:])

self.point.resize(height, width * 4)

self.point[:] = 0

self.point_R = self.point[::, 0::4]

self.point_G = self.point[::, 1::4]

self.point_B = self.point[::, 2::4]

self.point_A = self.point[::, 3::4]

def SetPixel(self, py, px, r, g, b, a):

self.point_R[py][px] = r

self.point_G[py][px] = g

self.point_B[py][px] = b

self.point_A[py][px] = a

def Export(self):

self.image.pixels = self.point.flatten()

def FixUV(mesh, width, height):

uv_layer = mesh.uv_layers.get("VertexUV")

if not uv_layer:

uv_layer = mesh.uv_layers.new(name="VertexUV")

for face in mesh.polygons:

for i, loop_index in enumerate(face.loop_indices):

uv_layer.data[loop_index].uv = [

loop_index / width + 1 / (width * 2),

0 + 1 / (height * 2)

]

def GetMesh(obj, frame):

bpy.context.scene.frame_set(frame)

bpy.context.view_layer.update()

depsgraph = bpy.context.evaluated_depsgraph_get()

eval_obj = obj.evaluated_get(depsgraph)

return eval_obj.to_mesh()

def NormalToFloat(x, y, z):

x_8bit, y_8bit, z_8bit = map(lambda v: int((v / 2 + 0.5) * 255), (x, y, z))

packed_24bit = (x_8bit << 16) | (y_8bit << 8) | z_8bit

return struct.unpack('!f', struct.pack('!I', packed_24bit))[0]

- def Main(obj, width, height):

+ def Main(obj, width, height, hard_edge):

start_frame = bpy.context.scene.frame_start

end_frame = bpy.context.scene.frame_end

texture = TextureClass(obj.name + '_pos', width, height)

FixUV(obj.data, width, height)

uv_layer = obj.data.uv_layers.get("VertexUV")

for frame in range(start_frame, end_frame + 1):

mesh = GetMesh(obj, frame)

for polygon in mesh.polygons:

for i, loop_index in enumerate(polygon.loop_indices):

uv = uv_layer.data[loop_index].uv

pixel = [int(uv.y * height), int(uv.x * width)]

pixel[0] += frame - start_frame

vertex_pos = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].co

- normal = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].normal - obj.matrix_world.translation

+ normal = obj.matrix_world @ polygon.normal - obj.matrix_world.translation \

+ if hard_edge \

+ else obj.matrix_world @ mesh.vertices[polygon.vertices[i]].normal - obj.matrix_world.translation

normal = normal.normalized()

texture.SetPixel(*pixel, *vertex_pos, NormalToFloat(*normal))

texture.Export()

obj = bpy.context.active_object

+ hard_edge = True

- Main(obj, 32, 32)

+ Main(obj, 64, 64, hard_edge)

Applying the generated texture gives flat shading.
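The difference between the two kinds of normal can be illustrated without Blender. In the sketch below, a face normal is computed from a triangle with a cross product, and a plain average of two face normals stands in for a smooth vertex normal. The averaging is an assumption for illustration and is not necessarily how Blender weights its vertex normals.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalized(v):
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)


def face_normal(p0, p1, p2):
    """Normal of a counter-clockwise triangle."""
    return normalized(cross(sub(p1, p0), sub(p2, p0)))


# Two faces that meet at a right angle along a shared edge.
top = face_normal((0, 0, 0), (1, 0, 0), (0, 1, 0))    # faces +Z
side = face_normal((0, 0, 0), (0, 1, 0), (0, 0, 1))   # faces +X

# Flat shading stores "top" or "side"; smooth shading stores a blend of both.
smooth = normalized(tuple(a + b for a, b in zip(top, side)))
print(top, side, smooth)
```

A shared vertex can hold only the blended value, which is why the per-polygon normal has to be written for each face corner to get hard edges.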

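Reducing texture size

At present every vertex position is stored, but if the object's shape does not change the amount of data can be reduced.

Strictly speaking, "the shape does not change" means that the number of duplicated vertices does not change.

In other words, duplicated data is removed.

Vertex data is currently looked up through the UV, so placing UVs at the same position retrieves the same data.

We use this to reduce the texture size.

The script is as follows.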

import bpy

import numpy as np

import struct

class TextureClass:

def __init__(self, texture_name, width, height):

self.image = bpy.data.images.get(texture_name)

if not self.image:

self.image = bpy.data.images.new(texture_name, width=width, height=height, alpha=True, float_buffer=True)

elif self.image.size[0] != width or self.image.size[1] != height:

self.image.scale(width, height)

self.image.file_format = 'OPEN_EXR'

self.point = np.array(self.image.pixels[:])

self.point.resize(height, width * 4)

self.point[:] = 0

self.point_R = self.point[::, 0::4]

self.point_G = self.point[::, 1::4]

self.point_B = self.point[::, 2::4]

self.point_A = self.point[::, 3::4]

def SetPixel(self, py, px, r, g, b, a):

self.point_R[py][px] = r

self.point_G[py][px] = g

self.point_B[py][px] = b

self.point_A[py][px] = a

def Export(self):

self.image.pixels = self.point.flatten()

def FixUV(mesh, width, height):

uv_layer = mesh.uv_layers.get("VertexUV")

if not uv_layer:

uv_layer = mesh.uv_layers.new(name="VertexUV")

for face in mesh.polygons:

for i, loop_index in enumerate(face.loop_indices):

uv_layer.data[loop_index].uv = [

loop_index / width + 1 / (width * 2),

0 + 1 / (height * 2)

]

+ def FixUV_Dupe(mesh, width, height):

+ uv_layer = mesh.uv_layers.get("VertexUV")

+ if not uv_layer:

+ uv_layer = mesh.uv_layers.new(name="VertexUV")

+

+ seen_vertices = {}

+ for vertex in mesh.vertices:

+ vertex_co = vertex.co

+ # Add only if this position has not been seen yet

+ if tuple(vertex_co) not in seen_vertices:

+ seen_vertices[tuple(vertex_co)] = None

+

+ for polygon in mesh.polygons:

+ for i, loop_index in enumerate(polygon.loop_indices):

+ vertex_co = mesh.vertices[polygon.vertices[i]].co

+ if tuple(vertex_co) in seen_vertices:

+ index = list(seen_vertices.keys()).index(tuple(vertex_co))

+ uv_layer.data[loop_index].uv = [

+ index / width + 1 / (width * 2),

+ 0 + 1 / (height * 2)

+ ]

def GetMesh(obj, frame):

bpy.context.scene.frame_set(frame)

bpy.context.view_layer.update()

depsgraph = bpy.context.evaluated_depsgraph_get()

eval_obj = obj.evaluated_get(depsgraph)

return eval_obj.to_mesh()

def NormalToFloat(x, y, z):

x_8bit, y_8bit, z_8bit = map(lambda v: int((v / 2 + 0.5) * 255), (x, y, z))

packed_24bit = (x_8bit << 16) | (y_8bit << 8) | z_8bit

return struct.unpack('!f', struct.pack('!I', packed_24bit))[0]

- def Main(obj, width, height, hard_edge):

+ def Main(obj, width, height, hard_edge, vertex_compress):

+ if vertex_compress:

+ hard_edge = False

start_frame = bpy.context.scene.frame_start

end_frame = bpy.context.scene.frame_end

texture = TextureClass(obj.name + '_pos', width, height)

- FixUV(obj.data, width, height)

+ if vertex_compress:

+ FixUV_Dupe(obj.data, width, height)

+ else:

+ FixUV(obj.data, width, height)

uv_layer = obj.data.uv_layers.get("VertexUV")

for frame in range(start_frame, end_frame + 1):

mesh = GetMesh(obj, frame)

for polygon in mesh.polygons:

for i, loop_index in enumerate(polygon.loop_indices):

uv = uv_layer.data[loop_index].uv

pixel = [int(uv.y * height), int(uv.x * width)]

pixel[0] += frame - start_frame

vertex_pos = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].co

normal = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].normal - obj.matrix_world.translation

normal = normal.normalized()

texture.SetPixel(*pixel, *vertex_pos, NormalToFloat(*normal))

texture.Export()

obj = bpy.context.active_object

hard_edge = False

+ vertex_compress = True

- Main(obj, 8, 32, hard_edge)

+ Main(obj, 8, 32, hard_edge, vertex_compress)

Note that this cannot be combined with the hard-edge normals described above, so the result is always smooth shaded.
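The de-duplication step can be pictured as a mapping from position to texture column. The sketch below is a plain Python variant that stores the column number directly in the dictionary, which avoids searching the key list for every face corner. The function name and sample data are assumptions for illustration.

```python
def build_position_index(positions):
    """Map each distinct position to the column it will occupy in the texture."""
    index_of = {}
    for position in positions:
        key = tuple(position)
        if key not in index_of:
            index_of[key] = len(index_of)   # next free column
    return index_of


# Positions of six face corners; two positions appear twice.
corner_positions = [
    (0, 0, 0), (1, 0, 0), (1, 1, 0),
    (1, 0, 0), (1, 1, 0), (0, 1, 0),
]
index_of = build_position_index(corner_positions)
columns = [index_of[tuple(p)] for p in corner_positions]
print(len(index_of), columns)
```

Corners that share a position end up with the same column, so six corners need only four pixels per frame in this example.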

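Multi-row support and texture size selection

At present the vertices are laid out in a single row only, so the texture width grows with the vertex count.

However, the maximum image size Unity can handle is 16384 px as of Unity 2022.3.6f1.

Also, the number of animation frames is likely to be small relative to the number of vertices, so we change the layout to spread the vertices over multiple rows.

The texture does not need to be square; it only needs power-of-two dimensions, so we write a function that returns suitable values.

The script is as follows.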

import bpy

+ import math

import numpy as np

import struct

class TextureClass:

def __init__(self, texture_name, width, height):

self.image = bpy.data.images.get(texture_name)

if not self.image:

self.image = bpy.data.images.new(texture_name, width=width, height=height, alpha=True, float_buffer=True)

elif self.image.size[0] != width or self.image.size[1] != height:

self.image.scale(width, height)

self.image.file_format = 'OPEN_EXR'

self.point = np.array(self.image.pixels[:])

self.point.resize(height, width * 4)

self.point[:] = 0

self.point_R = self.point[::, 0::4]

self.point_G = self.point[::, 1::4]

self.point_B = self.point[::, 2::4]

self.point_A = self.point[::, 3::4]

def SetPixel(self, py, px, r, g, b, a):

self.point_R[py][px] = r

self.point_G[py][px] = g

self.point_B[py][px] = b

self.point_A[py][px] = a

def Export(self):

self.image.pixels = self.point.flatten()

+ def GetResolution(max_resolution, vertex_num, frame):

+ height = -1

+ width = -1

+ column = 1

+

+ for column in range(1, max_resolution + 1):

+ for i in range(0, int(math.log2(max_resolution)) + 1):

+ if vertex_num <= (1 << i) * column:

+ width = 1 << i

+ break

+ if width != -1:

+ break

+

+ for i in range(0, int(math.log2(max_resolution)) + 1):

+ if frame * column + 1 <= (1 << i):

+ height = 1 << i

+ break

+

+ return width, height, column

def FixUV(mesh, width, height):

uv_layer = mesh.uv_layers.get("VertexUV")

if not uv_layer:

uv_layer = mesh.uv_layers.new(name="VertexUV")

for face in mesh.polygons:

for i, loop_index in enumerate(face.loop_indices):

- uv_layer.data[loop_index].uv = [

- loop_index / width + 1 / (width * 2),

- 0 + 1 / (height * 2)

- ]

+ uv_layer.data[loop_index].uv = [

+ loop_index % width / width + 1 / (width * 2),

+ loop_index // width / height + 1 / (height * 2)

+ ]

def FixUV_Dupe(mesh, width, height):

uv_layer = mesh.uv_layers.get("VertexUV")

if not uv_layer:

uv_layer = mesh.uv_layers.new(name="VertexUV")

seen_vertices = {}

for vertex in mesh.vertices:

vertex_co = vertex.co

# Add only if this position has not been seen yet

if tuple(vertex_co) not in seen_vertices:

seen_vertices[tuple(vertex_co)] = None

for polygon in mesh.polygons:

for i, loop_index in enumerate(polygon.loop_indices):

vertex_co = mesh.vertices[polygon.vertices[i]].co

if tuple(vertex_co) in seen_vertices:

index = list(seen_vertices.keys()).index(tuple(vertex_co))

- uv_layer.data[loop_index].uv = [

- index / width + 1 / (width * 2),

- 0 + 1 / (height * 2)

- ]

+ uv_layer.data[loop_index].uv = [

+ index % width / width + 1 / (width * 2),

+ index // width / height + 1 / (height * 2)

+ ]

def GetMesh(obj, frame):

bpy.context.scene.frame_set(frame)

bpy.context.view_layer.update()

depsgraph = bpy.context.evaluated_depsgraph_get()

eval_obj = obj.evaluated_get(depsgraph)

return eval_obj.to_mesh()

def NormalToFloat(x, y, z):

x_8bit, y_8bit, z_8bit = map(lambda v: int((v / 2 + 0.5) * 255), (x, y, z))

packed_24bit = (x_8bit << 16) | (y_8bit << 8) | z_8bit

return struct.unpack('!f', struct.pack('!I', packed_24bit))[0]

- def Main(obj, width, height, hard_edge, vertex_compress):

+ def Main(obj, max_resolution, hard_edge, vertex_compress):

if vertex_compress:

hard_edge = False

start_frame = bpy.context.scene.frame_start

end_frame = bpy.context.scene.frame_end

+ vertex_num = len(obj.data.vertices) \

+ if vertex_compress \

+ else len([loop for polygon in obj.data.polygons for loop in polygon.loop_indices])

+ width, height, column = GetResolution(max_resolution, vertex_num, end_frame - start_frame + 1)

+

+ if width == -1 or height == -1:

+ print("The vertex count or frame count is too large")

+ return

texture = TextureClass(obj.name + '_pos', width, height)

if vertex_compress:

FixUV_Dupe(obj.data, width, height)

else:

FixUV(obj.data, width, height)

uv_layer = obj.data.uv_layers.get("VertexUV")

for frame in range(start_frame, end_frame + 1):

mesh = GetMesh(obj, frame)

for polygon in mesh.polygons:

for i, loop_index in enumerate(polygon.loop_indices):

uv = uv_layer.data[loop_index].uv

pixel = [int(uv.y * height), int(uv.x * width)]

- pixel[0] += frame - start_frame

+ pixel[0] += (frame - start_frame) * column

vertex_pos = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].co

normal = obj.matrix_world @ polygon.normal - obj.matrix_world.translation \

if hard_edge \

else obj.matrix_world @ mesh.vertices[polygon.vertices[i]].normal - obj.matrix_world.translation

normal = normal.normalized()

texture.SetPixel(*pixel, *vertex_pos, NormalToFloat(*normal))

texture.Export()

obj = bpy.context.active_object

hard_edge = False

vertex_compress = True

Main(obj, 64, hard_edge, vertex_compress)
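The size selection can also be restated more compactly. The sketch below is a simplified alternative, not the article's GetResolution: it assumes `max_resolution` is itself a power of two, reserves one extra row in the same way as the `+ 1` in the height check, and uses hypothetical names throughout.

```python
import math


def next_power_of_two(n):
    """Smallest power of two that is greater than or equal to n (n >= 1)."""
    return 1 << max(0, (n - 1).bit_length())


def plan_layout(max_resolution, vertex_count, frame_count, reserved_rows=1):
    """Return (width, height, rows_per_frame), or None if it does not fit."""
    width = min(next_power_of_two(vertex_count), max_resolution)
    rows_per_frame = math.ceil(vertex_count / width)
    height = next_power_of_two(frame_count * rows_per_frame + reserved_rows)
    if height > max_resolution:
        return None
    return width, height, rows_per_frame


def index_to_cell(index, width):
    """Row and column of a vertex inside one frame's block of rows."""
    return index // width, index % width


print(plan_layout(64, 8, 21))
print(plan_layout(64, 100, 21))
print(index_to_cell(70, 64))
```

Each frame then occupies `rows_per_frame` consecutive rows, which is why the frame offset in the export loop is multiplied by the column count.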

At this point the Column and MaxMotion parameters also have to be passed to the shader.

Entering them every time is tedious, however, so let's embed them in the texture.
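One way to picture this embedding is to pack two 16-bit integers into the bit pattern of a single 32-bit float. The following is a minimal sketch in plain Python. The unpacking helper and the sample values (a column count of 2 and 21 frames) are assumptions for illustration. Values large enough to set every exponent bit would produce an infinity or NaN bit pattern, so the sketch is only meant for small counts.

```python
import struct


def shorts_to_float(high, low):
    """Pack two unsigned 16-bit integers into the bits of one float."""
    if not (0 <= high <= 0xFFFF and 0 <= low <= 0xFFFF):
        raise ValueError("both values must fit in 16 bits")
    bits = (high << 16) | low
    return struct.unpack('!f', struct.pack('!I', bits))[0]


def float_to_shorts(value):
    """Recover the two 16-bit integers from the float's bit pattern."""
    bits = struct.unpack('!I', struct.pack('!f', value))[0]
    return bits >> 16, bits & 0xFFFF


packed = shorts_to_float(2, 21)   # hypothetical column count and frame count
print(float_to_shorts(packed))
```

A shader would do the equivalent of `float_to_shorts` by reinterpreting the sampled float as an unsigned integer, in the same way NormalUnpack does for the normal.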

The script is as follows.

import bpy

import math

import numpy as np

import struct

class TextureClass:

def __init__(self, texture_name, width, height):

self.image = bpy.data.images.get(texture_name)

if not self.image:

self.image = bpy.data.images.new(texture_name, width=width, height=height, alpha=True, float_buffer=True)

elif self.image.size[0] != width or self.image.size[1] != height:

self.image.scale(width, height)

self.image.file_format = 'OPEN_EXR'

self.point = np.array(self.image.pixels[:])

self.point.resize(height, width * 4)

self.point[:] = 0

self.point_R = self.point[::, 0::4]

self.point_G = self.point[::, 1::4]

self.point_B = self.point[::, 2::4]

self.point_A = self.point[::, 3::4]

def SetPixel(self, py, px, r, g, b, a):

self.point_R[py][px] = r

self.point_G[py][px] = g

self.point_B[py][px] = b

self.point_A[py][px] = a

def Export(self):

self.image.pixels = self.point.flatten()

def GetResolution(max_resolution, vertex_num, frame):

height = -1

width = -1

column = 1

for column in range(1, max_resolution + 1):

for i in range(0, int(math.log2(max_resolution)) + 1):

if vertex_num <= (1 << i) * column:

width = 1 << i

break

if width != -1:

break

for i in range(0, int(math.log2(max_resolution)) + 1):

if frame * column + 1 <= (1 << i):

height = 1 << i

break

return width, height, column

def FixUV(mesh, width, height):

uv_layer = mesh.uv_layers.get("VertexUV")

if not uv_layer:

uv_layer = mesh.uv_layers.new(name="VertexUV")

for face in mesh.polygons:

for i, loop_index in enumerate(face.loop_indices):

uv_layer.data[loop_index].uv = [

loop_index % width / width + 1 / (width * 2),

- loop_index // width / height + 1 / (height * 2)

+ (loop_index // width + 1) / height + 1 / (height * 2)

]

def FixUV_Dupe(mesh, width, height):

uv_layer = mesh.uv_layers.get("VertexUV")

if not uv_layer:

uv_layer = mesh.uv_layers.new(name="VertexUV")

seen_vertices = {}

for vertex in mesh.vertices:

vertex_co = vertex.co

# Add only if this position has not been seen yet

if tuple(vertex_co) not in seen_vertices:

seen_vertices[tuple(vertex_co)] = None

for polygon in mesh.polygons:

for i, loop_index in enumerate(polygon.loop_indices):

vertex_co = mesh.vertices[polygon.vertices[i]].co

if tuple(vertex_co) in seen_vertices:

index = list(seen_vertices.keys()).index(tuple(vertex_co))

uv_layer.data[loop_index].uv = [

index % width / width + 1 / (width * 2),

- index // width / height + 1 / (height * 2)

+ (index // width + 1) / height + 1 / (height * 2)

]

def GetMesh(obj, frame):

bpy.context.scene.frame_set(frame)

bpy.context.view_layer.update()

depsgraph = bpy.context.evaluated_depsgraph_get()

eval_obj = obj.evaluated_get(depsgraph)

return eval_obj.to_mesh()

def NormalToFloat(x, y, z):

x_8bit, y_8bit, z_8bit = map(lambda v: int((v / 2 + 0.5) * 255), (x, y, z))

packed_24bit = (x_8bit << 16) | (y_8bit << 8) | z_8bit

return struct.unpack('!f', struct.pack('!I', packed_24bit))[0]

+ def ShortsToFloat(value1, value2):

+ packed_32bit = (value1 << 16) | value2

+ return struct.unpack('!f', struct.pack('!I', packed_32bit))[0]

def Main(obj, max_resolution, hard_edge, vertex_compress):

if vertex_compress:

hard_edge = False

start_frame = bpy.context.scene.frame_start

end_frame = bpy.context.scene.frame_end

vertex_num = len(obj.data.vertices) \

if vertex_compress \

else len([loop for polygon in obj.data.polygons for loop in polygon.loop_indices])

width, height, column = GetResolution(max_resolution, vertex_num, end_frame - start_frame + 1)

if width == -1 or height == -1:

print("Too many vertices or frames for the maximum resolution")

return

texture = TextureClass(obj.name + '_pos', width, height)

+ param = [ShortsToFloat(column, end_frame - start_frame), 0, 0, 1]

+ texture.SetPixel(0, 0, *param)

if vertex_compress:

FixUV_Dupe(obj.data, width, height)

else:

FixUV(obj.data, width, height)

uv_layer = obj.data.uv_layers.get("VertexUV")

for frame in range(start_frame, end_frame + 1):

mesh = GetMesh(obj, frame)

for polygon in mesh.polygons:

for i, loop_index in enumerate(polygon.loop_indices):

uv = uv_layer.data[loop_index].uv

pixel = [int(uv.y * height), int(uv.x * width)]

pixel[0] += (frame - start_frame) * column

vertex_pos = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].co

normal = obj.matrix_world @ polygon.normal - obj.matrix_world.translation \

if hard_edge \

else obj.matrix_world @ mesh.vertices[polygon.vertices[i]].normal - obj.matrix_world.translation

normal = normal.normalized()

texture.SetPixel(*pixel, *vertex_pos, NormalToFloat(*normal))

texture.Export()

obj = bpy.context.active_object

hard_edge = True

vertex_compress = False

Main(obj, 128, hard_edge, vertex_compress)
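The packing done by ShortsToFloat, and the unpacking the shader has to do on the other side, can be checked as a round trip outside Blender. The following is a minimal sketch, not the script's own code: `shorts_to_float` mirrors ShortsToFloat with an added range check, and `float_to_shorts` is a hypothetical Python counterpart of the shader-side unpack.

```python
import struct


def shorts_to_float(value1, value2):
    """Reinterpret two unsigned 16-bit integers as the bits of one float32."""
    if not (0 <= value1 <= 0xFFFF and 0 <= value2 <= 0xFFFF):
        raise ValueError("both values must fit in 16 bits")
    packed_32bit = (value1 << 16) | value2
    # '!I' writes the 32-bit pattern, '!f' reads the same bytes back as a float.
    return struct.unpack('!f', struct.pack('!I', packed_32bit))[0]


def float_to_shorts(value):
    """Hypothetical inverse: recover the two 16-bit integers from the float."""
    packed_32bit = struct.unpack('!I', struct.pack('!f', value))[0]
    return packed_32bit >> 16, packed_32bit & 0xFFFF


# Example: column = 2, last frame offset = 63
param = shorts_to_float(2, 63)
column, max_motion = float_to_shorts(param)
```

One caveat worth keeping in mind: for small column values the resulting bit pattern is a denormal float, and high halves of 0x7F80 or above produce infinity or NaN patterns. Whether every exporter, importer, and GPU preserves such values bit for bit is an assumption that should be verified in the actual pipeline.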

Now let's update the shader as well.

Shader "nekoya/VAT"

{

Properties

{

_Texture("Position Texture", 2D) = "white" {}

_Motion("Motion", Float) = 0.0

[Toggle]_IsLerp("Is Lerp", Float) = 0.0

_MainTex ("Texture", 2D) = "white" {}

}

SubShader

{

Tags { "RenderType"="Opaque" "LightMode" = "ForwardBase" }

LOD 100

Pass

{

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

struct appdata

{

float4 vertex : POSITION;

float2 uv : TEXCOORD0;

float2 VertexUV : TEXCOORD1;

};

struct v2f

{

float4 vertex : SV_POSITION;

float2 uv : TEXCOORD0;

half3 normal : TEXCOORD1;

};

sampler2D _MainTex, _Texture;

float4 _MainTex_ST, _Texture_TexelSize;

fixed4 _LightColor0;

float _Motion;

half3 NormalUnpack(float v){

uint ix = asuint(v);

half3 normal = half3((ix & 0x00FF0000) >> 16, (ix & 0x0000FF00) >> 8, ix & 0x000000FF);

return ((normal / 255.0f) - 0.5f) * 2.0f;

}

+ void ShortUnpack(float v, out int v1, out int v2){

+ uint ix = asuint(v);

+ v1 = (ix & 0xFFFF0000) >> 16;

+ v2 = (ix & 0x0000FFFF);

+ }

#pragma shader_feature _ISLERP_ON

v2f vert (appdata v)

{

+ int column, maxMotion;

+ float4 param = tex2Dlod(_Texture, 0);

+ ShortUnpack(param.r, column, maxMotion);

- float motion = _Motion % _MaxMotion;

+ float motion = _Motion % maxMotion;

float2 uv = v.VertexUV;

- uv.y += floor(motion) * _Texture_TexelSize.y;

+ uv.y += floor(motion) * _Texture_TexelSize.y * column;

float4 tex = tex2Dlod(_Texture, float4(uv, 0, 0));

float4 pos = float4(tex.r, tex.b, tex.g, v.vertex.w);

half3 normal = NormalUnpack(tex.a);

normal = normalize(half3(normal.x, normal.z, normal.y));

# ifdef _ISLERP_ON

- uv.y = (motion >= _MaxMotion - 1.0f)

- ? v.VertexUV.y + _Texture_TexelSize.y

- : v.VertexUV.y + ceil(motion) * _Texture_TexelSize.y;

+ uv.y = (motion >= maxMotion - 1.0f)

+ ? v.VertexUV.y + _Texture_TexelSize.y * column

+ : v.VertexUV.y + ceil(motion) * _Texture_TexelSize.y * column;

float4 tex2 = tex2Dlod(_Texture, float4(uv, 0, 0));

float4 pos2 = float4(tex2.r, tex2.b, tex2.g, v.vertex.w);

half3 normal2 = NormalUnpack(tex2.a);

normal2 = normalize(half3(normal2.x, normal2.z, normal2.y));

pos = lerp(pos, pos2, frac(motion));

normal = lerp(normal, normal2, frac(motion));

#endif

v2f o;

o.vertex = UnityObjectToClipPos(pos);

o.normal = UnityObjectToWorldNormal(normal);

o.uv = TRANSFORM_TEX(v.uv, _MainTex);

return o;

}

fixed4 frag (v2f i) : SV_Target

{

fixed4 col = tex2D(_MainTex, i.uv);

col.rgb *= clamp(dot(i.normal, _WorldSpaceLightPos0), .2f, .98f) * _LightColor0;

return col;

}

ENDCG

}

}

}
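The column value that the shader reads from the texture is decided by GetResolution. Because the listing above has lost its indentation, here is the same search restated as a small standalone sketch with a worked example. The name `get_resolution` and the parameter name `frame_count` are illustrative only; the logic is intended to match GetResolution as written.

```python
import math


def get_resolution(max_resolution, vertex_num, frame_count):
    """Return (width, height, column), or -1 for a size that does not fit.

    width  : power-of-two texture width
    column : number of texture rows used per animation frame
    height : power-of-two height covering every frame plus the parameter row
    """
    max_exp = int(math.log2(max_resolution))
    width = -1
    height = -1
    column = 1
    # Use as few rows per frame as possible, then the narrowest width that fits.
    for column in range(1, max_resolution + 1):
        for i in range(max_exp + 1):
            if vertex_num <= (1 << i) * column:
                width = 1 << i
                break
        if width != -1:
            break
    # One extra row (the "+ 1") is reserved for the parameter pixel in row 0.
    for i in range(max_exp + 1):
        if frame_count * column + 1 <= (1 << i):
            height = 1 << i
            break
    return width, height, column


# 100 vertices do not fit in one 64-pixel row, so two rows per frame are used.
example = get_resolution(64, 100, 20)
```

With a limit of 64, 100 vertices, and 20 frames, this yields a 64 x 64 texture with two rows per frame, which is why the shader multiplies the row offset by column.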

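Combining multiple objects

So far the object has been obtained with bpy.context.active_object, which only ever returns the single active object.

Using bpy.context.selected_objects instead returns every selected object.

Let's also modify each step so that it handles multiple objects.

The script is as follows.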

import bpy

import math

import numpy as np

import struct

class TextureClass:

def __init__(self, texture_name, width, height):

self.image = bpy.data.images.get(texture_name)

if not self.image:

self.image = bpy.data.images.new(texture_name, width=width, height=height, alpha=True, float_buffer=True)

elif self.image.size[0] != width or self.image.size[1] != height:

self.image.scale(width, height)

self.image.file_format = 'OPEN_EXR'

self.point = np.array(self.image.pixels[:])

self.point.resize(height, width * 4)

self.point[:] = 0

self.point_R = self.point[::, 0::4]

self.point_G = self.point[::, 1::4]

self.point_B = self.point[::, 2::4]

self.point_A = self.point[::, 3::4]

def SetPixel(self, py, px, r, g, b, a):

self.point_R[py][px] = r

self.point_G[py][px] = g

self.point_B[py][px] = b

self.point_A[py][px] = a

def Export(self):

self.image.pixels = self.point.flatten()

def GetResolution(max_resolution, vertex_num, frame):

height = -1

width = -1

column = 1

for column in range(1, max_resolution + 1):

for i in range(0, int(math.log2(max_resolution)) + 1):

if vertex_num <= (1 << i) * column:

width = 1 << i

break

if width != -1:

break

for i in range(0, int(math.log2(max_resolution)) + 1):

if frame * column + 1 <= (1 << i):

height = 1 << i

break

return width, height, column

- def FixUV(mesh, width, height):

+ def FixUV(meshs, width, height):

+ count = 0

+ for mesh in meshs:

uv_layer = mesh.uv_layers.get("VertexUV")

if not uv_layer:

uv_layer = mesh.uv_layers.new(name="VertexUV")

for face in mesh.polygons:

for i, loop_index in enumerate(face.loop_indices):

- uv_layer.data[loop_index].uv = [

- loop_index % width / width + 1 / (width * 2),

- (loop_index // width + 1) / height + 1 / (height * 2)

- ]

+ uv_layer.data[loop_index].uv = [

+ count % width / width + 1 / (width * 2),

+ (count // width + 1) / height + 1 / (height * 2)

+ ]

+ count += 1

- def FixUV_Dupe(mesh, width, height):

+ def FixUV_Dupe(meshs, width, height):

+ count = 0

+ for mesh in meshs:

uv_layer = mesh.uv_layers.get("VertexUV")

if not uv_layer:

uv_layer = mesh.uv_layers.new(name="VertexUV")

seen_vertices = {}

for vertex in mesh.vertices:

vertex_co = vertex.co

# Add only if this position has not been seen yet

if tuple(vertex_co) not in seen_vertices:

seen_vertices[tuple(vertex_co)] = None

for polygon in mesh.polygons:

for i, loop_index in enumerate(polygon.loop_indices):

vertex_co = mesh.vertices[polygon.vertices[i]].co

if tuple(vertex_co) in seen_vertices:

- index = list(seen_vertices.keys()).index(tuple(vertex_co))

+ index = list(seen_vertices.keys()).index(tuple(vertex_co)) + count

uv_layer.data[loop_index].uv = [

index % width / width + 1 / (width * 2),

(index // width + 1) / height + 1 / (height * 2)

]

+ count += len(seen_vertices)

- def GetMesh(obj, frame):

- bpy.context.scene.frame_set(frame)

- bpy.context.view_layer.update()

- depsgraph = bpy.context.evaluated_depsgraph_get()

- eval_obj = obj.evaluated_get(depsgraph)

- return eval_obj.to_mesh()

def NormalToFloat(x, y, z):

x_8bit, y_8bit, z_8bit = map(lambda v: int((v / 2 + 0.5) * 255), (x, y, z))

packed_24bit = (x_8bit << 16) | (y_8bit << 8) | z_8bit

return struct.unpack('!f', struct.pack('!I', packed_24bit))[0]

def ShortsToFloat(value1, value2):

packed_32bit = (value1 << 16) | value2

return struct.unpack('!f', struct.pack('!I', packed_32bit))[0]

def Main(objs, max_resolution, hard_edge, vertex_compress):

if vertex_compress:

hard_edge = False

start_frame = bpy.context.scene.frame_start

end_frame = bpy.context.scene.frame_end

vertex_num = sum(len(obj.data.vertices) for obj in objs) \

if vertex_compress \

else len([loop for obj in objs for polygon in obj.data.polygons for loop in polygon.loop_indices])

width, height, column = GetResolution(max_resolution, vertex_num, end_frame - start_frame + 1)

if width == -1 or height == -1:

print("Too many vertices or frames for the maximum resolution")

return

texture = TextureClass(objs[0].name + '_pos', width, height)

param = [ShortsToFloat(column, end_frame - start_frame), 0, 0, 1]

texture.SetPixel(0, 0, *param)

+ bpy.context.scene.frame_set(0)

+ origins = {}

+ for obj in objs:

+ origins[obj] = obj.location.copy()

if vertex_compress:

- FixUV_Dupe(obj.data, width, height)

+ FixUV_Dupe([obj.data for obj in objs], width, height)

else:

- FixUV(obj.data, width, height)

+ FixUV([obj.data for obj in objs], width, height)

- uv_layer = obj.data.uv_layers.get("VertexUV")

for frame in range(start_frame, end_frame + 1):

- mesh = GetMesh(obj, frame)

+ bpy.context.scene.frame_set(frame)

+ bpy.context.view_layer.update()

+ depsgraph = bpy.context.evaluated_depsgraph_get()

+ for obj in objs:

+ eval_obj = obj.evaluated_get(depsgraph)

+ mesh = eval_obj.to_mesh()

+ uv_layer = eval_obj.data.uv_layers.get("VertexUV")

for polygon in mesh.polygons:

for i, loop_index in enumerate(polygon.loop_indices):

uv = uv_layer.data[loop_index].uv

pixel = [int(uv.y * height), int(uv.x * width)]

pixel[0] += (frame - start_frame) * column

vertex_pos = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].co - origins[obj]

normal = obj.matrix_world @ polygon.normal - obj.matrix_world.translation \

if hard_edge \

else obj.matrix_world @ mesh.vertices[polygon.vertices[i]].normal - obj.matrix_world.translation

normal = normal.normalized()

texture.SetPixel(*pixel, *vertex_pos, NormalToFloat(*normal))

texture.Export()

objs = bpy.context.selected_objects

hard_edge = True

vertex_compress = False

Main(objs, 1024, hard_edge, vertex_compress)
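The key change in FixUV is the single counter shared by all meshes, so that each object continues where the previous one stopped instead of starting again at pixel 0. A minimal sketch of that layout without bpy is shown below; `assign_pixels` and its `loop_counts` argument are hypothetical names used only for this illustration.

```python
def assign_pixels(loop_counts, width):
    """Give every loop of every mesh its own (row, column) pixel.

    loop_counts : number of loops (face corners) in each mesh, in order
    width       : texture width in pixels
    Row 0 is skipped because it holds the parameter pixel.
    """
    count = 0  # shared across all meshes, as in the multi-object FixUV
    layout = []
    for loops in loop_counts:
        pixels = []
        for _ in range(loops):
            pixels.append((count // width + 1, count % width))
            count += 1
        layout.append(pixels)
    return layout


# Two meshes with 3 and 4 loops in a 4-pixel-wide texture
layout = assign_pixels([3, 4], 4)
```

Here the second mesh starts at column 3 of row 1 and then wraps onto row 2, so no two objects write to the same pixel.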

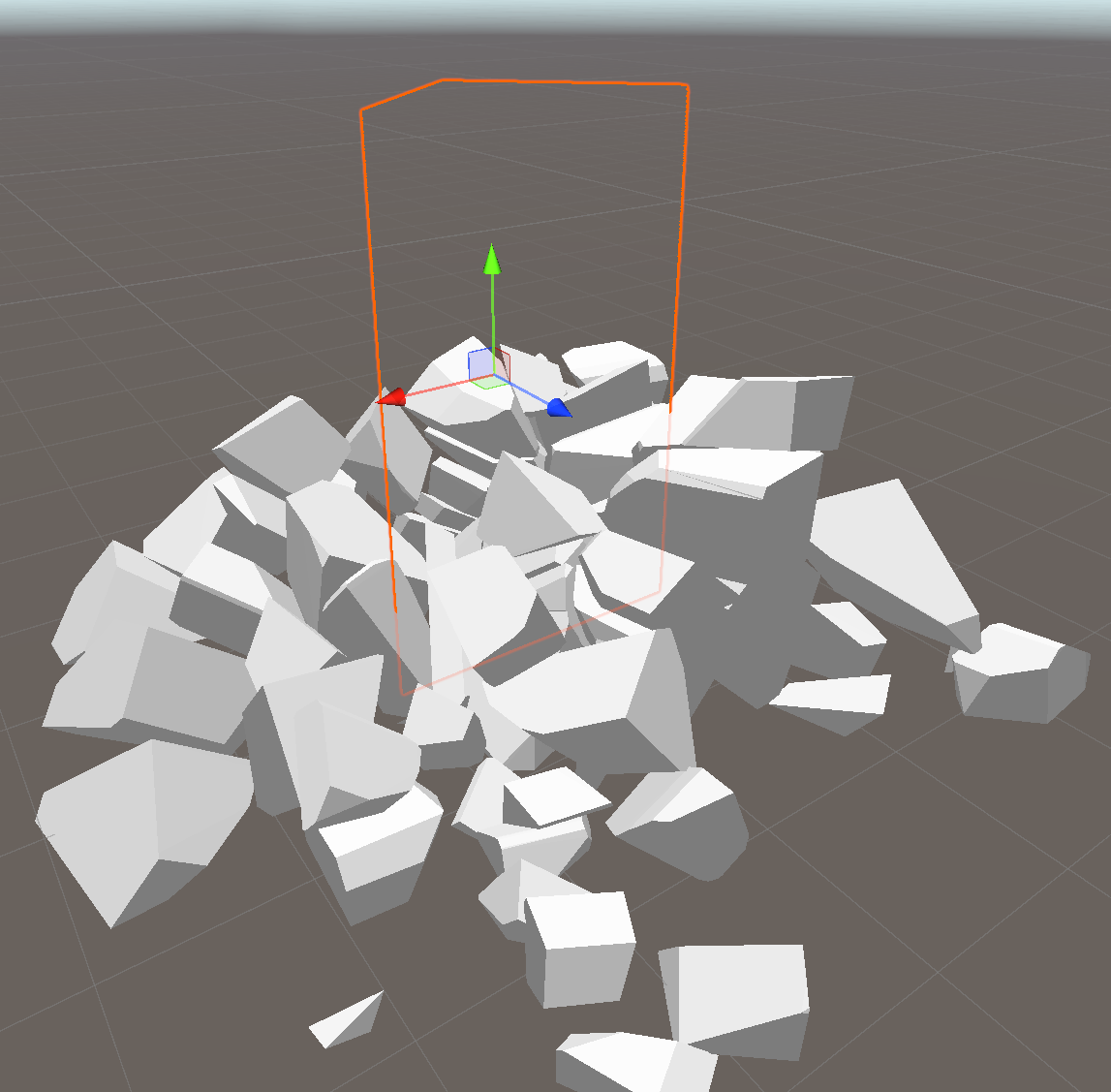

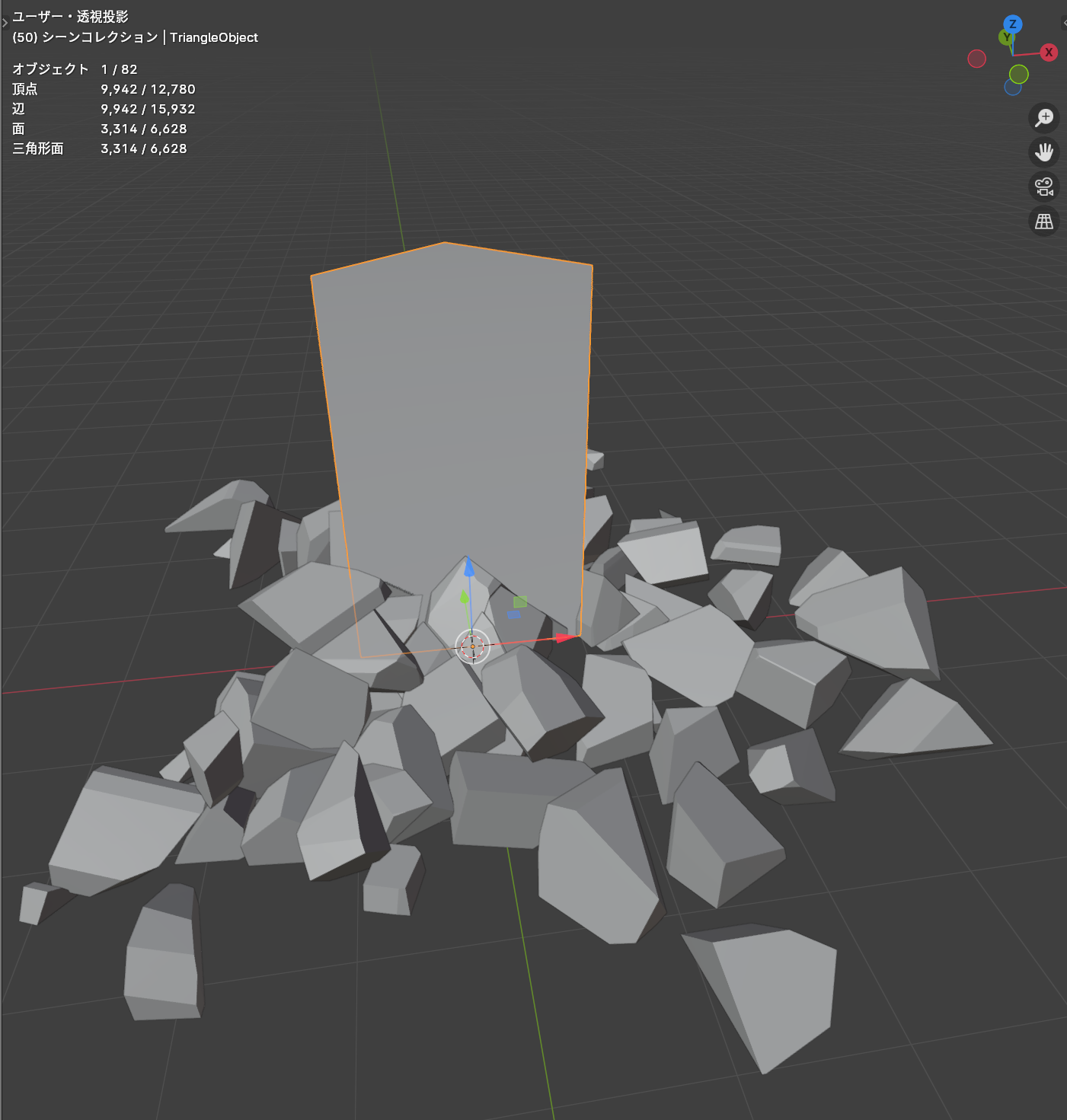

To make things a little more lavish, I created an animation of a cube collapsing.

The entire animation is contained in a single texture.

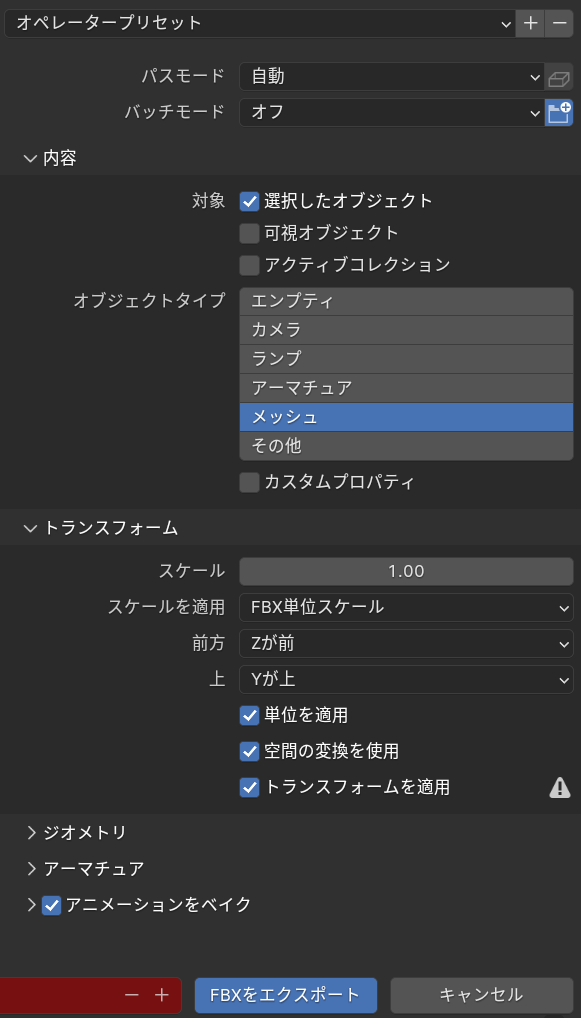

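Note that Blender and Unity use different coordinate axes, so this has to be adjusted when exporting the FBX.

The FBX export settings are as follows.

Apply Scalings: FBX Units Scale

Forward: Z Forward

Up: Y Up

Apply Transform: ON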
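If you prefer to export from a script, the settings above correspond to keyword arguments of Blender's FBX export operator. The sketch below only builds the arguments and calls the operator when it is run inside Blender. The output path is a placeholder, and `use_selection=True` is an assumption that is not part of the settings listed above.

```python
def unity_fbx_export_kwargs(filepath):
    """Keyword arguments for bpy.ops.export_scene.fbx matching the settings above."""
    return {
        "filepath": filepath,
        "use_selection": True,                     # assumption: export only the selection
        "apply_scale_options": "FBX_SCALE_UNITS",  # Apply Scalings: FBX Units Scale
        "axis_forward": "Z",                       # Forward: Z Forward
        "axis_up": "Y",                            # Up: Y Up
        "bake_space_transform": True,              # Apply Transform: ON
    }


try:
    import bpy  # only available inside Blender
except ImportError:
    bpy = None

kwargs = unity_fbx_export_kwargs("//vat_object.fbx")  # placeholder path
if bpy is not None:
    bpy.ops.export_scene.fbx(**kwargs)
```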

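Creating a display object and using vertex IDs

So far, we have exported the animated object itself as an FBX and applied the material to it.

This time, let's create a separate object that has as many polygons as all the selected objects combined, and export that as the FBX instead.

We have also been using UVs to look up the vertex data; this time the shader is changed to look it up by vertex ID.

The script is as follows.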

import bpy

import math

import numpy as np

import struct

class TextureClass:

def __init__(self, texture_name, width, height):

self.image = bpy.data.images.get(texture_name)

if not self.image:

self.image = bpy.data.images.new(texture_name, width=width, height=height, alpha=True, float_buffer=True)

elif self.image.size[0] != width or self.image.size[1] != height:

self.image.scale(width, height)

self.image.file_format = 'OPEN_EXR'

self.point = np.array(self.image.pixels[:])

self.point.resize(height, width * 4)

self.point[:] = 0

self.point_R = self.point[::, 0::4]

self.point_G = self.point[::, 1::4]

self.point_B = self.point[::, 2::4]

self.point_A = self.point[::, 3::4]

def SetPixel(self, py, px, r, g, b, a):

self.point_R[py][px] = r

self.point_G[py][px] = g

self.point_B[py][px] = b

self.point_A[py][px] = a

def Export(self):

self.image.pixels = self.point.flatten()

+ def GetVertexMax(objs):

+ bpy.context.scene.frame_set(0)

+ bpy.context.view_layer.update()

+ depsgraph = bpy.context.evaluated_depsgraph_get()

+

+ vertex = 0

+ for obj in objs:

+ mesh = obj.evaluated_get(depsgraph).to_mesh()

+ for polygon in mesh.polygons:

+ if len(polygon.vertices) >= 4:

+ return -1

+ vertex += len(polygon.vertices)

+

+ return vertex

def GetResolution(max_resolution, vertex_num, frame):

height = -1

width = -1

column = 1

for column in range(1, max_resolution + 1):

for i in range(0, int(math.log2(max_resolution)) + 1):

if vertex_num <= (1 << i) * column:

width = 1 << i

break

if width != -1:

break

for i in range(0, int(math.log2(max_resolution)) + 1):

if frame * column + 1 <= (1 << i):

height = 1 << i

break

return width, height, column

def FixUV(meshs, width, height):

count = 0

for mesh in meshs:

uv_layer = mesh.uv_layers.get("VertexUV")

if not uv_layer:

uv_layer = mesh.uv_layers.new(name="VertexUV")

for face in mesh.polygons:

for i, loop_index in enumerate(face.loop_indices):

uv_layer.data[loop_index].uv = [

count % width / width + 1 / (width * 2),

(count // width + 1) / height + 1 / (height * 2)

]

count += 1

def FixUV_Dupe(meshs, width, height):

count = 0

for mesh in meshs:

uv_layer = mesh.uv_layers.get("VertexUV")

if not uv_layer:

uv_layer = mesh.uv_layers.new(name="VertexUV")

seen_vertices = {}

for vertex in mesh.vertices:

vertex_co = vertex.co

# Add only if this position has not been seen yet

if tuple(vertex_co) not in seen_vertices:

seen_vertices[tuple(vertex_co)] = None

for polygon in mesh.polygons:

for i, loop_index in enumerate(polygon.loop_indices):

vertex_co = mesh.vertices[polygon.vertices[i]].co

if tuple(vertex_co) in seen_vertices:

index = list(seen_vertices.keys()).index(tuple(vertex_co)) + count

uv_layer.data[loop_index].uv = [

index % width / width + 1 / (width * 2),

(index // width + 1) / height + 1 / (height * 2)

]

count += len(seen_vertices)

def NormalToFloat(x, y, z):

x_8bit, y_8bit, z_8bit = map(lambda v: int((v / 2 + 0.5) * 255), (x, y, z))

packed_24bit = (x_8bit << 16) | (y_8bit << 8) | z_8bit

return struct.unpack('!f', struct.pack('!I', packed_24bit))[0]

def ShortsToFloat(value1, value2):

packed_32bit = (value1 << 16) | value2

return struct.unpack('!f', struct.pack('!I', packed_32bit))[0]

+ def CreateObject(polygon_count):

+ mesh = bpy.data.meshes.new(name="TriangleMesh")

+ obj = bpy.data.objects.new("TriangleObject", mesh)

+ bpy.context.collection.objects.link(obj)

+

+ vertices = []

+ faces = []

+ for p in range(polygon_count):

+ vertices.append((1, 0, p * 0.001))

+ vertices.append((-1, 0, p * 0.001))

+ vertices.append((0, 1, p * 0.001))

+ faces.append((p * 3, p * 3 + 1, p * 3 + 2))

+

+ mesh.from_pydata(vertices, [], faces)

+ mesh.update()

- def Main(objs, max_resolution, hard_edge, vertex_compress):

+ def Main_UV(objs, max_resolution, hard_edge, vertex_compress):

if vertex_compress:

hard_edge = False

start_frame = bpy.context.scene.frame_start

end_frame = bpy.context.scene.frame_end

vertex_num = sum(len(obj.data.vertices) for obj in objs) \

if vertex_compress \

else len([loop for obj in objs for polygon in obj.data.polygons for loop in polygon.loop_indices])

width, height, column = GetResolution(max_resolution, vertex_num, end_frame - start_frame + 1)

if width == -1 or height == -1:

print("Too many vertices or frames for the maximum resolution")

return

texture = TextureClass(objs[0].name + '_pos', width, height)

param = [ShortsToFloat(column, end_frame - start_frame), 0, 0, 1]

texture.SetPixel(0, 0, *param)

bpy.context.scene.frame_set(0)

origins = {}

for obj in objs:

origins[obj] = obj.location.copy()

if vertex_compress:

FixUV_Dupe([obj.data for obj in objs], width, height)

else:

FixUV([obj.data for obj in objs], width, height)

for frame in range(start_frame, end_frame + 1):

bpy.context.scene.frame_set(frame)

bpy.context.view_layer.update()

depsgraph = bpy.context.evaluated_depsgraph_get()

for obj in objs:

eval_obj = obj.evaluated_get(depsgraph)

mesh = eval_obj.to_mesh()

uv_layer = eval_obj.data.uv_layers.get("VertexUV")

for polygon in mesh.polygons:

for i, loop_index in enumerate(polygon.loop_indices):

uv = uv_layer.data[loop_index].uv

pixel = [int(uv.y * height), int(uv.x * width)]

pixel[0] += (frame - start_frame) * column

vertex_pos = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].co - origins[obj]

normal = obj.matrix_world @ polygon.normal - obj.matrix_world.translation \

if hard_edge \

else obj.matrix_world @ mesh.vertices[polygon.vertices[i]].normal - obj.matrix_world.translation

normal = normal.normalized()

texture.SetPixel(*pixel, *vertex_pos, NormalToFloat(*normal))

texture.Export()

+ def Main_VertexID(objs, max_resolution, hard_edge):

+ start_frame = bpy.context.scene.frame_start

+ end_frame = bpy.context.scene.frame_end

+ vertex_max = GetVertexMax(objs)

+ if vertex_max == -1:

+ print("The mesh contains polygons that are not triangles")

+ return

+

+ width, height, column = GetResolution(max_resolution, vertex_max, end_frame - start_frame + 1)

+ if width == -1 or height == -1:

+ print("Too many vertices or frames for the maximum resolution")

+ return

+

+ texture = TextureClass(objs[0].name + '_pos', width, height)

+

+ param = [ShortsToFloat(column, end_frame - start_frame), 0, 0, 1]

+ texture.SetPixel(0, 0, *param)

+

+ for frame in range(start_frame, end_frame + 1):

+ bpy.context.scene.frame_set(frame)

+ bpy.context.view_layer.update()

+ depsgraph = bpy.context.evaluated_depsgraph_get()

+

+ count = 0

+ for obj in objs:

+ eval_obj = obj.evaluated_get(depsgraph)

+ mesh = eval_obj.to_mesh()

+ for polygon in mesh.polygons:

+ for i, loop_index in enumerate(polygon.loop_indices):

+ pixel = [count // width + 1, count % width]

+ pixel[0] += (frame - start_frame) * column

+

+ vertex_pos = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].co

+ normal = obj.matrix_world @ polygon.normal - obj.matrix_world.translation \

+ if hard_edge \

+ else obj.matrix_world @ mesh.vertices[polygon.vertices[i]].normal - obj.matrix_world.translation

+ normal = normal.normalized()

+

+ texture.SetPixel(*pixel, *vertex_pos, NormalToFloat(*normal))

+

+ count += 1

+

+ texture.Export()

+ CreateObject(math.ceil(vertex_max / 3))

objs = bpy.context.selected_objects

hard_edge = True

vertex_compress = False

+ mock_object = True

- Main(objs, 1024, hard_edge, vertex_compress)

+ if mock_object:

+ Main_VertexID(objs, 1024, hard_edge)

+ else:

+ Main_UV(objs, 1024, hard_edge, vertex_compress)

An object named TriangleObject, which has the total polygon count, has now been generated.

Import this object and the texture into Unity.

The shader is as follows.

I recommend writing it as a new, separate shader.

- Shader "nekoya/VAT"

+ Shader "nekoya/VAT_Vertex"

{

Properties

{

_Texture("Position Texture", 2D) = "white" {}

_Motion("Motion", Float) = 0.0

[Toggle]_IsLerp("Is Lerp", Float) = 0.0

- _MainTex ("Texture", 2D) = "white" {}

}

SubShader

{

Tags { "RenderType"="Opaque" "LightMode" = "ForwardBase" }

LOD 100

+ Cull Off

Pass

{

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

struct appdata

{

float4 vertex : POSITION;

- float2 uv : TEXCOORD0;

- float2 VertexUV : TEXCOORD1;

};

struct v2f

{

float4 vertex : SV_POSITION;

- float2 uv : TEXCOORD0;

half3 normal : TEXCOORD1;

};

- sampler2D _MainTex, _Texture;

+ sampler2D _Texture;

- float4 _MainTex_ST, _Texture_TexelSize;

+ float4 _Texture_TexelSize;

fixed4 _LightColor0;

float _Motion;

half3 NormalUnpack(float v){

uint ix = asuint(v);

half3 normal = half3((ix & 0x00FF0000) >> 16, (ix & 0x0000FF00) >> 8, ix & 0x000000FF);

return ((normal / 255.0f) - 0.5f) * 2.0f;

}

void ShortUnpack(float v, out int v1, out int v2){

uint ix = asuint(v);

v1 = (ix & 0xFFFF0000) >> 16;

v2 = (ix & 0x0000FFFF);

}

#pragma shader_feature _ISLERP_ON

- v2f vert (appdata v)

+ v2f vert (appdata v, uint vid : SV_VertexID)

{

int column, maxMotion;

float4 param = tex2Dlod(_Texture, 0);

ShortUnpack(param.r, column, maxMotion);

float motion = _Motion % maxMotion;

- float2 uv = v.VertexUV;

+ float2 uv = float2(

+ float(vid) % _Texture_TexelSize.z * _Texture_TexelSize.x,

+ (int(float(vid) * _Texture_TexelSize.x) + 1.0f) * _Texture_TexelSize.y

+ );

uv.y += floor(motion) * _Texture_TexelSize.y * column;

float4 tex = tex2Dlod(_Texture, float4(uv, 0, 0));

float4 pos = float4(tex.r, tex.b, tex.g, v.vertex.w);

half3 normal = NormalUnpack(tex.a);

normal = normalize(half3(normal.x, normal.z, normal.y));

# ifdef _ISLERP_ON

- uv.y = (motion >= maxMotion - 1.0f)

- ? v.VertexUV.y + _Texture_TexelSize.y * column

- : v.VertexUV.y + ceil(motion) * _Texture_TexelSize.y * column;

+ uv.y = (int(float(vid) * _Texture_TexelSize.x) + 1.0f) * _Texture_TexelSize.y;

+ uv.y += (motion >= maxMotion - 1.0f)

+ ? _Texture_TexelSize.y * column

+ : ceil(motion) * _Texture_TexelSize.y * column;

float4 tex2 = tex2Dlod(_Texture, float4(uv, 0, 0));

float4 pos2 = float4(tex2.r, tex2.b, tex2.g, v.vertex.w);

half3 normal2 = NormalUnpack(tex2.a);

normal2 = normalize(half3(normal2.x, normal2.z, normal2.y));

pos = lerp(pos, pos2, frac(motion));

normal = lerp(normal, normal2, frac(motion));

#endif

v2f o;

o.vertex = UnityObjectToClipPos(pos);

o.normal = UnityObjectToWorldNormal(normal);

return o;

}

fixed4 frag (v2f i) : SV_Target

{

- fixed4 col = tex2D(_MainTex, i.uv);

- col.rgb *= clamp(dot(i.normal, _WorldSpaceLightPos0), .2f, .98f) * _LightColor0;

- return col;

+ return clamp(dot(i.normal, _WorldSpaceLightPos0), .2f, .98f) * _LightColor0;

}

ENDCG

}

}

}
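For the vertex-ID lookup to work, the UV that the shader derives from SV_VertexID must land on the same pixel that Main_VertexID wrote for the same index. The sketch below replays both calculations in Python and compares them. It assumes power-of-two texture sizes and point (nearest) sampling, because the computed UV sits on the corner of the texel rather than its center; the function names are illustrative.

```python
import math


def writer_pixel(count, width, frame_index, column):
    """(row, col) written by the Blender script for corner number `count`."""
    return (count // width + 1 + frame_index * column, count % width)


def shader_uv(vid, width, height, frame_index, column):
    """UV computed in the vertex shader; texel = (1/width, 1/height, width, height)."""
    texel_x, texel_y, texel_z = 1.0 / width, 1.0 / height, float(width)
    u = float(vid) % texel_z * texel_x
    v = (int(float(vid) * texel_x) + 1.0) * texel_y
    v += frame_index * texel_y * column  # floor(motion) * texel.y * column
    return u, v


def uv_to_pixel(u, v, width, height):
    """Texel that a nearest-neighbour fetch at (u, v) would read."""
    return (math.floor(v * height), math.floor(u * width))


width, height, column = 8, 32, 2
mismatches = [
    (vid, frame)
    for frame in range(4)
    for vid in range(16)
    if uv_to_pixel(*shader_uv(vid, width, height, frame, column), width, height)
    != writer_pixel(vid, width, frame, column)
]
```

For these sizes the list of mismatches is empty, meaning the writer and the shader agree on every pixel. If the imported mesh is re-indexed so that its vertex order changes, this correspondence would no longer hold.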

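One drawback of this object is that it cannot be textured.

Until now, UV2 was added to an object that had already been UV-unwrapped, so the UVs used for texturing were preserved as well.

Here, however, transferring the UVs did not seem to work well, so I gave up on texturing for now.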

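Fluid support

Now let's add support for fluid objects, arguably the showpiece of VAT.

The concern with fluids is that the polygon count changes from frame to frame.

We also need to search for the maximum polygon count over the whole animation.

Fluids cannot be UV-unwrapped, so they have to go through the vertex-ID path we just created.

The script is as follows.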

import bpy

import math

import numpy as np

import struct

class TextureClass:

def __init__(self, texture_name, width, height):

self.image = bpy.data.images.get(texture_name)

if not self.image:

self.image = bpy.data.images.new(texture_name, width=width, height=height, alpha=True, float_buffer=True)

elif self.image.size[0] != width or self.image.size[1] != height:

self.image.scale(width, height)

self.image.file_format = 'OPEN_EXR'

self.point = np.array(self.image.pixels[:])

self.point.resize(height, width * 4)

self.point[:] = 0

self.point_R = self.point[::, 0::4]

self.point_G = self.point[::, 1::4]

self.point_B = self.point[::, 2::4]

self.point_A = self.point[::, 3::4]

def SetPixel(self, py, px, r, g, b, a):

self.point_R[py][px] = r

self.point_G[py][px] = g

self.point_B[py][px] = b

self.point_A[py][px] = a

def Export(self):

self.image.pixels = self.point.flatten()

+ def GetVertexMax(objs, start_frame, end_frame):

+ vertex_max = 0

+ for frame in range(start_frame, end_frame + 1):

- bpy.context.scene.frame_set(0)

+ bpy.context.scene.frame_set(frame)

bpy.context.view_layer.update()

depsgraph = bpy.context.evaluated_depsgraph_get()

vertex = 0

for obj in objs:

mesh = obj.evaluated_get(depsgraph).to_mesh()

for polygon in mesh.polygons:

if len(polygon.vertices) >= 4:

return -1

vertex += len(polygon.vertices)

+ vertex_max = max(vertex_max, vertex)

- return vertex

+ return vertex_max

def GetResolution(max_resolution, vertex_num, frame):

height = -1

width = -1

column = 1

for column in range(1, max_resolution + 1):

for i in range(0, int(math.log2(max_resolution)) + 1):

if vertex_num <= (1 << i) * column:

width = 1 << i

break

if width != -1:

break

for i in range(0, int(math.log2(max_resolution)) + 1):

if frame * column + 1 <= (1 << i):

height = 1 << i

break

return width, height, column

def FixUV(meshs, width, height):

count = 0

for mesh in meshs:

uv_layer = mesh.uv_layers.get("VertexUV")

if not uv_layer:

uv_layer = mesh.uv_layers.new(name="VertexUV")

for face in mesh.polygons:

for i, loop_index in enumerate(face.loop_indices):

uv_layer.data[loop_index].uv = [

count % width / width + 1 / (width * 2),

(count // width + 1) / height + 1 / (height * 2)

]

count += 1

def FixUV_Dupe(meshs, width, height):

count = 0

for mesh in meshs:

uv_layer = mesh.uv_layers.get("VertexUV")

if not uv_layer:

uv_layer = mesh.uv_layers.new(name="VertexUV")

seen_vertices = {}

for vertex in mesh.vertices:

vertex_co = vertex.co

# Add only if this position has not been seen yet

if tuple(vertex_co) not in seen_vertices:

seen_vertices[tuple(vertex_co)] = None

for polygon in mesh.polygons:

for i, loop_index in enumerate(polygon.loop_indices):

vertex_co = mesh.vertices[polygon.vertices[i]].co

if tuple(vertex_co) in seen_vertices:

index = list(seen_vertices.keys()).index(tuple(vertex_co)) + count

uv_layer.data[loop_index].uv = [

index % width / width + 1 / (width * 2),

(index // width + 1) / height + 1 / (height * 2)

]

count += len(seen_vertices)

def NormalToFloat(x, y, z):

x_8bit, y_8bit, z_8bit = map(lambda v: int((v / 2 + 0.5) * 255), (x, y, z))

packed_24bit = (x_8bit << 16) | (y_8bit << 8) | z_8bit

return struct.unpack('!f', struct.pack('!I', packed_24bit))[0]

def ShortsToFloat(value1, value2):

packed_32bit = (value1 << 16) | value2

return struct.unpack('!f', struct.pack('!I', packed_32bit))[0]

def CreateObject(polygon_count):

mesh = bpy.data.meshes.new(name="TriangleMesh")

obj = bpy.data.objects.new("TriangleObject", mesh)

bpy.context.collection.objects.link(obj)

vertices = []

faces = []

for p in range(polygon_count):

vertices.append((1, 0, p * 0.001))

vertices.append((-1, 0, p * 0.001))

vertices.append((0, 1, p * 0.001))

faces.append((p * 3, p * 3 + 1, p * 3 + 2))

mesh.from_pydata(vertices, [], faces)

mesh.update()

def Main_UV(objs, max_resolution, hard_edge, vertex_compress):

if vertex_compress:

hard_edge = False

start_frame = bpy.context.scene.frame_start

end_frame = bpy.context.scene.frame_end

vertex_num = sum(len(obj.data.vertices) for obj in objs) \

if vertex_compress \

else len([loop for obj in objs for polygon in obj.data.polygons for loop in polygon.loop_indices])

width, height, column = GetResolution(max_resolution, vertex_num, end_frame - start_frame + 1)

if width == -1 or height == -1:

print("Too many vertices or frames for the maximum resolution")

return

texture = TextureClass(objs[0].name + '_pos', width, height)

param = [ShortsToFloat(column, end_frame - start_frame), 0, 0, 1]

texture.SetPixel(0, 0, *param)

bpy.context.scene.frame_set(0)

origins = {}

for obj in objs:

origins[obj] = obj.location.copy()

if vertex_compress:

FixUV_Dupe([obj.data for obj in objs], width, height)

else:

FixUV([obj.data for obj in objs], width, height)

for frame in range(start_frame, end_frame + 1):

bpy.context.scene.frame_set(frame)

bpy.context.view_layer.update()

depsgraph = bpy.context.evaluated_depsgraph_get()

for obj in objs:

eval_obj = obj.evaluated_get(depsgraph)

mesh = eval_obj.to_mesh()

uv_layer = eval_obj.data.uv_layers.get("VertexUV")

for polygon in mesh.polygons:

for i, loop_index in enumerate(polygon.loop_indices):

uv = uv_layer.data[loop_index].uv

pixel = [int(uv.y * height), int(uv.x * width)]

pixel[0] += (frame - start_frame) * column

vertex_pos = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].co - origins[obj]

normal = obj.matrix_world @ polygon.normal - obj.matrix_world.translation \

if hard_edge \

else obj.matrix_world @ mesh.vertices[polygon.vertices[i]].normal - obj.matrix_world.translation

normal = normal.normalized()

texture.SetPixel(*pixel, *vertex_pos, NormalToFloat(*normal))

texture.Export()

def Main_VertexID(objs, max_resolution, hard_edge):

start_frame = bpy.context.scene.frame_start

end_frame = bpy.context.scene.frame_end

- vertex_max = GetVertexMax(objs)

+ vertex_max = GetVertexMax(objs, start_frame, end_frame)

if vertex_max == -1:

print("The mesh contains polygons that are not triangles")

return

width, height, column = GetResolution(max_resolution, vertex_max, end_frame - start_frame + 1)

if width == -1 or height == -1:

print("Too many vertices or frames for the maximum resolution")

return

texture = TextureClass(objs[0].name + '_pos', width, height)

param = [ShortsToFloat(column, end_frame - start_frame), 0, 0, 1]

texture.SetPixel(0, 0, *param)

for frame in range(start_frame, end_frame + 1):

bpy.context.scene.frame_set(frame)

bpy.context.view_layer.update()

depsgraph = bpy.context.evaluated_depsgraph_get()

count = 0

for obj in objs:

eval_obj = obj.evaluated_get(depsgraph)

mesh = eval_obj.to_mesh()

for polygon in mesh.polygons:

for i, loop_index in enumerate(polygon.loop_indices):

pixel = [count // width + 1, count % width]

pixel[0] += (frame - start_frame) * column

vertex_pos = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].co

normal = obj.matrix_world @ polygon.normal - obj.matrix_world.translation \

if hard_edge \

else obj.matrix_world @ mesh.vertices[polygon.vertices[i]].normal - obj.matrix_world.translation

normal = normal.normalized()

texture.SetPixel(*pixel, *vertex_pos, NormalToFloat(*normal))

count += 1

texture.Export()

CreateObject(math.ceil(vertex_max / 3))

objs = bpy.context.selected_objects

hard_edge = True

vertex_compress = False

mock_object = True

if mock_object:

Main_VertexID(objs, 4096, hard_edge)

else:

Main_UV(objs, 4096, hard_edge, vertex_compress)

Because the polygon count of a fluid animation is not constant, linear interpolation between motion frames cannot be used.
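To see what happens to frames that have fewer triangles than the maximum, the baking loop can be imitated with plain NumPy. This is a simplified sketch under stated assumptions: `bake_variable_frames` is a hypothetical helper, positions are passed in directly instead of being read from Blender, and the texture height is not rounded up to a power of two.

```python
import math

import numpy as np


def bake_variable_frames(frames, width):
    """Bake frames with differing corner counts into one zero-initialised texture.

    frames : list of frames, each a list of (x, y, z) triangle corners
    Returns (vertex_max, column, texture) where texture has shape (rows, width, 4).
    """
    vertex_max = max(len(corners) for corners in frames)
    column = math.ceil(vertex_max / width)   # rows reserved per frame
    height = 1 + len(frames) * column        # row 0 is the parameter row
    texture = np.zeros((height, width, 4), dtype=np.float32)
    for frame_index, corners in enumerate(frames):
        for count, (x, y, z) in enumerate(corners):
            row = count // width + 1 + frame_index * column
            texture[row, count % width, :3] = (x, y, z)
    return vertex_max, column, texture


frames = [[(1.0, 2.0, 3.0)] * 3, [(4.0, 5.0, 6.0)] * 6]
vertex_max, column, texture = bake_variable_frames(frames, width=4)
```

The first frame fills only three of its eight reserved slots, and the rest stay at zero. In the display mesh those slots become triangles whose corners all sit at the origin, so they have no area. It also shows why interpolation breaks down: slot i in one frame and slot i in the next are not guaranteed to describe the same point on the surface.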

Full script

import bpy

import math

import numpy as np

import struct

class TextureClass:

def __init__(self, texture_name, width, height):

self.image = bpy.data.images.get(texture_name)

if not self.image:

self.image = bpy.data.images.new(texture_name, width=width, height=height, alpha=True, float_buffer=True)

elif self.image.size[0] != width or self.image.size[1] != height:

self.image.scale(width, height)

self.image.file_format = 'OPEN_EXR'

self.point = np.array(self.image.pixels[:])

self.point.resize(height, width * 4)

self.point[:] = 0

self.point_R = self.point[::, 0::4]

self.point_G = self.point[::, 1::4]

self.point_B = self.point[::, 2::4]

self.point_A = self.point[::, 3::4]

def SetPixel(self, py, px, r, g, b, a):

self.point_R[py][px] = r

self.point_G[py][px] = g

self.point_B[py][px] = b

self.point_A[py][px] = a

def Export(self):

self.image.pixels = self.point.flatten()

def GetVertexMax(objs, start_frame, end_frame):

vertex_max = 0

for frame in range(start_frame, end_frame + 1):

bpy.context.scene.frame_set(frame)

bpy.context.view_layer.update()

depsgraph = bpy.context.evaluated_depsgraph_get()

vertex = 0

for obj in objs:

mesh = obj.evaluated_get(depsgraph).to_mesh()

for polygon in mesh.polygons:

if len(polygon.vertices) >= 4:

return -1

vertex += len(polygon.vertices)

vertex_max = max(vertex_max, vertex)

return vertex_max

def GetResolution(max_resolution, vertex_num, frame):
    height = -1
    width = -1
    column = 1
    for column in range(1, max_resolution + 1):
        for i in range(0, int(math.log2(max_resolution)) + 1):
            if vertex_num <= (1 << i) * column:
                width = 1 << i
                break
        if width != -1:
            break
    for i in range(0, int(math.log2(max_resolution)) + 1):
        if frame * column + 1 <= (1 << i):
            height = 1 << i
            break
    return width, height, column

def FixUV(meshs, width, height):
    count = 0
    for mesh in meshs:
        uv_layer = mesh.uv_layers.get("VertexUV")
        if not uv_layer:
            uv_layer = mesh.uv_layers.new(name="VertexUV")
        for face in mesh.polygons:
            for i, loop_index in enumerate(face.loop_indices):
                uv_layer.data[loop_index].uv = [
                    count % width / width + 1 / (width * 2),
                    (count // width + 1) / height + 1 / (height * 2)
                ]
                count += 1

def FixUV_Dupe(meshs, width, height):
    count = 0
    for mesh in meshs:
        uv_layer = mesh.uv_layers.get("VertexUV")
        if not uv_layer:
            uv_layer = mesh.uv_layers.new(name="VertexUV")
        # Map each unique vertex position to its insertion index
        seen_vertices = {}
        for vertex in mesh.vertices:
            vertex_co = tuple(vertex.co)
            # Add only if the position is not a duplicate
            if vertex_co not in seen_vertices:
                seen_vertices[vertex_co] = len(seen_vertices)
        for polygon in mesh.polygons:
            for i, loop_index in enumerate(polygon.loop_indices):
                vertex_co = tuple(mesh.vertices[polygon.vertices[i]].co)
                if vertex_co in seen_vertices:
                    # Same index as the position in the key list, without the linear search
                    index = seen_vertices[vertex_co] + count
                    uv_layer.data[loop_index].uv = [
                        index % width / width + 1 / (width * 2),
                        (index // width + 1) / height + 1 / (height * 2)
                    ]
        count += len(seen_vertices)

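def NormalToFloat(x, y, z):
    # Quantize each component from [-1, 1] to 8 bits and pack them into 24 bits
    x_8bit, y_8bit, z_8bit = map(lambda v: int((v / 2 + 0.5) * 255), (x, y, z))
    packed_24bit = (x_8bit << 16) | (y_8bit << 8) | z_8bit
    # Reinterpret the integer bit pattern as a 32-bit float
    return struct.unpack('!f', struct.pack('!I', packed_24bit))[0]

def ShortsToFloat(value1, value2):
    # Pack two 16-bit values into 32 bits and reinterpret them as a float
    packed_32bit = (value1 << 16) | value2
    return struct.unpack('!f', struct.pack('!I', packed_32bit))[0]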

def CreateObject(polygon_count):
    mesh = bpy.data.meshes.new(name="TriangleMesh")
    obj = bpy.data.objects.new("TriangleObject", mesh)
    bpy.context.collection.objects.link(obj)
    vertices = []
    faces = []
    for p in range(polygon_count):
        vertices.append((1, 0, p * 0.001))
        vertices.append((-1, 0, p * 0.001))
        vertices.append((0, 1, p * 0.001))
        faces.append((p * 3, p * 3 + 1, p * 3 + 2))
    mesh.from_pydata(vertices, [], faces)
    mesh.update()

def Main_UV(objs, max_resolution, hard_edge, vertex_compress):
    if vertex_compress:
        hard_edge = False
    start_frame = bpy.context.scene.frame_start
    end_frame = bpy.context.scene.frame_end
    vertex_num = sum(len(obj.data.vertices) for obj in objs) \
        if vertex_compress \
        else len([loop for obj in objs for polygon in obj.data.polygons for loop in polygon.loop_indices])
    width, height, column = GetResolution(max_resolution, vertex_num, end_frame - start_frame + 1)
    if width == -1 or height == -1:
        print("The vertex count or frame count is too large")
        return
    texture = TextureClass(objs[0].name + '_pos', width, height)
    # Header pixel (row 0, column 0): column count and (frame count - 1) packed into R
    param = [ShortsToFloat(column, end_frame - start_frame), 0, 0, 1]
    texture.SetPixel(0, 0, *param)
    bpy.context.scene.frame_set(0)
    origins = {}
    for obj in objs:
        origins[obj] = obj.location.copy()
    if vertex_compress:
        FixUV_Dupe([obj.data for obj in objs], width, height)
    else:
        FixUV([obj.data for obj in objs], width, height)
    for frame in range(start_frame, end_frame + 1):
        bpy.context.scene.frame_set(frame)
        bpy.context.view_layer.update()
        depsgraph = bpy.context.evaluated_depsgraph_get()
        for obj in objs:
            eval_obj = obj.evaluated_get(depsgraph)
            mesh = eval_obj.to_mesh()
            uv_layer = eval_obj.data.uv_layers.get("VertexUV")
            for polygon in mesh.polygons:
                for i, loop_index in enumerate(polygon.loop_indices):
                    uv = uv_layer.data[loop_index].uv
                    pixel = [int(uv.y * height), int(uv.x * width)]
                    # Shift the row down to this frame's block of `column` rows
                    pixel[0] += (frame - start_frame) * column
                    vertex_pos = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].co - origins[obj]
                    normal = obj.matrix_world @ polygon.normal - obj.matrix_world.translation \
                        if hard_edge \
                        else obj.matrix_world @ mesh.vertices[polygon.vertices[i]].normal - obj.matrix_world.translation
                    normal = normal.normalized()
                    texture.SetPixel(*pixel, *vertex_pos, NormalToFloat(*normal))
    texture.Export()

def Main_VertexID(objs, max_resolution, hard_edge):
    start_frame = bpy.context.scene.frame_start
    end_frame = bpy.context.scene.frame_end
    vertex_max = GetVertexMax(objs, start_frame, end_frame)
    if vertex_max == -1:
        print("The mesh contains non-triangle polygons")
        return
    width, height, column = GetResolution(max_resolution, vertex_max, end_frame - start_frame + 1)
    if width == -1 or height == -1:
        print("The vertex count or frame count is too large")
        return
    texture = TextureClass(objs[0].name + '_pos', width, height)
    # Header pixel (row 0, column 0): column count and (frame count - 1) packed into R
    param = [ShortsToFloat(column, end_frame - start_frame), 0, 0, 1]
    texture.SetPixel(0, 0, *param)
    for frame in range(start_frame, end_frame + 1):
        bpy.context.scene.frame_set(frame)
        bpy.context.view_layer.update()
        depsgraph = bpy.context.evaluated_depsgraph_get()
        # Running vertex index within this frame, shared across all objects
        count = 0
        for obj in objs:
            eval_obj = obj.evaluated_get(depsgraph)
            mesh = eval_obj.to_mesh()
            for polygon in mesh.polygons:
                for i, loop_index in enumerate(polygon.loop_indices):
                    pixel = [count // width + 1, count % width]
                    # Shift the row down to this frame's block of `column` rows
                    pixel[0] += (frame - start_frame) * column
                    vertex_pos = obj.matrix_world @ mesh.vertices[polygon.vertices[i]].co
                    normal = obj.matrix_world @ polygon.normal - obj.matrix_world.translation \
                        if hard_edge \
                        else obj.matrix_world @ mesh.vertices[polygon.vertices[i]].normal - obj.matrix_world.translation
                    normal = normal.normalized()
                    texture.SetPixel(*pixel, *vertex_pos, NormalToFloat(*normal))
                    count += 1
    texture.Export()
    CreateObject(math.ceil(vertex_max / 3))
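
The script stores the normal and the header values by reinterpreting integer bit patterns as floats, so whatever reads the texture has to reverse that step. The following plain-Python sketch shows one way the round trip could look. `FloatToNormal` and `FloatToShorts` are hypothetical names that do not appear in the script, and the Unity shader would need its own equivalent of this bit reinterpretation. Because each normal component is quantized to 8 bits, the decoded normal only approximates the input.

```python
import struct

def NormalToFloat(x, y, z):
    # Same packing as in the script: three 8-bit components in the low 24 bits.
    x_8bit, y_8bit, z_8bit = (int((v / 2 + 0.5) * 255) for v in (x, y, z))
    packed_24bit = (x_8bit << 16) | (y_8bit << 8) | z_8bit
    return struct.unpack('!f', struct.pack('!I', packed_24bit))[0]

def ShortsToFloat(value1, value2):
    # Same packing as in the script: two 16-bit values in one 32-bit pattern.
    packed_32bit = (value1 << 16) | value2
    return struct.unpack('!f', struct.pack('!I', packed_32bit))[0]

def FloatToNormal(value):
    # Hypothetical inverse: recover the bit pattern, then map 0..255 back to -1..1.
    bits = struct.unpack('!I', struct.pack('!f', value))[0]
    components = ((bits >> 16) & 0xFF, (bits >> 8) & 0xFF, bits & 0xFF)
    return tuple((c / 255 - 0.5) * 2 for c in components)

def FloatToShorts(value):
    # Hypothetical inverse: split the 32-bit pattern into its two 16-bit halves.
    bits = struct.unpack('!I', struct.pack('!f', value))[0]
    return bits >> 16, bits & 0xFFFF

normal_in = (0.0, 0.0, 1.0)
normal_out = FloatToNormal(NormalToFloat(*normal_in))

# Example header: 2 rows per frame, last frame index 59 (sample values only).
header_out = FloatToShorts(ShortsToFloat(2, 59))
```

The decoded normal differs from the input by at most the quantization step, while the two header integers come back exactly.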