Disclaimer

Note that the approach presented in this article is meant to be used only within a trusted network, for the following reasons:

- The code is vulnerable to command injection (as pointed out by my colleague and supervisor E. Torii).

- Exposing /var/run/docker.sock gives the container root privileges on the host (as pointed out by my colleague and supervisor E. Torii).

Again, I would like to emphasize that this is NOT a solution I would recommend for production environments at one's workplace.

Problem Statement

It is often desirable to run Docker containers from inside another Docker container.

One example is the Docker-based Airflow deployment discussed below, where the docker command does not work out of the box with LocalExecutor (and hence DockerOperators do not work either).

One solution would be to build a privileged container and run Docker within it; however, this is known to pose a security threat (moreover, I personally find such containers difficult to set up).

Besides, if you already use Docker this necessarily means that you have at least one working Docker deployment -- on your host machine! So why not try to leverage it? That is the basic idea.

Solution

I have put together an image, which I will call dood-flask in what follows (GitHub repo, Dockerfile, Dockerhub Image). It can be easily hooked up to your existing Docker installation (see the sample docker-compose.yml) and comes equipped with a simple Flask server, so it can run Docker images when triggered via HTTP requests and hence can be easily added to existing infrastructure (more on that later).

Let us take it for a spin:

> git clone https://github.com/nailbiter/dood-flask/

> cd dood-flask

> docker-compose up --build -d

> docker-compose run --rm busybox ash

/home # curl -X POST -H 'Content-type: application/json' --data '{"image":"hello-world"}' web:5000

{"error_code": 0, "output": "\nHello from Docker!\nThis message shows that your installation appears to be working correctly.\n\nTo generate this message, Docker took the following steps:\n 1. The Docker client contacted the Docker daemon.\n 2. The Docker daemon pulled the \"hello-world\" image from the Docker Hub.\n (amd64)\n 3. The Docker daemon created a new container from that image which runs the\n executable that produces the output you are currently reading.\n 4. The Docker daemon streamed that output to the Docker client, which sent it\n to your terminal.\n\nTo try something more ambitious, you can run an Ubuntu container with:\n $ docker run -it ubuntu bash\n\nShare images, automate workflows, and more with a free Docker ID:\n https://hub.docker.com/\n\nFor more examples and ideas, visit:\n https://docs.docker.com/get-started/\n"}

/home # curl -X POST -H 'Content-type: application/json' --data '{"image":"busybox","cmd":"echo hi"}' web:5000

{"error_code": 0, "output": "hi"}

/home # exit

The server's output is JSON, so it may be a bit hard to read, but the point is that (inside the docker-compose network) a POST request to web:5000 lets us run containers (specified via image in the JSON payload) with optional commands (specified via cmd). The server runs the image and returns the error code and text output (error_code and output, respectively).
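The same request can also be issued from code rather than from curl. Below is a minimal Python sketch of such a client, using only the standard library. The helper names (build_payload, parse_response, run_image) are hypothetical and not part of dood-flask; the URL web:5000 and the JSON fields image, cmd, error_code and output are taken from the session above, and the URL is assumed to resolve only inside the docker-compose network.

```python
import json
import urllib.request

# Assumption: this hostname resolves only inside the docker-compose network
# used in the session above.
DOOD_URL = "http://web:5000"


def build_payload(image, cmd=None):
    """Encode the request body: "image" is required, "cmd" is optional."""
    body = {"image": image}
    if cmd is not None:
        body["cmd"] = cmd
    return json.dumps(body).encode("utf-8")


def parse_response(raw):
    """Extract (error_code, output) from the server's JSON reply."""
    reply = json.loads(raw)
    return reply["error_code"], reply["output"]


def run_image(image, cmd=None, url=DOOD_URL):
    """POST the request to the server and return (error_code, output)."""
    request = urllib.request.Request(
        url,
        data=build_payload(image, cmd),
        headers={"Content-type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return parse_response(response.read())

# Example call (only works from a container attached to the same network):
#   error_code, output = run_image("busybox", "echo hi")
```

Keeping payload construction and response parsing in separate functions makes it possible to check them without a running server.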

Applications

Docker-in-Docker-based-Airflow

One application is enabling Docker in a Docker-based Airflow deployment (as briefly mentioned above):

> git clone https://github.com/nailbiter/docker-airflow

> cd docker-airflow

> docker-compose -f docker-compose-LocalExecutor.yml up --build -d

> docker-compose -f docker-compose-LocalExecutor.yml run --rm webserver airflow unpause tutorial_docker

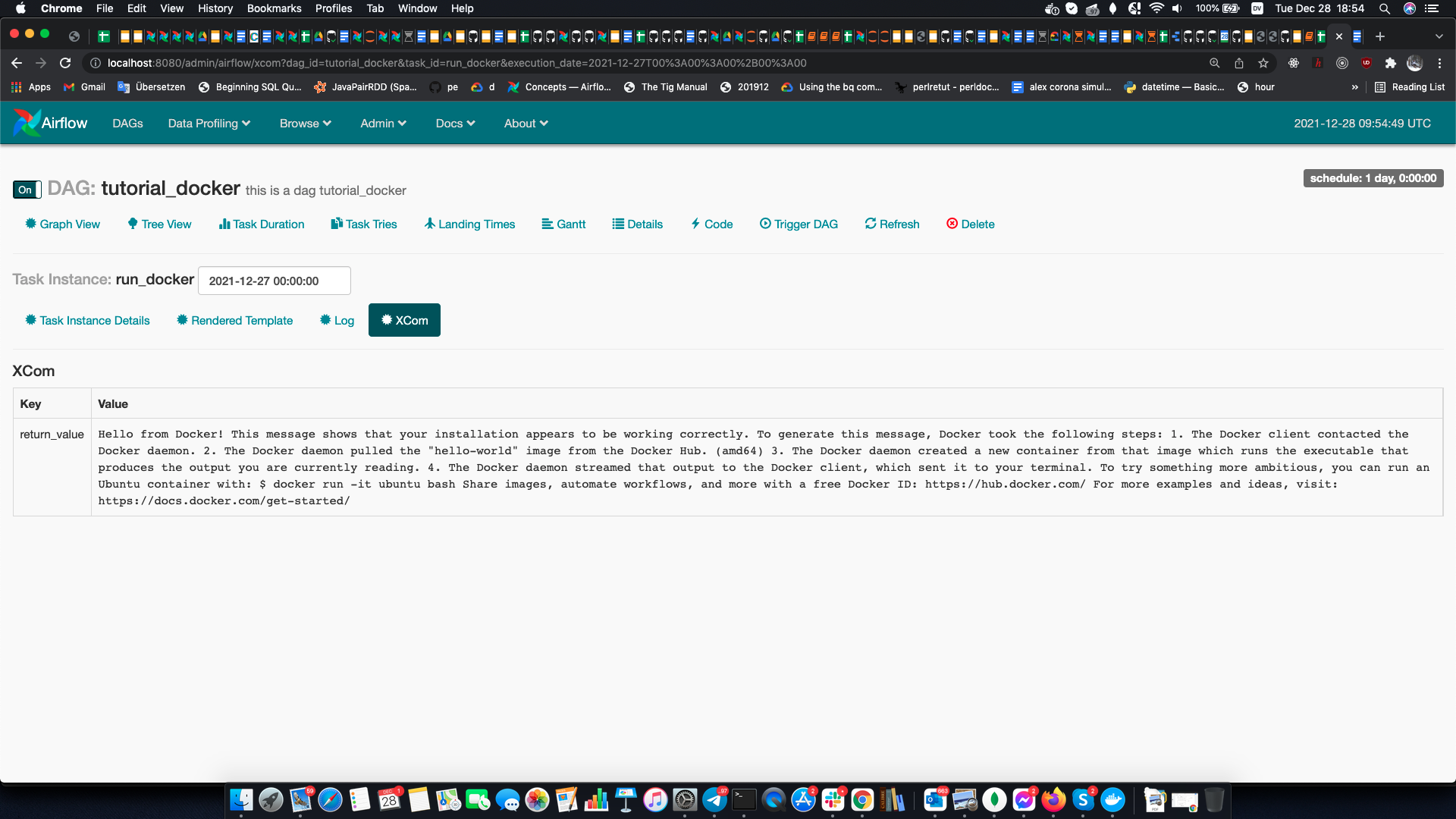

Then go to localhost:8080 (you may need to wait a few minutes), and you will see Airflow's web UI. In it, you will see two DAGs: tuto and tutorial_docker.

Click on tutorial_docker and you should see two DAG runs, each with a single task: run_docker. Wait until it finishes (it should turn green) and click into it. In its output you should see the result of running the command docker run hello-world, similar to the "Hello from Docker!" message shown earlier.

By changing the optional kwarg docker_image, you should be able to run other images within Airflow easily. Have fun!

Future Work

- Eliminate the danger of command injection (perhaps by switching to the Docker Python SDK instead of os.system).
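To illustrate what closing the injection hole could look like, here is a minimal sketch. It does not use the Docker Python SDK mentioned above; instead it swaps in a simpler standard-library technique: passing an argument list to subprocess.run so that no shell ever interprets the request. The function names, the --rm flag and the image-name pattern are assumptions made for illustration, not the actual dood-flask code.

```python
import re
import shlex
import subprocess

# Assumption: a deliberately strict pattern for "name[:tag]" image references.
# It rejects registries with ports and digests, and anything starting with "-"
# (which docker would otherwise parse as an option).
IMAGE_PATTERN = re.compile(r"[a-z0-9][a-z0-9._/-]*(:[A-Za-z0-9_.-]+)?")


def build_docker_argv(image, cmd=None):
    """Build the argument list for `docker run` without involving a shell."""
    if not IMAGE_PATTERN.fullmatch(image):
        raise ValueError("suspicious image name: %r" % image)
    argv = ["docker", "run", "--rm", image]
    if cmd:
        # shlex.split only tokenizes; characters such as ";" or "|" stay
        # inside ordinary arguments instead of being run by a host shell.
        argv.extend(shlex.split(cmd))
    return argv


def run_container(image, cmd=None):
    """Run the container and return a dict shaped like the server's reply."""
    completed = subprocess.run(
        build_docker_argv(image, cmd),
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        text=True,
    )
    return {"error_code": completed.returncode, "output": completed.stdout}
```

With this approach a payload such as "echo hi; rm -rf /" is handed to the container as plain arguments to echo rather than executed on the host. Note that this addresses only command injection; the exposure of /var/run/docker.sock described in the Disclaimer remains.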