TL;DR

In order to check how many mebibytes of quota you have used and have many have in total for the given project (my-project in my example), do the following:

> pip3 install git+https://github.com/nailbiter/alex_python_toolbox@v4.8.0

> python3

Python 3.9.6 (default, Aug 25 2021, 16:22:38)

[GCC 8.5.0 20210514 (Red Hat 8.5.0-3)] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> from alex_python_toolbox import bigquery_quota

>>> from google.cloud import bigquery

>>> client = bigquery.Client(project="my-project") #replace with name of your project

>>> used_mib, quota_mib = alex_python_toolbox.bigquery_quota.get_quota_usage(client)

>>> (used_mib, quota_mib)

(32784, 2097152) # used and total *daily* quota in mebibytes (can be different in your case)

# the below is optional -- pretty-print

>>> from alex_python_toolbox import utils

>>> print(f"quota {utils.format_coverage(used_mib/2**10, quota_mib/2**10)} (in tib)")

quota coverage = 32.015625/2048.0 = 1.56%

Note. This assumes that you have gcloud installed (see here).

goal

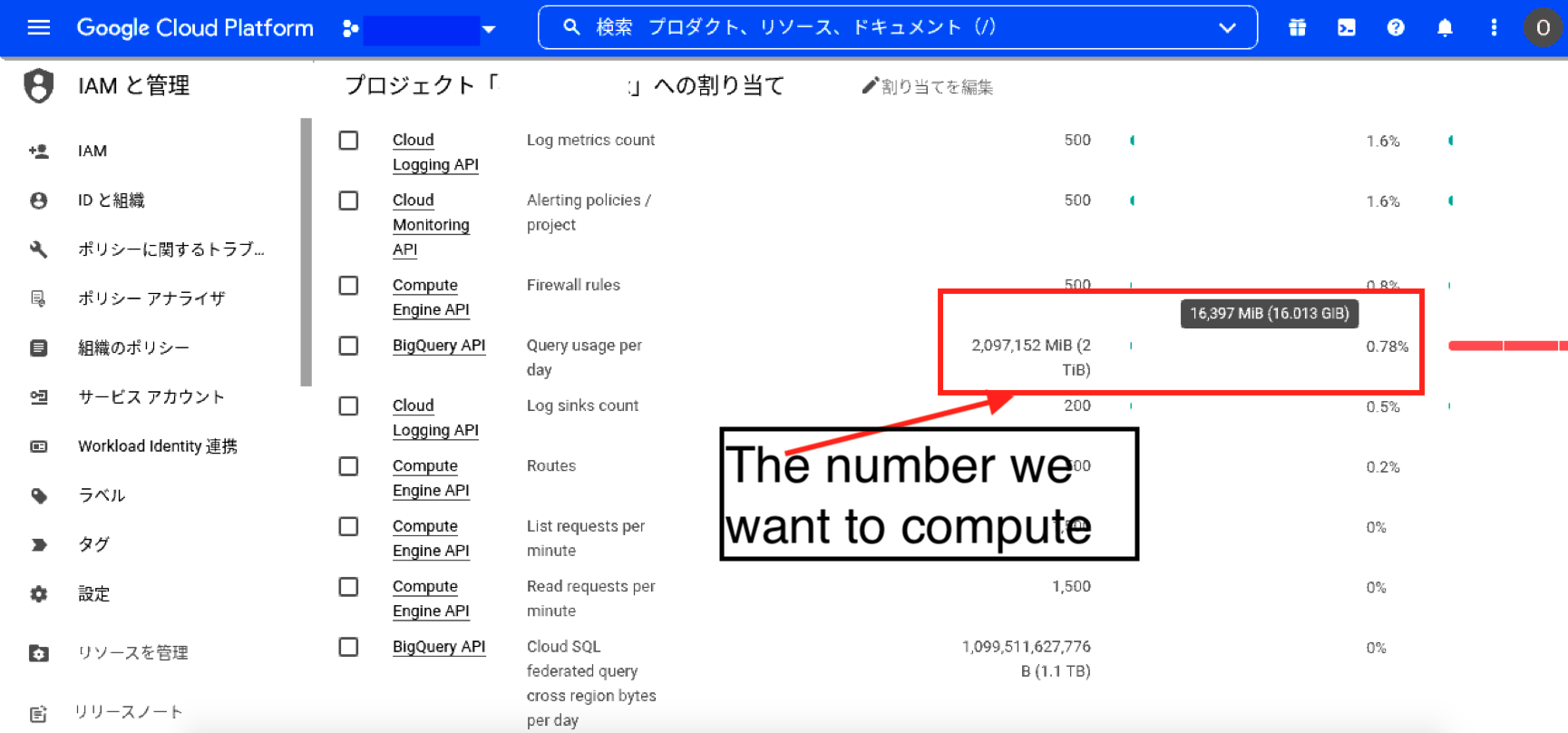

At my current workplace, we spend most of our budget on BigQuery, and hence our administrator has set some quota cost controls. For example, in the project my-project above I cannot process more that 2Tib per day normally, above that I have to ask for a special permission.

It becomes important, therefore, to know how much quota you have used and how much you have left. Fortunately, GCP provides this info on a nice webpage (https://console.cloud.google.com/iam-admin/quotas?authuser=0&project=my-project in my case), which looks like

this:

Now, for some reason, I would like to get this number programmatically (that is, by running some script, as opposed to having to manually open some webpage). The actual reason is not so important, but it has to do with me not wanting to use VNC server in otherwise

text-console-based environment just for this one thing.

implementation

Getting this exact number turned out to be a surprisingly formidable problem, at least for me. There are several approaches one can imagine (for example, rolling out headless chrome-in-docker, having it to go to that webpage and then render it out as readable pdf),

but after trying several approaches I decided to use BigQuery Python API, which luckily has methods for listing all the jobs and their respective quota used on a per-project basis. The approach,

wrapped out in a simple functional API can be seen in the TL;DR section above. For those curious about the source code, here it is.

further work

As mentioned above, this solution has yet much to be worked on. Most notably (in order of decreasing priority),

- in some cases, numbers on quota webpage and results of the function above do not match perfectly (as in example above);

- remove dependency on installed

gcloudcommand-line